Monitoring and Evaluating the Strategic Plan of Nuevo León 2015-2030

3. Monitoring the Results of the Strategic Plan of Nuevo León

Abstract

This chapter discusses the respective roles of the Council of Nuevo León and the state public administration in regard to monitoring the Strategic Plan 2015-2030. It draws on comparative approaches to recommend clarifying the institutional monitoring set-up and reinforcing the state public administration’s mandate in this regard. It highlights the need to strengthen quality assurance and quality control mechanisms for the monitoring process. The chapter also analyses how monitoring results can provide appropriate users with timely performance feedback, so as to improve public decision-making, accountability and information.

Introduction

Sound monitoring means that monitoring is part and parcel of the policy cycle; that it is carried out systematically and rigorously; that decision makers use its results; and that information is readily available to the public (Lázaro, 2015[1]). It offers policy makers the tools and evidence to detect policy challenges, to adapt or adjust policy implementation, as well as to communicate policy results in a timely and accessible manner.

In Nuevo León, sound monitoring can facilitate planning and operational decision-making by providing evidence to measure performance and help raise specific questions to identify implementation delays and bottlenecks. It can also strengthen accountability and public information in regard to the implementation of the Plan, as information regarding the use of resources is measured and made public. Yet the monitoring set-up in Nuevo León, whether for the Strategic Plan 2015-2030 or the State Development Plan, lacks clarity for its actors and legibility for citizens.

This chapter discusses the respective roles of the Council of Nuevo León and the state public administration in regard to monitoring the results of the Strategic Plan. It includes a detailed description of the current institutional set-up for monitoring the Plan, from the data collection process all the way to communication of results. It draws on comparative approaches to recommend a clarification of this set-up and a reinforcement of the state public administration’s mandate in this regard.

The chapter analyses this institutional set-up and suggests diversifying the Plan’s indicators and strengthening their robustness, in order to improve the overall quality of the monitoring exercise. The chapter adopts a forward-looking approach that recommends the development of quality assurance and quality control mechanisms to strengthen the overall credibility of the monitoring set-up.

Finally, the chapter examines how these monitoring results can provide appropriate users with timely performance feedback, to improve decision-making, accountability and public information.

Clarifying the monitoring set-up to better support the delivery of the Plan

The definition of monitoring contained in the Strategic Planning Law is not comprehensive and may lead to confusion about its purpose

In Nuevo León, article 18 of the Strategic Planning Law defines monitoring, as well as evaluation, as “the measurement of the effectiveness and efficiency of the planning instruments and their execution” (State of Nuevo León, 2014[2]). This definition says nothing about the objectives of monitoring or how it should be conducted, in terms of methodology and quality attributes. As mentioned in chapter 1, it also does not distinguish between monitoring and evaluation. The same confusion is present in article 37 of the “Reglamento de la Ley de Planeación” (regulation of the Planning Law).

Monitoring differs from evaluation in substantive ways. The objectives of monitoring are to facilitate planning and operational decision-making by providing evidence to measure performance and help raise specific questions to identify implementation delays and bottlenecks. It can also strengthen accountability and public information, as information regarding the use of resources, efficiency of internal management processes and outputs of policy initiatives is measured and publicised. Unlike evaluation, monitoring is driven by routines and ongoing processes of data collection. Thus, it requires resources to be integrated into an organisational infrastructure. Whereas policy evaluation studies the extent to which the observed outcome can be attributed to the policy intervention, monitoring provides descriptive information and does not offer evidence to analyse and understand cause-effect links between a policy initiative and its results (OECD, 2019[3]).

A clear and comprehensive definition of monitoring would contribute to a shared understanding of its objectives and modalities among the main actors in Nuevo León. This would facilitate greater cooperation between relevant actors by not only eliminating confusion regarding the role of monitoring vis-à-vis other tools to measure government performance, but also by making stakeholders more aware of the mutual benefit of monitoring exercises (e.g. more informed decision-making processes, ongoing supply of performance indicators, etc.). Such a definition could be included in the regulatory framework.

Clarifying responsibilities for the monitoring of the Plan may allow for greater synergy and improved coordination between the council and the executive

A robust monitoring system first and foremost implies the presence of an institutional framework for monitoring that provides: (a) the legal basis to undertake monitoring; (b) macro-level guidance on when and how to carry out monitoring and (c) clearly mandated institutional actors with allocated resources to oversee or carry out monitoring (OECD, 2019[3]).

A whole-of-government legal framework exists for monitoring the Strategic Plan 2015-2030 and the State Development Plan

There is a solid legal basis for monitoring the Strategic Plan 2015-2030 (SP) and the State Development Plan (SDP) in Nuevo León. Article 18 of the Strategic Planning Law mandates monitoring and evaluation to be carried out for both planning instruments (State of Nuevo León, 2014[2]). Monitoring of the SDP is also embedded in secondary guidelines. The “General Guidelines of the Executive Power of the state of Nuevo León for the Consolidation of the Results-based Budget and Performance Assessment System” stipulate that “the agencies, entities and administrative tribunals, shall send quarterly reports to the Secretariat, in accordance with the provisions issued by the latter, reports on the performance of the budgetary exercise and programmatic progress.” (State of Nuevo León, 2017[4]). Under article 9 of the guidelines, these reports have to be aligned with the objectives of the SDP. However, the quality of these quarterly reports is not always high enough to help dependencies track spending decisions across budgetary programmes.

Some OECD countries have similarly adopted clear legal frameworks for performance monitoring. In the United States, for example, the Government Performance and Results Modernization Act of 2010 mandates the government to define government-wide performance goals, as well as each agency to define sectoral goals. In Canada, the Management Accountability Framework was implemented in 2003 to hold heads of departments and agencies accountable for performance management, and to continuously improve performance management (see Box 3.1).

Box 3.1. The Canadian Management Accountability Framework

The Management Accountability Framework (MAF) is an annual assessment of management practices and performance in most Government of Canada organisations. It was introduced in 2003 to clarify management expectations and strengthen the accountability of deputy heads of departments and agencies.

The MAF sets out expectations for sound public sector management, and in doing so aims to support management accountability of deputy heads and improve management practices across government departments and agencies. The MAF is accompanied by an annual assessment of management practices and performance in most departments and agencies of the Government of Canada against the criteria of the framework. Since 2014, the assessments have used a mix of qualitative and quantitative evidence, and results are presented in a comparative context to allow benchmarking across federal organisations.

Today, there are seven areas of management, or criteria, against which federal organisations are assessed (see Table 3.1). In 2017-2018, for example, the MAF report looked at 36 large and 25 small departments and agencies across the four core areas of management. The results showed that only a minority of large departments and agencies systematically used both evaluation and performance measurement information in resource allocation decisions.

Table 3.1. Areas of Management (AoM) and teams assessed as part of the MAF

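| AoM type | Areas of management |
|---|---|
| Core AoMs | Financial Management |
| | People Management |
| | Information Management and Information Technology (IM/IT) |
| | Results management |
| Department-specific AoMs | Management of acquired services and assets |
| | Service management |
| | Security management |

The Management Accountability Framework itself is evaluated every 5 years by the Internal Audit and Evaluation Bureau as part of the Treasury Board of Canada Secretariat’s (TBS) five-year evaluation plan. The evaluation looks at relevance, performance, design, and delivery of MAF 2.0.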

The Management Accountability Framework itself is evaluated every 5 years by the Internal Audit and Evaluation Bureau as part of the Treasury Board of Canada Secretariat’s (TBS) five-year evaluation plan. The evaluation looks at relevance, performance, design, and delivery of MAF 2.0.

Having a clear legal framework, in the form of a primary law accompanied by guidelines, is useful to underline the importance that the state of Nuevo León attributes to this practice, within the council and across government. Nevertheless, a legal basis for monitoring is not enough on its own. A robust monitoring system also needs to specify the actors involved, their mandates, the timeline, and the methodology and tools for monitoring. In the case of Nuevo León, such a system would need to clarify how the monitoring of the SP and the monitoring of the SDP fit together, as the United States did with the Government Performance and Results Modernization Act of 2010 (see Box 3.2).

Box 3.2. The United States Government Performance and Results Modernization Act

The United States undertook reforms to improve the central government’s performance management system, to foster a dialogue on performance across government, and to deliver targeted improvements on high-priority cross-government initiatives. In 2010, the Government Performance and Results Modernization Act (GPRAMA) was adopted to strengthen the efficiency of the system for government agencies to report their progress. The GPRAMA provides enhanced performance planning, management and reporting tools that can help decision makers to address current challenges.

A main objective of this act is to demonstrate the value of performance information and its usefulness in management decisions. A key element to drive such an effort has been to establish leadership roles and performance improvement responsibilities for senior management, making it their job to engage the workforce in a performance-based discussion as well as assuming accountability for agency performance and results.

The Modernization Act requires every agency to identify two to eight (usually about five) Agency Priority Goals (APGs), which inform the setting of the APG Action Plan. The APGs are set every two years and are subject to quarterly performance reviews by the agency’s chief operating officer (COO), usually the deputy head of the agency, and its performance improvement officer (PIO).

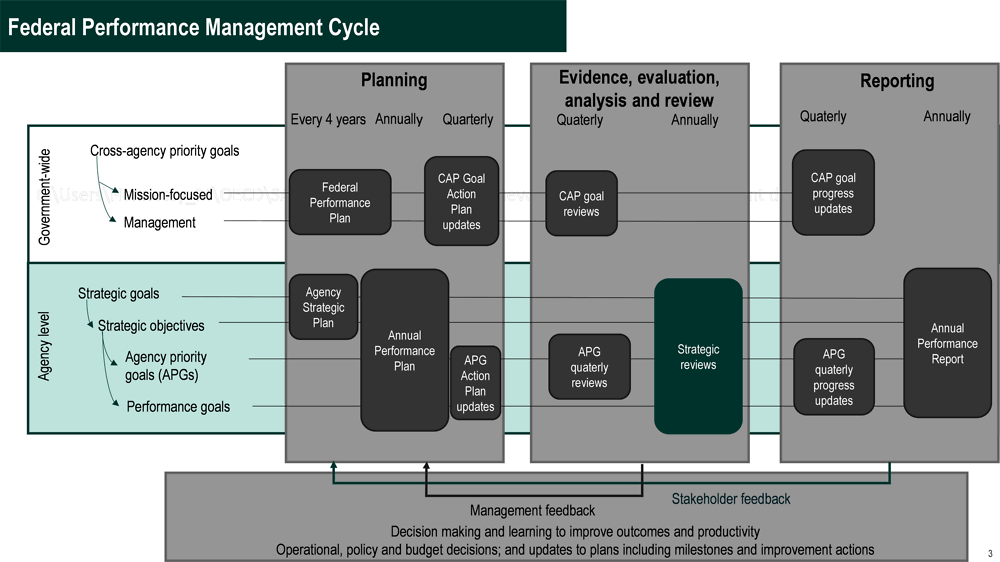

In addition to agency-level priority goals, federal cross-agency priority goals are set every four years, with performance assessed via quarterly reviews by the Office of Management and Budget (OMB) Director and a Performance Improvement Council. Finally, agency-level strategic goals and objectives, which inform the setting of the APGs, are subject to annual strategic reviews by agencies and the OMB (see Figure 3.1).

Strategic reviews are annual assessments that synthesise the available performance information and evidence to inform budget, legislative and management decisions. Initial results of the first round of reviews are promising. Many agencies were able to identify strategic objectives with relatively weak evidence and thus identify areas for improving metrics. Moreover, agencies reported that the strategic reviews reduced inconsistencies by bringing programmes together to discuss cross-cutting, strategic issues. Agencies also reported new initiatives to begin directly aligning activities with strategic goals and objectives. Furthermore, according to the agencies, the majority of agencies’ performance staff were interested and engaged in finding value from strategic reviews.

Figure 3.1. United States’ performance framework

Sources: Based on information from the United States Office of Management and Budget; Implementing Strategic Reviews: A component of the GPRA Modernization Act of 2010, Mark Bussow, Performance Team Lead at U.S. Office of Management and Budget (OMB), presented at the 10th Annual meeting of the OECD Senior Budget Officials Performance and Results Network

There are numerous actors involved in the monitoring of the Plans and their mandates are not always explicit

The Strategic Planning Law assigns responsibility for monitoring the Strategic Plan and the State Development Plan to the council, with the collaboration of “the dependencies and entities of the state public administration” (State of Nuevo León, 2014[2]). However, a careful analysis of the Law and its regulations suggests that the responsibilities of the various actors and units within the centre of government for monitoring the SP and the SDP are not clear. Regarding the Strategic Plan, the council is responsible for the coordination and promotion of its monitoring, as laid out in article 18 of the Strategic Planning Law. The council is also mandated to establish criteria for the identification of indicators to monitor the SP, to monitor their progress by collecting the necessary data and documents, and to communicate the monitoring results to citizens (State of Nuevo León, 2014[2]).

However, it is unclear how the council is intended to collect these data, as it does not itself implement the policies outlined in the Strategic Plan. In practice, the centre of government (CoG) has acted as a facilitator between the council and the line ministries to gather these data. However, neither the CoG nor the line ministries have an explicit mandate (i.e. one included in their legal regulations) to cooperate with the council in this regard, and they have no specific human or financial resources allocated to this task. Adapting the internal regulations of the line ministries to include data collection and analysis for the SP would greatly facilitate cooperation between these actors.

Regarding the monitoring of the State Development Plan, on the other hand, the role of the state public administration and its dependencies is explicitly laid out. The centre of government is in charge of coordinating and promoting the monitoring of the SDP (articles 14.I and 14.IV of the Strategic Planning Law regulation), while the line ministries monitor the budget and programmes of their own entities and report to the CoG (article 14.V.e of the Strategic Planning Law regulation).

This practice of attributing the monitoring of strategic priorities to the CoG can also be found in different forms in many OECD countries (see Box 3.3). This monitoring can either be done by the CoG itself, like in Finland, or by units within the CoG with a special mandate to do so, as is the case for the Results and Delivery Unit of Canada.

Box 3.3. Monitoring government-wide policy priorities at the level of the centre of government

The Prime Minister’s Office (PMO) in Finland is in charge of monitoring the government programme. Specifically, the strategy unit in the PMO monitors the implementation of five horizontal key policy objectives and of the wide structural reform of social and health care services that are part of Finland’s government-wide strategy. Together with the 26 key projects, the key policy areas are monitored weekly at the level of the CoG, in government strategy sessions reserved for situational awareness and analysis based on evidence and foresight. Milestones for each policy area and project are clearly defined, and indicators for each strategy target are updated two to four times a year.

The Results and Delivery Unit (RDU) of the Privy Council Office is a centre of government institution in Canada that supports the Prime Minister on public service delivery. It was created in 2015 to support efforts to monitor delivery, address implementation obstacles to key priorities and report on progress to the Prime Minister. The RDU also facilitates the work of government by developing tools, guidance and learning activities on implementing an outcome-focused approach. The results and delivery approach in Canada is based on three main activities: (i) defining programme and policy objectives clearly (i.e. what are we trying to achieve?); (ii) focusing increased resources on planning and implementation (i.e. how will we achieve our goals?); and (iii) systematically measuring progress toward these desired outcomes (i.e. are we achieving our desired results, and how will we adjust if we are not?).

This division of labour is also supported by the fact that certain line ministries are also explicitly mandated, through their organisational decree, to report on the implementation of the SDP. For example, the Secretary of Economy is responsible for preparing monitoring reports for the implementation of the State Development Plan (article 14.VIII of the regulations for the Ministry of Economy and Labour). There is no such provision regarding the Strategic Plan for any of the ministries.

By contrast, the role of the council in monitoring the SDP is ambiguous. The council is also mandated (article 18 of the Strategic Planning Law) to monitor the SDP, which appears to duplicate this function between the council and the CoG (see Table 3.2).

Table 3.2. Mandates of the main actors in the monitoring system of the SP and the SDP

| Plan / actor | Coordinate and promote monitoring | Collect data | Analyse data | Report data | Use data |
|---|---|---|---|---|---|
| STRATEGIC PLAN | | | | | |
| Nuevo León council | Yes | Yes | Yes | Yes | No |
| Centre of government | No | No | No | No | Yes |
| Line ministries | No | No | No | No | No |
| STATE DEVELOPMENT PLAN | | | | | |
| Nuevo León council | Yes | Yes | Yes | Yes | No |
| Centre of government | Yes | Yes | Yes | No | Yes |
| Line ministries | No | No | No | No | No |

The overlaps and potential gaps in the mandates of the main actors of the monitoring system result in unnecessary complexity and a lack of incentives to collaborate. Monitoring and the resulting data collection processes often involve complex chains of command shared across stakeholders who do not always see the immediate benefits of the monitoring exercise. Clarifying mandates through formal mechanisms, such as laws or regulations, can therefore be an important tool for governments to create incentives for these stakeholders to participate in such an exercise.
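As a minimal sketch, the mandates in Table 3.2 can be encoded as a matrix and checked mechanically for functions held by more than one actor (overlaps), functions held by no actor (gaps), and actors with no mandate at all. The data structure and the helper name `mandate_check` are illustrative assumptions, not part of any existing system in Nuevo León; only the Yes/No values are transcribed from Table 3.2.

```python
# Functions (columns) and mandates transcribed from Table 3.2; True means "Yes".
# The structure itself is a hypothetical illustration.
FUNCTIONS = ["coordinate and promote", "collect data", "analyse data",
             "report data", "use data"]

MANDATES = {
    "Strategic Plan": {
        "Nuevo León council": [True, True, True, True, False],
        "Centre of government": [False, False, False, False, True],
        "Line ministries": [False, False, False, False, False],
    },
    "State Development Plan": {
        "Nuevo León council": [True, True, True, True, False],
        "Centre of government": [True, True, True, False, True],
        "Line ministries": [False, False, False, False, False],
    },
}


def mandate_check(plan: str) -> tuple[dict[str, list[str]], list[str], list[str]]:
    """Hypothetical helper: return (overlaps, gaps, actors without any mandate)."""
    rows = MANDATES[plan]
    overlaps: dict[str, list[str]] = {}
    gaps: list[str] = []
    for i, function in enumerate(FUNCTIONS):
        # Actors that hold an explicit mandate for this function.
        holders = [actor for actor, row in rows.items() if row[i]]
        if len(holders) > 1:
            overlaps[function] = holders
        elif not holders:
            gaps.append(function)
    # Actors with no explicit mandate for any monitoring function.
    no_mandate = [actor for actor, row in rows.items() if not any(row)]
    return overlaps, gaps, no_mandate


if __name__ == "__main__":
    for plan in MANDATES:
        overlaps, gaps, no_mandate = mandate_check(plan)
        print(plan)
        print("  overlaps:", overlaps)
        print("  gaps:", gaps)
        print("  no mandate:", no_mandate)
```

Run against the State Development Plan, this check surfaces the duplication between the council and the CoG for coordination, data collection and analysis, and shows that line ministries hold no formal mandate for either plan. It cannot capture practical gaps that the table does not encode, such as the council's lack of means to collect data on policies it does not implement.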

In the case of Nuevo León, neither the CoG nor the line ministries have an explicit mandate to report to the council on the Strategic Plan’s programmes nor do they have the allocated resources to do so. As such, without an official delineation of the roles and the scope of the monitoring system (i.e. SP and SDP) in Nuevo León, the monitoring exercise becomes complex, potentially less effective and can generate unnecessary tensions.

It may therefore be useful to clarify the different roles of each actor by updating the regulatory framework, giving an explicit legal mandate to each of the actors and specifying their respective responsibilities (collecting data, analysing data, using data, coordinating and promoting monitoring, designing guidelines for monitoring, etc.). The internal regulations of the secretariats and their dependencies could also be updated to clarify their role in monitoring the plans. In doing so, it is important to clearly separate the coordination and promotion role of the CoG from that of data collection, analysis and use performed by line ministries, in order to avoid blurring incentives between actors.

The monitoring methodology and tools for the SP are not well-defined

Moreover, Nuevo León has no macro-level guidance (such as a guidance document or policy) on when and how to carry out monitoring. Article 19 of the law requires the monitoring and evaluation report to be produced annually, but it does not explain how this report should be prepared in practice. Creating clear obligations without guidance on how to fulfil them blurs the institutional framework.

More importantly, the law and its supporting regulation do not outline the existence of a monitoring set-up beyond the production of an annual report. In particular, the following elements are missing:

the expected frequency of data collection for each level of indicator (SP impact/outcome indicators or SDP outcome/output indicators);

the governance and calendar of the performance dialogue between line ministries, the CoG and the council, that is what actors meet to discuss what issues at what frequency and with what intent;

and the criteria according to which certain issues need to be escalated to a higher level of decision-making (for instance, from line ministry level to CoG level), as well as how to follow-up on decisions.

Despite the lack of a legally defined monitoring process, an informal process has been developed, both for the preparation of the annual report and for the infra-annual performance dialogue between the council and the state public administration. This process has been carried out in full once since the adoption of the Strategic Plan.

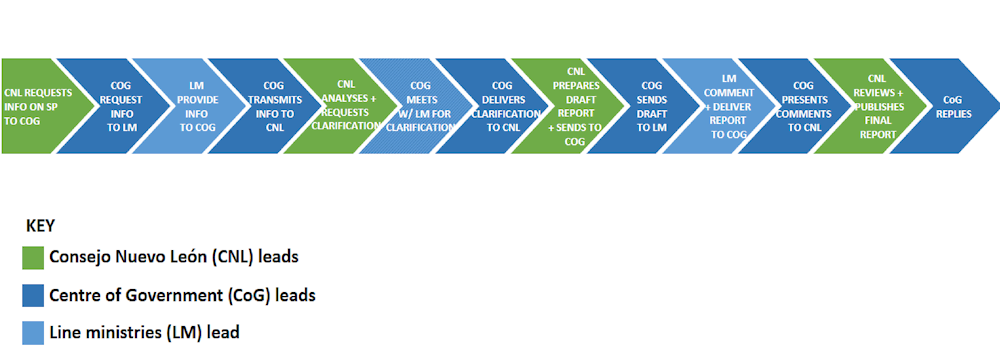

The council spearheads the monitoring report process; the commissions receive and review the relevant information from relevant ministries and generate recommendations accordingly. The CoG coordinates the relationship between different ministries and the council (and its commissions) by requesting and collecting information from the ministries on behalf of the council. The ministries respond to the council’s recommendations in an official document delivered to the legislative branch.

Figure 3.2 outlines a schematic view of this process.

Figure 3.2. Process for elaborating the annual report on the Strategic Plan

Note: This is a schematic view of the process, which has not always been followed in full. For instance, in 2018, the council did not receive an answer from the CoG regarding the evaluation.

Source: Authors.

Before 2018, there was also no standard procedure for preparing the monitoring report across commissions; only during the second evaluation did the council attempt to harmonise procedures by standardising the questionnaires sent to ministries and the cross-checking procedures.

Likewise, an informal set-up has been developed whereby the state public administration reports to the council – and to each thematic commission in particular – on an infra-annual basis. In this exercise, working methods across commissions have been very heterogeneous, with each commission deciding, more or less collaboratively with the state public administration, on the number of meetings per year, the level and type of information they seek to receive and the potential outputs of these meetings. For example, the Education sub-commission did not have any meetings in 2018.

As a result of this informal set-up, the quality of exchanges between the state public administration and the council has often rested on the existence of trusting personal relationships between the Secretaries and the members of the commissions, rather than on sound and clear working methods and mandates. Moreover, the lack of standardised tools for data collection and analysis has meant that the intended output of the monitoring sessions between the commissions and the secretaries is not only heterogeneous, but also sometimes unclear. This may lead to a lack of incentive to collaborate, especially from the line ministries who do not have an explicit mandate and allocated resources to do so.

Clarifying the monitoring set-up, methodology and tools may allow for greater cooperation and coordination between the council and the state public administration, and for greater use of monitoring results.

Monitoring evidence can be used to pursue three main objectives (OECD, 2019[3]):

it contributes to operational decision-making, by providing evidence to measure performance and raising specific questions in order to identify implementation delays or bottlenecks;

it can also strengthen accountability related to the use of resources, the efficiency of internal management processes, or the outputs of a given policy initiative;

it contributes to transparency, providing citizens and stakeholders with information on whether the efforts carried out by the government are producing the expected results.

Each of these goals implies using monitoring data in a distinct manner. First, for monitoring evidence to serve as a management tool, it must be embedded in a performance dialogue that is held regularly and frequently enough to allow practitioners and decision makers to identify implementation issues, determine resource constraints and adapt their efforts and resources to solve them. Such an exercise is closely tied to policy implementation and management. In the case of Nuevo León, it should be conducted within the state public administration, ideally between the highest levels of the centre of government and the operational levels of the dependencies.

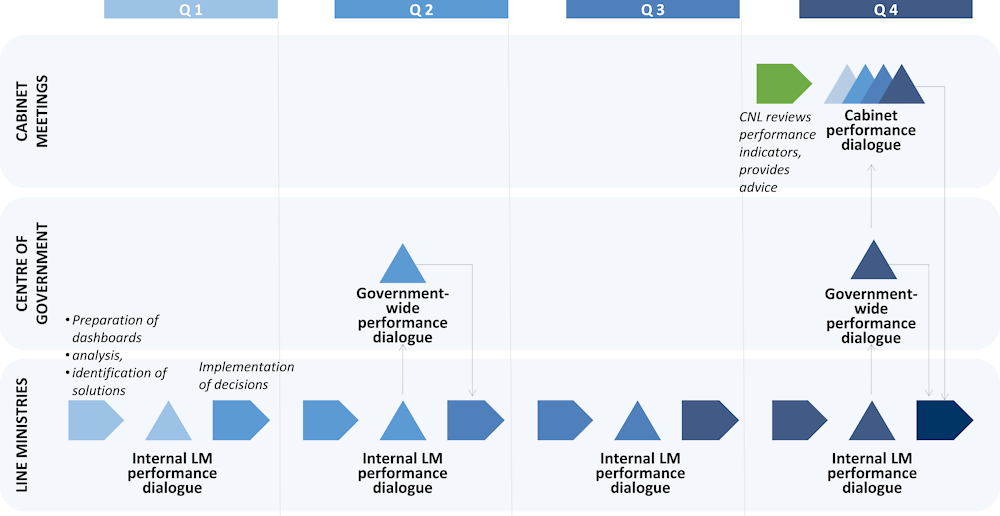

Nuevo León has both a Strategic Plan and a State Development Plan. Given the plurality of strategic frameworks, it is important to ensure that the monitoring processes are streamlined and aligned to minimise the additional burden occurring with each strategy (Vági and Rimkute, 2018[11]). As a result, it is important to clarify that the state public administration should conduct this performance dialogue regarding both the SP and the SDP simultaneously. Given the more strategic nature of the Strategic Plan, the performance dialogue for the Strategic Plan could be held on a biennial basis, depending on the theory of change of every strategic objective and information availability. Two main functions can be identified in the context of this performance dialogue: a coordination and promotion function, which can be naturally conducted by the centre of government, and a data collection, analysis and use function, which can be the responsibility of the line ministries.

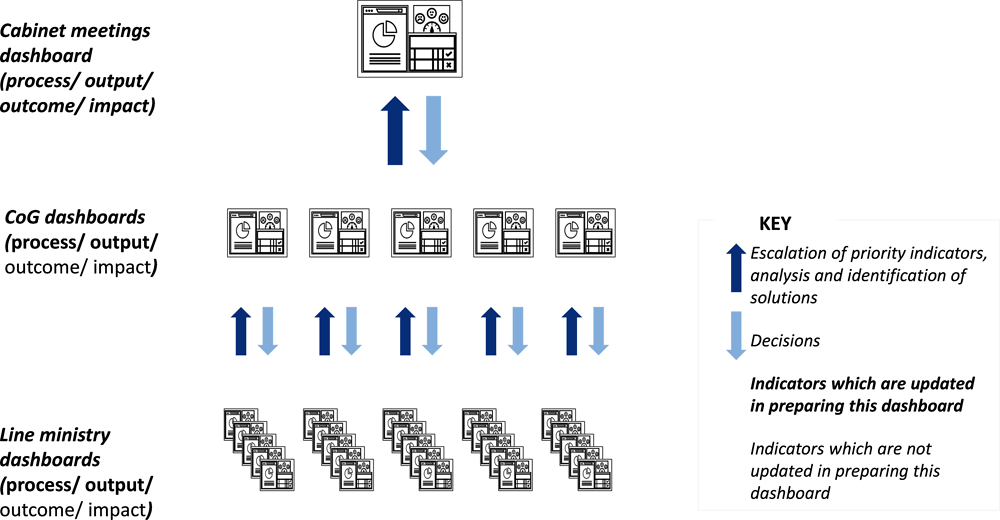

This performance dialogue could be centred around the action plans – as recommended in chapter 2 – to monitor the lines of action (processes) and strategies (outputs) which are common to the SP and the SDP, at the level of line ministries, on a quarterly basis1. This dialogue would create an incentive for line ministries to resolve implementation issues at the technical or sectorial level through a gradual escalation process. If the problem is still unresolved after two quarters, it could be referred to the CoG twice a year for decision. Finally, any implementation issues that would require cross-ministerial coordination and mobilisation of additional resources may be referred to cabinet meetings on a yearly basis (see Figure 3.3).

Figure 3.3. Articulation of different levels of indicator dashboards

Source: Authors
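The gradual escalation process described above can be sketched as a small decision function. This is a minimal sketch under stated assumptions: the two-quarter threshold comes from the text, while the class, field and function names are hypothetical, and sending to cabinet only those issues that need both cross-ministerial coordination and additional resources is one possible reading of the proposal.

```python
from dataclasses import dataclass
from enum import Enum


class Forum(Enum):
    """Decision-making levels named in the proposed performance dialogue."""
    LINE_MINISTRY = "line ministry (quarterly)"
    CENTRE_OF_GOVERNMENT = "centre of government (twice a year)"
    CABINET = "cabinet meeting (yearly)"


@dataclass
class ImplementationIssue:
    # Hypothetical record of an issue flagged in a quarterly action-plan review.
    description: str
    quarters_unresolved: int
    needs_cross_ministerial_coordination: bool = False
    needs_additional_resources: bool = False


# Assumed threshold: referral to the CoG after two unresolved quarters.
QUARTERS_BEFORE_COG = 2


def escalation_forum(issue: ImplementationIssue) -> Forum:
    """Return the forum where the issue should be discussed next."""
    if issue.quarters_unresolved < QUARTERS_BEFORE_COG:
        # Still within the line ministry's own quarterly review cycle.
        return Forum.LINE_MINISTRY
    if issue.needs_cross_ministerial_coordination and issue.needs_additional_resources:
        # Issues that no single ministry can settle alone go to cabinet.
        return Forum.CABINET
    return Forum.CENTRE_OF_GOVERNMENT


if __name__ == "__main__":
    # Made-up issues for illustration only.
    issues = [
        ImplementationIssue("Procurement delay", quarters_unresolved=1),
        ImplementationIssue("Staffing gap", quarters_unresolved=2),
        ImplementationIssue("Shared data platform", quarters_unresolved=3,
                            needs_cross_ministerial_coordination=True,
                            needs_additional_resources=True),
    ]
    for issue in issues:
        print(f"{issue.description}: {escalation_forum(issue).value}")
```

Keeping the threshold as a named constant makes it easy to test alternative escalation rules (for instance, referral after one quarter) without changing the decision logic.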

These cabinet meetings may also be an opportunity to update and analyse the outcome/impact indicators included in the SP and the SDP. Indeed, it is important to link process and output indicators to the progress of outcome and impact indicators to actually track results and not just activities (The World Bank, 2017[12]). Figure 3.4 demonstrates this process over a one-year period. The next section of this chapter will focus on the relevance and quality of indicators.

Figure 3.4. Proposed performance dialogue between the Line ministries, the CoG and the cabinet meetings

Source: Authors

As previously mentioned, monitoring evidence can also serve as a tool for remaining accountable to stakeholders in the implementation of the Strategic Plan. To do so, in preparation for the annual cabinet meeting on the SDP and the SP, the state public administration and the council could conduct a collaborative review of the plans’ indicators, which would be presented to the cabinet. This collaborative review, which could build on the four performance dialogue sessions held every year, could be conducted jointly by the state secretariats and the council’s commissions to review the data to be presented to cabinet, and would give the council the opportunity to serve as a sounding board for the administration. Indeed, while attempting to interpret the data and understand implementation gaps, the administration could seek the advice of the council. This review would be an opportunity for the council to offer the state public administration different insights on implementation gaps identified during the performance dialogue. Finally, given the council’s multi-stakeholder composition, it could also offer implementation support to the cabinet where relevant and possible.

To support the performance dialogue and contribute to better accountability, the state public administration could consider uploading and updating the indicators of the SDP and the SP in a dashboard. This dashboard would include information on the implementation of the action plan recommended in chapter 2, as well as on the SP and SDP outcome/impact indicators. Only the action plan indicators would be updated quarterly; the SDP and SP indicators may only be updated annually or when new information becomes available. Monitoring dashboards are intended as management and decision-making tools, and should therefore offer a performance narrative that allows for the identification and analysis of implementation gaps and potential solutions. To develop these dashboards, the state public administration could draw on a range of good practices. For instance, the current citizen councillors who come from the private sector could share their good practices with public servants in preparing dashboards and monitoring strategies.

Moreover, the commissions may want to clarify their working methods for conducting this annual review. In particular, the council could create a guide for commissioners and for their technical secretariats in order to define the agenda for review sessions, the role of the commissions, the tools they may use, and how decisions should be taken in regard to this annual review (see chapters 4 and 5 for a more general discussion of commissions’ working methods).

Finally, monitoring evidence serves to foster transparency, in particular by communicating with citizens. The centre of government could choose to produce a communication report on the SP. This communication report, which could be updated every three years, would replace the current “hybrid” monitoring/evaluation report produced by the council on an annual basis. It would be designed primarily as a communication tool (see the section on use of monitoring evidence for a further discussion of this report). Moreover, it is important to communicate more regularly with citizens, notably through an up-to-date website showcasing the monitoring information of the SDP and the SP (see the section on promoting the use of monitoring for a more detailed discussion of this recommendation).

Promoting the quality of monitoring

Collecting quality data is paramount in producing robust monitoring evidence

Access to robust and credible data is an essential component of building a results-based monitoring system. Data should not only be technically robust, valid, verifiable and policy-actionable, but they should also be transparent and free from undue influence (Zall, Ray and Rist, 2004[13]).

The lack of a systematic framework which would link indicators and opportunity areas in the SP hinders its monitoring

Developing performance indicators, their baseline and targets is an important stage in the strategy development process. Although article 9 of the Strategic Planning Law sets among the council’s responsibilities the establishment of criteria for the monitoring and evaluation indicators, there does not seem to be an explicit and systematic framework for their design.

Firstly, there is a lack of systematic linkage between each layer of the Plan and the indicators, making it hard for stakeholders to monitor progress on aspirations, opportunity areas and strategic lines (see Table 3.3). While most commissions have identified a set of indicators, these are not presented in a way that clearly indicates their connection with elements of the Plan (opportunity areas, strategic lines or initiatives). All indicators for each commission/sub-commission are grouped together in a table at the end of the section related to that thematic area. Some sub-commissions2 have introduced indicators that cannot be linked, directly or indirectly, to either an opportunity area or a strategic line/initiative.

Moreover, not all commissions have defined indicators. For instance, four sub-commissions3 of the Human Development Commission have not defined any indicators, suggesting that there is no way for their members to monitor and evaluate the achievement of the policy goals set out in that part of the Strategic Plan. Similarly, the Economic Development Commission is the only one that has defined indicators for both priority opportunity areas and strategic lines: 40% of opportunity areas have no indicators relevant to them.

Table 3.3. Lack of unified framework for the design of indicators

| Commission | Sub-Commission | Aspiration | Strategic Axes | Opportunity Areas | Prioritised Opportunity Areas | Indicators (opportunity areas) | Strategic lines and Initiatives | Indicators (strategic lines and initiatives) | Strategic Projects |
|---|---|---|---|---|---|---|---|---|---|
| Human Development | Education | 1 | 3 | 11 | 5 | 4 | 12 | NA | 4 |
| Human Development | Health | 1 | 4 | 17 | 4 | NA | 15 | 14 | 7 |
| Human Development | Social Development | 1 | 4 | 14 | 5 | 9 | 15 | NA | 6 |
| Human Development | Art and Culture | 1 | 3 | 10 | 5 | NA | 17 | NA | 3 |
| Human Development | Sports | 1 | 3 | 5 | 3 | NA | 11 | NA | 1 |
| Human Development | Values | 1 | 3 | 9 | 3 | NA | 10 | NA | 1 |
| Human Development | Citizen’s participation | 1 | 3 | 4 | 3 | NA | 10 | NA | 2 |
| Sustainable Development | | 1 | 4 | 15 | 5 | 8 | 38 | NA | 8 |
| Economic Development | | 1 | 4 | 7 | 5 | NA | 8 | 4 | 3 |
| Security and Justice | | 1 | 6 | 16 | 5 | 13 | 39 | NA | 5 |
| Effective Governance and Transparency | | 1 | 4 | 10 | 5 | NA | 15 | 5 | 5 |

Source: Authors.

Therefore, explicitly linking each indicator to an opportunity area (or strategic objective, as recommended in chapter 2), first and foremost visually in the strategy document, will be essential to clarify the monitoring structure of the Plan. The council should undertake this exercise.

This analysis also suggests that some output indicators may have been linked to outcome-level objectives. It would be preferable to maintain, as far as feasible, a focus on outcomes in a performance-oriented framework (see the example of Scotland in Box 3.4).

Box 3.4. The National Performance Framework of Scotland

The National Performance Framework of Scotland proposes a Purpose for Scottish society to achieve. To help achieve this Purpose, the framework sets National Outcomes that reflect the values and aspirations of the people of Scotland, which are in line with the United Nations Sustainable Development Goals and help to track progress in reducing inequality. These National Outcomes include:

“We have a globally competitive, entrepreneurial, inclusive and sustainable economy”, in regard to the Scottish economy

“We are healthy and active”, in regard to health

“We respect, protect and fulfil human rights and live free from discrimination”, in regard to human rights

Progress on the National Outcomes is tracked through a set of 81 outcome-level national indicators, updated on a regular basis to inform the government on how its administration is performing against the Framework. A data dashboard where citizens can access data on these indicators is available on the Scottish Government Equality Evidence Finder website.

Source: Scottish Government (2020[14]), National Performance Framework, https://nationalperformance.gov.scot/

Both the Strategic Plan and the action plans would benefit from identifying sound and policy actionable indicators

Monitoring a policy, programme or project, such as the Strategic Plan 2015-2030 or the action plans, implies identifying indicators that are:

sound, meaning that they are methodologically robust;

policy actionable, meaning that they correspond to an observable variable that captures the intended direction of change and is under the remit of the actor in charge of implementation.

Firstly, the indicators in the Strategic Plan 2015-2030 are not always sound. For indicators to provide decision makers with information on the course of action needed to achieve the intended policy objectives, they should be accompanied by information that allows for their appropriate interpretation. That is why, regardless of their type, all indicators should be presented with enough information on the following:

Description of the indicator: name, unit of measurement, data source and formula;

Responsibility for the indicator: institution, department or authority responsible for gathering the data;

Frequency of data collection and update of the indicator;

Baseline that serves as a starting point to measure progress;

Target or expected result.
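The metadata list above can be made concrete as a simple record that flags which elements are still missing for a given indicator. The sketch below is purely illustrative: the class `IndicatorSpec`, its field names and the example indicator are hypothetical, and the convention that an empty field is `None` is an assumption, not a format used by the council or the state public administration.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class IndicatorSpec:
    """Hypothetical record for the indicator metadata listed above.

    A field left as None is treated as missing information.
    """

    name: str
    unit: Optional[str] = None          # unit of measurement
    data_source: Optional[str] = None   # e.g. administrative records or a survey
    formula: Optional[str] = None       # how the indicator value is calculated
    responsible: Optional[str] = None   # institution, department or authority collecting the data
    frequency: Optional[str] = None     # e.g. "quarterly", "annual", "every five years"
    baseline: Optional[float] = None    # starting point to measure progress
    target: Optional[float] = None      # target or expected result

    def missing_fields(self) -> List[str]:
        """Return the names of the metadata fields that are still empty (None)."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]


# Hypothetical indicator with no formula, responsible institution or baseline yet.
example = IndicatorSpec(
    name="Example indicator",
    unit="%",
    data_source="Administrative records",
    frequency="annual",
    target=50.0,
)
print(example.missing_fields())  # ['formula', 'responsible', 'baseline']
```

A check of this kind could be run over every indicator before each review, so that gaps such as a missing baseline or an unassigned responsible institution are visible before the data are needed.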

For the most part, the indicators in the Strategic Plan are explicitly stated: the commissions have chosen to present them in tables that often include the data source, the baseline and the target values. Nevertheless, some indicators lack a baseline or a data source, and the indicators do not make explicit the institution or person responsible for collecting the data and updating the indicator.

Moreover, not all the indicators chosen for the Strategic Plan fit the RACER approach (see Box 3.5 for an explanation of these criteria).

Box 3.5. Assessing indicators against the RACER approach

The quality of an indicator will depend on the purpose it serves, on the nature of the policy or programme it seeks to monitor, and on the development and maturity of the overall performance monitoring system. There are therefore no universal principles to be followed when defining indicators. One example of a quality framework for the assessment of indicators is the RACER approach, used amongst others by the Better Regulation Index. According to this approach, indicators should be:

Relevant: Closely linked to the objectives to be reached. They should not be overambitious and should measure the right thing.

Accepted by staff and stakeholders: the roles and responsibilities for the indicator need to be well defined.

Credible for non-experts: Unambiguous and easy to interpret. Indicators should be as simple and robust as possible. If necessary, composite indicators might need to be used instead, such as country ratings and well-being indicators, but also ratings of financial institutions and instruments. These often consist of aggregated data using predetermined fixed weights. As they may be difficult to interpret, they should be used to assess the broad context only.

Easy: To monitor (e.g. data collection should be possible at low cost). Built, as far as practicable, on available underlying data, their measurement not imposing too large a burden on beneficiaries, on enterprises, nor on citizens.

Robust: Against manipulation (e.g. administrative burden: If the target is to reduce administrative burdens for businesses, the burdens might not be reduced, but rather shifted from businesses to public administration). Reliable, statistically and analytically validated, and as far as practicable, compliant with internationally recognised standards and methodologies.

Source: (DG NEAR, 2016[15])

For instance, not all indicators come from public or reliable sources. The Strategic Plan also contains indicators that are largely based on international and national statistical data (see Box 3.6 for a detailed description of types of monitoring data). Yet this type of data is best suited to long-term impact and context indicators, for which the underlying changes are less certain than for outcome indicators (DG NEAR, 2016[70]). Administrative data and data collected as part of an intervention’s implementation are usually better suited to process, output and intermediate outcome indicators, such as those found in the State Development Plan.

Box 3.6. Potential sources of data used for monitoring

Conducting quality monitoring requires quality data, which may come from various sources (as outlined below), each of which has associated strengths and weaknesses. Combining different data sources has the potential to maximise data quality, as well as to unlock relevant insights for monitoring.

Administrative data: This type of data is generally collected through administrative systems managed by government departments or ministries, and usually covers all the individuals, communities and businesses affected by a particular policy. Examples of administrative data include housing data and tax records. Since administrative data are already collected and cover large populations, they are a time- and cost-efficient source for monitoring exercises. Furthermore, since administrative data consist mostly of activity, transaction and financial tracking data, they do not suffer from some of the quality pitfalls associated with self-reported data. However, administrative data can be limited in scope (e.g. exclusion of non-participants, no socio-economic information on participants). Moreover, since administrative data collection systems are decentralised, the underlying quality of the data may vary across government departments or ministries.

Statistical data: this type of data is commonly used in research, and it corresponds to census data or more generally to information on a given population collected through national or international surveys. Survey data is a flexible and comprehensive source for monitoring exercises, as surveys can be designed to capture a wide range of socio-economic indicators and behavioural patterns relevant to the policy in question. However, data collection for surveys is resource-intensive and the quality of survey data is dependent on the survey design and on the respondents. For example, the quality of survey data can suffer because of social-desirability bias and poor recall of respondents.

Big data: This type of data is broadly defined as “a collection of large volumes of data” (UN Global Pulse, 2016[71]); it is often continuously and digitally generated by smart technologies on private sector platforms and captures the behaviour and activity of individuals. It has the advantage of coming in greater volume and variety, and thus represents a cost-effective method to ensure a large sample size and the collection of information on hard-to-reach groups. Moreover, big data analysis techniques (e.g. natural language processing, detection, etc.) can help identify previously unnoticed patterns and improve the results of monitoring exercises. Nevertheless, big data also introduces several challenges, such as time comparability (big data often comes from third parties who do not always have consistent data collection methods over time), non-human internet traffic (data generated by internet bots), selection bias (even if a sample size is large, it does not necessarily represent the entire population), etc.

Likewise, the frequency at which some indicators can be collected is not compatible with the council’s annual monitoring mandate. This is the case for the sub-commission on Social Development, which proposes an indicator for “Mujeres en situación de violencia de pareja” (women experiencing intimate partner violence) using data from the “Encuesta Nacional sobre la Dinámica de las Relaciones en los Hogares (ENDIREH, INEGI)” (National Survey on the Dynamics of Household Relationships), which is only collected every five years.4 As a result, 23% of indicators could not be collected in 2018.
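The mismatch between collection frequency and an annual review can be checked mechanically before indicators are adopted. The sketch below is a hypothetical illustration: it assumes each indicator is collected at a fixed interval from a known first year (real survey calendars can be irregular), and the schedules are invented examples, not the actual indicators of the Plan.

```python
from typing import Dict, Tuple


def has_new_data(first_year: int, interval_years: int, review_year: int) -> bool:
    """True if an indicator first collected in `first_year` and then every
    `interval_years` years yields a new observation in `review_year`."""
    if review_year < first_year:
        return False
    return (review_year - first_year) % interval_years == 0


def share_without_new_data(schedules: Dict[str, Tuple[int, int]], review_year: int) -> float:
    """Share of indicators with no new observation for the annual review.

    `schedules` maps an indicator name to (first collection year, interval in years).
    """
    missing = [
        name
        for name, (first_year, interval) in schedules.items()
        if not has_new_data(first_year, interval, review_year)
    ]
    return len(missing) / len(schedules)


# Hypothetical collection schedules, not the actual indicators of the Plan.
schedules = {
    "annual administrative indicator": (2015, 1),
    "annual statistical indicator": (2016, 1),
    "biennial survey indicator": (2015, 2),
    "five-yearly survey indicator": (2016, 5),
}
print(share_without_new_data(schedules, 2018))  # 0.5
```

In this invented example, half of the indicators would have no new value for a 2018 review, which is the kind of gap a frequency check at the design stage could reveal.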

Moreover, the current indicators identified for the Strategic Plan are not sufficiently policy actionable, meaning that they do not provide relevant and timely information for decision-making related to the implementation of the Plan. Some indicators in the Plan measure actions that fall outside the responsibilities and control of the executive, and are therefore neither actionable nor relevant for it. If the Strategic Plan is to be implemented by the executive, its specific actions should fall under the remit of this branch of government. Nevertheless, the commission on Security and Justice included opportunity areas and indicators (e.g. “Duración de casos no judicializados”, the duration of cases not brought to court) that fall under the responsibility of the judicial branch.

Similarly, some indicators proposed in the Plan do not have a state-level scope. The sub-commission on health, for example, uses OECD health data for its indicators, which are collected at a national level and therefore cannot directly reflect Nuevo León’s efforts in this area. Nevertheless, in some cases, proxy indicators may be of use when state-level indicators do not exist. This is the case, for instance, for metropolitan area indicators, which cover 90% of Nuevo León’s population.

The council could consider identifying a mix of indicators in order to best monitor the strategic plan

The Council of Nuevo León could consider identifying a mix of indicators for the Strategic Plan: impact and context indicators available at state level, calculated on the basis of international and national statistical data (for instance in collaboration with INEGI), as well as outcome indicators calculated on the basis of administrative data or even ad hoc perception survey data (see Box 3.7 for a typology of indicators). For example, such indicators could be taken from the national survey on government impact and quality prepared by INEGI every other year (INEGI, 2019[19]). Indeed, while output indicators should not be used to measure outcomes and impacts, context and impact indicators say little about the policy levers available to achieve the long-term goals associated with the strategic objectives. Identifying intermediate outcomes, which respond fairly rapidly to changes in policy systems but can be linked to longer-term objectives, may therefore be beneficial in monitoring the Plan (Acquah, Lisek and Jacobzone, 2019[20]).

Box 3.7. Typology of indicators

A classic typology of open government indicators distinguishes between:

Context indicators, when considering the public sector as an open system, can monitor external factors such as socio-economic trends, but can also include policy measures by other governments or supranational organisations. Ideally, a comprehensive M&E system should include indicators to monitor the existence and development of environmental/context factors that can influence the governance of open government strategies and initiatives.

Input indicators measure resources in the broad sense, i.e. human and financial resources, logistics, devoted to a particular open government strategy or initiative. In the context of the governance of open government, input indicators could include the number of staff working in the office in charge of open government or the budget allocated for a given open government initiative.

Process indicators refer to the link between input and output, i.e. activities that use resources and lead to an output. In the context of the governance of open government strategies and initiatives, these indicators could include the time taken to create an office in charge of co-ordinating the open government strategies and initiatives, or the time allocated to their design.

Output indicators refer to the quantity, type and quality of outputs that result from the inputs allocated. Output indicators refer to operational goals or objectives. For instance, in the context of this policy area, it can refer to the existence of a law on access to information or the existence of training courses for public officials on the implementation of open government principles.

Outcome/impact indicators refer to the (strategic) objectives of policy intervention. In a public policy context, intended effects often relate to a target group or region, but they can also relate to the internal functioning of an administration. Effects can occur or be expected with varying time gaps after the policy intervention. Outcome and impact are often the terms used together to refer to them. The difference is based on the chronological order: outcome usually refers to shorter-term effects, while impact refers to longer-term effects. In this field, these indicators could be the share of public servants aware of the open government strategy or the number of citizens’ complaints about public policy decisions.

Source: (OECD, 2020[21])

The council could consider applying the RACER approach in order to assess the extent to which each indicator could support decision-making.5 This assessment should involve representatives of the different stakeholders who, during implementation, will be responsible for collecting, analysing and reporting on data (DG NEAR, 2016[15]). Each indicator, qualitative as well as quantitative, must correspond to an existing source, be it statistical or administrative.

Furthermore, in order to monitor the action plans, the state public administration could consider identifying process indicators drawn mostly from administrative sources. Indeed, for this type of monitoring, authorities should aim for regular and ideally automated flows of information, at minimum administrative cost. The state public administration could consider submitting these indicators to the Council of Nuevo León for review and comment, in order to benefit from the opinions and expertise of a wider range of stakeholders.

The analysis of the data has to be fit for purpose

The current annual report is not well targeted to its audience

Although crucial to monitoring, collecting data is not sufficient in and of itself; in order to support decision-making and serve as a communication tool to the public, data needs to be analysed (Zall, Ray and Rist, 2004[13]). Indeed, the analysis of the data which serves as the backbone of the monitoring exercise needs to be tailored to the user, focused and relevant (OECD, 2018[22]).

In fact, it is important to identify the types of use for the monitoring information (demonstrate, convince, show accountability, involve stakeholders, etc.). The analysis of data cannot be targeted efficiently without a clear definition of its audience(s) and the types of questions that audience is likely to raise about findings. There are typically two main types of users of monitoring and reporting information:

External users: citizens, media, NGOs, professional bodies, practitioners, academia, financial donors, etc. They usually seek user-friendly information, which is provided using a simple structure and language, concise text and as visually as possible.

Internal users: congress, government, ministers, managers and operational staff, and in Nuevo León, the council. They are more interested in strategic information on overall progress against objectives and targets; they seek to understand the challenges encountered in implementation and require indications of the action needed in response to data findings. Furthermore, the higher the level of decision-making, the more aggregated and outcome-oriented the information should be (OECD, 2018[22]).

Currently, these types of users are not clearly differentiated in the annual monitoring report, as the report is meant to serve both as a transparency tool for communicating with external users (it is published on the website) and as an accountability and decision-making tool for internal users (for instance, the report is to be sent to Congress).

Clearly differentiating between the three different types of monitoring exercises will provide for more fit-for-purpose information

Clearly differentiating between the three monitoring exercises (performance dialogue, the joint review, and the annual report on the SDP) should allow for more fit-for-purpose analyses in each situation. The following section on promoting the use of monitoring evidence will discuss in further detail how to best communicate monitoring information to external users.

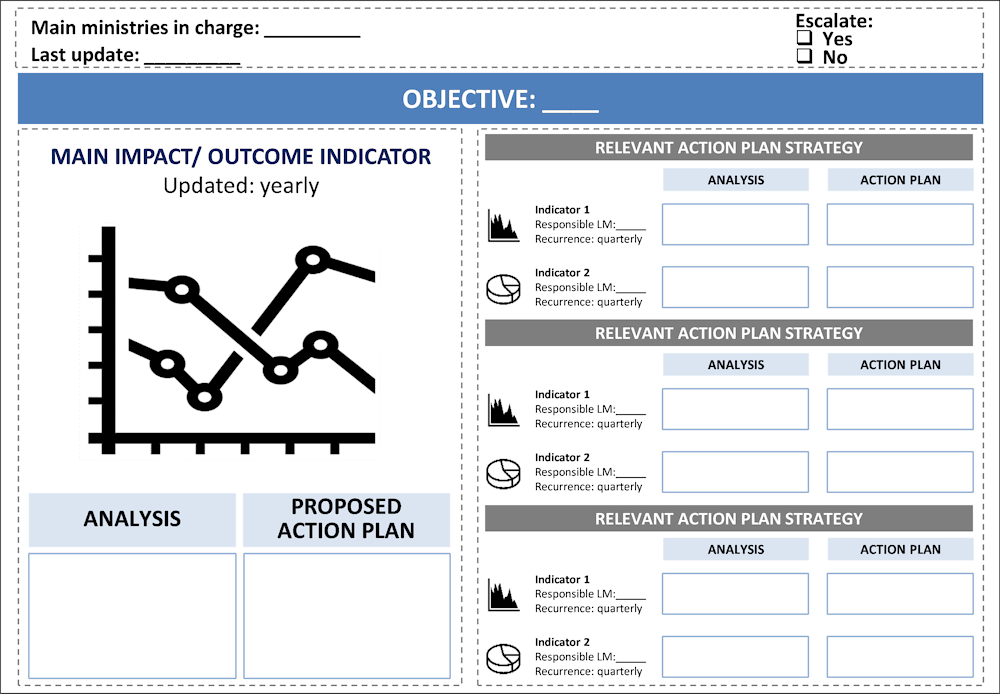

While the performance dialogue and the joint review are exercises aimed at an internal audience, they still need to present monitoring evidence in a manner that supports decision-making (i.e. by focusing on strategic implementation challenges, explaining them through analysis and proposing solutions). A robust monitoring dashboard could specify the department or agency in charge of updating it, the frequency at which the relevant indicator(s) should be updated, and the analysis and action plan (or proposed solutions) where implementation gaps are identified. Finally, the dashboard could indicate whether or not the issue must be brought to a higher level of decision-making (see Figure 3.3 for the proposed performance dialogue process). Figure 3.5 showcases an example of such a dashboard.

Figure 3.5. Creating user-friendly dashboards to monitor the SP and the SDP

Source: Authors.
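The dashboard elements described above (responsible department, update frequency, analysis, proposed solutions and an escalation flag) can be sketched as a single record per indicator. This is a hypothetical illustration only: `DashboardEntry` and its fields are invented names, and the rules for flagging a gap (progress lagging elapsed time by more than a tolerance) and for escalation (a gap with no proposed solution) are assumptions, since the criteria for escalating issues to the centre of government remain to be defined in guidelines.

```python
from dataclasses import dataclass


@dataclass
class DashboardEntry:
    """Hypothetical dashboard row for one indicator in the performance dialogue."""

    indicator: str
    owner: str             # department or agency in charge of updating the entry
    frequency: str         # how often the indicator is updated, e.g. "quarterly"
    baseline: float
    target: float
    latest: float          # most recent observed value
    time_elapsed: float    # share of the period to the target date already elapsed (0 to 1)
    analysis: str = ""
    proposed_solution: str = ""

    def progress(self) -> float:
        """Share of the baseline-to-target distance achieved so far.

        Works for targets above or below the baseline.
        """
        if self.target == self.baseline:
            raise ValueError("target must differ from baseline")
        return (self.latest - self.baseline) / (self.target - self.baseline)

    def has_gap(self, tolerance: float = 0.1) -> bool:
        """Assumed rule: flag a gap when progress lags elapsed time by more than `tolerance`."""
        return self.progress() < self.time_elapsed - tolerance

    def needs_escalation(self) -> bool:
        """Assumed rule: escalate to the centre of government when a gap exists
        and the line ministry has not recorded a proposed solution."""
        return self.has_gap() and not self.proposed_solution


entry = DashboardEntry(
    indicator="Example indicator (lower is better)",
    owner="Hypothetical line ministry",
    frequency="quarterly",
    baseline=20.0,
    target=10.0,
    latest=18.0,
    time_elapsed=0.5,
)
print(entry.has_gap(), entry.needs_escalation())  # True True
```

Here only 20% of the distance to the target has been covered at the halfway point, so the entry is flagged; once the line ministry records a proposed solution, the assumed rule would keep the issue at ministry level instead of escalating it.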

More generally, the information included in the dashboards should be analysed and presented in a way that is (OECD, 2018[22]):

User-friendly, in that the information is provided using a simple structure and language, with visual information;

Focused, in that the performance information provided is limited to the most important aspects, such as objectives, targets and activities, and is linked to the achievement of results (outcome/impacts). It is therefore important that reports on the implementation of the action plan include both strategic (outcome/impact) and operational (process/output) information, even if the main object of the meeting is the monitoring of the action plan.

Relevant, in that only the points requiring decisions or encountering implementation bottlenecks should be discussed, in order to avoid overloading the reader with non-critical information. Only information that helps decision-makers manage the implementation of the strategy and key policy decisions should be provided. Where possible, the reports should include explanations about poor outcomes and identify steps taken or planned to resolve problems.

Quality monitoring: quality assurance and quality control

Quality means credible, timely and relevant data and analysis. There are three main ways to ensure the quality of data and analysis: ensuring capacity, developing quality assurance and conducting quality control.

The state public administration needs to upgrade its capacity to monitor the Plan

Establishing a monitoring set-up that can produce credible, timely and relevant data and analysis requires skills and capacities, which can be defined as “the totality of the strengths and resources available within the machinery of government. It refers to the organisational, structural and technical systems, as well as individual competencies that create and implement policies in response to the needs of the public, consistent with political direction” (OECD, 2008[23]). In particular, two types of skills are crucial:

Analytical skills to identify indicators, review and analyse data and formulate judgements or conclusions (Zall, Ray and Rist, 2004[13]).

Communication skills to structure monitoring reports and dashboards in a way that is attractive to the end user (OECD, 2018[22]).

In order to have the necessary skills and capacities, monitoring set-ups require a critical mass of technically trained staff and managers, such as staff trained in data analytics, data science and communication, and with at least basic information technology skills (Zall, Ray and Rist, 2004[13]). All of these elements rest on the availability of dedicated human and financial resources for the monitoring function. In a number of relevant cases, these resources have been organised as “delivery units” or “policy implementation units”, often located at the centre of government. Currently, however, the state public administration does not have such resources for monitoring the Strategic Plan.

A first step in strengthening the capacities of the state public administration may be the creation of dedicated reporting units in each line ministry, which would be in charge of collecting and analysing the data for the performance dialogue. Moreover, the centre of government may wish to strengthen its analysis and communication skills through a dedicated delivery unit, which would provide methodological support and direction to line ministries in preparing impactful monitoring reports.

Quality assurance mechanisms could be developed alongside current quality control

Currently, the data collected for the monitoring report are audited by a private accounting firm. This form of quality control (i.e. looking at the end product) provides assurance that the data used for monitoring are reliable and valid, that is to say that the data collection system is consistent across time and space (reliability), and that it measures actual and intended performance levels (validity).

However, quality assurance (looking at the process) is also important. Some countries have developed mechanisms to ensure that monitoring is properly conducted, that is to say that the process of collecting and analysing data respects certain quality criteria. To do so, countries have developed guidelines, which serve to impose a certain uniformity in this process (Zall, Ray and Rist, 2004[13]). Box 3.8 explores in more detail the difference between quality assurance and quality control.

Box 3.8. Quality assurance and quality control in monitoring

Firstly, countries have developed quality assurance mechanisms to ensure that monitoring is properly conducted, that is to say that the process of monitoring a policy respects certain quality criteria. In order to do so, countries have developed quality standards for monitoring. These standards and guidelines serve to impose a certain uniformity in the monitoring process across government (Picciotto, 2007[24]). While some governments may choose to create one standard, others may consider it more appropriate to adopt different approaches depending on the different purposes of data use (van Ooijen, Ubaldi and Welby, 2019[25]). Data cleaning activities or the automating of data collection processes can also be considered quality assurance mechanisms. Some countries have invested in the use of artificial intelligence and machine learning to help identify data that deviate from established levels of quality (van Ooijen, Ubaldi and Welby, 2019[25]).

Secondly, quality control mechanisms have also been developed in various countries. These mechanisms ensure that data collection and analysis have been properly conducted to meet pre-determined quality criteria. While quality assurance mechanisms seek to ensure credibility in how monitoring is conducted (the process), quality control tools ensure that the end product of monitoring (the performance data) meets a certain quality standard. Both are key to ensuring the robustness of a monitoring system (HM Treasury, 2011[78]). Quality control mechanisms can take the form of audits, as in Nuevo León. Approaches that communicate performance data or make them available for public scrutiny can also contribute to quality control, in that multiple eyes examine the data and can potentially confirm their quality (van Ooijen, Ubaldi and Welby, 2019[25]).

In Nuevo León, two types of guidelines may be of use. The state public administration could issue guidelines in order to clarify the working methods and tools that will support the performance dialogue to be conducted. In particular, these guidelines could specify quality assurance processes in order to strengthen the quality of the data that are collected in the context of this monitoring exercise, to be applied by every line ministry. Similarly, these guidelines may seek to clarify the criteria for escalating issues from the line ministry level to the CoG level, in order to harmonise this process across government.

Promoting the use of monitoring results

Measuring results in terms of performance and delivery is the main purpose of building a monitoring set-up. In other words, producing monitoring results serves no purpose if this information does not reach the appropriate users in a timely fashion, so that the performance feedback can be used to improve public decision-making, accountability and citizen information.

Communicating results to citizens

Keeping the annual monitoring report public and making data available on the Avanza NL platforms is important to maintain public accountability and engage with citizens

Greater publicity of monitoring results can increase the pressure on decision-makers for implementation and for a more systematic follow-up of recommendations, while providing accountability to citizens concerning the impact of public policies and the use of public funds.

The monitoring report for the Strategic Plan is made public every year on the council’s online platform (www.conl.mx/evaluacion). Similarly, the government report on the State Development Plan can be found on the website of the government of Nuevo León, which constitutes a first step in providing information to the public. However, the current monitoring report is not user-friendly due to its length. The report includes an executive summary, which is a useful tool for disseminating key messages to citizens. Nevertheless, the executive summary is over ten pages long, separates the main findings from their associated recommendations and does not describe the major issues encountered in implementing the Plan. A shorter and punchier executive summary may be more effective in reaching stakeholders and the general public alike. Moreover, unlike the annual “Informe de gobierno” (government report), the monitoring report is not very visual.

Overall, the format and timeline of the communication report on the progress of the Strategic Plan could be revised to better fit its primary intended use: reaching a wider audience and informing a variety of stakeholders about progress in implementing the Plan, based on tangible indicators, ideally outcome indicators. To that end, the centre of government could choose to produce a communication report on the Strategic Plan, updated at mid-term and at the end of every gubernatorial mandate, i.e. every three years. This report would replace the current monitoring/evaluation report produced by the council and would be designed primarily as a communication tool, with a clear summary accompanied by clear messages for the press.

The council has also created an online platform, currently under construction, that seeks to provide up-to-date information on the evaluation of the Strategic Plan’s indicators. To ensure timely updates with minimal extra resources, the platform could be updated directly by the state public administration. It could focus on the indicators of the Strategic Plan and the State Development Plan, so as to communicate more clearly the objectives that concern and affect citizens. Importantly, the indicators defined for the State Development Plan should be aligned with those of the Strategic Plan and reflect the underlying theory of change. This alignment could be displayed on platforms similar to those created by other Mexican states, such as MIDE Jalisco (see Box 3.9). Avanza NL should therefore remain as simple to use and as up-to-date as possible.

Box 3.9. MIDE Jalisco: Good practice of transparent reporting of monitoring information

MIDE Jalisco is the comprehensive monitoring strategy of the state of Jalisco, operated by its Planning, Administration and Finances Secretariat and involving 35 state executive agencies and entities. It facilitates dynamic and periodic monitoring of the quantitative indicators pertaining to the goals of the state’s Governance and Development Plan. This monitoring mechanism contains 27 long-term impact-level indicators on the development of the state, 133 mid-term indicators measuring direct impact on the population, as well as 194 indicators that capture short and mid-term information on the implementation of programmes and policies. MIDE Jalisco has data dating back to 2006 for most of its indicators.

In addition to being a well-coordinated, inter-institutional and centralised platform for monitoring Jalisco’s strategic planning instrument, MIDE Jalisco is a good practice for communicating monitoring results to stakeholders effectively. MIDE Jalisco is hosted on an online platform where members of academia, the press, CSOs and the public can access the indicators as open data. Furthermore, since the indicators are updated at least once a month (and more frequently if the data source allows), stakeholders can access monitoring results in real or near-real time. The system thus fosters citizen participation and accountability. Participation is further facilitated by MIDE Jalisco’s Citizen Council, through which public servants, experts and citizens collaborate to improve indicator selection and target setting.

The state public administration and the council could consider developing communication strategies in order to increase public awareness and engagement in the Plan

Besides the communication report and the Avanza NL platform, the council and the state public administration could develop other communication tools to reach a wide audience. These could include social media strategies, newsletters focusing on council actions and editorials in local newspapers (World Bank, 2017[68]), building on the council’s existing newsletter, social media accounts and editorial column in the local outlet Verificado. To this end, it could be useful for the council to allocate a portion of its yearly budget to communication.

Another way to increase public engagement in the Plan and its monitoring would be to collect periodic feedback on the Strategic Plan and its implementation through a survey of the general population, business leaders and civil society organisations. This survey could be conducted in collaboration with INEGI, which has experience conducting opinion surveys on similar topics through the National Survey on Government Quality and Impact (INEGI, 2019[19]). Providing incentives to some NGOs could also help ensure that the results are published.

Ensuring the uptake of monitoring results in decision-making

Increasing the impact of monitoring results will require the development of a performance narrative focused on addressing inconsistencies in implementation

While fit-for-purpose monitoring analysis is important, as it gives users quick and easy access to clear results, it does not systematically translate into greater uptake of those results in decision-making. It is therefore essential that monitoring evidence be presented in a way that is compelling to its audience. Monitoring dashboards should include a performance narrative that interprets the results and uses them (The World Bank, 2017[12]) to understand implementation gaps and propose corrective policy action. Building such a narrative may require filtering the relevant data and focusing on the most pressing bottlenecks or the reforms with the greatest potential impact. Key messages, takeaways and suggested courses of action should accompany any raw data (i.e. indicators) (Zall, Ray and Rist, 2004[13]).

Increasing stakeholder engagement in the development of the Plan’s indicators matters for monitoring and implementation

Moreover, involving stakeholders in the design of the monitoring set-up can increase the legitimacy of the resulting evidence and ultimately lead to greater impact on decision-making. Cross-country evidence shows that creating ownership and managing change in both the public and private sectors is important when implementing such an ambitious transformation programme (The World Bank, 2017[12]). Involving internal and external stakeholders in the definition of indicators may also improve their quality, as stakeholders are sometimes better placed to identify which dimensions of change deserve the most attention (DG NEAR, 2016[15]), in other words which output indicators best measure progress along the causal chain towards outcomes and impact.

The council is itself designed to involve stakeholders. Yet its oversight of the definition of the Plan’s indicators could have been stronger6. Revising the Plan could be an opportunity for the council to revise the indicators in order to create buy-in among implementers, both within and outside the administration (through the composition of the council itself). Similarly, the state public administration (each ministry or entity) could consider asking the council for advice when selecting indicators for the implementation action plan, to ensure a common vision of how to operationalise the Strategic Plan and the State Development Plan.

Monitoring for results implies creating feedback loops to institutionalise the use of monitoring results

Formal organisations and institutional mechanisms constitute a sound foundation for use of evidence in policy and decision-making (Results for America, 2017[18]). Mechanisms that enable the creation of feedback loops between monitoring and implementation of policies can be incorporated either:

in the monitoring process itself, such as through the performance cycle (whereby performance evidence is discussed either at the level of the individual line ministry or at the CoG);

through the incorporation of performance findings into other processes, for instance the policy-making cycle, the annual performance assessment of senior public sector executives, the budget cycle or discussions in congress.

As discussed in the section clarifying the monitoring set-up, a performance dialogue could allow practitioners and decision-makers to use monitoring evidence to identify implementation issues and constraints, and to adapt their efforts and resources to address them. In particular, linking strategic objectives with individual performance objectives is key to creating incentives for results in the state public administration, especially for senior public sector executives and leadership. Government officials such as heads of agencies or departments need to take part in this dialogue and to ensure that their organisations contribute to high-priority cross-government outcomes such as those of the Strategic Plan. In Chile, for example, both collective and individual incentives have been used to promote public sector performance in line with strategic objectives (see Box 3.10 for a more detailed explanation of this system).

Box 3.10. The monitoring systems and accompanying incentives for performance in Chile

The Chilean monitoring system has three main actors: the Ministry of Finance, the Ministry of Social Development and the line ministries. It comprises four sub-systems:

The H form (Formulario H): a document that accompanies the Budget Bill and comprises performance indicators that include qualitative information on public goods and services. This is under the responsibility of the Ministry of Finance.

Programme monitoring with the objective of following up on programme execution and measuring progress against targets, under the responsibility of the Ministry of Finance.

Social programmes monitoring, under the responsibility of the Ministry of Social Development.

Internal management indicators (Indicadores de Gestión Interna) that focus on internal processes and procedures.

In parallel, there are three main mechanisms to create incentives for performance:

The Management Improvement Programme (Programa de Mejoramiento de la Gestión) that grants bonuses to public servants who reach specific targets.

Collective performance agreements (Convenios de desempeño colectivo) that encourage teamwork within work units towards annual institutional targets.

Individual performance agreements (Convenios de desempeño individual) that set strategic management targets for every civil servant.

Source: (Irarrazaval and Ríos, 2014[29])

Monitoring results can also be used for evaluations, to support strategic and long-term planning by providing baseline information, and to communicate with the public (see discussion above). Moreover, monitoring information produced through the performance dialogue could feed into the budget cycle. Since the performance dialogue should be linked to the monitoring of budget programmes (see the previous section on clarifying the monitoring set-up and on how these are linked through the action plan), the evidence it produces could inform the congress about the efficiency and effectiveness of budgetary spending through spending reviews. According to data from the budgeting and public expenditures survey (OECD, 2007[30]), spending reviews are widely used in OECD countries as part of the budget cycle, and they can be informed by monitoring data (Robinson, 2014[31]) (Smismans, 2015[32]) (The World Bank, 2017[12]). Finally, monitoring evidence may be used in the budget cycle through performance budgeting practices, as can be seen in Box 3.11.

Box 3.11. Main models of performance budgeting

The OECD has identified four main models of performance budgeting, which reflect the different strengths of the links between performance evidence and budgeting:

presentational, where the outputs, outcomes and performance indicators are presented separately from the main budget document.

performance-informed, which includes performance metrics within the budget document and involves restructuring the budget document on the basis of programmes. This is the form of performance budgeting that many OECD countries have adopted.

managerial performance budgeting is a variant of performance-informed budgeting. It focuses on managerial impacts and changes in organisational behaviour, achieved through combined use of budget and related performance information.

direct performance budgeting establishes a direct link between results and resources, usually implying contractual type mechanisms that directly link budget allocations to the achievement of results.

Source: (OECD, 2018[33])

Recommendations

To clarify the monitoring set-up to better support the delivery of the plans:

Adopt a comprehensive definition of monitoring to establish a shared understanding of its objectives and modalities within the public sector. Such a definition could read as follows:

Monitoring is a routine process of collecting and analysing data to identify gaps in the implementation of a public intervention. The objectives of monitoring are to facilitate planning and operational decision-making by providing evidence to measure performance and help raise specific questions to identify implementation delays and bottlenecks. It can also strengthen accountability and public information, as information regarding the use of resources, efficiency of internal management processes and outputs of policy initiatives is measured and publicised.

Clarify the roles of the key actors, including the council and the state public administration.

This requires updating the Strategic Planning Law and its regulation, and giving an explicit legal mandate to each of the actors, to clarify their respective responsibilities: collecting data, analysing data, using data, coordinating and promoting monitoring, designing guidelines for monitoring, etc.

Update the internal regulations of the secretariats and their entities in order to clarify their role in monitoring the Plans.

Clarify, for example through guidelines, the different monitoring set-ups – including the actors involved, the timeline, the methodology and tools for monitoring, for each type of main objective pursued by monitoring: