The artificial intelligence (AI) landscape has evolved significantly since 1950 when Alan Turing first posed the question of whether machines can think. Today, AI is transforming societies and economies. It promises to generate productivity gains, improve well-being and help address global challenges, such as climate change, resource scarcity and health crises. Yet, the global adoption of AI raises questions related to trust, fairness, privacy, safety and accountability, among others. Advanced AI is prompting reflection on the future of work, leisure and society. This chapter examines current and expected AI technological developments, reflects on the opportunities and risks foresight experts anticipate, and provides a snapshot of how countries are implementing the OECD AI Principles. In so doing, it helps build a shared understanding of key opportunities and risks to ensure AI is trustworthy and used to benefit humanity and the planet.

OECD Digital Economy Outlook 2024 (Volume 1)

Chapter 2. The future of artificial intelligence

Copy link to Chapter 2. The future of artificial intelligenceAbstract

Source: OECD.AI (accessed on 10 March 2024).

Key findings

Copy link to Key findingsTechnical breakthroughs have enabled generative AI that is so advanced users may be unable to distinguish between human and AI-generated content

In late 2022, generative AI advances took many by surprise, despite some researchers anticipating such developments. Collaboration and interdisciplinarity between policy makers, AI developers and researchers are key to helping keep pace with AI progress and close knowledge gaps.

Research and expert opinions suggest that future impacts of AI could vary widely, promising considerable socio-economic benefits but also presenting substantial risks that need addressing

The future of AI may yield tremendous benefits, including enhanced productivity gains, accelerating scientific progress and helping address climate change. However, AI advances also present critical risks, including spreading mis- and dis-information, and threats to human rights.

The long-term trajectories and risks of AI are often discussed and widely debated

Most AI today can be considered “narrow” (designed to perform a specific task), but some experts argue that foundation models are an early form of more “general” AI. This includes progress towards artificial general intelligence (AGI) – a controversial concept that can be described as machines with human-level or greater intelligence across a broad spectrum of contexts.

Some experts argue that challenges in ensuring alignment of machine outputs with human preferences could result in humans losing control of AGI. However, the plausibility and potential nature of AGI are disputed. Many future risks do not require AGI, leading others to argue that focusing on hypothetical AGI distracts from near-term risks.

AI research and development and venture capital (VC) investments are poised to increase

Since mid-2019, the People’s Republic of China (hereafter “China”) published more AI research than the United States or the European Union. India is also making strides, more than doubling its AI research publications since 2015.

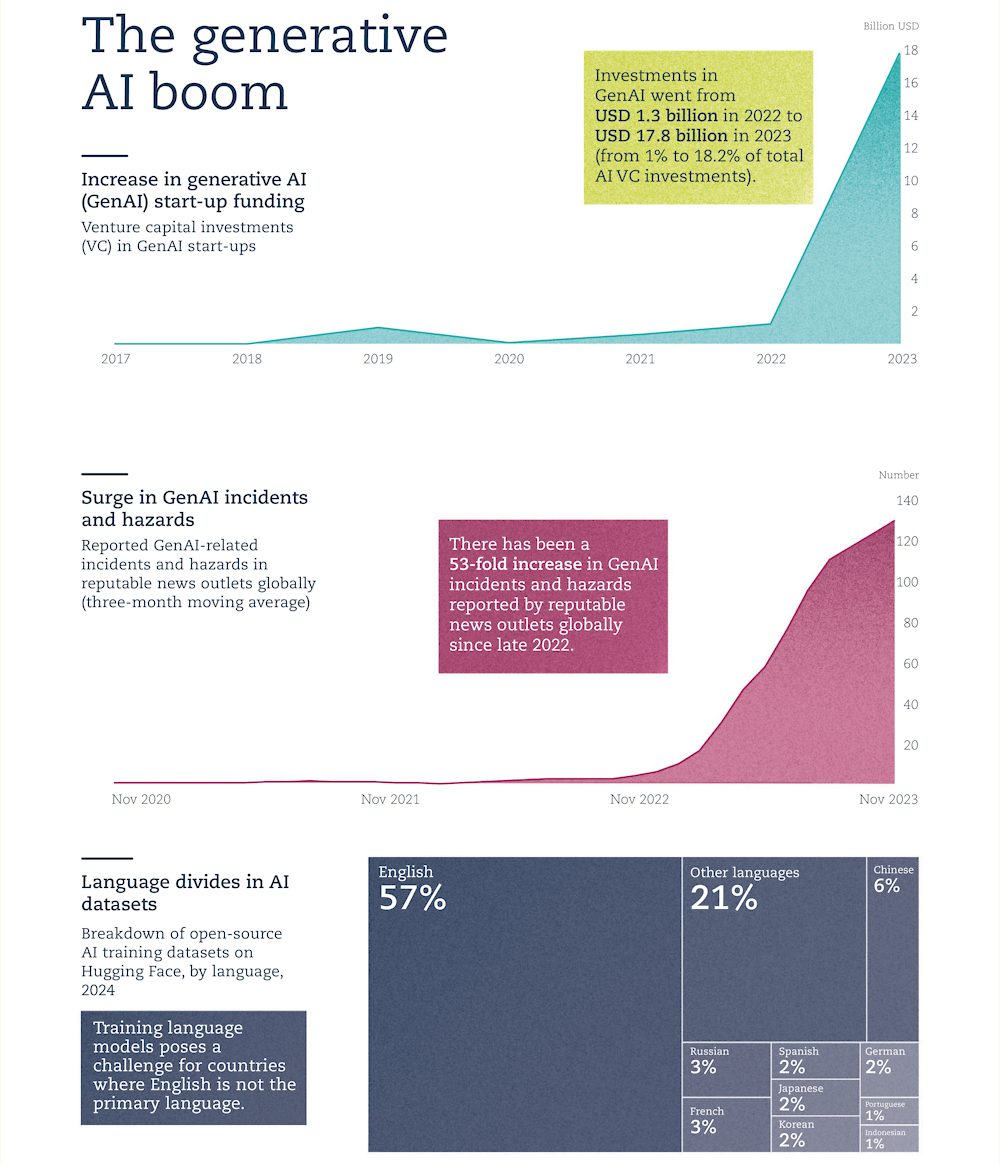

Between 2015 and 2023, global VC investments in AI start-ups tripled (from USD 31 billion to USD 98 billion), with investments in generative AI specifically growing from 1% of total AI VC investments in 2022 (USD 1.3 billion) to 18.2% (USD 17.8 billion) in 2023, despite cooling capital markets.

AI development and use is expected to continue to depend on access to computing infrastructure

Compute divides may worsen between and within countries. Increasingly, within countries, public sector entities lack the computing resources to train advanced AI.

AI benefits and risks have global reach, making international co-operation critical to ensure AI policies and laws are complementary, effective and interoperable

AI systems around the world can use the same underlying AI inputs and tools, such as AI algorithms, models and training datasets. This makes countries and organisations vulnerable to similar risks like bias, human rights infringements, and security vulnerabilities or failures.

Artificial intelligence (AI) is transforming economies and societies, but is the world ready? AI promises to generate productivity gains, improve well-being and help address global challenges, such as climate change, resource scarcity and health crises. Yet, as AI is adopted around the world, its use raises questions and challenges. AI has advanced significantly, prompting reflection on the future of work, education, leisure and society. This chapter looks to potential futures to help build an understanding of key opportunities and risks in ensuring AI is trustworthy and used for good.

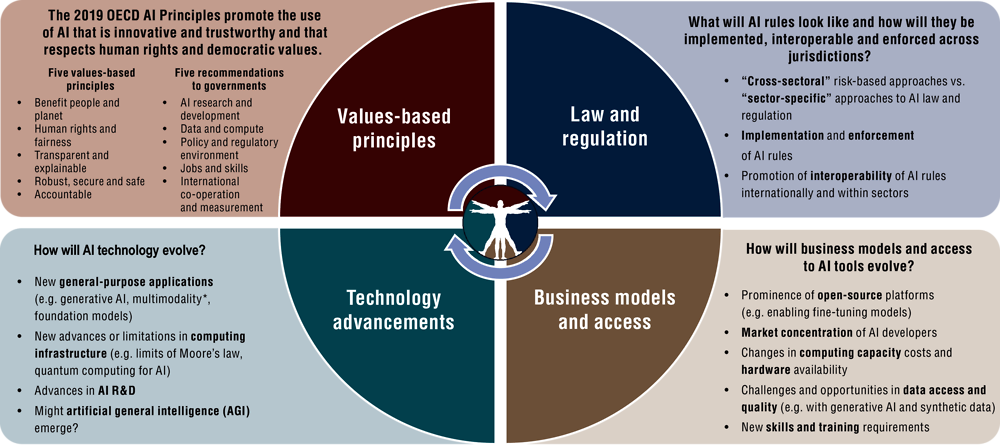

This chapter examines current and expected AI technological developments, reflects on the opportunities and risks foresight experts anticipate may lie ahead, and provides a snapshot of how countries are implementing the OECD AI Principles. It examines the interrelated factors that will likely shape AI governance in the decades to come (Figure 2.1), to help build a shared understanding of key opportunities and risks to ensure AI is trustworthy and used to benefit humanity and the planet.

Figure 2.1. Examples of interrelated factors that will likely shape AI governance in future decades

Copy link to Figure 2.1. Examples of interrelated factors that will likely shape AI governance in future decades

Note: *Multimodal AI combines multiple types of data – such as data from text, image or audio – via machine-learning models and algorithms. It is key for AI research and applications such as in manufacturing and robotics (The Alan Turing Institute, 2023[1]).

Source: Adapted from Figure 1.S.2. of the Spotlight “Next generation wireless networks and the connectivity ecosystem” in this volume.

The AI technological landscape today and tomorrow

Copy link to The AI technological landscape today and tomorrowThe AI technological landscape has evolved significantly from the 1950s when British mathematician Alan Turing first posed the question of whether machines can think (Turing, 2007[2]). Coined as a term in 1956, AI has evolved from symbolic AI where humans built logic-based systems, through the AI “winter” of the 1970s, to the chess-playing computer Deep Blue in the 1990s. The 21st century saw breakthroughs in the branch of AI called machine learning that improved the ability of machines to make predictions from historical data (OECD, 2019[3]). Recent years have witnessed the emergence of generative AI, including large language models, that can generate novel content and enable consumer-facing applications like advanced chatbots at people’s fingertips (OECD, 2023[4]). For many, AI became “real” in 2022 – the year that OpenAI’s ChatGPT became the fastest-growing consumer application in history (Hu, 2023[5]). To understand the evolution of AI technological developments to date, and how they might develop in future years, policy makers need a shared understanding of key AI terms (Box 2.1), as well as of the basic AI production function, described by three enablers: algorithms, data and computing resources (“compute”).

Advances in neural networks and deep learning are resulting in larger, more advanced and more compute-intensive AI models and systems

Copy link to Advances in neural networks and deep learning are resulting in larger, more advanced and more compute-intensive AI models and systemsThe application of machine-learning techniques, the availability of large datasets, and faster and more powerful computing hardware have converged. Together, they are dramatically increasing the capabilities, impact and availability of AI models and systems, moving from academic discussions into remarkable real-world applications. Inspired by the human brain, neural networks are made up of layers of “neurons”, known as “nodes”, that process inputs with weights and biases to give specific outputs (Russell and Norvig, 2016[11]). A subset of algorithms in the area of neural networks – called deep neural networks (in the field of study and set of techniques called deep learning) – allows machine-based systems to “learn” from examples to make predictions or “inferences”, based on the large amount of data processed during their training phase. Deep neural networks are distinct mostly in that they are general and require little adaptation (or “cleaning”) of input data to make accurate predictions.

In 2017, a group of researchers introduced a type of neural network architecture called “transformers”. It became a key conceptual breakthrough, unleashing major progress in AI language models. Transformers learn to detect how data elements – such as the words in this sentence – influence and depend on each other (Vaswani et al., 2023[12]). Unlike previous neural networks, transformers can process inputs from a sequence, such as words of text, in parallel. This enabled AI developers to design larger-scale language models with significantly more parameters – the numerical weights that define the model – and greater efficiency (OECD, 2023[4]; Vaswani et al., 2023[12]). Transformers have unlocked significant advances across language recognition (such as chatbots) and science (such as protein folding). Vision transformers are also becoming popular for various computer vision tasks (Islam, 2022[13]). Notable transformer models include AlphaFold2 (DeepMind), GPT-4 (OpenAI), LaMDA (Google) and BLOOM (Hugging Face) (Collins and Ghahramani, 2021[14]; Hugging Face, 2022[15]; Merritt, 2022[16]).

Box 2.1. “A” is for artificial intelligence

Copy link to Box 2.1. “A” is for artificial intelligenceAI has received notable attention in the media and in policy circles. Amid the flurry of headlines and analysis, policy makers require a shared understanding of key AI terms to help keep up with rapid AI advancements, and to respond with AI policies that can stand the test of time. This chapter uses the following terms:

Artificial intelligence: “An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment” (OECD, 2024[6]).

Algorithm: “[A] set of step-by-step instructions to solve a problem (e.g. not including data). [It] can be abstract and implemented in different programming languages and software libraries” (EU-US Trade and Technology Council, 2023[7]).

AI compute: “AI computing resources (‘AI compute’) include one or more stacks of hardware and software used to support specialised AI workloads and applications in an efficient manner” (OECD, 2023[8]).

Foundation model: A model that is trained on large amounts of data – generally using self-supervision at scale – that can be adapted (e.g. fine-tuned) to a wide range of downstream tasks. Examples include BERT (Google) and GPT-3 (OpenAI) (Bommasani et al., 2021[9]).

Generative AI: AI systems capable of creating new content – including text, image, audio and video – based on their training data, and usually in response to prompts. The recent growth and media coverage of generative AI, notably in the areas of text and image generation, has spotlighted AI’s capabilities, leading to significant public, academic and political discussion (Lorenz, Perset and Berryhill, 2023[10]).

AI language model: A model that can process, analyse or generate natural language text trained on vast amounts of data, using techniques ranging from rule-based approaches to statistical models and deep learning. Chatbots, machine translation and virtual assistants that recognise speech are all applications of language models. Not all language models are generative in nature, and they can be large or small (OECD, 2023[4]).

Machine learning: “[A] branch of artificial intelligence (AI) and computer science which focuses on development of systems that are able to learn and adapt without following explicit instructions imitating the way that humans learn, gradually improving its accuracy, by using algorithms and statistical models to analyse and draw inferences from patterns in data” (EU-US Trade and Technology Council, 2023[7]). The “learning” process using machine-learning techniques is known as “training”. A machine-learning technique called “neural networks”, and its further subset technique called “deep learning”, has enabled leaps in technological AI developments (OECD, 2019[3]).

Multimodal AI: AI that combines multiple types of data – such as data from text, image or audio – such as through machine-learning models and algorithms. Multimodal AI is key for AI research and applications such as in manufacturing and robotics (The Alan Turing Institute, 2023[1]).

Notes: This is an inexhaustive list of terms selected for this chapter. The EU-US Trade and Technology Council released a second edition of Terminology and Taxonomy for Artificial Intelligence in April 2024. For further information, please visit: https://digital-strategy.ec.europa.eu/en/library/eu-us-terminology-and-taxonomy-artificial-intelligence-second-edition.

Researchers are exploring whether transformers can create “generalist” and “multimodal” AI systems that can perform multiple of different tasks across robotics, computer vision, simulated environments, natural language and more. For example, Google DeepMind’s Gato (2022) can perform tasks such as stacking blocks with a robot arm, playing video games, captioning images and chatting with users. Unlike previous AI models that could only learn one task at a time, some new transformer models can learn multiple different tasks simultaneously, enabling them to switch between tasks and learn new skills without forgetting previous skills (Heikkilä, 2022[17]; Reed et al., 2022[18]). However, AI models and systems today still exhibit factual inaccuracy; “hallucinations” (making up facts in a credible way, often when a correct answer is not found in the training data); inconsistency; and misunderstanding when used in new contexts, often requiring human assistance and oversight for correct functioning. Some experts argue that advanced AI systems and models represent a step towards more capable and general forms of AI. Some even claim progress towards the hypothetical advent of artificial general intelligence (AGI) that would usher in significant benefits and risks. Such discussions are actively debated in the AI expert community (Box 2.2).

Box 2.2. Increasingly general AI systems

Copy link to Box 2.2. Increasingly general AI systemsSome experts believe the latest large language and other generative AI models can apply outputs in a general way across many contexts. However, the extent to which these models can generalise versus simply mimicking information from their datasets is actively debated.

Since their origin in the 1950s, AI systems have been “narrow” and context-specific. However, due to recent advances, some experts argue that state-of-the-art “foundation models” are moving to capabilities that are more general in nature.

Foundation models are trained on large amounts of data and can be adapted and built upon for a wide range of downstream tasks. Some experts argue that such models represent a significant step towards the hypothetical advent of artificial general intelligence (AGI), the timeline, definition and premise of which is intensely debated. AGI is a controversial concept that can be described as machines with human-level or greater intelligence across a broad spectrum of domains and contexts.

Many experts argue that a focus on speculative notions of AGI obfuscates potentially significant benefits and risks posed by existing and near- to medium-term AI systems. To move away from this debate, some AI experts have begun using the phrase artificial capable intelligence (ACI). This is meant to describe potentially transformative AI systems that require minimal human supervision but that may not achieve the hypothesised state of AGI.

Researchers at DeepMind (Google) have proposed a framework for classifying progress in capabilities based on AI systems’ levels of performance, generality and autonomy. They aim to provide a common language for comparing AI models, assessing risks and measuring progress in AI advancements.

AGI is the focus of active debates and media reporting. While an increasing number of experts from a wide array of disciplines are engaging in the debate around achieving more general AI systems, their views, forecasts and understanding of key related terms vary greatly.

Foundation models are enabling increasing AI generality across application domains, industries and tasks

Copy link to Foundation models are enabling increasing AI generality across application domains, industries and tasksRecent AI breakthroughs have shifted capabilities from task-specific models and systems to those that are more flexible and applicable across domains, industries and tasks. These advances centre on “foundation models” – models trained on large amounts of data that can be adapted to a wide range of downstream tasks such as OpenAI’s Generative Pretrained Transformers (GPT) series (Bommasani et al., 2021[9]; Jones, 2023[23]; Lorenz, Perset and Berryhill, 2023[10]). Foundation models can be further trained or “fine-tuned”, for example, with specific data to gain insights relevant to a specific sector or adapted to a wide range of distinct tasks (Bommasani et al., 2021[9]).

Today’s foundation models share several common characteristics. First, they often include billions of parameters, making them very large in size. Second, their outputs can be difficult to explain due to their complex computational processes. This has resulted in impressive performance and outputs, even beyond their developers’ expectations. However, the model’s steps or process to arrive at an output often cannot be explained. Third, training today’s foundation models requires massive amounts of AI compute, data and specialised AI talent, making them expensive to develop (Dunlop, Moës and Küspert, 2023[24]). However, as the technology, hardware/software and training methods advance, it is unclear whether future foundation models will require similarly large datasets and compute, and retain such large numbers of parameters.

Many have called the recent emergence of foundation models a major “paradigm shift” and technological advancement. Enabled by foundation models, some AI models can transfer capabilities from one domain to another. For example, they can combine multiple types of data across domains (e.g. image, text, audio). Foundation models, especially those available as open-source, can also allow AI developers and researchers with limited resources to fine-tune and deploy AI in their specific domains. Using foundation models, developers do not necessarily require access to advanced compute hardware and large datasets, or need to incur significant training costs to train the foundation model to begin with.

Foundation models will likely continue to drive advancement of AI capabilities, unlocking promising opportunities for innovation and productivity gains across domains and sectors. However, they also raise several challenges. A reliance on foundation models – for example by smaller and less well-capitalised downstream users like start-ups – could create dependency dynamics between the users and producers of such models. The cost and complexity of foundation models have limited their development to well-capitalised firms, such as models like GPT-4 (OpenAI/Microsoft), BERT (Google) or LlaMA 2 (Meta), or to organisations and governments that can afford to fund their training.

It is often difficult and expensive to understand what foundation model training datasets contain, and verifying the validity of outcomes sometimes requires specialised expertise. Moreover, foundation model developers may not disclose key information about such models, for a variety of business or technical reasons. Distribution mechanisms by developers – such as application programming interfaces (APIs) – can mask certain model properties. This makes it difficult to assess how models align with certain principles, or to use models in downstream contexts in ways that promote transparency, explainability and accountability (Dunlop, Moës and Küspert, 2023[24]).

Such issues illustrate the possible increasing imbalance between those who can perform the most resource-intensive first steps of building such models and those who rely on pretrained models (i.e. foundation models). Access to the most advanced AI models could be limited by those who own them. This would pose challenges for policy makers and governments as they seek to create a level playing field that allows smaller and less-resourced groups to innovate (OECD, 2023[25]). Policy challenges could include questions around access to tools for innovation and market dynamics concerning competition.

AI development and use is expected to continue to depend on access to computing infrastructure

Copy link to AI development and use is expected to continue to depend on access to computing infrastructureComputing infrastructure (“AI compute”) is a key component needed for AI development and expected to be a continued driver of AI’s improved capabilities over time. Unlike other AI inputs like data or algorithms, AI compute is grounded in “stacks” (layers) of physical infrastructure and hardware, along with software specialised for AI (OECD, 2023[8]). Advancements in AI compute have enabled a transition from general-purpose processors, such as Central Processing Units (CPUs), to specialised hardware requiring less energy for more computations per unit of time. Today, advanced AI is predominantly trained on specialised hardware optimised for certain types of operations, such as Graphics Processing Units (GPUs), Tensor Processing Units, Neural Processing Units and others. Training AI on general-purpose hardware is less efficient (OECD, 2023[8]). The high volume of AI-focused computing infrastructure has also enabled AI advances, in addition to technological advances in AI hardware itself.

The demand for AI compute has grown dramatically, especially for deep learning neural networks (OECD, 2023[8]). Securing specialised hardware purpose-built for AI can be challenging due to complex supply chains, as illustrated by bottlenecks in the semiconductor industry (Khan, Mann and Peterson, 2021[26]). Integrated circuits or computer chips made of semiconductors are a critical input for AI compute. They are called the “brains of modern electronic equipment, storing information and performing the logic operations that enable devices such as smartphones, computers and servers to operate” (OECD, 2019[27]). Any electronic device can have multiple integrated circuits fulfilling specific functions, such as CPUs or chips designed for power management, memory, graphics (e.g. GPUs used for AI). Demands on semiconductor supply chains have grown in recent years, especially as digital and AI-enabled technologies become more commonplace, such as Internet of Things devices, smart energy grids and autonomous vehicles. The semiconductor supply chain is also highly concentrated, making it more vulnerable to shocks (OECD, 2019[27]).

Training and inference related to advanced AI requires significant compute resources, leading to environmental impacts: energy and water use, carbon emissions, e-waste and natural resource extraction like rare mineral mining. Experts have raised concerns around the direct environmental impacts of AI (i.e. those created from training or inference). This is especially relevant as generative AI becomes more accessible, with applications like chatbots increasing demand for server time and AI inference. Some compute providers have given AI-specific estimates, but such standardised measures remain underreported and scarce (OECD, 2022[28]).

AI has advanced significantly thanks to increases in computer speeds and to Moore’s Law (i.e. computer speed and capability are expected to double every two years). However, experts have warned of potentially reaching the limits of such scaling, posing challenges for future leaps in computer performance (OpenAI, 2018[29]; Sevilla et al., 2022[30]). Researchers are looking at new ways to enable continued improvements. These include more powerful processors; faster data transmission; larger memory and storage; next-generation networks like 6G; and expanded and faster edge and distributed computing devices and quantum computing capabilities. Experts are also researching neuromorphic computing modelled after human cognition. This aims to make processors more efficient using orders of magnitude less power than traditional computing systems (Schuman et al., 2022[31]). Computing methods like optical computing – technology harnessing the unique properties of photons – are also being explored for AI applications (Wu et al., 2023[32]).

The availability of vast amounts of data for training AI has greatly enhanced systems’ capabilities, including their ability to generate realistic content

Copy link to The availability of vast amounts of data for training AI has greatly enhanced systems’ capabilities, including their ability to generate realistic contentAccess to data is crucial for AI, including for training, testing and validation. The demand for data to train AI (“AI input data”) has increased significantly, particularly with the rise of generative AI and large language models. Today, data used to train AI are aggregated from a variety of sources, such as through curated datasets, data-sharing agreements, collecting user data, using existing stored data and “data scraping” of publicly available data from the Internet.

The availability and collection of data raise policy questions. This is especially true when data contain personally identifiable information (i.e. personal data) or protected material, such as that under licence or copyright (e.g. software, text, images, audio or video). The limited availability of digitally readable text for non-English languages could also limit the benefits of AI for linguistic groups, including those using minority languages. Based on open-source AI training datasets available on Hugging Face, English represents more than half of all languages. This points to a potential diversity gap in terms of training dataset languages for AI (OECD.AI, 2024[33]) (Figure 2.2).

Figure 2.2. More than half of open-source AI training datasets are in English

Copy link to Figure 2.2. More than half of open-source AI training datasets are in EnglishPercentage breakdown of languages for open-source AI training datasets on Hugging Face from a list of 225 languages, 2024

Notes: This chart represents the language distribution of all datasets. Multilingual and translation datasets on Hugging Face contain more than one language and are thus double counted. More methodological information available at: https://oecd.ai/huggingface (accessed on 10 March 2024).

Source: OECD.AI (2024[33]), using data from Hugging Face. For more information, please see: https://oecd.ai/en/data?selectedArea=ai-models-and-datasets.

Privacy-enhancing technologies are emerging, including confidential computing methods and federated learning

Copy link to Privacy-enhancing technologies are emerging, including confidential computing methods and federated learningThe use of vast amounts of personal data in some AI training data raises policy questions around the protection of privacy rights. Several techniques are emerging to help preserve privacy when using large datasets (e.g. for machine learning) (OECD, 2023[34]). These “privacy-enhancing technologies” (PETs) can help implement privacy principles such as data minimisation, use limitation and security safeguards. For example, “confidential computing” methods help ensure that companies hosting or accessing data in the cloud or through edge devices cannot view the underlying data without unlocking it with controlled encryption methods (O’Brien, 2020[35]; Mulligan et al., 2021[36]). Another example is “federated learning”, where users can each train a model using data on their own device. The data are then transferred to a central server and combined into an improved model that is shared back with all the users (Zewe, 2022[37]). Researchers are also developing methods of training machine-learning models over encrypted data. For example, in homomorphic encryption, the underlying data remains undisclosed during the entire training process. This strengthens privacy protections, while allowing for data to be used effectively (OECD, 2019[3]).

The potential of PETs to protect confidentiality of personal and non-personal data is recognised and applications are maturing. However, many PETs are still at the research stage and not yet scaled-up and used in production for major consumer-facing AI systems. It is also generally recognised that use of PETs can still be associated with privacy breaches and thus should not be regarded as a “silver bullet” solution.

The use of synthetic data has attracted significant interest as a PET approach. Synthetic data are generated via computer simulations, machine-learning algorithms, and statistical or rules-based methods, while preserving the statistical properties of the original dataset.1 Synthetic data have been used to train AI when data are scarce or contain confidential or personally identifiable information. These can include datasets on minority languages; training computer vision models to recognise objects that are rarely found in training datasets; or data on different types of possible accidents in autonomous driving systems (OECD, 2023[25]). However, challenges remain. For example, “[r]e-identification is still possible if records in the source data appear in the synthetic data” (OPC, 2022[38]). Furthermore, similar to anonymisation and pseudonymisation, synthetic data can also be susceptible to re-identification attacks (Stadler, Oprisanu and Troncoso, 2020[39]).

Generative AI could lead to more representative datasets but also raises concerns about manipulation

Copy link to Generative AI could lead to more representative datasets but also raises concerns about manipulationSome types of AI, like generative AI, can also produce new data, creative works and inferences or predictions about individuals or a topic that could serve as AI input data (i.e. “AI output data” for training) (Staab et al., 2023[40]). Such AI-generated data may not preserve the statistical properties of the original data and therefore should not be conflated with synthetic data. Nevertheless, the ability of AI to generate new data and content has been highlighted as an opportunity for generating larger and eventually more representative datasets that could be used for training. However, emerging research finds that using AI-generated content can degrade AI models over time. This leads to “model collapse” where the use of model-generated content in training causes “irreversible defects” in the model’s results, causing models to “forget” (Shumailov et al., 2023[41]). This is particularly worrisome if training data are collected by “scraping” the Internet without the ability to verify whether a given data sample was AI-generated.

AI-generated content can also be manipulated for various harms, such as for mis- and dis-information campaigns and influencing public opinion (OECD, 2023[25]). Current research suggests there is no reliable way to detect AI-generated content, even by using watermarking schemes or neural network-based detectors. This could allow for AI-generated content to be spread widely without detection, enabling sophisticated spamming methods, mis- and dis-information like manipulative fake news, inaccurate document summaries and plagiarism (Sadasivan et al., 2023[42]). Bad actors could engage in large-scale and low-cost “data poisoning”. In these cases, training datasets are “poisoned” by inaccurate or false data, changing a model’s behaviour or reducing its accuracy. For example, datasets could be poisoned to deliver false results in all or specific situations.

Regulation around generative AI is emerging in response to such tools becoming widely available at the end of 2022. However, the lag in policy, implementation, and enforcement of such responses around the world, may result in damage to the quality of public discourse online and the quality of information itself on the Internet. This damage may be difficult, if not impossible, to rectify in the years ahead (OECD, 2023[43]).

Open-source resources can make progress more broadly accessible but introduce other challenges

Copy link to Open-source resources can make progress more broadly accessible but introduce other challengesMany resources and tools for AI development are available as open-source resources. This facilitates their widespread adoption and allows for crowdsourcing answers to questions. Tools include TensorFlow (Google) and PyTorch (Meta) (open-source libraries for the programming language Python). Such tools can be used to train neural networks in computer vision and object detection applications. Some companies and researchers also publicly share curated training datasets and tools to promote AI diffusion (OECD, 2019[3]). Open-source developers have adapted and built on notable AI models with impressive speed in recent years (Benaich et al., 2022[44]).

The open-source AI community uses platforms like GitHub and Hugging Face to access open-source datasets, code and AI model repositories, and to exchange information on AI developments. Between 2012 and 2022, the number of open-source AI projects worldwide (measured by AI-related GitHub “repositories” or “projects”), grew by over 100 times, showcasing the increasing popularity of open-source software platforms (OECD.AI, 2023[45]). Training AI has become more accessible through low or no-code paradigms, where user-friendly interfaces substantially lower the barrier to entry for training and using AI (Marr, 2022[46]).

Although several AI firms operate proprietary AI models and systems and commercialise their access, some – such as Meta’s language model LLaMA 2 (Meta, 2023[47]) – make them available as open-source. The emergence of several open-source AI models contributes to more rapid innovation and development. This could mitigate “winner-take-all dynamics” that lead a few firms to seize significant market share (Dickson, 2023[48]). Yet open-sourcing entails other potentially significant risks, including non-existent or weak safeguards against use by bad actors.

Computer vision capabilities continue to develop, but applications like facial recognition raise concerns

Copy link to Computer vision capabilities continue to develop, but applications like facial recognition raise concernsSince its emergence in the 1960s, computer vision has been key to developing AI that can perceive and react to the world (Russell and Norvig, 2016[11]). Advances in face detection and recognition or image classification underpin significant technological progress in areas like image captioning and image translation (the process of recognising characters in an image to extract text contained in the image). Some computer vision technologies are mature, like face detection and recognition, or image classification.

Facial recognition, a key application enabled by computer vision, has received significant attention in public debate and in policy circles as countries move to create laws to govern AI. In many jurisdictions, facial recognition has been central to discussions on “high-risk” applications of AI, including the question of its use by law enforcement. Risks of algorithmic bias and data privacy concerns have resulted in various calls and actions to limit certain uses of facial recognition technology, such as in public spheres (OECD, 2021[49]). Previous analysis of commercial AI-enabled facial-recognition technologies revealed their greater accuracy when identifying light-skinned and male faces compared to darker-skinned and female faces (Buolamwini and Gebru, 2018[50]; Anderson, 2023[51]). In many cases, this bias is caused by using large training datasets that lack representative population samples from diverse groups. This highlights the important role that synthetic data can play in generating more representative datasets for training. Facial recognition technology has also been involved in mistaken identifications and wrongful arrests, predominantly in communities of colour (Benedict, 2022[52]). For example, a facial recognition system in China mistook a face on a bus for someone illegally crossing the street (“jaywalking”) (Shen, 2018[53]). These examples point to the potential for this powerful technology to be misused to harm specific population groups. Jurisdictions are considering stronger regulation, including prohibiting public authorities to use AI for biometric recognition.

Experts have identified key considerations for policy makers in ensuring trustworthy computer vision technology. First, policy makers should consider the data used for training the model, which have recently led to privacy, bias or copyright issues. Second, AI models themselves often require large computational architectures that consume significant amounts of energy and are complex. This raises issues like explainability because they are too complex for people to understand. Some researchers are attempting to improve explainability through methods such as “mechanistic interpretability” by “reverse-engineering” model elements to be understandable to humans (Conmy et al., 2023[54]). Third, policy makers should consider the impact of deploying AI in the real world, particularly around societal and economic impacts like fairness (OECD, 2023[25]).

Robots are getting better and smarter thanks to AI

Copy link to Robots are getting better and smarter thanks to AIRobots can be defined as “physical agents that perform tasks by manipulating the physical world” where mechanical components such as arms, joints, wheels and sensors, such as cameras and lasers, allow perception of an environment (Russell and Norvig, 2016[11]). AI can be “embodied” into robots to perform a wide range of tasks, including manufacturing and assembly, transportation and warehousing. It can also be used for various applications where human interaction might be dangerous or physically impossible, for example in emergency rescue contexts. Robotics can also be combined with image, audio and video generation models to produce advanced systems with multimodal capabilities combining these functions (Lorenz, Perset and Berryhill, 2023[10]).

Pending more advanced technology, the market for robotics may grow rapidly. Further technological developments are still needed for robotics to reach wide commercial markets. Some estimated the market for autonomous mobile robots at USD 2.7 billion in 2020. Projections put the market as high as USD 12.4 billion by 2030 (Allied Market Research, 2022[55]).

Researchers are also advancing with “neuro-morphic” robots, machines controlled by human brain waves rather than a voice command, for example. This could include brain chip implants and wearable robotic “exoskeletons” that use machine learning and sensors to gather and process data in real time (Lim, 2019[56]). AI-enabled digital twins, copies of systems or networks for modelling purposes, have also been explored in remote surgery applications alongside robots and virtual reality. Such environments need low network latency (i.e. fast networks) and high levels of security and reliability (Laaki, Miche and Tammi, 2019[57]). These developments could significantly advance the strength and reliability of prosthetic limbs, bring precision to remote surgery applications and even to robot-assisted surgery in space and remote areas, and other cutting-edge health care applications (Bryant, 2019[58]; Newton, 2023[59]).

Will scaling-up current AI models continue to drive advancements in AI capabilities?

Copy link to Will scaling-up current AI models continue to drive advancements in AI capabilities?In deep learning, research around “scaling laws” offers insights into predictable patterns for “scaling up” AI (i.e. advancing AI by training models with more parameters, compute and data, often bringing significant training costs). These approximate laws describe the relationship between an AI model’s performance and the scale of key inputs. For example, some researchers have observed an increase in the performance of language models with increases in compute, dataset size and parameter numbers (Kaplan et al., 2020[60]). Typically, larger language models perform better, pointing to a relationship between performance and scale.

However, models may not be able to scale in perpetuity if AI developers become limited by access to compute and data. As AI models scale with an increasing number of parameters, trade-offs may emerge, such as the cost of compute and increasing memory requirements. Some also argue that large language models based on probabilistic inference cannot reason and thus might never achieve completely accurate outputs (LeCun, 2022[61]). Consequently, some hypothesise that current model architectures may never allow for complete accuracy and generalisation.

Some experts also question whether AI chat-based search models – such as ChatGPT – will benefit from scaling laws, given the significant cost required to retrain their underlying models to keep them up to date so users can search for the most recent information (Marcus, 2023[62]). In addition, some research shows the scaling of models may outpace data availability in the next few years (Villalobos et al., 2022[63]).

Experts offer varying predictions for the future trajectories and implications of AI

Copy link to Experts offer varying predictions for the future trajectories and implications of AIAI research and policy discussions often cover existing AI challenges. Yet the long-term implications of rapidly advancing AI systems remain largely uncertain and fiercely debated. Experts raise a range of potential future risks from AI, some of which are already manifesting in various ways. At the same time, experts and others expect AI to deliver significant or even revolutionary benefits. Future-focused activities are critical to better understand AI’s possible long-term impacts and to begin shaping them in the present to seize benefits while mitigating risks (OECD, forthcoming[64]).

Strategic foresight and other future-oriented activities can support policy makers to anticipate possible futures and begin to actively shape them in the present. Strategic foresight is a structured and systematic approach to using ideas about the future to anticipate and prepare for change. It involves exploring different plausible futures, and associated opportunities and risks. It reveals implicit assumptions, challenges dominant perspectives and considers possible outcomes that might otherwise be ignored. Strategic foresight uses a range of methodologies, such as horizon scanning to identify emerging changes, analysing “megatrends” to reveal and discuss directions and scenario exploration to help engage with alternative futures (OECD, forthcoming[64]).

In November 2023, recognising that future AI developments could present both enormous global opportunities and significant risks, 28 governments and the European Union signed the Bletchley Declaration. In so doing, they committed to co-operation on AI, ensuring it is designed, developed, deployed and used in a manner that is safe, human-centric, trustworthy and responsible (AI Safety Summit, 2023[65]). The Declaration also committed signatories to convene a series of future AI Safety Summits. This gave further impetus to ensuring that potential future AI benefits and risks remain a topic of international importance and dialogue.

AI is expected to yield significant future benefits

Copy link to AI is expected to yield significant future benefitsWhile many headlines focus on AI risk, tremendous benefits are also expected to accrue. Through the G7 Hiroshima Process on Generative AI, G7 members unanimously saw productivity gains, promoting innovation and entrepreneurship, and unlocking solutions to global challenges as some of the greatest opportunities of AI technologies worldwide, including for emerging and developing economies. G7 members also emphasised the potential role of generative AI to help address pressing societal challenges. These include improving health care, helping solve the climate crisis and supporting progress towards achieving the United Nations Sustainable Development Goals (SDGs) (OECD, 2023[66]). The benefits AI may generate include:

Enhancing productivity and economic growth. Projections vary for how much AI may contribute to gross domestic product (GDP) growth. Some market research estimates the global AI market, including hardware, software and services, will have a compound annual growth rate of 18.6% between 2022 and 2026, resulting in a USD 900 billion market for AI by 2026 (IDC, 2022[67]). Others estimate the AI market could grow to more than USD 1.5 trillion by 2030 (Thormundsson, 2022[68]). For generative AI specifically, estimates include raising global GDP by 7% over a ten-year period (Goldman Sachs, 2023[69]).

Accelerating scientific progress. AI could spur scientific breakthroughs and speed up the rate of scientific progress (OECD, 2023[70]). AI can increase the productivity of scientists and overcome previously intractable resource bottlenecks, saving time and increasing resource efficiency (Ghosh, 2023[71]). Impacts in this area can already be seen, with AI driving progress in areas like nuclear fusion and generating new life-saving antibodies to disease (Stanford, 2023[72]; Yang, 2023[73]).

Strengthening education. AI may be able to provide personalised tutoring and other individualised learning opportunities that are currently only available to those who can afford them, increasing access to quality education for all (Molenaar, 2021[74]). On the teaching side, AI can potentially help teachers create materials and lesson plans tailored to students’ needs (Fariani, Junus and Santoso, 2023[75]).

Improving health care. Advances in AI technologies could transform many aspects of health care service and delivery. These include interventions at the individual level to provide people with information about their personal health, as well as those that improve diagnoses and alleviate workload for health care providers (Anderson and Rainie, 2018[76]; Davenport and Kalakota, 2019[77]).

Potential benefits of AI come with risks and uncertain future trajectories

Copy link to Potential benefits of AI come with risks and uncertain future trajectoriesExperts, civil society and governments have all identified many potential risks from AI. The Bletchley Declaration, for example, notes the potential for unforeseen risks, such as those stemming from dis-information and manipulated content. It also points to potential safety risks from highly capable narrow and general-purpose AI models. These could result in substantial or even “catastrophic harm” stemming from the most significant capabilities of leading-edge models.

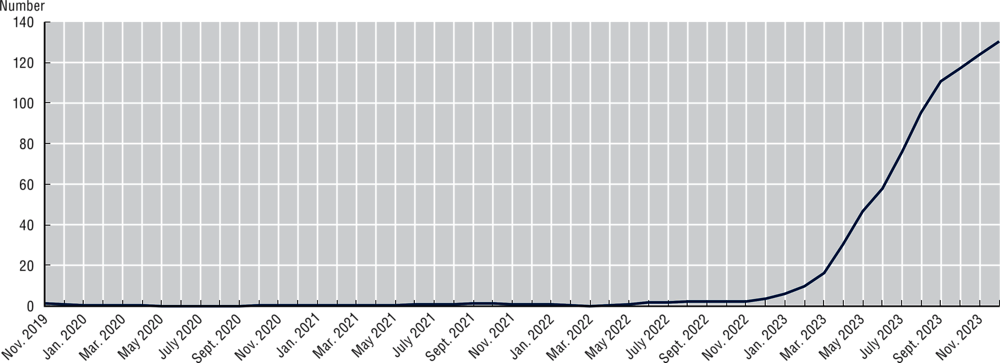

Although attention has been placed on preventing negative outcomes from advanced AI systems in the medium- to long-term, some risks are already apparent. Early efforts to track AI incidents found that generative AI-related incidents and hazards reported in the press have increased steeply since 2022 (Figure 2.3) (OECD, 2023[66]). G7 members see risks of mis- and dis-information, intellectual property rights infringement, and privacy breaches as major threats stemming from generative AI in the near term (OECD, 2023[66]).

Figure 2.3. Generative AI-related incidents and hazards reported by reputable news outlets have increased steeply since 2022

Copy link to Figure 2.3. Generative AI-related incidents and hazards reported by reputable news outlets have increased steeply since 2022Number of generative AI-related incidents and hazards, three-month moving average 2019-23

Notes: This chart shows the three-month moving average of real count of incidents and hazards reported. Results might differ from previous analysis due to modifications in the methodology of clustering articles into incidents.

Source: OECD.AI (2024[78]), AI Incidents Monitor (AIM), using data from Event Registry. Please see: www.oecd.ai/incidents for more information.

AI could amplify mis- and dis-information on a considerable scale. Generative AI can produce novel images or video from text prompts or produce audio in various tones and pitches with relatively limited input (such as a short recording of a human voice). Already today, generative AI outputs can be challenging to distinguish from human creation (Kreps, Mccain and Brundage, 2022[79]), raising policy implications about the ease of using AI to produce mis- and dis-information – fake news, deep fakes and other convincing manipulated content (Sessa, 2022[80]). Challenges may be particularly acute on issues related to science, such as vaccine effectiveness and climate change, and in polarised political contexts. Mitigation measures are emerging, such as watermarking and AI-enabled deep fake detection, but current approaches have limitations and may be insufficient to address future challenges (Lorenz, Perset and Berryhill, 2023[10]). Over time, some experts argue that increasingly advanced AI systems could fuel mass persuasion and manipulation of humans (Anderson and Rainie, 2018[76]). This, in turn, could cause material harm at the individual and societal levels, eroding societal trust and the fact-based exchange of information that underpins science, evidence-based decision making and democracy (OECD, 2022[81]).

AI hallucinations, information pollution and data poisoning harm data quality and erode public trust. AI outputs may decrease the quality of data online, as they exhibit hallucinations (making up facts in a credible way, often when a correct answer is not found in the training data). This can contribute to information pollution, allowing AI-produced data to harm the online “commons” and produce a vicious cycle whereby AI is trained on lower quality data produced by AI (Lorenz, Perset and Berryhill, 2023[10]). Bad actors could also use AI to sabotage or ruin robust data (i.e. data poisoning) (OECD, 2023[43]).

AI could significantly disrupt labour markets. Advanced AI could help people find jobs but also displace highly skilled professionals, or at the very least, change the nature of some jobs. Advanced AI can be designed to assist humans in the labour market, including with “collaborative robots” or “cobots”. However, some research and experts have raised concerns that increasingly capable AI, such as AI that can act as autonomous agents, could in some cases replace high-skilled and high-wage tasks (e.g. programmers, lawyers or doctors), leading to economic and social disruption (Russell, 2021[82]; Clarke and Whittlestone, 2022[83]; Metz, 2023[84]). Even if AI does not result in net job losses the task composition of jobs is likely to evolve significantly, changing skills needs (OECD, 2023[85]; 2023[86]).

Significant risks could emerge by embedding AI in critical infrastructure. AI is increasingly embedded in critical infrastructure because it can improve timeliness and efficiency, safety and/or reliability, or reduce costs (Laplante et al., 2020[87]). However, some argue this could enable bad actors using AI to cause physical or virtual harm (Zwetsloot and Dafoe, 2019[88]; OECD, 2022[89]). AI deployed in critical infrastructure, like in chemical or nuclear plants, could pose serious risks to safety and security if AI models and systems prove unreliable or unsafe.

AI models and systems can exacerbate bias and inequality. AI can echo, automate and perpetuate social prejudices, stereotypes and discrimination by replicating biases, including those contained in training data, in their outputs. This could further marginalise or exclude specific groups (Bender et al., 2021[90]; NIST, 2022[91]). For example, generative AI tools have been shown to produce sexualised digital avatars or images of women, while portraying men as more professional and career oriented (Heikkilä, 2022[92]). Biases involving specific religions have also been found (Abid, Farooqi and Zou, 2021[93]). As AI becomes more complex, it could also exacerbate divides between advanced and emerging economies, creating inequalities in access to opportunities and resources. “Automation bias” – the propensity for people to trust AI outputs because they appear rational and neutral – can contribute to this risk when people accept AI results with little or no scrutiny (Alon-Barkat and Busuioc, 2022[94]; Horowitz, 2023[95]). At the same time, AI tools can help human operators interpret and question complex AI decisions, discouraging overreliance. More generally, inequal access to AI resources risks creating and deepening inequality both within and between countries (e.g. between developed and emerging economies).

Generative AI raises data protection and privacy concerns. The vast amounts of data used to train AI, especially generative AI, raise data protection and privacy concerns. The processing of personal data for training purposes or its use for automated decision making may conflict with data protection regulations. For example, where data are used for automated decision-making, the European Union General Data Protection Regulation requires the operator to provide “meaningful information about the logic involved”. This may pose challenges with AI trained by processing information in “black box” neural networks that humans cannot understand or replicate.

Intellectual property rights represent another area of challenges and unknowns. Generative AI in particular raises issues around intellectual property rights. This could include unlicensed content in training data; potential copyright, patent and trademark infringement of AI creations; and ownership questions around AI-generated works. Generative AI is trained on massive amounts of data from the Internet that include copyrighted data, often without authorisation of the rights-owners. Whether this is permissible is being discussed across jurisdictions, with numerous court cases under way (Zirpoli, 2023[96]). Legal decisions will set precedents and affect the ability of the generative AI industry to train models. Because legal systems around the world differ in their treatment of intellectual property rights, the treatment of AI-generated works also varies internationally (Murray, 2023[97]). Although most jurisdictions agree that works generated by AI are not copyrightable (Craig, 2021[98]), views could shift as AI becomes ubiquitous.

Many solutions are being proposed to help yield AI’s benefits and mitigate its challenges

Copy link to Many solutions are being proposed to help yield AI’s benefits and mitigate its challengesActions are needed to answer unknowns and shape potential AI futures. AI researchers, experts and philosophers have proposed many potential solutions to enable future benefits, while mitigating risks. The OECD, through its Expert Group on AI Futures, has identified nearly 70 potential solutions being explored and analysed.2 Surveyed expert group members agreed on the most important potential solutions listed below:

Liability rules for AI-caused harms;

Requirements that AI discloses that it is an AI when interacting with humans;

Research and development (R&D) on approaches to evaluating the capabilities and limitations of AI systems and preventing accidents, misuse or other harmful consequences;

AI red-lines, such as regulation prohibiting certain AI use cases or outputs; and

Controlled training and deployment of high-risk AI models and systems.

Other solutions include moratoriums or bans on advanced AI, and international statements or declarations on managing potential risks from AGI. These proposals have gathered less widespread agreement among expert group members.

Demystifying debates on maintaining human control of AI systems and on the alignment of AI systems and human values

Copy link to Demystifying debates on maintaining human control of AI systems and on the alignment of AI systems and human valuesThe risk that humans lose control of AI systems received significant attention in 2023 both in news media and at the AI Safety Summit hosted by the United Kingdom. However, the topic is controversial. In a 2023 survey of the OECD Expert Group on AI Futures, respondents rated loss of control as both one of the most important and least important risks of AI.

As AI systems become more capable and are assigned more responsibility over important tasks, some experts believe that a lack or misalignment of shared values and goals between humans and machines could lead AI systems to act against the interests of humans. This concern has spawned “AI alignment” work to ensure the behaviour of AI systems aligns consistently with human preferences, values and intent (Dietterich and Horvitz, 2015[99]; Russell, 2019[100]; Bekenova et al., 2022[101]; Dung, 2023[102]).

It can be difficult to discern whether an AI system is pursuing objectives that align with its creators’ intent and goals. For example, such issues have begun to emerge through “reward hacking”. In these cases, a model finds unforeseen and potentially harmful ways of achieving its objective while exploiting reward signals (Cohen, Hutter and Osborne, 2022[103]; Skalse et al., 2022[104]). In trying to increase user engagement, for example, AI systems managing content on social media could promote articles that spread extreme views and keep users engaged. However, humans may benefit most from balanced and non-polarised content, and real human preferences may reflect this belief (Grallet and Pons, 2023[105]).

A number of experts believe that sufficiently robust methods are not available today to ensure that AI systems align with human values (OECD, 2022[89]). They consider this perceived gap to be one of the top “unsolved problems” in AI governance (Hendrycks et al., 2022[106]). In the 2023 survey of members of the OECD Expert Group on AI Futures, respondents ranked the absence of robust methods to ensure alignment between AI systems’ outputs and human values as one of the most important potential future risks. In connecting the concepts of alignment and control, some AI experts believe the negative consequences already witnessed in relatively basic AI systems could be taken to extremes. They argue this could happen if highly capable AI systems were developed in ways that unfold so quickly that humans cannot maintain control, for example if machines gained the ability to improve themselves independently (Cotton-Barratt and Ord, 2014[107]).

As another underlying issue, AI systems can be highly efficient at pursuing human-defined objectives but in ways their developers did not always anticipate. To achieve these aims, AI systems could develop subgoals (i.e. means to an end) that differ dramatically from the values and intent that underpin human-defined objectives. Some argue this could even lead to machines resisting being switched off since this could be considered counter-productive to the achievement of objectives (Russell, 2019[100]). However, as mentioned in Box 2.2, achieving AGI – the level of advancement hypothesised as needed for such scenarios – is hypothetical and highly controversial. Some experts argue that achieving AGI itself might be an exceedingly complex and challenging task. This would make the notion of misalignment a premature concern lacking agreed-upon definitions and clear boundaries (LeCun, 2022[109]). Research on such topics can be challenging, including a limited number of peer-reviewed articles, lack of appropriate modelling techniques and lack of specific definitions and standardised terminology (McLean et al., 2021[110]).

Policy considerations for a trustworthy AI future

Copy link to Policy considerations for a trustworthy AI futureThe rapid progress in AI capabilities has not yet been matched by assurances that AI is trustworthy and safe. AI is advancing rapidly and could produce unsafe outputs, especially as AI-enabled products are transferred from research contexts to consumer use before the full consequences and risks of their deployment are understood. Advanced AI techniques such as deep learning pose specific safety and assurance challenges: technologists and policy makers alike do not fully understand their functioning and therefore cannot use traditional guardrails to ensure reliability. Lack of explainability inhibits understanding of how AI generates outputs in the form of predictions, content, recommendations or decisions. This, in turn, creates issues related to transparency, robustness and accountability. “Narrow” AI focuses on optimising outcomes for well-defined contexts. However, the increasingly generality of AI applications makes it difficult to train advanced models and systems to produce an appropriate response for every relevant scenario.

There is little understanding or agreement on how significant future AI risks may be. Experts should build awareness and understanding of AI risks, particularly among policy makers, and identify key sources of these risks. To help mitigate some AI risks, improved methods to interpret and assure AI are needed. In addition, policy makers need to ensure that developers can deploy models and systems that operate reliably as intended, even in novel contexts.

Where are countries in the race to implement trustworthy AI?

Copy link to Where are countries in the race to implement trustworthy AI?AI research and development priorities feature prominently on national policy agendas, with investments poised to continue in the years ahead

Copy link to AI research and development priorities feature prominently on national policy agendas, with investments poised to continue in the years aheadAI R&D investments feature prominently in national AI strategies. Governments and firms are redoubling efforts to leverage AI for productivity gains and economic growth and such trends are poised to continue. Entirely new business models, products and industries emerge from AI-enabled R&D breakthroughs. This underscores the importance of basic research, especially as policies emerge to consolidate R&D capabilities to catch up with the leading players: China, the United States and the European Union (OECD.AI, 2023[111]).

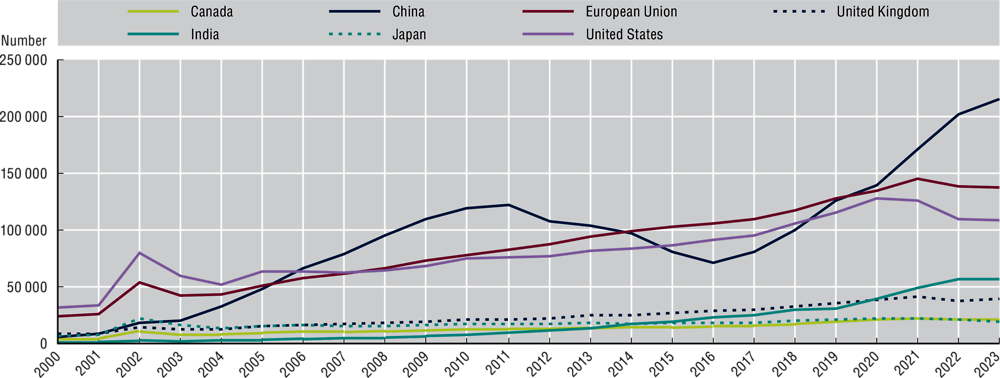

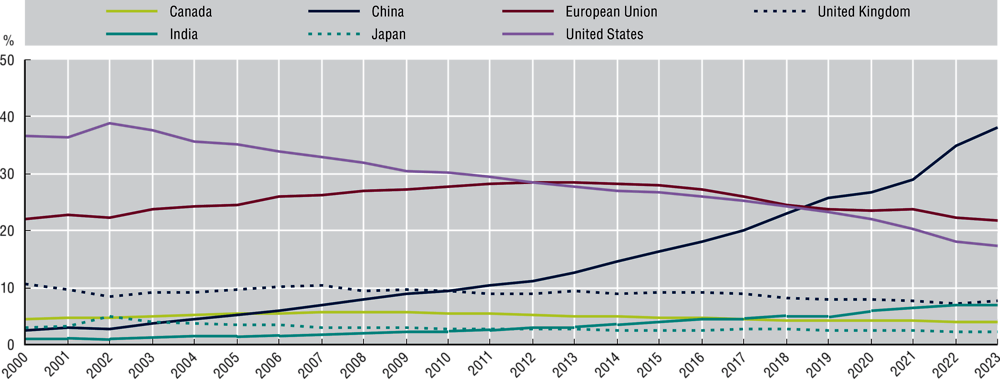

Progress in AI R&D can be measured by examining to what extent countries publish AI research. The United States and European Union have had steady growth in the number of AI research publications over past decades. These include journal articles, books, conference proceedings and publications in academic repositories like arXiv. Meanwhile, AI publications have increased dramatically in China, with India also making strides in recent years (Figure 2.4). Since mid-2019, China has published more AI research than either the United States or the European Union. India has also made significant advances, more than doubling its number of AI research publications since 2015 (OECD.AI, 2023[111]).

Figure 2.4. China, the European Union and the United States lead in the number of AI research publications, with India recently making strides

Copy link to Figure 2.4. China, the European Union and the United States lead in the number of AI research publications, with India recently making stridesNumber of AI research publications, 2000-23

Notes: This figure shows the number of AI research publications for a sample of top countries for 2000-23. OpenAlex publications are scholarly documents such as journal articles, books, conference proceedings and dissertations.

Source: OECD.AI (2023[111]) using data from OpenAlex available at: www.oecd.ai/en/data?selectedArea=ai-research. StatLink contains more data.

The number of AI publications alone does not provide a full picture of publication quality and impact. The number of citations can be a proxy indicator for “impact”, with increased citations possibly indicating a higher impact. Since 2022, the share of total high-impact AI research publications in the United States has declined, while China’s share has steadily risen. Notably, China had overtaken the United States and European Union by 2019 (Figure 2.5). In 2023, with respect to institutions most active in publishing AI research, seven of the ten leading AI research institutions were based in China, with one institution each in France, the United States and Germany3 (OECD.AI, 2023[111]).

Figure 2.5. China’s share of “high-impact” AI publications has steadily risen since 2000, notably overtaking the United States and European Union in 2019

Copy link to Figure 2.5. China’s share of “high-impact” AI publications has steadily risen since 2000, notably overtaking the United States and European Union in 2019Percentage of high-impact AI research publications by jurisdiction, 2000-23

Notes: This figure shows the percentage of “high-impact” AI research publications for a sample of top countries for 2000-23. OpenAlex publications are scholarly documents such as journal articles, books, conference proceedings and dissertations. A publication’s impact is calculated by dividing the number of citations by the average citations in the subdiscipline, discounted by the number of years since the publication was published, so that older publications that have been around for longer are discounted accordingly. Publications are classified as “high impact” if its score falls in the highest quartile.

Source: OECD.AI (2023[111]) using data from OpenAlex available at: www.oecd.ai/en/data?selectedArea=ai-research.

Countries dedicate significant funding for AI R&D through different instruments. The main trends include launching AI R&D-focused policies, plans and programmes, establishing national AI research institutes and centres, and consolidating AI research networks and collaborative platforms. Highlights from France, Korea, Türkiye, the United States, the European Union and China appear below.

France established its 2018 National AI Research Programme out of its National AI Strategy. The programme represented 45% of the National AI Strategy budget (about EUR 700 million) (INRIA, 2023[114]).

In 2021, Korea announced the AI promotion plan on the theme of “AI into all regions for our people”. It focuses on creating an “AI cluster village” in Gwang-ju city to serve as a base for AI innovation as well as projects across the country considering each region’s key strengths and industries (Ministry of Science and ICT, 2021[115]).

Türkiye has been funding AI R&D projects and programmes by launching calls for proposals or awarding grants, including multidisciplinary research that encourages collaboration across relevant fields. The Scientific and Technological Research Council of Türkiye and the National AI Institute have funded more than 2 000 R&D and innovation projects. Total funding for academic R&D projects amounted to more than USD 50 million. Meanwhile, industrial R&D projects received funding of more than USD 150 million (TÜBITAK, 2023[116]).

The United States introduced initiatives to retain its leadership position in AI R&D through the United States National AI Initiative Act of 2020. In 2023, the National AI Initiative Office helped manage billions of dollars for non-defence and defence-related AI R&D (NSTC, 2023[112]), following the 2016 and 2019 National AI R&D Strategic Plan. The 2023 budget includes funding for the National Science Foundation (NSF) that has long been supporting AI research, including through dedicated AI research grants. In 2018, the Defense Advanced Research Projects Agency announced an AI R&D investment of more than USD 2 billion. The NSF and the White House Office of Science and Technology Policy (OSTP) recently proposed a National AI Research Resource (NAIRR) to provide researchers access to critical computational, data, software and training resources to support AI R&D (NAIRR Task Force, 2023[113]).

The European Union allocated EUR 1 billion per year for AI, including for R&D, within the broader EUR 100 billion budget for the Horizon Europe and Digital Europe programmes (European Commission, 2023[117]). This follows the Co-ordinated Plan on Artificial Intelligence, which was released in 2018. In this plan, member states emphasise co-ordination in AI R&D to maximise impact, including “shared agendas for industry-academia collaborative AI R&D and innovation” (OECD.AI, 2024[118]).

In 2018, China invested an estimated USD 1.7-5.7 billion in AI R&D spending. This estimate includes basic research through the National Natural Science Foundation of China and applied research through the National Key R&D Programmes. While precise estimates are challenging to validate, some researchers believe that China’s AI R&D budget is closer to the upper bound estimate. Moreover, they believe it likely to have increased in recent years (Acharya and Arnold, 2019[119]). This investment is aligned with the country’s ambitions, set in the 2017 New Generation AI Plan, to become the world’s primary innovation centre by 2030.

Countries also establish national AI research institutes and research centres:

The Australian AI Action Plan allocated USD 124 million to establish a National AI Centre to further develop AI research and commercialisation (DISR, 2021[120]).

Canada’s Pan-Canadian AI Strategy emphasises research and talent, with the Canadian Institute for Advanced Research leading the strategy for the Government of Canada and three AI Institutes.

Japan’s AI R&D Network provides opportunities to exchange information among AI researchers and promote co-operation in R&D in Japan and abroad.

The Research Institutes of Sweden combine AI research with interdisciplinary research, a wide range of test beds, innovation hubs and educational programmes.

Established in 2020, the National Artificial Intelligence Institute of Türkiye serves as a driving force in disseminating AI. Acting as a bridge between academic research and industry needs, the Institute serves as a key stakeholder in the implementation of Türkiye’s National AI Strategy (2021-2025) (Scientific and Technological Research Council of Türkiye, 2023[121]).

The United States National Science Foundation-led National AI Research Institutes Program is the nation’s largest AI research ecosystem. It is supported by a partnership of federal agencies and industry leaders (National Science Foundation, 2023[122]).

Brazil’s Ministry of Science, Technology and Innovation and partners are investing BRL 1 million annually for ten years to create up to eight AI Applied Research Centres, with partner firms matching investments.

India’s 2021 Kotak-IISC AI-Machine Learning Centre offers education on AI and machine learning research (OECD.AI, 2021[123]). Other initiatives focus on reinforcing collaborative networks of experts and researchers.

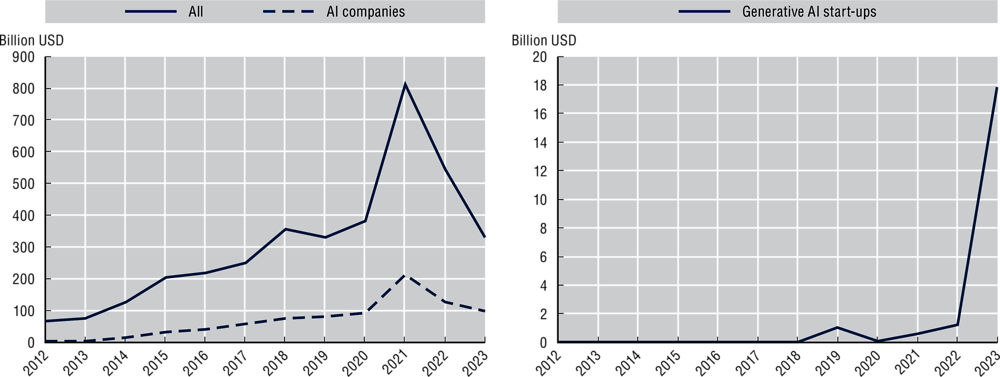

While global venture capital investments in AI and overall have declined since 2021, investments in generative AI start-ups have boomed

Copy link to While global venture capital investments in AI and overall have declined since 2021, investments in generative AI start-ups have boomedThe annual value of global venture capital (VC) investments in AI start-ups4 more than tripled between 2015 and 2023 (from more than USD 31 billion to nearly USD 98 billion) (Figure 2.6) (OECD.AI, 2024[124]). Notably, between 2020 and 2021, such investments jumped by over 2.3 times (from about USD 92 billion to USD 213 billion). Most of the funds flowed to AI firms in the United States and China. The United States has the advantage of a strong market for private sector R&D and VC funding, accounting for more than two-thirds of global private sector investment in software and computer services in 2022 (European Commission, 2023[125]).

Following trends in the global VC market, overall VC investments in AI firms experienced a significant drop of over 50% between 2021 and 2023 (from USD 213 billion to USD 98 billion (OECD.AI, 2024[124]). This reflects broader VC trends, with investors exercising caution after the technology boom of the COVID-19 pandemic, rising interest rates and inflationary pressures. Generative AI start-ups are one exception to this cooling trend in VC investments, jumping from USD 1.3 billion in 2022 to USD 17.8 billion in 2023, a large increase from 1% of total AI VC investments to 18.2%. The rise was spurred largely by Microsoft’s USD 10 billion investment in OpenAI (Figure 2.6) (OECD, 2023[66]).

VC investments in AI have focused on different areas over the past decade. In 2012 and 2013, the largest investments were made in media and marketing, and in IT infrastructure and hosting. The following years saw a VC boom in AI for mobility and autonomous vehicles, but its share of total VC investments declined significantly after 2020. Unsurprisingly, the pandemic years saw an increase in VC investments in AI for health care, drugs and biotechnology (OECD.AI, 2023[126]).

Figure 2.6. VC investments in generative AI start-ups have boomed since 2022, while VC investments overall and in AI start-ups reached a peak in 2021

Copy link to Figure 2.6. VC investments in generative AI start-ups have boomed since 2022, while VC investments overall and in AI start-ups reached a peak in 2021VC investments in AI and generative AI start-ups, 2012-23

Notes: AI start-ups are identified based on Preqin’s cross-industry and vertical categorisation, as well as on OECD’s automated analysis of the keywords contained in the description of the company’s activities. AI keywords used include: generic AI keywords, such as “artificial intelligence” and “machine learning”; keywords pertaining to AI techniques, such as “neural network”, “deep learning”, “reinforcement learning”; and keywords referring to fields of AI applications, such as “computer vision”, “predictive analytics”, “natural language processing”, “autonomous vehicles”. Please see: https://oecd.ai/preqin for more information.

Source: OECD.AI (2024[124]) using data from Preqin. Also available at: www.oecd.ai/en/data?selectedArea=investments-in-ai-and-data.

Governments are building human capacity for AI as demand for AI skills grows

Copy link to Governments are building human capacity for AI as demand for AI skills growsAI is changing the nature of work. Countries have realised that both managing a fair labour market transition and leading in AI R&D and adoption requires solid policies to build human capacity and attract top talent. The AI workforce has grown considerably, almost tripling as a share of employment from less than a decade ago (Green and Lamby, 2023[127]). However, according to LinkedIn in 2023, men are about twice as likely to work in an AI occupation or report AI skills as women. This suggests a gendered skills gap in global AI labour markets (OECD.AI, 2023[128]).

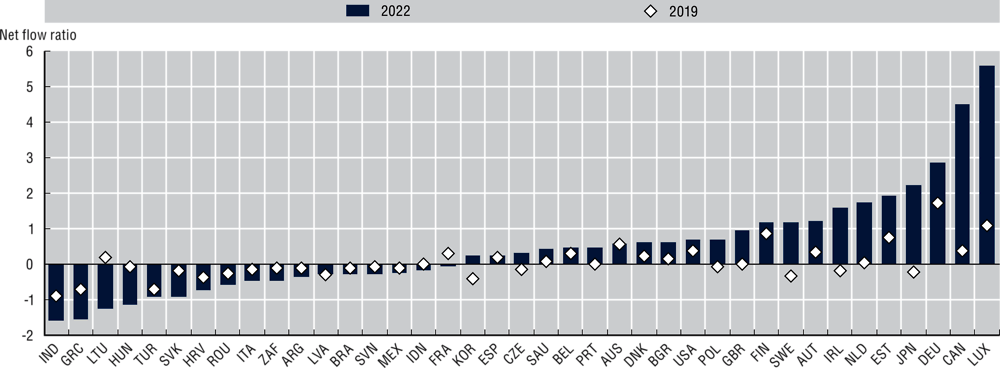

AI talent is in high demand and remains a mobile workforce across economies, with countries competing for the small pool of highly skilled AI workers. For example, economies like Luxembourg, Canada, Germany and Japan attracted more AI talent than they lost in 2022 (Figure 2.7). In contrast, countries like India, Greece and Lithuania saw a net outflow of AI talent from their borders. This might indicate an AI “brain drain”, where highly specialised workers move to another country for improved employment opportunities. India is noteworthy for its considerable AI talent growth rate in recent years. This rate is ahead of the United States, United Kingdom and Canada, as measured by the year-over-year change in LinkedIn members declaring to have AI skills (OECD.AI, 2022[129]).

With industry outpacing academia in developing advanced AI, countries increasingly see AI compute capacity as a crucial resource to be managed

Copy link to With industry outpacing academia in developing advanced AI, countries increasingly see AI compute capacity as a crucial resource to be managedThe demand for AI compute has grown dramatically as shown by valuations in the AI chip market. In 2021, some called the unfolding AI compute trends an “AI chip race”; the market value for AI chips was estimated at more than USD 10 billion with revenues expected to reach nearly USD 80 billion by 2027 (Pang, 2022[130]). Large technology companies have invested significantly to join the race.

The growing focus on AI compute is driven by a desire from AI developers to obtain the specialised hardware and software needed to train advanced AI. Industry, rather than academia, increasingly provides and uses compute capacity and specialised labour for state-of-the-art machine-learning research and for training AI models with a high number of parameters (Ahmed and Wahed, 2020[131]; Ganguli et al., 2022[132]; Sevilla et al., 2022[30]). Compute divides may emerge and worsen between the public and private sectors because, increasingly, public sector entities lack resources to train advanced AI (OECD, 2023[8]).

Figure 2.7. Advanced economies are competing for AI talent

Copy link to Figure 2.7. Advanced economies are competing for AI talentBetween-country AI skills migration in 2019 and 2022

Notes: This chart displays the net migration flows per 10 000 LinkedIn members with AI skills in 2019 and 2022 for a selection of countries with 100 000 LinkedIn members or more declaring to have AI skills both in 2019 and 2022. Migration flows are normalised according to LinkedIn country membership.

Source: OECD.AI (2022[129]) using data from LinkedIn, also available at: www.oecd.ai/en/data?selectedArea=ai-jobs-and-skills.

Based on the number of “top supercomputers”, the United States and China have led in compute capacity since 2012. According to the November 2023 Top500 list,5 35 economies have a top supercomputer. The highest concentration occurs in the United States (32%), followed by China (21%), Germany (7%), Japan (6%), France (5%) and the United Kingdom (3%). The remaining 29 economies on the list represent a combined 26% of supercomputers (Top500, 2023[133]). The United States and China have led in the number of supercomputers on the Top500 list since 2012, with China increasing its number of supercomputers significantly since 2015.