Dorothy Adams

Modernising Access to Social Protection

5. Managing the challenges of leveraging technology and data advances to improve social protection

Abstract

There are significant risks and challenges associated with deploying advanced digital technologies and data in social protection. Governments have put considerable effort into measures to mitigate the risks, including legal, regulatory and accountability frameworks to protect people’s privacy and to govern use of automated systems. Some countries are now going beyond these measures, implementing initiatives that also improve their overall interactions with citizens and modernise the way they do business, such as offering services through multiple channels, involving service users in solution design, and achieving incremental improvements through agile working methods. This chapter also discusses some of the broad range of capacities required to successfully meet the challenges of deploying digital solutions such as effective governance, a leadership culture that promotes innovation, an appropriately skilled workforce, and investments in modern technology infrastructure.

Key findings

The previous chapter in this report explores the different approaches countries are taking to leverage advances in technology and data to improve the design, delivery and coverage of social protection benefits and services. However, this does not come without considerable challenges and risks. Furthermore, while the potential benefits may be significant, they are uncertain and often only materialise in the longer-term. This chapter discusses the challenges governments face as they increasingly digitalise social protection systems, together with the measures they are adopting to manage those challenges. The literature and countries’ responses to the OECD’s questionnaire Harnessing Technology and Data to Improve Social Protection Coverage and Social Service Delivery (OECD, 2023[1]) highlight the following key issues and measures:

Technology projects can fail if the foundations that underpin and enable technological improvements are not in place. A wide range of foundations are necessary for building digital capacity, including supportive policy, legal and operational environments, the availability of a range of specialised skills, and modern technology infrastructure.

Technology improvements and innovations – particularly those aimed at better integration – can touch on and significantly alter the operational processes of a range of government agencies and other providers. This requires a high degree of cross-governmental collaboration which takes considerable organisational (and sometimes political) commitment, time and resources.

Data sit at the heart of much government innovation. As countries increasingly collect, link and share more data, they are considering how to manage the risks involved in order to make the most of the vast amount of data being generated in social systems.

Commonly, data used for social protection purposes are people’s personal information, and governments have a duty to protect people’s privacy when using their data. While legislation and rules exist to regulate the use of rapidly evolving technology and data, they are often complex and difficult to navigate, making it challenging for agencies to act safely and effectively.

Discriminatory biases can be built into automated processes and decision-making. Public confidence in governments’ use of advanced technology and data solutions takes time to build and can be quickly lost. The possibility of errors and/or biases, particularly in relation to already disadvantaged populations, and the potential implications of those errors require transparent procedures that explain how people’s information is being used, together with protections and controls for addressing any issues if they occur.

Greater use of data-driven and/or digitalised processes in social protection creates the risk of reinforcing or creating new sources of exclusion and disadvantage for some groups. Increased digitalisation can exclude those individuals who have limited access and/or ability to engage with digital services. This is a particular challenge when people with limited digital access are also key priority groups for social protection measures.

Governments are seeking to optimise the benefits of rapid developments in technology and data while mitigating the risks involved, using instruments such as legal and regulatory frameworks, for example to protect people’s privacy and to govern data management and use.

Governments are also going beyond these measures, implementing initiatives that improve their overall interactions with individuals and communities, that enhance public trust and confidence and modernise the way they do business, including offering services through multiple channels, involving service users in solution design, achieving incremental improvements through agile working methods and encouraging innovative technology and data cultures through leadership and champions.

5.1. Introduction

Significant benefits can be realised from harnessing technology and data advances to enhance national social protection systems. These range from improving the effectiveness and timeliness of social programmes (for example, the speed at which benefits can be scaled up and down) to expanding benefit provision to a larger share of eligible beneficiaries. Examples are outlined in Chapter 4 of this report and in (Verhagen, forthcoming[2]).

Many social benefits – even in the world’s wealthiest countries – do not reach all intended recipients (Chapter 1). Many individuals across OECD countries feel they cannot access benefits easily in times of need (Chapter 1, Figure 1.4), and people do not always enrol in benefit programmes for which they are eligible. They may have little or no information about a benefit or its eligibility criteria, and/or entitlement rules may be perceived as too complex or cumbersome. The application process can also make a programme less accessible: it may be bureaucratically cumbersome, requiring time, education, and other resources that potential claimants may not have, for different reasons (Chapter 2 and (OECD, 2023[3])).

Incomplete take‑up of benefits leads to suboptimal outcomes. When groups or individuals miss out on the social benefits for which they are eligible, benefits become less effective for poverty reduction, income smoothing and risk management. Importantly, poor benefits coverage may also prevent eligible individuals from accessing services that are tied to benefit receipt, such as job-search support and other active labour market policies. Ineffective social services can also contribute to poorer outcomes and inefficient use of resources.

While technology and data advances can play an important role in improving the design, delivery and coverage of social protection benefits and services, they also create complex challenges for governments, and the risks involved in adopting new digital and data technologies can be significant (Verhagen, forthcoming[2]). Governments are attempting to strike a balance between undertaking necessary transformations and mitigating the risks involved in doing this (ISSA, 2023[4]). For example, the Department for Work and Pensions (DWP) in the United Kingdom has created an Artificial Intelligence (AI) Lighthouse Programme to safely explore its use of emerging Generative AI technology; one project is looking explicitly at supporting the interaction between a Work Coach and a citizen when face‑to-face in a Job Centre. DWP sees significant opportunities in using AI but is also very aware of the potential risks of such technology and has established a framework and process to explore such technology in a safe, ethical and transparent way.

This chapter first explores the challenges governments are facing as they increasingly utilise advanced technologies and data. Key challenges emerging from the literature and case studies provided in response to the OECD Questionnaire are discussed. These include mobilising the necessary capacities and enablers, getting the data right and using it appropriately, and mitigating the risks of further entrenching bias, discrimination and exclusion through accountability mechanisms and processes. Secondly, the chapter outlines some of the measures countries are adopting to manage those challenges, discusses lessons learned and potential ways forward for governments as a result.

5.2. The challenges of leveraging technology and data advances

5.2.1. Mobilising the necessary capacities and enablers

Digitalisation cannot be an objective in its own right, as digital solutions only improve the provision of services if they fulfil their objectives well and are adopted by users. Thus, added value and user-friendliness are critical factors for digital platforms supporting service provision (OECD, 2022[5]). To ensure technology and data-driven improvements and innovations are successful, governments must first ensure a broad range of capacities and enablers are in place. Those capacities include the policy landscape, governance and leadership, operating environments, human resources, co‑operation across different levels of government, and investments in modern technology infrastructure to support advanced digitalisation projects.

Policy landscape

The policy landscape required to support increased and advanced uses of technology and data to improve public services, including social protection coverage, is multi-faceted. It includes legal, regulatory and governance frameworks; risk management models; strategies (e.g. for promoting digital inclusion or clarifying data sovereignty and ownership issues); and policy settings (e.g. to avoid or manage the misuse of data). Embracing the results from greater use of technology and data can present significant challenges to the status quo and demands redirection of government resources, improved agency collaboration, changes to service delivery models, improved individual-level data, and better monitoring of policy and service outcomes.

Advanced uses of technology and/or data often require an enabling legislative ecosystem, which can include general legislation such as privacy laws as well as changes to content-specific legislation (see Chapter 4 for a discussion of Article 22 of the EU’s GDPR). For example, in the Slovak Republic a legislative amendment was a prerequisite for creating a new proactive service for citizens: the provision of a childbirth allowance upon the birth of a child. The childbirth allowance is a state social benefit, which the state provides proactively without the participation of a beneficiary (see Box 5.1).

Ideally the policy landscape will align with a government’s overall vision for digital government. International organisations are supporting governments’ efforts to realise digital transformation with legal instruments like the OECD’s 2014 Recommendation of the Council on Digital Government Strategies. The Recommendation offers a whole‑of-government approach that addresses the cross-cutting role of technology in the design and implementation of public policies, and in the delivery of outcomes. It emphasises the crucial contribution of technology as a strategic driver to create open, innovative, participatory and trustworthy public sectors, to improve social inclusiveness and government accountability, and to bring together government and non-government actors to contribute to national development and long-term sustainable growth (OECD, 2014[6]).

Digital government strategies need to become firmly embedded in mainstream modernisation policies and service design so that relevant stakeholders outside of government are included and feel ownership for the final outcomes of major policy reforms. The OECD recommends that strategies for effective digital government reflect public expectations in terms of economic and social value, openness, innovation, personalised service delivery and dialogue with people and businesses. In the Communiqué of the Meeting of the Public Governance Committee at Ministerial Level held in Venice in November 2010, Ministers acknowledged the importance of technology as a key ally to foster innovation in governance, public management and public service delivery, and to build openness, integrity and transparency to maintain trust, acknowledging that trust in government is one of the most precious national assets (OECD, 2010[7]).

Box 5.1. Legal amendments as a prerequisite for automatic enrolment (Slovak Republic)

Several legal amendments were required for the Slovak Republic to automatically provide a childbirth allowance upon the birth of a child (a state‑provided social benefit) without any involvement from the beneficiary.

Changes and amendments to certain measures were required in several Acts, for instance to reduce the administrative burden by using public administration information systems. The government also had to amend and supplement certain Acts on the childbirth allowance and the allowance for multiple children born at the same time.

Source: (OECD, 2023[1]).

Governance and leadership

A critical enabler to support the more systemic use of technology and data (and possibly one of the most challenging enablers to effect) is the leadership required to execute the necessary change(s) to fully realise the value of technology advances. This includes leadership at the political and senior management level as well as at the functional and technical levels. Greater use of data in decision-making requires shifts in mind-sets, priorities and ways of working, where there may be resistance due to other “business-as-usual” pressures and to the hard, inconvenient questions that can emerge with deeper data analysis. By way of example, quantitative evaluations may show programmes that have strong stakeholder and/or political support to be ineffective or of low impact.

Public trust, sometimes referred to as social licence, is important when scaling digital and data-driven innovations and automated decision-making. The OECD’s Good Practice Principles for Public Service Design and Delivery in the Digital Age promotes three principles that will help to achieve accountability and transparency in the design and delivery of public services to reinforce and strengthen public trust: be open and transparent in the design and delivery of public services, ensure the trustworthy and ethical use of digital tools and data, and establish an enabling environment for a culture and practice of public service design and delivery (OECD, 2022[8]).

These principles can be hard to observe. Initiatives are not always well publicised, the roll-out of new web-based applications is not always smooth, there may be general resistance to changing a system that “works”, and the public may not be able to easily access information about whether developments are pilots or fully operational. Governments and social security institutions may also not want to openly publicise the results from pilots or trials in cases where they did not achieve the desired results.

Public trust takes time to build and can be quickly lost. Prior poor experiences with government agencies, negative media stories and general distrust in governments can exacerbate doubts about governments’ ability to manage digital and data-driven innovations (Wagner and Ferro, 2020[9]). Indeed, 81% of respondents to a cross-national survey covering 36 countries reported that a negative experience would decrease their level of trust in the government (Mailes, Carrasco and Arcuri, 2021[10]). More pointedly, in a US survey on attitudes to AI development and governance, just 27% of respondents said they have “a great deal of confidence” or “a fair amount of confidence” that the US federal government could develop AI. By contrast, 32% had “no confidence” that the US federal government could develop AI (Zhang and Dafoe, 2019[11]). Data from the OECD’s Trust Survey indicate that only about one‑third of respondents across 22 countries believe a public agency would even adopt innovative ideas to improve public service provision (OECD, 2022, p. 80[12]).

The Data Innovation Program in Canada illustrates one way in which trust was built with citizens over time. Because the project requires individuals’ consent for data sharing and use to be sought upfront, it is both time and resource intensive, but the resulting benefits are considered worthwhile (Box 5.2). It is important to note, however, that obtaining consent when using very large, national data sets is often difficult if not impossible. Some countries are working with their relevant national privacy body to develop better approaches that help build public confidence.

Box 5.2. Building trust through voluntary data sharing arrangements (British Columbia, Canada)

The Data Innovation Program consistently links, de‑identifies and provides access to administrative datasets in one secure environment and is available for use by government analysts and academic researchers to conduct population-level research. The Program aims to address a previous lack of a whole‑of-government approach to data sharing and usage, which made data-driven decision-making incomplete, time‑consuming, and resource intensive.

A key challenge the Program faces is data sharing and acquisition. In response, a critical success factor is that data sharing is voluntary. However, this means significant up-front time is required to build trust with data providers.

The challenge has been approached through the following steps:

taking the time to educate potential data providers on the Program governance model;

developing a framework that allows data providers (government agencies) to maintain control over access to the data they provide, with the opportunity to pre‑review publications developed using that data; and

starting small and using completed research projects to demonstrate that the Program is a responsible data custodian.

Source: (OECD, 2023[1]).

Operating environments

Digitalisation represents a major opportunity to enhance service effectiveness and efficiency, via interfaces for people using the services, as well as the back-office infrastructure for service providers to deliver knowledge‑based services and automate administrative processes. The extent to which the benefits of digitalisation are realised in practice depends crucially on how the digital infrastructure is implemented and successful implementation relies in large part on operating environments that are ready or mature enough for greater digitalisation.

Since digitalisation efforts can fundamentally change the way organisations work, they may involve considerable structural change and/or standardisation in the way government departments, agencies, and providers are organised and operate. For example, Belgium’s Crossroads Bank for Social Security (discussed in more detail in Chapter 4) required the back-office functions of all 3 000 organisations involved to be restructured and the organisational processes to be re‑engineered and automated. Similarly, albeit on a smaller scale, New Zealand’s efforts to provide digitised services for new parents and caregivers through SmartStart (Box 5.3) required several agencies to adapt and co‑ordinate their processes. A key feature of these re‑organisation efforts is a focus on providing more customer-centric services. Ensuring this remains the key goal requires engagement with external stakeholders, advocacy groups and service users themselves. The risk of not adapting organisational structures and processes is that technology enhancements are fragmented and projects are unsuccessful or, worse still, lead to poorer outcomes. Simply automating processes may replicate existing errors and inefficiencies.

Box 5.3. SmartStart in New Zealand

SmartStart is an online tool aimed at parents and caregivers who are planning to or about to have a baby. It gives people online access to integrated government information, services and support related to each phase of pregnancy and early childhood development up to six years of age.

Using SmartStart, an expectant parent can create a profile and add their due date to personalise the timeline with key dates that align with the important tasks they need to complete, such as choosing a lead maternity carer. Parents and caregivers can get tips on keeping themselves and their baby or child healthy and safe, as well as contact details for organisations that can offer help and support.

Users can also complete specific tasks online such as registering the birth of a new baby. As part of the same process, users can consent to sharing their baby’s registration information with Inland Revenue to apply for an Inland Revenue number for their baby and Best Start payments, and with the Ministry of Social Development to update their benefit entitlement details. They can also complete a Childcare Subsidy application and submit the form online. Users are invited to apply for a new post-natal tax credit, “Best Start”, through SmartStart. As part of this process, families give consent for Inland Revenue to use the information they provide to determine their eligibility for other Working for Families tax credits. This appears to have resulted in high take‑up of Best Start. Take‑up of other Working for Families tax credits has also increased, with the increase particularly pronounced for Asian mothers, a group estimated to have had particularly low take‑up in preceding years.

A key challenge with this integrated service is that government agencies need to think more broadly than their own ministerial deliverables, strategically, operationally and technically. To ensure a modern, more joined-up and citizen-focused public service, the focus must be on the customer and their needs, and not on the agency.

Building government digital services means more than offering new digital services. It means changing existing processes and practices, changing the functions of existing teams, and often integrating the processes and practices of more than one agency into a single customer experience. Progressing such change is far more challenging than developing a new online service.

Source: (OECD, 2023[1]).

Attracting, developing and retaining talented staff

To support a shift towards digital government, investment is needed in developing the skills of civil servants (Burtscher, Piano and Welby, 2024[13]). Social security organisations need to attract, develop and retain staff who are equipped for ongoing digital transformation: people with the necessary skills and mindsets. A continuum of skills is required. At one end are frontline staff and senior decision-makers, who may need to be data aware and/or data capable (that is, confident using data to make decisions); at the other end are technical experts. A recent OECD working paper that reviews good practices across OECD countries to foster skills for digital government presents different approaches in public administration to providing both training activities and informal learning opportunities. It also provides insights into how relevant skills can be identified through competence frameworks, how they can be assessed, and how learning opportunities can be evaluated (Burtscher, Piano and Welby, 2024[13]).

A broad range of technical expertise is necessary, for example, to collect, organise, and analyse data across different institutions; to exploit new data sources to better inform policy making; to improve the technical infrastructure; and to evaluate programme effectiveness. Specialised staff are also needed to interrogate, evaluate and keep systems and models up to date. The ability to evaluate systems is crucial not only for their basic functioning but also to ensure that they are not discriminatory or regenerating pre‑existing bias.

The skills required are even more specialised the more advanced and complex the emerging systems of data and analytics become (Redden, Brand and Terzieva, 2020[14]). Many relevant skills are already in short supply, in both social security institutions and in the broader labour market. For example, the Canada Revenue Agency experienced inefficiencies in their Chat Services Project relating to a lack of specialist staff to undertake a complex project that was treading new ground and being innovative. Given rapidly developing technologies, skill requirements will likely increase and change over time, which risks exacerbating the human resource challenges organisations face (ISSA, 2022[15]; Ranerup and Henriksen, 2020[16]). In addition, the public sector can struggle to compete with private sector salaries for highly sought after technical roles such as data scientists.

Given that certain skills in addition to specialised technical skills are necessary to support digitalisation efforts, for example those of content experts and behaviouralists, organisations can benefit from having multidisciplinary teams (ISSA, 2022[15]; OECD, 2022[17]). While some expertise can be developed within social security institutions, it is not always straightforward or desirable for welfare officials to transition from claims processing and benefit design to managing data innovation and advanced analytics projects. As such, the effectiveness of digital and data-driven improvements and innovations may depend on the way welfare officers interact with the system (Lokshin and Umapathi, 2022[18]).

Welfare experts are still required to interact with service users and for their knowledge about the needs of those service users, application processes and available service providers. When Sweden introduced automatic social assistance decision-making, welfare officers’ roles changed, but they were still needed to offer help and support to applicants as they underwent the process of applications and appeals in the automatic system (Ranerup and Henriksen, 2020[16]).

Multidisciplinary teams can be particularly valuable when deploying AI and predictive models, to help to ensure that decisions generated by these advanced analytical methods are accurate, explainable, and fair. AI is increasingly focused on how to act in unknown and complex situations. It will therefore be important to evaluate its performance against a range of metrics, informed by different fields, including statistics, philosophy and social science (ISSA, 2020[19]).

Cross-government co‑operation

Social protection systems sit within broader system and policy settings such as education, health, employment and tax policy, family and children policy, housing, legal aid and financial services (McClanahan et al., 2021[20]). Successful implementation of digital solutions aimed at improving social protection may require co‑operation across government agencies, which can be costly in terms of time and financial resources. This makes technology solutions, particularly those requiring significant co‑ordination, difficult to achieve in practice (OECD, 2022[8]; McClanahan et al., 2021[20]).

The “Chile Grows with You” (Chile Crece Contigo) policy, for example, which was implemented in 2006 as a holistic approach to early childhood development benefits and services, had to scale back its ambitions. While in principle the policy envisaged a high degree of cross-sectoral co‑ordination and even full integration, including shared policy making, one study found that co‑ordination was in fact limited to inter-sectoral financial transfers from the lead ministry (Ministry of Social Development) to other ministries involved. Multi‑agency plans and budgets were not prepared, followed, or assessed. Rather, co‑ordination in practice was limited to identifying performance indicators and sectoral contractual agreements. The education sector was not included in key decisions at all, despite the implications of the policy for it (McClanahan et al., 2021[20]).

Effective co‑operation and co‑ordination are particularly important when a project requires government agencies to share data. This requires not only a mutual willingness to co‑operate, but also practical agreements for shared resources, regulations and infrastructure (OECD, 2023[21]). Australia experienced this when developing the National Disability Data Asset using the new Data Availability and Transparency Act to undertake a multi‑agency data sharing project. Through the initiative, Australia has found that successfully establishing multi‑agency arrangements requires commitment, time, co‑operation, and mutual respect, with both vertical (different levels of government) and horizontal (different levels within government, from officer to Ministerial level) co‑operation.

Challenges involved in reaching practicable information-sharing agreements are also highlighted in a Canadian example, where issues around ownership and control of data, particularly for Indigenous populations, have required active collaboration within and between federal, provincial and territorial governments (Box 5.4).

Box 5.4. Cross-government information sharing as a key challenge for service digitalisation in Canada

While advancements in service digitalisation have accelerated in Canada over the past five years, information sharing across governmental entities and between levels of government remains a gap in the current Canadian context. Privacy and enabling programme legislation, data security requirements, in addition to Ownership, Control, Access, and Possession (OCAP) considerations for First Nations, Indigenous, and Métis populations are all elements that require review and analysis. Adjustments will be necessary to ensure that when data are shared, all laws and regulations are respected.

These elements are under active exploration and collaboration within and between Federal/Provincial/Territorial government officials. The establishment of a Digital ID is a key file being advanced at the most senior levels across Federal/Provincial/Territorial governments, with a view to also enabling OCAP for all Digital ID users and removing barriers to data sharing within and across governments. Shared credentialing use is also expanding.

Source: (OECD, 2023[1]).

Investing in the necessary infrastructure

Modern IT infrastructure and processes are essential foundations for the provision of effective digitalised public services. In many cases it will be necessary to modernise existing infrastructure prior to or in conjunction with digital and data transformation(s). In 2021‑22 the OECD supported Lithuania to develop a new approach to personalised services for people in vulnerable situations which included reviewing Lithuania’s IT infrastructure. The OECD recommended that Lithuania modernise the IT infrastructure for both social and employment services to better support service provision including digital service offerings, involving end-users throughout each phase of the modernisation process (OECD, 2023[22]).

Investments in IT transformations require well-scoped and costed business cases to convince governments to make what are often significant investments in digital systems, particularly given potential benefits can be uncertain and often materialise in the longer-term. The complexity of designing and iteratively implementing an integrated digital system that fully responds to the changing needs of users at all levels of administration, while also placing people at the centre, is often under-estimated. The time and cost, not only for set-up, but also for take‑up, maintenance and continuous adaptation, need to be considered. Ultimately, the cost for people to access and use a system needs to be minimal, and the benefits tangible to all. If the benefits are not visible, the risk is failure, i.e. the new system is not used or, worse, creates significant setbacks (Barca and Chirchir, 2019[23]).

Investment cases should also consider the needs of marginalised groups who may lack access to the infrastructure and skills necessary to benefit from technology advancements. For example, Internet connections may be sparse or unreliable in rural or geographically isolated areas, and some groups may not have access to devices. In addition, there may be skill gaps among current and potential applicants that need to be addressed.

Depending on the extent of infrastructure development or modernisation required, governments may not have all the necessary capabilities and capacities. While development and maintenance tasks can be contracted out, governments should take care to ensure they retain system ownership. Private development partners may play a helpful role in building and maintaining technical solutions. For instance, the pension insurance DRV-Bund in Germany was able to use technology from a major cloud provider to cut the costs involved in integrating a chatbot into its website (ISSA, 2022[24]). Likewise, British Columbia partnered with an academic institution to support the development of its Data Innovation Programme. However, governments may expose themselves to both short- and long-term risks if they do not retain ownership of systems and data when managing their public-private partnerships. This is particularly important where social protection organisations are nascent and evolving, such as in low- and middle‑income countries (Barca and Chirchir, 2019[23]).

5.2.2. Getting the data right

Countries are increasingly collecting, sharing and using more data. Some countries are creating new data, for example through increased linking of administrative datasets across government agencies and making that data more widely available in useable formats. A small number of countries are also testing the value of using new or non-traditional data sources such as cellular phone or banking data for policy and research and to improve service design and delivery. Governments are carefully considering how they manage the challenges of optimising the value of their expanding data holdings.

Greater use of data can help to drive efficiency, effectiveness and innovation. However, if something goes wrong, for example sensitive information is made available when it should not have been, it may harm not only the individual(s) involved but also damage public trust and confidence. This is particularly acute in social services where much of the information used is people’s personal, and often highly sensitive, information. For instance, if abusers of victims/survivors of domestic violence access classified information through privacy leaks, they may expose their victims to further violence (OECD, 2023[21]). Another example is the potential misuse of a person’s health data by an employer to discriminate against them in the workplace.

The answer, however, is not to avoid the use of data because of potential harms. There are both individual and public benefits to, for example, providing social services and evaluating their effectiveness. While Article 12 of the Universal Declaration of Human Rights states that no one shall be subjected to arbitrary interference with their privacy, family, home or correspondence, Article 27 specifies that everyone has the right to freely participate in the cultural life of the community, to enjoy the arts and to share in scientific advancement and its benefits; arguably this includes the right to benefit from data and technology advancements. All OECD countries have legal safeguards in place to mitigate the risks associated with the collection, use and disclosure of personal information to ensure information is used in a responsible, transparent, and trustworthy way. There is also an increasing number of ways in which countries are protecting people’s data that go beyond laws and regulations, some of which are described below.

Data governance

Good data governance plays a fundamental role in helping governments and agencies become more data driven as part of their digital strategy and is critical to governments maximising the benefits of data access and sharing, while addressing related risks and challenges. The OECD describes data governance as a diverse set of arrangements, including technical, policy, regulatory or institutional provisions, that affect data and their cycle (creation, collection, storage, use, protection, access, sharing and deletion) across policy domains and organisational and national borders (OECD, 2024[25]). The characteristics of a mature data organisation might include data informing a continuous evolution of business strategy, the organisation constantly looking for ways to leverage new datasets, the right data protection measures being in place and data governance integrated into business processes.

Enabling the right cultural, policy, institutional, organisational, and technical environment is necessary to realise the value of data. Yet, organisations often face legacy challenges inherited from analogue business models, ranging from outdated data infrastructures and data silos to skill gaps, regulatory barriers, a lack of leadership and accountability, and an organisational culture which is not prone to digital innovation and change. New challenges have also arisen from the misuse and abuse of people’s data. Furthermore, governments struggle to keep up with technological change and to fully understand the policy implications of data in terms of trust and basic rights (OECD, 2019[26]).

To achieve a data-driven public sector, the OECD proposed a holistic data governance model comprising three core layers (strategic, tactical and delivery) (OECD, 2019[26]). The strategic layer includes leadership, vision and national data strategies, e.g. a data sovereignty strategy in countries with an indigenous and/or ethnic minority population whose conception of data is not the same as that of the democratic regime. The tactical layer enables the coherent implementation and steering of data-driven policies, strategies and/or initiatives. It includes data-related legislation and regulations as instruments that help countries define, drive and ensure compliance with the rules and policies guiding data management, including data openness, protection and sharing. The delivery layer allows for the day-to-day implementation (or deployment) of organisational, sectoral, national or cross-border data strategies.

The social sector can learn from the considerable advances that have been made in the health sector, including in data governance, to promote access to personal health data that can serve health-related public interests and bring significant benefits to individuals and society. In December 2016, the OECD Recommendation on Health Data Governance was adopted. It identified core elements to strengthen health data governance and improve the interoperability of health data, thereby unlocking its potential while protecting individuals’ privacy (OECD, 2017[27]). The Recommendation provides policy guidance to promote the use of personal health data for health-related public policy objectives, while maintaining public trust and confidence that any risks to privacy and security are minimised and appropriately managed. It is designed to be technology neutral and robust to the evolution of health data and health data technologies.

In 2022, the OECD’s Health Committee, in co‑operation with the Committee on Digital Economy Policy, provided a report on how the Recommendation was being implemented (OECD, 2022[28]). The results of a survey that informed the report showed that many countries were still working toward implementation of the Recommendation. Among those countries that had lower scores for data governance, there were gaps in data privacy and security protections for key health datasets, such as having a data protection officer, providing staff training, access controls, managing re‑identification risks, and protecting data when they are linked and accessed. The OECD agreed it would continue to support the implementation and dissemination of the Recommendation and that a new series of country reviews of health information systems would be used to support countries in their efforts to develop health data governance.

The 2023 Health at a Glance contains a thematic chapter, Digital Health at a Glance, which examines the readiness of countries to advance integrated approaches to digital health. The focus is on a non-exhaustive list of indicators of readiness to realise benefits from digital health while minimising its harms. The chapter also provides the groundwork for a more comprehensive approach to a robust suite of digital health indicators for readiness over time. While data are not currently available across all dimensions of digital health readiness (analytic, data, technology and human factor readiness), the chapter details the dimensions of a framework and signals where more regular data collection is needed (OECD, 2023[29]).

Data accuracy

Data quality is central to realising the potential of greater data use and is particularly important to initiatives aimed at improving social protection coverage, including through the use of predictive models and automated decision-making based on AI (Osoba and Welser, 2017[30]). Ideally, data need to be inclusive, timely and complete. No one data source is comprehensive. Administrative data, for example, have shortcomings in that records only cover those who are registered in government systems, which may exclude, misrepresent or even overrepresent some groups. Administrative data are also often criticised for being deficit based because they focus on the negative rather than positive aspects of a person’s life, such as benefit receipt or being known to the justice system. Furthermore, the conditions and/or incentives for people to provide accurate data do not always exist.

Survey data also has limitations. While countries have developed surveys that attempt to cover traditionally marginalised and excluded groups and to collect more sensitive information, achieving better representation remains a challenge. Surveying hard to reach groups is both complex and expensive.

Access to timely data is important, particularly for operational purposes. As Employment and Social Development Canada (ESDC) found when developing the Canadian E‑vulnerability index, a key challenge is to find ways to ensure that data are both high-quality and timely (Box 5.5). Poor-quality or incomplete data may result in shortcomings in model predictions and automated decisions. For instance, research undertaken in Canada suggests that using poor-quality data (in the form of duplicate values) for predictive decision support in child protection services can create errors leading to sub-optimal foster care placement (Vogl, 2020[31]). Errors may compound when models rely on integrated data from various data sources and agencies.

Issues associated with incomplete data may have specific implications for disadvantaged and marginalised groups. For instance, certain populations might be over- or under-represented in datasets due to different experiences, statistical definitions and measurement. For example, Chile reports that undocumented migrants are underrepresented in its social registry as they typically do not have a national identification number, as are residents of very remote communities (such as islands) due to limited outreach or mobile connectivity. Similarly, OECD research shows that homelessness amongst women is typically underreported because homeless women tend to be less visible and are harder to capture in standard data collection approaches. Furthermore, those temporarily sleeping in domestic violence shelters are not statistically defined as homeless in around half of OECD countries, even though domestic violence is a leading cause of women’s homelessness (OECD, forthcoming[32]).

Box 5.5. The importance of data availability and timeliness – the case of Canada

The E‑vulnerability Index (EVI) uses existing survey data from Statistics Canada, including data from the Census of Population, the Canadian Internet Use Survey, and the Programme for the International Assessment of Adult Competencies.

The EVI is a key data input for analysis and decision-making at ESDC, informing service design and targeted outreach activities to populations most disadvantaged by the move to digital services. Internal users show continuous demand for the EVI, requesting additional data points and disaggregation. However, ongoing challenges related to data availability and timeliness make it harder to improve and update the EVI over time.

While caveats on EVI source data staleness and on any other data limitations are added to publications to inform users of the index limitations, ESDC has also collaborated with key data partners to improve the timeliness of data source accessibility. Additionally, ESDC is exploring alternative methodologies for the EVI compilation, to leverage source data which is available on a timelier and/or disaggregated basis. The EVI will be updated during 2024.

Source: (OECD, 2023[1]; CONADI, 2023[33]).

Data privacy

The data collected and used for social protection can be highly sensitive and managing privacy is a constant challenge. Privacy risks are heightened when sensitive information is used for operational purposes such as generating automated decisions for individuals or contacting people directly (OECD, 2019[34]; OECD, 2013[35]).1 Creation and use of large datasets and data lakes also carry complex challenges that test the ability of governments and agencies to apply all relevant legal frameworks and regulations to protect individuals.

All OECD member countries, and 71% of countries around the world, have laws in place to protect (sensitive) data and privacy (OECD, 2023[36]). Perhaps the best-known instrument is the 2018 EU General Data Protection Regulation (GDPR), which has advanced data protection principles in Europe. The European Union continues to develop its regulatory framework, with the European Parliament and the Council of the European Union reaching a political agreement in June 2023 to amend the European Data Act to harmonise rules on fair access to, and use of, data (European Commission, 2023[37]). The United States is also strengthening its data regulatory regime with several new federal and state bills aimed at changing the way technology firms and privacy regulation work (Fazlioglu, 2023[38]).

Some countries have enshrined the right to privacy in national constitutions or bills of rights. In Chile and Mexico, for example, privacy protection rules have been adopted from constitutions into social assistance operational manuals. The Ministry of Planning and Co‑operation in Chile must legally guarantee Solidario’s beneficiaries’ privacy and data protection. Despite these measures, however, both countries experience significant enforcement gaps which weaken the effect of these regulations (Carmona, 2018[39]). There are also international human rights instruments and conventions that protect privacy, such as the Universal Declaration of Human Rights, while international organisations such as the UN and OECD have created guidelines (Carmona, 2018[39]).

While critically important, multiple regulatory frameworks can cause confusion. They can be complex and difficult to navigate, making it challenging for agencies to act safely and effectively. In addition to having to adhere to overarching data privacy frameworks and laws, there may be other agreements, rules and responsibilities that must be observed, for example in specific legislation or government policies. Recognising this challenge, in 2021 the New Zealand Government introduced the Data Protection and Use Policy. A key aim was to help government agencies and social service providers navigate the various laws, regulations, rules, conventions and guidelines and to ensure the respectful, trusted and transparent use of people’s personal information.

Data breaches are becoming more common with governments finding themselves managing data breaches on an increasingly regular basis. In most cases, government data breaches involve personally identifying data, such as names, Social Security numbers, and birthdates, the loss of which can result in substantial consequences for victims as well as erode public trust in government’s use of data. The risk of a data breach may increase when aspects of a social protection programme are outsourced to a third party. For instance, if elements like payment delivery are managed by private firms, information flows become more complex, requiring additional data security rules related to both data sharing and processing (Carmona, 2018[39]). In the example of outsourcing data management for the Transport Agency in Sweden, there was a departure from the legislation that was supposed to govern data handling that occurred without any malicious intent (Box 5.6).

Countries are adopting a range of measures both to prevent data breaches and to manage them when they occur, such as protective security frameworks, staff training, data loss prevention tools, access controls and guidance for handling personal information security breaches. These efforts are often supported by Privacy or Information Commissioners.

Box 5.6. Risk of data regulation breach in public-private partnerships in Sweden

In 2015, the Swedish Transport Agency experienced a considerable data breach in association with outsourcing of data handling. Confidential data about military personnel, along with defence plans and witness protection details, were exposed. Fortunately, there is no evidence that information was leaked to third parties because of the security breach.

The Swedish Transport Agency had contracted private firm IBM to run its IT systems. The contract included outsourcing maintenance and functioning of hardware, networks and programmes. However, in the process of outsourcing data handling, the director general of the Transport Agency was able to abstain from closely following standard regulations under the National Security Act, the Personal Data Act and the Publicity and Privacy Act.

Investigations by the Swedish Security Service and the Transport Agency found that IBM staff without the necessary security clearances had been able to access confidential information. While the data were found to have been exposed to non-cleared staff, there was no evidence that IBM had mishandled the information.

Overreach and lack of legal basis

Linking data across agencies and providers, which is becoming increasingly common, raises complex issues regarding informed consent. It can be difficult to predict when someone’s information is collected for a particular administrative purpose whether it will be linked with data from other sources and used for other purposes such as research, data analytics, or even enrolment in other programmes. This makes informed consent difficult (Lokshin and Umapathi, 2022[18]).

Data integration can introduce the potential for overreach, i.e. a deviation from the intention under which the data were originally collected (Levy, Chasalow and Riley, 2021[42]), and there are examples of integrated datasets created for one purpose being used for another. For example, the Florida Department of Children and Families collected multidimensional data on students’ education, health, and home environment. However, these data were subsequently interfaced with Sheriff’s Office records to identify and maintain a database of juveniles who were at risk of becoming prolific offenders.

Historically, some social protection agencies have failed to fully consider the legal and ethical implications of automating a process or system. The United Nations Special Rapporteur for Extreme Poverty and Human Rights notes several cases where automated systems were implemented without paying sufficient attention to the underlying legal basis. For instance, in February 2020 the District Court of the Hague ordered an immediate halt to the Netherlands’ System Risk Indication system because it violated human rights norms. In June 2020, the Court of Appeal ordered the United Kingdom’s DWP to fix a design flaw in Universal Credit which was causing irrational fluctuations and reductions in how much benefit some people received (Special Rapporteur on extreme poverty and human rights, 2019[43]).

5.2.3. Mitigating the risks of further entrenching discrimination, bias and exclusion

Discrimination, stigmatisation and exclusion can result from use of models and automated systems

There is a risk that discrimination, stigmatisation and exclusion can result from the use of predictive models, automated decision-support tools, and other targeting mechanisms. Several factors can cause discriminatory outcomes, including algorithmic bias (i.e. systematic and replicable errors in computer systems, for example where algorithms have been trained on datasets reflecting existing prejudices), unevidenced variable selection or poorly constructed criteria, an algorithm being used in a situation for which it was not intended, and/or the use of poor-quality, including biased, data.

Evaluations of algorithmic decisions have found they can be discriminatory even when variables by which discrimination can be measured, such as gender, ethnicity or age, are themselves not included. As Osoba and Welser (2017, p. 17[30]) state, “applying a procedurally-correct algorithm to biased data is a good way to teach artificial agents to imitate whatever bias the data contains”. Data may be biased for different reasons. First, certain population groups could be over- or under-represented. Second, an algorithm may mirror decisions taken by biased individuals. Third, algorithmic decision support can create self-reinforcing feedback loops: when attention is focused on a certain population group, more data are gathered about that group, which may then provide evidence that even more attention should be focused on it (O’Neil, 2016[44]).
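The point that a rule can produce unequal outcomes without ever using a protected characteristic can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the groups "X" and "Y", the `irregular_income` variable and the toy population are hypothetical and do not describe any country's system.

```python
def selection_rates(people, rule):
    """Share of each group that a rule selects for review."""
    totals, selected = {}, {}
    for person in people:
        group = person["group"]
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + (1 if rule(person) else 0)
    return {group: selected[group] / totals[group] for group in totals}


def flag_rule(person):
    # Hypothetical rule: it looks only at irregular income, never at the group.
    return person["irregular_income"]


# Hypothetical population in which irregular income is more common in group X.
PEOPLE = (
    [{"group": "X", "irregular_income": True}] * 6
    + [{"group": "X", "irregular_income": False}] * 4
    + [{"group": "Y", "irregular_income": True}] * 2
    + [{"group": "Y", "irregular_income": False}] * 8
)

SELECTION = selection_rates(PEOPLE, flag_rule)
print(SELECTION)
```

In this toy population the rule selects 60% of group X and 20% of group Y, because the input variable acts as a proxy for group membership. Comparing selection rates across groups in this way is one simple check that can be run even when the protected characteristic is not a model input.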

Examples of discriminatory outcomes resulting from the use of algorithms suggest that disadvantaged groups are more likely to be exposed to these outcomes than others. For example, in Austria an algorithm was used to allocate job applicants into two groups: one receiving a higher degree of job search support and one receiving less. However, it discounted the chances of employment among groups with certain characteristics who already tended to face disadvantages in the labour market in a way that disproportionately allocated them to the group receiving less support (Box 5.7).

The algorithm used to predict the risk of fraud among recipients of France’s Family Allowances Fund (CNAF) has been criticised for several reasons. One criticism is that the algorithm targets people in precarious positions because the risk factors it uses are themselves correlated with precariousness. For example, higher risk scores are allocated to individuals who must file complex income declarations (for APL, activity bonus, disabled adult allowance, etc.), which has allegedly meant that those on minimum-income benefits are disproportionately likely to be selected for checks (Benoît Collombat, 2022[45]).

Similarly, the automated means-testing algorithm that underpins the Universal Credit programme in the United Kingdom has been criticised for miscalculating some individuals’ entitlements, causing benefit entitlements to fluctuate significantly. Monthly earnings are a key input variable and, for those whose earnings are irregular, such as contractors and other workers in insecure jobs, the algorithm can perform poorly (Human Rights Watch, 2020[46]). This is particularly problematic because it disproportionately affects those who are already in precarious situations and who earn income from several and/or insecure jobs.

New research in the United States shows that Black American taxpayers are three to five times more likely to be audited on their tax returns, compared to other taxpayers (Hadi Elzayn et al., 2023[47]). Although the tax collection agency does not collect information on race, the algorithms used to select tax units for auditing have created a racial bias. People filing for the Earned Income Tax Credit are more likely to be selected for audits. The IRS has identified this problem in the algorithm and is making changes to how people are selected for audit.

Box 5.7. Risk of predictive models leading to misleading results in Austria

The Austrian Government used an algorithm to predict jobseekers’ employment prospects with the aim of tailoring employment support interventions for individuals. Services that actively help jobseekers into jobs, such as job search assistance and job placements, are prioritised for those who are predicted to have moderate employment prospects. Those who are predicted to have low employment prospects are allocated to crisis support measures.

However, studies show that the algorithm discounts the employment prospects of women over 30, women with care responsibilities, migrants, and persons with disability. Systematically misclassifying these groups of people risks limiting them to crisis support rather than active employment support thereby reducing their chances of entering employment. This is not only discriminatory, but also weakens the chances of groups who tend to already face disadvantages in the labour market.

Errors and biases in models and automated systems can be hard to detect. Explaining algorithmic models is complex and, in some cases, impossible because they are both inscrutable and nonintuitive (Selbst and Barocas, 2018[50]). This can result in errors going undetected until many people are affected (Redden, Brand and Terzieva, 2020[14]). Australia’s Robodebt Scheme, introduced in 2015 to assess entitlements to payments, highlights the challenges of detecting a systemic issue in an automated system. While individual members of the Administrative Appeals Tribunal (the body responsible for conducting merit reviews of administrative decisions under Australian federal government laws) noted problems with overpayment calculations, the systemic nature of the problem was not identified immediately, and the scheme continued to operate until 2019 (see Box 5.8).

Box 5.8. Robodebt in Australia

From 2015 to 2019 the Department of Human Services implemented a debt recovery scheme – Robodebt – to recover overpayments to welfare recipients dating back to 2010‑11. To calculate the overpayments, social security payment data was matched with annual income data from the Australian Taxation Office and a process known as “income averaging” was used to assess income and benefit entitlement. Debt notices would then be issued to affected welfare recipients who would have to prove they did not owe a debt, which was often many years old.

The process both produced inaccurate results and did not comply with the income calculation provisions of the Social Security Act 1991. Despite adverse findings by the Administrative Appeals Tribunal in some cases, the systemic nature of the problem was not immediately identified, and the scheme continued to operate. By the end of 2016, the scheme was the subject of heavy public criticism, but it continued until November 2019, when it was announced that debts would no longer be raised solely based on averaged income. That was followed in 2020 by the settlement of a class action and an apology, in June 2020, from the then prime minister, the Hon Scott Morrison. A 2022 Royal Commission into the Robodebt Scheme made 57 recommendations as the result of its inquiry; the recommendation relating to automated decision-making is discussed below.
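Why averaging annual income can produce inaccurate results may be easier to see with a worked sketch. The payment rule below (maximum payment, income-free area, taper rate and 26 fortnightly periods) is entirely hypothetical and is not the calculation used in the scheme or set out in the Social Security Act 1991; it only illustrates the general mechanism of spreading annual income evenly across periods.

```python
# All parameters are hypothetical and chosen only to make the arithmetic easy.
MAX_PAYMENT = 500.0  # full payment per fortnight
FREE_AREA = 100.0    # income ignored before the payment is reduced
TAPER = 0.5          # reduction per unit of income above the free area


def entitlement(income):
    """Payment due for one fortnight under the hypothetical rule."""
    reduction = max(0.0, income - FREE_AREA) * TAPER
    return max(0.0, MAX_PAYMENT - reduction)


def apparent_debt(actual_income, on_benefit):
    """Debt implied when averaged income replaces actual fortnightly income.

    actual_income: income earned in each fortnight of the year.
    on_benefit: whether the person received a payment in that fortnight.
    """
    averaged = sum(actual_income) / len(actual_income)
    # What was correctly paid, based on income actually earned in each period.
    paid = sum(entitlement(inc) for inc, on in zip(actual_income, on_benefit) if on)
    # What the averaged figure suggests should have been paid.
    assessed = sum(entitlement(averaged) for on in on_benefit if on)
    return max(0.0, paid - assessed)


# Someone who worked for half the year, then had no income and claimed support.
income = [2000.0] * 13 + [0.0] * 13
on_benefit = [False] * 13 + [True] * 13
DEBT = apparent_debt(income, on_benefit)
print(DEBT)

# Someone with steady income all year is unaffected by averaging.
STEADY_DEBT = apparent_debt([300.0] * 26, [True] * 26)
print(STEADY_DEBT)
```

In the first case the person was correctly paid in every fortnight, yet averaging attributes income of 1 000 to each fortnight on benefit and implies a debt of 5 850. In the second case actual and averaged income coincide and no debt arises, which shows that the error falls on people with uneven earnings.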

Errors and biases can make someone appear ineligible for a benefit they are legally entitled to – a false negative (OECD, 2019[34]). A false negative is an outcome where the model incorrectly predicts the negative class resulting in some individuals receiving less “treatment” or services than they need which may result in poorer outcomes for some priority groups. A false positive is an outcome where the model incorrectly predicts the positive class, and some individuals may receive more “treatment” or services than they need which can result in an inefficient allocation of resources. Both have implications although research suggests people are more concerned with avoiding false negatives.
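The two error types can be measured separately for each population group, which is one way of checking whether errors fall disproportionately on priority groups. The following is a minimal sketch; the group labels and the sample records are hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute false negative and false positive rates per group.

    records: iterable of (group, actually_eligible, predicted_eligible).
    """
    counts = defaultdict(lambda: {"fn": 0, "fp": 0, "pos": 0, "neg": 0})
    for group, actual, predicted in records:
        c = counts[group]
        if actual:
            c["pos"] += 1
            if not predicted:
                c["fn"] += 1  # eligible, but predicted ineligible
        else:
            c["neg"] += 1
            if predicted:
                c["fp"] += 1  # ineligible, but predicted eligible
    return {
        group: {
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else None,
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
        }
        for group, c in counts.items()
    }


# Hypothetical records: (group, actually eligible, predicted eligible).
SAMPLE = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", True, True),
    ("A", False, False), ("A", False, True),
    ("B", True, False), ("B", True, False), ("B", True, True), ("B", True, True),
    ("B", False, False), ("B", False, False),
]

ERROR_RATES = error_rates_by_group(SAMPLE)
print(ERROR_RATES)
```

In this sample, group B has a false negative rate of 0.5 against 0.25 for group A, meaning eligible people in group B are twice as likely to be wrongly predicted ineligible. An aggregate accuracy figure would not reveal this difference.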

Exclusion

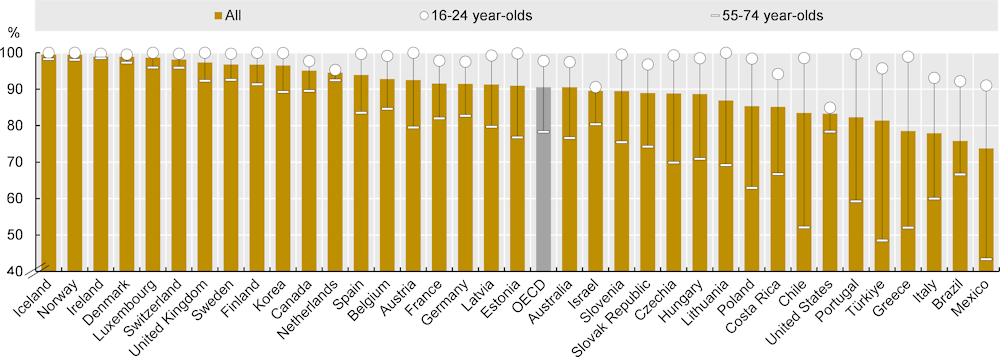

Increased reliance on digitalised services risks excluding people without digital access. Further, these people are likely to be the same people who already suffer poorer access to social services. Globally, more than 84% of national governments now offer at least one online service (ISSA, 2022[52]). Despite the increased opportunities digital services present, access to, and use of, digital infrastructure and tools is uneven. An estimated 2.9 billion people do not use the Internet. The digital divide is even starker when viewed through the lens of age, gender, poverty and location (ISSA, 2022[52]). Across the OECD, 22% of 55‑74 year‑olds state that they do not use the Internet; in Türkiye and Mexico it is more than 50% (Figure 5.1).

The risk of exclusion extends to linked datasets that governments increasingly use to determine eligibility for services and benefits. For example, the Canadian benefit system faces problems including Indigenous populations in the linked databases that provide the foundation for benefit eligibility (Box 5.9).

Figure 5.1. One‑fifth of 55‑74 year‑olds across the OECD do not use the Internet

Internet users by age, as a percentage of the population in each age group, 2021

Source: OECD (2022), ICT Access and Usage by Households and Individuals Database, http://oe.cd/hhind (accessed in January 2022).

The literature is very clear: while the potential positive impacts of the digital transformation are substantial, without deliberate efforts to correct digital inequities, it may compound existing vulnerabilities. Access to the Internet and relevant devices, such as a mobile phone, will be critically important to how people benefit from new services (OECD, 2022[17]), and people will also need the necessary skills and capacities to use relevant technologies and devices to make use of services (ISSA, 2022[52]).

Many countries are already actively working to address this challenge with governments including in their digital strategies explicit provisions to promote digital inclusion for those more likely to miss out. Other approaches include engaging directly with people and providing training, intermediation or subsidies for devices. In the United States for example, eligible households can access the Affordable Connectivity Program which helps ensure households can afford the broadband they need for work, school and healthcare. The benefit provides a discount of up to USD 30 per month toward internet service for eligible households and up to USD 75 per month for households on qualifying Tribal lands. There is also a one‑time discount of up to USD 100 to purchase a device. However, due to funding constraints, this programme will no longer be accepting new applications after February 2024.

Governments are also taking indirect approaches, working on language and communication, improvements to the user experience and creating intuitive user interfaces (ISSA, 2022[52]). For instance, the German Social Insurance for Agriculture, Forestry, and Horticulture used a website as a forum to disseminate information about the rights of marginalised migrant seasonal workers in the languages most frequently spoken and understood (Box 5.9).

Box 5.9. Including everyone in digitalised solutions

Canada: Indigenous populations face difficulties accessing key social benefits

In Canada, several important social benefits at the federal level (e.g., Canada Child Benefit, Canada Workers Benefit) and at the provincial level are delivered through or linked to the tax system. To be eligible, individuals must therefore complete a tax return. In 2021, Statistics Canada reported just over 28.1 million tax filers, or roughly 87% of the population aged 15 years and over. This suggests about 13% of Canadians are not filing a tax return and are potentially not receiving benefits from key social programmes.

Rates of non-tax filing are particularly elevated among Indigenous populations. There are several reasons for this, including that Indigenous populations in Canada may be concerned that the disclosure of personal and financial information to the government might ultimately cause them harm. For instance, a report by Prosper Canada found that Indigenous peoples fear that applying for and receiving benefits may lead to “scrutiny by social services and potential removal of children” or that “additional one‑time income may jeopardise needed housing or childcare subsidies.” This heightened distrust in government likely stems from historical experiences of discrimination.

Indigenous people are also more likely to lack personal identification, such as a birth certificate, which is required to obtain a social insurance number, itself a requirement for filing taxes and accessing many social benefits.

Geographical remoteness is another key barrier to completing tax returns, especially for Indigenous populations. There are many reasons why remoteness presents a barrier, including the lack of access to the Internet for online tax filing software and virtual support. Indeed, only 43% of First Nations reserve areas and 49% of the North had 50/10 unlimited broadband coverage (50 Mbps download and 10 Mbps upload) in 2021. This compares to about 91% of all Canadian households.

Germany: Communicating information about workers’ rights to non-native speakers

The German Social Insurance for Agriculture, Forestry, and Horticulture aimed to promote safety at work and protect the health of workers. However, it realised that seasonal workers might have knowledge gaps about occupational health and safety. To address this, it developed a web platform in 2021.

To ensure that seasonal workers, many of whom are from Central and Eastern Europe, can access information, the platform was made available in ten languages. It prioritises clarity of information and contains a mix of text, images, and videos to ensure that information is easily digestible.

The platform also contains a section with an updated list of real-world questions asked by workers, and points users to the telephone and email for other queries.

Mitigating the risks of generating discrimination, stigmatisation and exclusion

The increasing digitalisation of public services means that issues associated with implementing automated systems, including the use of algorithms, will continue to arise, and governments need to have appropriate accountability frameworks and procedures in place. Without them, technology and data-driven innovations risk disempowering and disengaging people and eroding public trust and confidence, as discussed earlier. Principle 1.5 of the OECD’s AI principles (discussed in Chapter 4), which arguably can be usefully applied beyond AI technologies, specifies that AI actors should be accountable for the proper functioning of AI systems, based on their roles, the context, and consistent with the state of art (OECD, 2019[61]).

In the Netherlands, nearly 26 000 families were falsely accused of fraud by the Dutch tax authorities between 2005 and 2019 due to discriminatory algorithms. Risk profiles were created for individuals applying for childcare benefits in which “foreign sounding names” and “dual nationality” were used as indicators of potential fraud. As a result, thousands of (racialised) low- and middle‑income families were subjected to scrutiny, falsely accused of fraud, and asked to pay back benefits they had obtained legally, which in many cases amounted to tens of thousands of euros. The consequences were devastating. Families went into debt, and many ended up in poverty, with some losing their homes and/or jobs. More than 1 000 children were placed in state custody as a result (The European Parliament: parliamentary question, 2022[62]). The Dutch Government’s lack of action and accountability, even after it was clear something was wrong, led to the eventual resignation of the government in 2021.

Incidents such as the Dutch childcare benefit scandal and the Robodebt Scheme in Australia offer important lessons for how the potentially negative impacts of automated systems and algorithms can be mitigated. According to Assistant Professor Błażej Kuźniacki, lack of transparency was one of the causes of the Dutch scandal. Dutch legislation did not allow AI automated decision-making to be checked and there was not enough human interaction; further, procedures were too automated and secretive. AI was allegedly able to use information that had no legal importance in decision making, such as sex, religion, ethnicity, and address, which can lead to discriminatory treatment. If tax authorities are not able to explain their decisions, they cannot justify them effectively. The higher the risks, the higher the explainability requirements should be (Błażej Kuźniacki, 2023[63]).

Two of the Australian Royal Commission into the Robodebt Scheme’s 57 recommendations specifically addressed automated decision-making:

Recommendation 17.1: Reform of legislation and implementation of regulation

The Commonwealth should consider legislative reform to introduce a consistent legal framework in which automation in government services can operate.

Where automated decision-making is implemented:

there should be a clear path for those affected by decisions to seek review,

departmental websites should contain information advising that automated decision-making is used and explaining in plain language how the process works,

business rules and algorithms should be made available, to enable independent expert scrutiny.

Recommendation 17.2: Establishment of a body to monitor and audit automated decision-making

The Commonwealth should consider establishing a body, or expanding an existing body, with the power to monitor and audit automated decision-making processes regarding their technical aspects and their impact in respect of fairness, the avoiding of bias, and client usability.

The Australian Government accepted, or accepted in principle, all recommendations made by the Royal Commission into the Robodebt Scheme, including recommendations 17.1 and 17.2.

The Australian Government accepted recommendation 17.1, and committed to consider opportunities for legislative reform to introduce a consistent legal framework in which automation in government services can operate ethically, without bias and with appropriate safeguards, which will include consideration of:

review pathways for those affected by decisions, and

transparency about the use of automated decision-making, and how such decision-making processes operate, for persons affected by decisions and to enable independent scrutiny.

The Australian Government accepted recommendation 17.2 and agreed to consider establishing a body, or expanding the functions of an existing body, with the power to monitor and audit automated decision-making (ADM) processes.

Both cases highlight the critical importance of transparency and explainability and the need for meaningful human involvement, particularly when automated decisions can potentially have a significant impact on people’s lives. Transparency involves disclosing when automated systems are being used, e.g. to make a prediction, recommendation or decision, with disclosure being proportionate to the importance of the interaction. Transparency also includes being able to provide information about how an automated system was developed and deployed, what information was used and how, how an output was arrived at, who is responsible for that output and how it can be appealed. An additional aspect of transparency is facilitating, as necessary, public, multi-stakeholder engagement to foster general awareness and understanding of automated systems and to increase acceptance and trust (OECD, 2019[64]).

Explainability is the idea that an automated system or algorithm and its output can be explained in a way that “makes sense” to people at an acceptable level, enabling those who have been adversely affected by an output to understand and challenge it. This includes providing – in clear and simple terms, and as appropriate in the context – the main factors included in a decision, the determinant factors, and the data, logic or algorithm used to reach a decision (OECD, 2019[64]). Some algorithms are more readily explainable but potentially less accurate (and vice versa). Requiring explainability may therefore reduce the performance of an algorithm, but in some cases the value of being able to explain a decision may outweigh that loss.

There should always be a degree of human involvement in automated decision-making, proportionate to the potential impact of the outputs generated. Principle 1.2(b) of the OECD’s AI principles specifies that AI actors should implement mechanisms and safeguards, such as capacity for human determination, that are appropriate to the context and consistent with the state of art (OECD, 2019[61]).

Article 22 of the GDPR stipulates that organisations deploying automated decision-making under permissible uses must “implement suitable measures to safeguard the data subject’s rights and freedoms and legitimate interests.” These measures shall include, at least, the rights “to obtain human intervention on the part of the controller, to express his or her point of view and to contest the decision” (Sebastião Barros Vale and Gabriela Zanfir-Fortuna, 2022[65]). While inserting humans into the loop of automated systems is a crucial way of helping to achieve accountability and oversight, this does not come without challenges. For example, what level of oversight, accountability and liability is attached to human-made decisions? What qualifications and/or expertise are required to question an automated decision?

European Data Protection Board guidelines on automated individual decision-making and profiling state that a controller cannot avoid Article 22 provisions by fabricating human involvement. For example, if someone routinely applies automatically generated profiles to individuals without any actual influence on the result, this would still be a decision based solely on automated processing. To qualify as human involvement, the controller must ensure that any oversight of the decision is meaningful, rather than just a token gesture. It should be carried out by someone who has the authority and competence to change the decision. The controller should identify and record the degree of any human involvement in the decision-making process and at what stage this takes place (European Data Protection Board, 2017[66]).
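The recording requirement described above can be illustrated with a minimal sketch. It assumes a hypothetical case-management record in which an agency logs who reviewed an automated output, at what stage, and whether that person had the authority and competence to change it. All class, field and function names are illustrative assumptions, not part of the guidelines, and the check is a simplification rather than a legal test.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HumanReview:
    """Hypothetical log entry for one human review step (illustrative fields)."""
    reviewer_role: str
    stage: str                     # when the review took place, e.g. "before notification"
    has_authority_to_change: bool  # reviewer is allowed to overturn the automated output
    has_competence: bool           # reviewer is trained to assess the automated output
    examined_case: bool            # reviewer actually assessed the case, not a rubber stamp


@dataclass
class DecisionRecord:
    """Hypothetical record linking an automated output to the final decision."""
    case_id: str
    automated_output: str
    final_decision: str
    review: Optional[HumanReview] = None


def is_meaningful_review(review: Optional[HumanReview]) -> bool:
    """Simplified check: oversight counts only if the reviewer could and did assess the case."""
    if review is None:
        return False
    return (
        review.has_authority_to_change
        and review.has_competence
        and review.examined_case
    )


def is_solely_automated(record: DecisionRecord) -> bool:
    """Treat a decision as solely automated when no meaningful human review is recorded."""
    return not is_meaningful_review(record.review)
```

Under these assumptions, a record in which a clerk routinely confirms the automated output without examining the case would still be flagged as solely automated, whereas a record showing review by a trained officer with the power to change the outcome would not.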

The right to review an automated decision or output is an important feature of an accountability framework. Those negatively impacted by automated decision-making should be able to appeal a decision and know how to do that. As the OECD’s 2019 Recommendation on AI specifies, those that are adversely affected by an AI system should be able to challenge the outcome(s) of the system based on easy-to‑understand information about the factors that served as the basis for the prediction, recommendation or decision (OECD, 2019[61]). Grievances and investigations should be taken seriously and made publicly available together with the outcome(s) so that lessons can be learned and shared with others undertaking similar work.

Some groups may not know they have been overlooked or may lack the resources to address any issues (Lokshin and Umapathi, 2022[18]; Barca and Chirchir, 2019[23]). Complaint processes should account for this, with public agencies ensuring that marginalised and excluded populations are supported in making any application for a review of a decision. Staff who engage with social security applicants need to be able to explain how a decision was reached and provide information about how that decision can be reviewed. This requires staff to be adequately trained and sufficient complaint processes to be in place. Furthermore, public agencies should consider developing algorithms in-house using internal experts and/or understand and be able to explain algorithms developed by external partners (OECD, 2019[34]).

It may also be necessary for lawyers, judges or other arbitrators to receive training on the functioning and fallibility of algorithms (Citron, 2007[67]; Gilman, 2020[68]). This will help individuals who have been harmed by data breaches, unjustified automated decisions or other negative outcomes to question decision-makers’ actions and take legal action if necessary.

5.3. Embracing the challenges – a way forward

Governments have put considerable effort into measures such as legal, regulatory and accountability frameworks, data governance and management, and strategies and policies to promote the respectful use of people’s personal information and to protect their privacy. International organisations are supporting government efforts, for example by developing legal instruments such as the aforementioned OECD Recommendation on Digital Government Strategies and Recommendation on Health Data Governance, and by sharing good practices internationally, such as through the ISSA’s Webinar Series on AI. The European Law Institute has designed a set of Model Rules on Impact Assessment of Algorithmic Decision-Making Systems to supplement European legislation on AI in the specific context of public administration (European Law Institute, 2022[69]).

These measures are well covered in the literature, and they are continually being improved. This section explores approaches that go further than specific legal and technical solutions, which governments are taking to balance the benefits of rapid developments in technology and data for public services against the challenges of realising them. These are approaches or strategies that improve governments’ overall interactions with people and communities, enhance public trust and confidence, and modernise government operations, and that countries can learn from as they undertake their own digital transformations.

5.3.1. Service offerings through multiple channels