Going Digital Guide to Data Governance Policy Making

3. Cross-cutting policy tensions and objectives for data governance

Abstract

This chapter elaborates three fundamental policy tensions and objectives common to data governance policy making across different domains: balancing data openness and control while maximising trust; managing overlapping and potentially conflicting interests and regulations related to data governance; and incentivising investments in data and their effective re-use. For each of these policy tensions and objectives, it outlines underlying issues and presents promising approaches that can help address them. These approaches are based on the OECD Horizontal Project on Data Governance for Growth and Well-being, on OECD Recommendations relating to data governance, and on relevant policy examples.

3.1. Balancing data openness and control while maximising trust

Balancing the social and economic benefits of “data openness” with its associated risks represents a fundamental policy challenge inherent to the economic properties of data. This section looks at key concepts associated with data openness, as well as associated benefits and risks. It then explores how to balance data openness and control while maximising trust, by fostering a culture of risk management and transparency across the data ecosystem; by leveraging the full spectrum of the data openness continuum to balance risks and benefits; by enhancing users’ agency and control over data through legal means; by supporting adoption of technological and organisational measures to enhance control; and by enhancing technical interoperability for data openness.

Data openness exists along a continuum, with “open data” as one extreme. With each step towards openness, it becomes easier to access, share and re-use data, including across organisational and national borders. As such, the term “data openness” encompasses the ideas of “free flow of data” and “transborder data flows” (subsection 3.1.2).

The degree of data openness is determined by the legal, technical or financial requirements for data access, sharing and re-use.1 Legal requirements are, in turn, defined by policy and regulatory frameworks applicable to data. These include privacy and data protection frameworks, intellectual property rights (IPRs), and national security and other sector-specific frameworks. Trade provisions also increasingly affect the openness of cross-border data flows (OECD, 2022[1]). Contract law further helps determine rights and obligations related to data and thus data openness (subsection 3.2.3). Legal requirements affecting data openness may also be defined by ex post enforcement measures, such as where data portability is mandated as a competition enforcement mechanism (OECD, 2021[2]).

Technical requirements mostly refer to access control mechanisms, as well as specifications and standards required to access data. Finally, financial requirements, including pricing such as that determined by licensing agreements, can also affect the extent to which data users can afford data access and re-use.

A range of social and economic benefits are associated with greater data openness. Evidence suggests that as data openness increases, positive social and economic benefits also increase for data providers (direct impact), their suppliers and data users (indirect impact) and for the wider economy (induced impact) (OECD, 2019[3]). However, the magnitude of these effects will vary by sector and context.2

The social and economic benefits of greater data openness include the following:

enabling greater efficiency and productivity, transparency and accountability across society

boosting sustainable consumption and growth, and enhancing social welfare and health care

improving evidence-based policy making, as well as public service design and delivery

enhancing consumer decision making and empowering users of digital goods and public and private services

facilitating scientific discovery, enhancing its reproducibility, reducing duplication and enabling cross-disciplinary co-operation.

However, data openness can also generate significant risks for individuals and organisations in three broad areas:

Violations of rights such as privacy and intellectual property rights (IPRs): the protection of privacy and to some extent of IPRs3 is often considered the biggest challenge associated with data openness. These risks affect the privacy interests of individuals and the commercial interests of organisations (OECD, 2019[3]). They embody risks of data being used and re-used in ways that violate the applicable legal frameworks and contractual terms, or that deviate from the (reasonable) expectations of stakeholders and the law.

Data and digital security: the risk of breaches or incidents that affect the availability, integrity or confidentiality of data (data security) and of information systems (digital security) also tends to increase with data openness. Opening information systems to access, share or transfer data may expose an organisation to digital security threats that affect data and information systems. The negative impacts of these breaches and incidents may have cascading effects along an entire supply chain. They may also undermine critical information systems, such as those in the health care, finance or energy sectors.

The unethical use of data: more open approaches to data governance may also increase the risk of data use that violates ethical values and norms. These values could include fairness, human dignity, autonomy, self-determination, and the protection against undue bias and discrimination between individuals or social groups. Unethical use of data may still be legal under existing frameworks (OECD, 2021[4]; 2016[5]).

In this context, policy approaches to reconcile the benefits and risks of data openness are needed. Balancing the social and economic benefits of data openness with associated risks represents a fundamental policy challenge inherent to the economic properties of data. On the one hand, data are non-rivalrous, which calls for maximum data openness to maximise the potential spillover benefits of data. On the other hand, data are imperfectly excludable in that organisations and firms cannot generally prevent other people or institutions from re-using their data.

Coupled with their ability to be easily copied and re-shared, these characteristics can imply a risk of loss of control of data that increases with the degree of openness.4 This risk is compounded as data are shared across multiple actors and machines, especially when such sharing crosses multiple jurisdictions (OECD, 2022[1]). Consequently, the policy challenge arises because data openness is positively correlated with both potential benefits and risks.

Many policies promote a “risk-based approach” to data governance to maximise the benefits of openness while minimising risks. Such an approach is helpful as it recognises that risk, like openness, is not binary but increases along a spectrum. This allows stakeholders to adjust the stringency of control and protection measures. In so doing, they can aim for the level of risk agreed upon or expected by stakeholders, while considering social and economic benefits and the public interest.

The rest of this section explores approaches to balance openness and control and to put a “risk-based approach” to data governance into practice. These approaches comprise fostering a culture of risk management and transparency across the data ecosystem (subsection 3.1.1); leveraging the full spectrum of the data openness continuum to balance risks and benefits (subsection 3.1.2); enhancing users’ agency and control over data (subsection 3.1.3); supporting use of technological and organisational measures (subsection 3.1.4); and optimising technical interoperability of data across organisations and sectors (subsection 3.1.5).

3.1.1. Foster a culture of risk management and transparency across the data ecosystem

Stakeholders must be able to trust that risks related to data access, sharing and re-use are well managed. This will allow societies to fully reap the benefits of data-driven innovation. To this end, data governance policies should foster a culture of risk management across the data ecosystem.

OECD (2021[6]) defines the data ecosystem as “the integration of and interaction between different relevant stakeholders including data holders, data producers, data intermediaries and data subjects, that are involved in, or affected by, related data access and sharing arrangements, according to their different roles, responsibilities and rights, technologies, and business models.” All stakeholders, including individuals, should be made aware of risks as far as possible through appropriate transparency practices (OECD, 2019[3]).

Increasingly, privacy and data protection frameworks require businesses to evaluate risks before making decisions relating to data. For example, data controllers might need to conduct “risk assessments” to determine how best to protect organisational data. Depending on the results, they may also have to report data breaches.

However, risk-based approaches remain challenging to implement for organisations. This is especially true where the rights of third parties are concerned. For example, it can be difficult to comply with third-party rights with respect to personal data and the IPRs of organisations and individuals (OECD, 2019[3]).

In this context, stakeholders need different levels of transparency to trust the data ecosystem and make informed decisions. This transparency relates to what data are collected, accessed and used, how, and by whom. Transparency is also crucial with respect to how data are governed. This includes information on processing, including with whom the data are shared, for what purpose and under what conditions access may be granted to third parties. It also covers the rights, responsibilities and respective liabilities of stakeholders in case of violations.

Privacy and data protection frameworks have a variety of mechanisms to promote transparency in support of risk management. Data controllers, for example, may have obligations of transparency towards data subjects (individuals) and privacy enforcement authorities. At the same time, individuals may have the right to be informed, and to access and correct data. Such mechanisms can, for example, help identify risks related to discrimination, as they enable individuals to learn why certain decisions about them are made.5

However, mechanisms to promote transparency can fail. For example, the amount or complexity of the provided information may be too much for individuals to process (information overload). In addition, the presentation of information and related choices may make it difficult for individuals to exercise their rights effectively. This might be due to “dark patterns” in privacy consent mechanisms (subsection 3.1.3) or behavioural and cognitive biases (OECD, 2022[7]; 2022[8]).

New initiatives aim to rebalance asymmetries between stakeholders, better enabling individuals to reap the benefit and value of their data and enhancing transparency. These include, for example, a more user-friendly presentation of privacy policies, informed by behavioural insights and, potentially, facilitated through information intermediaries or the standardisation of information. They also include better defaults that make the choice for privacy protection settings at least as easy as privacy-intrusive ones (OECD, 2022[8]; 2022[7]).

How OECD legal instruments encourage risk management

The OECD (2021[6]) Recommendation of the Council on Enhancing Access to and Sharing of Data (hereafter the EASD Recommendation) calls on Adherents to

[t]ake necessary and proportionate steps to protect … legitimate public and private interests as a condition for data access and sharing (V.b); [and] ensure that stakeholders are held accountable in taking responsibility, according to their roles … for the systematic implementation of risk management measures…, including [data security] measures. (V.c)

The OECD (2021[9]) Recommendation of the Council on Access to Research Data from Public Funding (hereafter the Research Data Recommendation) recommends that Adherents

[t]ake steps to transparently manage risks posed by enhancing access to sensitive categories of research data and other research-relevant digital objects from public funding, including personal data, by applying specific risk mitigation measures, as well as providing for a “right to know” in cases of digital security incidents affecting the rights and interests of stakeholders. (III.3)

The OECD (2016[10]) Recommendation of the Council on Health Data Governance (hereafter the Health Data Governance Recommendation) states that national health data governance frameworks should provide for control and safeguard mechanisms which

include formal risk management processes, updated periodically that assess and treat risks, including unwanted data erasure, re-identification, breaches or other misuses, in particular when establishing new programmes or introducing novel practices. (III.11.iv)

The OECD (2015[11]) Recommendation of the Council on Digital Security Risk Management for Economic and Social Prosperity provides a set of operational principles that apply specifically to digital security risk management in data governance. These include the principle on the “risk assessment and treatment cycle”, which emphasises that “risk assessment should be carried out as an ongoing systematic and cyclical process”, as well as the principle on “security measures”, according to which

[digital security] risk assessment should guide the selection, operation and improvement of security measures to reduce the digital security risk to the acceptable level determined in the risk assessment and treatment. Security measures should be appropriate to and commensurate with the risk and their selection should take into account their potential negative and positive impact on the economic and social activities they aim to protect, on human rights and fundamental values, and on the legitimate interests of others.

The OECD (2014[12]) Recommendation of the Council on Digital Government Strategies (hereafter the Digital Government Recommendation) suggests that governments

[d]evelop and implement digital government strategies which create a data-driven culture in the public sector, by balancing the need to provide timely official data with the need to deliver trustworthy data, managing risks of data misuse related to the increased availability of data in open formats. (II.3)

It further specifies that these strategies should “reflect a risk management approach to addressing digital security and privacy issues and include … effective and appropriate security measures …” (II.4).

The OECD (2013[13]) Recommendation of the Council concerning Guidelines on the Protection of Privacy and Transborder Flows of Personal Data (hereafter the Privacy Guidelines) in Part Three on Implementing Accountability recommends that “a data controller should … have in place a privacy management programme that … provides for appropriate safeguards based on privacy risk assessment.”

How OECD legal instruments encourage transparency across the data ecosystem

The OECD (2021[6]) EASD Recommendation calls on Adherents to

[e]nhance transparency of data access and sharing arrangements to encourage the adoption of responsible data governance practices … that meet applicable, recognised, and widely accepted … standards and obligations, including codes of conduct, ethical principles and privacy and data protection regulation. Where personal data is involved, Adherents should ensure transparency in line with privacy and data protection frameworks with respect to what personal data is accessed and shared, including with whom it is shared, for what purpose, and under what conditions access may be granted to third parties. (III.c)

The OECD (2021[9]) Research Data Recommendation recommends that Adherents should

[f]oster searchable access to metadata that describes those datasets while respecting legal rights, ethical principles, and/or legitimate interests (III.2.b) [and] promote, and require where appropriate, the inclusion of information about rights and licensing in the metadata of all research data and other research-relevant digital objects from public funding …. (V.4)

The OECD (2016[14]) Recommendation of the Council on Consumer Protection in E-commerce contains principles on transparency in consumer data practices, such as the principles on Fair Business, Advertising and Marketing Practices. It states that “businesses should not make any representation or omission or engage in any practice that is likely to be deceptive, misleading, fraudulent or unfair” (paragraph 4). Furthermore:

“Businesses should not engage in deceptive practices related to the collection and use of consumers’ personal data.” (paragraph 8)

“Businesses should comply with any express or implied representations they make about their adherence to industry self-regulatory codes or programmes, privacy notices or any other policies or practices relating to their transactions with consumers” (paragraph 11)

“Businesses should protect consumer privacy by ensuring that their practices relating to the collection and use of consumer data are lawful, transparent and fair, enable consumer participation and choice, and provide reasonable security safeguards” (paragraph 48).

The OECD (2016[10]) Health Data Governance Recommendation provides guidance on “transparency, through public information mechanisms which do not compromise health data privacy and security protections or organisations’ commercial or other legitimate interests” (III.7). These mechanisms should clarify:

“the purposes for the processing of personal health data, and the health-related public interest purposes that it serves, as well as its legal basis”

“the procedure and criteria used to approve the processing of personal health data, and a summary of the approval decisions taken, including a list of the categories of approved data recipients”

“information about the implementation of the health data governance framework and how effective it has been.” (III.7.iii)

The OECD (2013[13]) Privacy Guidelines in their Openness Principle recommend “a general policy of openness about developments, practices and policies with respect to personal data. Means should be readily available of establishing the existence and nature of personal data, and the main purposes of their use, as well as the identity and usual residence of the data controller.”

3.1.2. Balance risks and benefits via the full spectrum of the data openness continuum

Data governance approaches can be characterised by the different levels of openness that they establish. At one end is full and unrestricted openness (e.g. open access to data; no regulation of cross-border data flows). Along the continuum are arrangements that condition or limit the access, sharing, transfer and/or use of data to specific users or countries, or for specific use cases. Governing approaches often seek a balance so that data are “as open as possible, as closed as necessary” (OECD, 2021[6]).

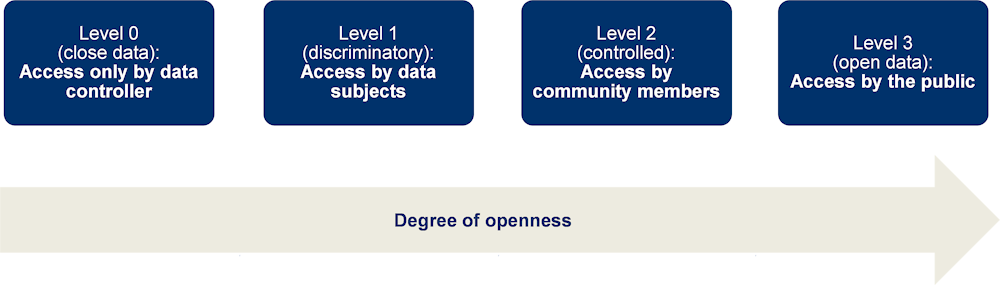

In the case of data access and sharing, policy makers can leverage a wide range of arrangements along the data openness continuum, which runs from Level 0 to Level 3 (Figure 3.1). At one extreme, arrangements include those that limit access and re-use of data only to the data holder (Level 0) (OECD, 2019[3]). At the other extreme are open data arrangements (Level 3). These are “non-discriminatory data access and sharing arrangements, where data is machine readable and can be accessed and shared, free of charge, and used by anyone for any purpose subject, at most, to requirements that preserve integrity, provenance, attribution, and openness” (OECD, 2021[6]). Open data arrangements are the most prominent approach to enhancing access to data, in particular in the public sector6 (OECD, 2018[15]) and in science (OECD, 2020[16]).

In between the two extremes, data can be made available to a specific external stakeholder (Level 1). In data portability arrangements, for example, a natural or legal person can request that a data holder transfer to the person, or to a specific third party, data concerning that person in a structured, commonly used and machine-readable format on an ad hoc or continuous basis (OECD, 2021[17]). Data portability has become an essential tool for enhancing access to and sharing of data across digital services and platforms (subsection 3.1.3). It can empower users to play a more active role in the re-use of their data and increase interoperability. In this way, it can enhance competition and innovation by reducing switching costs and lock-in effects (OECD, 2021[17]; 2021[2]).

Figure 3.1. The degrees of data openness in approaches to data access and sharing

Source: OECD (2019[3]), Enhancing Access to and Sharing of Data: Reconciling Risks and Benefits for Data Re-Use Across Societies, http://doi.org/10.1787/276aaca8-en.

Data can also be shared non-discriminatorily among members of a community7 (Level 2), for instance, through conditioned data access and sharing arrangements. These arrangements “permit data access and sharing subject to terms that may include limitations on the users authorised to access the data (discriminatory arrangements), conditions for data use including the purposes for which the data can be used, and requirements on data access control mechanisms through which data access is granted” (OECD, 2021[6]). Conditioned data access and sharing arrangements are typically used in two scenarios. In the first, data are considered too confidential to be shared openly with the public (through open data arrangements). In the second, there are legitimate (commercial and non-commercial) interests opposing such sharing. In both cases, however, there can still be a strong economic and/or social rationale for sharing data between data users within a restricted set of trusted members of a community, under voluntary and mutually agreed terms.
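To make the four levels of the continuum more concrete, the following minimal Python sketch shows how an access decision might differ at each level. The `OpennessLevel`, `Arrangement`, `Request` and `is_permitted` names, the example parties and the rule set are hypothetical illustrations and are not drawn from any OECD instrument. The sketch models only who may access data and, for conditioned (Level 2) arrangements, for what purpose. It ignores the integrity, provenance and attribution requirements that open data terms may still impose.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Set


class OpennessLevel(IntEnum):
    """Hypothetical encoding of the continuum in Figure 3.1 (higher = more open)."""
    HOLDER_ONLY = 0     # Level 0: access and re-use limited to the data holder
    SPECIFIC_PARTY = 1  # Level 1: e.g. portability to a named third party
    COMMUNITY = 2       # Level 2: conditioned sharing within a trusted community
    OPEN = 3            # Level 3: open data, anyone, any purpose


@dataclass
class Arrangement:
    level: OpennessLevel
    holder: str
    # Who may access the data at Levels 1 and 2 (ignored at Levels 0 and 3).
    authorised_users: Set[str] = field(default_factory=set)
    # Purposes for which use is permitted; only checked at Level 2 in this sketch.
    permitted_purposes: Set[str] = field(default_factory=set)


@dataclass
class Request:
    user: str
    purpose: str


def is_permitted(arr: Arrangement, req: Request) -> bool:
    """Illustrative rule set: returns True if the request fits the arrangement."""
    if req.user == arr.holder:
        return True  # the data holder always retains access
    if arr.level == OpennessLevel.OPEN:
        return True  # open data: any user, any purpose
    if arr.level == OpennessLevel.HOLDER_ONLY:
        return False  # no external access at all
    if req.user not in arr.authorised_users:
        return False  # Levels 1 and 2 limit who may access the data
    if arr.level == OpennessLevel.COMMUNITY:
        return req.purpose in arr.permitted_purposes  # conditions on use
    return True  # Level 1: named recipient, no purpose condition modelled


community = Arrangement(
    OpennessLevel.COMMUNITY,
    holder="hospital_a",
    authorised_users={"university_b"},
    permitted_purposes={"research"},
)
print(is_permitted(community, Request("university_b", "research")))   # True
print(is_permitted(community, Request("university_b", "marketing")))  # False
print(is_permitted(community, Request("outsider", "research")))       # False
```

In this sketch, openness increases by relaxing one condition at a time. Level 3 drops all conditions. Level 1 restricts only the recipient. Level 2 restricts both the recipient and the purpose.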

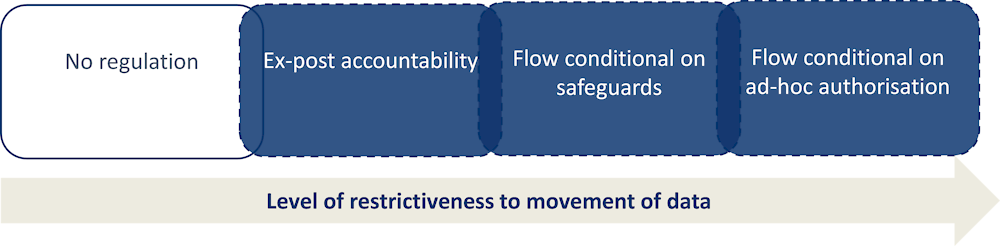

Arrangements governing cross-border data flows are another example. Here, the continuum spans four categories of approaches to cross-border data transfers (Casalini, López González and Nemoto, 2021[18]) from the most restrictive to the most open (Figure 3.2):

Data flows are conditional on ad hoc authorisation. These typically “relate to systems that only allow data to be transferred on a case-by-case basis subject to review and approval by relevant authorities” (Casalini, López González and Nemoto, 2021[18]).

Data flows are conditional on safeguards. This includes approaches relying on the determination of adequacy or equivalence as ex-ante conditions for data transfer.

Ex-post accountability. This approach does not prohibit cross-border transfer of data or require fulfilment of conditions. However, it provides for ex-post accountability for the data holder sending data abroad in case of misuse.

No regulation of cross-border data flows. This is the most open approach; it typically applies in countries that have no privacy and data protection legislation at all.

Figure 3.2. The degrees of openness in approaches to cross-border data flow regulation

Source: OECD based on Casalini, López González and Nemoto (2021[18]), “Mapping commonalities in regulatory approaches to cross-border data transfers”, https://dx.doi.org/10.1787/ca9f974e-en.

The optimal level of data openness along these continuums will vary on a case-by-case basis. It will depend on the risks associated with data openness, and therefore largely on the use cases or the type of data concerned. For example, transfers of health data will tend to be less open than transfers of data related to product maintenance, which tend to pose lower risks (OECD, 2022[19]). In other words, high risks entail a greater need for control measures to protect the rights of stakeholders, and thus a tendency towards less data openness. The presence of risks will in many cases incentivise and justify more controlled and restrictive arrangements, such as “conditioned” data access and sharing arrangements. Conversely, fully open arrangements such as open data will tend to be more appropriate where risks, including digital security and privacy risks, are insignificant.

How OECD legal instruments encourage leveraging of the data openness continuum to better balance risks and benefits

The OECD (2021[6]) EASD Recommendation states that Adherents should “encourage data access and sharing arrangements that ensure that data are as open as possible to maximise their benefits and as closed as necessary to protect legitimate public and private interests.” (V.a)

The OECD (2021[9]) Research Data Recommendation recommends that Adherents

[f]oster and support open access by default to research data and other research-relevant digital objects from public funding. … In cases where access needs to be partially or totally restricted to conform to legal rights, ethical principles and/or to protect legitimate private, public, or community interests, and with the ultimate objective of facilitating access which is as open as possible: … foster more limited forms of access such as access to aggregated or de-identified data, restricted access within safe and secure environments to certified users with clearance adapted to the sensitivity of data, or access via analyses that share only de-identified results.

The OECD (2008[20]) Recommendation of the Council for Enhanced Access and More Effective Use of Public Sector Information (hereafter the PSI Recommendation) recognises, under its “Openness” principle, the importance of access but also the need for refusal and limitations to access by

[m]aximising the availability of public sector information for use and re-use based upon presumption of openness as the default rule to facilitate access and re-use. … [d]efining grounds of refusal or limitations, such as for protection of national security interests, personal privacy, preservation of private interests for example where protected by copyright, or the application of national access legislation and rules.

3.1.3. Enhance users’ agency and control over data through legal means

Different types of consent models

Protection of privacy and personal data is a common concern in data governance. It raises questions for policy makers on how best to balance data openness and control of personal data, while maximising trust. In this context, consent has been recognised as a mechanism to allow individuals to control the collection and (re-)use of personal data about them. Consent generally requires organisations to provide clear information to individuals about what personal data about them are being collected and used, and for what purpose. These rules are specified in the data protection and privacy laws of most countries and in the OECD (2013[13]) Privacy Guidelines (see subsection 3.1.1 on transparency). Individuals are also to be given “reasonable means to extend or withdraw their consent over time” (OECD, 2015[21]). As such, consent can be seen as an effective tool to empower individuals with respect to openness and control decisions, thereby helping to maximise trust.

At the same time, individuals may often not read or comprehend information regarding use of their data (including from mandatory disclosures) due, for example, to information overload (OECD, 2022[7]). Furthermore, some businesses have used “dark commercial patterns”8 in privacy consent mechanisms such as cookie consent notices. These make it easier for individuals, for example, to opt into privacy-intrusive settings than to opt out. Often, this approach exploits behavioural and cognitive biases, effectively obstructing a deliberate consent decision (OECD, 2022[8]).

Additionally, data can be used and re-used, often in ways unforeseen at the time of collection. In this context, to retain flexibility in their compliance with privacy legislation, some organisations rely on one-time general or broad types of consent. These types of consent ostensibly provide some degree of control to individuals about the collection and use of data about them, without requiring consent for each specific instance of use. On the one hand, these types of consent seem to respect individuals’ rights without pre-empting the social benefits that may accrue when those data are used for additional, previously unspecified aims. On the other, data subjects may not realise the full implications of broad consent, particularly in the context of artificial intelligence (AI) and big data analytics.

Increasingly, as highlighted in subsection 3.2.1, individuals cannot be fully aware of how observed, derived, inferred and personal data could reveal information about them. Nor can they know how these data could be used and shared between data controllers and third parties. They also cannot anticipate to what extent their data will be used for purposes that may transgress their moral values.

Consent remains a useful tool, especially in some contexts and circumstances. However, uncertainty about its use and effectiveness has led to new models being proposed in the scientific literature, including “adaptive” or “dynamic” forms of consent (OECD, 2022[22]; 2015[21]). In time-restricted consent models, for example, individuals consent to use of personal data about them only for a limited period. This enables them to consent to new projects or to alter their consent choices in real time as circumstances change, while having confidence these changing choices will be respected.
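The mechanics of time-restricted and dynamic consent can be illustrated with a minimal Python sketch. The `ConsentGrant` and `ConsentRegistry` names, the per-purpose granularity, the explicit timestamps and the example subject and project names are all hypothetical. They are not taken from any legal framework or consent-management product. Each grant lapses automatically after a set period, can be withdrawn at any time, and covers only the purpose for which it was given, so a new project needs a new grant.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class ConsentGrant:
    """One consent decision by one individual for one purpose (hypothetical model)."""
    subject: str
    purpose: str
    granted_at: datetime
    expires_at: datetime
    withdrawn_at: Optional[datetime] = None


class ConsentRegistry:
    """Keeps consent grants so that each use of data can be checked when it occurs."""

    def __init__(self) -> None:
        self._grants: List[ConsentGrant] = []

    def grant(self, subject: str, purpose: str, now: datetime, duration: timedelta) -> None:
        # Time-restricted: each grant lapses automatically after `duration`.
        self._grants.append(ConsentGrant(subject, purpose, now, now + duration))

    def withdraw(self, subject: str, purpose: str, now: datetime) -> None:
        # Dynamic: the individual can revoke an active choice at any time.
        for g in self._grants:
            if g.subject == subject and g.purpose == purpose and g.withdrawn_at is None:
                g.withdrawn_at = now

    def is_valid(self, subject: str, purpose: str, at: datetime) -> bool:
        # A use is covered only if some grant for this exact purpose is active at `at`.
        for g in self._grants:
            if g.subject != subject or g.purpose != purpose:
                continue
            not_withdrawn = g.withdrawn_at is None or at < g.withdrawn_at
            if g.granted_at <= at < g.expires_at and not_withdrawn:
                return True
        return False


t0 = datetime(2024, 1, 1)
registry = ConsentRegistry()
registry.grant("alice", "study_x", t0, timedelta(days=365))
print(registry.is_valid("alice", "study_x", t0 + timedelta(days=30)))   # True
print(registry.is_valid("alice", "study_y", t0 + timedelta(days=30)))   # False: new project
print(registry.is_valid("alice", "study_x", t0 + timedelta(days=400)))  # False: expired
registry.withdraw("alice", "study_x", t0 + timedelta(days=60))
print(registry.is_valid("alice", "study_x", t0 + timedelta(days=90)))   # False: withdrawn
```

Grants are appended rather than overwritten, so the sketch keeps a history of past choices. An individual who later consents again simply adds a new grant.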

However, behavioural and cognitive limitations or the presence of dark commercial patterns can call into question when and to what extent individuals can meaningfully consent to the collection and use of their personal data. Therefore, measures based on consent and information disclosure are likely insufficient in isolation. They may thus need to be accompanied by other regulatory measures. These could include requirements for “privacy-by-design” or prohibition of certain harmful data practices, such as privacy-intrusive dark patterns or potentially exploitative data-driven personalisation practices (OECD, 2022[8]; forthcoming[23]).

Data portability

Data portability has become an essential tool for enhancing access to and sharing of data across digital services and platforms. It can help increase interoperability and data flows and thus enhance competition and innovation by reducing switching costs and lock-in effects (OECD, 2021[2]; 2021[17]). Most importantly, an increasing number of countries is considering data portability as a means to grant users better agency and control over “their” data. This, in turn, empowers users to play a more active role in the re-use of these data.

Data portability initiatives and arrangements differ significantly along five key dimensions (OECD, 2021[17]):

sectoral scope, including whether they are sector-specific or horizontal and thus directed potentially at all data holders regardless of the sector

beneficiaries, including whether only natural persons (individuals) or also legal persons (i.e. businesses) have a right to data portability

the type of data subject to data portability arrangements, including whether data portability is limited to personal data and whether it includes volunteered, observed or derived data

legal obligations, especially the extent to which data portability is voluntary or mandatory and if the latter, how it is enforced

modalities of data transfer, meaning the extent to which data transfers are limited to or include ad hoc (one-time) downloads of data in machine-readable formats (regarded as “data portability 1.0”), ad hoc direct transfers of data to another data holder (“data portability 2.0”), or real-time (continuous) data transfers between data holders that enable interoperability between their digital services (“data portability 3.0”).9

How OECD legal instruments address the need to enhance users’ agency and control over data

The OECD (2021[6]) EASD Recommendation recommends that Adherents should

[e]mpower individuals, social groups, and organisations through appropriate mechanisms and institutions such as trusted third parties that increase their agency and control over data they have contributed or that relate to them, and enable them to recognise and generate value from data responsibly and effectively (III.d)… and [f]oster competitive markets for data through … appropriate measures, including enforcement and redress mechanisms that increase stakeholders’ agency and control over data. (VI.a)

The OECD (2021[9]) Research Data Recommendation recommends that Adherents should

[r]equire that consent or comparable legal basis be sought consistently for all collections of sensitive human subject data and metadata, including personal data, and that any use be in conformity with the consent granted, applicable privacy regulations, and ethical principles. Where it is proposed that personal data be used in ways not initially foreseen in the consent granted and seeking consent for such new use is impractical, specific case-by-case arbitration implemented by ethics review boards or similar authorities may be appropriate. Such case-by-case arbitration should also be accompanied by a review taking into account legal aspects of the planned change of purpose. (III.5)

Data portability is addressed in the section on “Sustainable Infrastructures”, where the Recommendation calls on Adherents to “[e]ncourage private investment in research data infrastructures … while taking measures to facilitate their openness, reliability and integrity, and to protect the public interest over the long term by avoiding vendor lock in and ensuring data portability.” (VII.2)

The OECD (2016[10]) Health Data Governance Recommendation recommends that

[c]onsent mechanisms should provide: Clarity on whether individual consent to the processing of their personal health data is required, and, if so, the criteria used to make this determination; what constitutes valid consent and how consent can be withdrawn; and lawful alternatives and exemptions to requiring consent, including in circumstances where obtaining consent is impossible, impracticable or incompatible with the achievement of the health-related public interest purpose, and the processing is subject to safeguards consistent with this Recommendation. (III.5.a.i)

Where the processing of personal health data is based on consent, “such consent should only be valid if it is informed and freely given, and if individuals are provided with clear, conspicuous and easy to use mechanisms to provide or withdraw consent for the future use of the data.” (III.5.a.ii)

The OECD (2013[13]) Privacy Guidelines and their Individual Participation Principle recommend that

[a]n individual should have the right: a) to obtain from a data controller, or otherwise, confirmation of whether or not the data controller has data relating to him; b) to have communicated to him, data relating to him within a reasonable time; … and d) to challenge data relating to him …

The Individual Participation Principle is complemented by two other principles that refer to the consent of the data subject, although both also allow for an alternative legal basis. According to the Collection Limitation Principle “[t]here should be limits to the collection of personal data and any such data should be obtained by lawful and fair means and, where appropriate, with the knowledge or consent of the data subject.” According to the Use Limitation Principle, “[p]ersonal data should not be disclosed, made available or otherwise used for purposes other than those specified in accordance with Paragraph 9 except: a) with the consent of the data subject; or b) by the authority of law.”

3.1.4. Support the adoption of technological and organisational measures to enhance control

The sphere of control that a data holder10 or a data producer11, as well as a government, has over data is defined by technological boundaries (e.g. a personal device of a consumer), organisational boundaries (e.g. an organisation’s information system) or national boundaries (e.g. a government’s legislation without extraterritorial effects). All these boundaries affect the costs (including opportunity costs) of excluding others from accessing and using those data. Beyond this sphere of control, data holders and producers, as well as governments, progressively lose their ability to control how data are used and to oppose any such use. Therefore, once data leave their sphere of control, stakeholders rely mainly on available enforcement and redress mechanisms for their rights to be respected.

A range of technical and/or organisational measures helps preserve (or enhance) the sphere of control of data holders and producers even as data are accessed, used and re-used across society. This enables data openness while minimising the associated risks (OECD, 2022[19]). Policy makers may therefore consider the range of technological and organisational measures available for different contexts and data types, and promote their use through policies for data governance, for example by providing guidance on their implementation or by pioneering their research and development (R&D).

Technical and organisational measures do not guarantee that the rights of stakeholders are always protected. Their use therefore requires an appropriate risk assessment. This, in turn, may need to be combined with other data governance mechanisms, such as enforceable legal commitments not to re-identify de-identified information (OECD, forthcoming[24]).

PETs as key technological measure

Privacy-enhancing technologies (PETs) are a prominent example of technological measures that can expand the sphere of control over data (OECD, forthcoming[24]). According to OECD (2002[25]), PETs “commonly refer to a wide range of technologies that help protect personal privacy [ranging] from tools that provide anonymity to those that allow a user to choose if, when and under what circumstances personal information is disclosed.” Typically, they are not stand-alone tools but can be viewed as new functionalities to manage data. OECD (forthcoming[24]) differentiates between the following four classes of PETs:

Data accountability tools provide data sources with enhanced control over how data can be gathered, accessed and used, or else with greater transparency and immutability in tracking data access and use. These include, for example, accountable software systems that manage the use and sharing of data by controlling and tracking how data are collected and processed, and when they are used. Such a design aims, in part, to grant access with limitations attached to the data. In other words, data remain within the sphere of control wherever they flow.

Like encrypted data processing tools and federated and distributed analytics (see bullets below), data obfuscation tools reduce the need for sensitive information to leave a data source’s sphere of control, where the underlying data are kept and processed. Unlike these other types of tools, however, obfuscation alters the data by adding “noise” or removing identifying details. Data obfuscation tools commonly include anonymisation and pseudonymisation techniques.12

Federated and distributed analytics allow analytical tasks (e.g. training models) to be executed upon data that are not visible or accessible to those executing the tasks. In federated learning, a technique gaining increasing attention, raw data are pre-processed at the data source, so that only summary statistics or results are transferred to those executing the tasks. These results can then be combined with similar results from other sources, so that all of the data are processed centrally, albeit indirectly. In distributed analytics, sensitive data never leave the custody of a data source but may be analysed by third parties who are members of a common network. Distributed analytics is increasingly applied in the health sector and was particularly important for accelerating the pace of global COVID-19 research (OECD, 2022[22]).

Encrypted data processing tools allow computations to be run over encrypted data that are never disclosed. Unlike with data obfuscation tools, the underlying data remain intact but are hidden by encryption. One example is homomorphic encryption, which allows a data processor to perform increasingly complex calculations over encrypted data and to obtain an encrypted result that can only be unlocked with the original data source’s cryptographic key.
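The encrypted data processing described in the last item above can be illustrated with a toy version of the Paillier cryptosystem, an additively homomorphic scheme. It supports only addition of hidden values and multiplication by public constants, not the more complex calculations that fully homomorphic schemes allow. The sketch assumes deliberately tiny primes and is not secure; a real deployment would rely on an audited cryptographic library. The data source keeps the private key, the processor works only on ciphertexts, and only the source can decrypt the result.

```python
import math
import secrets

# Toy Paillier parameters: tiny primes, NOT secure, for illustration only.
P, Q = 293, 433
N = P * Q                          # public modulus
N_SQ = N * N
G = N + 1                          # common simplification of the generator
LAMBDA = math.lcm(P - 1, Q - 1)    # private key component (Python 3.9+)
MU = pow(LAMBDA, -1, N)            # private key component, valid because G = N + 1


def encrypt(m: int) -> int:
    """Data source encrypts a value 0 <= m < N with the public key (N, G)."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Only the holder of LAMBDA and MU (the data source) can decrypt."""
    u = pow(c, LAMBDA, N_SQ)
    return ((u - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Processor adds two hidden values by multiplying their ciphertexts."""
    return (c1 * c2) % N_SQ


def scale_encrypted(c: int, k: int) -> int:
    """Processor multiplies a hidden value by a public constant k."""
    return pow(c, k, N_SQ)


if __name__ == "__main__":
    ciphertexts = [encrypt(x) for x in (120, 75, 310)]  # sent to the processor
    total = ciphertexts[0]
    for c in ciphertexts[1:]:
        total = add_encrypted(total, c)                 # processor never sees 120, 75, 310
    print(decrypt(total))                               # decrypted back at the source
```

Results must stay below `N` to decrypt correctly, which is one reason real schemes use much larger keys.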

Developments in data analytics and AI have combined with the increasing volume and variety of available data sets and the capacity to link these different data sets. This has made it easier to infer and relate seemingly non-personal or anonymised data to an identified or identifiable entity (OECD, 2019[3]; forthcoming[24]).13 This, in turn, limits the use of some PETs as a single means of protection. Therefore, PETs-related initiatives need to be complemented by legally binding and enforceable obligations to protect the rights and interests of data subjects and other stakeholders as articulated in the OECD (2021[6]) EASD Recommendation.
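The linkage risk described above can be shown with a small sketch. Names are replaced by a keyed hash (HMAC-SHA256), a common pseudonymisation technique, but the combination of postcode, birth year and sex is left in the released data. Joining the release with a second data set that shares these quasi-identifiers re-identifies every record whose combination is unique. All records, the key and the function names are hypothetical.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret key, held only by the data holder and never released.
SECRET_KEY = b"example-key-held-by-data-holder"
QUASI_IDENTIFIERS = ("postcode", "birth_year", "sex")


def pseudonymise(name: str) -> str:
    """Replace a direct identifier with a truncated keyed hash (HMAC-SHA256)."""
    return hmac.new(SECRET_KEY, name.encode("utf-8"), hashlib.sha256).hexdigest()[:12]


def qi_key(row: dict) -> tuple:
    """Combination of quasi-identifiers that survives pseudonymisation."""
    return tuple(row[q] for q in QUASI_IDENTIFIERS)


# Hypothetical "de-identified" release: names pseudonymised, other fields kept.
RAW = [
    ("Alice", "1010", 1984, "F", "asthma"),
    ("Bob", "1010", 1984, "M", "diabetes"),
    ("Carol", "2020", 1990, "F", "influenza"),
    ("Dana", "2020", 1990, "F", "migraine"),
]
RELEASED = [
    {"pid": pseudonymise(n), "postcode": pc, "birth_year": y, "sex": s, "diagnosis": d}
    for n, pc, y, s, d in RAW
]

# Hypothetical second data set (e.g. a public register) that includes names.
PUBLIC = [
    {"name": "Alice", "postcode": "1010", "birth_year": 1984, "sex": "F"},
    {"name": "Bob", "postcode": "1010", "birth_year": 1984, "sex": "M"},
]


def link(released: list[dict], public: list[dict]) -> dict[str, tuple[str, str]]:
    """Re-identify released rows whose quasi-identifiers are unique in both data sets."""
    rel_counts = Counter(qi_key(r) for r in released)
    pub_counts = Counter(qi_key(p) for p in public)
    names = {qi_key(p): p["name"] for p in public}
    return {
        r["pid"]: (names[qi_key(r)], r["diagnosis"])
        for r in released
        if rel_counts[qi_key(r)] == 1 and pub_counts[qi_key(r)] == 1
    }


if __name__ == "__main__":
    print(link(RELEASED, PUBLIC))  # Alice and Bob are re-identified; Carol and Dana are not
```

Carol and Dana are protected here only because they share the same quasi-identifiers, which is the intuition behind measures such as k-anonymity and the reason PETs are complemented by enforceable legal obligations.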

Organisational measures

Organisational measures refer to institutional arrangements that may involve contractual arrangements (OECD, 2022[19]) (subsection 3.2.3). They are often used in combination with PETs to help manage and enhance control over data, enabling a more balanced approach to data openness. Organisational measures can vary greatly in their model and implementation.

Organisational measures play a key role where data are considered a collective resource of common interest and thus for the implementation of “data commons”.14 Data commons are formal or informal governance institutions to enable a viable and shared production and/or use of data that reflects the interests of stakeholders. They facilitate joint production or co-operation with suppliers, customers (consumers) or even potential competitors (co-opetition). Data commons have often led to open data arrangements. However, other arrangements that foresee restrictions on data access and sharing (conditioned data access and sharing arrangements) are increasingly in use as well.

Indeed, a wide range of approaches can be used to enable data commons to enhance individual and organisational control over data while unlocking the benefits of greater data use and sharing:

Independent ethics review bodies (ERBs) are committees that review proposed research methods to ensure they are ethical. ERBs have been highlighted in some cases as critical trusted third parties, especially around access to and sharing and re-use of personal data. For example, in the scientific community, the evaluation of applications for access to publicly funded personal data for research can depend on ERBs. The OECD Global Science Forum concluded that ERBs can increase trust between parties with an interest in the use of personal data for research purposes, particularly in situations where consent for research use is impractical or impossible (OECD, 2016[5]).15

Data sandboxes are isolated environments through which data are accessed and analysed. Analytic results are only exported, if at all, when they are non-sensitive. Data sandboxes typically require the analytical code to be executed at the same physical location as the data. These sandboxes can be realised through technical means of varying complexity, for example isolated virtual machines that cannot be connected to an external network, or federated learning solutions. It is also possible to require a physical presence within the facilities where the data are located (OECD, 2019[3]).

Data intermediaries: The OECD (2021[6]) EASD Recommendation defines data intermediaries as “service providers that facilitate data access and sharing under commercial or non-commercial agreements between data holders, data producers and/or users.” As the Recommendation notes, data intermediaries can be data holders. By defining common legal and technical standards, data intermediaries facilitate data access and sharing (e.g. data spaces). However, they can also be trusted third parties (e.g. data trusts) that essentially act on behalf of other stakeholders, including data holders, data producers and/or users.

Data spaces have been proposed as a decentralised type of data intermediary. At a technical level, data spaces rely on common standards for accessing, linking, processing, using and sharing data between different endpoints where the data are located. They have been put forward as a solution especially for “industrial” or “non-personal” data. For example, Gaia-X is a European initiative that aims to develop a software framework for data governance. It would be implemented by cloud service providers based on common rules to enhance transparency, controllability, portability and interoperability across data and services.16 This government-backed standard aims to establish an ecosystem in which data are made available, collated and shared in a trustworthy environment. In such an environment, generators of data maintain full control and visibility over the context and purpose for which other actors access data.

Data trusts are a more centralised type of data intermediary. Data trusts are institutional arrangements whereby a trusted third party (an informed person or organisation) takes on a fiduciary duty to steward/govern data use or sharing on behalf of its members in relation to third parties. This is intended as a means to increase access to and sharing of data, while safeguarding the rights and interests of the data holders (Hardinges, 10 July 2018[26]; Ruhaak, 2021[27]). Examples of data trusts include personal data stores or Personal Information Management Systems (PIMS). PIMS are service providers that enhance individuals’ control over their personal data by allowing them to choose, at a granular level, where and how their data are stored, accessed or processed. Examples also include trusted data-sharing platforms, where major data holders either designate an existing trusted organisation or create a new trusted organisation and platform to share data with third parties. In the United States, for example, health care and health insurance companies (e.g. Aetna, Humana, Kaiser Permanente and United Healthcare) designated the non-profit Health Care Cost Institute (HCCI) as a trusted organisation. After removing information about which company has provided the data, HCCI shares information about health care use and costs in the United States with selected research institutions (OECD, 2019[3]).

Governments can act as, or create, a trusted third party. In certain countries, national statistical offices have acted in this capacity. In Australia, under the Data Integration Partnership for Australia, the government initiated a three-year AUD 131 million (around USD 90 million) investment to maximise the use and value of the government's data assets between 2017 and 2020 (Australian government, 2017[28]). Agencies in social services, health, education, finance and other government entities would provide data for linking and integration. Meanwhile, “sectoral hubs of expertise, independent entities that are funded by the Commonwealth” and designated as Accredited Integrating Authorities (AIAs), would enable integration of longitudinal data assets. These data would be “housed in a secure environment, using privacy preserving linking methods and best practice statistics to link social policy and business data” (Productivity Commission, 2017[29]). The Australian Bureau of Statistics – the national statistical office – was the country’s first institution to be recognised as an AIA.17

All these organisational measures have strengths and weaknesses to address concerns around data access and sharing. On their own, they often cannot provide a solution. A combination of technological and organisational measures will be necessary to address stakeholders’ concerns and risks while enabling co‑operation. Policy makers could provide incentives or directly experiment with some organisational measures to ensure optimal levels of data sharing occur based on technological and organisational possibilities.
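As an illustration of how technological and organisational measures can be combined, the sketch below pairs federated analytics (each data holder releases only a count, a sum and a sum of squares, never raw records) with a sandbox-style output check that withholds results based on too few records. The threshold of 10, the class and the function names are hypothetical; real statistical disclosure control is considerably more involved, and aggregates can themselves leak information.

```python
from dataclasses import dataclass
from typing import Optional

MIN_CELL_SIZE = 10  # hypothetical disclosure-control threshold


@dataclass
class LocalSummary:
    """The only information a data holder releases: aggregates, not raw records."""
    count: int
    total: float
    total_sq: float


def summarise_locally(values: list[float]) -> LocalSummary:
    """Runs inside each data holder's own environment."""
    return LocalSummary(len(values), sum(values), sum(v * v for v in values))


def combine(summaries: list[LocalSummary]) -> Optional[dict]:
    """Pool local summaries into a global mean and variance, withholding small results."""
    n = sum(s.count for s in summaries)
    if n < MIN_CELL_SIZE:
        return None  # output check: result deemed too disclosive to export
    total = sum(s.total for s in summaries)
    total_sq = sum(s.total_sq for s in summaries)
    mean = total / n
    # Population variance via E[x^2] - mean^2 (simple, but numerically naive).
    variance = total_sq / n - mean * mean
    return {"n": n, "mean": mean, "variance": variance}


if __name__ == "__main__":
    site_a = summarise_locally([4.0, 6.0, 8.0, 2.0, 5.0, 5.0])
    site_b = summarise_locally([3.0, 7.0, 5.0, 5.0, 6.0, 4.0])
    print(combine([site_a, site_b]))  # pooled statistics without pooling the records
```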

How OECD legal instruments promote adoption of technological and organisational measures that help reduce the risk of loss of control

The OECD (2021[6]) EASD Recommendation calls on Adherents to

[e]ncourage competition-neutral data-sharing partnerships, including Public-Private Partnerships (PPPs), where data sharing across and between public and private sectors can create additional value for society. In so doing, Adherents should take all necessary steps to avoid conflicts of interest or undermining government open data arrangements or public interests. (III.b)

[e]mpower individuals, social groups and organisations through appropriate mechanisms and institutions such as trusted third parties that increase their agency and control over data they have contributed or that relate to them, and enable them to recognise and generate value from data responsibly and effectively. (III.d)

[f]oster the adoption of conditioned data access and sharing arrangements with the use of technological and organisational environments and methods, including data access control mechanisms1 and privacy enhancing technologies, through which data can be accessed and shared in a safe and secure way between approved users, combined with legally binding and enforceable obligations to protect the rights and interests of data subjects and other stakeholders. (V. d)

The OECD (2021[9]) Research Data Recommendation recommends that

[i]n cases where access needs to be partially or totally restricted to conform to legal rights, ethical principles and/or to protect legitimate private, public, or community interests, and with the ultimate objective of facilitating access which is as open as possible, [Adherents should] foster more limited forms of access, such as access to aggregated or de-identified data, restricted access within safe and secure environments to certified users with clearance adapted to the sensitivity of data, or access via analyses that share only de-identified results. (II.2.a)

The OECD (2016[10]) Health Data Governance Recommendation recommends that governments establish and implement national health data governance frameworks that provide for “control and safeguard mechanisms”. Such mechanisms should “include technological, physical and organisational measures designed to protect privacy and security while maintaining, as far as practicable, the utility of personal health data for health-related public interest purposes” (III.11.v). This should be done with

[m]echanisms that limit the identification of individuals, including through the de-identification of their personal health data, and take into account the proposed use of the data, while also allowing re-identification where approved. Re-identification may be approved to conduct future data analysis for health system management, research, statistics, or for other health-related public interest purposes; or to inform an individual of a specific condition or research outcome, where appropriate. (III.11.v.a.) … Where practicable and appropriate, … alternatives to data transfer to third parties, such as secure data access centres and remote data access facilities. (III.11.v.c.)

The OECD (2013[13]) Privacy Guidelines in Part Five on National Implementation recommend that “[i]n implementing these Guidelines, Member countries should: … consider the adoption of complementary measures, including … the promotion of technical measures which help to protect privacy.”

1. “Data access control mechanisms” are defined as “technical and organisational measures that enable safe and secure access to data by approved users including data subjects, within and across organisational borders, protect the rights and interests of stakeholders, and comply with applicable legal and regulatory frameworks.”
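A data access control mechanism of this kind can be sketched as a check against a register of approved users and purposes, combined with the access tracking performed by the data accountability tools described in subsection 3.1.4. In the hypothetical sketch below, every request is recorded in an append-only log where each entry includes the hash of the previous one, so later alteration of an entry is detectable. Such a log is tamper-evident rather than tamper-proof: someone able to rewrite the whole chain could do so unless the latest hash is also held by a third party. The register, identifiers and function names are assumptions for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical register of approved users and the purposes each is cleared for.
APPROVED = {"researcher-17": {"mortality-study"}, "analyst-04": {"service-planning"}}
GENESIS = "0" * 64


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AccessLog:
    """Append-only log; each entry commits to the previous entry via its SHA-256 hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, event: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"event": event, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            body = {"event": entry["event"], "prev": entry["prev"]}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


def request_access(log: AccessLog, user: str, dataset: str, purpose: str) -> bool:
    """Grant access only to approved users for approved purposes, and log every request."""
    granted = purpose in APPROVED.get(user, set())
    log.append({"user": user, "dataset": dataset, "purpose": purpose, "granted": granted,
                "at": datetime.now(timezone.utc).isoformat()})
    return granted
```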

3.1.5. Enhance technical interoperability for data openness

Technical interoperability refers to the ability of two or more systems or applications to exchange information and to mutually use that information through seamless exchanges, updates or transfers (ISO, 2017[30]). Incompatible technical specifications, however, can lead to fragmentation across organisations, sectors and countries, and in some cases such fragmentation is inevitable. With respect to data, technical interoperability is usually associated with the need for machine readability, common standards and other interoperable technical specifications, and metadata. These enable data to meet what are commonly called the FAIR principles (findability, accessibility, interoperability and re-use) and allow the correct interpretation and analysis of data across systems.

Syntactic and semantic interoperability

Commonly used machine-readable formats are not enough to guarantee technical interoperability (OECD, 2019[3]; 2020[16]). These formats may enable data syntactic interoperability, i.e. the transfer of “data from a source system to a target system using data formats that can be decoded on the target system.” However, they do not guarantee data semantic interoperability, defined as “transferring data to a target such that the meaning of the data model is understood within the context of a subject area by the target.” Both syntactic and semantic interoperability are needed for data interoperability and to enable data openness.
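The distinction between syntactic and semantic interoperability can be shown with two hypothetical systems that both send valid JSON. Decoding succeeds for both (syntactic interoperability), yet the field names and units differ, so the target only understands the data after mapping them onto a shared model (semantic interoperability). The payloads, field names and mapping below are assumptions; in practice such mappings are typically provided by common ontologies or terminology standards.

```python
import json

# Two hypothetical systems report the same measurement using different data models.
FROM_SYSTEM_A = '{"patient": "p1", "temp": 98.6, "unit": "F"}'
FROM_SYSTEM_B = '{"subject_id": "p1", "body_temperature_c": 37.0}'


def decode(payload: str) -> dict:
    """Syntactic interoperability: the target can parse the agreed format (JSON)."""
    return json.loads(payload)


def to_common_model(record: dict) -> dict:
    """Semantic interoperability: map each source's fields and units onto one shared model."""
    if "temp" in record:  # system A's model
        value = record["temp"]
        celsius = (value - 32) * 5 / 9 if record["unit"] == "F" else value
        return {"subject_id": record["patient"], "body_temperature_c": round(celsius, 1)}
    return {"subject_id": record["subject_id"],
            "body_temperature_c": record["body_temperature_c"]}


if __name__ == "__main__":
    a, b = decode(FROM_SYSTEM_A), decode(FROM_SYSTEM_B)
    print(a == b)                                    # both parse, yet the records differ
    print(to_common_model(a) == to_common_model(b))  # equal once mapped to the shared model
```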

The private sector, including data intermediaries (subsection 3.1.4), has played an important role in developing and promoting data-related standards and other technical specifications. Data intermediaries often provide their data in common data formats that assure both syntactic and semantic interoperability – driving their adoption across industries. In so doing, they can define de facto standards for their industry. One example is Google's General Transit Feed Specification (GTFS), a common format for public transportation schedules and associated geographic information (OECD, 2019[3]).
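Part of what makes a de facto standard like GTFS effective is that any consumer can read any publisher’s feed with the same simple code. A GTFS feed is a set of comma-separated text files; the sketch below parses a made-up excerpt of its stops file using the `stop_id`, `stop_name`, `stop_lat` and `stop_lon` fields. The stop data and the `load_stops` helper are hypothetical, and a real feed contains further files and optional fields.

```python
import csv
import io

# Hypothetical excerpt of a GTFS stops.txt file; the sample stops are made up.
STOPS_TXT = """stop_id,stop_name,stop_lat,stop_lon
S1,Central Station,48.8566,2.3522
S2,Museum,48.8606,2.3376
"""


def load_stops(text: str) -> dict[str, tuple[str, float, float]]:
    """Parse stops into {stop_id: (name, latitude, longitude)}."""
    reader = csv.DictReader(io.StringIO(text))
    return {
        row["stop_id"]: (row["stop_name"], float(row["stop_lat"]), float(row["stop_lon"]))
        for row in reader
    }


if __name__ == "__main__":
    for stop_id, (name, lat, lon) in load_stops(STOPS_TXT).items():
        print(stop_id, name, lat, lon)
```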

Governments nonetheless play a role in promoting the development and adoption of interoperable specifications for effective data access, sharing and use, and in ensuring these specifications maximise trust. These specifications include common standards for data formats and models, as well as open-source implementations (OECD, 2021[6]). Promoting their adoption can enhance technical interoperability and be an effective strategy for fostering competition between data-driven services and platforms. This is especially the case where limited interoperability – through, for example, closed application programming interfaces – may serve as an entry barrier (OECD, 2021[2]).

Data quality, which can be understood as “fitness for use” in terms of the ability of the data to fulfil user needs, is an important factor for technical interoperability. In this respect, “government initiatives that focus on the production of good-quality data can also contribute to greater interoperability, sharing and openness in later stages” of the data value cycle (OECD, 2019[31]).

Impacts of interoperability on investment

Although achieving technical interoperability across societies and economies represents a great opportunity, it can also have unintended consequences. Interoperability requirements, for example, may have a negative effect on firms’ willingness to invest in data. Firms may perceive that the lower switching costs enabled by interoperability create a risk of losing their user base. In addition, interoperability standards that are too prescriptive and have a high cost of compliance can harm competition and innovation. This is especially the case for small and medium-sized enterprises (SMEs), as they may lack the capacity to develop and maintain interoperability specifications (Competition Bureau Canada, 2022[32]). Therefore, interoperability needs to be considered in connection with the cross-cutting tension of incentivising investments in data and their effective re-use (section 3.3).

How OECD legal instruments promote technical interoperability of data

The OECD (2021[6]) EASD Recommendation recommends that Adherents “[f]oster where appropriate the findability, accessibility, interoperability and reusability of data across organisations, including within and across the public and private sectors” (VIII.b). In particular, Adherents should “[a]ssess and, whenever possible, promote development and adoption of interoperable specifications for effective data access, sharing and use, including common standards for data formats and models as well as open source implementations.”

The OECD (2021[9]) Research Data Recommendation recommends that Adherents

[p]romote interoperability by requiring the use of semantic (including ontologies and scientific terminology), legal (rights of use), and technical (such as machine readability) standards as appropriate” (IV. 3) [and] take necessary measures to support development and maintenance of sustainable infrastructures to support the findability, accessibility, interoperability, and reusability of research data and other research-relevant digital objects from public funding free of charge at the point of use (VII). [To this end, Adherents should] support efforts to improve interoperability among global research infrastructures to leverage national investments and innovation, and to encourage interdisciplinarity. (VII.b)

The OECD (2016[10]) Health Data Governance Recommendation recommends that national health data governance frameworks should provide for “cooperation among organisations processing personal health data, whether in the public or private sectors” (III.2.i). To achieve this, it indicates they should “encourage common data elements and formats; quality assurance; and data interoperability standards.” Further,

[It] recommends that governments engage with relevant experts and organisations to develop mechanisms consistent with the principles of this Recommendation that enable the efficient exchange and interoperability of health data whilst protecting privacy, including, where appropriate, codes, standards and the standardisation of health data terminology.

The OECD (2008[20]) PSI Recommendation in its principle on “[n]ew technologies and long-term preservation” recommends that Member countries aim for “improving interoperable archiving, search and retrieval technologies and related research including research on improving access and availability of public sector information in multiple languages, and ensuring development of the necessary related skills.” They should also aim for “avoiding fragmentation and promote greater interoperability and facilitate sharing and comparisons of national and international datasets and striving for interoperability and compatible and widely used common formats.”

3.2. Managing overlapping and conflicting interests and regulations related to data governance

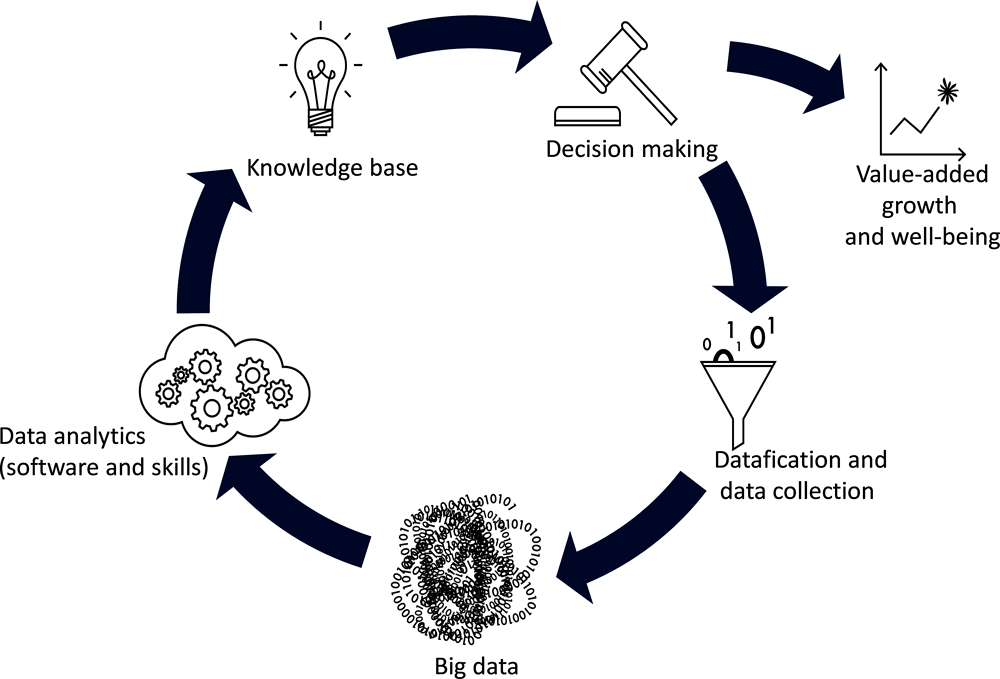

The second fundamental policy tension and objective in data governance relates to balancing the interests of different stakeholders and policy communities, while ensuring consistency across different policy and regulatory frameworks. This challenge reflects the fact that multiple parties with potentially conflicting interests are involved at different phases of the data value cycle (data collection, analysis, use, deletion) (Figure 3.3). It is also the result of different policy communities being involved in the governance of data. This involvement gives rise to multiple, and sometimes overlapping, policy and regulatory frameworks (sectoral or cross-sectoral, as well as national and international) that focus on concerns mainly relevant to these policy communities.

Figure 3.3. The data value cycle

Source: OECD (2015[33]), Data-Driven Innovation: Big Data for Growth and Well-Being, http://dx.doi.org/10.1787/9789264229358-en.

Data can pertain to different domains and be subject to different policy and regulatory frameworks. Privacy and data protection frameworks are the most prominent legal and regulatory frameworks that define control and use rights over (personal) data. These include an individual’s right to restriction of processing and, according to some frameworks, a right to data portability (Banterle, 2018[34]; Drexl, 2018[35]; Purtova, 2017[36]). On the other hand, data (including personal data) collected by an organisation will typically also be governed by IPRs. These are essentially copyrights with respect to the collection of data, sui generis database rights in some jurisdictions, and trade secrets for confidential business and technical data.18

However, in the case of personal data, control or “ownership” rights of the organisation will hardly be comparable to other (intellectual) property rights. This is because most privacy regulatory frameworks give data subjects control rights over their personal data, which may interfere with “the full right to dispose of a thing at will” (Determann, 2018[37]). Therefore, in the case of personal data, no single stakeholder will have exclusive access and use rights. Different stakeholders will typically have different rights and obligations depending on their roles, which may be affected by different legal and regulatory frameworks.

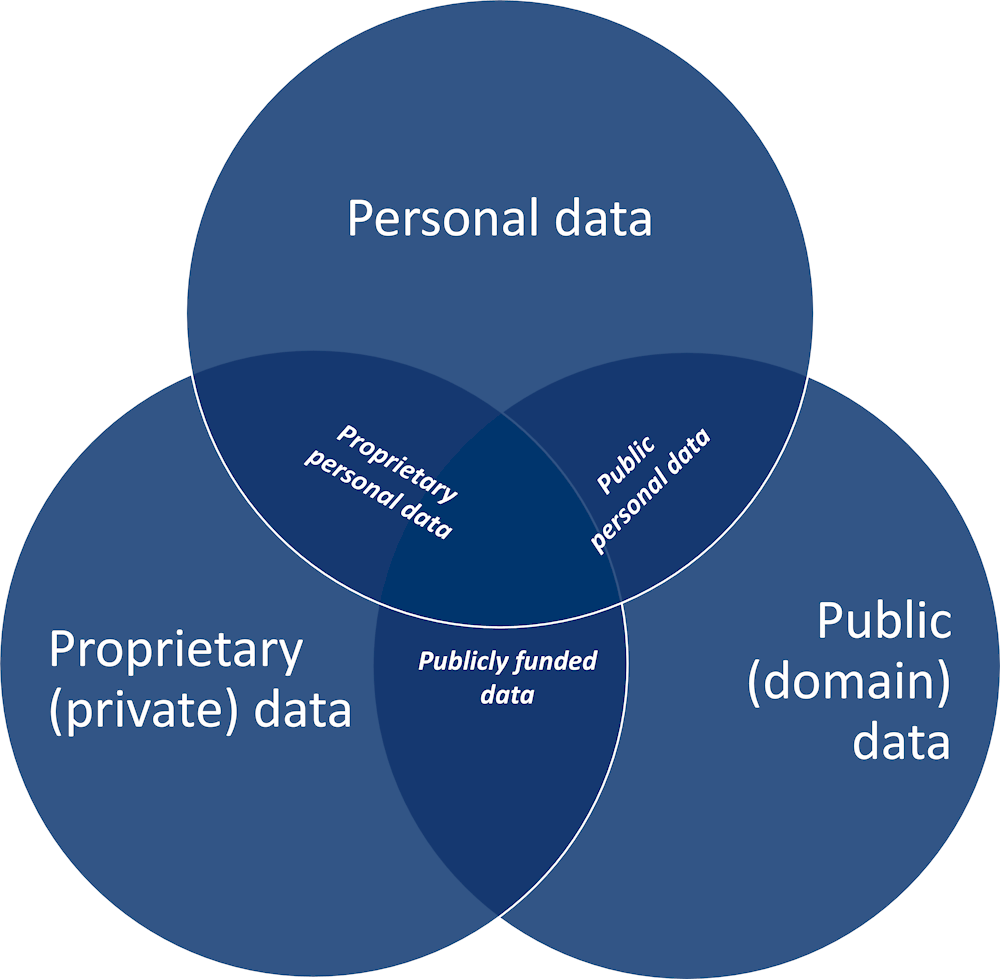

Figure 3.4 illustrates the overlapping domains of data and the relevant policy and regulatory frameworks, which can affect each domain differently:

the personal domain, which covers data “relating to an identified or identifiable individual” (personal data) for which privacy and personal data protection frameworks apply

the private domain, which covers proprietary data protected by IPRs or by other access and control rights (e.g. provided by contract, cyber-criminal or competition law), and for which there is usually an economic business interest to the exclusion of others

the public domain, which covers data not protected by IPRs or rights with similar effects, but that most importantly lie in the “public domain” (understood more broadly than being free from copyright protection), and are thus in principle available to access and re-use, as well as data for which there is a public interest.

Each of these domain-specific frameworks is important to foster trust in the data ecosystem. However, the “intricate net of existing legal frameworks” (Determann, 2018[37]) creates legal uncertainties. These uncertainties have been identified as a possible reason for low adoption of data analytics and related digital technologies such as AI. At the same time, they may explain the scarce use of new data governance approaches such as data portability (OECD, 2019[38]; 2019[3]; Business at OECD, 2020[39]).

Figure 3.4. The personal, private and public domains of data

Source: OECD (2019[3]), Enhancing Access to and Sharing of Data: Reconciling Risks and Benefits for Data Re-Use Across Societies, http://doi.org/10.1787/276aaca8-en.

Data governance has proven to be challenging where public interest in data is concerned, especially when such interest “overlaps” with the interests in proprietary and personal data (the centre of the Venn diagram in Figure 3.4). This is the case with data of public or general interest, a relatively new class of data. Some countries19 have started to recognise and regulate these data to fulfil societal objectives that otherwise would be impossible or too costly to fulfil. These objectives can include development of national statistics, development and monitoring of public policies, tackling of health care and scientific challenges and, in some cases, provision of public services (OECD, 2022[19]).

In this context, the challenge is how to disentangle and reconcile these multiple interests, and policy and regulatory frameworks, through clear policies that best reflect societal values and the public interest. Approaches to this trade-off aim to strike an effective balance between stakeholders’ interests and ensure coherence across different policies and regulations. These include identifying and considering the contribution of different stakeholders throughout the data value chain, including by promoting multi-stakeholder engagement and participation (subsection 3.2.1); adopting a whole-of-government approach through national data strategies and cross-agency co‑operation to help reconcile different domestic frameworks affecting data governance (subsection 3.2.2); leveraging contracts to address legal uncertainties in respect to data governance (subsection 3.2.3); and promoting international policy and regulatory co‑operation to reconcile data governance differences across countries and enable cross-border data flows (subsection 3.2.4).

3.2.1. Promote multi-stakeholder engagement

Data and the value derived from their use are often (co-)created through interactions of various parties in the global data ecosystem,20 sometimes without these parties even being aware of it. Multiple stakeholders are involved in the collection, control and use of data at different stages of the data value chain. This involvement gives each of them a basis for claiming rights and interests with respect to those data. However, some stakeholder interests may overlap or even conflict. In the case of personal data, individuals and businesses may have opposing interests regarding their sharing and re-use. As another example, businesses may object to mandatory sharing of their data with public authorities for regulatory purposes.

The contributions of different stakeholders throughout the data-driven value creation process

Determining a fair distribution of risks, benefits and liabilities across stakeholders for data governance is a multi-pronged process. An important first step is to identify and consider the respective contributions of different stakeholders throughout the data-driven value creation process (from data collection to processing through to use and re-use), as well as the different types of data involved.

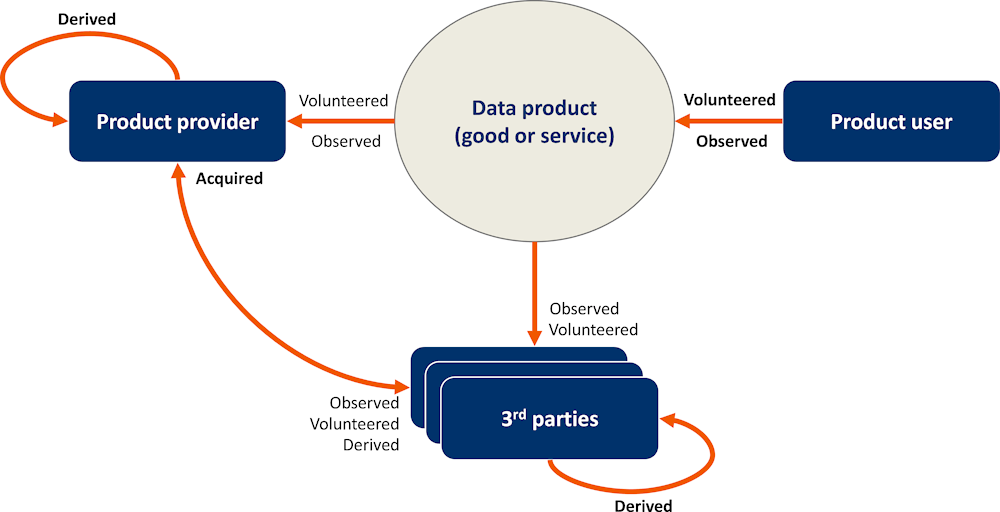

Figure 3.5 presents a stylised process of how a product user (e.g. a consumer) typically interacts with a data product (e.g. an online service or portable smart device) provided by a product provider (e.g. a business). The data product (i) observes the activities of its user, creating observed data;21 and/or (ii) collects input data volunteered by its user, i.e. volunteered data.22 These data can then be accessed for further processing (iii) by the product provider and/or by a third party granted access, leading to derived data.23 Derived data can also be enriched when a set of observed and/or volunteered data is combined with a set of acquired data24 from (other) third parties (OECD, 2019[3]).

Figure 3.5. Data products and the different ways in which data originate

Note: Arrows represent potential data flows between the different actors and a data product (good or service). The type of data is highlighted in bold to indicate the moment at which the data are created.

Source: OECD (2019[3]), Enhancing Access to and Sharing of Data: Reconciling Risks and Benefits for Data Re-Use Across Societies, http://doi.org/10.1787/276aaca8-en.

Observed, volunteered, derived and acquired data

This differentiation between observed, volunteered, derived and acquired data highlights the contribution of various stakeholders to the data value cycle. As such, it indicates the potential interests and claims of stakeholders with respect to those different types of data. Product users are most aware of the data they have voluntarily provided. Consequently, they typically have stronger interests in volunteered data. For their part, product providers will have a particularly strong interest in derived and acquired data, given that creating and acquiring these data directly involved financial and non-financial resources.

The differentiation between observed, volunteered, derived and acquired data has been reflected in recent policy considerations on the allocation of access and use rights on data. As countries increasingly adopt data portability regimes, to what extent should data portability rights apply to all types of personal data? Some data held by organisations may have been inferred or derived by the organisations. In other cases, users have been the main contributors (OECD, 2021[17]).

Recent policy decisions treat this question differently. The "Right to Data Portability" (Art. 20) of the European Union (2016[40]) General Data Protection Regulation (GDPR), for instance, applies only to personal data "provided by" the data subject that are processed electronically on the basis of consent or a contract. The (former) Article 29 Working Party indicates in its guidance that provided personal data should include data volunteered by the individual, as well as data observed by virtue of their use of the service or device. However, it should not include personal data that are inferred or derived (OECD, 2015[33]).
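To make the scope rule concrete, the sketch below encodes the distinction described above as a simple check. It is a simplified, hypothetical illustration, not a statement of the law: the names `DataOrigin` and `in_portability_scope` and the lawful-basis labels are assumptions. Volunteered and observed data count as "provided" and derived data do not, following the guidance summarised above. Treating acquired data as out of scope is a further assumption, since such data come from third parties rather than from the data subject.

```python
from enum import Enum


class DataOrigin(Enum):
    """Hypothetical labels for the four data types discussed in this section."""
    VOLUNTEERED = "volunteered"
    OBSERVED = "observed"
    DERIVED = "derived"
    ACQUIRED = "acquired"


# "Provided" data, per the Article 29 Working Party guidance summarised above:
# volunteered and observed data, but not inferred or derived data.
# Excluding ACQUIRED data is an illustrative assumption, not taken from the text.
PROVIDED_BY_DATA_SUBJECT = {DataOrigin.VOLUNTEERED, DataOrigin.OBSERVED}

# Lawful bases under which the portability right applies (consent or contract).
PORTABILITY_LAWFUL_BASES = {"consent", "contract"}


def in_portability_scope(origin: DataOrigin, lawful_basis: str,
                         processed_electronically: bool) -> bool:
    """Return True if a data item would fall under the portability right
    in this simplified model; all three conditions must hold."""
    return (origin in PROVIDED_BY_DATA_SUBJECT
            and lawful_basis in PORTABILITY_LAWFUL_BASES
            and processed_electronically)


if __name__ == "__main__":
    # Observed data processed electronically under consent: in scope.
    print(in_portability_scope(DataOrigin.OBSERVED, "consent", True))
    # Derived data are excluded even when processed under consent.
    print(in_portability_scope(DataOrigin.DERIVED, "consent", True))
```

The point of the sketch is that the same personal data item can fall inside or outside the right depending on how it originated, which is why the observed/volunteered/derived/acquired taxonomy matters for policy design.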

The differentiation between observed, volunteered, derived and acquired data can also shed light on other aspects of policy design. For example, it can help determine what product users (such as data subjects) may reasonably know about the scale and impact of service providers' activities involving their personal data. This is relevant when designing policies that seek to protect privacy by strengthening product users' control over their data, for example through individual consent requirements (subsection 3.1.3).

Multi-stakeholder engagement and participation

The differentiation between observed, volunteered, derived and acquired data is crucial for conceptually disentangling the contributions of stakeholders at different phases of the data value cycle. Multi-stakeholder engagement and participation is another fundamental instrument for disentangling the multiple interests entrenched in data governance. Available evidence shows that engaging stakeholder communities on data governance can help identify and make explicit their various interests, and better define responsibilities and acceptable risk levels across the data ecosystem (Frischmann, Madison and Strandburg, 2014[41]; OECD, 2021[4]; 2019[3]; 2016[5]). In this sense, it also helps build trust in data governance and the data ecosystem, strengthening subsequent buy-in and compliance from all stakeholders.

To be effective, multi-stakeholder engagement and dialogues require establishing whole-of-society, open and inclusive processes where:

all relevant stakeholders are represented, their roles, responsibilities and modalities of participation are identified, and their various interests recognised and made transparent

appropriate mechanisms ensure that all stakeholders’ interests are considered in the design, implementation and monitoring of data governance frameworks, that stakeholder feedback is given appropriate attention and consideration and that a rationale is given where feedback is not taken on board (OECD, 2019[31]).

How OECD legal instruments help disentangle the various interests of stakeholders

The OECD (2021[6]) EASD Recommendation recommends that Adherents should

[p]romote inclusive representation of and engage relevant stakeholders in the data ecosystem – including vulnerable, underrepresented, or marginalised groups – in open and inclusive consultation processes during the design, implementation, and monitoring of data governance frameworks (III.a) [and] work together with key stakeholders to clearly define the purpose of [data access and sharing] arrangements and identify data relevant to these purposes, taking into account their benefits, costs, and possible risks. (IV.a)

The OECD (2021[9]) Research Data Recommendation calls on Adherents to

[c]onsult with communities of stakeholders about open access to, sharing of, and re-use of research data and other research digital objects from public funding for reinforcing trust. This should include establishing open and inclusive processes that ensure equitable representation of stakeholder groups and consideration of their respective needs (III.4) [and] to prioritise, in consultation with stakeholders at the national and international level, research data and other research-relevant digital objects from public funding for short-, medium-, or long-term preservation. (VII.1.a)

The OECD (2016[10]) Health Data Governance Recommendation calls on governments to establish and implement a national health data governance framework that provides for

[e]ngagement and participation notably through public consultation, of a wide range of stakeholders with a view to ensuring that the processing of personal health data under the framework serves the public interest and is consistent with societal values and the reasonable expectations of individuals for both the protection of their data and the use of their data for health system management, research, statistics or other health-related purposes that serve the public interest. (III.1)

The OECD (2014[12]) Digital Government Recommendation recommends that governments develop and implement digital government strategies that “encourage engagement and participation of public, private and civil society stakeholders in policy making and public service design and delivery” (II.2). This is to be done by

[a]ddressing issues of citizens’ rights, organisation and resource allocation, adoption of new rules and standards, use of communication tools and development of institutional capacities to help facilitate engagement of all age groups and population segments, in particular through the clarification of the formal responsibilities and procedures (II.2.i) [and] identifying and engaging non-governmental organisations, businesses or citizens to form a digital government ecosystem for the provision and use of digital services. This includes the use of business models to motivate the relevant actors’ involvement to adjust supply and demand; and the establishment of a framework of collaboration, both within the public sector and with external actors. (II.2.ii)

3.2.2. Support a whole-of-government approach

As highlighted above, data are typically governed by multiple, and sometimes overlapping, policy and regulatory frameworks. These frameworks include, most prominently, privacy and personal data protection frameworks and IPR frameworks, which can affect conditions for access, sharing and processing of data differently. Other relevant policy and regulatory frameworks include sector- and domain-specific regulations such as those targeting the financial sector (open banking), the public sector (open government data) or science and research (open science). Conditions for access, sharing and processing of data may also be defined by ex-post enforcement measures. In some cases, for example, data portability may be mandated as a competition enforcement mechanism (OECD, 2021[2]).

Against this background, policy makers must identify and address potential overlaps and incoherencies across the domestic policy and regulatory frameworks that directly or indirectly govern data (e.g. between personal data protection and sectoral data frameworks). This should ensure a proper interplay across the frameworks and provide legal certainty for stakeholders. For this purpose, policy makers need to acknowledge the strengths and weaknesses of different data governance approaches. Unlike general approaches that apply across sectors, for example, approaches specific to data types or sectors tend to better address the legal, organisational and technical needs of individual sectors and better protect specific categories of data. At the same time, varying standards and requirements across sectors or data types can create challenges for data sharing within and across sectors. For example, databases may contain both personal and non-personal data that are subject to different rules, which adds to the complexity of sharing, accessing and processing them.

Policy makers need strategic whole-of-government approaches to foster data access and sharing within and across society, while ensuring policy coherence across data governance frameworks. Such approaches should take advantage of both general and sector- or data-type-specific approaches to data governance. National data strategies, in combination with sectoral data strategies that integrate cross-cutting economic, social, cultural, technical and legal governance issues, could be instrumental. This combination of strategies should create the conditions for effective data governance frameworks that better protect the rights and interests of individuals and organisations. At the same time, it should provide the flexibility for all to benefit from data openness (OECD, 2022[19]). Such national and sectoral data strategies would need to integrate, or be reinforced by, efforts to foster cross-agency co‑operation.

National and sectoral data strategies

National and sectoral data strategies could help address many of the policy issues highlighted above comprehensively by incorporating a whole-of-government perspective. Many countries have adopted national digital economy strategies and national AI strategies. However, equivalent data strategies are only beginning to emerge, spurred by the recognition of their potential to strategically support the data economy and the responsible use of data across society (OECD, 2022[19]).

National data strategies are cross-sectoral by nature. In many instances, they are designed to help reach higher-level objectives, such as gross domestic product growth, productivity, well-being, combatting climate change and fostering sustainable development. To achieve these strategic objectives, many national data strategies build on specific strengths of the country. For example, they often integrate, or complement, pre-existing national digital economy strategies (Gierten and Lesher, 2022[42]), national broadband strategies, digital government strategies and national AI strategies. In turn, national data strategies are often complemented by sector-specific data strategies. The most prominent sectoral data strategies relate to health, but also to science and research, smart cities, smart transportation and smart energy systems.