OECD Reviews of Evaluation and Assessment in Education: Bulgaria

2. Making student assessment an integral part of student learning

Abstract

Bulgaria’s Pre-school and School Education Act (2016) introduced a new competency-based curriculum, new learning standards and more formative approaches to assessing students, including the use of start-of-year diagnostic tests and the implementation of qualitative marking. However, while these policies have the potential to enhance learning, changes in school and classroom assessment practices have been slow to implement and the country’s high-stakes selection and examinations culture continues to reinforce the perception of student assessment as a primarily summative exercise. This chapter recommends tangible steps Bulgaria can take to use assessment as a means to improve teaching and learning.

Introduction

Student assessment can be a key enabler of student learning by helping teachers, students and parents determine what learners know and what they are capable of doing. This information may also help educators identify specific learning needs before they develop into more serious obstacles, as well as support students in making informed decisions about their educational trajectories. Meaningful assessment practices are especially important in light of the COVID-19 pandemic, facilitating the adaptation of instruction where learning has been disrupted. In Bulgaria, student assessment policies have undergone several changes in the last six years as part of broader reform efforts introduced by the Pre-school and School Education Act (2016) to modernise schooling. In particular, the new competency-based curriculum provides a foundation for achieving national goals and improving the educational outcomes of all students, supporting them and the country to advance.

However, the intended impact of Bulgaria’s education reforms has not yet come to fruition and there is a notable implementation gap at the school and classroom levels. Schools and teachers will need to make assessment a central part of the learning process in order to better detect and address learning issues, redress inequalities related to background or location and promote the complex competencies needed for success in school and beyond. The role of standardised assessment in Bulgaria also needs to be reviewed: national assessments and examinations are not currently contributing to improvements in the quality of education in the classroom or to the choices students make about their pathways. These factors exist within a highly competitive and traditional schooling environment in which assessment is primarily viewed as a way to sort students into prestigious schools. Overcoming these challenges and establishing a more inclusive and competency-based approach to education will likely require further structural changes to schooling in Bulgaria. In the meantime, there are tangible steps the country can take to use assessment as a means to improve teaching and learning practices and outcomes.

Student assessment in Bulgaria

Bulgaria’s competency-based curriculum introduced important changes to student assessment policy, such as start-of-year diagnostic tests, qualitative marking and expected learning outcomes for each subject and grade level. While these policies have the potential to enhance the quality of education, tangible changes in school and classroom practices have been slow to take effect. Teacher assessments continue to focus on traditional summative tests with a narrow emphasis on a limited range of tasks as opposed to broader, deeper learning. This encourages an educational approach that risks undermining student agency, engagement and progress. The ability of teachers to adopt new assessment practices is constrained by political and public expectations of how students in Bulgaria should be assessed and successful achievement demonstrated. This is, in part, a cultural legacy of education under the Soviet bloc, which was characterised by centralisation, control and a focus on memorisation combined with a culture of competition and performance in contests and examinations. This is not unique to Bulgaria: several other education systems in the region have confronted or are confronting similar challenges (Li et al., 2019[1]; Kitchen et al., 2017[2]; OECD, 2019[3]).

Bulgaria’s national assessment and examination practices, which centre on a high-stakes sorting and examinations culture, have further entrenched these more traditional attitudes. The wider assessment ecosystem is not conducive to implementing the intended changes of Bulgaria’s competency-based curriculum. To move forward, the country needs to create the conditions for teachers and students to take the lead on assessment practices that enable learning. This requires shifting the focus from summative, high-stakes assessment to emphasising formative practices and an improvement-led assessment culture from the earliest years. Supporting teachers in developing these pedagogical skills while simultaneously changing public attitudes towards assessment – and education more broadly – will be key to building buy‑in for the new competency-based curriculum among educators and society at large. This chapter will discuss the different types of student assessment practices currently found in Bulgaria (Table 2.1) and identify elements that could support the country in making this shift. Some details are covered in more depth in Chapter 5, which looks further into how Bulgaria’s national assessments can support system-level monitoring and help advance national education goals.

Table 2.1. Overview of student assessment in Bulgaria

| Reference standards | Types of assessment | Body responsible | Process | Guideline documents | Frequency | Primary use |
|---|---|---|---|---|---|---|
| National Curriculum Framework | Classroom assessment | Teachers | School-readiness assessment at the end of pre-primary education | State Educational Standard (SES) for the Evaluation of the Results of Student Learning (2016) | Once | Certification of readiness for transition to primary education |
| | | | Start-of-year readiness/diagnostic assessment | | Once a year | Assessing gaps in learning |
| | | | Continuous assessment (current and term assessment) | | Up to four times per term (subject-dependent) | Monitoring student progress during a school year |
| | | | End-of-year/end-of-phase examination | | Once a year | Completion of grade level/phase |
| | National assessment | The Center for Assessment | Census-based National External Assessments (NEAs) (Grades 4, 7, 10) | SES for the Evaluation of the Results of Student Learning (2016) | Three in total; takes place annually | Monitoring system performance; selection mechanism for upper secondary school (Grade 7) |
| | National examination | The Center for Assessment | State Matriculation examination (Grade 12) | SES for the Evaluation of the Results of Student Learning (2016) | Once | Diploma of completion of upper secondary education; application to tertiary education |
| | | | State Matriculation examination for acquiring professional qualification (Grade 12) | Vocational Education and Training Act (latest amendments: 2018) | Once | Certification of acquisition of vocational qualification |
| International Association for the Evaluation of Educational Achievement (IEA) Standards | International assessment | IEA | Progress in International Reading Literacy Study (PIRLS) (Grade 4) | | Every five years | Measurement of system performance |
| | | | Trends in International Mathematics and Science Study (TIMSS) (Grades 4, 8) | | Every four years | Measurement of system performance |
| Programme for International Student Assessment (PISA) Standards | International assessment | OECD | PISA (15-year-olds) | | Every three years | Measurement of system performance |

Source: Ministry of Education and Science (2020[4]), OECD Review of Evaluation and Assessment: Country Background Report for Bulgaria, Ministry of Education and Science of Bulgaria, Sofia.

Overall objectives and policy framework

High-performing education systems successfully align curriculum expectations, subject and performance criteria and desired learning outcomes (Darling-Hammond and Wentworth, 2010[5]). National learning goals and expected outcomes, as expressed through qualifications frameworks, curricula and learning standards, help establish an education culture within which assessment supports learning. Bulgaria’s reforms under the Pre-school and School Education Act signalled a clear effort to establish a coherent, learner- and learning-focused policy framework. However, more than five years on, the changes to teaching and learning envisaged by the reform have not yet materialised in classrooms, nor have they had the desired effect on student outcomes. Considerable gaps between the intended curriculum, the taught curriculum and the assessed curriculum persist and further implementation and alignment efforts are required.

The new curriculum aligns with international frameworks and continues to be updated

Bulgaria’s move towards a competency-based curriculum aims to modernise teaching and learning, in line with international trends, emphasising the mastery and practical application of knowledge and skills, as well as reorienting the teacher’s role from a source of information to that of a mentor or learning partner (Government of Bulgaria, 2020[6]). The Pre-school and School Education Act established nine interdependent and transversal competencies to be embedded across school education for both general and vocational education and training (VET) programmes. These competencies reflect the European Parliament and Council of the European Union’s Recommendation on Key Competences for Lifelong Learning (2006, updated 2018), with the addition of sustainable development, healthy lifestyles and sports competency. Efforts were made to take into account and align with international competency frameworks to ensure Bulgarian students have opportunities to study and work abroad after school and that their skills are competitive internationally.

The introduction of the new curriculum, overseen by the Directorate for the Content of Pre-school and School Education within the Ministry of Education and Science (hereafter, the Ministry), has been gradual. The 2021/22 academic year marks the first time that all students in Bulgarian schools are following the new curriculum. Further curriculum updates are planned with the goal of developing more flexible and modular VET programmes and updating general curricula to better promote the key competencies (Government of Bulgaria, 2020[6]). Bulgaria’s National Recovery and Resilience Plan also commits to better promoting science, technology, engineering and mathematics (STEM) skills as well as further developing core cognitive skills (Government of Bulgaria, 2020[7]). While these are important developments, it is essential that further reform efforts do not distract from the implementation and consolidation of previously updated curricula. Change takes time and a sustained focus on core priorities is important for impact and for avoiding curricular reform fatigue among teachers, trainers and school leaders, which can make improvements even harder to achieve. Specifically, Bulgaria will need to prioritise classroom-level curricular implementation for younger students and in priority competencies, such as language literacy and mathematical and scientific competency, to ensure that all students are supported in developing the core attitudes and skills that provide the foundations for future learning.

Multiple instructional documents aim to guide the organisation of teaching and learning but can lack clarity and coherence

As part of broader education reforms, Bulgaria introduced a range of new policy documentation relating to the organisation and content of teaching and learning (Table 2.2). The State Educational Standard (SES) for General Education sets out expected learning outcomes by the end of each education phase in every subject. The Framework Curricula, included in the SES for Curriculum, set out organisational aspects for different types of education (i.e. by school or programme type and delivery mode) at each education phase and for each subject. Grade-level subject syllabi are intended to guide teachers’ classroom planning. For the first time, these documents provide expected learning outcomes related to subject competency as well as suggested activities that teachers can do to support the development of these competencies, the share of time dedicated to assessing students and the different modes of assessment to be employed (e.g. continuous assessment, examination, homework, projects, etc.). They also identify links between subject competencies and the nine transversal competencies. While these are all important and necessary resources and many appear of good quality in and of themselves, there is a lack of clarity among teachers as to the role of each one and a lack of coherence among the documents themselves.

Table 2.2. Policy documentation to support schools and teachers in organising and planning learning

| Policy document | Date | Purpose | Content |
|---|---|---|---|
| Pre-school and School Education Act | 2015 | To establish the overall aims and objectives of pre-school and school education. | |
| SES for General Education | 2015 | To determine the goals, content and characteristics of general education at the school level. | |
| SES for Curriculum | 2015 | To set out the characteristics, content and organisational structure of the curriculum. | |
| Subject syllabus | 2016-21 | To establish the requirements and expected learning outcomes for every subject at every grade. | |
| School curriculum | Annual | To determine the organisation of school curricula. | |
| Individual curriculum | Annual | To determine curricula for students with specific needs. | |

Teachers struggle to navigate curriculum documents and apply changes to their classroom practice

Teachers implementing Bulgaria’s new curriculum have been provided with more curricular information than ever before. However, a sense of confusion about the role of the various documents prevails, as well as a perception that the curriculum is overloaded (Ministry of Education and Science, 2019[8]). Interviews undertaken by the OECD review team also indicate that, rather than using the syllabi as intended, teachers continue to rely heavily on textbooks for their planning, teaching and assessment of learning. While the Ministry perceives that the expected learning outcomes act as learning standards, teachers do not consistently apply them in the classroom to support student assessment and there is little monitoring or accountability to incentivise them to do so (Ministry of Education and Science, 2020[4]).

This misapplication may be partly due to ambiguity in the content of the outcomes. Although most are defined as expected results and some are process- or skill-focused, others better describe teaching activities or specific content knowledge (Dimitrova and Lazarov, 2020[9]). For example, in the Grade 6 history and civilisations syllabus, students are expected to “determine causes and consequences of historical events, and research and select information via the Internet” (process/skill-focused), but also “know the most significant conflicts of the period and describe historical figures” (content knowledge) (Ministry of Education and Science, 2016[10]). Furthermore, the suggested teaching and assessment approaches can be very generic and are often repeated across grades and subjects. Teachers of Grade 12 mathematics, for instance, are told that assessment can take the form of an oral examination, written test, classwork or practical work (Ministry of Education and Science, 2020[11]). This adds no value to the information included in higher-level documents.

Each of these challenges – perceived overload, use of textbooks over syllabi and low application of the expected learning outcomes – suggests a considerable gap between the uses of the curricular documentation as intended by the Ministry and the real-life application as carried out by schools and teachers. The Ministry has tried to address these challenges, for example by publishing informative brochures and running a set of regional workshops in 2019, yet the disparities between the intended and implemented curriculum continue to impede the overall success of Bulgaria’s curricular reforms.

A new national evaluation and assessment framework provides detailed instructions regarding the organisation and administration of assessments

Complementing various curricular documents, Bulgaria also introduced a new student assessment framework in 2016, the SES for the Evaluation of the Results of Student Learning (Ordinance 11). This Ordinance aims to align student assessment practices with a competency-based approach, namely by encouraging a greater focus on diagnosing and monitoring student progress across the school year. Specifically, the framework establishes the main types (normative, criterion and mixed) and forms (diagnosis, prognosis, certification, information, motivation, selection) of assessment, as well as how to organise classroom- and school-level assessment, National External Assessments (NEAs), State Matriculation examinations and the certification of learning across education phases (Ministry of Education and Science, 2016[12]).

Ordinance 11 introduces some important changes to Bulgaria’s more traditional student assessment approaches, including the use of qualitative marking and diagnostic assessments in classrooms. However, it also remains focused on the organisational elements of different assessments, such as detailed requirements for timing, frequency and administration. Despite the fact that a move to a competency-based curriculum requires changes in the pedagogical approach to assessment, Ordinance 11 offers minimal information or guidance to support teachers to make such changes.

Implementing competency-based assessment remains a challenge

The introduction of a competency-based curriculum poses a challenge to student assessment practices in any education system because competencies are difficult to assess: they combine knowledge, skills and attitudes and are underpinned by dimensions that are hard to capture but are learned simultaneously (EC, 2010[13]). There are some specificities in Bulgaria’s education system that may have made this shift towards more multi-dimensional assessment even harder to achieve. First, school-level assessments are currently constrained by multiple intermittent, often high-stakes, traditional assessments of student learning, as prescribed in Ordinance 11. These approaches create a negative backwash effect on the curriculum, as “teaching to the test” narrows the focus of learning in the classroom (OECD, 2013[14]). Moreover, Bulgaria’s extensive and frequent changes to curricular documentation may be reducing the space, or at least perceived space, for teachers to understand and engage in more innovative assessment practices. For example, during interviews conducted by the OECD review team, teachers implementing project-based learning in primary education expressed concerns about replicating these approaches in Grades 5-7 when classroom assessment carries consequences for students’ progression and preparations for the high-stakes external assessment in Grade 7 begin.

At the same time, Bulgarian teachers face a highly traditional educational culture among the wider public that emphasises high-stakes assessment and quantitative marking. Both system and institutional actors reported to the OECD team that they have tried to reduce reliance on traditional assessments and make greater use of competency-based approaches (e.g. projects or case studies) but such efforts often lead to complaints from parents. This context may lead teachers to avoid changing instruction altogether or to implement changes while maintaining traditional types of assessment, meaning that more classroom time is dedicated to administering assessments than to acting upon the results to enhance learning.

Professional capacity in Bulgaria is another obstacle. When introducing a competency-based approach, systems need to develop the expertise and technical capacity of teachers to design, develop, deliver and evaluate more complex assessments (Nusche et al., 2014[15]). This requires training for teachers but also for other actors in the system such as, in Bulgaria’s case, those working in national assessment agencies or those based in the regional departments of education (REDs) that offer methodological support to schools. However, training for the new curriculum in Bulgaria has been limited to teaching professionals only and has been knowledge-focused as opposed to pedagogy-focused, meaning that assessment practices may have been neglected. Although some specific assessment-focused training is available to teachers in Bulgaria, it is rare and focuses on preparing students for national or international examinations and assessments. Even where teachers are creating their own assessments, these appear to measure the acquisition of knowledge rather than competencies.

Classroom assessment

Ongoing and regular identification and interpretation of evidence about student learning is a key component of effective instruction (Black and Wiliam, 2018[16]). In Bulgaria, however, alongside the over-reliance on traditional formats, classroom assessment is often viewed by teachers and students – and society – as a validation exercise rather than an integrated part of the learning process.

Teachers in Bulgaria must administer frequent classroom assessments

The purpose of classroom assessment in Bulgaria, as defined in Ordinance 11, is to establish students’ educational outcomes and determine their progress. To this end, teachers are expected to undertake frequent classroom assessments during the academic year (Table 2.3). The school year begins with a diagnostic assessment for all students to ascertain entrance levels of performance and identify areas for support. Following this, regular assessments must take place for all students to determine current marks. The frequency depends on the number of subject teaching hours per week and can amount to four assessments per academic term for core subjects such as mathematics and Bulgarian language and literature. These assessments can be oral, written or practical and administered individually or by group.

Table 2.3. Different types of classroom assessment administered to students in Bulgaria

| Type of assessment | Purpose | Scope | Timing | Format |
|---|---|---|---|---|
| Diagnostic assessment | To establish entrance level and assimilation of key concepts from the previous year, identifying deficits and measures to overcome them | All students | Within three weeks of the start of the school year | Written test |
| Continuous assessment | To establish the student’s current mark and to support the achievement of the expected learning outcomes | All students | Between two and four times an academic term | Oral, written and practical tests; individual or group, depending on scope |
| Equivalency examinations | To support the transition of an upper secondary student from one class or school to another | Students transitioning from one school or pathway to another | | Written test |
| Corrective examinations | To give students who receive a poor mark (2) an opportunity to improve their annual grade | Students who receive a 2/“poor” mark in end-of-year assessments | Annually from Grade 5; from two weeks after the end of the school year and two weeks before the start of the next one | |
| Resit examinations | To give students the opportunity to change their end-of-stage assessment | Students who want to improve their end-of-year assessments | End of phase (Grade 7, Grade 9, Grade 12) | Three subjects maximum, no resits except in Grade 12 |

Source: Adapted from Ministry of Education and Science (2016[12]), Наредба No. 11 от 1 Септември 2016 г. за Оценяване на Резултатите от Обучението на Учениците [Ordinance No.11 of 01 September 2016 for the Evaluation of the Results of Student Learning], https://www.lex.bg/en/laws/ldoc/2136905302 (accessed on 18 August 2021).

Bulgarian teachers use qualitative and quantitative descriptors when assessing students

When conducting classroom assessments in Bulgaria, teachers of students from Grades 1-12 must assign a qualitative descriptor (excellent, very good, good, intermediate or poor). Ordinance 11 provides generic descriptions for these. For example, an “excellent” should be awarded only to students who “achieve all the expected results from the curriculum, and master and independently apply all new concepts”. For students in Grades 4-12 only, this qualitative descriptor must be paired with a numerical mark (“excellent” equates to a mark between 5.50 and 6.00; “poor” equates to a mark between 2.00 and 2.99). For continuous assessments, teachers must report results to students within two weeks of administering the test and enter them into the relevant school information system.

Bulgaria’s introduction of qualitative descriptors is positive and could support students and teachers to better contextualise numerical marks within the learning process. Moreover, the exemption of younger students from receiving numerical marks is in line with other countries in the region, although numerical marks are introduced earlier (e.g. Grade 2 in Serbia) or later (e.g. Grade 5 in Georgia and Romania), depending on the system. However, without student-, subject- and task-specific clarification, Bulgaria’s qualitative descriptors cannot direct students on how to improve. Teachers are not required to formally record or report such targeted feedback, so while students receive their marks promptly, these marks are not always justified (Ministry of Education and Science, 2020[4]). Teachers in Bulgaria also seem focused on numerical marks: interviews undertaken by the OECD review team suggest that even when assessing project-based learning, teachers developed complex formulae to calculate a student’s mark. This emphasis may reduce the impact of written comments, as the numerical mark becomes the main focus of learners’ attention (Elliott et al., 2016[17]).

As well as assigning qualitative and quantitative descriptors for continuous assessments, teachers must assign an end-of-term (Grades 4-12) and end-of-year evaluation (Grades 1-12). In Grade 1, this is a general mark for all subjects; from Grade 2, marks are awarded for each subject. These evaluations should be based on both the student’s performance in continuous assessments and a final examination. The lowest value, “2” or “poor”, is considered a “fail” and requires either additional support only (Grades 1-3) or additional support and a resit examination if awarded at the end of the year (Grades 4-12). If the mark does not improve in the resit examination, students must repeat the school year.

This emphasis on achieving a better mark in order to proceed to the next grade, as opposed to focusing on ensuring a fuller mastery of the subject, has the potential to narrow learning further. Moreover, PISA data indicate that this policy is not effectively supporting the remediation of learning gaps. Grade repetition is not common in Bulgaria: only 4.5% of students participating in PISA 2018 reported having repeated a grade, which was below the OECD average of 11.4% (OECD, 2020[18]). While this is positive – grade repetition is both educationally and financially inefficient – given that PISA data also indicate that around a third (32%) of Bulgarian 15-year-olds failed to meet minimum proficiency levels in any of the three core PISA disciplines (reading, mathematics and science), many Bulgarian students appear to be advancing through the school system without having learning gaps identified and addressed. This raises several concerns about the focus on examinations and numerical marks over learning, the accuracy of teachers’ judgements and the extent to which assessments evaluate important knowledge and skills.

Students are also awarded a final evaluation at the end of each education phase, which is entered on the relevant certificate of completion. Particularly for lower education phases, the inclusion of end-of-year results on certificates of completion is not common among OECD countries or other countries in the region. This practice means that even Bulgaria’s continuous assessments have high-stakes consequences because they feed into the end-of-year evaluations that determine progression to the next grade level, appear on certificates and, in some grade levels, inform competitive selection processes for school places. This practice risks undermining more formative forms of assessment.

Formative assessment is not consistently applied in classrooms

In many education systems, the move to competency-based curricula has been paired with more formative approaches to assessment. In addition, there have been efforts to create a better balance between this and summative assessment in the classroom, recognising that both play a role in student learning. Bulgaria’s Ordinance 11 establishes formative approaches to assessment, such as the use of start-of-year diagnostic tests. Such tests can produce detailed information about individual students’ strengths and weaknesses and should inform future planning, differentiated instruction and remedial efforts (OECD, 2013[14]). In the wake of school closures and disrupted instruction during the COVID-19 pandemic, such efforts are particularly valuable (OECD, 2020[19]). In Bulgaria, there is scope to expand formative approaches with younger learners as teachers cannot assign numerical marks to students in Grades 1‑3 and there is an explicit expectation to implement remedial measures in the case of “poor” performance.

In reality, for both younger and older students in Bulgaria, formative classroom assessment is not a common practice and there appears to be some misunderstanding among teachers about the difference between summative and formative assessment methods and how they are interrelated. For example, some practitioners who spoke with the OECD review team did not distinguish between formative assessment and continuous assessment. In fact, continuous assessment can serve both summative and formative purposes (Muskin, 2017[20]). Furthermore, the start-of-year diagnostic assessments are not consistently applied and do not always serve the intended purposes (i.e. identifying gaps in students’ learning, tailoring teaching and learning to students’ needs, or supporting evidence-based progress-focused conversations between teachers, learners and parents). Other countries mandating diagnostic assessments (e.g. Romania and Serbia) face similar challenges (Maghnouj et al., 2019[21]; Kitchen et al., 2017[2]). In Bulgaria, teachers appear more likely to use diagnostic tests to establish an entry-level mark, with a view to comparing this to an exit-level mark at the end of the school year. Some subject syllabi even appear to promote this approach (Ministry of Education and Science, 2017[22]) while, during interviews conducted by the OECD review team, teachers sometimes referred to facing resistance from parents when recommending their child receive remedial instruction following the diagnostic test. Although there are some effective remediation efforts within the system, such as the Support for Success programme, these also reinforce the idea that remediation is an additional support mechanism rather than being a key element of effective assessment cycles within classroom practice.

Bulgaria’s assessment policy framework may be contributing to these misconceptions or misapplications. Ordinance 11 lacks clear comparative definitions of formative and summative assessment that outline their distinct roles. Although ultimately the two approaches are synergistic and cannot be clearly separated (Black and Wiliam, 2018[16]), for teachers working in a system under transition, clarification around the two approaches would be useful. Furthermore, by requiring very regular continuous assessment with numerical marks, Ordinance 11 directs teachers to implement assessments that emphasise performance as opposed to process or improvement. There is also little time within the assessment schedule for formative feedback loops, particularly given that Bulgaria’s academic year is comparatively short (EC/EACEA/Eurydice, 2020[23]) and that teachers perceive the curriculum to be overloaded. Although there is some reference to the formative function of assessment within Ordinance 11, time pressures make it unlikely that this function will be realised. For example, between the end-of-year examinations and corrective examinations, there may only be two weeks for remedial efforts.

National assessments

National assessments are designed to provide nationally comparable information on student learning, principally for system monitoring. As such, Bulgaria’s national assessments are covered primarily in Chapter 5 of this report. Like examinations, national assessments are usually externally designed and administered but, unlike examinations, they do not carry consequences for students’ progression. In addition to enabling national system monitoring of learning outcomes, they can also serve other purposes, such as ensuring that students meet national learning standards and supporting broader school accountability efforts. Across the OECD, the vast majority of countries (around 30) have national assessments to provide reliable data on student learning outcomes that are comparable across different groups of students and over time (OECD, 2015[24]). Bulgaria’s national assessment does not currently measure progress over time and has limited pedagogical value. Moreover, the assessment’s selection function has been criticised for pressuring students and encouraging a narrow focus on test preparation. These features not only prevent the national assessment system from serving either monitoring or formative functions but also risk having an adverse effect on students who do not plan to attend competitive, elite upper secondary schools.

Bulgaria’s national assessment system has significant implications for students

Students in Bulgaria sit census-based national assessments at three key transition points in their schooling: Grade 4 (end of primary education), Grade 7 (end of lower secondary education) and Grade 10 (end of compulsory education). These National External Assessments (NEAs) are developed and administered by the Center for Assessment of Pre-school and School Education (hereafter, the Center for Assessment). All students are assessed in mathematics and Bulgarian language and literature and some choose to take assessments in foreign languages. The NEA uses a single test instrument to serve multiple purposes, including system monitoring and identifying individual student progress (see Chapter 5).

In some respects, the NEA reflects national assessment systems found in other European Union (EU) and OECD countries; however, a unique feature of Bulgaria’s NEA is that it can have important implications for individual students. In all three grades, NEA results are entered onto the student’s certificate of completion for the education phase, although a minimum level is not required for phase completion. For a small share of Grade 4 students, NEA results help determine academic selection into high-performing, elite schools that specialise in mathematics or foreign languages. For a similarly small number of Grade 10 students, specifically those transitioning from an integrated school to a school that offers the second stage of upper secondary, the NEA also informs admission processes. The implications of NEA results in Grade 7 are much more significant, as explained below. For this reason, although the Grade 7 NEA is covered in detail as a system evaluation tool in Chapter 5, it also needs to be taken into account when reviewing how effectively assessments and examinations are supporting learning at the level of individual students. The fact that the NEA also has some consequences for teachers and schools (see Chapters 3 and 4) means that its influence on the teaching and learning that takes place in the system is significant.

National examinations

National examinations are centrally developed standardised assessments that have formal consequences for students. In Bulgaria, the State Matriculation examination in Grade 12 certifies student achievement at the end of upper secondary education and supports progression to tertiary education, for example by allocating state scholarships. Most OECD countries administer national examinations at the end of upper secondary education for one (or both) of these purposes; however, national examinations are becoming less common at other key transition points, as policy makers seek to remove barriers to progression and reduce early tracking (Maghnouj et al., 2020[25]). This is not the case in Bulgaria where the Grade 7 NEA acts as a national examination at the end of lower secondary education.

The Grade 7 NEA acts as a national selective examination to allocate students to upper secondary education

The Grade 7 NEA has two key uses. The first is to assess student proficiency in core skills, which helps to fulfil a system monitoring function and determine whether students have achieved the minimum standards required to graduate and progress from lower secondary education. The second use, which is more challenging, is to inform the placement of students into upper secondary school. In the selection process, students access their NEA results on line and then apply to an unlimited number of schools of their choice. REDs determine a minimum score required for entry into each school, based on students’ Grade 7 NEA results and teacher-assigned marks for mathematics and Bulgarian language and literature. The weighting of results is at the discretion of each school so that a profiled school with mathematics and science pathways may place more weight on mathematics results. Students are then offered a school place and if they do not accept the offer, they enter a second round of selection, then a third and so on until all students have been placed.

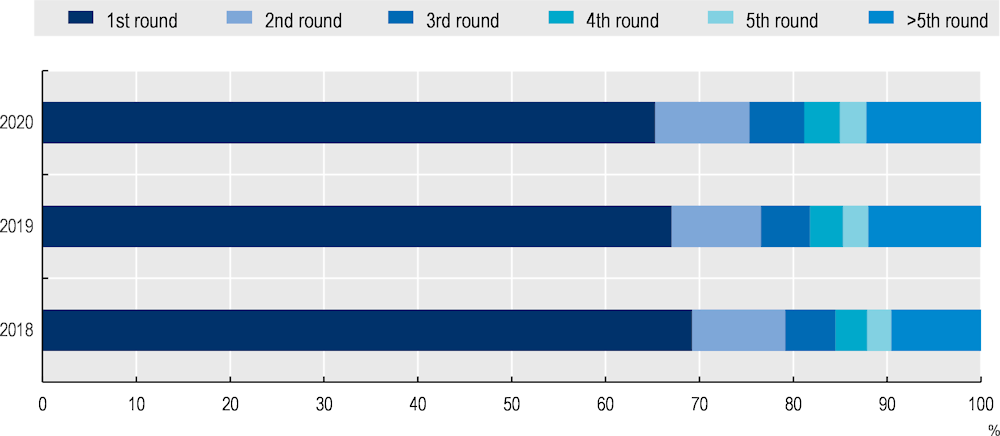

Under this ranking system, around two-thirds of students get their first choice of school (see Figure 2.1). This suggests that many students are not applying to over-subscribed or highly selective schools and so the competitive pressures of the examination are not the same for all students. However, a small share – around one in ten – participate in the ranking process more than five times and a considerable share – around one in four in 2020 – go through it three times or more. This could signal that either these learners have not been sufficiently supported to apply for schools or programmes that realistically suit their abilities or that the opportunities available to them are limited because the schools perceived to be of higher quality are over-subscribed and highly selective, for example, or because other available schools are an unattractive choice.

Figure 2.1. The share of students whose school application is accepted by application round, 2020

The high stakes associated with the Grade 7 NEA have implications for educational quality and equity

Interest in the Grade 7 NEA results, known as the “Little Matura” to the general public, is intense among parents and the media alike. From the view of broader society, enabling students to transition to a good school is now the NEA’s main role (Dimitrova and Lazarov, 2020[9]). In 2019/20, when the COVID-19 pandemic led to school closures and learning disruption, Bulgaria’s ombudsman proposed cancelling the Grades 4 and 10 NEAs, backed by a petition signed by 18 000 people (Kovacheva, 2020[26]); there was no public discussion about cancelling the Grade 7 NEA.

Until 2010, the Grade 7 NEA was explicitly designed in two parts: a compulsory part 1 determined minimum proficiency in core skills across all students; an optional part 2 fed into the competitive selection process and was only required for students applying to specific elite schools – about 40% of the cohort. Now, even though all students must participate in the selection process, disparities in educational outcomes across Bulgaria’s school network mean that, for students in rural areas at least, school choices are limited and competition for places varies considerably. Although students have the option to apply to schools outside their region, as schools with higher educational outcomes tend to be located in urban areas and clustered in Sofia, only those students with the means to travel or leave home for upper secondary education can access these opportunities. This process raises equity concerns and means that Grade 7 in general, and the NEA in particular, carries high stakes for many students. Moreover, it indicates that the large share of students getting their first “choice” may mask significant disparities in opportunity.

Despite these concerns, some teachers maintain positive attitudes towards the Grade 7 NEA, identifying it as an important factor in motivating students, testing their capacity to perform under stress and facilitating upper secondary teaching by grouping students by ability. While this may be true, the high-stakes nature of the Grade 7 NEA has considerable negative implications for the education system. First, in response to the pressure on students in Grade 7, families may engage in private tutoring. Although evidence and data regarding the extent of private tutoring in Bulgaria are scarce, anecdotal evidence reported to the OECD review team indicates that, among families that can afford it, private tutoring in the months – or even years – leading up to the Grade 7 NEA is widespread. Moreover, this is a common practice in neighbouring countries which also have high-stakes examinations at key transition points (Kitchen et al., 2019[27]; 2017[2]). Internationally, such practices have been seen to increase the achievement gap between advantaged and disadvantaged students (Zwier, Geven and van de Werfhorst, 2021[28]).

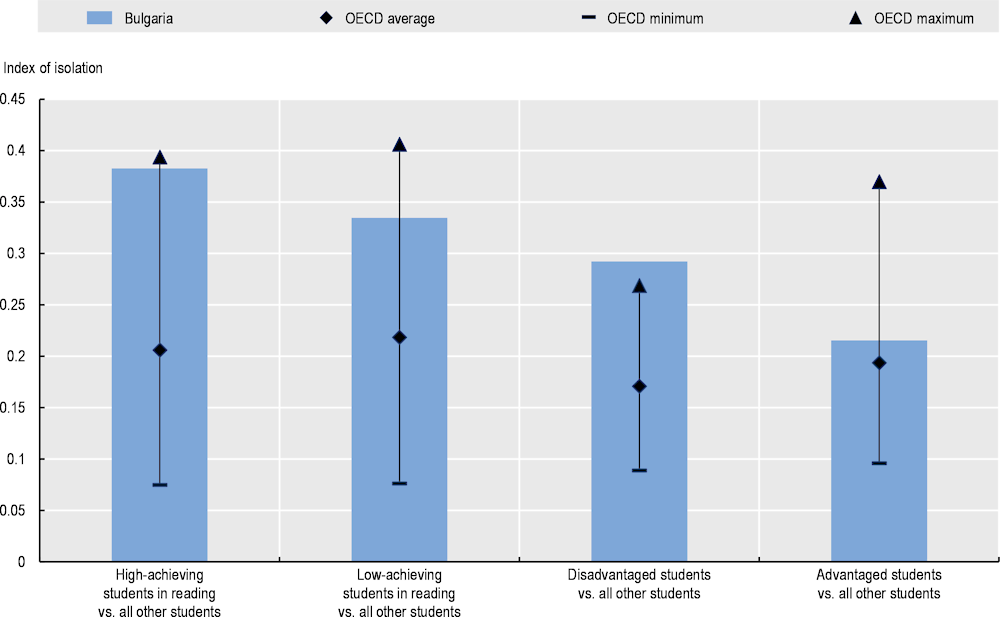

Furthermore, while having a greater variety of school types and programmes can cater for the diverse needs of students, without careful regulation and implementation, it can also increase horizontal stratification as students’ background may inform decisions about school choice more strongly than their interests or aptitudes. As shown in Figure 2.2, Bulgarian schools are more highly segregated along socio-economic lines than in any OECD member country. On paper, Bulgaria has up to 10 different school types available to students in upper secondary education and 14 different curricula pathways through the profiled subjects, offering students the greatest level of choice among EU countries (EC/EACEA/Eurydice, 2020[29]). However, academic selection means that educational pathways are often decided at age 13 and that real choice by the time students reach upper secondary level is highly constrained. While there may be advantages to providing older students with a range of pathway choices that can be better tailored to their strengths, needs and ambitions, very early tracking, as seen in Bulgaria, has been shown to strengthen the association between socio-economic background and achievement and widen the learning differences between students (EC/EACEA/Eurydice, 2020[29]; Levin, Guallar Artal and Safir, 2016[30]; Woessmann, 2009[31]).

Figure 2.2. Indicators of academic and school segregation in Bulgaria and OECD countries, 2018

Note: The index of isolation is related to the likelihood of a representative type (a) student to be enrolled in schools that enrol students of another type. It ranges from 0 to 1, with 0 corresponding to no segregation and 1 to full segregation.

Source: OECD (2019[32]), PISA 2018 Results (Volume II): Where All Students Can Succeed, https://doi.org/10.1787/b5fd1b8f-en.

Bulgaria’s Grade 7 NEA and associated selection process may also be inhibiting educational quality in other ways. International research indicates that the existence of academically selective schools does not have a positive association with a school system’s overall performance (Andrews, Hutchinson and Johnes, 2016[33]). In fact, some research suggests that academic streaming and specialisation are much more common in low-performing education systems (Daniell, 2018[34]). At the same time, the NEA may inhibit the implementation of the competency-based curricula as its high-stakes nature can have a distorting effect on the curriculum. Finally, as the assessment does not yet assess competencies in a meaningful sense, teachers and students are less motivated to spend learning time on these skills.

State Matriculation examination results certify completion of upper secondary education and support progression to tertiary education

Bulgaria’s State Matriculation examinations perform several functions. Since the school year 2007/08, students in Bulgaria have had to pass the State Matriculation examination in order to certify completion of upper secondary education. Students who successfully complete this examination, and their upper secondary education courses, receive a diploma of upper secondary education. However, the State Matriculation examination is not compulsory; students who do not take or pass the examination are still awarded a certificate of completion of Grade 12 with which they can progress into post-secondary vocational education programmes.

The State Matriculation examination also supports progression into higher education. All students applying to tertiary education must have successfully passed the State Matriculation examinations and many universities or university programmes use the results from the State Matriculation examination as part of their specific criteria for selection and enrolment. This aligns with international practices: most OECD countries have centralised examinations at the transition point between schooling and tertiary education (OECD, 2017[35]) and an increasing number of countries use a single examination for both school graduation and university selection purposes. Nevertheless, in Bulgaria, some universities or faculties continue to set their own examinations or selection criteria; this includes the most competitive ones (e.g. medicine).

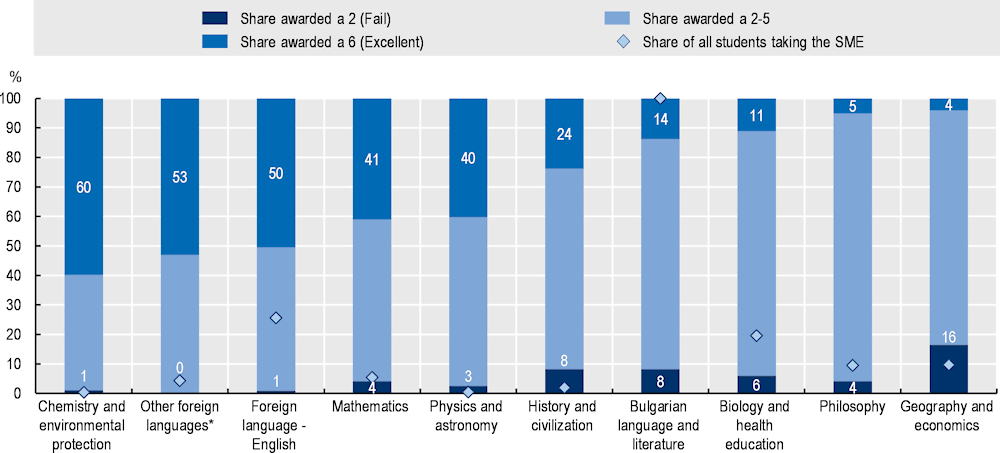

All students opting to take the State Matriculation examination must take Bulgarian language and literature and, as of 2012, a second compulsory examination in a subject of their choice. Students also have the option to take the examination in an additional two subjects. For students in general education who have studied profiled programmes, the additional subjects must come from among their profiled subjects (e.g. a foreign language). Compared to other countries in the region, most students in Bulgaria sit fewer examinations and with a narrower coverage of the curriculum. In Albania, North Macedonia and Romania for example, alongside optional subjects, national examinations at the end of upper secondary education have three compulsory subjects: the native language, a foreign language and mathematics (or computer skills in Romania). In recent years, the most popular elective subjects among Grade 12 students in Bulgaria were English, and biology and health education. Very few students opted to take physics and astronomy or the chemistry and environmental protection examinations, and only 7% chose to take mathematics, subjects more aligned with Bulgaria’s national priorities to enhance STEM skills (Figure 2.3). The OECD review team heard that this may be due to students opting to take subjects that are perceived to be less demanding.

Finally, results from the State Matriculation examinations are used to award state scholarships for students progressing to higher education in public universities. For a student to be able to apply to receive one they must perform among the top 10% of students in Bulgarian language and literature and at least meet the national average in their second subject. Alternatively, for mathematics, physics and astronomy or chemistry and environmental protection, they must come in the top 30% of students sitting the examination and meet or exceed the national average in Bulgarian language and literature. The government prioritises certain courses or fields for state scholarships; these are decided annually by the Council of Ministers.
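The two eligibility routes described above can be expressed as a simple decision rule. The following is a minimal illustrative sketch only, not an official implementation: the class and function names are hypothetical, "top 10%" and "top 30%" are assumed to correspond to percentile ranks of at least 90 and 70 respectively, and the annual prioritisation of fields by the Council of Ministers is not modelled.

```python
from dataclasses import dataclass

# Subjects named in the text as qualifying for the alternative (top 30%) route.
PRIORITY_SUBJECTS = {
    "mathematics",
    "physics and astronomy",
    "chemistry and environmental protection",
}


@dataclass
class SubjectResult:
    """One examination result (hypothetical structure for illustration)."""
    subject: str
    percentile_rank: float   # assumed scale: 0-100, higher is better
    score: float             # the student's mark in this subject
    national_average: float  # national average mark in this subject


def may_apply_for_scholarship(bll: SubjectResult, second: SubjectResult) -> bool:
    """Return True if either eligibility route described in the text is met.

    `bll` is the Bulgarian language and literature result and
    `second` is the result in the student's second subject.
    """
    # Route 1: top 10% in Bulgarian language and literature and
    # at least the national average in the second subject.
    route_one = (
        bll.percentile_rank >= 90
        and second.score >= second.national_average
    )
    # Route 2: top 30% in one of the listed subjects and
    # at least the national average in Bulgarian language and literature.
    route_two = (
        second.subject in PRIORITY_SUBJECTS
        and second.percentile_rank >= 70
        and bll.score >= bll.national_average
    )
    return route_one or route_two
```

For instance, under these assumptions a student ranked at the 75th percentile in mathematics with an above-average mark in Bulgarian language and literature would qualify through the second route, whereas the same profile with English as the second subject would not.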

Figure 2.3. Share of students taking State Matriculation examinations and achievement level, 2020

Note: * Includes data for examinations in French, German, Italian, Russian and Spanish.

SME: State Matriculation examination.

Source: Data provided to the OECD from the Center for Assessment.

Administration and marking of the State Matriculation examination is highly trusted

Development and administration of the State Matriculation examinations are overseen by the Center for Assessment and processes are tightly controlled and carefully monitored (Table 2.4). Numerous expert and technical commissions annually carry out different stages of the design, administration and marking process. There are also high-security measures such as video surveillance in examination centres and police escorts for the movement of papers. This has helped to build a high level of public confidence in the process over a reasonably short amount of time. The Center for Assessment has also been working to strengthen the State Matriculation examination’s validity, reliability and integrity to encourage higher education institutions to accept results as a metric for admissions decisions. These efforts have been successful: currently, 38 out of 52 higher education institutions in Bulgaria accept the results as an entry requirement for their programmes, although they may also choose to apply additional criteria. As explained above, those that do not accept the examination’s results tend to be the most competitive institutions or programmes. However, there are signs that this is changing too: in 2021, a Council of Ministers decision formally enabled law faculties to accept undergraduate students solely based on the results of the State Matriculation examinations. These are positive developments, since prior to 2008, all tertiary institutions applied their own entry criteria, making the transition into higher education less transparent.

Table 2.4. Design and procedural considerations for the State Matriculation examination

| Topic | Specifications | Notes |
|---|---|---|
| Testing mode | Paper-based. Oral and practical examination where relevant. | |
| Testing conditions | Administered in schools; students sit the examinations in a school in their region but not necessarily the school in which they studied. Examination rooms are under video surveillance. | Overseen by regional commissions. |
| Test subjects | Compulsory: 1. Bulgarian language and literature. 2. Profile subject (compulsory modules only). Optional: 1. Student’s free choice. | For vocational students: Compulsory: 1. Bulgarian language and literature. 2. State examination for awarding professional qualifications. |
| Item types | Mixed approach (closed-ended or fixed-response and open-ended). | For each subject, the item types and their distribution are prescribed in the SES for Profiled Programmes. |
| Marking | Computer-based marking by humans. | Results are published on line around two weeks after the examinations. The diploma of secondary education specifies a general performance mark. |
| Management and leadership | At the national level: The Center for Assessment, overseen by the Ministry. At the regional level: REDs, which establish regional commissions for the administration of the examinations. | The Center for Assessment establishes national commissions: for the preparation of examination tasks in each subject; for assessing the examination tasks; for inspecting the examination papers in each subject; for classification and declassification of examination papers; for electronic processing of the papers. |
| Use of results | Certification of completion of secondary education. Application to higher education (38/52 higher education institutions). Awarding of state scholarships for tertiary studies. | Vocational students are also issued a certificate of vocational qualifications. Results can be transformed into equivalent marks for the European Credit Transfer and Accumulation System (ECTS) and recorded in the European annexe to the diploma for secondary education. |

Source: Ministry of Education and Science (2016[12]), Наредба No. 11 от 1 Септември 2016 г. за Оценяване на Резултатите от Обучението на Учениците [Ordinance No.11 of 01 September 2016 for the Evaluation of the Results of Student Learning], https://www.lex.bg/en/laws/ldoc/2136905302 (accessed on 18 August 2021).

Safeguards are in place to mitigate potential negative effects of the State Matriculation

There is a risk that high-stakes assessments might distort the education process by narrowing the curriculum and putting an excessive focus on assessed skills (OECD, 2013[14]). It is therefore important to establish safeguards that manage the pressure and attention placed on a particular assessment. For the State Matriculation examination, Bulgaria has several such measures in place. For example, students who do not pass the examination have the opportunity to take the test again an unlimited number of times. The pass mark for all subjects is 30% and few students (6-8%) fail the examinations at the first sitting. In fact, in many subjects, a substantial share of students achieve the highest mark; this is particularly true of foreign languages where over half of the cohort achieve “excellent” scores (Figure 2.3).

While the very low rate of failure in the State Matriculation examination could help minimise the sense of academic pressure students may experience, it is important that results accurately reflect student competencies. At age 15, 47% of students in Bulgaria were considered to have not reached minimum proficiency (Level 2) in reading in PISA 2018 whereas 2 years on, only 8% of students taking the State Matriculation examinations in 2020 failed the examination in Bulgarian language and literature. Although some students will have chosen not to continue into the final stage of upper secondary education, the wide disparity between these shares indicates considerable inconsistencies in how minimum proficiency is defined. Furthermore, awarding an “excellent” to such large shares of students can devalue the examination and render it less illustrative of the differences in students’ abilities. Efforts to mitigate the consequences of this high-stakes test, therefore, need to be more carefully balanced with the examinations’ purpose and design to ensure an accurate reflection of minimum proficiency and sufficient mark distribution among students.

Another safeguard of the State Matriculation examination is that students choose three of the four examination subjects and may even choose to only sit the two compulsory subjects. This level of flexibility allows students to select subjects based on their study interests, personal strengths and any possible requirements for admission into the further education or training pathway of their choice. Nevertheless, while this element of choice can be important in motivating older students to continue with their education and personalise their pathways, it must not be to the detriment of ensuring a minimum common base of core knowledge and skills.

Recent revisions indicate efforts to embed a competency-based approach within examination materials

In the 2021/22 academic year, Bulgaria will implement newly updated curricula for Grade 12, revised to embed a competency-based approach to instruction and new requirements of profiled education (see Chapter 1). Accordingly, the specifications for the State Matriculation examination in each subject have been updated and will be administered starting in May 2022. While some assessed competencies under the new subject specifications are still expressed in terms of what students should know (e.g. “Knows the main processes in the development of the Bulgarian literary language”), the vast majority are expressed through higher-order cognitive verbs that require demonstrating specific skills (e.g. “Evaluates texts according to the success of the communicative goal” and “Analyses and creates written texts, adequate to the communicative situation”). This contrasts significantly with the previous iterations of the State Matriculation examinations’ specifications, which demonstrated learning in much more abstract and general terms (e.g. “Knows the structure and functioning of a work of art” [Bulgarian language and literature]). Although it remains unclear how these changes will be reflected in the design of new test items, the updated specifications signal a shift from knowledge recall to more complex outcomes and higher-order competencies and provide a useful reference from which item writers can ensure the State Matriculation examinations test student competencies in real-world contexts.

National student assessment agencies

The Center for Assessment is responsible for national assessments and examinations

Bulgaria’s Center for Assessment is responsible for developing and approving test material for the NEAs and the State Matriculation examinations, as well as supporting REDs and school management teams to administer the tests. The Center for Assessment also manages Bulgaria’s participation in international assessments and undertakes an analysis of the national and international assessment results. This information is reported periodically to the Ministry to help monitor the quality of schooling. As the Center for Assessment’s mandate has expanded in recent years with the introduction of new national testing instruments (and at additional grade levels), the centre’s responsibilities have outgrown its resources. The number of permanent staff is small (around 20 individuals) and external experts are recruited annually to help design and manage various testing instruments. While this process helps mobilise and strengthen assessment expertise within Bulgaria, it also inhibits the development of institutional memory and expertise within the Center for Assessment. To ensure the range of assessment tools are relevant and sustainable, the Ministry will need to ensure the Center for Assessment has adequate financial and human resources, as well as support to develop the expertise of staff in areas of need, such as psychometrics. Chapter 5 of this report explores this further.

Policy issues

There is a clear political will to improve educational outcomes for all students in Bulgaria. However, despite numerous high-level reforms in recent years, the practices these reforms envisage are not yet a reality in many Bulgarian classrooms. Narrow assessment approaches focused on knowledge memorisation are deeply entrenched and a longstanding strong focus on summative scores is hindering the use of more formative practices that have the potential to improve learning outcomes. While the government has taken initial steps to address these issues, for example by introducing diagnostic assessments at the start of the school year, teachers need additional training and support to use these tools effectively and develop their classroom assessment literacy. Bulgaria also needs to review the validity and fairness of the upper secondary education entrance examination, while critically questioning its place in the overall school system in the longer term. Finally, by introducing improvements to the validity of the State Matriculation examination, Bulgaria can take advantage of an opportunity to positively influence learning and assessment in classrooms while also facilitating students’ transitions beyond formal schooling. Together, these efforts are critical if Bulgaria is to achieve its dual goals of enhancing educational quality and improving outcomes for all students.

Policy issue 2.1. Building a shared understanding of student assessment as a means to support teaching and learning

Bulgaria has a clear intention to modernise pedagogical and other educational approaches within its school system. Nevertheless, extensive reform to policy documentation has not been accompanied by pedagogical innovation or practical changes in student assessment. As a result, student assessment at the classroom and system levels does not align with the type of learning valued in Bulgaria’s new curriculum, diminishing the intended impact of reforms. This is, at least in part, a cultural challenge evident in other countries in Eastern Europe and Central Asia. However, it is also symptomatic of an implementation gap following the 2016 curricular reforms. To fully realise the promise of its educational reforms, Bulgaria needs to communicate the need and rationale for adopting new approaches to assessment, especially in the classroom. At the same time, school leaders and teachers will need support to implement pedagogical changes. Enhancing the link between assessment and learning in a clear and coherent policy framework, as well as providing practical supports for educators to apply in the classroom can help in these regards.

Recommendation 2.1.1. Establish a coherent national vision of student assessment

There are contradictions within Bulgaria’s current evaluation and assessment policy framework that send mixed messages about the role and purpose of student assessments. Ordinance 11 calls for frequent classroom assessment in all subjects with the assignment and reporting of numerical marks. This is not conducive to measuring more complex competencies and does not allow time for impactful feedback loops. Bulgaria’s emphasis on high-stakes, summative assessments may also inhibit the intended changes. For example, the Grade 7 NEA, originally intended as a system monitoring tool, has become the pivotal moment in a child’s education, with strong potential for a negative backwash effect on the curriculum in preceding grades. Recent policy efforts have tried to address some of these challenges, for example by including formative assessment among the new school inspection criteria. However, there remains a pressing need for a shared national vision of student assessment that is clear and can be applied to real-life teaching and learning situations, as well as to high-level policy processes and communications with stakeholders.

Formulate a high-level national vision of student assessment

Bulgaria needs to clearly establish student assessment as a critical and central part of the learning process in the minds of students, educators and the wider public. Establishing broad consensus around a common vision of assessment that can be upheld across administrations and levels of government will be crucial in achieving deeper and more long-lasting changes in teaching and learning. This shared vision should be formalised in both legislation and accompanying explanatory materials for different audiences to establish a clear reference point for actors across the education system in years to come. Such documentation has proved useful in high-performing education systems as a way of enhancing transparency around national values with regard to assessment practices. In New Zealand, for example, a national vision of assessment has helped ensure that key principles, endorsed by a broad coalition of actors, have informed reform processes for over a decade (Box 2.1).

Box 2.1. Formalising a national vision of assessment in school education in New Zealand

In 2011, New Zealand’s Ministry of Education introduced a Position Paper on Assessment (2011[36]). The paper provides a formal statement of the country’s vision for assessment at the school level. It places assessment firmly at the heart of effective teaching and learning and describes what the assessment landscape should look like if assessment is to be used effectively to promote system-wide improvement within and between all layers of the schooling system. The paper broadly informs and directs policy processes rather than describing in detail how to achieve the ideal assessment landscape. The intention was to promote a shared philosophy among parents, teachers, school leaders, school boards, Ministry of Education and other sector agency personnel, professional learning providers, writers of educational materials and researchers, as well as journalists, commentators and other thought leaders who access, publish and comment on assessment data. As of 2021, it remains in place, having informed and directed policy reviews across multiple administrations.

The paper was informed by a comprehensive expert review of assessment practices in New Zealand and includes a presentation of the context, current assessment practices and approaches and detailed illustration of how assessment can drive learning for the learner, the school and the system as a whole. The key principles highlighted in the paper are: the student is at the centre; the curriculum underpins assessment; building assessment capability is crucial to improvement; an assessment capable system is an accountable system; a range of evidence drawn from multiple sources potentially enables a more accurate response; effective assessment is reliant on quality interactions and relationships.

Source: Nusche, D. et al. (2012[37]), OECD Reviews of Evaluation and Assessment in Education: New Zealand 2011, https://doi.org/10.1787/9789264116917-en; Hipkins, R. and M. Cameron (2018[38]), Trends in Assessment: An Overview of Themes in the Literature, https://www.nzcer.org.nz/system/files/Trends%20in%20assessment%20report.pdf (accessed on 18 August 2021); NZ Ministry of Education (2011[36]), Ministry of Education Position Paper: Assessment [Schooling Sector], Ministry of Education of New Zealand, Wellington.

While existing policy documentation in Bulgaria often focuses on logistical and organisational aspects, the national vision of assessment should adopt a more substantive, evidence-based approach. It should include a clear statement of purpose, providing the rationale for a shift in assessment culture and underlining what the new approach means for pedagogy. Given its absence from other policy documentation, a comprehensive overview of the various components and instruments included in Bulgaria’s national assessment framework, as well as their different purposes, their added value and how they work together, would also be useful. In this way, developing the shared national vision for assessment can help not only build a new assessment culture but also align Bulgaria’s broader evaluation and assessment framework for the education sector.

Engage stakeholders in developing the new vision of student assessment

The complexity of 21st century education systems means that a vision imposed from above is unlikely to gain traction and may exacerbate mistrust (Viennet and Pont, 2017[39]). To achieve real change in Bulgaria’s student assessment practices, the full range of education stakeholders will need to be engaged in an evidence-based discussion on the role of assessment and how it can best support learning, as well as in establishing practical steps for implementing change. The Ministry should identify key stakeholders (e.g. students and parents, school community, system actors, researchers, non-governmental organisations, media) and facilitate a national conversation by holding a combination of in-person and online workshops and consultations. This will support more efficient use of resources, as well as a more inclusive and timely process that can facilitate real change. For example, in 2015, Ireland introduced the Junior Cycle Profile of Achievement, a new reporting process for student achievement which shifted from a focus on end-of-cycle examination to emphasising ongoing assessment for and of learning, and continuous formative feedback to students. The government held regular consultations with key actors, and representatives of the profession were able to voice concerns about the extra workload the changes would bring to educators. As a result, the government and the teacher unions established five immutable principles of the reform focused on supporting teachers during the implementation stages (OECD, 2020[40]). Reform implementation became a more collaborative process and has received wider buy-in from the profession.

In Bulgaria, the Directorate for the Content of Pre-School and School Education would be well-placed to oversee these consultations, as this body organised workshops in the past to support Bulgaria’s curricular implementation. The Ministry could also partner with external actors (e.g. a non-governmental or international organisation) to offer some external validation of the process, which may help build consensus. Reviewing good practices nationally and internationally, such as achieving a strategic balance of formative and summative assessment and building assessment capacity among educators and other actors across the system, could help the government ensure the consultation process is informed by evidence. Mapping current assessment practices and regulations would also be important in this regard.

Clarify and better communicate expected learning outcomes to guide student assessment

Many OECD countries have introduced learning outcomes and performance standards to help enhance teaching and assessment practices (OECD, 2013[14]). These define and illustrate in measurable terms what students are expected to master at a certain level of education and can support teachers and other actors responsible for preparing assessment material to develop valid assessment instruments and thus elicit more reliable data about student progress (OECD, 2019[3]). With the move to a competency-based curriculum, Bulgaria introduced expected learning outcomes by subject and grade level. However, perceived curriculum overload, a proliferation of related documentation and a lack of specificity mean that Bulgaria’s expected learning outcomes are not consistently used in classrooms. This should not trigger a rewriting of the expected learning outcomes, as a lot of good work has already been done in developing these across the curricula. However, Bulgaria can strengthen the existing set of expected learning outcomes by making them more coherent, accessible and practical. This can be achieved through the following actions:

Enhance the structure and layout of the outcomes to support clarity. Because the learning outcomes are currently presented as a list and organised according to subject content, teachers in Bulgaria often misinterpret them as a checklist of content to cover rather than a means of assessing and improving learning (Ministry of Education and Science, 2019[8]). Presenting the outcomes as part of a learning progression across consistent subject skill areas over an education phase could help address this and may reduce the sense of overload. It could also encourage subject teachers across age groups to collaborate.

Build in performance standards. Several countries that have well-established learning standards have broken down expected outcomes into different levels to support teachers in evaluating students’ progress towards mastery. For example, the Assessing Pupils’ Progress initiative (2010) from England in the United Kingdom provided detailed criteria against which judgements could be made about students’ progress in relation to National Curriculum levels (Ofsted, 2012[41]). Teachers were provided with various materials for their subject and age group: a handbook to guide them in implementing the approach; guidelines for assessing pupils’ work in relation to the performance levels; a one-page matrix organising success criteria; and annotated student work that exemplified national standards at each level (Ofsted, 2012[41]). In Bulgaria, defining each performance level in more measurable terms and illustrating these with examples of student work would help equip teachers to apply the expected outcomes in their classroom and help students assess their own progress.

Make expected learning outcomes accessible to students and parents. To encourage students’ self- and peer assessment, and foster parental engagement in learning progress, Bulgaria could develop a version of expected learning outcomes accessible to those who are not pedagogical or subject professionals. In England, for example, schools commonly transformed the Assessing Pupils’ Progress criteria into “I can…” statements that were expressed from a student’s point of view. While teachers may find that such statements oversimplify success criteria, they can help learners, particularly younger ones, to better understand what is expected of them.

Ensure alignment and coherence with wider evaluation and assessment practices

Aligning other components of Bulgaria’s evaluation and assessment framework with the national vision of student assessment will help implement the vision and reduce inconsistencies in practice. Previous OECD analysis of education policy processes has found that proactively aligning policies at different levels of the system (e.g. institution, local or system levels) can facilitate stakeholder buy-in, capacity building and greater clarity in terms of progress (OECD, 2019[42]). Bulgaria’s national vision of assessment should therefore not only inform approaches to student assessment but also underpin broader evaluation and assessment efforts in the following areas:

School evaluation: The national vision should trigger updates to Bulgaria’s school quality criteria (see Chapter 4). Including these in school evaluation rubrics could encourage schools to build their assessment capacity in line with the philosophy set out in the national vision.

Teacher development and appraisal: Bulgaria will need to review the professional profile for teachers to ensure that standards related to assessment align with the national vision (see Chapter 3). Promoting a new assessment culture through initial teacher education and continuous professional development, as well as through attestation and other appraisal processes, could further incentivise adherence to the new vision of student assessment.

System evaluation: The design, purpose and use of the NEAs, as well as other external assessments, will also need to be considered in developing Bulgaria’s new vision of assessment (see Chapter 5).

Communicate the vision in a strategic way to build trust and support for change

Once the national vision has been agreed, Bulgaria will need to find ways to ensure that it remains a “living” document for actors across the system. One way to do this is to establish a website dedicated to the national vision of student assessment. For example, when introducing the Project for Autonomy and Curricular Flexibility in 2017 to support the implementation of a new curriculum, Portugal’s Ministry of Education established a website as a digital resource for reflection and the sharing of practices, as well as a digital library for reference documentation to support teachers in their curricular and pedagogical decisions (Portuguese Ministry of Education, 2021[43]). Four years on, the website continues to grow and to document and support the implementation of the project and the curriculum reform. The site includes official legislative and other documentation relating to the reform, examples of good practice from across the country, access to webinars and presentations to support implementation and regularly updated news and events.

In Bulgaria, this website or digital platform could initially document the national conversation, with news about upcoming online and in-person events, summary records of meetings, consultation exercises and expert reviews. Once developed, the vision and any associated strategies or action plans can be presented on the platform. This would also be a suitable place to house digital versions of expected learning outcomes and support materials. Over time, the website can become a one-stop-shop for student assessment in Bulgaria, with content aimed at teachers, students, parents and the wider public. Several other recommendations in this chapter suggest specific ways to use this platform.

Recommendation 2.1.2. Adapt the reporting of student learning information to promote a broader understanding of assessment

As in other countries, Bulgaria faces the challenge of balancing the tensions between stated commitments to broader forms of assessment on the one hand and public, parental and political pressure for accountability in the form of scores and rankings on the other. While attention to results and data is a positive feature of education systems, an overemphasis on these may have a negative impact and undermine the formative role of assessment (OECD, 2013[14]). Changing specific marking and reporting practices will therefore be important in making the national vision of student assessment a reality in classroom practice. Other OECD countries where summative scoring has tended to weigh heavily, such as France, have found revisions to student reports and marking to be a particularly effective way to communicate and embed new expectations.

Make classroom and school-level marking practices more conducive to student learning