Korea has made good progress in recent years in managing for results at the project level. In order to be able to use results to plan and budget at a more strategic level in the future, Korea will need to develop a stronger concept of what constitutes success. It will also need to clarify how to measure performance across its development co‑operation, including by drawing on data and systems from partner countries. Achieving this will require leadership and incentives to drive a results-based culture across the entire system so that Korea can learn and adapt based on experience, as well as ensuring that results contribute to the Sustainable Development Goals across partner countries and themes.

OECD Development Co-operation Peer Reviews: Korea 2018

Chapter 6. Korea’s results, evaluation and learning

Management for development results

Peer review indicator: A results-based management system is being applied

A new results framework provides a good basis for monitoring and evaluating at the project level

Since the 2012 peer review, Korea has undertaken significant reforms to support a better-quality, more coherent approach to monitoring and managing for results at the project level. These have been complemented by increased devolution of results-based management to the Korean EXIM Bank (KEXIM) and Korean International Cooperation Agency (KOICA) country offices, as well as commitments to strengthen staff capacity in managing for results. To assist in this transition, both KOICA and KEXIM introduced compatible results frameworks in 2016 (KOICA, 2016a; KEXIM, 2016). In parallel, KOICA has introduced a requirement that at least 3% of the total project budget should be allocated for monitoring and evaluation, and both KOICA and KEXIM have introduced mandatory baseline studies for all relevant initiatives (KOICA, 2016b).

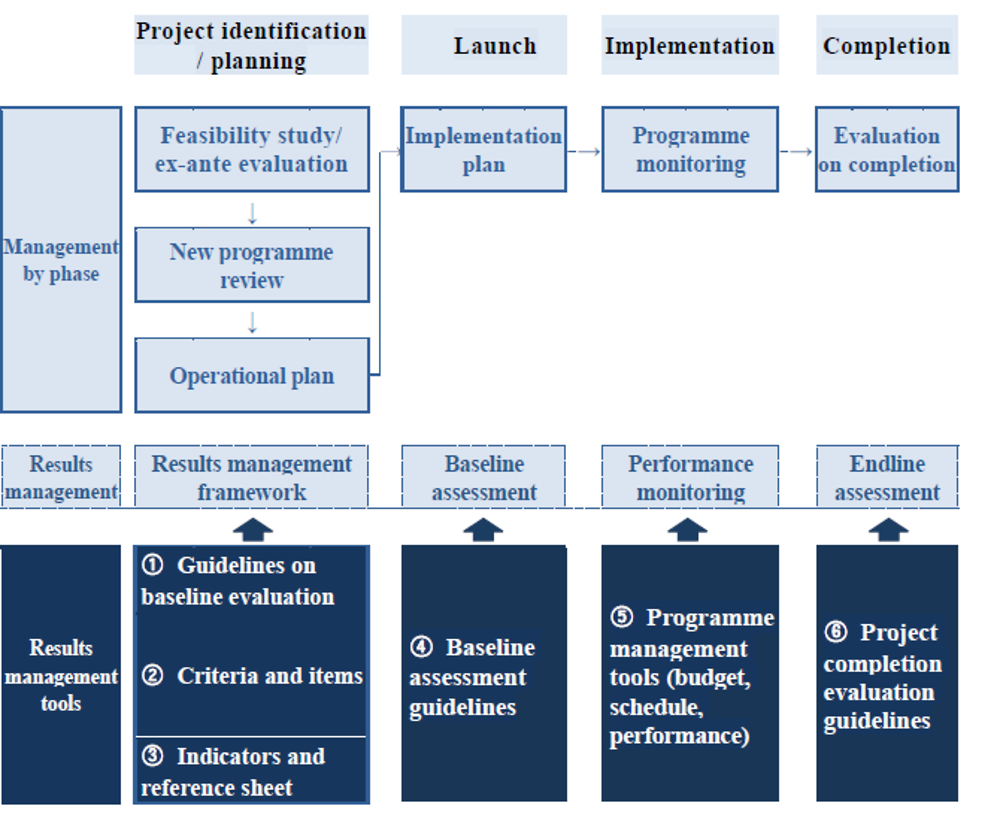

Furthermore, Korea has rolled out a new on-line project management tool for grant-based projects to measure and manage project-level results against the standardised pool of indicators contained in its results framework (GoK, 2017). This tool is one of the first examples among DAC members of an open project management system that can also be used directly by external implementing partners. Box 6.1 shows how Korea is managing for development results in projects, from identification to evaluation. In implementing this approach, KOICA and KEXIM are aligning objectives with those of partner countries, and integrating monitoring data produced by partner countries (particularly for loan projects). At headquarters and in Cambodia, there was evidence that these approaches are well internalised by KOICA and KEXIM staff and that results information is helping to inform decisions on the ground.1 This is good practice and is helping Korea to operationalise its new thinking on results-based management, although it is unclear to what extent other government ministries involved in implementing Korea’s ODA are matching these efforts. Ensuring that ODA-funded activities across all institutions involved in managing aid are subject to results-based management (including monitoring), and that the results framework and associated guidance apply throughout the system, would strengthen Korea’s efforts in this area.

Box 6.1. Korea’s results management system (project level)

Source: GoK (2017), “Memorandum of Korea, OECD DAC Peer Review 2017”, Government of Korea, Seoul (unpublished).

Results are not yet aggregated by country or theme

At a more strategic level, Korea is taking steps to aggregate results by theme across government to improve quality assurance and strategic direction, and to support accountability and communication. Korea recognises that improving how it aggregates thematic results information will improve its ability to claim success and communicate its contribution to the Sustainable Development Goals in partner countries, in line with partner country development priorities.

In an effort to address this challenge, Korea has selected a sub-set of the many indicators in its results framework (more than 100 for KOICA and 40 for KEXIM for use at the project level) to develop thematic results frameworks for KOICA and EDCF aligned to the Sustainable Development Goals. Several Development Assistance Committee (DAC) members have found that reporting against an even smaller sub-set of 10-15 indicators is more useful for measuring the aggregate results of development co-operation programmes (OECD, 2016 and 2017). As Korea proceeds with its plans to aggregate data for sectors (GoK, 2017), it could benefit from working closely with other members to harmonise indicators and distil which indicators can be used for higher level reporting. It might also review existing practices on:

how results can be disaggregated by sex, age and other variables

how to ensure clear guidance across agencies and for external implementing partners on using the framework

how to distinguish between output and outcome indicators and use them in the right context2

how to align results to – and provide evidence of contribution to – Korea’s overarching objectives (e.g. poverty reduction and inclusive growth).

Evaluation system

Peer review indicator: The evaluation system is in line with the DAC evaluation principles

Korea has comprehensive evaluation policies and guidelines that incorporate the DAC principles. The body that oversees evaluation has been strengthened, injecting more independence and transparency into Korea’s entire system for development co‑operation. Going forward, rather than evaluating all its activities, Korea would benefit from being more strategic in its evaluation coverage, guided by risk assessments or the need to learn. It should also involve its partners and stakeholders more systematically in designing and carrying out evaluations.

Public scrutiny of development results is increasing

A recent investigation by the National Audit Office into alleged incidents of corruption has resulted in increased public and parliamentary scrutiny as stakeholders seek more information on the results of Korea’s expanding development co-operation programme (Kim, 2017; Moon, 2016). Meeting this demand will require adequate resourcing for evaluation across Korea’s development co-operation system. It will also require a re-think of whether Korea has the right balance between strategic and project‑level evaluations, and how it might deepen the involvement of key partners and stakeholders to improve quality.

Korea is strengthening its evaluation system, including through external oversight

Evaluation was identified as a key challenge in the DAC’s first peer review of Korea (OECD, 2012). The committee recommended that Korea strengthen the independence and procedures of the Committee for International Development Cooperation’s (CIDC) sub-committee on evaluation; improve ongoing monitoring during project implementation; improve ex-post evaluation; strengthen capacities and delegate authority to support critical evaluation in field units; and systematically integrate lessons from evaluations into future programmes (Annex A).

Korea has made progress against this recommendation. For example, since the 2012 review, the CIDC has decided to strengthen its evaluation sub-committee by extending membership to both public and private representatives, improving scope for consultation and contestability in decision making. The sub-committee has since improved coherence across evaluations by introducing a unified standard based on DAC guidance for both grants and loans. In addition, the Framework Act (National Assembly, 2010) now requires each implementation agency to submit an annual plan for self-evaluation to the sub-committee for approval. It is now possible to outsource evaluations or include external experts within in-house evaluation teams to increase capacity. In parallel, the number of ex-post evaluations has also increased. Since 2016, baseline studies are compulsory and ex-post evaluations are planned for all projects where such studies have taken place.

Korea should consider how to take a more strategic approach to its evaluations

At a more strategic level, the sub-committee has started to commission three to four of its own evaluations annually in an effort to look across agency operations, choosing projects where it considers there is a need for urgent reform.3 This represents a potentially interesting shift, not least because it helps Korea to take a more strategic view of development co-operation results and effectiveness. In the future, the development of Korea’s results monitoring framework will provide a stronger basis from which to carry out more strategic evaluations for overall learning and accountability purposes. In turn, if the monitoring systems work well, Korea will not need to continue its current practice of evaluating all initiatives, freeing up capacity to increase the focus on evaluations most likely to affect strategic decision making. By June 2017, 35 agencies had submitted 83 self-evaluation reports (concerning 10 loan projects and 73 grant projects) to the evaluation sub-committee for review.

There is also a new requirement that all evaluations be made public (see Chapter 1), with a summary of evaluation results reported annually to the National Assembly. In parallel, external oversight of the development programme has also improved. In May 2017, Korea’s Board of Audit and Inspection published its first comprehensive report on Korean ODA (BAI, 2017). Together, these initiatives are helping to develop a stronger evaluation culture within the Korean development co-operation system, which in turn increases publicly available information on the aid programme and satisfies demand for improved aid quality.

Stakeholder consultation and consistent approaches across ministries are still challenges

There is considerable variation in the way that different government ministries carry out evaluations, and in how they comply with the CIDC’s requirement to make evaluations public. For example, some evaluations are published in full and others in summary form; there are no standard selection criteria across agencies managing ODA activities; and it is not clear whether all evaluations are published. In addition, there is no formal system for publicly responding to evaluations. Korea-based civil society organisations also noted that the quality of evaluations varied significantly across Korean government agencies, with many failing to comply with central government guidelines and standards (KCOC, 2017).

Greater involvement of stakeholders – including implementing partners, partner governments, community organisations, and the direct recipients of Korean aid – would help Korea to strengthen mutual accountability in evaluation and buy-in for resulting recommendations. It would also help Korea to communicate more transparently and credibly on the results of its aid. A good starting point would be to centralise all evaluations on the ODA Korea website.4

Institutional learning

Peer review indicator: Evaluations and appropriate knowledge management systems are used as management tools

Korea has strengthened its evaluation feedback systems and the impacts on programme management and accountability have been positive. Korea now needs to pay more attention to centralising lessons across its entire system. Furthermore, Korea could do more to proactively share institutional learning with partners.

Korea is using a variety of innovative means for promoting institutional learning and ensuring lessons are learned

Since its first DAC review in 2012, Korea has prioritised institutional learning, particularly in KOICA and KEXIM offices, where evaluation processes have been strengthened and lessons are systematically fed back into future programme design and delivery. In addition, all Korean ODA implementing agencies must submit an annual plan for self-evaluation to the CIDC’s evaluation sub-committee. In this plan, results of evaluations are presented in summary form, along with a plan to integrate learning into future activities. Following review by the sub-committee, the plan goes to the CIDC for approval before being handed back to the agencies for implementation. The sub‑committee then undertakes biannual progress checks to ensure that evaluation lessons help to guide future programming and management decisions. To strengthen this learning culture, both the Office of the Prime Minister and KOICA run ODA evaluation training programmes.

KOICA has been tracking evidence of the uptake of evaluation findings since 2009. In 2014, it introduced a new system to record progress on organisational targets for learning from evaluation findings. Since 2009, KOICA has proactively applied its guideline, which stipulates that implementing agencies must create a plan to incorporate evaluation results into future activities (KOICA, 2008).

In another interesting development, KEXIM’s approvals process must now take into account lessons from similar initiatives before a new loan is allowed to proceed. In addition, the bank shares the results of loan programme evaluations with internal and external stakeholders through its annual evaluation report.

Each of Korea’s many implementing agencies has its own system for sharing knowledge and lessons, although the online project-management tool for grants now allows staff from all ministries and implementing agencies to consult resources across the system. Targeted knowledge-sharing efforts across agencies do occur, but tend to be ad-hoc (e.g. brown bag lunches) rather than formal attempts to synthesise lessons and disseminate knowledge. Recently, both KOICA and KEXIM have introduced new ways of disseminating evaluation lessons. These include short films,5 on-line learning forums and “card” news – a description of evaluation methods used, often with illustrations or graphics to catch the reader’s attention (see Box 6.2).

Box 6.2. Korea’s card news for evaluations

KOICA and EDCF regularly use “card news” – short, illustrated summaries – to explain key evaluation lessons and practice to staff and the public. For example, the above illustration explained how sanitation programmes in South East Asia and Africa used randomised controlled trials to set goals and improve performance. The accompanying text explains how KOICA used the findings from these trials to help establish its goal of 80% toilet coverage in the Democratic Republic of Congo, including through designing a competition between villages to incentivise uptake, with prizes ranging from additional water infrastructure to building a village monument.

Source: KOICA (2016), “KOICA평가심사실_지식충전 2화: 성과중심 M&E 체계 구축”, [Card News 2 impact evaluation of sanitation programs], Korea International Development Cooperation Agency, Seoul, www.odakorea.go.kr.

Korea could do more to harness development knowledge and share lessons proactively, building on innovative work in communicating key achievements

Building on these many good practices, Korea could do more to harness knowledge across its development co-operation system. For example, as noted above, while evaluations are generally published, they are not always published in full or in a central place. The ODA Korea website provides a useful venue to ensure consistency in the publication of evaluations and dissemination of lessons. In improving its system further, it will be critical for Korea to build on its existing learning partnerships (e.g. with partner governments, NGOs and international organisations), including by extending its combined training programmes and joint monitoring and evaluation efforts with key stakeholders. Current examples of good practice include efforts to significantly increase the number of joint evaluations with partner countries, other DAC donors (most recently Germany, Japan and the United Kingdom) and multilateral development banks to build capacity and support mutual learning.

Bibliography

Government sources

BAI (2017), “공적개발원조 (ODA) 추진실태 보도자료” [Summary of Report on Korea’s Official Development Assistance], Board of Audit and Inspection Office of Korea, Administrative Safety Audit Bureau, in Korean only, www.bai.go.kr/bai, accessed 21/07/2017.

GoK (2017), “Memorandum of Korea”, OECD DAC Peer Review 2017, Government of Korea, Seoul (unpublished).

KEXIM (2016), “EDCF results framework (2016-2020) by sector”, (unpublished), KEXIM, Seoul.

KOICA (2016a), “Mid-term sectoral strategy (2016-2020)”, results framework, Korea International Development Cooperation Agency, Seoul, www.koica.go.kr/dev/download.jsp?strFileSavePath=/ICSFiles/afieldfile/2017/06/01/10.pdf&strFileName=KOICAs%20Mid-term%20Sectoral%20Strategy%20Brochure.pdf.

KOICA (2016b), “Baseline study guidelines”, KOICA Office of Assessment and Evaluation, Seoul, www.koica.go.kr/download/2016_eng.pdf.

KOICA (2008), “Development co-operation evaluation guideline”, Korea International Development Cooperation Agency, Seoul, www.koica.go.kr/download/eng_evaluation_guide.pdf.

National Assembly (2010), “Framework Act on International Development Cooperation”, Act No. 12767, revised Oct. 15, 2014, National Assembly of the Republic of Korea, Seoul, https://elaw.klri.re.kr/eng_mobile/viewer.do?hseq=33064&type=part&key=19, accessed 02/08/2017.

Other sources

KCOC (2017), “Korean civil society report for the OECD DAC peer review 2017”, redi.re.kr/.../ODA-Watch-ReDIShadow-Report-to-OECD-DAC-Midterm-Review.pdf.

Kim, B. (2017), “[특검 수사결과] 외교라인, 금융권, 공공기관까지 ‘최순실의 인사전횡’”, [Choi involved in a Myanmar diplomatic scandal (short form title)], Newspim webnews, Seoul, www.newspim.com/news/view/20170306000225.

Moon, K. (2016), “South Korea’s shamanic panic: Park Geun-hye’s scandal in context”, Foreign Affairs magazine, 1st December 2016, Council on Foreign Relations, Tampa, Florida, www.foreignaffairs.com/articles/south-korea/2016-12-01/south-koreas-shamanic-panic.

OECD (2017), “Strengthening the results chain: discussion paper: synthesis of case studies of results-based management by providers”, OECD, Paris, www.oecd.org/dac/peer-reviews/results-strengthening-results-chain-discussion-paper.pdf.

OECD (2016), “Results-based decision making in development co-operation: providers’ use of results information for accountability, communication, direction and learning”, survey results, August 2016, OECD, Paris, www.oecd.org/dac/peer-reviews/Providers'_use_of_results_information_for_accountability_communication_direction_and_learning.pdf.

OECD (2012), OECD Development Assistance Peer Reviews: Korea 2012, OECD Publishing, Paris, http://dx.doi.org/10.1787/9789264196056-en.

Notes

1. In Cambodia, KOICA and KEXIM staff were applying the results framework for grants and loans, working with the national government to agree selection of indicators in the design phase and using the framework to guide monitoring and evaluation efforts. While the team noted some implementation challenges, these were due to recent capacity gaps following the departure of key technical expertise and were described as a temporary problem (see Chapter 4 and Annex C).

2. While most of the indicators in Korea’s results framework are defined in terms of outputs (e.g. the number of education materials distributed), Korea does not yet distinguish output from outcome indicators.

3. Examples of CIDC’s recent strategic evaluations include those on country partnership strategies to identify lessons and policy recommendations for future strategies; meta evaluations based on internal evaluations by ODA execution agencies to explore options for improving internal evaluations; and an evaluation of Korea’s ODA procurement systems.

4. See the ODA Korea website at www.odakorea.go.kr/eng.