Employment and Social Development Canada (ESDC), the federal ministry with responsibility for employment insurance and active labour market policy (ALMP), is responsible for policy decisions and provides funding to provinces and territories (PTs), which are required under the labour market transfers to consult annually with labour market stakeholders in their respective jurisdictions to inform the appropriate mix of programmes to support their local populations. The Labour Market Development Agreements (LMDAs) are the largest ALMP package in Canada. They provide employment support services and training, which together accounted for around CAD 2 billion of the total 2019 spending of CAD 5 billion on ALMPs. A highly capable analytical team within ESDC has conducted impact assessments of the LMDAs since 2010, demonstrating that ALMPs offer value‑for-money to the taxpayer. Analysts have worked closely with policy colleagues and officials from the PTs to ensure evaluation plans are agreed collaboratively.

Assessing Canada’s System of Impact Evaluation of Active Labour Market Policies

2. Delivery and analysis of active labour market policies in Canada

Abstract

2.1. Introduction

In 2019, prior to the pandemic, Canada’s federal government invested around CAD 5 billion in active labour market policies (ALMPs) to help individuals find work. Employment and Social Development Canada (ESDC) is the government ministry with federal responsibility to improve the standard of living and quality of life for its citizens via the promotion of a highly skilled labour force and an efficient and inclusive labour market. Given its mandate, ESDC provides a portfolio of programmes that aim to improve skills development in Canada. The largest programmes take the form of transfer payments to provinces and territories via the Labour Market Transfer Agreements (LMTAs), which encompass two distinct funding streams:

The Labour Market Development Agreements (LMDAs) provide eligible individuals with programmes such as skills training, recruitment and start-up subsidies, direct job creation and employment support services (including employment assistance services providing lighter touch interventions such as employment counselling, job search assistance and needs assessments). Eligible participants must be actively claiming employment insurance, have previously completed an employment insurance claim in the last five years, or have minimum employment insurance premium contributions in at least five of the previous ten years. Employment assistance services are open to all Canadians, regardless of previous work and contribution histories.

The Workforce Development Agreements (WDAs) fund training and employment support for individuals and employers regardless of their employment status, including those who have no ties to employment insurance. The WDAs support individuals with weaker labour force attachment and include specific funding targeted at persons with disabilities. They are also used to support members of underrepresented groups (such as Indigenous peoples, youth, older workers, and newcomers to Canada). The agreements also allow provision of supports to employers seeking to train current or new employees.

In 2019, Canada transferred CAD 2.35 billion in funding to PTs via the LMDAs, covering 630 000 clients and 970 000 interventions (ESDC, 2021[1]). Its WDAs provide annual funding of CAD 720 million, and a further annual top-up of CAD 150 million (CAD 900 million spread over six years from 2017/18 to 2022/23). Due to the nature of this funding and the flexibility in its delivery, ESDC do not collate participant data for the WDAs in the same manner as for the LMDAs. Some other smaller funding streams also exist to deliver ALMPs. Most notably, the federal Indigenous Skills and Employment Training Program provides annual funding of CAD 410 million from 2019 to 2028 (ESDC, 2020[2]) for ALMPs that are similar in type to the LMDAs, but targeted at the Indigenous Canadian population.

While the Government of Canada provides funding and sets parameters under the LMDAs and the WDAs, provinces and territories consult with labour market stakeholders in their jurisdictions to set priorities and inform the design and delivery of employment programs and services that meet the needs of their local labour markets. This is subject to the constraints laid down in the legislation and/or in the agreements for the different funding streams. For the LMDAs, this is the 1996 Employment Insurance Act, which governs the types of programmes and services that PTs may offer. Policy makers in ESDC are interested in which of these programmes work and for whom, so that future policy changes are cognisant of the available evidence and deliver the best outcomes for Canadians.

Skills training in Canada is a shared responsibility between the federal government and PTs. At the federal level, programming focuses on issues of national and strategic importance that extend beyond local and regional labour markets. For example, programming to advance research and innovation, to support those further from the labour market and to engage employers in demand-driven training is considered at the federal level. Since devolution of federal training programmes to PTs in the mid‑1990s, PTs have developed expertise in programme design, built up training infrastructure, and established relationships with stakeholders to deliver training aligned with their specific labour market conditions. In addition to programming offered under the LMDAs and WDAs, PTs deliver programming funded from their own revenues.

This report focusses on information from the quantitative evaluation of the LMDAs. There are two principal reasons for this. The first is that it represents the largest and most significant body of work that ESDC undertake with respect to impact evaluation of ALMPs and the LMDAs are the primary funding stream for delivery of ALMPs. The second is that the underlying dataset created for evaluation of the LMDAs – the Labour Market Program Data Platform (see Annex B of ESDC (2020[3])) – and the techniques employed in the LMDA evaluation have been subsequently adopted for use in evaluation of other, smaller funding streams (Indigenous Skills and Employment Training (ESDC, 2020[2]), and the Youth Employment and Skills Strategy (ESDC, 2020[4]; 2020[3])).1 The most significant omission is the lack of evaluation of the WDAs. The WDAs were first implemented in 2017‑18, so it has not yet been possible to complete evaluation on them. However, findings from the third cycle of LMDA evaluation may help inform the effectiveness of certain types of WDA interventions for some participant sub-groups. Separate WDA evaluation is also being conducted. Understanding the effects of WDA programming will be important to ensure that funding decisions on the overall package of support to jobseekers can weigh the pros and cons of the different elements alongside each other.

2.2. Active labour market policy spending

2.2.1. Canada spends less than the OECD average supporting jobseekers

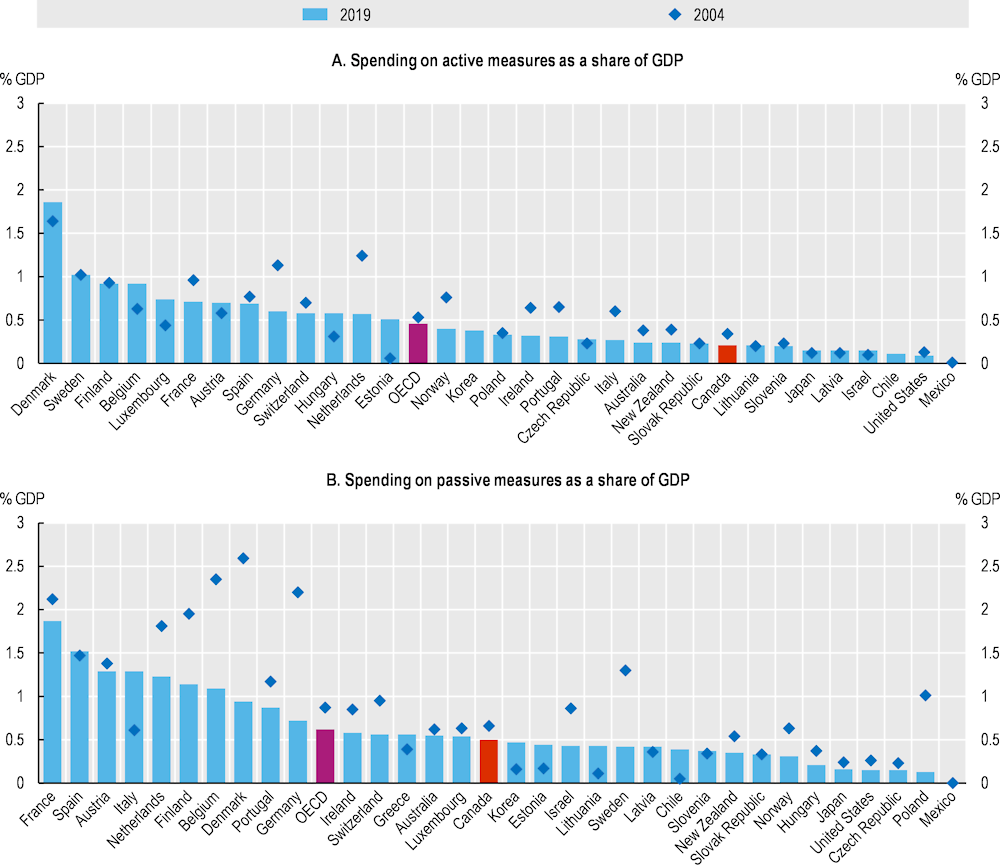

Canada is below the OECD average on both passive and active labour market spending. In 2019 it spent around 0.5% of GDP on passive measures and 0.2% of GDP on active measures (Figure 2.1). In terms of spending per unemployed person on active measures, this placed it 23rd out of the 32 OECD countries for which there are data, a fall from its rank of 18th in 2004. In this context, it is vitally important that the money available is spent on programmes that deliver the best outcomes for individuals. The fact that federal funding for the LMDAs has been fixed in nominal terms since their inception may explain in part why Canada’s ranking has declined relative to other OECD countries. Explicit top‑ups of funding have to occur in order to increase spending, which means spending does not automatically adjust to changes in demand.

Figure 2.1. Canada spends 20% less than the OECD average on passive measures and 50% less on active measures

Note: OECD is an unweighted average. 2019 data for Australia and New Zealand for employment incentives and for passive measures refers to budget year July 2018 to June 2019 and not July 2019 to June 2020 unlike for the other ALMPs as this category was highly affected by the exceptional measures taken to address the challenges of COVID‑19. Similarly data for passive measures for the United States refers to 2018.

Source: OECD Database on Public expenditure and participant stocks on LMP, http://stats.oecd.org//Index.aspx?QueryId=8540.

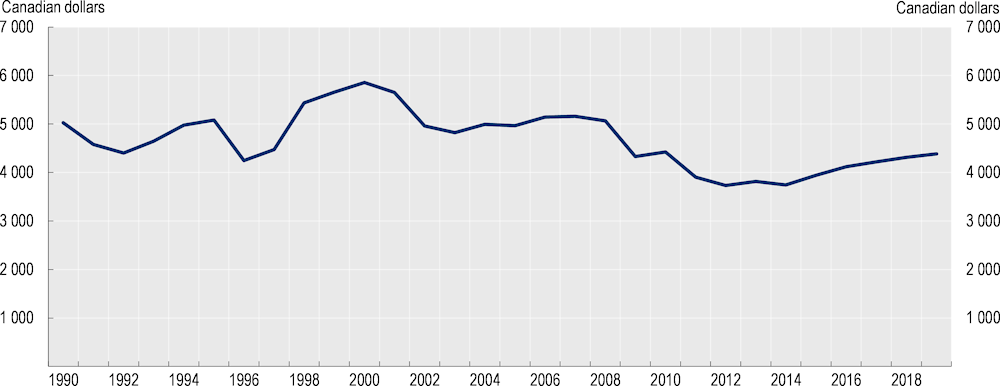

These dynamics can be seen clearly when real spending on active measures per unemployed jobseeker over time is analysed (in terms of 2020 price levels). From the mid‑1990s Canada spent around CAD 5 000 per unemployed jobseeker on active measures. Following the 2008 Financial Crisis this reduced to around CAD 4 000 and has never really recovered. In the decade to 2019, spending was some 22% lower per person than the decade to 2008 (Figure 2.2).

Figure 2.2. ALMP spending per unemployed person in Canada has fallen in the decade to 2019

Note: Spending deflated using the consumer price index (CPI), taking 2020 as the base year.

Source: OECD Databases: Public expenditure and participant stocks on LMP, http://stats.oecd.org//Index.aspx?QueryId=8540 for spending, Main Economic Indicators Publication http://stats.oecd.org//Index.aspx?QueryId=17074 for CPI and LFS by Sex and Age http://dotstat.oecd.org//Index.aspx?QueryId=9571 for unemployed persons.
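The deflation described in the note to Figure 2.2 can be sketched as follows. This is a minimal illustration, not the calculation behind the figure: the function name and all input values are hypothetical, chosen only so the arithmetic is easy to follow. It assumes nominal spending, a CPI series and unemployment counts are available by year.

```python
def real_spending_per_unemployed(nominal_spending, cpi, unemployed, base_year=2020):
    """Return {year: spending per unemployed person, in base-year prices}.

    Nominal spending is scaled by CPI(base_year) / CPI(year) to express it
    in base-year prices, then divided by the number of unemployed persons.
    """
    base_cpi = cpi[base_year]
    return {
        year: spend * base_cpi / cpi[year] / unemployed[year]
        for year, spend in nominal_spending.items()
    }


# Hypothetical inputs (NOT taken from the OECD databases cited above).
nominal_spending = {2010: 4.0e9, 2020: 5.0e9}   # CAD, current prices
cpi = {2010: 80.0, 2020: 100.0}                 # index, 2020 = 100
unemployed = {2010: 1_250_000, 2020: 1_000_000}

real = real_spending_per_unemployed(nominal_spending, cpi, unemployed)
for year in sorted(real):
    print(year, round(real[year]))
```

With these invented inputs, the 2010 figure is lower in real terms per person than the 2020 figure even after prices are adjusted, because more people were unemployed in that year.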

2.2.2. A range of ALMPs are offered to Canada’s citizens via the LMDAs

The LMDAs are designed to help eligible clients into employment and to secure better jobs. To do this, they comprise a combination of different programmes to meet different needs. Table 2.1 maps the different programmes according to the type of service. The only notable omission from the main ALMP categories is category five, supported employment and rehabilitation. Programmes in this category are provided under a separate set of funding in the Opportunities Fund for Persons with Disabilities.

Table 2.1. The LMDAs offer a basket of different ALMPs

| LMDA Name | ALMP Categorisation | Description |
|---|---|---|
| Targeted Wage Subsidy (TWS) | Employment Incentives (Category 4) | Encourage employers to hire individuals who they would not normally hire in the absence of a subsidy |
| Self-Employment Assistance (SE) | Start-up Incentives (Category 7) | Help individuals to create jobs for themselves by starting a business |
| Job Creation Partnerships (JCP) | Direct Job Creation (Category 6) | Provide individuals with opportunities through which they can gain work experience that leads to on-going employment |
| Skills Development (SD) | Training (Category 2) | Help individuals obtain skills, ranging from basic to advanced skills through direct assistance to individuals |
| Employment Assistance Services (EAS) | Placement and Related Services (Category 1) | Provide employment services such as counselling, developing a career or training plan, and job search assistance |

Note: Classification according to the OECD LMP database; see https://www.oecd.org/els/emp/Coverage-and-classification-of-OECD-data-2015.pdf.

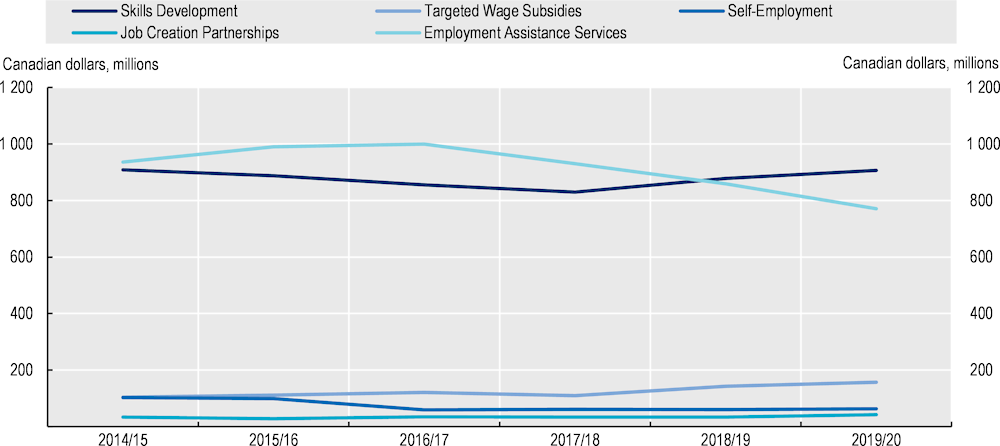

2.2.3. Employment Assistance Services and Skills Development comprise the majority of the ALMPs offered

In the five years to 2019/20, Employment Assistance Services and Skills Development accounted for 88% of total spending on LMDAs (Figure 2.3).2 Relative to other OECD countries, Canada has a strong focus on these measures in the basket of ALMPs it offers. It ranks fourth out of 32 OECD countries when looking at employment support services and training as a percentage of total ALMP spending. When looking at training in isolation it ranks fifth (OECD, 2021[5]).3 It therefore has a relatively strong focus on supporting individuals to look for work and improve their search strategies, and on helping them to improve their skills to secure better paid work. Employment Assistance Services serve as the gateway to the extra programmes that PTs offer. All unemployed individuals, or those employed or underemployed looking for a better job, have the opportunity to meet with a job counsellor who helps to provide them with a structured approach to their job seeking. Counsellors can use these meetings to determine need and create an “action plan” with individuals, to guide them in their job seeking and assess the need for participation in other ALMPs. Skills Development is the ALMP that is utilised most often to help jobseekers improve and augment their skills and find new employment. In 2019/20 there were some 170 000 training-related interventions of this type, compared to 25 000 for the other programmes combined.

Looking at shares of LMDA programme spending, and excluding Employment Assistance Services because of their universal nature, Targeted Wage Subsidies have seen the largest growth, from 9% in 2014/15 to 13.4% in 2019/20. Job Creation Partnerships’ share has grown by 24% and represented some 3.6% of spending by 2019/20. Both Skills Development and Self-Employment have seen reductions in their share of spending. While Skills Development still receives the lion’s share of the combined programme spending, this relative shift towards the other programmes is at least consistent with the evidence from Canada that in value for money terms they offer particularly good returns to society (ESDC, 2017[6]).

Figure 2.3. Employment Assistance Services and Skills Development comprise the majority of the spending

Note: Author’s aggregation of administrative information from annual employment insurance Monitoring and Assessment reports.

Source: Employment and Social Development Canada, (ESDC), employment insurance Monitoring and Assessment Report, (2016‑2021).

2.3. ALMP effectiveness

To scrutinise the effectiveness of the ALMPs that ESDC offers, it conducts extensive evaluation of the LMDAs and their underlying ALMPs. This incorporates a range of outcome indicators to inform their impact on labour market attachment. These indicators include the likelihood of employment, earnings in employment and subsequent receipt of employment insurance and social assistance. Comparing the combination of these indicators for participants and non-participants with similar characteristics, alongside data on costs of delivering the programmes, allows ESDC to determine how much value for money ALMPs provide.
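The logic of comparing participants with similar non-participants, and then setting the estimated gain against delivery costs, can be illustrated with a deliberately simplified sketch. It is not ESDC’s estimation method, which is not described in this section: the sketch uses exact matching within cells of identical characteristics as a stand-in, and every record, cell label and cost figure is hypothetical.

```python
from collections import defaultdict


def stratified_impact(records):
    """Average participant-minus-comparison outcome gap.

    `records` holds (cell, participated, outcome) tuples, where `cell`
    labels a group of individuals with the same observed characteristics.
    Gaps are computed within each cell and weighted by the number of
    participants in that cell.
    """
    cells = defaultdict(lambda: {"treated": [], "comparison": []})
    for cell, participated, outcome in records:
        cells[cell]["treated" if participated else "comparison"].append(outcome)

    total_gap, total_treated = 0.0, 0
    for groups in cells.values():
        treated, comparison = groups["treated"], groups["comparison"]
        if not treated or not comparison:
            continue  # no like-for-like comparison available in this cell
        gap = sum(treated) / len(treated) - sum(comparison) / len(comparison)
        total_gap += gap * len(treated)
        total_treated += len(treated)
    if total_treated == 0:
        raise ValueError("no cell contains both participants and non-participants")
    return total_gap / total_treated


# Hypothetical annual earnings (CAD) for illustration only.
records = [
    ("under_30", True, 30_000), ("under_30", True, 34_000),
    ("under_30", False, 28_000), ("under_30", False, 30_000),
    ("30_plus", True, 40_000), ("30_plus", True, 40_000),
    ("30_plus", False, 40_000), ("30_plus", False, 38_000),
]
cost_per_participant = 1_500  # hypothetical delivery cost, CAD

impact = stratified_impact(records)
net_benefit = impact - cost_per_participant
print(impact, net_benefit)
```

A full value-for-money assessment would also account for the other indicators listed above (employment insurance and social assistance receipt) and for outcomes over several years; the single-year earnings comparison here only shows the structure of the calculation.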

2.3.1. ALMPs are effective, but with some variation between and within programmes

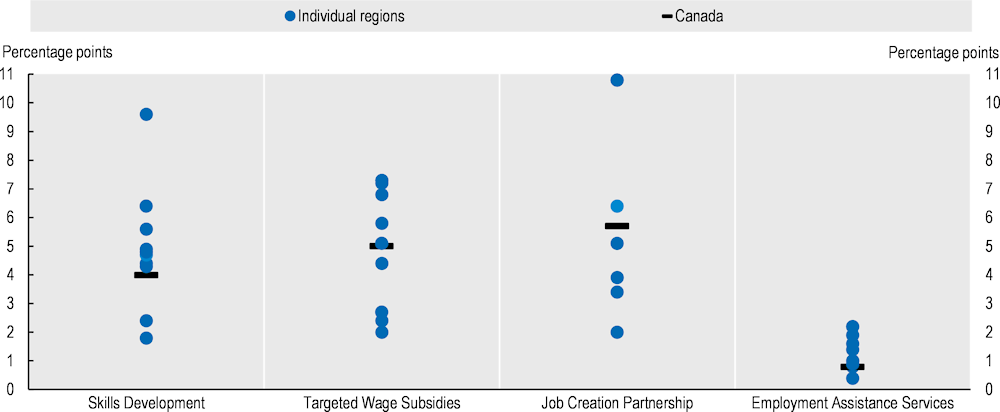

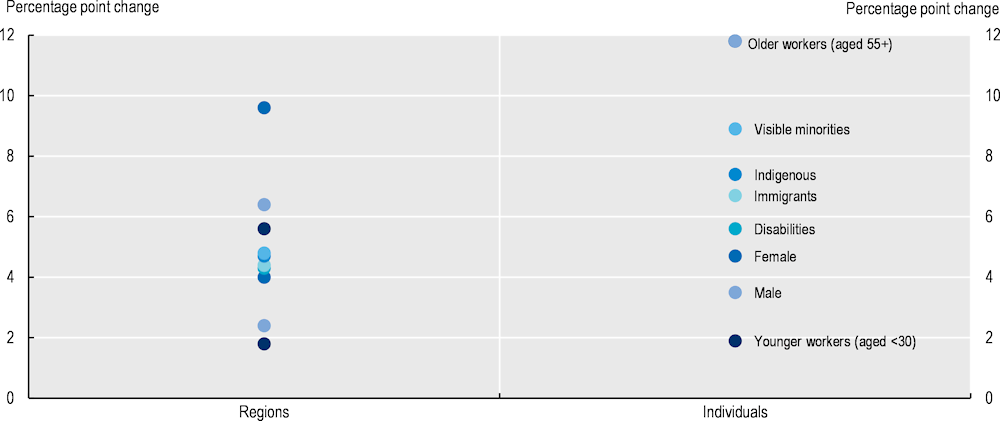

The impact assessments conducted by ESDC demonstrate that programmes are effective in helping individuals to advance in the labour market (ESDC, 2017[6]). However, there is variation in effectiveness between programmes (for example, Skills Development has a larger average employment effect than Employment Assistance Services) and variation within the same programme across different regions (for example, Skills Development employment effects vary from 2 percentage points to 10 percentage points across PTs).

Figure 2.4 shows that, for effects on the incidence of employment, differences between PTs for the same programme are larger than the average national differences across programmes. Similar patterns hold for the other outcome variables evaluated.

Figure 2.4. There is greater variation between regions in the same programme than there is across programme types

Note: Results for provinces and territories (PTs) are contained separately in the individual reports. They have been compiled here and each individual PTs estimate is a separate data point. The series for Canada has been extracted from the aggregate evaluation report on Canada.

Source: Individual Employment and Social Development Canada (ESDC) impact assessment reports on provinces and territories (2017‑2018), available at www.canada.ca.

Variation is also evident when looking into heterogeneous effects across groups of individuals. In fact, there is greater variation across different groups of people than there is across different regions (Figure 2.5). There could be numerous reasons to expect different programme impacts for different groups of individuals, depending on their underlying challenge in the labour market. For instance, if older workers were discriminated against in the labour market, then a skills development intervention that demonstrated their proficiency in a particular subject might unlock more job opportunities for them relative to the average participant, if it removed that discrimination in addition to adding skills. Without specific and detailed evaluation of the underlying causal mechanisms of these effects on sub-groups, though, it is impossible to be precise about why the differences occur.

Presently, the analysis conducted by ESDC (2017[6]) cannot distinguish to what extent these differences in outcomes between regions are driven by differences in policy implementation, differences in the eligible population or differences in regional labour markets. This could be addressed by looking at whether treatment effects differ by PT in the aggregate assessment for Canada, or by weighting each PT’s outcomes to a Canadian average composition of jobseeker groups. Doing this would provide further insights on the extent to which policy delivery choices drive outcomes and could lead to the development of an analytical programme of work designed to provide further evidence on this.
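The second suggestion, weighting each PT’s outcomes to a common national mix of jobseeker groups, can be sketched as below. The group labels, effects and shares are invented for illustration and the function name is hypothetical; the sketch only shows why reweighting helps to separate compositional differences from everything else.

```python
def weighted_effect(group_effects, group_shares):
    """Average group-level effects using the supplied group shares."""
    if abs(sum(group_shares.values()) - 1.0) > 1e-9:
        raise ValueError("group shares must sum to 1")
    return sum(group_effects[g] * share for g, share in group_shares.items())


# Hypothetical employment effects (percentage points) by jobseeker group.
# Both PTs are given identical group-level effects on purpose.
effects_pt_a = {"youth": 6.0, "older": 2.0}
effects_pt_b = {"youth": 6.0, "older": 2.0}

# Hypothetical participant composition in each PT and nationally.
shares_pt_a = {"youth": 0.75, "older": 0.25}
shares_pt_b = {"youth": 0.25, "older": 0.75}
shares_national = {"youth": 0.5, "older": 0.5}

raw_a = weighted_effect(effects_pt_a, shares_pt_a)          # own composition
raw_b = weighted_effect(effects_pt_b, shares_pt_b)
std_a = weighted_effect(effects_pt_a, shares_national)      # national composition
std_b = weighted_effect(effects_pt_b, shares_national)
print(raw_a, raw_b, std_a, std_b)
```

In this constructed case the raw gap between the two PTs disappears once both are weighted to the national composition, so it is entirely compositional. Any gap that remained after reweighting would point towards delivery choices or local labour market conditions, although this simple comparison could not tell those two apart.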

Figure 2.5. There is a greater variation in outcomes for different groups of people than there is for different regions

Note: Regions taken from Cycle II labour market development agreement (LMDA) analysis, for individual cohorts 2002‑05. Individuals taken from Cycle III analysis for individual cohorts 2010‑12. Aggregate impacts for Canada are the same across these two reports, though the breakdowns reported here may not be. Neither report series has both breakdowns contained within. The comparison between them is made for illustration only. “Visible minority” refers to whether a person identifies as a visible minority, as defined by the Employment Equity Act. The Employment Equity Act defines visible minorities as “persons, other than Aboriginal peoples, who are non-Caucasian in race or non-white in colour”. “Indigenous” indicates whether the client identifies themselves as of Aboriginal origin. “Disability” refers to individuals with a self-identified disability.

Source: Individual Employment and Social Development Canada (ESDC) impact assessment reports on provinces and territories (2017‑2018) available at www.canada.ca for regions and ESDC (2021), Analysis of Employment Benefits and Support Measures (EBSM) Profile and Medium-Term Incremental Impacts from 2010 to 2017 (unpublished), for individuals.

Knowing to what extent differences between regions are driven by compositional differences in the eligible jobseekers is important in the Canadian context, given the considerable flexibility afforded to the PTs to design and deliver programme supports and services. Differences in policy design and implementation across PTs may play a role in how effective programmes are at securing better work for individuals. These issues are pertinent in most OECD countries: even where policies are designed nationally, there is room for significant variation in how they are implemented in different localities, even when they are delivered by one central agency.

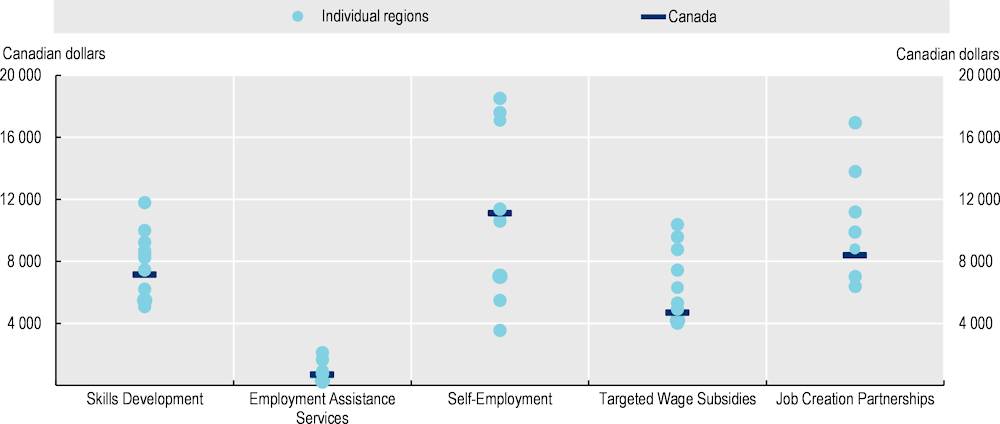

Differences in the delivery costs of PTs are large (Figure 2.6). Aside from broad legislative definitions of programme types, PTs have the flexibility to choose the precise nature and content of these programmes. In addition, programmes may be delivered centrally by regional administrations, or contracted out and delivered by third party partners. Therefore, the potential for significant differences in programme delivery between PTs is large.

Skills Development has the smallest proportional difference between the lowest and highest PT average cost; even here, it costs 2.3 times as much to deliver the programme in the PT with the highest average cost compared to the PT with the lowest average. The important point is not the comparison of costs per se – a higher delivery cost may be more than justified if the outcomes are better, or if redistribution is a particular concern. But without knowing how the composition of programme delivery affects outcomes, it is difficult to give any particular insight into how programmes should be designed to provide better outcomes for individuals. For example, in the United Kingdom, evaluation is being undertaken on the Work and Health Programme to determine whether it is possible for government to provide services as effectively as third party providers (DWP, 2021[7]). This type of analysis can provide more insight into the precise delivery challenges and how to deliver policy optimally.
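The point that a higher delivery cost may be justified by better outcomes can be made concrete by relating cost to impact. The sketch below is illustrative only: the two cost figures are hypothetical values chosen to differ by a factor of 2.3, and pairing them with employment effects of 2 and 10 percentage points is an assumption made for the example, not a finding about any particular PT.

```python
def cost_per_point(avg_cost_per_participant, employment_effect_pp):
    """Delivery cost per percentage point of employment gain."""
    if employment_effect_pp <= 0:
        raise ValueError("effect must be positive for the ratio to be meaningful")
    return avg_cost_per_participant / employment_effect_pp


# Hypothetical PTs: the second costs 2.3 times as much per participant
# but is assumed (for illustration) to achieve a larger employment effect.
low_cost_pt = cost_per_point(4_000, 2.0)
high_cost_pt = cost_per_point(9_200, 10.0)

print(low_cost_pt, high_cost_pt)
```

Under these assumptions the more expensive programme costs less per percentage point of employment gained, which is why cost comparisons alone say little without linked evidence on outcomes.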

Figure 2.6. Cost of programme provision varies significantly by region

Note: Average cost per participant 2002‑05. Estimates for individual provinces and territories (PTs) are extracted from the PT reports. Canada is taken from the aggregate report on Canada.

Source: Individual Employment and Social Development Canada (ESDC) impact assessment reports on provinces and territories (2017‑2018), available at www.canada.ca.

2.4. ESDC’s analytical structure

Conducting analytical research on the LMDAs involves the co‑ordination and co‑operation of a broad set of staff within the ministry. Planning analysis, allocating resources, accessing data and working with PTs mean that an extensive network of interactions and governance is required, to ensure the smooth and efficient conduct of work that is vital to understanding how ALMPs help individuals into work. This section reviews how those resources are organised in ESDC to conduct analysis.

ESDC is a large federal government ministry, headed up by four government ministers and five deputy ministers. Its broad remit is to “build a stronger and more inclusive Canada, to support Canadians in helping them live productive and rewarding lives and to improve Canadians’ quality of life” (ESDC, 2018[8]). This covers responsibility for pensions, unemployment insurance, student and apprentice loans, education savings and wage earner protection programmes and passport services. How any ministry organises itself has implications on the functioning of its programmes and services. This section reviews how ESDC organises the functions that relate to evaluation of its ALMPs and how the teams that do this interact with wider departments and services. It also reviews how this has changed over time. Setting this information out up front will provide a framework with which to contextualise some of the details explored further in the report.

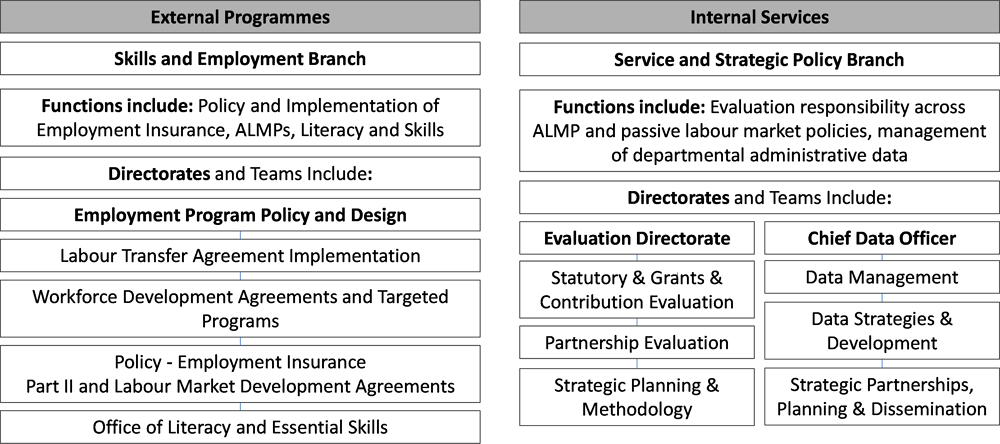

2.4.1. ESDC separates out evaluation from the teams responsible for policy development

ESDC broadly organises itself into two groups, determined by whether the function of the underlying branch is to deliver externally facing work or whether it is internally facing (Figure 2.7). The functions within the external group are focussed on the delivery of programmes, including responsibility for policy development and for managing the implementation of these policies in Canada (incorporating those that are federally delivered and those delivered by PTs). The internal services group primarily delivers functions to support the operation of ESDC and the functions contained within the external programme group. For example, pan-ESDC services such as Chief Audit Executive and Human Resources Services sit within this group.

The division into externally and internally facing groups means that not all of the responsibility for a particular programme lies within the same chain of command. For evaluation work in ESDC, this means that teams that conduct the work, for example on the LMDA, do not sit in the same group or directorate as the one with policy and implementation responsibility for it. The organisation of evaluation sits within a broader federal requirement, via the Treasury Board “Policy on Results”, to implement and maintain a neutral evaluation function.

Figure 2.7. The split of work into external and internal groups means policy spans different organisational jurisdictions

Note: Both the Skills and Employment Branch and the Strategic and Service Policy Branch have more functions and directorates within them. This figure displays only those that concern the discussion of the LMDA. Similarly, the External Programmes group includes: Income Security and Social Development Branch, Office of Disability Issues, Learning Branch, Program Operations Branch, Skills and Employment Branch, Office of Literacy and Essential Skills. The Internal Services group includes: Chief Audit Executive, Chief Financial Officer, Corporate Secretary, Human Resources Services Branch, Innovation, Information and Technology Branch, Legal Services Branch, Public Affairs and Stakeholder Relations Branch, Strategic and Service Policy Branch.

Source: ESDC organisational charts, https://www.canada.ca/en/employment-social-development/corporate/organizational-structure.html.

The Strategic and Service Policy branch delivers functions related to ALMP analysis and data storage

The Strategic and Service Policy Branch integrates strategic and operational policy and service delivery across ESDC. Its responsibilities include the development of economic, social and service strategic policies related to the mandate of the department and it conducts research activities in these areas. It has responsibility for the strategic management of data, for programme evaluation and for intergovernmental and international relations.4 The evaluation function in ESDC exists as a directorate, the Evaluation Directorate, within this branch, as does the Chief Data Officer (CDO) directorate.

Within the evaluation directorate there are three separate divisions covering statutory and grants and contributions evaluation, partnership evaluation and strategic planning and methodology.

The LMDA evaluations are conducted by staff within the partnership evaluation and strategic planning and methodology divisions of this directorate.

The Skills and Employment branch is responsible for policy and programme delivery

The Skills and Employment branch provides programmes and initiatives that promote skills development, labour market participation and inclusiveness, as well as programmes ensuring labour market efficiency. This branch includes the employment programme policy and design directorate. Within this directorate there are divisions that have responsibility for the implementation of the LMTAs, Labour Market Transfer Targeted Programs, and policy on employment insurance benefits and support measures (ALMPs). A data and systems team, which is responsible for uploading LMDA and WDA data, for the quality and integrity of these data, and for documenting data changes over time, is also situated within this directorate.

This corporate structure means that the two different branches of the organisation have to collaborate to bring together policy development and evidence. If corporate organisation was wholly aligned by policy area, then evaluation and policy would sit within the same area (for example, LMDA policy, implementation and evaluation would fall under the same directorate).

The current organisation brings both benefits and challenges. The benefit of a centralised evaluation function within ESDC is that it is easier to co‑ordinate evaluations, ensure the neutrality of the function, share expertise and gain from the concentration of that expertise in a specific area. The challenge is then to ensure that priorities are aligned with the policy and implementation of the specific work area and that evaluation and evidence are properly brought to bear within that policy domain. Duplication of work needs to be constantly reviewed. Separate analytical teams exist within the policy and programme divisions. Without careful co‑ordination of respective remits and priorities, teams may do similar work or overlap in function, reducing the efficiency of the analytical resources deployed.

2.4.2. Teams within the evaluation directorate are defined by function to increase specialisation

The successful delivery of analytical impact assessments of ALMPs over the years has helped the evaluation function in ESDC embed itself as a central part of the policy making process. In recent years, the evaluation directorate has increasingly conducted evaluation internally rather than relying on costly external contracts. This shift allowed the directorate to increase its workforce from around 50 staff in 2013 to about 70 in 2021 while maintaining the same overall level of resources allocated to the evaluation function. Furthermore, this shift allowed it to take on more and varied activities to support policy making within the Department and also to further develop specialised teams within the directorate itself.

Broadly the three main functional areas for evaluation are:

Data preparation (Strategic Planning and Methodology Division) – This area became particularly important prior to the move from survey based analyses to administrative data based analyses. It was the catalyst for that move and remains an integral part of the analytical set-up. This ensures the data provided by CDO are turned into the requisite analytical files for analysis. Extensive data documentation has been produced to ensure business continuity.

Impact analysis (Strategic Planning and Methodology Division) – These teams work directly with the data to apply the statistical techniques needed to produce estimates of programme impacts. The specialisation of these teams allows them to focus on applying the most rigorous and up-to-date techniques to interrogate the data, without having to divert as much expertise to data assimilation and preparation.

Liaison with PTs and qualitative analysis (Partnerships Evaluation Division) – A vital part of the whole analytical process is to liaise with PTs to discuss issues and jointly plan evaluation work. Without this function, the whole process would be untenable. Planning and conducting contextual qualitative analysis to supplement the quantitative impact assessment is important to provide PTs with insight into what works in their province or territory and why.

Increased resource has allowed further specialisation

Following the shift towards the internal conduct of quantitative evaluation activities, the resources available for methodology and data as part of the Evaluation Directorate increased from three people in 2015 to about 10 in 2021. This has allowed them to expand work areas (for example, starting to conduct gender based evaluation) but has also enabled increased specialisation. Dedicated functions have now been carved out for methodology (advising on the tools and techniques to use for impact assessment and providing quality assurance protocols to follow) and data development (turning the data provided by CDO into analytical datasets that are ready to be used for impact assessments, ensuring that comprehensive data documentation and meta-data exist to support their use).

In-house delivery of counterfactual impact evaluation, which started with the LMDAs, has since expanded to the former Youth Employment Strategy as well as the Aboriginal Skills and Employment Training Strategy and the objective is to include the Workforce Development Agreements. The previously outlined split of responsibility, so that the evaluation function is centralised and conducts all of these evaluations in the same directorate, means that organisation, prioritisation and resource considerations can be managed in one division. The challenge is to reach consensus on priorities between the evaluation director and directors of policy and implementation, who sit in different areas of the department.

2.4.3. The establishment of a separate Chief Data Officer function helps to support evaluation

An important element in supporting the evaluation teams is the separation of the corporate function that deals with data management, transformation, integration, development, procurement and dissemination. This organisation also benefits other analytical teams, as it gives analysts a coherent and consistent structure and direction for data management and use.

The Chief Data Officer (CDO) role was created in 2016 to lead the implementation of an enterprise‑wide data strategy focused on unlocking the business value of ESDC’s data assets while protecting the privacy and security of its clients. The enterprise strategy brings a horizontal perspective to ESDC’s management and use of data, empowering data users with the right knowledge, tools and supports. This creates several advantages for ESDC, and specifically for evaluation:

It provides a central point of contact for data transfer requests, streamlining interactions and ensuring a common standard for transfer. In the context of the LMDAs, this ensures that all provincial data are managed and processed according to the same set of rules.

It has created common data access protocols for data users, ensuring consistency of use and enhancing protection of data privacy and security.

Progress has been made in improving access to properly contextualised and curated data, including the introduction of a web-based portal for access requests, and the establishment of a Data Foundations Programme to deliver the enterprise data infrastructure to enable secure and timely access to high quality data (including a data catalogue, enterprise data warehouse, and a data lake).

Teams within the CDO directorate process, transform, standardise and clean data to make it easier to use for users and create common files for data usage.

It fosters collaboration and data stewardship, breaking down silos and encouraging partnerships to maximise the responsible and ethical use of data and of tools and methods for analysis (e.g. machine learning, advanced analytics) across the policy to service continuum (from policy analysis and research, through to service delivery, evaluation and reporting).

2.4.4. Relationships function well between federal government and PTs

The delivery of the LMDAs and the evaluation of ALMPs are the responsibility of PTs. Conducting the evaluation element federally within ESDC (jointly with PTs) therefore requires extensive communication, excellent organisation and collaborative leadership. The relationship between the federal level and PTs in this context is reported to be harmonious and built on trust. Formal governance procedures and honest and accountable leadership have been cited as laying the foundations for this relationship.

The governance procedures in place ensure that all parties have a voice in proceedings (all PTs have an equal vote, so PTs with larger populations do not have greater influence) and that the work plans developed are based on mutually agreed outcomes. An Evaluation Steering Committee, with representatives from all participating PTs and federal officials, decides on all matters relating to evaluation of the LMDA at a working level.

The Forum of Labour Market Ministers, created in 1983, provides a forum for ministers from federal, provincial and territorial levels to discuss high-level issues relating to overall labour market policies and strategies, of which evaluation is one. Its various working groups also allow for information sharing and discussion of issues between PTs and federal officials. This ensures that any issues arising from the evaluation work can be discussed further among senior policy makers.

An important element within this dynamic has been the value of the quantitative analysis in helping to make the case to PTs that they could also benefit from projects led by ESDC with their collaboration. For example, research that demonstrated the value of early interventions with jobseekers (Handouyahia et al, 2014[9]) was considered instrumental in securing broad agreement among PTs on the value of collecting good-quality data, so that policy delivery could be properly evaluated.

Officials from PTs are also grateful for the opportunities that these formal face‑to-face channels provide for more informal networking and peer learning with one another. Because these processes have been partly suspended as a consequence of COVID‑19, there are fewer such opportunities than was previously the case. It will be important to re‑establish them once public health circumstances allow, so that PTs can continue this process of peer learning.

2.4.5. Canada delivers a suite of ALMPs that are underpinned by evidence generated by internally delivered analysis

In conclusion, Canada has a range of ALMPs to help its jobseekers find better work, primarily orientated around employment support services and training to improve skills. However, funding for these programmes has been eroded over the years and Canada spends less than its OECD counterparts. ESDC has established an evidence base showing that its policies are effective and deliver value for money for the taxpayer, although the evidence also shows that effectiveness varies geographically and among different groups of individuals. Much of this evidence has been generated using internal analysis, with resources that ESDC has built up over the years. In line with federal policy guiding the internal conduct of performance measurement and evaluation, ESDC’s evaluation function is centralised within the ministry and sits outside of the policy and programme teams that manage the implementation of the policy. This ensures the neutrality of the function and provides an opportunity to benefit from collective expertise held within the same directorate, so that work priorities can be aligned. For instance, the evaluations of the former Youth Employment Strategy and the Aboriginal Skills and Employment Training Strategy benefit from being conducted by the same teams within the evaluation directorate. As they use the same underlying data to produce the evaluations,5 this avoids the duplication of expertise that would arise if separate teams were to conduct them instead. The expansion of data resources and the establishment of a Chief Data Officer function in ESDC have also allowed the department to streamline data management and adopt a strategic approach to it, facilitating data access across the department and allowing the evaluation teams to focus further on the quantitative analysis. An established system of governance and forums for exchange has facilitated ESDC’s ability to conduct these evaluations jointly with PTs.

References

[7] DWP (2021), Work and Health Programme statistics: background information and methodology, Department for Work & Pensions, United Kingdom, https://www.gov.uk/government/publications/work-and-health-programme-statistics-background-information-and-methodology/work-and-health-progamme-statistics-background-information-and-methodology (accessed on 20 December 2021).

[1] ESDC (2021), 2019/2020 Employment Insurance Monitoring and Assessment Report, Employment and Social Development Canada, http://www12.esdc.gc.ca/sgpe-pmps/p.5bd.2t.1.3ls@-eng.jsp?pid=72896.

[2] ESDC (2020), Evaluation of the Aboriginal Skills and Employment Training Strategy and the Skills and Partnership Fund, Employment and Social Development Canada, https://www.canada.ca/en/employment-social-development/corporate/reports/evaluations/aboriginal-skills-employment-training-strategy-skills-partnership-fund.html.

[3] ESDC (2020), Horizontal Evaluation of the Youth Employment Strategy: Career Focus Stream, Employment and Social Development Canada, https://www.canada.ca/en/employment-social-development/corporate/reports/evaluations/horizontal-career-focus.html.

[4] ESDC (2020), Horizontal Evaluation of the Youth Employment Strategy: Skills Link Stream, Employment and Social Development Canada, https://www.canada.ca/en/employment-social-development/corporate/reports/evaluations/horizontal-skills-link.html.

[8] ESDC (2018), Employment and Social Development Canada 2017/18 Departmental Results Report, Employment and Social Development Canada, https://www.canada.ca/en/employment-social-development/corporate/reports/departmental-results/2017-2018.html.

[6] ESDC (2017), Evaluation of the Labour Market Development Agreements: Synthesis Report, Employment and Social Development Canada, https://publications.gc.ca/site/eng/9.841271/publication.html.

[9] Handouyahia et al (2014), Effects of the timing of participation in employment assistance services: technical study prepared under the second cycle for the evaluation of the labour market development agreements, Employment and Social Development Canada, https://publications.gc.ca/site/eng/9.834560/publication.html.

[5] OECD (2021), Labour Market Programmes Database, https://stats.oecd.org//Index.aspx?QueryId=112084.

Notes

← 1. The Indigenous Skills and Employment Training was formerly called The Aboriginal Skills and Employment Training. The Youth Employment and Skills Strategy was formerly The Youth Employment Strategy. Both of the referenced papers assessed the programmes under their old names.

← 2. This report refers to ESDC even where its functions may have been undertaken by a predecessor ministry prior to its formation.

Not including programme administration. Some PTs will finance programmes directly from this budget, for example internally delivered EAS.

← 3. Using OECD LMP database categories 11: Placement and related services, 20: Training, 40: Employment incentives, 50: Sheltered and supported employment and rehabilitation, 60: Direct job creation, 70: Start-up incentives as the denominator, and categories 11 and 20 for the numerator. Data refer to 2019, except for New Zealand and Australia, for which 2019 data are affected by the onset of COVID‑19 and 2018 data are used instead.

← 4. https://www.canada.ca/en/employment-social-development/corporate/organizational-structure.html.

← 5. The WDA evaluation is conducted separately using survey data, due to current limitations on the administrative data available.