Empowering Young Children in the Digital Age

3. Protecting young children in digital environments

Abstract

This chapter considers policy efforts to protect young children in digital environments, with a specific focus on the role of early childhood education and care (ECEC). Building on the OECD Recommendation of the Council on Children in the Digital Environment (2021), it explores the ways in which three groups of key actors can be supported to fulfil their respective roles. First, the chapter considers policy measures to engage digital service providers in efforts to keep young children safe in the digital world. Next, it examines the role of parents and families, and how governments can best support and advise them about safeguarding young children against digital risks. Finally, the chapter investigates the complex and expanding role ECEC professionals play in supporting young children to navigate digital opportunities and risks, and outlines policy actions underway to do so.

Key findings

The use of digital technologies by young children, under the right conditions, can benefit their learning, development and well-being. However, with these opportunities come expanded risks, some of which young children may be more vulnerable to because of their age and circumstances, such as their specific digital habits or the different ways adults mediate their digital interactions.

Results from the ECEC in a Digital World policy survey (2022) indicate that many countries and jurisdictions see risk-focused policy challenges relating to digitalisation and young children (0‑6 year‑olds) as highly important. While the same is true of risk-focused policy challenges relating specifically to digitalisation and ECEC, governments are generally not prioritising restrictive approaches for the sector.

There is growing recognition of the essential role of digital service providers (DSPs) in providing a safe and beneficial digital environment for children, including 0-6 year-olds. However, concrete policy actions in this area have only recently started emerging. Efforts to protect privacy dominate, particularly through legislation. In contrast, safety-by-design approaches are less common and more likely to be non‑mandatory. Moreover, despite a strong need for strategic leadership and accountability in this area, relevant oversight bodies are not always in place.

In general, guidelines and recommendations published or endorsed by governments to support parents and ECEC professionals more commonly cover risks to young children’s socio-emotional well-being or the amount of screen time than other topics, such as protecting young children’s privacy.

Support and guidance targeting parents of young children cover many topics related to digital safety and come from a variety of sources. Much of the guidance for parents endorsed by governments focuses on risks and restrictive approaches, while public discourse also tends to be biased towards the negative impacts of digital technologies. This emphasis sometimes overlooks the fact that not all risks translate into harms for all children, and it could exacerbate parental anxieties around digital parenting.

Support and guidance targeting ECEC professionals specifically are less common and less comprehensive than those for parents. This likely reflects the fact that technology is already embedded in young children’s home lives while it is still an emerging feature of ECEC environments. Nevertheless, conflicting or incomplete guidance means professionals may adopt different approaches – of varying quality – depending on their own ability and initiative.

Among the specific guidance aimed at ECEC professionals that does exist, there is evidence of messaging that promotes a balance of digital risks and opportunities. However, the misalignment of messaging between the risk-focused supports for parents and the more balanced supports for ECEC professionals has the potential to inhibit constructive collaboration between the two groups of key actors.

Introduction

Technological developments, accelerated by the COVID-19 pandemic, have increased both the intensity and breadth with which young children engage with the digital world. From watching videos on YouTube to playing educational games on mobile applications (apps) and interacting with voice-recognition Barbies, technology is firmly embedded in the day-to-day realities of even the youngest children. These technologies offer many opportunities for alternative and sometimes enhanced forms of learning, communication and play, but they also bring greater exposure to an ever-expanding range of risks. This matters because both children's digital experiences and their vulnerability to risks depend on the age at which, and the circumstances in which, these first experiences take place.

High-level international frameworks exist to help guide governments’ efforts to safeguard children in digital environments. In 2012, the OECD Council adopted the Recommendation of the Council on the Protection of Children Online. Following technological, legal and policy advances, a revised version, the Recommendation of the Council on Children in the Digital Environment (OECD Recommendation), was adopted in 2021. The United Nations Committee on the Rights of the Child’s General Comment No. 25 (2021) on children’s rights in relation to the digital environment and the Council of Europe’s Guidelines to Respect, Protect and Fulfil the Rights of the Child in the Digital Environment (2018) are other notable examples. All of these adopt a children’s-rights perspective, guiding countries to develop coherent policies that support governments, parents, DSPs and education professionals to better protect 0‑18 year‑olds from digital risks while enabling them to benefit from digital opportunities.

While other chapters in this report look into the opportunities digital technologies present to the ECEC sector, this chapter considers governments’ policy efforts to protect and empower young children in the digital environment. First, it explores young children’s engagement with the digital world and the potential risks they encounter. Second, it considers actions taken to better regulate the activities of DSPs whose services and products are used by young children. Next, it examines efforts to support and advise parents and ECEC professionals about how to safeguard young children against digital risks. The chapter concludes with policy pointers to strengthen governments’ efforts to protect young children in the digital age.

Young children in digital environments: Risk, opportunity and challenge

As young children's engagement with the digital world covers more areas of their lives, encompasses a wider range of technologies and increases in intensity, their exposure to risks and potential harms also grows. Young children may be more vulnerable to certain risks simply due to their age and circumstances, including their specific digital habits and the different ways in which DSPs, parents and educators mediate their digital interactions. While not all risks translate into harms for all children, effectively managing the digital risk landscape is an important and pressing policy challenge for today's governments.

Digital risks and opportunities for young children

The omnipresence of technology in 21st century society means that the digital environment is now an established feature of young children’s lives. Recent studies from a range of OECD countries indicate that substantial shares of 0-6 year-olds regularly use digital technologies. For example, in Japan, data from 2018 reveal that while 6% of children under age 1 use the Internet, the share quickly rises to 47% of 2 year‑olds and 66% of 6 year-olds (Cabinet Office of Japan, 2019[1]). In the same year in Canada, 30% of 0-4 year-olds spent 1-2 hours per weekday on a digital device and 33% used digital technology in the hour before bed every or most nights (Brisson-Boivin, 2018[2]). In the United States, parental reports in 2020 showed that 57% of 0-2 year-olds watched YouTube videos online, as did 81% of 3-4 year-olds (Pew Research Center, 2020[3]) (see Chapter 2 for further explorations of these trends).

These figures are likely to have increased: a qualitative study of digital habits in 21 European countries concluded that very young children (0-8 year-olds) have shown the fastest growth in Internet use (Chaudron, Di Gioia and Gemo, 2018[4]). Furthermore, the COVID-19 pandemic increased young children’s use of digital technologies in the home, particularly for entertainment, and is likely to have accelerated uptake in both ECEC and home settings for learning and development (OECD, 2021[5]; Bergmann et al., 2022[6]; Ribner et al., 2021[7]).

There is evidence that young children's use of digital technologies, under the right conditions, benefits their learning, development and well-being. Research undertaken with children across the 0-6 age range indicates that young children's interactions with talking smart toys and voice assistants can support information seeking, early language development and imaginative play (OECD, 2021[8]; Marsh et al., 2018[9]; Charisi et al., 2022[10]). The use of touchscreen technology has been associated with the development of young children's fine motor skills, creativity and a range of positive learning outcomes (Bedford et al., 2016[11]; Xie et al., 2018[12]; Herodotou, 2018[13]). Interactions with social robots may promote social behaviours and problem solving (Charisi et al., 2022[10]). Later chapters consider ways to harness these and other digital technologies to provide high-quality, more equitable and inclusive ECEC (see Chapters 4-8).

But with these opportunities come expanded risks. The OECD's Typology of Risks (2011, updated in 2021) identifies four categories of digital risks for children: content, conduct, contact and consumer. Three further risks cut across these categories: privacy, advanced technology, and health and well‑being (OECD, 2021[14]). The OECD's 21st Century Children project observes that personality factors (e.g. low self-esteem), social factors (e.g. lack of parental support) and digital factors (e.g. weak digital skills) make some children particularly vulnerable to online risks (Burns and Gottschalk, 2019[15]).

While previous OECD work considers digital risks for 0-18 year-olds, the ECEC in a Digital World project focuses on how risks manifest and can be managed specifically for 0-6 year-olds. This is important, as both children's digital experiences and their vulnerability to harms are age-dependent. There is variation even within the 0‑6 year‑old group: research suggests that for children under 2 in particular, the benefits of digital media may be limited and more dependent on adult interaction during digital media use, while negative physical effects on sleep and weight patterns have been identified (Hill et al., 2016[16]). Parental attitudes mirror the increased concern for younger children, even though these concerns are not always evidence-based (see below): in the United Kingdom and the United States, survey data indicate that parents of young children more commonly believe that the risks of technology outweigh the benefits (Ofcom, 2022[17]; Pew Research Center, 2020[3]). At the same time, research on older children (8‑11 year‑olds) suggests that the younger the child, the more prone they are both to overestimate their ability to stay safe online and to lack the concrete skills needed to identify and navigate specific risks (Macaulay et al., 2020[18]).

With the exception of negative implications for children’s sleep, research on the impact of digital technology use on children’s developmental and well-being outcomes across ages is generally inconclusive (see Chapter 2 and Gottschalk (2019[19])). Nevertheless, the 0-6 year-old cohort may be particularly vulnerable to certain risks based on their digital habits. The most popular digital activity for 0-6 year-olds is generally watching videos or television online (Chaudron, Di Gioia and Gemo, 2018[4]; Ofcom, 2022[17]; eSafety Commissioner, 2018[20]; Cabinet Office of Japan, 2019[1]). Other uses such as finding information, listening to music, communicating with family or friends, and playing games are also common. While some of these activities may take place on child-specific services (e.g. YouTube Kids, Wiki for Kids), many occur on platforms not designed for children. This increases potential exposure to age-inappropriate content. The growing prevalence of automatic play functions and algorithmic recommender systems may exacerbate this.

In addition, a substantial share of young children interact with technologies designed for older users. For example, in the United Kingdom, more than one-fifth of parents of 3-4 year-olds said they would allow their child to have a profile on social media before they reached the minimum age (i.e. generally 13 years old, depending on the platform) (Ofcom, 2022[17]). Furthermore, the growth in popularity of voice assistants, wearables, home surveillance technologies and Internet of Things devices in the home means that young children are exposed to increasingly sophisticated forms of adult-centric technology from birth. Young children are particularly likely to attribute anthropomorphic characteristics and agency to such technologies, which makes them more vulnerable to associated risks such as data disclosure, over-trust and over-reliance (Charisi et al., 2022[10]).

Young children most commonly use mobile devices (e.g. tablets or smartphones), but smart televisions, game consoles, and desktop or laptop computers are also popular. First use generally takes place via a parent’s or sibling’s device, which may not have the controls or child-friendly device settings expected on a young child’s device. Nevertheless, in some countries, many children have access to their own device from an early age, particularly tablets, smartphones and increasingly – although still only for a minority – smart toys (Brisson-Boivin, 2018[2]; Ofcom, 2022[17]; eSafety Commissioner, 2018[20]). Furthermore, children quickly become more independent digital users: in the United States, 5-8 year-olds mostly use digital tools independently and qualitative research from across Europe concludes that young children learn how to interact with digital devices individually and autonomously (Pew Research Center, 2020[3]; Chaudron, Di Gioia and Gemo, 2018[4]). Access to personal devices and increased autonomy increase the likelihood of unsupervised online activities.

Table 3.1 offers some examples of how digital risks can manifest for young children. The examples are not exhaustive. Nevertheless, they help illustrate that young children, like children of other ages, face a complex digital risk landscape that extends beyond commonly cited threats such as cyberbullying. For example, while children of any age risk being exposed to age-inappropriate content through their use of digital technologies, 0-6 year-olds are perhaps particularly vulnerable to this risk. This is due to their lower awareness of what is and is not age-appropriate and to the wider scope of content that is inappropriate for this age group. At the same time, conduct risks (i.e. activities in the digital environment whereby children create risks for other children) may be less common among this age group, as they are less present on social media or other tools via which users communicate independently with their peers.

Table 3.1 includes two types of risk, "technoference" (or "phubbing") and "sharenting". Neither is included in the OECD's Typology of Risks, but both are increasingly common phenomena for which there is growing evidence of negative implications, and young children may be particularly exposed to them. For example, research is beginning to reveal the negative impact of technology-related disruptions to parenting behaviours. These disruptions may particularly affect young children, given the importance of frequent and highly sensitive parental interactions for their early development. When it comes to interactions between parents and young children, "technoference" (i.e. everyday disruptions in interpersonal interactions due to the use of digital devices) can lead to low-quality interactions marked by less positive affect, weaker engagement in play and educational activities, and more conflict (Konrad et al., 2021[21]; Kildare and Middlemiss, 2017[22]). In addition, the term "sharenting" refers to a growing trend among parents to share information and photos of their children on social media. Research indicates that a significant number of parents engage in this practice without considering privacy and safety issues and other risks to children's present and future emotional well-being and identity formation (Siibak, 2019[23]). Further research into digital risk manifestations for young children is required to explore these hypotheses further, including research into risks for children of specific ages within the 0-6 age group.

Table 3.1. Examples of digital risk manifestations for young children

| Digital risks | Examples of digital risk manifestations for young children |
|---|---|
| Filter bubbles | Recommender systems within online platforms propose content based on previous consumption. As such, they risk narrowing young children's opportunities to discover different information and new perspectives (Charisi et al., 2022[10]). |
| Harmful/age‑inappropriate content | In one study of user logs on mobile devices, 3-5 year-olds commonly accessed general audience apps such as Cookie Jam and Candy Crush as well as age-restricted apps such as gambling apps (Radesky et al., 2020[24]). A YouTube trend has been identified which sees videos that look to be child-friendly spliced with violent and other age-inappropriate content (e.g. a Peppa Pig video spliced with images of self‑harm) (Zon and Lipsey, 2020[25]). |
| Hacking | In 2018, toymaker VTech reached a settlement with the US Federal Trade Commission following legal action for failing to protect its smart toys from hackers (Zon and Lipsey, 2020[25]). |
| Mistreatment of personal data | A study of the smart toys "Cayla" and "i-Que" found numerous security risks, including data tracking for third parties (Myrstad, 2016[26]). |
| Hidden purchasing | A nationally representative survey of parents of 0-5 year-olds using digital apps in the United Kingdom found that 10% of the children made accidental in-app purchases (Marsh et al., 2018[9]). |
| Aggressive advertising | Ninety-five per cent of a sample of popular apps for 1-5 year-olds were found to contain at least one type of advertising, many of which were classed as "manipulative" (Meyer et al., 2019[27]). |
| Technoference/phubbing | In the United States, 68% of parents reported sometimes feeling distracted by their phone when spending time with their children. This share was 75% among young parents, who were more often the parents of younger children (Pew Research Center, 2020[3]). |
| Sharenting | Increasingly, parents share a large volume of private information about young children, often without consent. This raises potential risks such as identity theft and unauthorised resharing and may inhibit personal identity formation (Zon and Lipsey, 2020[25]). |

Challenges related to managing digital risks for young children

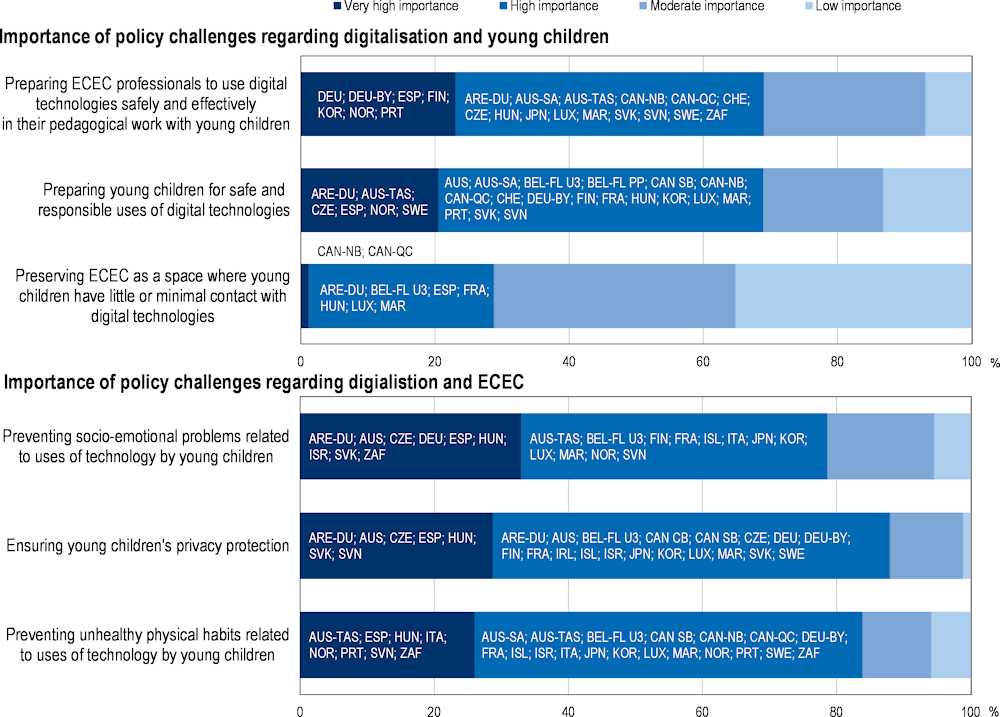

Protecting young children from digital risks is a key priority for governments. In the ECEC in a Digital World policy survey (2022), a large share of governments consistently identified risk-focused policy challenges as being of "very high" or "high" importance (see Figure 3.1). For example, with regard to digitalisation and young children in general, 88% of countries and jurisdictions participating in the survey attributed "high" or "very high" importance to ensuring young children's privacy. The corresponding share was 84% for preventing unhealthy physical habits related to uses of technology, such as negative impacts on sleep, exercise and nutrition. Nearly one-third of participating countries and jurisdictions identified preventing socio-emotional problems related to young children's use of technology as being of "very high" importance. Such problems include, for instance, social isolation, stress, anxiety or harassment.

With regard to digitalisation and the ECEC sector specifically, two-thirds (68%) of participants identified two challenges as being of "very high" or "high" importance: preparing ECEC professionals to use digital technologies safely and effectively in their pedagogical work, and preparing young children for safe and responsible uses of digital technologies (see Figure 3.1). Notably, and in contrast, just over one-quarter (28%) of participants attributed the same level of importance to preserving ECEC as a space where young children have little or minimal contact with digital technologies. This indicates that although governments are highly concerned about the risks related to digitalisation and the ECEC sector, they are generally not pursuing restrictive approaches. Rather, by focusing on promoting safe and responsible use through the sector, many governments are positioning ECEC as an important element in supporting young children to confidently navigate risks in digital environments.

Specific legislation or regulation already exists to protect children from many offline risks (e.g. child labour, sexual abuse and aggressive advertising). Where relevant, this has generally been expanded to cover related risks in the digital environment. However, there is a mounting sense of urgency and complexity when it comes to broader protections for children across the full range of present and future digital risks. This is partly due to the speed of technological and related social developments. At the same time, public discourse also tends to be biased towards the negative impacts of digital technologies, often over‑simplifying research findings and ignoring the fact that not all risks translate into harms for all children (Brito, Dias and Oliveira, 2018[28]; Green, Wilkins and Wyld, 2019[29]).

This bias has allowed a series of myths to emerge, including that young children and technology should not mix and that technology dominates young children’s lives (Plowman and McPake, 2013[30]). Compounded by intensive parenting trends, this has produced a collective anxiety that further disconnects the available evidence on risks from public perceptions and related policy approaches (OECD, 2020[31]; Radesky and Hiniker, 2022[32]). For example, parents often report being more concerned by the amount of time their child spends in front of a screen than by what they are doing on the screen (Livingstone et al., 2018[33]). In reality, the evidence base for the dangers of extended exposure, even for young children, is increasingly brought into question (see Chapter 2). Meanwhile, as Table 3.1 illustrates, potential risks extend far beyond the intensity of exposure. Furthermore, beyond quantity, the quality (i.e. context and content) of young children’s engagement plays a crucial role in their exposure to both risks and benefits (Livingstone et al., 2015[34]).

Figure 3.1. Policy challenges related to digital risks

Notes: Responses are weighted so that the overall weight of reported responses for each country equals one. See Annex A.

BEL-FL PP: pre‑primary education in Belgium (Flanders). BEL-FL U3: ECEC for children under age 3 in Belgium (Flanders). CAN CB: centre-based sector in Canada. CAN SB: school-based sector in Canada.

Source: OECD (2022[35]), ECEC in a Digital World policy survey, Tables B.1 and B.2.

Just as 0-6 year-olds interact with digital technologies differently than older children, thus encountering a particular digital risk landscape, the ways in which adults mediate young children’s digital engagement also differ. Effectively managing risks, even for young children, is not a question of simply eliminating them: a zero-risk digital environment is both unattainable and undesirable. Digital opportunities and risks are intrinsically related, so that maximising opportunities to build digital skills can increase exposure to digital risks, while attempts to minimise risk exposure can limit children’s opportunities (Smahel et al., 2020[36]; Livingstone, Mascheroni and Staksrud, 2015[37]). For young children specifically, trial-and-error approaches – which naturally entail taking risks – are key to developing foundational competencies, including early digital literacy (see Chapter 4). Nevertheless, research indicates that the younger the child, the more adults favour restrictive approaches (Chaudron, Di Gioia and Gemo, 2018[4]). This has the potential to create a vicious cycle, as young children are deprived of opportunities to develop key skills that help them safely navigate the risks they will inevitably encounter on an increasingly regular basis as they age.

Given the specific nature of young children’s experiences of the digital environment, supporting them to safely navigate the digital world requires a targeted approach. However, studies have shown that, compared to other age groups within the 0-18 range, young children’s digital experiences have been neglected in research and policy efforts (OECD, 2020[31]; Burns and Gottschalk, 2019[15]). Similarly, secondary and tertiary levels of education have dominated national digital education strategies, to the neglect of ECEC settings and staff (van der Vlies, 2020[38]). As digital technologies become increasingly embedded in children’s lives, governments have an opportunity to review and redouble efforts to protect young children specifically, including through ECEC policies. This entails policy action aimed at the sector itself, but also at DSPs and parents and families, taking advantage of the interconnections between the three sets of actors to enhance efforts across all areas of young children’s engagement with digital technologies. The rest of this chapter explores what governments are currently doing to safeguard young children in digital environments and where further work could be done.

Digital service providers and young children’s safety in digital environments

DSPs are natural or legal persons that provide products and services electronically and at a distance (OECD, 2021[39]). They may target young children directly (e.g. smart toy companies, educational app designers) or target a wider general audience that counts young children among its users (e.g. video streaming platforms, cloud services). The OECD Recommendation recognises the essential role DSPs play in providing children with a safe and beneficial digital environment (OECD, 2021[39]). The OECD Guidelines for Digital Service Providers set out guidance in four key areas: 1) child safety by design; 2) information provisions and transparency; 3) privacy, data protection and commercial use; and 4) governance and accountability (OECD, 2021[40]). This section considers the policy efforts governments are making across these areas for young children specifically.

The role of digital service providers in protecting young children in digital environments

Approaches to combatting digital risks have traditionally placed responsibility on users themselves, emphasising self-regulation and education as key protective strategies. However, the rapid pace of change in digital technologies and digital practices means that new risks are constantly emerging. At the same time, commercial forces and adult-centricity in the conception of digital technologies often lead to safety and privacy being overlooked at the design stage (Edwards, 2021[41]). This places a heavy burden on individual users, asking them to be resilient to a system that is increasingly difficult to comprehend and often has an inherent disregard for their security (5Rights Foundation, 2019[42]).

An individualistic approach to risk management seems particularly inappropriate for young users. Young children are unlikely to comprehend the simplest notions around digital technology, including what it means to be online, or the difference between advertising and content (Chaudron, Di Gioia and Gemo, 2018[4]; Hartung, 2020[43]). By default, many safety or privacy decisions fall on parents or ECEC professionals. But these actors often lack the resources to fulfil a role which demands increasing amounts of knowledge, skills and time (OECD, 2020[31]; Burns and Gottschalk, 2019[15]).

Engaging DSPs can therefore make important contributions to young children's safety in digital environments. First, it leverages sectoral expertise in the face of increasingly complex digital risks. While parents may favour protective or restrictive approaches, DSPs have a greater capacity to manage risk proactively, anticipating and addressing potential harms ex ante while also optimising opportunities. Second, regulatory and policy actions that place more responsibility on DSPs can help mitigate inequalities. Children, their parents and their educators are equipped with differing levels of skills and resources for taking decisions around safety and privacy, which can lead to unequal levels of protection (Livingstone et al., 2018[44]).

Finally, as ECEC professionals look to engage further with digital technologies, policy action to establish DSPs' responsibilities for young children's safety can provide greater clarity for the sector. Holding DSPs to account for developing high-quality, safe and secure digital content and services for young children reduces the burden placed on ECEC staff responsible for selecting and monitoring the suitability and safety of the technologies that may be used in ECEC settings. Furthermore, such action can establish the conditions under which DSPs engage responsibly with the expansion of digital technology into the ECEC sector.

Safety-by-design and transparent, age-appropriate information

The objectives and success indicators by which digital services and products are designed (i.e. to maximise reach, activity and time spent online) often run counter to the need to keep children safe. Designers themselves recognise that this results in a fundamental conflict of interest between DSPs and their young users (5Rights Foundation, 2021[45]). Furthermore, practices and regulations to protect children from risks in the digital environment are often less developed than those for the physical environment (Livingstone, Byrne and Carr, 2016[46]). In the case of commercial risks, for example, this gap affects even the youngest digital users: a review of apps aimed at 0-5 year-olds found that 95% contained at least one advertisement, including aggressive and covert approaches such as video adverts interrupting play or adverts disguised as games (Meyer et al., 2019[27]). Meanwhile, children, parents and educators often feel frustrated by the complexity of both the interfaces and the language used to inform them of their rights and security (Farthing et al., 2021[47]; OECD, 2020[31]).

To address these challenges, the OECD Guidelines for Digital Service Providers call upon providers to adopt a precautionary approach when designing and delivering services targeted at or potentially used by children. This includes considering children’s safety in the design, development, deployment and operation of products and services. In addition, the guidelines call on DSPs to provide transparent information. This means presenting information (e.g. terms of service, policies, community standards, etc.) to children and their parents that is concise; intelligible; easily accessible; and formulated in clear, plain and age‑appropriate language (OECD, 2021[40]).

Governments can encourage DSPs to adopt approaches promoting safety-by-design and transparent, age-appropriate information through various framework conditions. This includes formal tools such as legislation and mandatory codes of conduct and less formal approaches such as recommendations, best practices, or industry standards and guidelines (Hooft Graafland, 2018[48]). In the ECEC in a Digital World policy survey (2022), 15 of the 37 participating countries and jurisdictions indicated that they had standards related to safety-by-design in place for DSPs whose services or content could be used by young children (Table 3.2). Of these, 9 had formally regulated standards while 11 had guidelines or recommendations. An important minority (5) reported having both formal and less formal efforts in place. Notably, 11 participating countries and jurisdictions reported having no such standards in place, which suggests that many governments have further work to do to shift some of the responsibility for young children’s online safety to industry.

Nevertheless, legislative efforts to enhance children’s online safety at the national level appear to be gaining momentum across the OECD. Although no examples have been identified for this report that focus on digital safety specifically for young children, some countries have introduced legislation to protect children in general, including Germany and Japan, with provisions that take account of children’s age (see Box 3.1). Among other requirements, France’s Parental Control Law (2022) requires DSPs to educate users and parents about the specific risks around early exposure to screens for the youngest children (Government of France, 2022[49]). Still under debate, the United Kingdom’s Online Safety Bill calls for all DSPs to establish risk management practices for children that are differentiated by age (Government of United Kingdom, 2022[50]). The United States’ proposed Kids Online Safety Act also calls for age‑appropriate control measures and information provision, and requires DSPs to engage in transparent reporting and market research disaggregated by age (e.g. 0-5 year-olds) (Senate of United States, 2022[51]).

Table 3.2. Efforts to protect young children in digital environments targeted at digital service providers

Countries and jurisdictions reporting having introduced the following in relation to the role of digital service providers in ensuring a safe digital environment for young children, 2022

| Country or jurisdiction | Standards for providers of digital services and content that may be used by young children | Standards for the processing of young children's data | Oversight bodies with specific responsibilities for monitoring the protection of young children in the digital environment |
|---|---|---|---|
| Australia | | | |
| Australia (South Australia) | m | m | m |
| Australia (Tasmania) | | | |
| Australia (Victoria) | m | m | m |
| Belgium (Flanders PP) | | | |
| Belgium (Flanders U3) | | | |
| Canada CB | | | |
| Canada SB | | | |
| Canada (Alberta) | | | |
| Canada (British Columbia) | | | |
| Canada (Manitoba) | | | |
| Canada (New Brunswick) | | | |
| Canada (Quebec) | | | |
| Czech Republic | | | |
| Denmark | | | |
| Finland | | | |
| France | | | |
| Germany | | | |
| Germany (Bavaria) | | | |
| Hungary | | | |
| Iceland | | | |
| Ireland | | | |
| Israel | | | |
| Italy | | | |
| Japan | | | |
| Korea | | | |
| Luxembourg | | | |
| Morocco | | | |
| Norway | | | |
| Portugal | | | |
| Slovak Republic | | | |
| Slovenia | | | |
| South Africa | | | |
| Spain | | | |
| Sweden | | | |
| Switzerland | | | |
| United Arab Emirates (Dubai) | | | |
| Formally regulated mechanisms | 9 | 14 | 12 |
| Guidelines or recommendations | 11 | 8 | 5 |
| Not in place | 10 | 4 | 8 |

Notes: Belgium (Flanders PP): pre-primary education in Belgium (Flanders). Belgium (Flanders U3): ECEC for children under age 3 in Belgium (Flanders). Canada CB: centre-based sector in Canada. Canada SB: school-based sector in Canada. Canada (Manitoba): kindergarten sector only in Canada (Manitoba).

Formally regulated or established – a legal instrument or statutory body

Guidelines or recommendations – codes of conduct without a legal obligation

Not in place

Not applicable or Not known

m: Missing

Source: OECD (2022[35]), ECEC in a Digital World policy survey, Table B.4.

Legislative efforts in Australia and the United Kingdom are supported by mandatory codes of conduct that include age-specific provisions. Australia’s guidance for these codes notes that younger children are at greater risk from the social, emotional, psychological and physical impacts caused by exposure to harmful content and behaviour online (eSafety Commissioner, 2021[52]). The United Kingdom’s Age‑Appropriate Design Code (2020) goes further, differentiating risks, protections and the responsibilities of DSPs according to age (see Box 3.2).

Other countries have published recommendations or guidelines for DSPs. These often address more specific digital risks, such as advertising or consumer rights, and come from a variety of actors, including government bodies (e.g. media authorities, consumer agencies, ministerial departments), professional associations and civil society. Some of these, such as a guide for stakeholders in Sweden (Box 3.1), include relevant provisions for young children. Guidance in Spain highlights the development and use of search engines and apps specifically designed for children as being particularly effective in protecting the youngest users (Spanish Data Protection Authority, 2020[53]). A policy statement from the American Academy of Pediatrics in the United States recommends that DSPs work with developmental psychologists and educators to design quality interfaces for children ages 0-6, as well as to eliminate advertising and unhealthy messages on apps for this age group (Hill et al., 2016[16]).

Box 3.1. Framework conditions for digital service providers that encourage safety-by-design

In 2008, Japan introduced the Act for an Enhanced Environment for Youth’s Safe and Secure Internet Use to, among other key objectives, reduce the chances of young people (ages 0-18) viewing harmful content online. Accompanying policy efforts have included promoting the development and design of safety features, obliging digital service providers (DSPs) to provide filtering services for young users, developing and disseminating related standards, and establishing a public-private partnership framework. In 2018, Japan updated the act in part to reflect the growing use of digital technologies by younger children. The accompanying action plan focused on promoting the use of filtering services and software for digital users from the earliest age.

Germany’s amendment to the Youth Protection Act (2021) aims to implement the requirements of the United Nations’ General Comment on the Rights of Children in the Digital Environment through federal law. One key measure is the introduction of a legal obligation for DSPs to appoint a qualified youth protection officer. The youth protection officer has responsibility for supporting design processes from a child-safety perspective, identifying potential risks to children, promoting the use of age labels or other technical solutions to limit children’s access to potentially harmful content, and championing the protection of minors in all internal decision making. The amendment also introduced a Safety-by-Design Standard for DSPs which, among other requirements, obliges DSPs to take precautions relative to both the level of risk of their service or product and the child’s age.

In Sweden, the Swedish Authority for Privacy Protection, the Ombudsman for Children in Sweden and the Swedish Media Council collaborated to publish guidance for DSPs on children’s rights in digital environments. Regarding young children specifically, the guidance notes that they may lack the tools required to handle certain media content or to understand the consequences of publishing images or sharing personal data. It also emphasises the need to adapt information to ensure it reaches the child, regardless of age. Thus, even in cases where the parent is required to give consent, the guidance recommends addressing children too. To facilitate this, the guidance recommends involving young children of the target age in the development of the text.

Sources: Germany: German Association for Voluntary Self-Regulation of Digital Media Service Providers (2022[54]); Japan: Government of Japan (2020[55]); Sweden: Swedish Authority for Privacy Protection, Ombudsman for Children in Sweden and Swedish Media Council (2021[56]).

Young children’s privacy and data protection

In today’s digital world, DSPs collect and share a wealth of data on children, even before they are born. Young children may knowingly or unknowingly offer their personal information and data directly to DSPs, or that information may be inferred from their activities online or from disclosures by others. These data are valuable: young children represent three large consumer markets in one (direct spending, future spending, indirect spending through parents) (OECD, 2020[31]). In addition, the high potential for innovation in the health and education sectors through developments in artificial intelligence and big data makes young children increasingly attractive targets for datafication (European Commission, 2022[57]).

However, the ability of children to identify, evaluate and consent to such data practices is highly questionable. Research indicates that young children are particularly trusting of privacy-invasive technologies and struggle to comprehend commonplace commercial activities such as selling data to a third party or combining multiple data points to profile a user (Information Commissioner's Office, 2019[58]). Parents and other adults often lack awareness of the extensive sharing of personal data that results from using digital services (European Commission, 2022[57]). This limited comprehension reduces young children’s agency and undermines their right to privacy, while parental control or consent mechanisms often give only the illusion of protecting them (van der Hof and Lievens, 2017[59]). Moreover, the expansion of digital technology use in ECEC settings means entrusting personal data to ECEC staff or educational technology companies with little scope to refuse or challenge privacy arrangements (Schleicher, 2022[60]). The risk is real: in 2020, an analysis of nearly 500 educational technology apps found that many were collecting device identifiers, some were taking location data and nearly two-thirds of those submitted to further testing shared user data with third parties (Cannataci, 2021[61]).

The OECD guidelines call on DSPs to adopt four key actions with respect to privacy protection: 1) provide information on how personal data are collected, processed and used in concise, accessible and age‑appropriate language and formats; 2) limit the collection of personal data and its subsequent use or disclosure to third parties; 3) not use children’s data in ways that evidence indicates are detrimental to their well‑being; and 4) not allow the profiling of children or the use of automated decision making unless there is a compelling reason to do so and appropriate protections are in place (OECD, 2021[40]).

Results from the ECEC in a Digital World policy survey (2022) suggest that countries have been more active in implementing efforts targeted at DSPs to protect children’s privacy in comparison to efforts to embed safety-by-design approaches (see Table 3.2). The majority (21) of participating countries and jurisdictions reported having standards in place for processing young children’s data. These were most often formally regulated, as reported by 14 participants. A smaller share (7) relied only on more informal approaches, such as guidelines or recommendations. Only Portugal reported having both approaches.

In recent years, legislative reform in this area has largely been driven by the General Data Protection Regulation (GDPR) of the European Union (EU), which came into force in 2018. The GDPR recognises children as requiring specific protections, including providing age-appropriate information, applying the right to be forgotten and prohibiting profiling, with some exceptions (European Parliament, 2016[62]). Where processing relies on consent, the GDPR also requires DSPs to obtain parental consent for data-processing activities concerning children younger than 16 years old, with member states able to lower this age, to no less than 13, according to domestic norms. The GDPR applies to any DSP that targets or collects data on users in the EU, regardless of the location of the DSP itself. As such, it has helped harmonise privacy laws across EU member states and encouraged other countries (e.g. Brazil, Japan, Korea, New Zealand, South Africa) to adopt similar measures. Some countries have introduced further provisions for children within their privacy laws (Woodward, 2021[63]). These include, for example, seeking consent for data processing from both parents and minors themselves (France) or requiring DSPs to conduct data protection impact assessments when processing minors’ personal data for marketing purposes (Finland, Ireland and Italy) (Gabel and Hickman, 2019[64]; Government of France, 2018[65]).

Laws for young children’s privacy specifically are not common, but some countries, including the United Kingdom (Box 3.2), have introduced regulations, recommendations or guidelines. Guidance published in Canada and Iceland encourages DSPs to design services that are appropriate for the youngest possible users by default, including by avoiding collecting personal information entirely (Office of the Privacy Commissioner of Canada, 2015[66]; Ombudsman for Children, n.d.[67]). France’s National Commission for Information Technology and Civil Liberties (CNIL) recommends that DSPs fully involve children of all ages in the design process; the Data and Design Project supports such collaboration, seeing young children work with digital designers to develop child-friendly interfaces (CNIL, 2021[68]). Ireland offers concrete guidance on how to ensure child-oriented transparency, even for young children, including using non-textual messages wherever possible, such as cartoons, videos, images, icons or gamification (Data Protection Commission, 2021[69]).

Box 3.2. Regulation and legislation to protect young children’s data

The United Kingdom’s Age-Appropriate Design Code (2020), or “Children’s Code”, is a statutory code of practice for digital service providers (DSPs) whose products or services are used by children. The code establishes design- and privacy-related benchmarks for the appropriate protection of children’s personal data, in line with the EU’s General Data Protection Regulation and the United Kingdom’s Data Protection Act (2018). The Information Commissioner’s Office, the United Kingdom’s data protection authority, applies the code when considering possible breaches of these laws; such breaches may result in assessment notices, warnings, reprimands or fines. The code sets out 15 risk-based standards of age-appropriate design, ranging from general guidance, such as putting the best interests of the child first and applying standards in an age-appropriate manner, to measures relating to specific tools, such as parental controls, geolocation, profiling and nudge techniques. When it comes to the youngest users, several aspects of the code are noteworthy:

Precautionary approaches to user age – the code applies to any DSP providing services or products likely to be accessed by children, meaning that DSPs should apply the standards to all users when they cannot establish user age with confidence, thereby providing protections for younger children by default. This recognises that age limits and verification tools are often inadequate.

Consideration of age ranges – to help DSPs put the varied needs of children at different ages and stages of development at the heart of design, the code provides specific advice according to the capacity, skills and behaviours a child is likely to exhibit within certain age ranges. For very young children (0-5 year-olds), specific advice is offered in 4 of the 15 standards (transparency, parental controls, nudging and online tools). An annex sets out further key considerations for that age group, based on relevant, up-to-date academic research.

Respecting the rights of the youngest – the code aims to complement rather than replace parental supervision and guidance. Nevertheless, it seeks to recognise even the youngest children’s agency and uphold their right to privacy. For example, the code encourages DSPs to rely more on parental involvement in managing privacy settings for 0-5 year-olds, but it also advises providing the children with information, in less detail and using visual or audio formats. In addition, young children should be advised, through clear and obvious signs, when parental controls are being used to monitor or track their behaviour and should be informed of their right to privacy in an age-appropriate way.

Similar efforts are now emerging in other jurisdictions. In the United States (California), the California Age‑Appropriate Design Code Act (2022) follows many of the above-mentioned principles. It also establishes a California Children’s Data Protection Taskforce to evaluate best practices and provide support to businesses, with an emphasis on small and medium-sized businesses. The European Commission has committed to developing an EU code of conduct on age-appropriate design.

With regard to privacy in educational settings specifically, the state of Maryland, in the United States, recently updated its legislation on student data privacy. The provisions cover children from pre-kindergarten (3-4 year-olds) onwards and apply to both school- and home-based instruction, as well as to administrative activities and communication between children, staff and parents. The law strengthens the protection of students’ personal data and tightens the definitions of covered information and targeted advertising.

Sources: European Commission: European Commission (2022[57]); United Kingdom: Information Commissioner’s Office (2020[70]); United States (California): 5Rights Foundation (2022[71]); United States (Maryland): Maryland General Assembly (2022[72]).

There are few identified examples of specific guidance for DSPs operating in the education sector, including ECEC, despite the growing role of both data management systems to support monitoring and evaluation processes (see Chapter 8) and pedagogical uses of digital tools and devices (see Chapter 4). The Council of Europe has published Guidelines on Children’s Data Protection in an Education Setting. These contain recommendations for governments, education professionals and DSPs and emphasise the need for age-appropriate approaches (Council of Europe, 2021[73]). In the United States, most states have legislation that deals specifically with protecting student data in educational settings; recent reforms in Maryland have implications for young children (see Box 3.2).

Governance and accountability approaches

As governments introduce new efforts to enhance children’s safety and privacy in digital environments, demand for effective oversight and enforcement increases. Governments can establish clear roles and lines of responsibility for implementing, monitoring and adapting such efforts. This may be carried out by administrative, judicial, quasi-judicial and/or parliamentary oversight bodies, or a combination. For example, in addition to data privacy authorities, consumer protection agencies, sectoral regulators, anti‑discrimination bodies and human rights institutions could all contribute to oversight (United Nations High Commissioner for Human Rights, 2021[74]).

However, the diverse web of actors involved in meeting the needs of children in the digital environment can lead to a lack of strategic leadership, bringing the risk of policy fragmentation, duplication of efforts and inconsistencies in monitoring and evaluation (Burns and Gottschalk, 2019[15]; OECD, 2020[31]). Moreover, the fast pace of technological change, set against the slower research processes required to understand its impact, can create considerable uncertainty. Without strategic leadership, parents and ECEC professionals are left to navigate this uncertainty alone, risking confusion and stress. In recognition of this challenge, the OECD Recommendation calls upon governments to establish dedicated oversight bodies with, inter alia, responsibility for multistakeholder engagement, policy implementation and ensuring complementarity.

In the ECEC in a Digital World policy survey (2022), 15 of the participating countries and jurisdictions indicated having oversight bodies in place with specific responsibilities for monitoring the protection of young children in the digital environment (see Table 3.2). Among these, the majority (12) are statutory bodies with specific powers to implement legislation or regulation and their role and responsibilities have been formally defined. However, nine countries or jurisdictions reported having no specific oversight body in place, despite all but three of these respondents having reported that they have established standards for aspects of design and/or privacy. Some countries, such as Australia and Hungary, have established new oversight structures; others like Germany and Norway have expanded the remit of and/or encouraged collaboration between existing oversight bodies (Box 3.3).

Box 3.3. Oversight and strategic leadership of children’s digital safety

Australia’s eSafety Commissioner is the independent regulator for online safety. Established in 2015 as the Children’s eSafety Commissioner, the office had its responsibilities extended in 2017 to cover all users of digital technologies. The commissioner has hard powers to ensure regulatory compliance, and its actions have a specific focus on several groups of vulnerable users, including young children. The commissioner holds industry to account for upholding Australia’s Basic Online Safety Expectations. This involves providing guidance on the expectations and on the reasonable, practical steps towards meeting them, as well as taking compliance action. The commissioner’s powers include requiring digital service providers (DSPs) to report on how they are meeting the expectations, issuing a formal warning or infringement notice, and seeking court-ordered injunctions or civil penalties. Regarding children’s safety, specific actions include handling complaints and reports of cyberbullying and investigating or overseeing the removal of harmful content. The commissioner also leads activities to support parents and early childhood education and care staff in their efforts to enhance young children’s digital safety.

In Hungary, the Child Protection Internet Roundtable was established in 2014 as a consultative review committee within the National Media and Communications Authority. The roundtable brings together representatives of 20 different organisations with a vested interest in or responsibility for children’s online safety. It issues non-binding recommendations and statements to promote compliance by DSPs and raise awareness among children and their parents. It also reviews and supports the implementation of the Digital Child Protection Strategy (2016).

Germany recently reformed the Federal Testing Centre for Media Harmful to Young People, considerably increasing its powers in line with new provisions in the Youth Protection Act (see Box 3.1). The newly named Federal Centre for the Protection of Children and Young People in the Media (BzKJ) is responsible for ensuring that DSPs respect their obligations under the act; promoting shared responsibility among government, industry and civil society; and establishing networking structures to enable stakeholders to collaborate.

In Norway, the Children’s Ombudsman, the Norwegian Data Protection Authority, the Norwegian Media Authority and the Norwegian Consumer Agency have all produced content such as guidance, research and recommendations to encourage DSPs to enhance children’s online safety. The Norwegian government has appointed the Norwegian Media Authority as the national co-ordinator of this work.

Sources: Australia: eSafety Commissioner (2021[75]); Germany: Federal Centre for Child and Youth Media Protection (2021[76]); Hungary: National Media and Communications Authority (n.d.[77]); Norway: Norwegian Media Authority (n.d.[78]).

Parents and families protecting young children in digital environments

Parents, carers and guardians are central figures in young children’s lives and have traditionally been at the centre of efforts to enhance children’s safety in the digital environment. The OECD Recommendation recognises that while parents have a fundamental role in protecting their children in the digital environment, they need support in this role. In particular, they need to be supported to have awareness and understanding of the rights of children in digital environments and as data subjects. In addition, it states that parents require support to fulfil their role to ensure that children can become responsible participants in the digital environment and recognises that the continually increasing complexities of digital technologies may increase the necessity for such support (OECD, 2021[39]). This section explores different ways in which governments are supporting parents to keep young children safer in digital environments.

Parents’ role in protecting young children in the digital world

Today’s young children largely engage with the digital world in the home (Carvalho, Francisco and Relvas, 2015[79]). For these digital users, parents can do much more than simply facilitate or restrict access to digital tools; they are a key source of support and inspiration for children’s digital experiences, establishing rules and boundaries but also fostering agency and empowerment for their later digital lives (Chaudron, Di Gioia and Gemo, 2018[4]; Green, Wilkins and Wyld, 2019[29]). Studies indicate that children whose parents implement Internet safety measures, model healthy digital behaviours and keep up to date with their children’s digital habits are less likely to be victims or perpetrators of negative online conduct than children whose parents adopt a restrictive approach or model unhealthy interactions with technology. They are also more likely to be digitally resilient (i.e. able to react appropriately and adjust positively in the face of risks, potentially minimising associated harms) (Livingstone et al., 2017[80]).

From a parent’s perspective, this responsibility, twinned with the increase in young children’s interactions with the digital world, is often a source of internal conflict and stress. In the United States, a survey of parents of 0-6 year-olds found that 86% reported being satisfied with how their young children use technology, identifying benefits to child development and literacy. At the same time, 72% had concerns, specifically around too much screen time, inappropriate content and physical health (Erikson Institute, 2016[81]). Similarly, an investigation into parental perceptions about smart toys found that parents strongly supported the educational and entertainment potential but equally feared possible threats to privacy (Brito, Dias and Oliveira, 2018[28]).

Supporting parents to effectively navigate this tension is critical for curbing parental anxieties and amplifying digital benefits for young children while minimising harms. This requires equipping parents with the skills, knowledge and attitudes to combine the best available evidence with their own tacit knowledge, to arrive at the most appropriate course of action for the individual child. For example, accurate knowledge that helps parents to distinguish between evidence-based and perceived risks can support them to take more informed decisions (Green, Wilkins and Wyld, 2019[29]). Such information can also usefully include guidance regarding safe and responsible uses of technology, including a broader set of healthy habits around sleep and exercise. However, at present, research indicates that parents receive conflicting information from media, social, medical and educational sources, exacerbating their internal conflict regarding digital parenting (Dardanou et al., 2020[82]).

Parents could also benefit from being informed about the pros and cons of different digital parenting styles. Qualitative research among parents of young children across multiple European countries indicates that parents are often unclear or inconsistent about how and why parental mediation matters in digital parenting, or which strategies are effective (Livingstone et al., 2015[34]). Certainly, there is no one-size-fits-all model: digital parenting is a dynamic process, shaped by individual contexts and constraints (Smahelova et al., 2017[83]; Livingstone et al., 2015[34]). Nevertheless, research can guide parents towards certain beneficial approaches. For example, among young children in offline environments, authoritarian and permissive parenting styles, with their emphasis on control, intrusiveness and detachment, have been shown to correlate with negative behaviours and lower development of executive functions, while positive approaches that emphasise scaffolding, cognitive stimulation and supported autonomy seem particularly beneficial (Hosokawa and Katsura, 2018[84]; Valcan, Davis and Pino-Pasternak, 2018[85]; Ulferts, 2020[86]). Although more research is required into how these effects manifest in digital contexts for young children, among older children, digital parenting strategies that combine responsiveness, warmth and clear rules, as well as a recognition of children’s rights in the digital environment, are considered useful in balancing digital risks and opportunities (Duerager and Livingstone, 2012[87]; Milovidov, 2020[88]).

Parents also need to be supported to understand the risks their own digital behaviours carry for their children. Not only does children’s screen time appear to increase with parents’ screen time, but in both online and offline contexts, studies have shown that when left unsupervised or in the presence of a distracted parent, children, particularly young ones, will engage in risky behaviours to re-engage a parent (Sanders et al., 2016[89]; Kildare and Middlemiss, 2017[22]). In addition, distracted parents have been shown to be less attentive to the potentially unsafe situations or actions their children may encounter and to engage in less verbal and non-verbal communication. This may both negatively impact the child and lead to less positive parenting experiences (Kildare and Middlemiss, 2017[22]). At the same time, parents’ digital skills play an important role: restrictive strategies tend to be adopted by parents with less confidence in their own digital skills (Burns and Gottschalk, 2019[15]), while a higher sense of digital self-efficacy among parents of younger children has been shown to correlate negatively with screen time (Sanders et al., 2016[89]).

Finally, as policy efforts regarding young children’s safety in digital environments increasingly extend to spheres outside the home and the family, making parents aware of those developments will be necessary to enhance impact and support alignment and coherence. For instance, the effect of safety-by-design approaches, transparent information about children’s security and privacy, and parental controls or consent mechanisms will partly depend on the extent to which parents actively engage with them. In addition, in many countries, there is a dissonance between the messages from and aimed at education and industry actors, which tend to promote the use of digital technology by young children, and those of public health actors targeting parents, which often emphasise risk management and counsel screen time reduction (Straker et al., 2018[90]). When it comes to ECEC provision, this has contributed to hostility from parents about the use of digital technologies in ECEC settings in several countries (Straker et al., 2018[90]; Zimmer, Scheibe and Henkel, 2019[91]).

Support and guidance for parents and families with young children

While public education and awareness-raising efforts are by no means a silver bullet, they are important policy tools that help to empower parents to support their children (OECD, 2020[31]; Livingstone et al., 2018[33]). This has been a favoured approach for many years but, in relation to young children at least, the COVID-19 pandemic likely accelerated the adoption of such measures. For example, the experience of distance education in 2020 led many countries to disseminate advice to parents and families about adult‑supervised use of technology for young children (OECD, 2021[5]).

Responses to the ECEC in a Digital World policy survey (2022) illustrate the breadth of topics covered in guidelines and recommendations targeting parents or a general audience about young children’s engagement with digital environments as of 2022 (see Table 3.3). The majority of participating countries or jurisdictions (28 out of 37) have some guidance in place for parents, issued or endorsed by the government. For a large share of these, this guidance appears to be quite comprehensive: 23 countries and jurisdictions reported addressing 4 or more of the 6 topics asked about in the policy survey. This may reflect the fact that parents have typically been seen as having primary responsibility for protecting children from digital risks. The issuing of these guidelines or recommendations could also be in response to the demand for support from parents who feel increasingly confused about how to manage digital risks and opportunities for their children.

Recommendations related to screen time were the most common type of guideline issued, with 28 of 37 participating countries and jurisdictions reporting having them in place. Much of the country-specific guidance follows the recommendations of the World Health Organization (WHO): zero screen exposure for children under age 1, preferably zero exposure for 1-2 year-olds and less than one hour per day, supervised, for 2‑5 year‑olds (WHO, 2019[92]). Responses to the policy survey and further research indicate that there are some variations in national interpretations, however: Germany recommends zero exposure for 0-3 year-olds; Australia and the United States recommend zero exposure up to 18 months. While many countries follow the recommendation of a maximum of one hour per day for children over 2 years old, Luxembourg suggests 10-15 minutes maximum.

Such guidance can usefully provide consistent, tangible and evidence-based recommendations. Nevertheless, as described in Chapter 2 and at the start of this chapter, the traditional concept of screen time increasingly fails to capture the diversity of children’s interactions with digital technologies. For example, the WHO recommendations refer specifically to sedentary screen time but may often be interpreted as referring to any time spent engaging with digital technologies. Furthermore, for most families, the reality is that young children are often exposed to screens earlier and more intensively than the recommendations propose. Research undertaken during the COVID-19 pandemic highlights that when young children are exposed to premature, increased or unsupervised screen time, this is not necessarily a result of parents’ ignorance or scepticism of the guidance but of wider contextual factors, such as parental availability (Hartshorne et al., 2020[93]).

Table 3.3. Guidelines and recommendations to protect young children in digital environments targeted at parents and families, and early childhood education and care professionals

Countries and jurisdictions reporting having introduced the following to support families and ECEC professionals in ensuring a safe digital environment for young children, by topic and target audience, 2022

| Topic (total no. of countries/jurisdictions) | Parents/families or the general public | ECEC professionals specifically |
|---|---|---|
| Risks to physical health | 25 | 9 |
| Risks to socio-emotional well-being | 26 | 12 |
| Protection of privacy | 21 | 10 |
| Amount of screen time | 28 | 11 |
| Educational uses of technology at home | 24 | 11 |
| Balancing participation with protection | 17 | 11 |

| Country/jurisdiction | Parents/families or the general public: total number of topics | ECEC professionals specifically: total number of topics |
|---|---|---|
| Australia | 6 | 5 |
| Australia (South Australia) | 0 | 0 |
| Australia (Tasmania) | 0 | 0 |
| Australia (Victoria) | 0 | 0 |
| Belgium (Flanders PP) | 6 | 0 |
| Belgium (Flanders U3) | 5 | 0 |
| Canada CB | 5 | 0 |
| Canada SB | 5 | 0 |
| Canada (Alberta) | 0 | 0 |
| Canada (British Columbia) | 4 | 2 |
| Canada (Manitoba) | 6 | 5 |
| Canada (New Brunswick) | 6 | 0 |
| Canada (Quebec) | 5 | 0 |
| Czech Republic | 4 | 4 |
| Denmark | 5 | 0 |
| Finland | 3 | 3 |
| France | 3 | 2 |
| Germany | 6 | 6 |
| Germany (Bavaria) | 2 | 4 |
| Hungary | 6 | 0 |
| Iceland | 5 | 2 |
| Ireland | 3 | 0 |
| Israel | 6 | 0 |
| Italy | 0 | 3 |
| Japan | 6 | 0 |
| Korea | 4 | 0 |
| Luxembourg | 6 | 6 |
| Morocco | 0 | 0 |
| Norway | 4 | 3 |
| Portugal | 5 | 5 |
| Slovak Republic | 6 | 6 |
| Slovenia | 5 | 1 |
| South Africa | 2 | 1 |
| Spain | 6 | 6 |
| Sweden | 0 | 0 |
| Switzerland | 6 | 0 |
| United Arab Emirates (Dubai) | 0 | 0 |

Notes: Responses refer to guidelines or recommendations as issued by either a government agency (e.g. a ministry), a public entity with government support (e.g. research institute, non-governmental organisation) or other institution with a far-reaching role, as long as the guidelines or recommendations are endorsed by government.

Belgium (Flanders PP): pre-primary education in Belgium (Flanders). Belgium (Flanders U3): ECEC for children under age 3 in Belgium (Flanders). Canada CB: centre-based sector in Canada. Canada SB: school-based sector in Canada. Canada (Manitoba): kindergarten sector only in Canada (Manitoba).


Source: OECD (2022[35]), ECEC in a Digital World policy survey, Table B.5.

At the same time, the evidence base on which screen-time recommendations rest is constantly evolving as new digital technologies and habits emerge and as researchers attempt to overcome some of the weaknesses of previous studies, including narrow or unreliable measures of young children’s screen time (Barr et al., 2020[94]). The WHO recommendations were developed following a research review undertaken in 2017, which found a predominantly unfavourable, or null, association between sedentary screen time and cognitive or motor development, psychosocial health and being overweight, but rated the overall quality of the evidence available for these relationships at the time as very low (WHO, 2019[95]). With all this in mind, for screen-time guidelines to be useful to parents rather than a further source of stress, they should be reviewed regularly and paired with wider advice on digital parenting and risk management. This could usefully include information that helps parents enable their children to benefit from technology, for example by encouraging them to seek out educational and prosocial content and by discussing healthy digital habits with them (Hill et al., 2016[16]).

A majority of participating countries and jurisdictions (25) reported having issued guidance for parents of young children on the risks of digital engagement to physical health, such as the impact on eyes, sleep and posture. A similar number (26) reported guidance on limiting risks to socio-emotional well-being, such as exposure to inappropriate content and social isolation. Meanwhile, 24 countries and jurisdictions reported issuing guidance on educational uses of technology in the home. This is particularly important for parents of young children, as research indicates that they often lack awareness of the educational potential of their digital parenting role (Mascheroni, Ponte and Jorge, 2018[96]). It also offers an opportunity to align home practices with young children’s digital interactions in ECEC settings.

In contrast, fewer than half of participating countries and jurisdictions (17) reported having guidance in place to support parents in balancing young children’s right to participate in the digital environment with protecting them from harm. This points to the risk-focused nature of much of the guidance available to parents. It may be that the absence of clear evidence regarding the impact of digital technology use on young children encourages governments to adopt a cautious approach that emphasises potential risks. However, as described earlier in this chapter, focusing on digital risks to the detriment of digital opportunities can limit children’s scope for developing critical digital skills and increase parental anxiety.

Finally, 21 countries and jurisdictions reported having issued guidance related to children’s privacy. Given that legislative and regulatory efforts in this area have multiplied in recent years, as described above, it is notable that privacy remains one of the less frequently covered topics in the policy survey. This matters because much of the guidance or regulation aimed at DSPs to help protect young children calls for interventions that involve parents (e.g. providing transparent and clear information about data processing, requiring parental consent, implementing parental controls), which makes efforts to engage parents on privacy issues important.

As responses to the survey and further research indicate, the online safety space is well-populated. Most countries have dedicated websites for children’s online safety; many have multiple. Efforts may come from government bodies (e.g. ministries, media authorities, data protection agencies) or civil society. At the same time, a lot of information exists that is not endorsed by governments. Furthermore, as research and technology advance, resources quickly become outdated. For parents of young children in particular, guidance may not always be tailored to their needs, as much of the knowledge base concerns older children and teenagers. Together, this can lead to duplication, overlap and a lack of clear, authoritative messaging (Green, Wilkins and Wyld, 2019[29]).

Nevertheless, some countries have developed a range of evidence-based guidance tailored to the needs of parents of young children specifically. Australia’s Early Years Program developed by the eSafety Commissioner and Denmark’s First Digital Steps initiative (see Box 3.4) are good examples. The Early Years Program targets parents and carers of children under 5 and includes guidance on the risks young children are exposed to, as well as practical tips for navigating those risks, such as modelling healthy digital habits, setting rules and selecting quality content (see Case Study AUS_1 – Annex C). Responses to the ECEC in a Digital World policy survey (2022) and further research also indicate that some government‑endorsed websites include specific resource collections for parents of young children. For example, in the Flemish Community of Belgium, the Flemish Knowledge Centre for Digital and Media Literacy has established an online catalogue of resources to support parents with digital parenting. Items are disaggregated by age, including categories for 0-3 year-olds and 4-6 year-olds (Medianest, n.d.[97]).