Evaluation Systems in Development Co-operation 2023

5. Using evaluation evidence

Abstract

Ensuring that evaluation findings are used has been a consistent challenge, which is reflected in the increased focus on usefulness as a guiding principle of evaluation. To increase use, organisations are engaging across teams to ensure buy-in; aligning with policy, programming and project cycles; and preparing more diverse products to share evaluation evidence in a way that responds to the needs of target audiences and facilitates action.

5.1. Using evaluation findings

Ensuring that evaluation findings are used has been a persistent challenge for evaluation units. As noted in Chapter 2, accountability and learning are the dual objectives of development evaluation. Reporting suggests that while stakeholders are satisfied with the extent to which evaluations meet accountability objectives, it is more challenging to make sure evaluation findings are used to inform future policy, planning, and resourcing decisions to enhance effectiveness.

Use of findings has been viewed largely as an ex-post activity. In the past, participating organisations have prioritised accountability aspects of evaluations, focusing on the success or failure of policies, programmes, and projects. Given this prioritisation, promoting the use of findings has been left until after evaluations are complete, rather than considering learning objectives in the early stages of the evaluation process (Serrat, 2009[1]; Cracknell, 2000[2]).

“There is learning in accountability and accountability in learning.” Netherlands, Policy and Operations Evaluation Department

The nature of challenges in using evaluation findings has changed. The 2010 study reported that DAC Peer Reviews and dialogue at the DAC Network on Development Evaluation (EvalNet) meetings often cited accessibility and use of evidence as the primary challenges for evaluation processes, often related to the limited ability to share findings widely (OECD, 2010[3]). These accessibility challenges have been addressed, with the majority of participating organisations publishing evaluation reports and summaries online and disseminating them through various platforms where findings can be discussed. However, new challenges have emerged:

Tension between providing timely evidence and ensuring quality. Evaluation findings must be timely if they are to be useful. Participating organisations have to balance providing quick evidence that can inform ongoing efforts with ensuring high-quality and credible findings. This challenge came to the fore during the COVID-19 pandemic, when organisations were working in new ways and in a new context and when real-time evidence was needed on which response and recovery measures were working (see Section 4.4).

Absorption capacity affects the ability of target audiences to act on evaluation findings. Unlike in the past, there is now an overwhelming wealth of data and evidence available to policy makers and programme/project managers, including internal evaluation findings from EvalNet and beyond. In a resource-constrained development environment and with the high volume of information produced, development professionals are not able to fully review and incorporate all evaluation findings.

Evaluation units are making concerted efforts to address these uptake-related challenges. Key measures include:

Putting renewed focus on identifying learning objectives early in the evaluation cycle and increasing emphasis on how evaluation findings are presented and disseminated. This includes consulting on evaluation needs, drafting Terms of Reference (ToRs) that place equal emphasis on learning objectives, highlighting the areas in which findings can be used to inform decision making, and considering how findings will be presented and disseminated.

“If evaluation is to fulfil its role as a decision-making tool, we need to improve ownership of its conclusions.” France, Agence Française de Développement, Department for Evaluation and Learning

Aligning evaluations with overarching organisational objectives and ensuring timely release of findings. In practice, this means choosing to conduct evaluations in time for their findings to inform upcoming policy decisions. For example, AfDB’s 2019 evaluation of its performance on gender mainstreaming was timed to feed into international negotiations on gender equality and women’s empowerment objectives (IDEV, 2020[4]). Similarly, Denmark has assessed its overall portfolio of climate finance commitments (DANIDA, 2021[5]). In the same vein, evaluations can be undertaken in line with programme and project cycles. When it is not possible to finalise evaluation findings in time for a specific deadline, evaluation units increasingly share preliminary findings with relevant teams to provide timely inputs into key decisions.

Engaging early with staff across the organisation to increase relevance and ownership. About half of the reporting units systematically engage with programme staff, including colleagues working in thematic areas, during the evaluation planning stages to identify relevant evaluation topics. Not only does this help to ensure the utility of evaluation findings, it also ensures broad organisational buy-in to evaluation processes, promotes ownership and encourages the use of evaluation results.

Conducting more cross-cutting analyses and syntheses. For example, AFD’s 2023 Evaluations Report aims to raise awareness of its evaluation work among a wider audience, and to answer crucial questions around key thematic areas: essential services in Africa, climate and biodiversity, and access to education and training (AFD, 2023[6]).

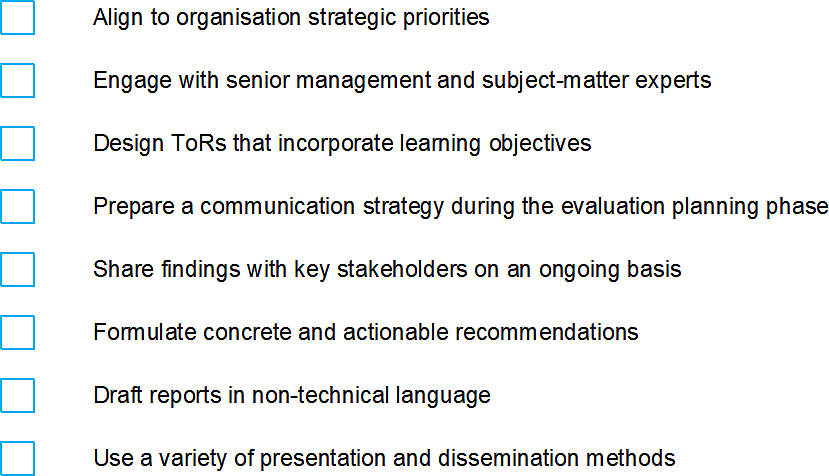

Figure 5.1 lists the key elements for supporting the use of findings based on information reported by participants.

Figure 5.1. Evaluation utility checklist

Note: Based on challenges in using evaluation findings and the mitigation efforts participating organisations are using to address them.

How findings are presented and disseminated influences their use. The 2016 study highlighted the need for more focus on how evaluation findings are prepared and shared, and suggested ensuring that the design of knowledge products is user-centric (OECD, 2016[7]). Participating organisations have taken this recommendation forward, exploring new ways to present evaluation findings alongside publishing full evaluation reports. They are also looking at new ways to share information that meet the varied needs of different audiences, ensuring key points are clear and easily absorbed. Some organisations have gone so far as to employ staff dedicated to knowledge sharing and communication in the evaluation unit.

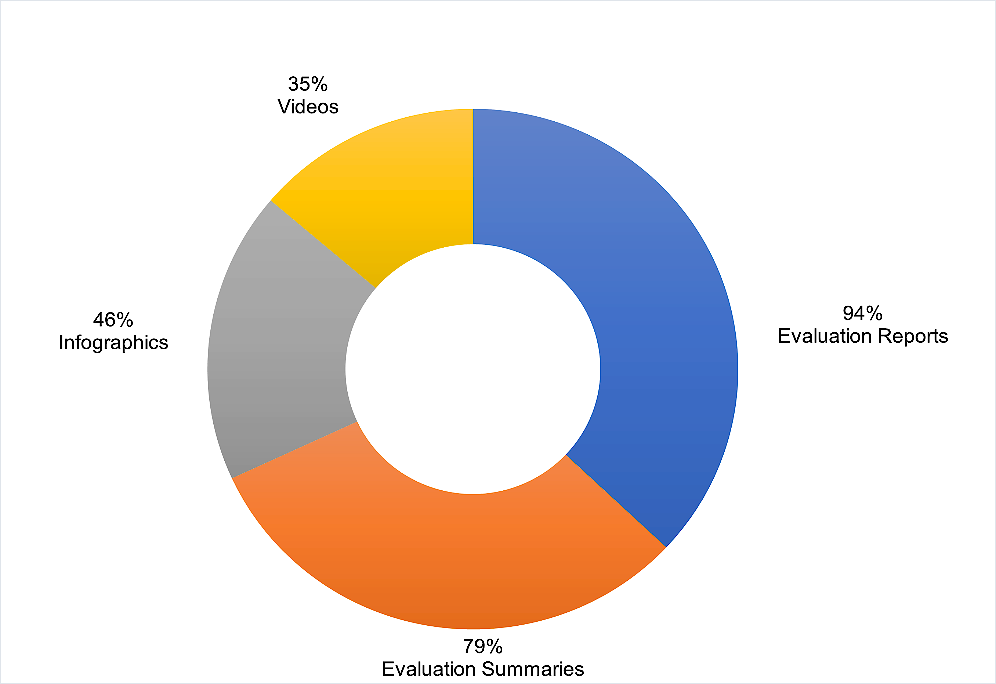

Growing diversity in evaluation knowledge products reflects an effort to respond to the needs of diverse audiences. In line with past commitments to transparency and accountability, nearly all participating organisations – 92 % (45 out of 49) – post evaluation reports on publicly accessible websites. Those that do not post full evaluation reports at least post executive summaries. Increasingly, however, participating organisations complement full-length reports with other messaging, in an effort to better connect with internal and external audiences and facilitate use of results. Recognising that audiences differ in their interests and needs, evaluation units routinely publish executive summaries, press releases, policy briefs, infographics, data visualisations, blogs, videos, and podcasts to present evaluation findings (Figure 5.2). Compared to previous studies, more members (now the vast majority) are diversifying their knowledge products.

Figure 5.2. Evaluation knowledge products

Note: Data on dissemination methods were reported by 48 out of 51 participating organisations.

Dissemination to internal audiences often extends beyond simple sharing of knowledge products to an increased focus on interactive learning. While evaluation units continue to share evaluation reports and other knowledge products across their organisations, there is more emphasis on interactive methods of engagement (Box 5.1). For example, 64 % (30 out of 47) of reporting organisations noted that they plan internal events (e.g. launch events, webinars and brown bag lunches) involving evaluators and thematic units to discuss findings, recommendations and possible ways forward.

Social media is widely used for communicating evaluation findings to external audiences. In 2016, 51 % of participating organisations mentioned using digital platforms for sharing evaluation findings. In 2021, 73 % (29 out of 40) report regularly using social media to promote knowledge products and highlight key findings.

Box 5.1. Inventive ways to strengthen learning from evaluations

Austria’s annual learning conference

The Austrian Development Agency (ADA) organises a yearly workshop that serves as a retreat for its staff and that is attended by the Director General for Development Cooperation within the Ministry of Foreign Affairs. The objective of this event is to facilitate mutual reflection, knowledge exchange, and learning on a variety of questions related to global development co-operation.

A dedicated learning function in Canada

Global Affairs Canada’s (GAC) evaluation unit is made up of two divisions: the Evaluation Division and the Evaluation Services and Learning Division. The latter is primarily focused on learning from evaluation. Its role involves conducting complementary research and translating evaluation findings into actionable knowledge that informs decision making.

France’s participatory approach

Within France’s Ministry of European and Foreign Affairs (MEAE), the directorate responsible for international development co-operation organises a series of presentations and workshops with senior-level officials and relevant departments to discuss findings and provide support in responding to evaluation recommendations. This consultative approach helps to ensure buy-in to recommendations, thereby increasing their implementation.

5.2. Management response systems

Management response ensures that evaluations contribute to organisational accountability and learning. It serves as a key tool in ensuring the use of evaluation findings and establishing crucial oversight over organisational efforts. It is considered good practice for all stakeholders targeted by an evaluation, and therefore responsible for implementing evaluation recommendations, to be involved in responding to the findings and planning follow-up actions.

Nearly all organisations have a management response process/system in place. Of the participating organisations, 93 % (43 out of 46) reported that a management response to an evaluation is an absolute requirement. Generally, a management response process consists of: (i) a formal position from management that states whether a recommendation is accepted, partially accepted or rejected; and (ii) an action plan that outlines specific measures to be taken to implement recommendations, including clear roles and responsibilities and concrete timelines.

Management responses are increasingly tracked, highlighting their importance. Because the existence of a management response does not in itself ensure the use of evidence for decision making, members are increasingly developing new elements to track and support follow-up. In 2010, 54 % (20 out of 37) of participating organisations reported having a system in place to track implementation of management response actions. In 2016, this figure increased to 78 % (36 out of 46). It has risen again, with 91 % (42 out of 46) of participating organisations in 2021 indicating that they have a system in place to track implementation of management response action plans.

Several units are also pursuing ongoing research to investigate the quality of both their recommendations and the resulting actions, towards the overall goal of improving effectiveness. For example, the 2020 assessment by the ADB’s Independent Evaluation Department (IED) indicates that only 75 % of actions in response to recommendations were either fully or largely implemented (ADB, 2020[8]). The assessment reports that shortfalls in implementation are generally due to a lack of alignment between actions and recommendations, and to management actions that are not specific about intended outcomes. Both can be improved through better engagement between management and IED at the action plan formulation stage.

“The impact of the Independent Evaluation Department’s (IED) recommendations depends on the quality of management’s action plans.” Asian Development Bank, Independent Evaluation Department

Most often, it is the evaluation unit that is responsible for tracking whether management implements actions in response to evaluation recommendations. In 91 % of cases (39 out of 43), the evaluation unit plays a role in management response tracking and follow-up (Box 5.2). In a small number of cases, the relevant policy or programme unit or organisation management is responsible for tracking. There is also growing transparency in the tracking of management responses. For example, the UK’s Independent Commission for Aid Impact (ICAI) and the Netherlands’ Policy and Operations Evaluation Department (IOB) report to their respective parliaments and require formal responses from government to their evaluations. Publishing these government responses and reporting on implementation progress support public accountability.

Periodic monitoring reports are also used to assess implementation progress. Some participating organisations track how the target audience responds to the evaluation findings and recommendations, and report to management or independent oversight committees on follow-up actions taken. These progress reports ensure accountability, while also providing a space for dialogue and learning.

“The goal of learning requires cooperation between those initiating the evaluations and the intended users.” Norway, Department for Evaluation

Box 5.2. Tracking implementation of management responses

The Management Action Record

The Management Action Record is an online system used by many agencies (such as the World Bank Group’s IEG, the African Development Bank’s IDEV and the Asian Development Bank’s IED) to support the implementation of management response action plans. The unit responsible for tracking is able to insert all the agreed actions and tag the unit responsible for implementation. As progress is made, the system is updated, providing up-to-date information that is accessible to all relevant staff. This tool facilitates periodic follow-up on the organisation’s adoption of the evaluation agency’s recommendations.

Canada’s annual monitoring report

Canada’s evaluation function monitors and tracks the implementation status of all active management responses and action plans on an annual basis, and reports back to the Performance Measurement and Evaluation Committee. This annual monitoring helps to understand the impact of evaluations on organisational learning, departmental programming and decision making, as well as accountability. It also provides an opportunity to address potential gaps or changes needed in planned actions; capture lessons and better grasp how evaluations contribute to broader learning and whether they are drivers of change; and support the department in implementing any intended change.

References

[8] ADB (2020), 2020 Annual Evaluation Review: ADB’s Project Level Self-Evaluation System, Asian Development Bank, https://www.adb.org/documents/2020-annual-evaluation-review-adb-s-project-level-self-evaluation-system.

[6] AFD (2023), Evaluations Report 2023, Agence Française de Développement, https://www.afd.fr/en/ressources/evaluations-report-2023.

[2] Cracknell, B. (2000), “Evaluating Development Aid: Issues, Problems, and Solutions”, Public Administration and Development, Vol. 21/5, pp. 429-429, https://doi.org/10.1002/pad.190.

[5] DANIDA (2021), Evaluation of Danish Support for Climate Change Adaptation in Developing Countries, https://um.dk/en/danida/results/eval/eval_reports/evaluation-of-danish-support-for-climate-change-adaptation-in-developing-countries.

[4] IDEV (2020), Evaluation Synthesis of Gender Mainstreaming at the AfDB, Summary report, Independent Development Evaluation (IDEV) African Development Bank Group, https://idev.afdb.org/sites/default/files/documents/files/Evaluation%20Synthesis%20of%20Gender%20Mainstreaming%20at%20the%20AfDB%20-%20Summary%20Report.pdf.

[7] OECD (2016), Evaluation Systems in Development Co-operation: 2016 Review, OECD Publishing, Paris, https://doi.org/10.1787/9789264262065-en.

[3] OECD (2010), Evaluation in Development Agencies, Better Aid, OECD Publishing, Paris, https://doi.org/10.1787/9789264094857-en.

[1] Serrat, O. (2009), Learning from Evaluation, Asian Development Bank, Manila, https://www.adb.org/sites/default/files/publication/27604/learning-evaluation.pdf (accessed on 1 September 2022).