Mobilising ASEAN Capital Markets for Sustainable Growth

3. Artificial intelligence (AI) and finance in ASEAN economies

Abstract

Advances in artificial intelligence (AI) models, including the advent of models offering content-generating capabilities and user-friendly interfaces, have increased interest in AI innovation among the general public in Asia and globally. Although the deployment of fully automated generative AI tools in finance is slow-paced, the wider deployment of AI in finance could amplify risks already present in financial markets and give rise to new challenges. This chapter provides a sentiment analysis of interest in AI innovations in finance in major Asian economies using Machine Learning (ML) techniques; presents recent developments in AI in finance and the potential use cases and associated benefits of such tools for ASEAN member states; analyses potential risks from a wider use of such tools in ASEAN financial markets; and examines policy developments and discusses associated policy implications.

3.1. Introduction

Advances in artificial intelligence (AI), including the advent of models with content generating abilities, have sparked public interest and increased direct usage of AI tools by non-technical users. For example, generative AI models have the ability to produce ‘original’ content that closely resembles human-generated output. In addition to their advanced computational capabilities, such tools have a user-friendly, accessible conversational interface that has been one of the main drivers of rapid public adoption of such models, particularly given the availability of some free-of-charge. Such developments have marked a breakthrough in the ability of non-technical users to engage with complex technologies in a way that aligns with human thinking.

This chapter discusses potential benefits and risks of the use of AI in finance and presents trends in AI in finance in ASEAN economies, in terms of sentiment and deployment trends, use cases, and implications for financial market participants and policy makers. It includes original analysis, based on a machine learning (ML) model that uses natural language processing techniques, which provides evidence of important and increasing interest around AI in finance in major Asian economies, such as Japan and Korea. Finally, it examines national AI strategies that have been developed in seven ASEAN member states and provides policy considerations and recommendations for ASEAN economies.

3.2. AI in finance: Asian trends

3.2.1. Using AI models to examine interest in AI in finance in major Asian economies

Asian economies have emerged as key hubs for the development of AI, particularly given the role of some Asian economies in the semiconductor markets (e.g. China, Chinese Taipei), while the region has been at the forefront of AI adoption. The central role of the Asian region's AI activity contributes to economic growth and the digital transformation of the region’s economies.

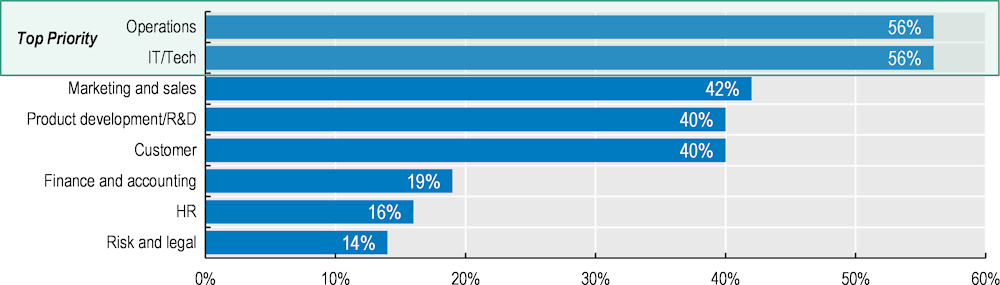

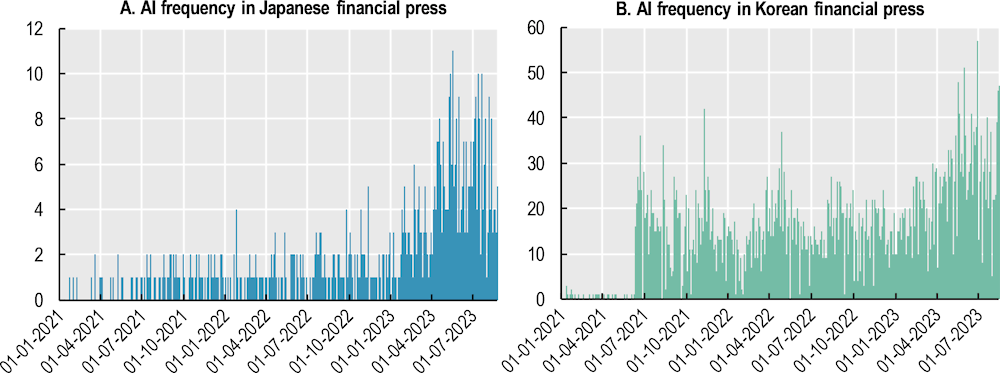

OECD analysis based on an ML-based model that uses Natural Language Processing (NLP) techniques1 provides evidence of important and increasing interest around AI in finance in major Asian economies such as Japan and Korea (Figure 3.1). AI-related topics were covered in almost 2.5% of articles examined in the period January 2021 – October 2023 in the respective samples examined for each of the countries’ financial press.2 A graphic representation of the frequency of words in these samples, in the form of WordClouds, demonstrates the importance of ‘Generative AI’ or ‘GenAI’, ‘investment’, and ‘technology’ in the discussion and reporting around AI in finance (Figure 3.2). In the case of Japan, ‘risk’ is also a prominent word appearing in the financial press, and this could be related to the G7 policy discussions, the Hiroshima Process International Guiding Principles for Organizations Developing Advanced AI Systems and the Hiroshima Process International Code of Conduct for Organizations Developing Advanced AI Systems (G7, 2023[1]; 2023[2]; 2023[3]). The Korean financial press concentrates on the investment perspective of AI (Figure 3.2), which can also be explained by the increasing investment in GenAI applications (Table 3.1) and in other FinTech companies in Korea (Table 3.2).

Figure 3.1. Increasing interest in AI in finance in Japan and Korea (January 2021-October 2023)

Note: Based on a machine learning (ML)-based model that uses Natural Language Processing (NLP) techniques to analyse 44 222 press articles from the Japanese financial press, which included 1 027 AI-related articles; and 436 509 articles from the Korean financial press, which included 9 730 AI-related articles. Covering the period January 2021 – October 2023.

Figure 3.2. AI in finance in Japan and Korea (January 2021-October 2023)

Note: Wordclouds provide a graphic representation of the most frequently used words in the sample of financial press articles studied. Terms prevailing in Japanese news articles include ‘risk’, ‘information’, ‘United States’, ‘China’, and ‘requirement’. Terms prevailing in Korean financial press include ‘investment’, ‘increase’, ‘chart’, ‘array’, ‘analysis’ and ‘stock prices’.

Source: OECD. Based on a ML-based model that uses NLP techniques to analyse 44 222 press articles from the Japanese financial press, which included 1 027 AI-related articles; and 436 509 articles from the Korean financial press, which included 9 730 AI-related articles. Covering the period January 2021 – October 2023.

3.2.2. Evolution of sentiment on AI in Finance in Japan and Korea

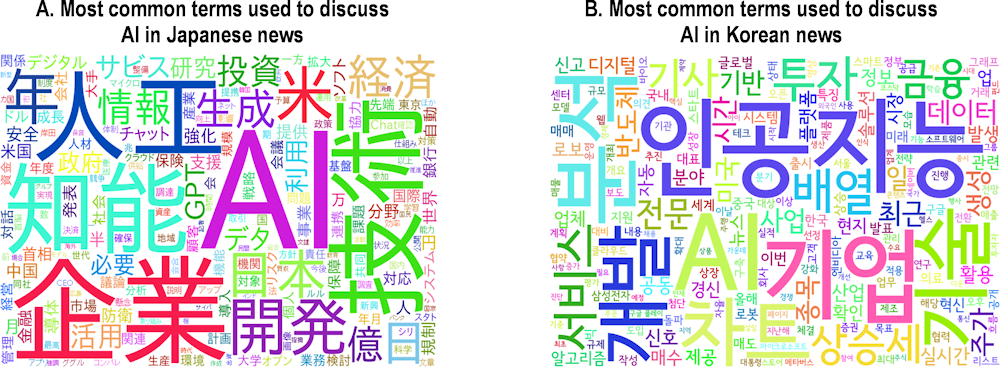

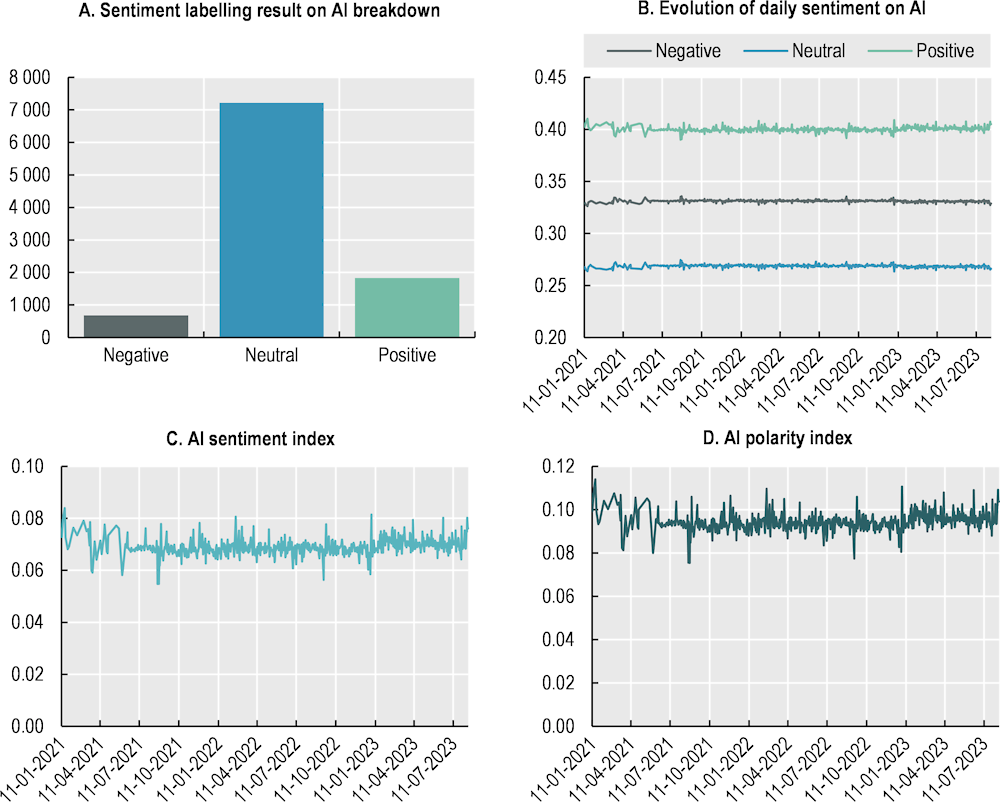

Sentiment analysis performed on the basis of the abovementioned sample of financial press in Japan and Korea provides some evidence of the sentiment towards AI in finance in the countries examined, and indicates the temporal evolution of such sentiment and its direction (Figure 3.3 for Japan and Figure 3.4 for Korea).

The articles focusing on AI in the sample examined were labelled with one of three sentiments (positive, neutral or negative), and the probability of each sentiment was generated with the pretrained NLP model BERT. The sentiment and polarity indices were generated at a daily frequency by integrating those probabilities (see more in the Annex). The sentiment in Japan seems to be mostly neutral in the period January 2021 – October 2023. Over time, the sample exhibits some peaks of negative sentiment which could be attributed to the increase in the discussions around AI and generative AI challenges and risks, inter alia during the G7 meetings in Japan throughout 2023. The same peaks in negative sentiment are reflected in the negative peaks of the polarity index for the sample discussed, although there is great volatility in the sentiment observed. Such large volatility could be explained by periods of increased reporting around AI regulation or policy discussions more broadly in the financial press, as well as by discussions on investment opportunities in AI in Japan3.

Figure 3.3. Sentiment analysis on AI in finance in Japan (January 2021-October 2023)

Note: Based on an ML-based model that uses NLP techniques to analyse 44 222 press articles from the Japanese financial press, which included 1 027 AI-related articles. Polarity and sentiment indices follow similar trends because the equations for the sentiment index and the AI polarity index share the same numerator. The exact equations are given in the Annex.
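The daily index construction described above can be illustrated with a minimal sketch. The exact equations are in the Annex and are not reproduced here, so the formulas below are assumptions chosen only to be consistent with the note that both indices share the same numerator: the numerator is taken to be the summed positive probability minus the summed negative probability, the sentiment index divides it by the number of articles, and the polarity index divides it by the summed positive and negative probabilities. The function name `daily_indices` and the input layout are hypothetical.

```python
from collections import defaultdict
from datetime import date


def daily_indices(articles):
    """Aggregate per-article sentiment probabilities into daily indices.

    `articles` is an iterable of (day, p_pos, p_neu, p_neg) tuples, where the
    three probabilities come from a sentiment classifier (e.g. a BERT model).
    Returns {day: (sentiment_index, polarity_index)}.

    ASSUMPTION: these formulas are illustrative, not the Annex equations.
    """
    totals = defaultdict(lambda: [0.0, 0.0, 0])  # [sum p_pos, sum p_neg, count]
    for day, p_pos, _p_neu, p_neg in articles:
        entry = totals[day]
        entry[0] += p_pos
        entry[1] += p_neg
        entry[2] += 1

    indices = {}
    for day, (pos, neg, count) in sorted(totals.items()):
        numerator = pos - neg  # shared by both indices
        sentiment = numerator / count  # neutral articles pull this towards 0
        polarity = numerator / (pos + neg) if pos + neg > 0 else 0.0
        indices[day] = (sentiment, polarity)
    return indices


if __name__ == "__main__":
    sample = [
        (date(2023, 5, 19), 0.2, 0.5, 0.3),
        (date(2023, 5, 19), 0.1, 0.3, 0.6),
        (date(2023, 5, 20), 0.7, 0.2, 0.1),
    ]
    for day, (sentiment, polarity) in daily_indices(sample).items():
        print(day, round(sentiment, 3), round(polarity, 3))
```

Under these assumed definitions the two series always have the same sign and move together, while the polarity index is larger in absolute value whenever neutral probability mass is present.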

The analysis of the Korean financial press shows a similar prevalence of neutral sentiment. However, the discussion in the Korean financial press seems to focus more on the opportunities of AI than on its challenges, and the overall sentiment expressed is always positive in the period examined, as evidenced by the positive values of the polarity index throughout this period. The low volatility of the sentiment indices also reflects a consistently positive tone in AI articles in the Korean financial press, and indicates that Korean news articles are more positive in tone than Japanese ones.

Figure 3.4. Sentiment analysis on AI in finance in Korea (January 2021-October 2023)

Note: Based on a ML-based model that uses NLP techniques to analyse 436 509 articles from the Korean financial press, which included 9 730 AI-related articles.

Overall, the results of the sentiment analysis for Japan and Korea may indicate a different tone in the discussion around AI in finance, which may be driven by the multilateral policy discussions that took place in Japan over 2023 during the G7 Presidency. A more negative sentiment may be related to the risk implications from the use of AI and the policy discussions on mitigating such risks. On the other hand, Korean news articles tend to focus on more neutral to positive implications of AI, such as investment opportunities and AI applications in finance and beyond.

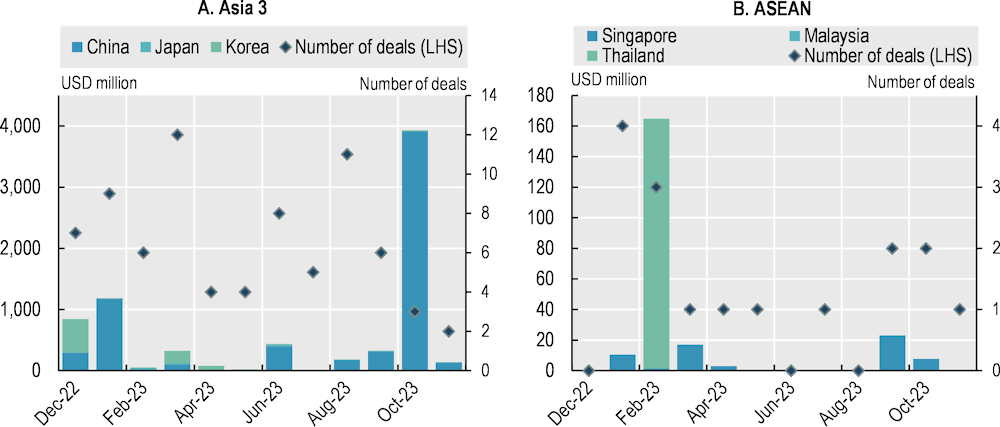

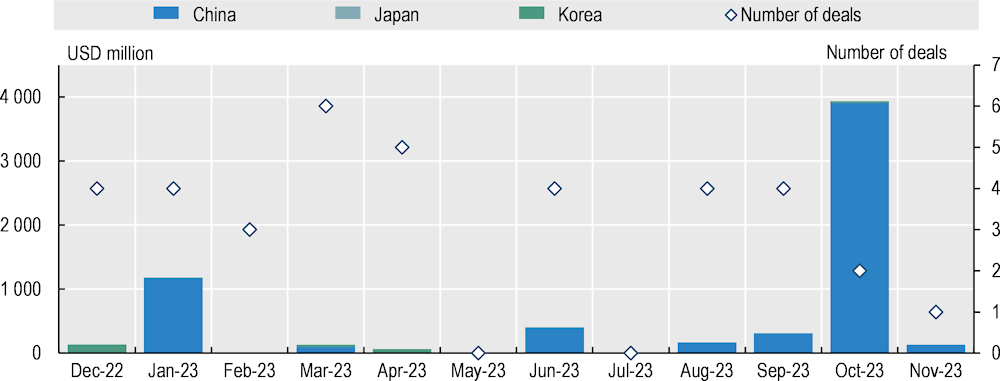

3.2.3. Investment into AI and financial sector acquisitions of AI companies in Asia

Analysis of mergers and acquisitions (M&A) of AI-related companies by financial service providers shows activity in Asia as well as in ASEAN member states over the year 2023 (Figure 3.5). Given the enormous amounts of compute power and data required to develop and train AI models, banks and financial institutions tend to acquire companies developing AI-based models, particularly those with a first mover advantage or with the resources available to undertake design, training and maintenance of models. Although the number of M&A deals in both Asian and ASEAN markets fluctuated during the year, activity was recorded right after the announcement of ChatGPT in November 2022 and following the ChatGPT model updates announced in February and July 2023. Activity in ASEAN is concentrated in three member states: Malaysia, Singapore, and Thailand.

Looking at the latest trends, the volume of generative AI M&A deals involving financial entities in Japan, Korea, and China gradually increased between December 2022 and March 2023. In ASEAN, a peak is observed in February 2023 when a Thai firm acquired a holding company of semiconductor manufacturing services in Singapore, representing the largest transaction in this sector in the region (The Business Times, 2023[4]). Activity in Asia is concentrated in three countries, Japan, Korea, and China, which together account for almost 87% of total deal volume. Singapore has also recorded important activity, which amounted to 11.6% of the transaction volume.

Figure 3.5. M&A deals related to generative AI in finance in selected Asian jurisdictions

Note: The three Asian countries are Japan, Korea and China.

Source: LSEG and OECD staff compilation.

Figure 3.6. M&A deals related to advanced semiconductors in selected Asian jurisdictions

Note: The three Asian countries are Japan, Korea and China.

Source: LSEG and OECD staff compilation.

Large Asian countries also recorded semiconductor-related deals, with chips being a critical component of AI model development (Section 3.3.1). Since chip production is mostly led by three Asian countries, Japan, Korea, and China (World Population Review, 2023[5]), an important number of semiconductor M&A transactions were recorded in these countries over the past year. In particular, 97% of semiconductor deals in Asia involved Japanese, Korean or Chinese entities (Figure 3.6).

3.3. Recent developments in AI: the advent of Generative Artificial Intelligence

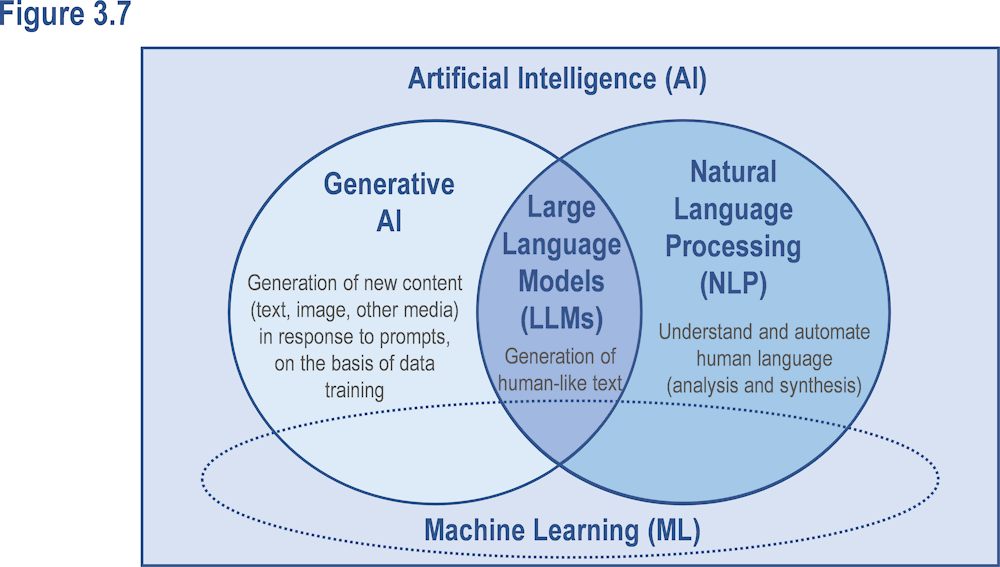

Generative Artificial Intelligence (GenAI) is a subset of AI comprising models that generate new content in response to user-based inputs or prompts by using neural networks and deep learning (OECD, 2023[6]) (Figure 3.7). Examples of output include text (produced from LLMs like ChatGPT), visual outputs (Synthesia), audio (Speechify), and code (GitHub Copilot). These outputs are informed by models built on neural networks such as Generative Adversarial Networks (GANs),4 which process and transform input data based on pre-processed data collected from massive, unstructured datasets.5

Figure 3.7. Generative AI

Note: indicative, non-exhaustive representation of AI domains.

Source: OECD (2023[7]), Generative artificial intelligence in finance, https://doi.org/10.1787/ac7149cc-en.

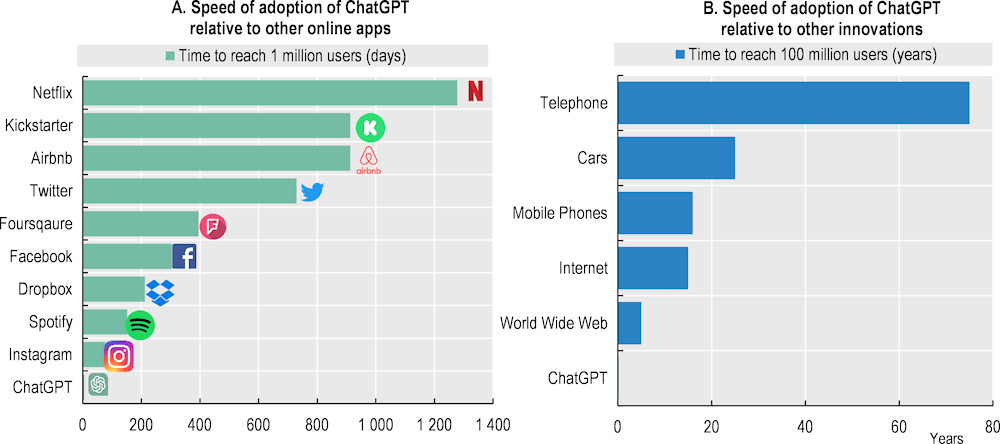

GenAI models have garnered widespread popularity as a result of their wide array of potential use cases and their ease of use. This is particularly true of LLMs, which have attracted particular attention as a subset of the wider AI advances and tools. These include models such as ChatGPT (OpenAI), Bard (Google), Bing Chat (Microsoft), Claude (Anthropic) and Ernie Bot (Baidu). The conversational character of such models, which brings them closer to human cognition than any previous AI model, coupled with their computational power, has to a large extent driven their popularity with the general public since the release of ChatGPT in November 2022.

3.3.1. Drivers of fast AI adoption in non-finance applications

AI has developed quickly over the last decade; indicatively, more advances in deep learning have been made in the last ten years alone compared to the last forty years (Kotu and Deshpande, 2019[8]). Such advances are due to three key drivers: significant progress in computational power (GPU and TPU), the rapid growth of available online data, and improved cost efficiency for underlying data processing capacity (Ahmed et al., 2017[9]; OECD, 2021[10]). The exponential growth of datasets is a result of both increasing reliance on internet and online data as well as progress in synthetic data generation, which makes it simpler to produce the volume of data needed to train AI models. Another driver is increased private funding for GenAI, with USD 2.6 billion raised across 110 deals in 2022 (OECD, 2023[7]).

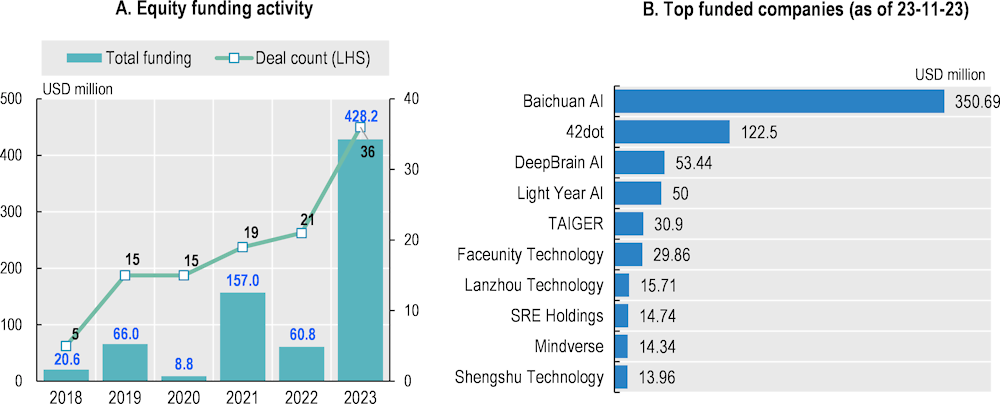

Private and public investment flows into projects involving the development of AI tools have been growing over the past years in Asia, including across ASEAN member states. In 2023, government funding for generative AI projects in Asia Pacific supported almost two-thirds of the regional organisations looking into the potential use cases of generative AI (IDC, 2023[11]). On the private investment side, the total value of private equity funding for GenAI projects in Asia stood at USD 428.2 million as of 30 November 2023, recording a significant increase relative to previous years (Figure 3.8). A similar increase is observed in the corresponding number of transactions underlying this funding. In terms of the number of venture capital (VC) investments, AI accounted for a very small percentage (3%) of total VC investments in Asia in 2012, reaching 23% of all VC investments by H1 2023 (Monetary Authority of Singapore, 2023[12]). Important investments are also flowing into the semiconductor industry of the Asian region, with Japan, Korea, China and Chinese Taipei accounting for around 41% of global market share in 2021 and 36% of global R&D expenditure (as a percent of sales) (SIA, 2023[13]). Those investments, which relate to semiconductor subproduct sectors such as chips, are to a large extent driven by demand and urgency in sourcing the logic chips used to build large AI models (Section 3.2.3).

Figure 3.8. Private funding flows to generative AI and generative AI unicorns in Asia

Source: CB Insights and OECD staff compilation.

AI and LLMs have gained increased popularity and demand from the public given that they are designed to be user friendly, accessible, and intuitive, while also having significant technical capabilities. Unlike other types of AI, like deep neural or ML models, GenAI models produce outputs that are easy to grasp by the average user without any specific technical knowledge and resonate with human cognition, thereby driving fast adoption (Figure 3.9). The cost-free availability of online LLMs such as ChatGPT and their conversational abilities contribute to this demand trend.

Figure 3.9. Speed of adoption of some generative AI applications

Source: OECD (2023[7]), Generative artificial intelligence in finance, https://doi.org/10.1787/ac7149cc-en; based on Statista and OECD calculations.

3.3.2. Slow-paced deployment of advanced AI models in finance

Despite the popularity of AI, the implementation of advanced AI solutions such as generative AI models that involve full end-to-end automation in financial markets remains in the testing and development phase (OECD, 2023[7]). So far, such tools are mostly deployed in process automation, which is designed to enhance productivity at both the back-office (operations) and middle-office (compliance and risk management) of financial service providers. These tasks include content generation, summarisation of documents used by financial advisors, and human resource processes. As AI advances, its deployment in front-end use cases and its increased use in the back-end are expected to accelerate.

Part of the reason why advanced AI tools such as generative AI models have slow uptake in finance is that financial market activity is highly regulated. Risk management and model governance rules and regulations are already in place, and the technology neutral approach of regulation renders them applicable irrespective of the type of technology used (OECD, 2023[7]). As such, the more advanced AI techniques may not be fully compatible with regulatory frameworks that try to ensure market integrity, consumer protection, financial stability and require risk management, model governance, transparency, and other obligations.

Given the important costs of developing and training large AI models such as LLMs, in most cases the use of such models by financial market participants will involve the outsourcing of a model that is then tailored to the specific needs of the user firm. Such a model will then be trained with private proprietary data in ‘offline’ environments, for example within the private cloud of the firm.

Furthermore, due to the high risks of security and data breaches, the use of public AI tools is most likely incompatible with the data protection frameworks in place. The use of open-source or off-the-shelf reusable models (e.g. foundation models6) can pose a significant risk of breaches of financial market participants’ sensitive and confidential client data. Additionally, some AI models, such as LLMs, are known for a lack of transparency in their decision-making processes. This can be problematic for financial markets, where transparency is crucial for regulatory compliance and trust (Section 3.5.4). As such, financial market participants that use AI typically deploy restricted and bespoke LLMs that operate within the firewalls or in the private cloud of the firm in order to ensure data sovereignty and security.7

Also, given the legal responsibility and fiduciary duty of financial service providers to act in the best interests of their clients, financial service providers must work to protect clients from the risks of misleading outputs, misinformation, and other risks posed by advanced AI tools (e.g. deceptive model outcomes and deepfakes; see Section 3.5.4). Risks related to the use of advanced AI models discussed in this chapter may be an additional impediment to the wider use of such tools by the financial sector at this stage. Incompatibilities with applicable rules and requirements, such as the ones posed by the lack of explainability of model outputs, may further impede their widespread usage in finance.

Smaller financial service providers will likely face greater capacity-related challenges in the implementation of AI tools, including advanced AI models such as LLMs. Although large financial institutions may have challenges related to their existing governance structures and legacy infrastructure, smaller players may not have the financial resources and the capacity to manage datasets needed to implement large AI models. For instance, successful deployment of AI depends on both the availability and quality of data. Smaller financial institutions may not have sufficient data management structures in place to support the vast amounts of unstructured data they own for the purpose of AI use. While the above risks regarding training data are salient for supervised ML models in finance, AI models such as LLMs that are fully autonomous and self-supervising do not need labelled training data. This is because such models can identify complex relationships and learn from unstructured data. Furthermore, effectively using AI tools to keep up with modern work trends will require AI skills across the board. Accordingly, AI skills should be present at all levels and functions that use AI for service provision, which may require additional organisational manoeuvring.

One example of the phased introduction of AI in finance is the use of AI in trading: instead of completely automating the entire trading process, the use of AI is limited to specific tasks, mostly to analyse the large, noisy and complex datasets at hand to identify insights for trading decisions. It is possible that AI-based algorithms may eventually be fully automated and equipped to adjust their own decisions without requiring human intervention. However, the use of AI in trading can nevertheless exacerbate the risk of prohibited or illegal trading strategies such as spoofing and front-running (OECD, 2021[10]).

Financial market participants are currently experimenting with customised, offline or private versions of LLMs and other advanced AI tools (OECD, 2023[7]). Presently, these models use public data to primarily act as sources of information and as tools for internal processes and operations. As AI continues to advance, it can be anticipated that financial market participants may implement new use cases of these models emerging from experimentation or third-party provision. The wider adoption of AI mechanisms may expose financial service providers, users and the markets to important risks, warranting policy discussion and possible action.

3.3.3. Direct vs. indirect scope of use of AI in finance

Different types of AI models interact with financial service providers and/or the end customer in different ways, and each level of interaction comes with a different level of associated risks. These different levels may also underpin the slow-paced and phased deployment of AI in finance, which is currently used primarily to assist operations as opposed to full automation and direct interaction of the model with the financial consumer.

AI models, particularly those with generative capabilities, can be employed to assist customers without directly interacting with them. For instance, they can be used to generate portfolio allocation recommendations that are customised to a customer's financial profile. Such output can be used as input to inform the financial service provider in the delivery of its recommendation. But these models can also be used more directly, to provide personalised recommendations to customers and/or to execute suggested recommendations without any human involvement. The latter case carries increased risks, and anecdotal evidence from the financial services industry indicates limited full end-to-end deployment of AI tools at their current stage of development.

Risks related to the use of AI in finance increase given that users (whether financial service providers or end customers) may not be fully aware of the limitations of AI models. These risks are even more pronounced if and when the model interacts directly with customers and executes its own recommendations in a fully automated manner without any ‘human-in-the-loop’. Such direct scope of use therefore poses significant risks to both customers and the service provider. It could be anticipated, however, that the use of AI in finance will in the future evolve to include such direct interaction of financial consumers with the model, and to that end trust and safety of such applications will be of paramount importance.

3.4. Use cases of AI in finance and associated benefits for ASEAN countries

AI tools are used across a vast array of use cases in financial markets, including multiple parts of the value chain and multiple verticals, such as asset management (e.g. stock picking; risk management and operations); algorithmic and high-frequency trading (e.g. liquidity management and execution with minimal impact); retail and corporate banking (e.g. onboarding, creditworthiness analysis, customer support) and payment institutions (e.g. AML/CFT, fraud detection) (OECD, 2021[10]). The performance of such AI-based services is expected to be improved through the use of AI tools, particularly for areas such as sales and marketing, customer support and operations, including data/information management, as well as translation, coding and software development.

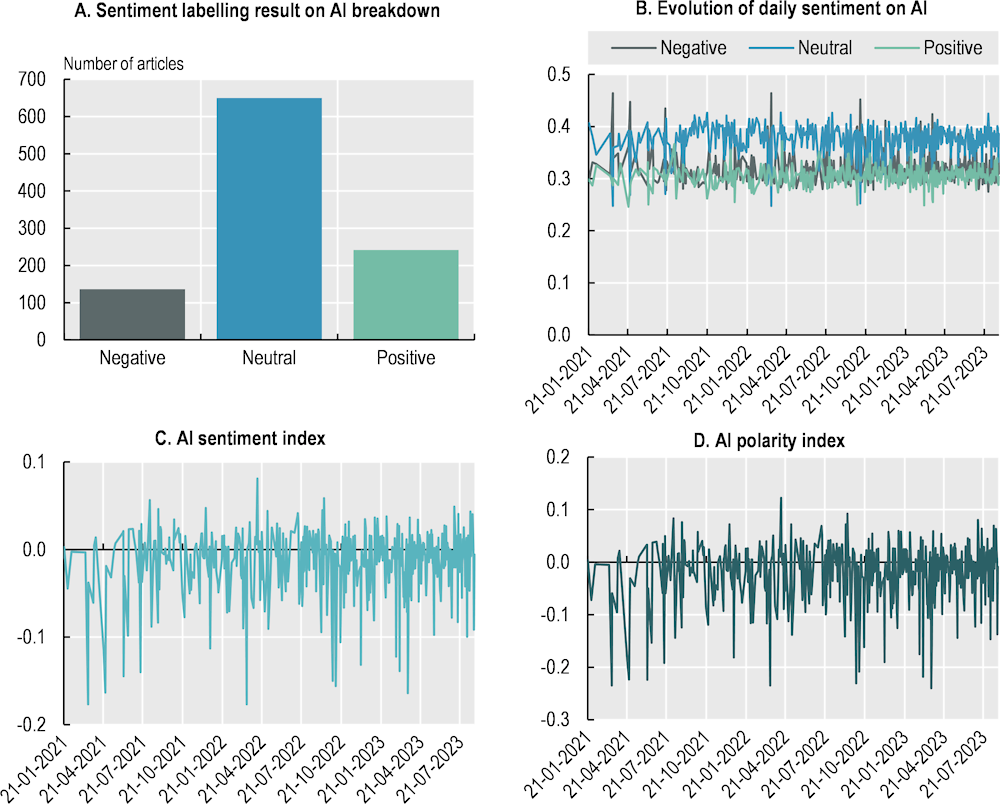

Operations and back-office functions are among the most widely reported use cases of AI in finance today (Figure 3.10), with the potential to increase both the efficiency and accuracy of operational workflows and enhance performance and overall productivity. This can be an important benefit for financial market participants in ASEAN countries, as it can allow for cost reduction given that these AI tools can replace manually intensive and repetitive P&L and other reconciliations with less expensive and more efficient automated ones. To the extent that such cost savings are passed on to the end customer, these can alleviate any potential cost burden associated with formal financial services.

Figure 3.10. Generative AI use cases in finance, 2023

In % of industry survey respondents

AI, and generative forms of AI in particular, can also be used to make the analysis and reporting of firm data more human-like for both internal and external purposes, facilitating regulatory reporting and compliance by small financial services firms in particular. Such purposes include customer service analytics, human resource tasks (e.g. generation of summaries of management reviews), translation or summarisation of contracts, or other reporting. AI models with generative capabilities can also enhance individualised communication for customer-facing purposes, including tasks related to product creation, marketing and sales, and improved customer support.

AI-based anomaly detection tools can also improve both AML/CFT processes and fraud detection across various types of financial market participants, especially in the area of payments, with an important potential contribution to improving trust and confidence in the formal economy for ASEAN consumers. This, in turn, can increase customers' trust in and satisfaction with the formal financial system and their willingness to participate in the formal economy. These models can automatically identify outliers that deviate from expected data points and behaviour within given datasets (Kotu and Deshpande, 2019[15]), thereby potentially indicating fraudulent activity. They can also be beneficial for automating client onboarding, making KYC checks for banking clients more efficient, and improving compliance functions for financial market participants. The performance of such tasks can be further augmented by generative forms of AI, which can use company data to generate reporting and other necessary outputs needed to facilitate compliance.
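As an illustration of the outlier identification described above, the following is a minimal sketch of a simple statistical anomaly detector. It is not the method used by any particular institution: it swaps in a modified z-score based on the median absolute deviation (MAD) as a stand-in for the more complex ML models the text refers to. The function name `flag_outliers`, the sample amounts and the threshold of 3.5 are illustrative assumptions.

```python
from statistics import median


def flag_outliers(amounts, threshold=3.5):
    """Return the indices of values that deviate strongly from the median.

    Uses the modified z-score 0.6745 * |x - median| / MAD, which is robust to
    the very outliers it is trying to find. The threshold is an assumed,
    commonly used default, not a regulatory or industry standard.
    """
    centre = median(amounts)
    mad = median(abs(x - centre) for x in amounts)
    if mad == 0:
        # More than half of the values are identical; the score is undefined,
        # so this sketch conservatively flags nothing.
        return []
    return [
        index
        for index, value in enumerate(amounts)
        if 0.6745 * abs(value - centre) / mad > threshold
    ]


if __name__ == "__main__":
    # Hypothetical payment amounts for one customer; one is far out of pattern.
    payments = [20, 22, 19, 21, 23, 20, 500, 18]
    print(flag_outliers(payments))  # [6]
```

A flagged index marks a transaction for review rather than proving fraud; in practice such a score would be one input among many, combined with human oversight.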

AI is also used to enhance risk management for asset managers and institutional investors. AI-based risk models have the capacity to quickly assess portfolio performance under various market and economic scenarios by considering a range of consistently monitored risk factors. Investment strategies, such as quantitative strategies or fundamental analysis of systematic trading, have always relied heavily on structured data. However, AI-based models use raw or unstructured/semi-structured data to give investors an informational advantage. This data-driven approach enhances sentiment analysis and provides additional insights using pattern recognition (OECD, 2021[10]).

ML models in particular inform decision-making for portfolio allocation and/or stock selection by using pattern recognition and NLP8 to make predictions (Table 3.1). Such models have recently gained significant attention due to the ability of models like neural networks to capture non-linear relationships between stock characteristics and future returns by learning from data. This has opened up the potential for including ML‑based stock-selection strategies in informing portfolio construction. Academic studies examine whether or not such ML-based strategies can generate “alpha”, a measure of investment performance, with mixed results (Freyberger et al., 2020[16]; Moritz and Zimmermann, 2016[17]). Interestingly, ML-based strategies with longer time horizons tend to focus on slower signals and rely more on traditional asset pricing factors, which can lead to poorer performance compared to short-term strategies (Blitz et al., 2023[18]).

AI models in lending could reduce the cost of credit underwriting and facilitate the extension of credit to ‘thin file’ clients, potentially promoting financial inclusion (OECD, 2021[10]). The use of AI can create efficiencies in information management and data processing for the assessment of creditworthiness of prospective borrowers, enhance the underwriting decision-making process and improve the lending portfolio management. It can also allow for the provision of credit ratings to ‘unscored’ clients with limited credit history, supporting the financing of the real economy (e.g. SMEs) and potentially promoting financial inclusion of underbanked populations. As with any AI application in finance, the potential benefits come also with important risks, such as possible discrimination and bias; and with challenges, such as the difficulty in interpreting the model’s output (explainability) (Section 3.5.1).

The most significant opportunities for GenAI are expected to lie in customer-facing financial services and in the delivery of new, highly customised products. ‘Traditional’ AI classes were used to power chatbots and automated call centres for customer support (Weizenbaum, 1966[19]). In comparison, GenAI introduces a conversational element that closely resembles human interaction. GenAI is also expected to support the production and delivery of new products, from investment advice to robo-advisors, by using product-feature optimisation and improving targeted sales and marketing (Table 3.2). GenAI can also help brokerage firms and other investment advisors tailor their recommendations efficiently to each individual customer, delivered in a human‑like and conversational manner, through improved customer segmentation.

Table 3.1. Select types of AI applications by Asian financial services firms

| Name | Segment | Service | Description |
|---|---|---|---|
| TROVATA | FinTech | Treasury tool | Generative AI Finance & Treasury Tool |
| Kakaobank | FinTech | R&D center | Explaining the basis for AI-made judgments, the decision-making process and the results from the user's perspective |
| Shinhan Bank | Commercial Bank | Financial assistant | AI recognition of customer answers, real-time AI consultation analysis, tablet handwriting verification, and full-text subtitle implementation |
| Kookmin Bank | Commercial Bank | Financial assistant | The AI model automatically generates the overall financial status and analysis, and system judgment results, for the corporate credit officer in charge |
| Hana Bank | Commercial Bank | Financial advisor assistant | Building AI chatbot and callbot services for banks and cards based on NLP engines, enabling AI to quickly determine customer requests and suggest ways to respond directly or process them on their own |
| Nonghyup Bank | Commercial Bank | Sales and marketing, information management | 12 different AI channels, including customer service, consultation support, quality control, and big data analysis |
| MUFG Bank | Commercial Bank | Financial assistant | Using AI chatbots to increase productivity in responding to customers’ inquiries and improve their satisfaction |
| SMBC Bank | Commercial Bank | Financial assistant | Using the chatbot as a personal teller service where customers can make inquiries via a messaging-style interface |
| MIZUHO Bank | Commercial Bank | Financial assistant | Using Fujitsu’s generative AI technology to streamline the maintenance and development of its systems |
| Daiwa Securities | Corporate and Investment Banking | Financial assistant, information management | Free use of ChatGPT by employees to identify financial products, collect information and draft text |
| VietABank | Commercial Bank | Financial assistant, information management | Using AI for foreign currency transactions, personal credit, and digital banking, with transaction monitoring to detect fraud and risk through VPDirect |
| Vietcombank | Commercial Bank | Financial assistant, information management | Collaborated with FPT Smart Cloud Company to develop VCB Digibot, a customer care chatbot platform |

Note: Non-exhaustive and based on reported information by financial market participants.

Source: OECD based on web research.

However, the greatest short-term impact of AI for finance could come in the areas of financial analysis assistance and communication, especially in light of the proliferation of platformisation and embedded finance. AI tools’ ability to make predictions and produce information is critical for product support. Recommendation engines, a class of ML techniques, can predict user preference, especially when bolstered by methods like content-based filtering (Kotu and Deshpande, 2019[20]). AI’s ability to generate content complements this by tailoring sales strategies and marketing campaigns to individual customers, thereby potentially promoting financial inclusion if the customisation aims at that objective.
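As a minimal illustrative sketch of content-based filtering, products can be ranked by the cosine similarity between their features and a profile built from products a customer already holds. The product names, feature scores and the `recommend` helper below are hypothetical assumptions made for illustration and are not drawn from any firm's system.

```python
import math

# Hypothetical catalogue. Feature order (an assumption for illustration):
# [equity exposure, fixed-income exposure, ESG focus, short horizon]
PRODUCTS = {
    "equity_fund": [1.0, 0.0, 0.0, 0.0],
    "bond_fund": [0.0, 1.0, 0.0, 0.0],
    "esg_equity_fund": [0.9, 0.0, 1.0, 0.0],
    "money_market": [0.0, 0.6, 0.0, 1.0],
    "balanced_fund": [0.5, 0.5, 0.0, 0.0],
}


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(products, held, top_n=2):
    """Rank products the customer does not hold by similarity to those held."""
    held_vectors = [products[name] for name in held]
    # The customer profile is the average feature vector of held products.
    profile = [sum(col) / len(held_vectors) for col in zip(*held_vectors)]
    scores = {
        name: cosine(profile, vector)
        for name, vector in products.items()
        if name not in held
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


suggestions = recommend(PRODUCTS, held=["equity_fund"])
print(suggestions)
```

In practice the features would be derived from product documentation or customer behaviour rather than set by hand, and any such ranking would still be subject to suitability requirements.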

Coding represents another highly impactful domain for generative AI, which can support software development for a wide array of financial services and products. It can serve as a dedicated coding assistant and generate new code, troubleshoot scripts, offer solutions to coding errors, and test code. Despite its significant potential and relatively low associated risks, this use case is less explored than others. Similar considerations apply to the use of AI for translation in support of customisation and communication around financial services and products. Relatedly, AI can produce large-scale synthetic data customised for specific market scenarios. Synthetic data is artificial data created from an original dataset and a model that is trained to mimic its characteristics and structure. It offers potential advantages in terms of privacy, cost and fairness (EDPS, 2021[21]). In the financial sector, the most pertinent use case is generating simulated financial market data for scenario analysis and creating datasets to test, validate and calibrate AI-based models.
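The idea of synthetic data can be sketched in a deliberately simplified form: a model is fitted to an original dataset and then sampled to produce artificial observations with similar statistical characteristics. The sketch below assumes a hypothetical series of daily returns and uses a plain normal distribution as the 'model'; real applications would typically rely on far richer generative models, and all names here are illustrative.

```python
import random
import statistics

# Hypothetical original dataset of daily returns (illustrative values only).
ORIGINAL_RETURNS = [0.012, -0.008, 0.004, -0.015, 0.009, 0.001, -0.003, 0.007]


def fit_return_model(returns):
    """'Train' the simplest possible model: the sample mean and standard deviation."""
    return statistics.mean(returns), statistics.stdev(returns)


def generate_synthetic_returns(model, n, seed=42):
    """Draw n artificial returns from a normal distribution with the fitted parameters."""
    mean, stdev = model
    rng = random.Random(seed)  # seeded so that the scenario set is reproducible
    return [rng.gauss(mean, stdev) for _ in range(n)]


model = fit_return_model(ORIGINAL_RETURNS)
synthetic = generate_synthetic_returns(model, n=5000)

print("original mean/stdev: ", model)
print("synthetic mean/stdev:", (statistics.mean(synthetic), statistics.stdev(synthetic)))
```

A normal distribution ignores features such as fat tails and volatility clustering, so this sketch only illustrates the fit-then-sample principle, not a production-grade scenario generator.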

Finally, AI can support sustainable financing and ESG investing, particularly through NLP for real-time ESG assessments based on firms’ communications, like corporate social responsibility reports (ESMA, 2023[22]). In investment strategies, AI tools are mainly deployed to process unstructured and complex ESG-related data that typically require more sophisticated analysis (Papenbrock, GmbH and Ashley, 2022[23]). As ESG continues to gain prominence, asset managers are also advocating for ethical AI use by companies they invest in. For example, the world’s largest sovereign wealth fund, located in Norway, is introducing AI use standards for its portfolio companies to align with its responsible investment framework and ESG commitments (FT, 2013[24]).

Table 3.2. Select AI companies offering AI and generative AI applications for finance in Asia

| Name | Service | Description |
|---|---|---|
| Active.Ai | Customer support | Using AI to provide conversational finance and banking services and help financial companies integrate virtual intelligence assistants into their services |
| Boltzbit | Synthetic data generation and analysis | Offers database linking, portfolio optimization and enhanced prospect profiling through the generation of synthetic financial data. |
| KryptoGO | Financial analysis | Fast identity verification, risk assessment, blockchain address analysis, and periodic reviews, ensuring your business remains highly compliant and secure |
| QRAFT | Synthetic data generation and analysis | Easy access to the translated and summarized overseas disclosures with its pioneer AI-driven investment solutions |
| INNOFIN | Financial analysis | Data collection, refinement, and preprocessing the scattered financial data with AI and big data technologies |
| ALCHEMI LAB | Synthetic data generation and analysis | AI-Guided trading solution that visually displays the risks associated with each trade, empowering traders with insight, based on asset allocation |
| AI ZEN | Financial Advisory | AI-based banking services for financial institutions easy access to data by securing more customers with better automatic financial decision-making |
| Syfe | Financial Advisory | With its Robo-Advisor by accessing diversified, institutional-grade funds and optimizing the portfolio’s equity component to outperform the markets over time |
| bambu | Financial Advisory | SaaS-based Robo-advisor with full transactional capabilities, customizable to your products, portfolios, personalized branding |
| KRISTAL | Financial Advisory | Building a customized financial plan tailored to the investment needs and managing the funds across various asset classes, investment styles, and geographies |
| AUTOWEALTH | Financial Advisory | Institutional grade Robo-advisory available to retail users and the WealthTech automating the investment plans by offering professionally managed portfolios to cater to the investment needs |
| WEINVEST | Wealth Management | Quant strategies augmented with AI/ML capabilities of digital wealth management and asset management |
| StashAway | Wealth Management | Easy investment on autopilot managed by experts or customizing the portfolio by earning returns with fair and transparent fees and unlimited transfers and withdrawals |
| WINKSTONE | Wealth management | By existing financial institutions to AI/ML-based models, public and actual transaction data other than credit data used for financial benefits |
| ADVANCE.AI | Direct Lending / Credit Scoring | Managing risk efficiently across the industry by preventing fraud and automating workflow to reduce the cost |
| credolab | Direct Lending / Credit Scoring | Scoring Risk, detecting fraud, improving marketing for better decisions with advanced behavioral analytics |
| LenddoEFL | Direct Lending / Credit Scoring | Offering alternative credit scores based on the consumer's digital footprint, from social media posts to geotagged photos, and behavioral data derived from psychometric tests |
| TurnKey Lender | Direct Lending / Credit Scoring | Providing a B2B AI-powered lending automation platform, and decision management solutions and services |
| VALIDUS | Direct Lending / Credit Scoring | Supervised and unsupervised machine learning can predict potentially fraudulent and anomalous transactions, and protect customer databases to provide SME working capital loan services |
| CrediLinq.Ai | Direct Lending / Credit Scoring | Disrupting credit underwriting for businesses using embedded finance and Credit-as-a-Service |
| funding societies | Direct Lending / Credit Scoring | Pairing AI processes with a reliable funding option to allow businesses to focus on expanding their roots |
| aspire SYSTEMS | Direct Lending / Credit Scoring | Businesses assessments for the effectiveness of different service approaches, optimize resource allocation, by simulating real-world scenarios to identify potential challenges and opportunities, which ultimately leads to more informed and robust service strategies |
| SILOT | Direct Lending / Credit Scoring | AI platform for intelligent financial decisions by enabling banking software suite and merchant banking solutions |
| cynopsis.co | Regulatory and Compliance | Offering the RegTech solutions designed to automate KYC/AML processes |
| Handshakes | Regulatory and Compliance | Performing entity search and gain useful insights to support your due diligence with data analytics solutions |
| SILENT EIGHT | Regulatory and Compliance | Leveraging AI to create custom compliance models for the world's leading financial institutions to combat money laundering and terrorist financing |
| SHIELD | Anti-Fraud | Provides software security solutions which is related to the online fraud management solutions enabling enterprises to manage risk from fraudulent payments and accounts |
| URBANZOOM | Quantitative & Asset Management | AI-enabled research tool for homeowners, buyers, sellers, landlords and tenants |
| value 3 | Quantitative & Asset Management | B2B FinTech offering Capital Markets AI-platform for independent, predictive, and automated credit ratings, research and analytics |

Note: Non-exhaustive list.

Source: OECD compilation based on public sources.

3.5. Risks and challenges of AI applications in finance

The use of AI tools in finance has the potential to amplify risks identified in the use of more ‘traditional’ AI mechanisms in financial markets, while it also gives rise to novel risks (e.g. related to the authenticity of outputs of LLMs) (OECD, 2023[7]). This section identifies such risks, focusing on those most pertinent to the idiosyncrasies of ASEAN economies.

3.5.1. Lack of explainability

Explainability can be described as the capacity to understand or clarify how AI-based models arrive at decisions; its absence can increase risks and incompatibilities for financial applications. While such risks already existed for ML models and other AI techniques, they are significantly amplified when more advanced AI models are used. Recent advances in generative forms of AI demonstrate their ability to generate highly complex, non-linear, and multidimensional outputs, which, while providing potential benefits, makes it harder for humans to understand or interpret their decision-making processes. This is made more difficult by the dynamic nature of AI models, which adapt based on feedback in a dynamic, autonomous manner.9

The significant lack of explainability for AI decisions makes it harder to mitigate risks associated with their use. Limited interpretability of AI models makes it harder to identify instances where inappropriate or unsuitable data is being used for AI-based applications in finance. This magnifies the risks of bias and discrimination in the provision of financial services, which is particularly pertinent in countries with ethnic minority groups, as is the case in some ASEAN countries (UN, 2012[25]). It also creates challenges when it comes to adjusting investment or trading strategies, due to the nonlinear nature of the model or the lack of clarity around the parameters that influenced the model’s outcome. Overall, lack of explainability of AI‑based models can also lead to low levels of trust in AI-assisted financial service provision among customers and, in particular, market participants, limiting its potential beneficial impact.
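One family of techniques sometimes used to probe an otherwise opaque model is permutation importance: each input is shuffled in turn and the resulting drop in accuracy indicates how much the model relies on it. The following is a minimal sketch under stated assumptions; the `opaque_model` function, its income threshold and the sample data are hypothetical stand-ins for a real credit model, which would be far more complex.

```python
import random


def opaque_model(row):
    """Hypothetical stand-in for a black-box credit model.

    It approves when income exceeds a threshold and ignores account age.
    """
    income, account_age_years = row
    return income > 50


def accuracy(model, rows, labels):
    """Share of rows for which the model output matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature_names, repeats=20, seed=0):
    """Average drop in accuracy when one feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importance = {}
    for j, name in enumerate(feature_names):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by its shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importance[name] = sum(drops) / repeats
    return importance


# Illustrative data: (income, account age in years); labels are the model's own decisions.
ROWS = [(20, 1), (35, 7), (48, 3), (55, 2), (70, 9), (90, 4)]
LABELS = [opaque_model(r) for r in ROWS]

importances = permutation_importance(
    opaque_model, ROWS, LABELS, ["income", "account_age_years"]
)
print(importances)
```

Such diagnostics indicate which inputs matter to a model but do not by themselves explain individual decisions, so they complement rather than resolve the explainability concerns discussed in this section.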

3.5.2. Risk of bias and discrimination

The risk of bias and discrimination in the outcomes of algorithms has been well known since machine learning was first used in finance, given the quantity of data required to train AI-based models. For example, if data containing information on protected categories, like race or gender, is used as input for an AI-based model, it can lead to biased outputs. Such results are not necessarily intentional: algorithms may analyse seemingly neutral data points but nevertheless use them as proxies for protected characteristics like race or gender, or infer these characteristics from the datasets. This can lead to biased decisions that may circumvent existing laws against discrimination. Bias can also be intentional when datasets used to train the model are manipulated to deliberately exclude certain groups of consumers.

A pertinent example of the risk of bias and discrimination that could be relevant for ASEAN countries lies in credit allocation and discriminatory lending practices when creditworthiness is assessed using AI-based models and alternative data (OECD, 2021[10]). When such models are used exclusively for credit allocation decisions, there is a risk of disparate impact in credit outcomes, i.e. different outcomes for different groups of people. It can also become more challenging to identify instances of discrimination due to the machine’s lack of transparency and the limited explainability of AI models, which is exacerbated in the case of generative forms of AI. Such lack of explainability also makes it impossible to justify the outcomes to declined prospective borrowers, a legal requirement in certain jurisdictions. Consumers are also limited in their ability to identify and contest unfair credit decisions. Even when the decision is fair, it is difficult for prospective borrowers to understand how their credit outcomes can be improved in a future request for credit.
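Disparate impact in credit outcomes can be monitored with simple summary statistics. The sketch below, which assumes a hypothetical list of (group, approved) decisions, computes approval rates by group and the ratio of the lowest to the highest rate; the group labels, data and function names are illustrative, and the level at which such a ratio becomes a concern is a policy choice that is not specified here.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per group from (group, approved) pairs."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: approved / total for group, (approved, total) in counts.items()}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical outcomes: group A has 8 of 10 approved, group B has 5 of 10.
DECISIONS = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(DECISIONS)
ratio = disparate_impact_ratio(DECISIONS)
print(rates, ratio)
```

A low ratio signals a difference in outcomes between groups but does not by itself establish discrimination, since it does not control for legitimate differences in credit risk.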

Advanced AI models can be trained on any data source available online, intensifying the risk of discrimination as the model can learn from possibly already biased data, such as data that includes hate or toxic speech. Furthermore, imbalanced datasets, where some data is underrepresented or excluded while other data is more dominant, can negatively impact the model’s accuracy and thereby distort results. Such was the case with the Gender Shades project for facial recognition (Buolamwini, 2018[26]). Since advanced AI models have the opportunity to learn from user feedback, including through user prompts, this risk is accentuated as the model outputs could reflect prejudices demonstrated by the individual users post-training of the model.

3.5.3. Data-related risks

Data plays a critical role for both financial systems and AI, which is why quality data is central to quality output of any AI model, and even more so of advanced AI models, such as the ones with generative capabilities, given the massive amount of data required for their training, as well as the dynamic self-learning capabilities and the feedback loops with user input (OECD, 2023[7]). The quality of outputs is also impacted by the level of representativeness of data, which must cover a comprehensive and balanced representation of a population of interest in order to minimise risk of bias or discrimination and promote the accuracy of the model outputs.

Risks to data privacy and confidentiality would increase with the possible integration of plug-ins in private AI models, allowing for access to a wide array of content. Accordingly, this can increase the volume of data flowing into AI systems, which will further amplify the risk of data breaches by making it more challenging to protect such vast swathes of information. User inputs (e.g. prompts) can also contain private or proprietary information that would heighten the risks involved with data leaks. While specificity of user inputs can improve the quality of output, this may come at the cost of potential data privacy breaches.

In addition to quality and privacy, authenticity of data and intellectual property risks are also significant concerns for AI models built on large amounts of unstructured, public data. Given the vastness and diversity of this data, there are risks that training datasets may contain information protected by intellectual property rights, potentially without proper authorisation or copyright permissions. Consequently, there is also an inherent doubt about the authenticity of such outputs due to the uncertainty around the origin and permission status of the data used for training. Data provenance, or the origin and complete history of data, and data location, or the physical location of data, are also important considerations. AI-model related data management and data sharing frameworks, which allow third parties to access customer data, must consider the implications of data provenance and location when exploring challenges around intellectual property and data ownership. Financial market participants should also consider who holds the ownership of the data used to train their private AI models. This involves examining the intellectual property rights of the models used and their outputs.

3.5.4. Cyber-security risks

Similar to other digitally enabled financial products, the use of AI techniques exposes markets and their participants to increased cyber-security risks. AI models exacerbate such risks as they could be used by bad actors to tailor individualised fraud attacks on a large scale and with fewer resources required. For example, AI tools could be used for social engineering, email phishing, and attacks that compromise access to firms’ systems, emails, databases, and technology services (Federal Reserve Board, 2023[27]). Integrating external models, such as third-party software or open-source systems, amplifies the risk of cyber-security breaches by introducing vulnerabilities to a firm’s security infrastructure. Such vulnerabilities can stem from the inherent risks associated with using externally sourced software, and such risks are further exacerbated when dealing with open-source systems due to their broader accessibility and potential for security gaps to be identified by bad actors.

The misuse of AI techniques can easily cause disruption in financial markets, in some cases because market participants find it difficult to tell whether the information they receive is true or fabricated. For instance, deepfake pictures or other content generated by AI may be used to manipulate the market. For example, a fake viral image of an explosion at the Pentagon on 22 May 2023, possibly generated by AI, induced fluctuations in the US stock market (NPR, 2023[28]). Another example in the Asian region involved a false essay entitled “Warning Article on Major Risks in iFLYTEK”10 that was widely circulated in the market and was eventually confirmed as having been written by generative AI.

Cyber-security risks could also include state-sponsored cyber-attacks leveraging advanced AI tools, such as generative AI, to disrupt financial markets by disseminating sophisticated disinformation. State-sponsored cyber-attacks are linked to or sponsored by states and aim at financial profit and/or geopolitical goals (e.g. hacks by North-Korean-affiliated Lazarus Group).11 Hackers in these cases are trained as part of national projects and use systematic and sophisticated attack methods. AI could magnify the risk of financial market manipulation by state-sponsored hackers or other malicious actors given the significant capabilities of such tools, which could be used for massive manipulation of markets and their participants. For example, deepfakes (e.g. voice spoofing or fake images generated by AI) could be used to spread disinformation that is difficult to detect and identify as false and misleading given the capabilities of AI (e.g. rumours or disinformation that could cause market instability or panic). Furthermore, with the development of quantum mechanics-based computational power, cyber-security risks could increase, including those with geopolitical motives (The Institute of World Politics, 2019[29]; NATO, 2022[30]).

Bad actors can therefore utilise AI to conduct market manipulation at a large scale. This could involve dissemination of false information about stocks and other investments or provision of deceptive advice to potential investors and other financial consumers. Regular, real-time input of web information, such as social media data, into AI-driven financial models can increase such risk of market manipulation.

To address the various risks highlighted above, large financial institutions currently report the use of private, restricted versions of AI models that operate offline within the firm’s firewalls or private cloud. This setup promotes greater security over the operation of the AI-based application, thereby allowing financial institutions to better protect client data and proprietary information. It also allows them to better oversee and ensure compliance of AI use with regulatory standards. A future scenario in which the use of plug-ins enables the input of real-time internet data into these proprietary models may see an increase in market manipulation risk, as it could enable bad actors to spread rumours through social media and thereby impact financial markets.

3.5.5. Model robustness and resilience, reliability of outputs and risk of market manipulation

According to the OECD AI Principles, it is essential that AI systems consistently function in a robust, secure and safe way while continuously managing related risks (OECD, 2019[31]). If AI-driven models lack reliability and accuracy, there is a heightened risk of poor outcomes, such as inadequate investment advice in financial applications. Models that lack robustness and resilience may not function as intended, posing potential harm in unforeseen scenarios or environments. In essence, these models are unable to handle unexpected events or changes effectively, impacting end users negatively (NIST, 2023[32]). Concerns regarding data quality, discussed above, as well as model drift and overfitting pose risks to the accuracy and reliability of machine learning models used in finance (OECD, 2021[10]). When unexpected events cause disruptions in the data used for model training, for example, this can cause model drift that negatively impacts the models’ predictive capability, especially during market turbulence or periods of stress.
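Drift in model inputs can be monitored by comparing the distribution of recent data with the distribution seen at training time. One indicator often used for this in credit scoring is the population stability index (PSI); it is shown here as a sketch under stated assumptions rather than as a method prescribed by the sources cited above. The bucket edges, sample values and function names are hypothetical.

```python
import math


def bucket_shares(values, edges, floor=1e-6):
    """Share of observations in each bucket defined by ascending edges.

    Shares are floored at a small value so that empty buckets do not cause
    a division by zero or a logarithm of zero in the PSI formula.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v > e for e in edges)] += 1
    return [max(c / len(values), floor) for c in counts]


def population_stability_index(expected, actual, edges):
    """PSI: sum over buckets of (actual - expected) * ln(actual / expected)."""
    e_shares = bucket_shares(expected, edges)
    a_shares = bucket_shares(actual, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))


# Hypothetical daily returns: training period versus a stressed period.
TRAINING = [-0.02, -0.01, -0.005, 0.0, 0.005, 0.01, 0.015, 0.02]
STRESSED = [-0.06, -0.05, -0.04, -0.03, -0.02, -0.01, 0.0, 0.01]
EDGES = [-0.01, 0.0, 0.01]

psi_same = population_stability_index(TRAINING, TRAINING, EDGES)
psi_stress = population_stability_index(TRAINING, STRESSED, EDGES)
print(psi_same, psi_stress)
```

A value near zero suggests that the input distribution is stable, while larger values flag a shift that may warrant review or retraining; the threshold for action is left as a governance decision.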

Lack of resilience of AI models and their potentially limited reliability can impact trust among retail investors and financial consumers, which could be even more concerning in economies where a significant part of the population is unbanked, as is the case in several ASEAN member states. In advanced AI models, such as generative AI, user interactions and the feedback loops used for self-learning models can reduce the model’s accuracy: recent empirical analyses demonstrate that the behaviour of the same LLM can change significantly over a short time, thereby requiring ongoing monitoring of LLMs (Chen, Zaharia and Zou, 2023[33]). It is challenging to discern whether changes in the model’s accuracy stem from model updates or from interactions with users, where poor quality inputs may affect the model’s autonomous learning process. AI also introduces risks related to the quality and reliability of a model’s output, potentially leading to ‘hallucinations’12 or other kinds of deception or misinformation.13 False information or advice provided by AI-driven financial models can damage the credibility, among financial consumers, of the financial market practitioners responsible for providing the service. AI models have the potential for deception that can be either unintentional, such as when AI generates content that does not have any real-world basis, or intentional, such as in fraudulent use cases like identity theft. Such kinds of deception can be subtle, like encouraging financial advisors to use opaque methods for discretionary pricing based on client attributes such as purchasing power. Differentiating between accurate information and inaccurate or deceptive information is crucial in mitigating such intentional or unintentional risks. The potentially limited awareness of the limitations of AI models by both users and recipients of such financial services can exacerbate concerns about the trustworthiness of the models as well as the services in question.

3.5.6. Governance-related risks, accountability and transparency

Financial institutions that use AI-based models adhere to their established model governance frameworks, model risk management and oversight arrangements. This involves defining clear lines of responsibility for the development and supervision of AI-based systems across their entire lifecycle, from creation to implementation, and assignment of accountability for any negative outcomes that result from the model’s operation. However, accountability hinges on transparency (NIST, 2023[32]), which can only be advanced in AI models by disclosing a comprehensive amount of information about the model and its data. This includes information on data sources, copyrighted data, compute- and performance-related information, model limitations, foreseeable risks and steps to mitigate risks (such as evaluation) and the environmental impact of these models.

The environmental aspect of advanced AI model usage is particularly important for financial market participants who aim to harmonise AI applications with ESG practices they may follow. Achieving high levels of transparency for AI models might face challenges based on their specific characteristics. For instance, disclosing copyright status of training data sourced from unstructured internet information may prove difficult (Bommasani R. et al., 2023[34]). Similar to the case of DLTs (OECD, 2023[6]), accurately measuring energy usage and emissions may prove challenging. Furthermore, given their influence on downstream use, it may prove difficult to establish accountability for a model’s downstream14 applications.

The lack of awareness regarding the associated risks might heighten the risk profile for both AI tools and the end users. As the use of AI solutions continues to become more widespread, AI-driven tools and applications are also likely to proliferate in the financial industry. As such, non-qualified practitioners may unknowingly begin to use these tools, and governance frameworks for financial market practitioners may therefore need to consider human capacity requirements (e.g. awareness and skills).

Governance issues are amplified in the case of outsourcing AI models and third-party provision of AI‑related services and infrastructure (such as cloud providers), which is particularly important in the case of smaller financial institutions active in ASEAN member states given possible limits in their in-house capacity to develop and maintain large models. Governance hurdles may be associated with the assignment of accountability for adverse outcomes to third parties involved in model creation and training. Questions about intellectual property also emerge as financial providers, even if they purchase “off-the-shelf” AI models, may not necessarily own the intellectual property rights. At the same time, these providers input valuable proprietary data into these models, which the third-party service provider can access. The distinction between the roles of model provider and model deployer may also need to be considered for matters related to oversight and enforcement.

3.5.7. Systemic risks: Herding and volatility, interconnectedness, concentration and competition

The use of AI-based models in finance, including GenAI, could pose potential systemic risks with regard to one-way markets, market liquidity and volatility, interconnectedness and market concentration (OECD, 2021[10]). The widespread use of the same AI model among numerous finance practitioners may induce herding behaviour and one-way markets, affecting liquidity and system stability, especially during stressful periods (OECD, 2021[10]). For example, AI in trading could potentially exacerbate market volatility by initiating large and simultaneous sales or purchases, thereby introducing new sources of vulnerability (FSB, 2017[35]). When trading strategies converge, they risk creating self-reinforcing feedback loops that lead to significant price shifts and pro-cyclicality. In addition, investor herding behaviour can cause liquidity issues and flash crashes during times of stress, as seen recently in algo-high frequency trading.15 Such convergence also heightens cyber-attack risks, allowing bad actors to influence agents that act similarly. These risks are prevalent in all algorithmic trading and are accentuated in AI models that autonomously learn and adapt, notably unsupervised learning-based AI models.

The use of AI in financial market activity, such as trading, may cause financial markets and institutions to become further interconnected in unforeseen ways, including through interconnections between previously unrelated variables (FSB, 2017[35]). It can also result in higher network effects, potentially causing unexpected changes in the magnitude and direction of market movement. This may be further increased by the advent of AI-as-a-Service providers, especially those that provide bespoke models (Gensler and Bailey, 2020[36]).

AI models amplify concerns about market concentration and dominance by a few model providers, with a range of potential systemic implications (OECD, 2023[7]). These risks are compounded by the concentration of data (Gensler and Bailey, 2020[36]), and they could also be associated with infrastructure providers enabling the use of AI models (e.g. cloud services). Operational failures by dominant players can have systemic effects for the markets, depending on the level of dependence of financial market participants on such providers and models. With regard to outsourcing, the reliance on third-party model providers adds an extra layer of vulnerability to the existing infrastructure dependence on third-party providers, such as cloud services.
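Concentration among model providers can be quantified with standard measures such as the Herfindahl-Hirschman Index (HHI), the sum of squared market shares. The sketch below assumes hypothetical counts of financial institutions relying on each provider; the provider names and figures are illustrative and not based on any market data.

```python
def herfindahl_index(usage_counts):
    """Sum of squared shares; ranges from 1/n (even split over n providers) to 1.0 (monopoly)."""
    total = sum(usage_counts.values())
    if total == 0:
        raise ValueError("usage_counts must contain at least one user")
    return sum((count / total) ** 2 for count in usage_counts.values())


# Hypothetical number of financial institutions relying on each model provider.
MODEL_USERS = {"provider_a": 5, "provider_b": 3, "provider_c": 2}

hhi = herfindahl_index(MODEL_USERS)
print(round(hhi, 4))  # 0.5**2 + 0.3**2 + 0.2**2
```

The same calculation could be applied to cloud infrastructure or data providers; tracking it over time gives a simple view of whether dependence on a few providers is increasing.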

Related to these systemic implications for financial stability, AI models can also raise competition-related challenges. For example, a possible refusal of access to models or data, or barriers to switching imposed by dominant providers, could have important implications for financial market participants in a market with distorted competition conditions. Since AI models require significant resources and computing power to be developed and trained, there is indeed a risk of market concentration amongst a small group of players, especially those with first mover advantage or with the resources needed to design, train and maintain models. Financial institutions that deploy models from dominant third parties may face the burden of reduced competition, which can also impact their customers (e.g. through associated costs).

The current stage of AI development also poses challenges for countries that lack the economic resources to develop, train and maintain their own models, as could be the case in some ASEAN member states. With regard to users, large-scale AI models may primarily benefit those equipped to invest in such technologies, such as larger financial market participants. Data concentration is another risk related to the dominance of incumbents with cheaper or easier access to datasets (e.g. social platforms). Access to data is crucial for the success of AI models such as LLMs, and data concentration by BigTech or other platforms could exacerbate the risk of dominance by a few large companies with excess power and systemic relevance. Furthermore, AI models could be exploited to bolster monopolies or oligopolies and stifle competition, thereby undermining market dynamics. For instance, AI models can be used to influence investor preferences based on their specific role.

3.5.8. Other risks: employment and skills, environmental impact

While the current and future impact of AI on the labour market remains uncertain, OECD analysis finds little evidence to date of significant negative employment effects due to AI (OECD, 2023[37]). This could be due to low AI adoption rates and firms opting for voluntary workforce adjustments, possibly delaying the materialisation of any negative employment effects from AI (OECD, 2023[37]).

In the long term, wider adoption of AI tools by financial institutions could create new employment challenges and opportunities, with implications also for capacity and skills development. Widespread usage of such tools in finance may help free up resources for higher-value tasks while also posing risks to the job market. AI in particular has the potential to automate a wide array of back-office and middle-office functions in finance (Section 3.4). AI’s impact on employment is also anticipated to put pressure on industries to consider how skillsets may need to evolve (OECD, 2023[37]). Insufficient skills for using AI can pose risks from both an industry and a regulatory perspective, thereby potentially leading to employment issues for financial institutions. Using AI in finance will demand skillsets currently possessed by only a small segment of financial practitioners, and inadequate capacity or awareness of the risks associated with models, especially easily accessible AI models, can have adverse effects for financial market participants and their clients.

The increasing computational needs of AI systems may also raise sustainability concerns (OECD, 2023[38]). GenAI and LLMs, for example, require extensive computational resources and consume significant energy for development, training and inference, with a potential environmental impact that requires deeper examination. This also pertains to data centres, considering their pivotal role in model training. As with other innovative financial technologies, there is not enough reliable information on AI’s impact on the environment to inform policy discussions around its environmental risks (OECD, 2023[37]; 2022[39]).

3.6. Policy considerations on AI in finance

The use of AI in finance has the potential to deliver important benefits to financial consumers and market participants in ASEAN member states and beyond, by promoting efficiencies and enhancing productivity, but comes with important risks and challenges. Rapid developments in AI and its increasing relevance to financial markets call for policy discussion and potential action to ensure the safe and responsible use of such tools in finance. Financial regulators and supervisors must ensure that the use of AI in finance remains consistent with the policy objectives of securing financial stability, protecting financial consumers, and promoting market integrity and fair competition.

The OECD Principles on AI, adopted in 2019, which constitute the first international standard agreed by governments for the responsible stewardship of trustworthy AI, remain highly relevant for the application of AI tools, including GenAI, in finance (OECD, 2019[31]). At the G20 level, the financial stability implications of artificial intelligence and machine learning in financial services were discussed by the Financial Stability Board in 2017 (FSB, 2017[35]), while the G7 analysed the cyber risks posed by artificial intelligence in the financial sector in 2020. Most recently, the G7 Leaders welcomed the Hiroshima Process International Guiding Principles for Organizations Developing Advanced AI Systems and the Hiroshima Process International Code of Conduct for Organizations Developing Advanced AI Systems (G7, 2023[1]; G7, 2023[2]; G7, 2023[3]).

A number of national or regional initiatives have also been launched with the aim of providing guidance or promoting guard rails for the safe and trustworthy development of AI across sectors globally, including in ASEAN member states.

3.6.1. Policy developments on AI in finance in ASEAN countries

National AI strategies have been developed in seven ASEAN member states, namely Indonesia, Malaysia, Myanmar, Singapore, Thailand, the Philippines and Viet Nam. Furthermore, at the ASEAN level, discussions are ongoing concerning the preparation of a Guide to AI Ethics and Governance, which is anticipated to be released in 2024 (Reuters, 2023[40]). Although such a framework can be influential in providing guidance to national legislators, its application would remain voluntary. Unlike the EU AI Act (European Commission, 2021[41]), the ASEAN document will not include a strict risk categorisation and will take a more business-oriented approach, allowing for flexibility related to cultural differences across member countries. In particular, when it comes to national AI strategies:

The Indonesian AI strategy was implemented in 2020, with an end date of 2045. The end goal of this strategy is the transformation of the country in line with an innovation-based approach, including by encouraging AI research and improving data infrastructure (Nasional Kecerdasan Artifisial Indonesia, 2020[42]). In addition, the AI Ethical Guidelines involve setting up a data ethics board and propose AI innovation regulation, which is expected in the near future (Arkyasa, 2023[43]). Certain aspects of Fintech, digital banking and capital markets fall under the AI regulation in Indonesia.

Malaysia has developed its National AI Roadmap for the years 2021-2025, which includes establishing AI governance, as well as advancing R&D, digital infrastructure and a national AI innovation system (Ministry of Science, 2021[44]). Focus is also placed on ethics by incorporating seven principles of responsible AI into the roadmap. Furthermore, the Responsible AI Framework Guidelines, published in 2023, provide further guidance on the ethical dimension of AI use by Malaysian organisations more broadly (Ariffin et al., 2023[45]).

Singapore’s National AI Strategy dates back to 2019 and aims to promote Singapore as an AI leader by 2030. This framework focuses on specific national projects, such as improving the efficiency of municipal services and of border clearance operations (Smart Nation Singapore, 2019[46]). Singapore also developed a Model AI Governance Framework in 2020, which incorporates principles including fairness, accountability and explainability into AI governance models across sectors of activity (Info-communications Media Development Authority, 2020[47]).

Thailand’s AI Strategy and Action Plan was launched in 2022 and has 2027 as its end date. The strategy includes regulatory readiness, national infrastructure development, education, innovation development and promotion of AI use (AI Thailand, 2022[48]). Currently, the second phase of the Action Plan is being implemented, with a focus on expanding research and development of AI applications to enhance the competitiveness of industries (AI Thailand, 2022[48]). The Royal Decree on AI System Service Business of 2022 introduces a risk-based approach to AI, designating certain AI systems as high risk and including some prohibitions (His Majesty King Maha Vajiralongkorn Phra Vajiraklaochaoyuhua, 2022[49]). At the same time, the Draft Act on the Promotion and Support of AI Innovations in Thailand of 2023 seeks to enhance innovation within the AI ecosystem by granting businesses access to an AI sandbox, AI clinic and training AI database (The Electronic Transactions Development Agency, 2023[50]).

The National AI Strategy Roadmap of the Philippines was issued in 2021. The overall objective of the roadmap is to ensure AI readiness across four dimensions: infrastructure development, research and development, workforce development and regulation (The Department of Trade and Industry, 2021[51]).

Viet Nam’s National Strategy on R&D and Application of AI was launched in 2021 and has an end date of 2030 (Prime Minister of Vietnam, 2021[52]). The framework sets out the strategic directions to be taken, which include developing AI-related regulations, building computing infrastructure, and promoting AI application and international cooperation. The draft National Standard on AI and Big Data, released in 2023, focuses on AI quality standards in the realms of safety, privacy and ethics, as well as risk assessments and addressing unintentional biases (The Ministry of Information and Communication, 2023[53]).

Cambodia and Lao PDR have not developed any national AI strategies. However, the Cambodian Ministry of Industry, Science, Technology & Innovation issued its first AI-specific report in May 2023 (The Ministry of Industry, 2023[54]). The AI Landscape in Cambodia Report discusses the importance of the imminent development of national AI regulation and guidelines that would harmonise the existing laws. Such policies are to be in line with principles of human-centricity and sustainable development, while taking into consideration the issues of ethics and privacy.

Almost all ASEAN countries have provided some form of guidance around the use of AI in finance, in most cases as part of their broader policy action on AI across sectors.16 Specific policies related to the use of AI in the field of finance can be found in Indonesia, Malaysia, Myanmar, Singapore, Thailand, the Philippines, Viet Nam and Cambodia. In particular:

The Indonesian AI strategy explicitly identifies finance as one of the key sectors relevant to the long-term development of AI (Nasional Kecerdasan Artifisial Indonesia, 2020[42]). It underlines the importance of the use of financial data for the development of AI applications, and it refers to financial use cases such as credit scoring or financial forecasting. This policy pursues four strategic targets, namely service improvement, cost optimization, improved products and reliable risk management. The framework is set out in stages: exploration of AI in finance, optimization (implementation of the findings) and transformation (practical support of the finance sector). The framework relevant to financial data may be further amended in the near future, as the upcoming Presidential Regulation implementing the Personal Data Protection Law will regulate the protection of data for artificial intelligence uses (Rochman and Adji, 2023[55]).

Malaysian policies on the digital economy, articulated within the National 4IR Policy and the Digital Economy Blueprint (MDEB) of 2021, list finance as one of the key sectors for the digital transformation of Malaysia (MyDIGITAL Malaysia, 2021[56]). The National 4IR Policy introduces strategies such as the adoption of an anticipatory regulatory approach that allows for the acceleration of innovation, as well as the promotion of uniform data protection standards for the finance industry (Ministry of Science, 2021[57]). Actors within the finance industry are encouraged to ensure that their workforce possesses the skills and knowledge necessary for the digital economy. Furthermore, financial service providers are to adopt an anticipatory regulatory approach that includes both the necessary risk management policies and innovative initiatives (Ministry of Science, 2021[57]). The MDEB also established concrete strategies aimed at fostering innovation in the sector. Namely, the policy created a Fintech Innovation Accelerator Programme to support local fintech development (MyDIGITAL Malaysia, 2021[56]). On the regulatory side, a special task force was formed in July 2023 to review current laws in the realm of investment and business in Malaysia, inter alia in light of AI developments (New Straits Times, 2023[58]).

In Singapore, the Monetary Authority of Singapore (MAS) published in 2018 broad Principles to promote Fairness, Ethics, Accountability and Transparency (FEAT) in the use of AI in Singapore's financial services sector (MAS, 2018[59]). In 2022, MAS conducted a thematic review of selected financial institutions’ implementation of the Fairness Principles in their use of AI, reviewing policies and governance frameworks against the FEAT Principles, and their implementation effectiveness in actual AI/ML use cases (MAS, 2022[60]). In 2022, MAS also released five white papers setting forth guidelines applicable to financial service providers, aimed at promoting the responsible use of AI (MAS, 2022[61]). The white papers detail assessment methodologies for the FEAT principles and include a comprehensive FEAT checklist for financial institutions to adopt during their AI and data analytics software development lifecycles; an enhanced fairness assessment methodology, which enables financial institutions to define fairness objectives and to identify personal attributes of individuals and any unintentional bias; a new ethics and accountability assessment methodology, which provides a framework to carry out quantifiable measurements of ethical practices, in addition to the qualitative practices currently adopted; and a new transparency assessment methodology, which helps determine the extent of internal and external transparency needed to interpret the predictions of ML models. The white papers were developed on the basis of a public-private collaborative model, promoting risk management and sustainable good governance principles in the use of AI in finance. Currently, MAS is working on GenAI and plans to publish a risk framework for the use of such models by the financial sector. In 2022, MAS launched Project NovA!, a tool helping financial institutions to predict financial risks relevant to their organisations (Monetary Authority of Singapore, 2023[12]).
MAS has also launched Project MindForge, a generative AI risk management framework developed collaboratively with finance industry players (MindForge Consortium, 2023[62]). During the first phase of the Project, completed in November 2023, the main risk areas were identified, including accountability, monitoring, transparency and data security. Next, the Project is to include insurance and asset management entities in its scope and to expand the use of GenAI in areas of compliance with anti-money laundering, sustainability and cyber-security policies.