OECD Science, Technology and Innovation Outlook 2023

6. Emerging technology governance: Towards an anticipatory framework

Abstract

Emerging technologies can be pivotal for much needed transformations and responses to crises, but rapid technological change can carry negative consequences and risks for individuals, societies and the environment including social disruption, inequality, and dangers to security and human rights. The democratic community is increasingly asserting that “shared values” of democracy, human rights, sustainability, openness, responsibility, security and resilience should be embedded in technology, but questions remain on how this should be accomplished. Using “upstream” design principles and tools can help balance the need to drive the development of technologies and to scale them up while helping to realise just transitions and values-based technology. This chapter documents and analyses a set of design criteria and tools that could guide this approach to elaborate an anticipatory framework for emerging technology governance.

Key messages

Emerging technologies are reshaping our societies. While they can be key to much-needed transformations and responses to crises, they also present certain risks and challenges that must be addressed if their potential is to be realised. Faced with this double-edged nature of emerging technology, good technology governance can encourage the best from technology and can help prevent social, economic, and political harms.

Actors at both the national and international levels are seeking guidance and agreement on how to promote shared values in technology development and make innovation more responsible and responsive to societal needs. A range of anticipatory governance mechanisms present a way forward. Working further upstream in the innovation process, these tend to shift the focus of governance from exclusively managing the risks of technologies to engaging stakeholders – funders, researchers, innovators and civil society – in the innovation process and co-developing adaptive governance solutions. While regulators are a key stakeholder group in emerging technology governance, a whole range of other actors can facilitate responsible innovation.

Emerging technologies have unique governance needs. However, while there is no one-size-fits-all approach, a general and anticipatory framework for the governance of emerging technologies could be useful at the national or international level. For instance, it could help provide a common language and tools built from experience to help address recurrent policy issues across emerging technologies and ensure wider stakeholder engagement.

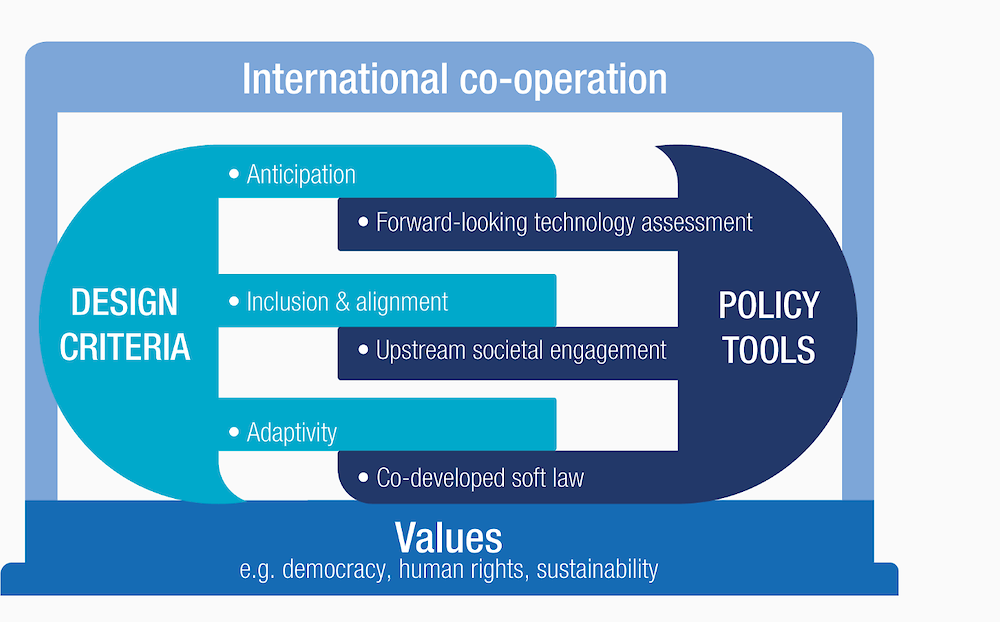

The proposed framework aims to help guide the development of emerging technology governance at both the national and international levels. It consists of a three-tiered structure, comprising (i) values, (ii) design criteria, and (iii) tools. Discussion around the framework may facilitate international technological co-operation in the governance of emerging technologies. Suitable tools are necessary to operationalise design criteria. The chapter discusses a selected tool for each of the three design criteria:

Anticipation through strategic intelligence. Countries and the international community should assess and enhance their strategic capacity to anticipate technological developments and technology governance needs. They should aim to deepen strategic intelligence on new, emerging and/or key technologies through forward-looking technology assessment (TA). Forward-looking TA both depends on and supports the expression of key values, which underpin the analysis of potential benefits and harms, and the trajectories of emerging technology.

Inclusion and alignment through societal and stakeholder engagement upstream. Societal and stakeholder engagement can enhance the democratic governance of emerging technology, enabling deliberation on the values that should support and guide technological development. Countries might not only sponsor programmes to advance communication and consideration of emerging technology in public fora, but also build the necessary linkages for exchange and co-development.

Adaptivity through co-development of principles, standards, guidelines, and codes of practice (soft law mechanisms). Countries and stakeholders could strengthen professional guidelines, technical and normative standards, codes of conduct and good practices during technological development, to promote an agile and adaptive system of governance.

Introduction

Emerging technologies have a central role to play in our collective future. They will help reshape the infrastructure and capacities of our societies and help drive our economies and our behaviour in new ways. While problems like climate change and global health disparities cannot be solved by technology alone, technology policy can be a pivotal factor in the responsiveness and resilience of our sociotechnical systems in the face of crisis.

In addition to the great promise of emerging technologies for green transitions and other crucial societal objectives, rapid technological change can carry negative consequences and risks for individuals, societies, and the environment. Relevant threats include social disruption, various kinds of inequity, and threats to privacy and human rights. For example, facial recognition and spyware are becoming tools of mass surveillance (Ryan-Mosley, 2022[1]), social media is a known vector for the active propagation of misinformation (Matasick, Alfonsi and Bellantoni, 2020[2]), and reported mandatory involvement in genomics research violates human rights standards (Wee, 2021[3]).

Emerging technology also carries major implications for distributive justice, geopolitics, and security. While COVID-19 vaccines have been critical in alleviating illness in high-income countries, they have reached low- and middle-income countries unevenly. As previous chapters have discussed, calls for technological independence – at best, “technological sovereignty” (Crespi et al., 2021[4]) and at worst, new forms of techno-nationalism (Capri, 2019[5]) – have strained international science and technology co-operation, in the same vein as what might be called a “security turn” in innovation policy (see Chapter 1). The globalisation of emerging technologies has also revealed supply chain vulnerabilities, with implications for economic resilience.

Given the double-edged nature of emerging technology, good technology governance might encourage the greatest societal benefit from technology and help prevent social, economic, and political harms. Technology governance can be defined as “the process of exercising political, economic and administrative authority in the development, diffusion and operation of technology in societies” (OECD, 2018[6]). In the context of emerging technologies, the concept of governance has evolved in response to high uncertainty (Folke et al., 2005[7]), risk (Baldwin and Woodard, 2009[8]), complexity (Hasselman, 2016[9]) and the need for co-operation (Sambuli, 2021[10]). From setting rules on the integrity of science to establishing norms for biosecurity and responsible neurotechnology (OECD, 2019[11]), technology governance provides norms and standards for both the bottom-up research that drives discovery, and the application and use of technologies in society.

Perhaps for these reasons, technology governance has attracted increasing attention at a high political level. In recent years, several international fora have focused on the topic of technology governance, including France’s “Technology for Good” initiative (Tech For Good Summit, 2020[12]), the United Kingdom’s “Future Tech Forum” under its 2021 Group of Seven Presidency (HM Government, 2022[13]) and the initiative on “Democracy-Affirming Technologies” launched at President Biden’s Summit for Democracy (The White House, 2021[14]). At the OECD, the Global Forum on Technology was initiated in 2022 to foster multi-stakeholder collaboration on digital and emerging technology policy (see Box 3.7), and the 2021 Recommendation of the Council for Agile Regulatory Governance to Harness Innovation sets norms for rethinking governance and regulatory policy to better harness the societal impacts of innovation (OECD, 2021[15]). Furthermore, the United States and the United Kingdom recently announced an initiative on “privacy-enhancing” technology (The White House, 2021[16]). In the same vein, the need for “human-centric” artificial intelligence (AI) has become a refrain across the public and private sectors and the subject of an influential soft-law instrument at the OECD (OECD, 2019[17]).

These nascent efforts at international technology governance often frame the challenge as one of better regulation. Although it is no doubt one component of the technology governance challenge, this framing arguably does not address a general and recurring problem across critical and emerging technologies such as AI, robotics and synthetic biology: as their development advances, their impacts on society become more profound, and their effects more entrenched (OECD, 2018[18]). It follows that shaping them, without undue restriction, during the innovation process could carry great societal utility.

Efforts to exercise political, economic, or administrative authority during the innovation process might be called “upstream” or “anticipatory technology governance”. Such an approach to governance shifts the locus from exclusively managing the risks of technologies to engaging in the innovation process itself. It aims to anticipate concerns early on, address them through open and inclusive processes, and align the innovation trajectory with societal goals (OECD, 2018[18]). Of course, a balance must be struck between preserving space for serendipitous technology development and shaping technology trajectories through upstream governance.

Actors in the field of international technology governance invoke the need to promote “shared values” – which in the context of these initiatives tend to include the values of democracy, human rights, sustainability, openness, responsibility, security and resilience (e.g. Council of Europe, 2019[19]; US State Department, 2020[20]). To the extent that it can help embed values within the innovation process itself, the anticipatory approach to technology governance might be better positioned than post-hoc regulatory approaches to enact a stated goal of values-affirming technology.

This chapter does not aim to identify the core substantive values that should guide technological development, or to reconcile different positions on them. Instead, it analyses the following question: given that the democratic community is increasingly asserting that values should be embedded in and around technology (e.g., non-discrimination in AI algorithms), how should this be accomplished? Governments are increasingly recognising and aiming to address this challenge. Their initiatives are all based on an important premise: technology should no longer be viewed as an autonomous agent, but as a system which, through governance, can better serve societal goals and values.

This chapter documents and analyses a set of design criteria and tools that could guide this approach to elaborate an anticipatory framework for emerging technology governance. It is not intended to provide an exhaustive review of design criteria and tools. Rather, it provides a framework for further analytical and normative work, suggesting ideas for the design of good technology governance systems. Figure 6.1 shows this framework, linking values, design criteria, and mechanisms and tools. The chapter explores how actors can implement these design criteria for governance, using policy tools.

An anticipatory policy framework for emerging technology governance

In areas other than science, technology, and innovation (STI), anticipatory governance has emerged as a key challenge for governments as they try to move from a reactive stance towards addressing the complexities and uncertainties of the economic and political present (OECD, 2022[21]). Likewise, actors in the STI system have been laying the groundwork for anticipatory technology governance for some time (Guston, 2013[22]), in part under the banner of responsible research and innovation (von Schomberg, 2013[23]). An important aim of this upstream approach is to align research and development (R&D) of cutting-edge technology with key societal goals, whether related to energy transitions, health systems or mobility. To do so, anticipatory governance aims to identify possible stakeholder concerns and values, address them through open and inclusive processes, and embed shared values in the development of new technologies.

The responsible research and innovation approach argues that embedding responsibility and accountability in the activities of researchers, firms and other actors can help orient new technologies towards meeting grand challenges, rather than just decreasing the likelihood of undesirable effects of technologies (Shelley-Egan et al., 2017[24]; Owen, von Schomberg and Macnaghten, 2021[25]). This is consistent with the turn towards mission-oriented innovation policy (Larrue, 2021[26]) and is the cornerstone of the Recommendation of the OECD Council on Responsible Innovation in Neurotechnology (OECD, 2019[11]).

Actors in both the public and private sectors are starting to take a more proactive approach to technology governance, engaging in activities like anticipatory agenda-setting, test beds, and value-based design and standardisation as a means of addressing societal goals upstream (OECD, 2018[6]). National actors are beginning to promote a holistic view of the challenges and opportunities inherent to the governance of emerging technologies. They are developing frameworks to address recurring concerns and approaches, thereby facilitating learning across technology areas. The National Academy of Medicine in the United States, for instance, recently published a framework for the governance of emerging medical technologies (Mathews, Balatbat and Dzau, 2022[27]). In addition, regulatory communities have already convened at the OECD with the objective of reforming regulatory governance to better harness innovation (OECD, 2021[15]).

Taken as a whole, recent activities in emerging technology governance can be grouped under a policy framework comprising values, design criteria and tools for putting shared values into practice (see Figure 6.1). These components lay the foundation for discussions on emerging technology governance. Each of these elements is outlined below.

Figure 6.1. Elements of a framework for emerging technology governance

Source: Developed by the authors

Shared values: The foundation of emerging technology governance

Key values orient governance systems, and therefore ground the model. They are not always explicit, and the tools described below may be necessary to surface them. This element answers the question of what is worth ensuring, enabling, and embedding – and why. The OECD (2021[28]) has affirmed, among others, democracy, human rights, good governance, security, sustainability, and open markets as shared values. However, it is not the purpose of this chapter to posit particular values for the governance community. This policy framework advances techniques of a process-based approach, laying out tangible strategies for promoting values through design criteria and tools at different stages of the innovation process (OECD, 2018[6]). In practice, it sets out what might be considered guidance for responsible innovation and the development of “values-based technology”.

Design criteria for emerging technology governance

Design criteria define the generalisable characteristics of good technology governance and responsible innovation. Although the list is not comprehensive, governance systems should be based on the criteria of anticipation, inclusivity and alignment, adaptivity, and international co-operation.

Anticipation. Technology governance faces a dilemma. Governing emerging technologies too early in the development process could be overly constraining, while governing them later can be expensive or impossible. Navigating the so-called “Collingridge dilemma” (Worthington, 1982[29]) requires a form of governance that operates “upstream” and throughout the process of scientific discovery and innovation. Prediction of a particular technological trajectory is notoriously difficult or even impossible, but exploration of possible technological developments is necessary and can create policy options.

Inclusivity and alignment. Involving a broad array of stakeholder groups, including actors typically excluded from the innovation process (e.g., small firms, remote regions, and certain social groups, including minorities) is important to align science and technology with future user needs and values. Inclusivity encompasses access both to technology itself and to the processes of technology development, where enriching the diversity of participants is linked to the creation of more socially relevant science and technology (OECD, forthcoming[30]). A related point is the need to include and integrate diverse disciplines and approaches in the R&D process in order to build richer understandings and fit-for-purpose design (Kreiling and Paunov, 2021[31]; Winickoff et al., 2021[32]; OECD, 2020[33]).

Adaptivity. The pace, scope and complexity of innovation pose significant governance challenges for governments (Marchant and Allenby, 2017[34]) and technology firms. As emerging technologies can have unforeseen consequences, and adverse events or outcomes may occur, the governance system must be adaptive to build resilience and stay relevant – a central tenet of the Recommendation of the Council for Agile Regulatory Governance to Harness Innovation (OECD, 2021[15]). Adaptivity as a design criterion is closely related to anticipation, in that adaptive principles and guidelines might be better suited to the fast pace of technological development.

Tools: Concrete means for action

An array of tools could help realise the above design criteria and embed values in the innovation process (see Figure 6.1). They are the operational element of the framework, the means to take action and govern emerging technologies. The following sections introduce three sets of tools that seek to advance the design criteria: forward-looking technology assessment (TA) promotes anticipation, societal engagement encourages inclusivity and alignment, and soft law mechanisms can bolster adaptivity. All three tools are important for international co-operation. These tools have strong parallels with known tools for regulators (OECD, 2021[35]), but explicitly seek to engage STI actors – including research funders and agenda setters, researchers and engineers, entrepreneurs and small business, and industry – further upstream, i.e., during the technology development process. Together, these tools constitute a non-exhaustive package of policy interventions to implement anticipation, inclusion and alignment, adaptivity and international co-operation.

The importance of international co-operation

The framework in this chapter (as shown in Figure 6.1) aims to guide both national and international policy makers. The development, use and effects of technologies span national borders. The global scope of technological challenges creates a need for an international approach to the governance of emerging technologies. This scope carries implications for the design of both national and international technology governance systems. For national governments, this means that effective governance will require international policy engagement. This engagement is already a clear policy trend, exemplified by the numerous international activities noted above. International co-operation can grow around shared values, and the sharing of tools and good practices, and these in turn can guide national approaches (see Chapter 2).

Tailor to the case

The treatment of different technologies under such a holistic framework must not be a one-size-fits-all approach. Governance needs for advanced nanomaterials will differ from those relating to new digital platforms or synthetic biology. Indeed, the appropriate approach will depend on the technology’s characteristics, such as:

its level of readiness for commercialisation

the profile of risks and potential benefits in the short and long term, as viewed by experts and the public

the nature of local, national, and international matters of concern

the level of public concern.

Nevertheless, applying a common framework at the national and international levels is important as these emerging technologies share certain characteristics, such as uncertain trajectories and impacts, enabling broad areas of follow-up work, potential issues of public trust and the need for value-based reflection (Mathews, 2017[36]). These common characteristics make common tools – including those that follow – highly relevant.

Anticipation: Building strategic intelligence through technology assessment

The governance of early-stage technologies poses a set of challenges that require forward-looking knowledge and analysis. This strategic intelligence can be defined as usable knowledge that supports policy makers in understanding the impacts of STI and potential future developments. Kuhlmann (2002[37]) identified several processes that could provide such “futures intelligence”, such as technology assessment (TA), technology foresight, anticipatory impact assessment and formative approaches to evaluation.

Emerging and early-stage technologies not only carry inherent uncertainties and complexities, but there are also situations where their desirability is unclear (e.g., human germline gene editing) because the promised novelty may well transcend existing ethical and political evaluations. The Collingridge dilemma sums up the challenge of finding the right time to govern technology using dedicated standards, rules, regulations and/or laws. To navigate this dilemma, new kinds of anticipation and strategic intelligence are essential (Robinson et al., 2021[38]).

This section focuses on TA as a source of strategic intelligence. It presents the rationales for TA, the trends shaping TA-based strategic intelligence and concludes with a review of challenges and policy considerations.

Rationales

TA is an evidence-based, interactive process designed to bring to light the societal, economic, environmental, and legal aspects and consequences of new and emerging science and technologies. TA informs public opinion, helps direct R&D, and unpacks the hopes and concerns of various stakeholders at a given point in time to guide governance. Informally, various forms of TA have been in operation since the dawn of science and technology policy. Formally, TA began 50 years ago with the establishment of the Office of Technology Assessment (OTA) within the United States Congress.1 Its mission was to identify and consider the existing and potential impacts of technologies, and their applications in society. OTA emphasised the need to anticipate the consequences of new technological applications, requiring robust and unbiased information on their societal, political, and economic effects.

Following in the footsteps of OTA, parliamentary TA institutions also emerged in Europe. The Netherlands Organisation for Technology Assessment, for example, was established in 1986 to inform the Dutch Parliament on the developments and potential consequences of new technologies.2 Parliamentary TA institutions proliferated around the globe throughout the 1990s and 2000s. TA and TA-like processes have diversified with different (or expanded) objectives and are conducted in different situations and settings. One evolution is the expansion from expert-oriented TA activities to more participatory TA approaches. Participatory TA acknowledges that technology and society are entwined, which implies that underlying values should be part of the TA process (Delvenne and Rosskamp, 2021[39]).

The main rationales of TA for emerging technology governance can fit into three broad and sometimes overlapping categories.

TA for informing decision makers on key technology trends. One role of TA is as a process of sense-making around emerging technologies, their state-of-the-art and their potential benefits and risks, be they economic, societal, or environmental. When addressing emerging and converging technologies such as synthetic biology, neurotechnology and quantum computing, TA must grapple with high degrees of uncertainty along multiple dimensions. It therefore serves an important function in structuring disparate and unclear information and translating it into usable information that can inform decision-making.

TA for deliberation by gauging stakeholders’ hopes and concerns. Some forms of TA, such as participatory TA, bring together different stakeholder groups, which not only stimulates public and political opinion-forming on the societal and ethical aspects of STI, but also helps promote public trust through engagement and inclusion, one of the key design criteria in the framework. Participatory TA approaches are particularly relevant for probing and highlighting hopes and concerns around potentially disruptive and controversial technologies. Here, the inclusion of relevant stakeholders is key not only for providing democratic legitimacy and building trust, but also for deepening knowledge and expertise. Such stakeholders include associations of small and medium-sized enterprises (SMEs), civil society organisations, non-governmental agencies, trade unions, consumer groups and patient associations. Thus, integrating a variety of stakeholders and insights can help create a form of “distributed intelligence” (Kuhlmann et al., 1999[40]). However, critics of participatory TA highlight potential weaknesses, such as the lack of impact on decision-making, the lack of support of mainstream science and technology policy, and the exclusion of diverse kinds of knowledge (Hennen, 2012[41]).

TA as a means of building and steering technological and industrial agendas. Building national competitiveness through targeted investment in different areas of science and technology R&D is a key aspect of STI policy, in which TA can play a supportive role. For example, following the Portuguese Resolution of the Council of Ministers, the Ministry for Science and Higher Education commissioned the Portuguese Foundation for Science and Technology (FCT) to develop 15 thematic research and innovation agendas. Among them, the Industry and Manufacturing Agenda 2030 mobilised experts from R&D institutions and companies to explore potential opportunities and challenges for the Portuguese research and innovation system in the medium and long term. The agendas’ main objective was to promote collective reflection on the knowledge base required to pursue the scientific, technological, and societal goals in a given thematic area. FCT facilitated a bottom-up approach through an inclusive process involving experts from academia, research centres, companies, public organisations, and civil society.3

Some TAs combine all three rationales. One example is the Novel and Exceptional Technology and Research Advisory Committee (NExTRAC) at the National Institutes of Health (NIH) in the United States. NExTRAC undertakes horizon-scanning and sense-making of new technologies; deliberates on ethical, legal, and societal issues with a variety of stakeholders; and directly informs the NIH director in agenda-setting (National Institutes of Health, 2021[42]).

Trends reshaping needs for TA-based strategic intelligence

Since the founding of the OTA 50 years ago, there has been growing recognition that timely intelligence for STI policy and governance is necessary. Not only are technologies becoming more complex and more pervasive, but they are evolving rapidly with potential new and disruptive risks to the economy, environment, and society. While prudent STI policy and governance for emerging technologies mobilises strategic intelligence in various ways (Tuebke et al., 2001[43]), new trends are challenging established strategic intelligence practices to incorporate new needs. Stemming from a mixture of technological developments, new STI policy approaches and exogenous shocks, these trends produce new requirements for TA processes and outcomes.

Technology trends: The pace of convergence. The escalating and transformative interaction among seemingly distinct technologies, scientific disciplines, communities, and domains of human activity is achieving new levels of synergism (Roco and Bainbridge, 2013[44]). This “convergence” at different loci of the STI system means that ideas, approaches, and technologies from widely diverse fields of knowledge become relevant and necessary for analysing the potential impacts of such convergent systems (National Research Council, 2014[45]). Thus, convergence is placing new demands on strategic intelligence and TA to capture its implications for sociotechnical change.

Innovation policy trends: Mission-orientation. One major STI policy trend is the shift towards greater directionality (Borrás and Edler, 2020[46]), a theme treated in detail in Chapter 5. So-called “mission-oriented” innovation policies seek to steer research and innovation systems so that they contribute to achieving a societal goal (Robinson and Mazzucato, 2019[47]; Larrue, 2021[26]; Mazzucato, 2018[48]). Such approaches require expounding values within ambitious, clearly defined, measurable and achievable goals within a binding time frame (Lindner et al., 2021[49]). Missions envision large transformations. They pressure TA to move from techno-centric approaches focusing on a particular technology and its ramifications, to exploring portfolios of technologies (e.g., related to mobility, energy production and waste management) and how they might impact and drive transformations in value chains, industries, and whole sociotechnical systems. In Germany, the federal government’s most recent funding instrument, “INSIGHT”, promotes a holistic, forward-looking impact assessment of innovations. In addition to the natural and technical sciences, the assessment includes ethical, social, legal, economic, and political considerations. Acknowledging the increasing importance of social innovations, the focus shifts from “pure” technology analysis to including societal developments in the innovation processes.

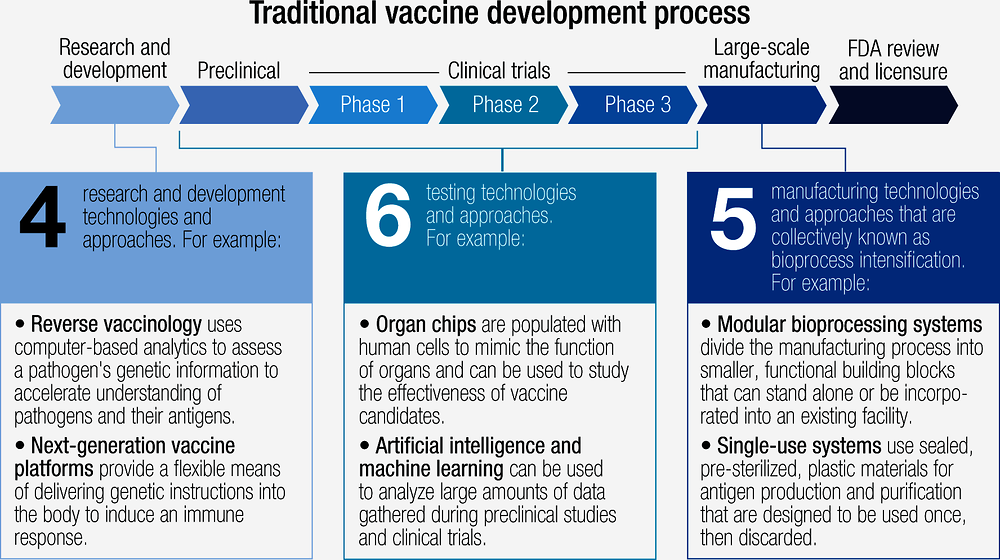

Crises and societal missions are driving what could be termed “solution-centric” TA. In the Netherlands, the Rathenau Institute develops TAs focusing on problems such as deepfakes (synthetic media) (STOA, 2021[50]) and cyber resilience (van Boheemen et al., 2020[51]). In the United States, the Government Accountability Office (GAO) has been focusing on problems like reducing freshwater use in hydraulic fracturing and power plant cooling and tracing the source of chemical weapons (GAO, 2020[52]). One recent TA by GAO assesses the vaccine development chain for infectious diseases (see Figure 6.2). Here, the goal was to identify key technologies that could enhance the ability of the United States to respond rapidly and effectively to high-priority infectious diseases through rapid vaccine development.

Figure 6.2. GAO: Assessment of vaccine development technologies

Exogenous forces: A proliferation of crises. Proliferating crises – e.g., the COVID-19 pandemic, Russia’s war of aggression against Ukraine and the subsequent energy crisis, and the local effects of extreme events such as droughts, flooding and forest fires linked to climate change – reshape the requirements for strategic intelligence. As a recent example, the rapid spread of COVID-19 caught most nations off guard, requiring accelerated technology development and deployment of vaccines and ventilators, as well as knowledge about the virus, its spread, and mutations. Governments around the world had to deal with a crisis featuring high scientific uncertainty, making rapid decisions that would affect national populations and beyond, owing to mobility restrictions. Crises require urgent action. They put pressure on the production of useful and timely strategic intelligence to shape actions in near-real time. TA practitioners are also challenged to incorporate detailed investigations into the rapid scaling and diffusion of new and emerging technologies, and to consider the societal, economic, and environmental effects of rapid scaling.

Challenges and policy considerations

While global TA practice is still rife with techno-centric TA activities, solution-based and crisis-driven TAs are increasing, bringing with them many questions regarding tools and processes. How wide a portfolio of technologies is there to explore? What is the scope of the TA study? What sort of inclusion is needed to build trust and harness collective intelligence? How rapidly is the intelligence from TA needed for decision-making, and how does this balance with the depth and breadth of TA analysis?

The trend towards mission-oriented innovation policies (see Chapter 5) requires identifying and enacting core societal values that should drive technical change. TA is well placed to spell out these values, particularly around controversial technologies. However, the increasing complexities of emerging technologies and their impacts make it necessary to move beyond techno-centric perspectives. Adopting a socio-centric approach, in turn, increases the complexity and information requirements of not only technology options, but also of the value chains and systems involved.

Crises increase demands on rapid sourcing and scaling of technology solutions. However, uncertainty in both the emerging technology options and the impacts generated as they scale increases the need for controlled speculation on both the mechanisms for rapid scaling and the various facets of scaling. TA and other intelligence sources, such as Foresight, are potential approaches to this end.

Box 6.1. Considerations for Robust Technology Assessment

1. Fitness for purpose. TA processes should be aligned with goals, such as (1) promoting deliberation and gauging opinions, (2) providing information on key trends, and (3) building agendas. Clear articulation of the different steps and activities that will fulfil the goals of the TA will be valuable in determining the appropriate methods and approaches.

2. Clarity in scope. TA must be clear about which level of analysis it undertakes. Is it technology-centric (e.g., quantum computing), does it have a value chain focus (e.g., food supply chains), or does it take a sociotechnical-system perspective (e.g., mobility)? The scope and granularity of the TA activity should be connected to the goal of the TA exercise, since each perspective requires a different range of expertise, evidence tools and processes.

3. Smart and inclusive participation. TA requires participation of stakeholders with different kinds of expertise and experience. The inclusion criteria depend on several constraints: the resources available (i.e., staffing and funding), the scope (identifying relevant social groups based on the topic and scope of TA), and the time available (limited time may require restricting and focusing inclusion). Robust TA mobilises approaches such as the European Parliament’s STEEPED approach (Van Woensel, 2021[54]), which undertakes a comprehensive scan of social, technological, economic, environmental, political, ethical and demographic aspects (Van Woensel, 2020[55]) as a means to identify relevant stakeholders.

4. Explicit with regards to values, frames, and biases. Some forms of TA bring together stakeholders to explore the impacts of technology on their professions, their personal lives, and the broader sociotechnical systems that make up society. Naturally, different stakeholders will have their own perspectives, and it is therefore important to understand (a) the contexts in which professionals and lay persons operate, and (b) the various biases that may shape both their opinions and reactions to others. Trustworthy TA brings to light values, frames, and biases.

5. Usability. TA is important for structuring disparate and unclear information, thereby providing decision makers with understandable interpretations. Robust TA should demonstrate careful consideration of the target audience for the intelligence produced, and of this audience’s absorptive capacity.

Inclusion and alignment: Engaging stakeholders and society upstream

Achieving an anticipatory system of technology governance will require recognising the central role of citizens and stakeholders in ensuring the use of trusted and trustworthy technology in society. Contemporary sociological accounts of the relationship between science, technology and society demonstrate that knowledge is increasingly produced in contexts of application, publics are aware of how STI affect their interests and values, and these interests can shape innovation (Jasanoff, 2007[56]). The numerous forms of stakeholder participation in the communication and making of science and technology contradict the so-called “deficit model” of publics as largely ignorant and irrational (Wynne, 1991[57]). But misunderstandings still exist (Chilvers and Kearnes, 2015[58]). Upstream stakeholder engagement can help frame – and reframe – the issues at stake (Jasanoff, 2003[59]) and “open up” important new questions (Stirling, 2007[60]). It must also be translated into practice, so experimentation and knowledge sharing will be important. Reviewing a large body of literature on societal engagement in the context of emerging technologies, this section focuses on how to engage societal stakeholders upstream in technology development to promote trust and trustworthiness.

Rationales: The need for upstream stakeholder engagement in innovation

Why is engagement necessary from the perspective of achieving an anticipatory and inclusive technology governance system? First, engagement can surface societal goals for emerging technology at different points in the complex innovation system, from agenda-setting to product design and diffusion, contributing to a better alignment of technological development with social needs (von Schomberg, 2013[23]). Such alignment, unfolding in an iterative process, is one of the key functions of emerging technology governance and responsible innovation.

Second, engaging societal stakeholders earlier in the development process can help spot public sensitivities and ethical shortcomings. Societal stakeholders bring experiential knowledge to societal problems (OECD, 2020[33]) and offer the perspectives of future users (Kreiling and Paunov, 2021[31]). This diversifies the types of expertise that are included during technology development, potentially pointing to application challenges, or raising questions that innovators do not anticipate, even with their knowledge and expertise. Such diversity has the potential to locate certain biases that are built into digital and other technologies. Subsequent design considerations could help foster societal acceptance and avoid backlashes and controversies that could lead to adoption failures (OECD, 2016[61]), and manage expectations for future products and services.

Third, stakeholder engagement promotes public understanding of science and technology, and enhances the societal capacity for deliberating on technological issues. Such deliberation and consultation can breed trust and enrich the relationship between science and society – although pre-ordained consultation can undermine engagement as a trust-building exercise (Wynne, 2006[62]).

Fourth (and related to the first point), societal engagement presents an opportunity to bring representatives from diverse cultures, demographics, ages, social structures, and skill levels to the innovation process. Including their views, and building stakeholder capacity, not only addresses forms of rooted exclusion but could render technologies more relevant to broader social groups.

Trends in upstream societal engagement

Use of new digital technologies. Digitalisation advanced the use of atypical engagement formats, such as online tools or immersive virtual-reality technologies and simulation, although traditional paper-based or face-to-face approaches are still used most frequently (BEIS, 2021[63]).

Iterative and sequenced engagement. Staged approaches have become more frequent. One example is the “IdeenLauf” (“flow of ideas”) initiative during German Science Year 2022, which collected societal impulses to inform science and research policy. First, citizens submitted over 14 000 questions for science. Second, the questions were consolidated, complemented by additional texts to provide relevant context, and discussed among scientists and selected citizens. Third, citizens commented on the text via online consultation. The final report was presented to policy makers and researchers in November 2022.4

Directionality: Focus shifts from technologies to missions, goals, and future products. Emerging technologies are often not yet embodied in future products or services, complicating exchanges between technology experts and broader publics. One trending response to this challenge has been to focus the engagement exercise on issues that societal stakeholders can more easily relate to. An example in the area of future mobility is the “GATEway” project in the United Kingdom, which conducted live public trials on connected and autonomous vehicles resulting in insights on public acceptance of, and attitudes towards, driverless vehicles (BEIS, 2021[63]).

Focus on diversity. There exists momentum to ensure age, ethnic, gender, cultural and other forms of diversity in the make-up of the “publics” engaged in consultation. However, practitioners still perceive a diversity gap both in the theory and practices of engagement, resulting in problems for both sides. On the one side, some communities are not solicited and are thus unable to provide inputs. On the other side, technology experts do not learn about the needs and values of these future users.

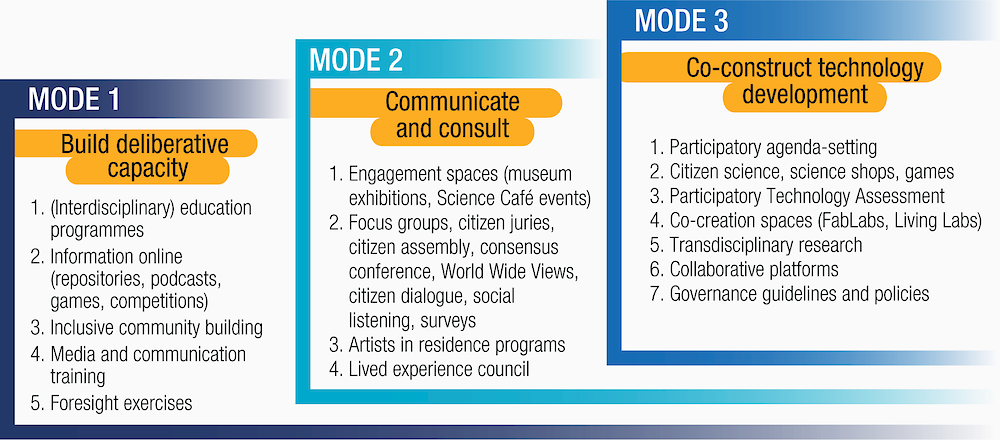

Upstream societal engagement: Three modes and examples

Engagement techniques can be categorised under three main groups, corresponding to their different purposes (Figure 6.3). Mode 1 (capacity-building) can be viewed as a prerequisite that establishes the conditions for effective societal engagement and democratic governance. Mode 2 (communicate and consult) gathers the views of citizens or informs them, which may have an indirect influence on technology governance decisions. Mode 3 (co-construct technology development) engages societal stakeholders more directly in the construction of science and technology.

Figure 6.3. Each engagement mode comes with a set of engagement techniques

Source: Developed by the authors.

Clarity on the rationale for stakeholder engagement and its timing – before, during or in parallel to the technology development process – is essential when deciding on a suitable societal engagement technique. Deliberative capacity-building (Mode 1) acts as a foundation or enabler of societal engagement and occurs during and alongside innovation processes. Societal engagement exercises before or during the research planning phase tend to focus on communicating with or consulting societal stakeholders (Mode 2). Engagement efforts to co-construct science and technology pathways (Mode 3) occur during development, e.g., of prototypes or testing at scale.

Mode 1: Building deliberative capacity

Anticipatory governance has been defined as “a broad-based capacity extended through society that can act on a variety of inputs to manage emerging knowledge-based technologies while such management is still possible” (Guston, 2013[22]). Mode 1 activities (see Table 6.1) help build the capacity of publics and innovators to engage in deliberative processes and contribute constructively to governance discussions. They can include techniques (such as communication training) aimed at scientists and innovators, programmes to involve them in the science policy process, and multidisciplinary work that embraces the social sciences and humanities. Other engagement techniques (like science and science policy training) focus on journalists and the media.

Table 6.1. Engagement techniques and rationales: Mode 1

| Mode 1: Building deliberative capacity | |
|---|---|
| Techniques and rationales | |

These activities also tend to focus on assembling and empowering specific stakeholder groups around technology development, design, and governance. For instance, the European Human Brain Project built an inclusive community for the EBRAINS research infrastructure. This network of external collaborators (including patient associations, clinicians, and industry) brings together those who are particularly concerned by future technology applications.5 The project also provides information platforms and games to build knowledge and skills at the interface of science and society. Two examples in the field of synthetic biology are the citizen game “Nanocrafter” and the annual “iGEM” student competition,6 both of which also feature community-building elements. The European Commission’s e-learning platform, “Digital Skillup”, is designed for both beginners and advanced users. It helps them explore emerging technologies and their impact on everyday life and offers training on topics like cybersecurity or the digital revolution.7 In the United States, the Science and Technology Policy Fellowships of the American Association for the Advancement of Science place talented scientists and engineers in positions of federal policy making, furthering the training of a cadre of communicators and contributors across the science and society divide.8

Mode 2: Communication and consultation

Mode 2 pertains to engagement techniques aiming to gather stakeholder views. While their outcomes and influence on the innovation process are often indirect, they do have capacity-building elements. For example, a UK citizen jury exercise to understand public attitudes towards ethical AI also resulted in participants gaining a better understanding of automated decision systems (BEIS, 2021[63]).

Table 6.2. Engagement techniques and rationales: Mode 2

| Mode 2: Communication and consultation | |
|---|---|
| Techniques and rationales | exhibitions to engage publics in “engagement spaces” such as science museums, libraries, universities, and science cafés; citizen juries, citizen assembly, consensus conference, World Wide Views, citizens’ dialogues; foresight and gaming; focus groups and surveys for public consultation to gather views; lived experience council to consult with those concerned; artists in residence programmes to promote exchanges between scientists and artists and engage the local community. |

Mode 2 contains a wide array of mechanisms and processes for soliciting views and attitudes towards emerging technology (see Table 6.2). Processes can vary from a one-off citizen dialogue to a sequence of meetings and conversations lasting many months. An important consideration across many Mode 2 engagement techniques is the need to design engagement spaces. This includes not only the location’s selection, accessibility, and institutional affiliation, but also the types of event formats and interactivity. For example, public outreach in science museums may take the form of exhibitions or room for experimentation. At Science Café events, on the other hand, scientists may engage with lay persons and discuss their research. Each form of consultation requires a different engagement space.

Mode 3: Co-constructing science and technology development

Mode 3 encompasses the wide variety of modalities for direct contribution by stakeholders and even publics to the creation of new knowledge and technology. As shown in Table 6.3, these techniques and processes promote exchanges between innovators and societal stakeholders that may explore complex and controversial questions and capture deeper underlying values and trade-offs. The exchange is bidirectional, resulting in the “co-construction” or “co-creation” of STI (König, Baumann and Coenen, 2021[64]; Kreiling and Paunov, 2021[31]).

Table 6.3. Engagement techniques and rationales: Mode 3

| Mode 3: Co-construct technology development | |
|---|---|
| Techniques and rationales | |

Mode 3 engagements can occur at different stages in the innovation process.

Agenda-setting: engagement typically occurs in participatory agenda-setting exercises, using formats like “decision theatres” or “social foresight labs”. The rationale is to co-create or inform research agendas (Matschoss et al., 2020[65]) by involving, for example, patient groups (Scheufele et al., 2021[66]). It can also be to integrate the needs of rural areas and indigenous communities in research and innovation processes (Schroth et al., 2020[67]).

New knowledge creation: community-based research strives to build equitable partnerships based on long-term commitment and applies interventions that are beneficial to all stakeholders involved (Baik, Koshy and Hardy, 2022[68]). This category also includes different forms of citizen science and transdisciplinary research (OECD, 2020[33]), both of which are premised on the power of experiential and acquired expertise in the creation of new knowledge. One example is the German funding initiative for citizen science, which is extending support to 28 projects in two phases between 2017 and 2024.9

Prototype development: the prototype stage is an important innovation milestone, and engagement is increasingly considered critical to its success. The user-centric methods for the development and testing of prototypes have been evolving. For example, Rodriguez-Calero et al. (2020[69]) identified 17 strategies to engage stakeholders with prototypes during front-end design activities in the area of medical devices.

Deployment and testing at scale: Maker spaces have been used to engage societal stakeholders. For example, the “Lorraine Fab Living Lab”10 tests prototypes and prospectively assesses innovative usages, combining elements of FabLabs and Living Labs (Engels, Wentland and Pfotenhauer, 2019[70]).

Engagement governing scientific conduct: These occur alongside technology development processes and could result in the development of guidelines, such as on human genome editing (Iltis, Hoover and Matthews, 2021[71]). The term “open innovation” describes the opening of the innovation process. In the private sector, open innovation happens when future consumers are included in “customer co-creation” activities (Piller, Ihl and Vossen, 2010[72]), resulting in “prosumers” (Rayna and Striukova, 2015[73]). Initiatives are underway to build industry tools for engagement. One such initiative is the “Societal engagement with key enabling technologies” (SOCKETS)11 project supported by the European Commission (2020-23), which develops and tests methods to engage citizens in the industrial development and use of key enabling technologies.

Challenges and policy considerations

Despite their importance, establishing and running engagement initiatives upstream in the innovation process can be challenging, both from a procedural and organisational standpoint. Procedural challenges relate to the context and impact of the engagement exercise. Concretely, this means using the appropriate channels to ensure that inputs from engagement reach relevant decision makers and innovators and that engagement exercises are not perceived as an additional requirement which is met with a “tick-box mentality” of innovators. Moreover, processes tend not to recognise that experts and communities have different stakes, with traditional decision makers having more to gain and marginalised communities potentially having more to lose. Hence, another issue lies in the power relations between technical experts and societal stakeholders (see Chapter 4). Implementing meaningful participation requires capacity-building and training, as well as developing formats, procedures and a framework that enable members of the public to participate in the process (Schroth et al., 2020[67]).

Organisational challenges revolve around selecting and motivating stakeholders. In this respect, both the scope of the perceived societal impact of the technology and the societal relevance of the research are key. In the case of emerging technologies, relevance and urgency for stakeholders may not be high (de Silva et al., 2022[74]). Still, some technology solutions may affect a smaller group of (local) stakeholders, while others could impact broader groups and cover a geographically larger (global) scale. Lack of relevance, expertise, trust, skills, motivation, incentives, time, and financial resources are common engagement barriers across all stakeholder groups.

As diversity, equity and inclusion become dedicated goals, stakeholder differences in terms of knowledge, ways to communicate, values, expectations, contextual understanding, and routes to forming opinions may become even more pronounced. Allowing an open yet focused debate by balancing between an overly narrow and an open framing of the issues is essential to handle differences and disagreement, facilitating deliberation without forcing consensus (Bauer, Bogner and Fuchs, 2021[75]).

Box 6.2. Policy considerations for conducting effective societal engagement

Procedural aspects

Deploy engagement techniques sequentially to bring societal stakeholders into the innovation process at different stages. This means organising a series of societal engagements so that they build on each other and inform different dimensions of the technology governance process.

Frame engagement around societal missions and goals, as early-stage emerging technology may appear abstract to societal stakeholders. Effective engagement uses narratives that focus on anchors to which stakeholders can relate.

Provide training and incentives to innovators to nurture a culture of engagement and inclusion, so that engagement outcomes are linked with decision-making processes and embedded in innovators’ core activities.

Organisational aspects

Identify and select relevant stakeholders depending on the scope of the engagement exercise and the technology’s societal impact. Consider mobilising civil society or advocacy groups that represent societal members with high personal stakes in the R&D process, as well as positively seeking out diversity.

Make diversity, equity, and inclusion key design goals for engagement in Mode 1, Mode 2, and Mode 3. This means involving various types of expertise and creating an environment that allows an open yet focused debate, facilitating deliberation without forcing consensus. Funding structures can motivate innovators to engage with broad and diverse communities.

Build capacity and minimise barriers to entry for societal stakeholders to participate in engagement exercises. Suitable formats and effective procedures are essential to attract and retain committed participation.

Adaptivity: Co-developing principles, standards, guidelines, and codes of practice

Compared to strategic intelligence and societal engagement, norms and institutions are the more typical tools of technology governance through, for example, regulation, rules, and standards by authoritative bodies. However, while they will be necessary in certain situations, formal regulatory approaches that use norms to define permissible and impermissible activities, along with sanctions or incentives to ensure compliance, may present disadvantages in more upstream contexts. First, the speed of technological advances makes it difficult for regulation to keep up. Second, novel ethical, social, and economic issues can operate outside or across regulatory jurisdiction and expertise. Third, applications across multiple industries and government agencies can create interagency co-ordination problems. For all these reasons, formal regulatory approaches may be ill-suited to govern emerging technology, at least in the earlier stages of development (Marchant and Wallach, 2015[76]; Hernández and Amaral, 2022[77]; OECD, 2019[78]). Further, attempts to govern emerging technology could derail innovative approaches, prompting concerns that companies and technologies may simply move across borders (Pfotenhauer et al., 2021[79]).

The OECD is rethinking regulatory policy to document and encourage more agile regulatory governance using a wide array of approaches (OECD, 2021[15]). One such approach might be to use principles, standards, guidelines, and codes with moral or political force but without formal legal enforceability. These “soft law” approaches may provide a number of advantages in terms of multisector co-operation and cross-jurisdictional flexibility (García and Winickoff, 2022[80]). For instance, Gutierrez, Marchant and Michael (2021[81]) have pointed to the adaptivity of soft law in governing AI, noting that “AI’s dynamic and rapidly evolving nature … make it challenging to keep in place. In these scenarios, soft law…can transcend the boundaries that typically limit hard law and, by being non-binding, serve as a precursor or as a complement or substitute to regulation.” Indeed, soft law is an increasingly important mode of governance for emerging technology (Hagemann, Huddleston and Thierer, 2019[82]). Nevertheless, its effective deployment presents both opportunities and challenges. In the current context, soft law – in all its different forms – should be considered an important tool for achieving an emerging technology governance system that is more anticipatory, inclusive, and adaptive.

Rationale

Guidelines, standards and codes of practice come in different types and serve different rationales. Organisations create high-level principles that communicate a joint commitment to ideals and values-based operations. Standard-setting bodies – such as the Institute of Electrical and Electronics Engineers (IEEE) and the International Organization for Standardization (ISO) – develop technical norms to guide communities of practice. Professional groups and firms also often ask their members to follow certain rules and codes of conduct. Governments can publish guidelines while threatening to pass enforceable laws as a backstop in the event of insufficient adherence. Finally, voluntary programmes, labels or certification schemes may drive markets, and ultimately the adoption of best practices.

Trends and examples

Public international principles: OECD recommendations

In situations where new international legal treaties are rarely achieved, principles can be an attractive modality for international, transnational and/or global actors to make moral and political commitments with some flexibility and accommodation for differences and changing circumstances. Principles can operate at the international level through a number of organisational sources, from the United Nations to the Council of Europe and the OECD. The OECD offers salient examples of public international recommendations that present principles in the field of technology governance. OECD recommendations feature regular reporting requirements by Adherents, to promote progress in their implementation as well as transparency. Recent recommendations and implementation work include:

May 2019: the Recommendation of the Council on Artificial Intelligence (OECD, 2019[17]), under which the OECD convened a multi-stakeholder group, developed a practical toolkit, created an “observatory” of existing policies to promote mutual learning, and established a new OECD Working Party on AI Governance.

December 2019: the Recommendation on Responsible Innovation in Neurotechnology (OECD, 2019[11]), which seeks to anticipate problems during the course of innovation, steer technology towards the best outcomes, and include many stakeholders in the innovation process.

October 2021: the Recommendation for Agile Regulatory Governance to Harness Innovation (OECD, 2021[15]), which provides guidance for policy makers to design agile regulations that can address the regulatory challenges and opportunities arising from emerging technologies.

Public-private international standards

Other important technology governance mechanisms arise at the public and private interface. As a case in point, ISO is an independent, non-governmental international organisation with a membership of 167 national standards bodies. Among other things, ISO sets many technical standards in the arena of emerging technology, which are developed through a stakeholder-driven process at a fairly high level of technical detail. ISO/TR 12885:2018 on health and safety practices in occupational settings of nanotechnologies is a good example of a technical governance standard.12 This standard focuses on the occupational manufacture and use of manufactured nano-objects, and their aggregates and agglomerates greater than 100 nanometres.

Codes of practice

Codes of scientific and engineering practice

Novel and specialised codes of practice in science and engineering are sometimes deployed before new technologies hit the market, when their potential risks and harms are anticipated but not well-known, or the work has significant ethical implications. These can cross over into public funding agencies through policy. A good example of guidelines that have influenced both the public and private sectors is the set developed by the International Society for Stem Cell Research (ISSCR) (Box 6.3).

Box 6.3. Guidelines on the ethics of stem cell research as a self-regulatory approach

ISSCR is an independent global non-profit organisation that promotes excellence in stem cell science and therapies. Founded in 2002, the ISSCR consists of 4 500 scientists, educators, ethicists, and business leaders across 80 countries. ISSCR members make a commitment to uphold the ISSCR Guidelines for Stem Cell Research and Clinical Translation (ISSCR Guidelines), an “international benchmark for ethics, rigor, and transparency in all areas of practice.” 1

Although not directly enforceable, the guidelines provide regulators and research funders with a framework for the regulatory oversight of stem cell research and clinical translation, including recent advances related to embryo models, chimeric embryos and mitochondrial replacement (Anthony, Lovell-Badge and Morrison, 2021[83]). The guidelines can be indirectly enforced by research institutions, funding agencies and scientific journals that require scientists to comply (Marchant and Allenby, 2017[34]).

In 2021, the ISSCR updated its guidelines to address advances in stem cell science and other relevant fields since the previous update in 2016. These advances included human embryo culture, organoids, mitochondrial replacement, human genome editing and prospects for obtaining in vitro-derived gametes. The guidelines directly address new ethical, social and policy issues that have arisen, and provide recommendations for oversight.

1. https://www.isscr.org/guidelines (accessed 22 September 2022).

Industrial codes of practice

Many companies find it advantageous to work at the industry-wide level to design joint solutions to governance in the form of self-regulation. For example, the biopharmaceutical industry is experiencing intense changes, with a number of frontier technologies impacting the way it does research, commercialises its products, and collaborates with partners and stakeholders across the world. At the industry level, the International Federation of Pharmaceutical Manufacturers and Associations has responded by creating new bodies, like global future health technologies and bioethics working groups, to consider the next generation of risks, benefits, and standards, with a view to updating its “Code of Practice”.13 Another example is the International Gene Synthesis Consortium (Box 6.4), which has developed a strong network and commitment to biosecurity measures in the industry.

Box 6.4. International Gene Synthesis Consortium

Synthetic biology, also known as “engineering biology”, is a multidisciplinary field that “integrates systems biology, engineering, computer science, and other disciplines to achieve the ’modification of life’ or even the ’creation of life’ via the redesign of existing natural systems or the development of new biological components and devices” (Sun et al., 2022[84]). Several major breakthroughs have occurred over the last two decades, including the development of the first synthetic cell at the James Craig Venter Institute in 2010 (Trump et al., 2020[85]), advances in DNA synthesis and assembly (Sun et al., 2022[84]) and the adoption of the Clustered Regularly Interspaced Short Palindromic Repeats-associated protein system (CRISPR/Cas) for genome editing in eukaryotic cells (Cong et al., 2013[86]). Like other emerging technologies, synthetic biology is a rapidly advancing field that has outpaced its current regulatory framework and is likely to have disruptive impacts.

The power to design organisms carries risks in terms of biosecurity. Formed in 2009, the International Gene Synthesis Consortium (IGSC) is an industry-led group of gene synthesis companies and organisations. Currently, IGSC members represent approximately 80% of commercial gene synthesis capacity worldwide. IGSC was created to develop the Harmonised Screening Protocol, now in its second version. Under the protocol, IGSC members test the complete DNA and translated amino acid sequences of every double-stranded gene order against a curated regulated pathogen database derived from international pathogen and toxin sequence databases.1

The current version of the Harmonised Screening Protocol, which amounts to a private standard enacted to protect the public, was launched in 2017.2 More recently, in the context of the rapid pace of technological change in the field, some industry and academic actors have publicly called for a process to update the protocol that should include the synthesis companies themselves, policy makers, science and technology funders (both public and private), and the broader synthetic biology community (Diggans and Leproust, 2019[87]).

1. https://genesynthesisconsortium.org (accessed 23 September 2022).

2. https://genesynthesisconsortium.org/wp-content/uploads/IGSCHarmonizedProtocol11-21-17.pdf (accessed 20 September 2022).
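The screening step described in Box 6.4 can be illustrated with a minimal sketch. It is not the IGSC implementation: the sequences, the database entries and the function names (`screen_order`, `six_frame_translations`) are hypothetical, the use of six reading frames is an assumption, and exact substring matching stands in for the similarity search against curated databases that a real screening system would rely on. The sketch only shows why an order is checked at both the DNA and the translated amino acid level.

```python
from itertools import product

# Standard genetic code, codons enumerated in T, C, A, G order.
BASES = "TCAG"
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): a for c, a in zip(product(BASES, repeat=3), AMINO)}
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(dna: str) -> str:
    """Return the opposite strand, read 5' to 3'."""
    return dna.translate(COMPLEMENT)[::-1]


def translate(dna: str) -> str:
    """Translate complete codons; unknown codons become 'X'."""
    codons = (dna[i:i + 3] for i in range(0, len(dna) - 2, 3))
    return "".join(CODON_TABLE.get(codon, "X") for codon in codons)


def six_frame_translations(dna: str) -> list[str]:
    """Translate both strands at offsets 0, 1 and 2 (an assumption of this sketch)."""
    strands = (dna, reverse_complement(dna))
    return [translate(s[offset:]) for s in strands for offset in range(3)]


def screen_order(dna: str, flagged_dna: dict, flagged_peptides: dict) -> list:
    """Return (level, entry_name) pairs for every toy database entry found in the order."""
    dna = dna.upper()
    strands = (dna, reverse_complement(dna))
    hits = []
    for name, seq in flagged_dna.items():
        if any(seq in strand for strand in strands):
            hits.append(("dna", name))
    frames = six_frame_translations(dna)
    for name, peptide in flagged_peptides.items():
        if any(peptide in frame for frame in frames):
            hits.append(("protein", name))
    return hits


# Made-up database entries; they do not correspond to any real organism.
TOY_DNA = {"toy_dna": "ATGGCCATTGTAATGGGCCGC"}
TOY_PEPTIDES = {"toy_pep": "MAIVMGR"}

# Same peptide written with different (synonymous) codons.
recoded_order = "ATGGCTATCGTTATGGGAAGA"
print(screen_order(recoded_order, TOY_DNA, TOY_PEPTIDES))
```

In this toy setting the recoded order does not match the flagged DNA entry but still matches at the protein level, which suggests why a protocol would examine translated sequences as well as the DNA itself.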

Self-regulatory product or process standards

Technology-based standards determine the specific characteristics (size, shape, design, or functionality) of a product, process, or production method. These standards are an important form of governance that can emanate from both the private sector (e.g., de facto standards in the form of dominant designs) and the public sector (e.g., government-regulated vehicle safety standards or mobile phone frequency bands). Environmental non-governmental organisations (NGOs) are partnering with industry on the development of product standards for new food products driven by new and emerging technologies. These partnerships can help generate standards or certification schemes that may command premiums in the market.

Co-developed product standards have potential utility for “upstream governance” because retailers can leverage their market power to influence how technology developers are considering unanticipated consequences throughout the supply chain, from design and sourcing to disposal. Companies are accountable as they have a duty to report on their activities to their investors. They have the power to “bake in” these concerns as the new technologies, chemicals and innovations develop.

Recently, the Environmental Defense Fund, a US-based NGO, worked with the private sector to develop principles and standards to ensure the environmental sustainability of cell-based meat and seafood. This guidance allows companies to assess these products’ potential impacts on human health, the environment and society, and to communicate the implications to stakeholders clearly and transparently (Environmental Defense Fund, 2021[88]). An important question was how to translate the mechanisms and principles of co-design and upstream engagement into practice. Involving multiple stakeholders was key to ensuring the quality and legitimacy of the guidance.

“By-design” approaches

With the “ethics-by-design” or “sustainability-by-design” approach to governance, some firms and regulatory agencies assess and build in the sustainability or ethical implications of new technologies at different stages of technology development. The “Safe(r)-by-Design” concept, for instance, encourages industry to reduce uncertainties and risks to human and environmental safety, starting at an early phase of the innovation process and covering the whole innovation value chain (or life cycle for product development) (OECD, 2022[89]).

This ethics-by-design approach seeks to embed ethics and societal values – such as privacy, diversity, and inclusion – through clear protocols (e.g., search protocols in AI). Analytical tools can serve to assess privacy impacts, safety impacts, diversity, inclusion, and human rights impacts, and avoid bias. At the December 2021 Summit for Democracy, the United States announced new international technology initiatives including International Grand Challenges on Democracy-Affirming Technologies to drive global innovation on technologies that embed democratic values and principles (Matthews, 2021[90]). In July 2022, the United States and the United Kingdom co-launched “a set of prize challenges to unleash the potential of privacy-enhancing technologies (PETs) to combat global societal challenges”, making sure privacy and trust are at the heart of the design process (The White House, 2022[91]).

Challenges and policy considerations

Principles, guidelines, standards, and codes of practice face some challenges. First, they may lack the formal legitimacy of regulations, which are derived from governments’ legislative authority. This means that they may escape some of the formal procedures required to enact regulations, such as transparent and accountable public comment periods, and structured stakeholder engagement.

Second, the efficacy of these systems must be better addressed should “soft law” become an even more important tool (Hagemann, Huddleston and Thierer, 2019[82]). Third, the existence of too many non-binding sets of norms in a particular terrain may cause overlaps, impeding efficacy across the complex system of actors and institutions that make up global governance (Black, 2008[92]).

Box 6.5. Policy considerations for co-developing principles, guidelines, standards, and codes

Perform empirical analysis of diverse mechanisms and tools, recognising their interplay with regulation, to optimise their use and further increase the credibility and effectiveness of technology governance.

Co-design

Ensure “meaningful” participatory mechanisms where concerned stakeholders (both citizens and SMEs) are invited into the design of both technologies and governance systems. Principles, standards, guidelines, and codes of practice should be transparent and built on evidence, so that they are accountable not only to industry, but to the public.

Perform outreach to ensure effective standardisation. This includes SMEs, which often do not have the resources to contribute effectively to standardisation. Include user groups (like patients), regulatory authorities, social scientists, philosophers, and civil society in standard-setting. This co-creation is critical in the early phases of development of soft law instruments for self-regulation. For instance, co-design will enhance the likelihood of public acceptance, which will facilitate the use of technology at scale to enhance and save patients’ lives.

Compliance

Develop oversight mechanisms for implementation and compliance, including third-party audits of technology governance as part of an effective quality control infrastructure.

Consider other mechanisms like liability regimes with contractual force, external ethics committees, insurance companies that might require compliance and performance, and government off-ramps (if the conditions of governance are not satisfied, the government regulator will step in).

Strengthen the use of and compliance with governance tools. Tie funding, publication, and regulatory approval to compliance with safety standards; access; transparency; and ethical, legal, and social principles.

By-design

Change the incentives for researchers to promote more transparent processes for the selection, funding, and monitoring of early prototype plans for technology innovation.

Funders could require and provide adequate incentives for peer-review and community engagement during the early design phases of disruptive research.

Towards international co-operation on anticipatory governance

Technological sovereignty as a concept is becoming more pronounced, and more countries are striving for technological sufficiency – if not clear advantages – in specific domains (see Chapter 2). Yet this movement towards national or regional approaches might be out of step with current demands. The global nature of the challenges facing the world today requires greater technological (or other) co-operation. The question is whether – and how – the technological governance framework addresses these dynamics.

The section above pitched the use of such design criteria and tools at the national level. This framework could also encourage technological co-operation at the international level – first, by reinforcing commitment to common values such as human rights, responsibility, economic co-operation, and democratic governance; and second, by paving the way for the development of international approaches, such as good strategic intelligence, stakeholder and societal engagement, and mechanisms like OECD recommendations. As stated previously, international co-operation is a consideration for good emerging technology governance that spans the gamut of values, design criteria and tools.

International co-operation on TA and strategic intelligence

As explored in the above section, anticipatory tools can enhance the capacity to spot issues, understand a given technological and governance landscape, and ultimately make better governance decisions. Across the world, TA, strategic foresight, and other forms of strategic intelligence (such as horizon-scanning) are being applied at the national level to inform national STI policies and technology governance.

One clear gap in the landscape of strategic intelligence lies in the international arena. International technology decision-making on the possible limits on geoengineering,14 human augmentation15 and AI will require strategic intelligence sources that are trusted across countries and sectors. Commonly recognised evidence can serve as the foundation of agreement on different forms of governance. The Intergovernmental Panel on Climate Change, which has supported climate co-ordination and co-operation under the Paris Agreement/COP21, is a case in point. Such global forward-looking analysis could be informed by and link to so-called “global observatories”, which aggregate policy approaches and technology developments. The AI Observatory at the OECD is a good model, with its searchable database of AI policies and normative instruments throughout the world, and its hub for expert blog posts and articles. Some have proposed a Global Observatory for Gene Editing which would serve a broader set of functions, notably to enrich ethical, legal, and cultural understandings, and encourage debate among the global citizenry (Jasanoff and Hurlbut, 2018[93]). Collaboration around such efforts at the international level could pool insights on the development and potential impacts of technology, as well as build best practices for collective strategic intelligence.

International stakeholder and public engagement

In this vein, the development of emerging technologies has ramifications for the nature of citizen engagement. For example, geoengineering techniques could affect weather patterns or water supply, with impacts that are not restricted to national borders. AI applications exert profound impacts not only on national but also on global economies. Growing calls for international public deliberation exercises, such as a global citizens' assembly on genome editing (Dryzek et al., 2020[94]), evince the co-emergence of technology and new kinds of global citizenship. Going from the traditionally local or national level to the global scale will require adapting engagement techniques, for example by using formats like World Wide Views.16 However, deciding which stakeholder groups should be involved in global efforts raises questions related to the nature of international publics and the identification of relevant stakeholders.

International co-operation on principles, standards, guidelines, and codes of practice

Addressing governance challenges at the country level runs the risk of being ineffective at best and counter-productive at worst, as particular jurisdictions could exploit the governance gaps to gain advantage. Several of the governance modalities (such as the OECD recommendations) discussed in this chapter operate at an international level, offering an opportunity to co-ordinate and even harmonise different jurisdictions’ approaches. Further, standards emanating from industry groups or public-private partnerships can work transnationally, across and through jurisdictions linked by supply chains, markets, and border-crossing actors.

Conclusion