All OECD countries currently measure population mental health, yet use a variety of tools to capture a multitude of outcomes. In order to improve harmonisation, this chapter poses a series of questions that highlight the criteria to be considered when choosing appropriate survey tools. These criteria include statistical quality, practicalities of fieldwork and data analysis. Overall, there is strong evidence supporting the statistical properties of the most commonly used screening tools for the composite scales of mental ill-health and positive mental health. Four concrete tools (the PHQ-4, the WHO-5 or SWEMWBS, and a question on general mental health status) that capture outcomes across the mental health spectrum are suggested for inclusion in household surveys in addition to already ongoing data collection efforts.

Measuring Population Mental Health

3. Good practices for measuring population mental health in household surveys

Abstract

Countries across the OECD are already implementing a variety of survey tools to measure aspects of population mental health. Chapter 2 highlighted that while there is some degree of harmonisation for outcomes such as risk for depression, life evaluation and general psychological distress, there are gaps in coverage for others: in particular, anxiety; other specific mental disorders (bipolar disorder, PTSD, eating disorders and so on); and affect and eudaimonic aspects of positive mental health. Before settling on a concrete list of recommendations for member countries, this chapter provides an overview of the properties that should be considered when selecting a specific tool to measure these outcomes in household surveys.

While OECD countries are already using a variety of tools, including structured interviews, data on previous diagnoses, experienced symptoms and questions on suicidal ideation and suicide attempts, this chapter will focus on the statistical qualities of three other tools in common use: screening tools1, positive mental health indicators and general questions on mental health status. This is for two reasons. First, these tools are standardised in terms of question formulation and thus provide the easiest foundation on which to make harmonised recommendations. Second, these tools are more commonly featured in general social surveys (as compared to tools for diagnoses or experienced symptoms), which tend to be collected more frequently than health-specific surveys. Taken together, these three tools also provide a holistic measure of mental health, encompassing the full range of outcomes conceptualised by both the single and dual continua (see Figure 1.2), and they provide more nuance than, say, measures of suicidal ideation or attempts. These tools are therefore the most promising basis for pragmatic recommendations that can be taken up by the largest number of countries.

When selecting an appropriate tool, the overarching consideration is how to measure the different facets of mental health most accurately – across countries, groups and time – in a way that can be used by government as a part of an integrated policy approach to mental health. High-quality data are needed to provide insights into how societal conditions (economic, social, environmental) affect the mental health of different population groups and whether these conditions contribute to improving or declining mental health. No data are completely without measurement bias, and it is always important that data collection entities enact rigorous quality controls to minimise the amount of noise in a measure. However, there are challenges specific to the measurement of mental health, due to stigma and bias affecting survey response behaviour, different cultural views and evolving attitudes towards mental health over time. Furthermore, household surveys by definition exclude institutionalised populations, including those in long-term care facilities, hospitals or prisons, as well as people with no permanent addresses, all of whom may have higher-than-average risk for some mental health conditions.

Good practices for measuring mental health at the population level differ in several ways from those for measuring mental health at the clinical level. For national statistical offices or health ministries conducting large-scale, nationally representative surveys, implementing long structured interviews is impractical, even though these may be considered the gold standard from a clinical perspective. The end users of the data are different, and policy makers have different needs from clinicians: tracking overall trends (over time, across at-risk groups, among countries) and identifying factors of risk and resilience in population groups, rather than diagnosing an individual and developing a treatment plan. These needs guide this chapter’s discussion.

This chapter provides a guide to good practices in producing high-quality data on population mental health outcomes, by posing a series of questions for data collectors to consider. High-level findings from this exercise are shown in Table 3.1, below. The specific screening and composite-scale tools included in the table are those that are used most frequently across OECD countries (for more information on each, refer to Table 2.7, Table 2.11, Table 2.12 and Annex 2.B).2 Questions are grouped into three overarching categories, covering (1) statistical quality, (2) data collection procedures and (3) analysis. Evidence from existing research is used to illustrate each question area, rather than to comprehensively assess every mental health tool used by OECD countries. These framing questions serve as a lens for assessing the advantages and disadvantages of different tools for measuring population mental health and to guide the concrete recommendations for tool take-up and harmonisation outlined in the conclusion.

Table 3.1. Overview of mental health tool performance on statistical quality, data collection and analysis metrics

Column groups: Tool Information (Name; Topic coverage and item length; Reference period); Statistical Quality (Reliability; Validity; Low missing rates; High comparability across groups; Sensitive to change); Data Analysis (Normal distribution; Sensitivity/specificity of thresholds); Country Coverage (OECD countries reporting its use).

| Name | Topic coverage and item length | Reference period | Ratings for the seven Statistical Quality and Data Analysis columns, in column order (blank cells omitted) | OECD countries reporting its use |
| --- | --- | --- | --- | --- |
| Validated screening tools for assessing mental ill-health | | | | |
| Psychological distress | | | | |
| General Health Questionnaire (GHQ-12) | Negative and positive affect, somatic symptoms, functional impairment; 12 items | Recently | 🗸 🗴 🗴 ~ 🗴 🗴 | 5 of 37 |
| Kessler Scale 6 (K6) | Negative affect; 6 items | Past 4 weeks | ◯ 🗸 ~ 🗸 | 4 of 37 |
| Kessler Scale 10 (K10) | Negative affect, functional impairment; 10 items | Past 4 weeks | 🗸 🗸 ~ 🗸 | 4 of 37 |
| Mental Health Inventory 5 (MHI-5) | Negative and positive affect; 5 items | Past month | 🗸 🗸 🗴 ~ | 28 of 37 |
| Depressive symptoms | | | | |
| Patient Health Questionnaire-8 or -9 (PHQ-8 / PHQ-9) | Negative affect, anhedonia, somatic symptoms, functional impairment (matched to major depressive disorder per DSM-IV and DSM-5 criteria); 8 or 9 items | Past 2 weeks | 🗸 🗸 🗸 🗸 🗸 | 30 of 37 |
| Patient Health Questionnaire-2 (PHQ-2) | Negative affect, anhedonia; 2 items | Past 2 weeks | ~ ~ ~ 🗸 | 8 of 37 |
| Center for Epidemiological Studies Depression Scale (CES-D) | Negative affect, anhedonia; 20 items | Past week | 🗸 ~ 🗴 🗴 | 1 of 37 |
| Symptoms of anxiety | | | | |
| Generalised Anxiety Disorder-7 (GAD-7) | Negative affect, somatic symptoms, functional impairment; 7 items | Past 2 weeks | 🗸 🗸 ~ ◯ ~ | 11 of 37 |
| Generalised Anxiety Disorder-2 (GAD-2) | Negative affect, functional impairment; 2 items | Past 2 weeks | 🗸 🗸 ~ ~ | 7 of 37 |
| Symptoms of depression and anxiety | | | | |
| Patient Health Questionnaire-4 (PHQ-4) | Negative affect, anhedonia, functional impairment; 4 items | Past 2 weeks | ~ ~ ~ ~ ~ | 13 of 37 |
| Standardised tools for assessing positive mental health | | | | |
| Short Form Health Status (SF-12) | Negative and positive affect, functional impairment (Mental Health Component Summary); 12 items | Past 4 weeks | 🗸 🗸 🗴 | 8* of 37 |
| Warwick-Edinburgh Mental Well-Being Scale (WEMWBS) | Positive affect, eudaimonia, social well-being; 14 items | Past 2 weeks | 🗸 🗸 🗸 ~ 🗸 🗸 ~ | 2 of 37 |
| Short Warwick-Edinburgh Mental Well-Being Scale (SWEMWBS) | Positive affect, eudaimonia, social well-being; 7 items | Past 2 weeks | 🗸 🗸 🗸 🗸 🗸 🗸 ~ | 6 of 37 |
| WHO-5 Wellbeing Index (WHO-5) | Positive affect; 5 items | Past 2 weeks | 🗸 🗸 🗸 | 6 of 37 |
| Mental Health Continuum Short-Form (MHC-SF) | Positive affect, eudaimonia, life satisfaction, social well-being; 14 items | Past month | 🗸 ~ ~ 🗴 | 2 of 37 |
| Single-question self-reported general mental health status | | | | |
| Self-reported mental health (SRMH) | Varies widely, including self-reported: general mental health status; number of mentally healthy days; recovery from mental health condition; satisfaction with mental health; extent to which mental health interferes in daily life; single question | Varied (ranges from current assessment to last 12 months) | ~ ~ 🗴 ◯ | 23 of 37 |

Note: 🗸 indicates that the evidence shows this tool performs well on this dimension; ~ indicates that the evidence shows this tool performs only fairly; 🗴 indicates that the evidence shows this tool performs poorly; and ◯ indicates that evidence is limited or missing. If a cell is blank, this means that no research on this tool / topic combination was reviewed for this publication. * Refers to the fact that Germany included the longer SF-36 (rather than the shorter SF-12) in its 1998 German National Health Interview and Examination Survey; however, the instrument will not be used in future due to licensing fees. Refer to Annex 2.A and Annex 2.B for more information about each tool. Country coverage refers to all OECD countries except Estonia, which did not participate in the questionnaire.

Source: Literature reviewed in this chapter; Responses to a questionnaire sent to national statistical offices in January 2022.

Statistical quality

A suitable measurement instrument for population mental health should perform well across a range of statistical qualities, including reliability, validity, ability to differentiate between different latent constructs, minimal non-response or refusals, comparability across groups and sensitivity to change. In addition, practical considerations surrounding a tool are important, such as keeping it short enough in length, with low redundancy between question items, so as to avoid respondent fatigue. These qualities interact with one another, meaning that in practice the goal is to balance the trade-offs of each in order to find a sensible solution. An instrument that performs well on one quality criterion – e.g. validity – may perform poorly on another – e.g. length of the questionnaire and/or non-response rates. Thus, before choosing a metric, it is important for survey producers to weigh the costs and benefits of each approach to identify a tool suitable for their context.

How reliable are survey measures of mental health?

Measures of population mental health should produce consistent results when an individual is interviewed or assessed under a given set of circumstances. This concept, called reliability, is about ensuring that any changes detected in outcomes have a low likelihood of being due to problems with the tool itself – i.e. measurement error – and instead reflect actual underlying changes in the individual’s mental health (Box 3.1).

Box 3.1. Statistical definitions: Reliability

Two important aspects of reliability are test-retest reliability and internal consistency reliability (OECD, 2013[1]; OECD, 2017[2]).

Test-retest reliability concerns a scale’s stability over time. A respondent is re-interviewed or re-assessed after a period of time has passed, and their responses to a given questionnaire item are compared to one another. The expectation is that (assuming no change in the underlying state being measured) a reliable measure should lead to responses that are highly correlated with one another. There is no fixed rule for the length of time between the initial interview and follow-up: practice ranges from as short as 2-14 days to six months, depending on the assessment type (NHS Health Scotland, 2008[3]).

The test-retest criterion must be applied thoughtfully in the case of mental health measurement instruments, as mental health states (and particularly affective states) can fluctuate over short periods of time for a given individual. This means that measurement instruments addressing specific symptoms or states can be highly reliable yet still produce different results for the same individual over a period of days or weeks, as symptoms and experiences themselves wax and wane. In the context of measuring population mental health outcomes, then, test-retest reliability is particularly relevant for:

Simple measures that concern whether an individual has ever been diagnosed with a mental health condition (where a good instrument should have a very high test-retest correlation)

Establishing whether a short-form measure (or a measure being validated against a clinical diagnosis) is performing with the same test-retest accuracy as a long-form measure (or clinical diagnosis) when the two are administered to the same respondent, and/or

Establishing the broad stability of symptom-based measurement scales over short time periods and across large samples – i.e. while the test-retest correlation of questions for a set of symptoms is unlikely to be perfect for a given individual (if symptoms themselves are not always stable), day-to-day fluctuations in symptoms at the individual level can be expected to wash out across large samples to produce a similar distribution of scores over a short time period (see Note 2).

Assessing test-retest reliability therefore involves a trade-off: measures need to be sufficiently stable, yet sensitive to change over time. An instrument that performs well on test-retest reliability may perform poorly on tests of sensitivity to change, which underscores the importance of looking at statistical quality measures holistically when deciding which tools to implement.
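As a minimal illustration of the test-retest idea described above, the sketch below computes the correlation between two administrations of the same scale to the same respondents. The scores are invented for illustration, the function name `pearson_r` is hypothetical, and the Pearson coefficient is an assumption made here: it is only one of several statistics that could be used, and this chapter does not prescribe a particular one.

```python
from math import sqrt
from statistics import mean


def pearson_r(first, second):
    """Pearson correlation between two equally long lists of scores."""
    mean_1, mean_2 = mean(first), mean(second)
    cross = sum((a - mean_1) * (b - mean_2) for a, b in zip(first, second))
    ss_1 = sum((a - mean_1) ** 2 for a in first)
    ss_2 = sum((b - mean_2) ** 2 for b in second)
    return cross / sqrt(ss_1 * ss_2)


# Invented total scores for eight respondents interviewed twice
# (hypothetical data, not taken from any study cited in this chapter).
wave_1 = [3, 7, 10, 2, 5, 8, 12, 4]
wave_2 = [4, 6, 11, 2, 5, 9, 10, 5]

test_retest_r = pearson_r(wave_1, wave_2)
print(f"test-retest correlation: {test_retest_r:.2f}")
```

A coefficient close to 1 indicates that respondents are ranked similarly at both interviews. As noted above, a lower value does not necessarily signal a poor instrument if the underlying state has genuinely changed between interviews.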

Internal consistency reliability assesses the extent to which individual items within a survey tool are correlated with one another when those items aim to capture the same target construct. In the context of measuring population mental health, this might mean that, in a battery of items designed to measure depression and anxiety, the depression items correlate with one another, and the anxiety items correlate with one another (see also Box 3.3 for a discussion of factorial validity). The most widely used coefficient for internal consistency reliability is Cronbach’s alpha, which is a function of the total number of question items, the covariance between pairs of individual items and the variance of the overall score (see Note 1). Although there is not universal consensus, most researchers agree that a coefficient value between 0.7 and 0.9 is ideal (NHS Health Scotland, 2008[3]). Values below 0.7 may reflect the fact that items within the scale are not capturing the same underlying phenomenon (OECD, 2013[1]), while values above 0.9 may indicate that the scale has redundant items.

Notes:

1. The Cronbach coefficient alpha is commonly used in the literature to assess the internal consistency reliability of multi-item tools. The coefficient is calculated by multiplying the mean covariance between distinct pairs of items by the square of the total number of items included in the scale and dividing this result by the sum of all elements in the variance-covariance matrix (OECD, 2013[1]). This results in a coefficient that typically ranges from 0 (scale items are completely independent from one another, no covariance) to 1 (scale items overlap, complete covariance).

2. The definition of “a short time period” is subjective and can vary depending on circumstance. For example, although the period of a couple of days may be deemed an acceptably short period of time over which a test-retest assessment could be administered, if there were to be an extreme shock in the intervening days, either positive or negative, there would be good grounds to expect change in the underlying distribution. Frequent data collection on mental health during the COVID-19 pandemic illustrated the volatile nature of many affect-based measures, with large spikes coinciding with the introduction / easing of confinement policies.
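The Cronbach's alpha calculation described in Box 3.1 can be sketched as follows. This is an illustrative implementation under stated assumptions rather than a reference one: the item scores are invented, the function names are hypothetical, and sample (n − 1) covariances are used. The second function computes the textbook form of the coefficient, k/(k − 1) × (1 − sum of item variances / variance of the total score), to show that the two formulations agree.

```python
from statistics import mean


def covariance_matrix(items):
    """Sample variance-covariance matrix.

    items[i] holds every respondent's score on item i.
    """
    k, n = len(items), len(items[0])
    means = [mean(scores) for scores in items]
    return [
        [
            sum((items[i][r] - means[i]) * (items[j][r] - means[j]) for r in range(n))
            / (n - 1)
            for j in range(k)
        ]
        for i in range(k)
    ]


def cronbach_alpha(items):
    """Alpha as k^2 * mean off-diagonal covariance / sum of all matrix elements."""
    k = len(items)
    cov = covariance_matrix(items)
    sum_all = sum(sum(row) for row in cov)  # equals the variance of the total score
    off_diagonal = [cov[i][j] for i in range(k) for j in range(k) if i != j]
    return k ** 2 * mean(off_diagonal) / sum_all


def cronbach_alpha_classic(items):
    """Textbook form: k/(k-1) * (1 - sum of item variances / total variance)."""
    k = len(items)
    cov = covariance_matrix(items)
    sum_all = sum(sum(row) for row in cov)
    item_variances = sum(cov[i][i] for i in range(k))
    return k / (k - 1) * (1 - item_variances / sum_all)


# Invented responses: three items answered by six respondents (hypothetical data).
items = [
    [0, 1, 2, 3, 1, 2],
    [1, 1, 2, 3, 0, 2],
    [0, 2, 2, 3, 1, 1],
]

alpha = cronbach_alpha(items)
alpha_classic = cronbach_alpha_classic(items)
print(f"alpha = {alpha:.3f}, classic form = {alpha_classic:.3f}")
```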

The performance of screening tools on measures of reliability varies across tools and the outcomes they measure. There are mixed findings for general measures of psychological distress. The General Health Questionnaire (GHQ-12) as well as the Short Form-36 (SF-36) and its shorter sub-component, the Mental Health Inventory (MHI-5), have been shown to have good reliability (Schmitz, Kruse and Tress, 2001[4]; Ohno et al., 2017[5]; Elovanio et al., 2020[6]; Strand et al., 2003[7]); however, while the longer Kessler (K10) has been shown to be internally consistent, the test-retest reliability of the shorter Kessler (K6) tool has not been assessed in any studies (El-Den et al., 2018[8]; Easton et al., 2017[9]).

Conversely, screening tools for specific mental conditions – especially depression – are the most studied, and they have been shown to be reliable in terms of both test-retest reliability and internal consistency reliability. A meta-analysis of 55 different screening tools for depression found the Patient Health Questionnaire (PHQ-9) to be the most evaluated tool, with a number of studies concluding that both it and the PHQ-8 (a shorter version with the final question on suicidal ideation removed) have high reliability and validity (El-Den et al., 2018[8]). The same report, however, found that the shorter Patient Health Questionnaire-2 (PHQ-2) lacked consistent data on validity and reliability: among the six reports that evaluated the PHQ-2, only one reported on its internal consistency or test-retest reliability (El-Den et al., 2018[8]), which led the authors to caution that the reliability of the PHQ-2 cannot be confirmed with available data. The Center for Epidemiological Studies Depression Scale (CES-D), although less studied than the PHQ, has also been found to have good reliability, on both metrics (Ohno et al., 2017[5]). Among anxiety tools, the Generalised Anxiety Disorder screeners (both the longer GAD-7 and shorter GAD-2) have been found to be reliable, with good test-retest and internal consistency reliability (Ahn, Kim and Choi, 2019[10]; Spitzer et al., 2006[11]).

A study of the Patient Health Questionnaire-4 (PHQ-4), which combines the PHQ-2 and GAD-2 to generate a composite measure of both depression and anxiety, found lower, yet still acceptable, reliability (Cronbach’s alpha > 0.80 for both sub-scales) (Kroenke et al., 2009[12]). Another study of the PHQ-4 found lower inter-item correlations but deemed the reliability to be acceptable given the short length of the scales (Löwe et al., 2010[13]).3 Because Cronbach’s alpha is in part a function of the number of items (refer to Box 3.1), shorter scales will, all else being equal, score lower on tests of internal consistency by construction. However, shorter measures, with less redundancy between question items, are often preferred by survey creators, as they entail a lower burden for respondents.
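The dependence of Cronbach's alpha on scale length can be illustrated with the standardised form of the coefficient. This is a simplifying assumption made here, not a calculation taken from the studies cited: all items are treated as having equal variance and a common average inter-item correlation. The function name and the correlation value of 0.5 are hypothetical.

```python
def standardized_alpha(n_items, mean_inter_item_corr):
    """Standardised alpha: k * r / (1 + (k - 1) * r)."""
    k, r = n_items, mean_inter_item_corr
    return k * r / (1 + (k - 1) * r)


# Hypothetical average inter-item correlation, held fixed while length varies.
mean_r = 0.5

# Item counts chosen to echo scale lengths mentioned in this chapter
# (2, 4, 7, 9 and 14 items); the resulting values are purely illustrative.
alphas_by_length = {k: standardized_alpha(k, mean_r) for k in (2, 4, 7, 9, 14)}

for k, value in alphas_by_length.items():
    print(f"{k:>2} items: alpha = {value:.2f}")
```

With the inter-item correlation held constant, the coefficient rises with every added item, which is why a two-item sub-scale can have a modest alpha even when its items are as strongly related as those of a longer scale.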

Composite scales capturing aspects of positive mental health have also been found to be reliable. A study of the 14-question Warwick-Edinburgh Mental Well-Being Scale (WEMWBS) tool found it to have high test-retest reliability (0.83 at one week) and a high Cronbach’s alpha (around 0.9) (Tennant et al., 2007[14]; NHS Health Scotland, 2016[15]). The authors cautioned, though, that the high Cronbach’s alpha suggests some redundancy in the scale items, a concern that led to the development of the shorter seven-item version (SWEMWBS) (Tennant et al., 2007[14]; NHS Health Scotland, 2016[15]). Multiple studies of WEMWBS and SWEMWBS found them both to have strong test-retest reliability (Stewart-Brown, 2021[16]; Shah et al., 2021[17]). The World Health Organization-5 (WHO-5) composite scale has also been tested for reliability in a variety of settings (Dadfar et al., 2018[18]; Garland et al., 2018[19]). Similarly, the MHC-SF has been found to have high internal reliability, though its test-retest reliability is only moderate (Lamers et al., 2011[20]).

Fewer studies have investigated the reliability of general self-reported indicators of mental health status; however, evidence from the United States suggests that these measures have acceptable test-retest reliability. The health-related quality-of-life tool that the United States Centers for Disease Control and Prevention uses in its Behavioral Risk Factor Surveillance System (BRFSS) survey measures perceived health by combining physical and mental health. A study in the state of Missouri found that the shorter version of the tool, with four items, has acceptable test-retest reliability and strong internal validity, although reliability was lower among older adults (Moriarty, Zack and Kobau, 2003[21]).

Box 3.2. Key messages: Reliability

Most mental health screening tools, including both surveys that identify specific mental disorders and those that identify positive mental health, have been found to have strong reliability, as measured through both test-retest and internal consistency measures.

Test-retest reliability must be considered in tandem with a measure’s sensitivity to change over time, rather than blindly applied as a quality criterion.

There is strong evidence for the reliability of screening tools (especially those focusing on depression) and, to a somewhat lesser extent, positive mental health composite scales. However, fewer studies have been done to assess the reliability of general self-reported indicators of mental health status; more research is needed in this area.

How well does the tool measure the targeted outcome?

In addition to being reliable, a good measurement instrument must be valid, i.e. the measures provided by the tool should accurately reflect the underlying concept. For indicators that are more objective, validity can be assessed by comparing the self-reported measure against an objective measure of the same construct. For example, respondents’ self-reported earnings could in theory be cross-checked with their tax returns, or pay slips, to ascertain whether their response was reported accurately. Of course there are practical reasons that prevent this from being done systematically, but this illustrates that there are ways of assessing the validity of self-reported earnings data. Conversely, it is not possible to ascertain the “objective truth” of a subjective indicator, such as subjective well-being, trust or indeed mental health. This does not mean that validity cannot be assessed: OECD measurement guidelines use the concepts of face validity, convergent validity and construct validity to assess the validity of subjective indicators (OECD, 2013[1]; OECD, 2017[2]) (Box 3.3).

Unlike many subjective indicators, the bulk of screening tools to assess mental health have been validated against diagnostic interviews for common mental disorders, which provide a rigorous assessment of their accuracy and real-world meaning. The most common diagnostic interview against which mental health screening tools are validated is the World Health Organization’s Composite International Diagnostic Interview (WHO-CIDI), which was designed for use in epidemiological studies as well as for clinical and research purposes (see Chapter 2 for more details). This tool makes it possible to measure the prevalence of mental disorders, the severity of these disorders, their impact on home management, work-life balance, relationships and social life, as well as the use of mental health services and medication. Although the CIDI is widely accepted as a gold standard against which mental health survey items should be assessed, it is not immune to criticisms and validity concerns (Box 3.4).

Box 3.3. Statistical Definitions: Validity

Validity is more difficult to ascertain than reliability, especially for subjective data for which an objective truth is unknowable, and which typically cannot be compared to an equivalent objective measure. Three ways of assessing validity for subjective measures include face validity, convergent validity and construct validity.

Face validity evaluates whether the indicator makes intuitive sense to the respondent and to (potential) data users. One way to indirectly measure face validity is through non-responses. High levels of non-response may indicate that respondents do not understand or see the relevance or usefulness of the question. In the case of mental health, high levels of non-response may also reflect a degree of discomfort with the topic due to stigma and bias, rather than lack of face validity. (An extended discussion of non-response and mental health measures appears later in this chapter.) Cognitive interviewing can also be used.

Convergent validity is assessed by how well the indicator correlates with other proxies of the same underlying outcome. Using mental health tools as an example, were a researcher to introduce a new tool to assess anxiety, they could test its convergent validity by comparing it to pre-existing screening tools for anxiety, data on diagnosis or mental health service use, self-reported assessments of anxiety level, and/or bio-physical markers of stress and anxiety (heart rate, blood pressure, neuroimaging, etc.).

Construct validity is the extent to which the indicator performs in accordance with existing theory or literature. For example, research shows that mental health and physical health are correlated with one another and co-move. Therefore, if a new mental health tool showed little correlation with physical health, or if changes in mental health as measured by this tool did not reflect any changes in physical health, the scale would be suspected of having low construct validity. The growing literature on the social determinants of health can also be leveraged to assess construct validity, in a similar way.

In addition to the three aspects of validity mentioned above, clinical validations of mental health survey items often refer to three additional assessments: criterion validity, factorial validity and cross-group validity.

Criterion validity exists only when there is a gold standard against which an item can be compared. In the case of mental health, this gold standard is typically a structured interview (e.g. the CIDI, refer to Annex 2.B). Criterion validity assesses the psychometric properties of a measure, i.e. how it compares to the gold standard. A measure is said to be sensitive if it can accurately identify a “true positive” (i.e. how often the survey accurately identifies someone at risk of, say, depression); it is specific if it can accurately identify a “true negative” (i.e. it accurately identifies someone as not at risk for depression). In order to establish diagnostic accuracy, sensitivity is plotted against 1 − specificity at various thresholds in a receiver operating characteristic (ROC) curve. The area under the curve (AUC) can then be used to assess the diagnostic performance of the screening tool in comparison to the gold standard (see Note 1).

Factorial validity assesses whether a multi-item survey tool is measuring one, or several, underlying concepts. In almost all cases, unidimensionality is desired if only a single construct is being assessed; this provides assurance that the mental health tool is measuring, for example, depression, anxiety or latent well-being. However, if a scale is assessing multiple dimensions of mental health, then multidimensionality is desired. For example, factor assessments for the PHQ-4, which measures depression and anxiety, indeed identify two latent factors (Löwe et al., 2010[13]). Factorial validity is commonly assessed using either confirmatory factor analysis (CFA) or exploratory factor analysis (EFA). In the former, researchers test a hypothesis that the relationship between an observed variable (e.g. respondents’ answers to the PHQ-8 tool) and an underlying latent construct (e.g. depression) fits a given model. That is, using CFA, researchers test the hypothesis that an observed dataset has a given number of underlying latent factors. Using EFA, researchers do not impose a theoretical model and instead work backwards to uncover the underlying factor structure (Suhr, 2006[22]).

Cross-group validity, or cultural validity, refers to the extent to which a measure is applicable across different population groups. There is a range of ways in which a lack of cross-group validity can bias mental health outcome measures, including through cultural factors affecting the way in which symptoms are expressed, clinical bias (either implicit or explicit), language limitations of the respondent (if the tool is being implemented in a language other than their mother tongue) and differences in response behaviour (e.g. greater likelihood to choose midpoint values on Likert scales rather than extreme values) (Leong, Priscilla Lui and Kalibatseva, 2019[23]). Cross-group validity is best ensured by validating a survey tool in the requisite population, rather than applying it blindly.

Notes:

1. A receiver operating characteristic curve provides a visualisation of diagnostic ability by plotting the true positive rate (sensitivity) against the false positive rate (1 − specificity). The curve can be used to determine the optimal cut-off point, which balances Type I (false positive) and Type II (false negative) errors. ROC analysis is used in determining the threshold cut-off scores, which are discussed later in this chapter. For more information on ROC and its use in clinical psychology, refer to (Pintea and Moldovan, 2009[24]) and (Streiner and Cairney, 2007[25]).
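The sensitivity, specificity and ROC concepts defined in Box 3.3 can be illustrated with a short sketch. Everything in it is an assumption made for illustration: the screening scores and reference diagnoses are invented, the function names are hypothetical, a respondent is treated as screen-positive when the score is at or above the cut-off, and the area under the curve is approximated with the trapezoidal rule.

```python
def sensitivity_specificity(scores, has_condition, cutoff):
    """Compare screen results (score >= cutoff) with the reference diagnosis."""
    pairs = list(zip(scores, has_condition))
    tp = sum(1 for s, d in pairs if s >= cutoff and d)
    fn = sum(1 for s, d in pairs if s < cutoff and d)
    tn = sum(1 for s, d in pairs if s < cutoff and not d)
    fp = sum(1 for s, d in pairs if s >= cutoff and not d)
    return tp / (tp + fn), tn / (tn + fp)


def roc_points(scores, has_condition):
    """(false positive rate, true positive rate) at every possible cut-off."""
    cutoffs = sorted(set(scores)) + [max(scores) + 1]
    points = []
    for cutoff in cutoffs:
        sens, spec = sensitivity_specificity(scores, has_condition, cutoff)
        points.append((1 - spec, sens))
    return sorted(points)


def area_under_curve(points):
    """Trapezoidal approximation of the area under the ROC curve."""
    return sum(
        (x2 - x1) * (y1 + y2) / 2
        for (x1, y1), (x2, y2) in zip(points, points[1:])
    )


# Invented data: ten respondents with a screening score and a reference
# ("gold standard") diagnosis from a structured interview.
scores = [1, 2, 2, 3, 4, 5, 6, 7, 8, 9]
has_condition = [False, False, False, False, True, False, True, True, True, True]

sens_at_4, spec_at_4 = sensitivity_specificity(scores, has_condition, cutoff=4)
auc = area_under_curve(roc_points(scores, has_condition))
print(f"cut-off 4: sensitivity = {sens_at_4:.2f}, specificity = {spec_at_4:.2f}")
print(f"AUC = {auc:.2f}")
```

An AUC of 0.5 corresponds to a tool that does no better than chance against the reference diagnosis, while values closer to 1 indicate better discrimination across all possible cut-offs.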

To assess the validity of screening tools, researchers typically implement a study in which respondents both answer the self-reported scale and participate in a structured CIDI interview, with their responses to both then compared. A screening tool with high sensitivity and specificity is said to have high criterion validity. Although criterion validity ensures that screening tools are designed to mirror diagnostic outcomes from the CIDI, screening tools by design estimate higher prevalence rates for specific mental disorders than diagnostic interviews do (see Box 2.1). Convergent validity is assessed by comparing different screening tools against one another to see whether a new tool for measuring, say, depression, performs similarly to existing measures for depression. This approach is often used when testing shortened versions of screening tools, to see whether the truncated survey performs as well as its longer, more in-depth, predecessor. The majority of screening tools described in this chapter have been validated against diagnostic interviews for common mental disorders and have reported good psychometric properties (high sensitivity and specificity) across age groups, gender and socio-economic status (Gill et al., 2007[26]; O’Connor and Parslow, 2010[27]; Huang et al., 2006[28]) (Box 3.3).

Box 3.4. Validity of structured interviews

One important caveat to using structured interviews to validate screening tools is that it presupposes the structured interviews to be an accurate measure of “true” underlying mental health. This issue is raised in two different contexts: (1) most screening tools used in OECD countries were validated against the fourth version of the Diagnostic and Statistical Manual of Mental Disorders (DSM-IV), published in 1994, which is now outdated, rather than against the DSM-5, published in 2013; and (2) the extent to which the DSM itself provides accurate diagnostic data cross-culturally.

The first concern relating to the validity of structured interviews has to do with the fact that none of the screening tools commonly in use have been validated against the newer DSM version (Statistics Canada, 2021[29]). Yet, in total, there are 464 differences between the DSM-IV and DSM-5. Broadly speaking, the DSM-5 includes fewer diagnostic categories, as many previously separate disorders share a number of features or symptoms. In addition, greater effort was made to separate an individual’s functioning status from their diagnosis. One area that could have an impact moving forward is the lowering of the diagnostic threshold for generalised anxiety disorder – a move that has been criticised by some psychiatrists for pathologising what had previously been considered quotidian worries (Murphy and Hallahan, 2016[30]).

In sum, even though there are always changes between DSM updates that include the restructuring of diagnostic categories and the updating of some diagnosis criteria, there is by design a degree of continuity between different DSM versions, and most changes are minor. Regardless, in order to be up to date with most recent clinical practice, instruments like the CIDI would benefit from an update.

On the second point, there are concerns about the applicability of these diagnostic validations to non-US regions and population groups, which at the very least would require validation studies to be conducted in different local contexts. Beyond this, validating mental health screening tools in more geographically diverse clinical settings may be insufficient if the clinical diagnoses underpinning the validation are themselves flawed. Haroz and colleagues investigated the extent of this cross-cultural bias by reviewing 138 qualitative studies of depression reflecting 77 different nationalities and ethnicities (Haroz et al., 2017[31]). They found that only 7 of the 15 most frequently mentioned features of depression across non-Western populations reflect the DSM-5 diagnosis of Major Depressive Disorder. DSM-specified diagnostic features including “problems with concentration” and “psychomotor agitation or slowing” did not appear frequently, while features including “social isolation or loneliness”, “crying”, “anger” and “general pain” – none of which are included as diagnostic criteria – did. Some features arose more frequently in certain regions: “worry” in South and Southeast Asia, and “thinking too much” in Southeast Asia and sub-Saharan Africa. This implies that the close alignment of the PHQ-9 or the GAD-7 with the DSM criteria could in theory limit detection of the underlying targeted construct (i.e. depression, anxiety) relative to longer or more comprehensive screening tools and/or structured interviews (Ali, Ryan and De Silva, 2016[32]; Sunderland et al., 2019[33]).

Although criticisms of DSM criteria do exist, the DSM still remains the most useful tool for enabling cross-country comparative data on mental health outcomes. While improvements could be made, the DSM includes considerations of cultural validity in its drafting, which are updated in each subsequent iteration.

Moving beyond clinical psychology, a few OECD countries have expanded their definition of mental health to encompass a wider range of viewpoints, beyond the traditional ones rooted in a Western perspective. In New Zealand, for example, the Government Inquiry into Mental Health and Addiction includes a Māori perspective of mental health (New Zealand Government, 2018[34]). In a similar vein, the Swedish government will solicit feedback from the Sami parliament when drafting its upcoming strategy on mental health (Public Health Agency of Sweden, 2022[35]).

Across measures of general psychological distress, the Kessler and MHI-5 scales have stronger criterion validity than the GHQ-12. Studies have found that the K10 and K6 scales have strong psychometric properties (encompassing both reliability and validity) and better overall discriminatory power than the GHQ-12 in detecting depressive and anxiety disorders (Furukawa et al., 2003[36]; Cornelius et al., 2013[37]). The mental health component of the SF-12 tool is also better able to discriminate between those with and those without specific mental health conditions, as compared to the GHQ-12 (Gill et al., 2007[26]). While the MHI-5 tool has been found to be just as valid as the longer MHI-18 and GHQ-30 to assess a number of mental health conditions, including major depression and anxiety disorders, it performed less well than the MHI-18 for the full range of affective disorders (Berwick et al., 1991[38]). While the MHI-5 was designed as a general tool, it has proven effective at identifying a specific risk for depression and/or anxiety (Yamazaki, Fukuhara and Green, 2005[39]; Rivera-Riquelme, Piqueras and Cuijpers, 2019[40]).

A recent meta-analysis of the sensitivity and specificity of instruments used to diagnose and grade the severity of depression reported that, on average, the PHQ-9 demonstrated the highest sensitivity and specificity relative to other screening tools, including the CES-D (Pettersson et al., 2015[41]). A different version of the PHQ-8 has been used in the CDC Behavioral Risk Factor Surveillance System (BRFSS) survey. This measure, referred to as the PHQ-8 days, asks respondents how many days over the past two weeks they have experienced each of the eight depressive symptoms that make up the PHQ‑8. This yields a scale ranging from 0-112 (eight items, each scored from 0 to 14 days) and can provide a look at depression risk that is more granular – better identifying individuals who may be at risk for mild depression but currently have higher levels of mental well-being – and also more sensitive to change (Dhingra et al., 2011[42]). The PHQ-2 has been assessed for its internal consistency, construct validity and convergent validity; however, a meta-analysis did not find evidence of studies of criterion validity (El-Den et al., 2018[8]). Another overview cites evidence for the PHQ-2 as having good criterion validity for specific populations such as older adults, pregnant or post-partum women, and patients with specific conditions such as coronary heart disease or HIV/AIDS (Löwe et al., 2010[13]).
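The scoring of the PHQ-8 days measure described above can be sketched as follows. This is an illustrative example only: it assumes each of the eight items is answered as a number of days between 0 and 14, which is the range implied by the 0-112 total (8 × 14 = 112), and the function name is hypothetical.

```python
from typing import Sequence

N_ITEMS = 8        # the eight depressive symptoms of the PHQ-8
MAX_DAYS = 14      # assumption: a 14-day recall window, implied by the 0-112 range


def phq8_days_score(days_per_item: Sequence[int]) -> int:
    """Sum the number of symptomatic days across the eight items (0-112)."""
    if len(days_per_item) != N_ITEMS:
        raise ValueError("expected responses to exactly eight items")
    if any(d < 0 or d > MAX_DAYS for d in days_per_item):
        raise ValueError("each response must be between 0 and 14 days")
    return sum(days_per_item)


# Hypothetical respondent: a few symptomatic days on most items.
example_total = phq8_days_score([1, 2, 3, 4, 5, 6, 7, 8])
```

Because each item can take 15 values rather than the four response categories of the standard PHQ-8, small changes in symptom frequency move the total, which is the sense in which the measure is more granular.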

While self-report scales for depressive symptoms tend to be well validated, scales for anxiety disorders have been found to be somewhat less sensitive and specific in clinical populations. Research suggests this may be because different types of anxiety disorders have more heterogeneous symptoms than depressive disorders (Rose and Devine, 2014[43]). Despite this, both the GAD-7 and GAD‑2 have been validated in a number of studies. The GAD-7 was designed to provide a brief clinical measure of generalised anxiety disorder, and its validation exercise found it to have good validity (criterion, construct, factorial, etc.). Furthermore, factorial validity assessments of the GAD-7 and PHQ-8 found that, despite a high correlation between the anxiety and depression scales (0.75), the two scales are complementary and not duplicative; more than half of patients with high levels of anxiety did not also have high levels of depression (Spitzer et al., 2006[11]). The high correlations of the GAD-7 with two other anxiety scales indicated good convergent validity (Kroenke et al., 2007[44]; Spitzer et al., 2006[11]).4 Both the GAD‑7 and the shorter GAD‑2 perform well in detecting all four major forms of anxiety disorders: generalised anxiety disorder, panic disorder, social anxiety disorder and post-traumatic stress disorder (Kroenke et al., 2007[44]).

The PHQ-4 has been found to be a valid tool for measuring the combined presence of risks for both depression and anxiety. As noted above, its component parts – the PHQ-2 and GAD-2 – have been validated against diagnostic criterion standard interviews (with caveats to the broader applicability of PHQ-2 criterion validity, as mentioned above). Studies have shown that PHQ-4 scores are associated with the SF-20 functional status scale and health information such as disability days used, etc., providing evidence for convergent and construct validity. Furthermore, factorial analysis has found that the PHQ-4 has a two-dimensional structure with two discrete factors, picking up on both depression and anxiety disorders (Löwe et al., 2010[13]).

Composite scales capturing aspects of positive mental health have also been found to have good validity. WEMWBS was found to have good criterion and convergent validity, being highly correlated with other scales that capture positive affect. WEMWBS and the WHO-5 are, unsurprisingly, highly correlated with one another (correlation coefficient of 0.77) (NHS Health Scotland, 2016[15]), with WEMWBS being slightly less correlated with other measures of mental health that had a stronger focus on physical health or psychological distress (including the GHQ-12). Another study on WEMWBS found that the shorter version of the screening test was highly correlated with the longer version, making it an efficient and quicker alternative to the longer 14-question version (Stewart-Brown et al., 2009[45]). Despite its length, Rasch analysis has found that WEMWBS is unidimensional with one underlying factor (Stewart-Brown, 2021[16]).5 Multiple studies have shown the MHC-SF has good convergent validity (Guo et al., 2015[46]; Petrillo et al., 2015[47]; Lamers et al., 2011[20]), however cognitive interviews in Denmark found that it had poor face validity, especially for questions on the social subscale (Santini et al., 2020[48]).

Although designed as measures of positive mental health, both WEMWBS and the WHO-5 have been shown to be effective screeners for depression and/or anxiety. A study found the WHO-5 to have high sensitivity, but low specificity, in identifying patients with depression in a clinical setting (Topp et al., 2015[49]). A study of SWEMWBS found it to be relatively highly correlated with the PHQ-9 (rho = 0.6-0.8) and the GAD-7 (rho = 0.6-0.7), suggesting that it is an acceptable tool for measuring common mental disorders (CMD); however, other tools may be more sensitive in identifying and distinguishing between individuals with worse levels of mental health (Shah et al., 2021[17]). A study comparing WEMWBS to the GHQ-12, through multidimensional item-response theory, found that both tools appear to measure the same underlying construct (Böhnke and Croudace, 2016[50]).

Self-reported mental health (SRMH) indicators have been compared to validated clinical measures of mental health and have been shown to be related to, though distinct from, other mental health scales. SRMH is correlated with the Kessler scales, the PHQ and the mental health component of the SF-12 and is often used in the validation process of other mental health screening tools as a test for convergent validity. Furthermore, poor SRMH is associated with poor physical health and an increased use of health services. Although related, research has shown that correlations between SRMH and screening tools are moderate, suggesting that they are capturing slightly different phenomena (Ahmad et al., 2014[51]). The authors note that further research is needed but suggest that findings from longitudinal studies of self-reported physical health could shed some light. SRMH measures were shown to be stronger predictors of mortality, morbidity and service use than other indicators, and SRMH may be capturing mental health problems that do not yet manifest in screening tools (Ahmad et al., 2014[51]). Conversely, health-related quality of life (HRQoL) – which measures both physical and mental health – has been found to have construct and criterion validity that is good and comparable to the SF-36 scale (Moriarty, Zack and Kobau, 2003[21]).

Studies of mental health screening tools have yielded conflicting evidence as to whether single-item mental health questions are sufficiently valid. A study assessing the comparative performance of the MHI-5 and MHI-18 (which concluded in favour of the shorter version) found that even a single question – “how often were you feeling downhearted and blue?” – performed as well as the MHI-5, MHI-18 and GHQ‑30 at detecting major depression (Berwick et al., 1991[38]). However, studies assessing ultra-short screening tools found that even two questions perform significantly better at screening for depression than does a single question (Löwe et al., 2010[13]). Conversely, the Australian Taking the Pulse of the Nation (TTPN) survey, administered throughout the COVID-19 pandemic, found that the psychometric properties of its single-item mental health measure compared favourably to the K6: the items were highly correlated (rho = 0.82), and the single-item measure had high sensitivity for psychological distress (Botha, Butterworth and Wilkins, 2021[52]).

Box 3.5. Key messages: Validity

Most of the mental health screening tools commonly used by OECD member states have been validated in clinical settings and found to have strong convergent, construct and criterion validity.

Composite scales for positive mental health have also been found to have strong psychometric properties, and they have proven effective as screeners for specific mental health conditions such as depression and/or anxiety.

Criterion validity is assessed by the survey tool’s performance in comparison to a clinical diagnostic interview gold standard; however, this presupposes the validity of clinical diagnoses, which may not hold in all contexts.

What do non-response rates tell us about stigma? How does this affect the comparability of mental health data across groups?

The stigma associated with mental illness may lead to misreporting – and under-reporting – of one’s mental health conditions (Hinshaw and Stier, 2008[53]). Low levels of mental health literacy can also lead to under-reporting, with individuals not recognising their own experienced symptoms as representative of an underlying condition (Tambling, D’Aniello and Russell, 2021[54]; Dunn et al., 2009[55]; Coles and Coleman, 2010[56]).6 Feelings of stigma towards mental health conditions remain important in all OECD countries, with large differences between them. A survey conducted in 2019 found that, in 19 OECD countries, 40% of respondents did not agree with the statement that mental illness is just like any other illness, and a quarter agreed that anyone with a history of mental disorders should be prevented from running for public office (OECD, 2021[57]). Because of stigma, respondents may either conceal their true conditions when answering mental health surveys or may choose not to participate in the first place. When administering surveys on sensitive subjects, providing clear assurances of data confidentiality and ensuring that the interview is conducted in a private place, out of hearing of family members, minimise the likelihood of respondent refusal (Singer, Von Thurn and Miller, 1995[58]; Krumpal, 2013[59]).7

Evidence shows that those experiencing psychological distress or a specific mental disorder are more likely to refuse to participate in a survey; this non-response bias then leads to underestimates of the overall prevalence of mental ill-health (de Graaf et al., 2000[60]; Eaton et al., 1992[61]; Mostafa et al., 2021[62]). A recent study compared the effect of psychological distress on a number of economic transitions (e.g. falling into unemployment), using both the GHQ-12 score and a version of the score adjusted for misreporting behaviour. It showed that the original GHQ-12 score underestimated the effect of psychological distress on transitions into better-paid jobs and higher educational attainment (Brown et al., 2018[63]). Thus, misreporting of symptoms of psychological distress can lead to biased and inconsistent estimates. However, not all studies come to the same conclusion: the US National Comorbidity Survey Replication (NCS-R) study found no evidence of non-response leading to underestimates of disorder prevalence (Kessler et al., 2004[64]).

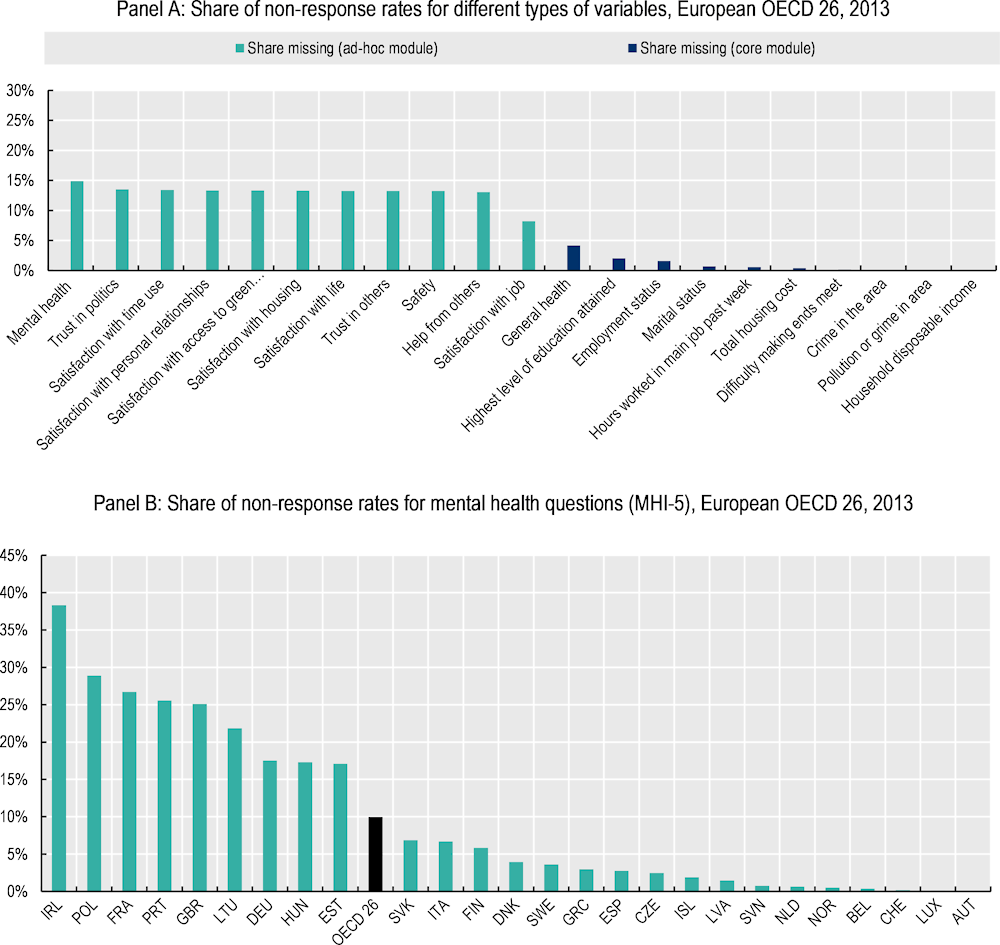

Evidence from the 2013 European Union Statistics on Income and Living Conditions (EU-SILC) survey shows that non-response rates for mental health questions are high (15%), but still comparable to those for other subjective variables (e.g. 13% for trust in politics, 8% for satisfaction with one’s job) (Figure 3.1, Panel A). High non-response rates for mental health (as measured through the MHI-5) may partly reflect the way in which the EU-SILC survey is implemented. Each year, an ad-hoc module featuring additional questions on a specific topic is implemented in addition to the core module (in 2013 this module focused on well-being), implying that some respondents may have problems in answering questions that were not asked in previous waves.

Missing and non-response rates for mental health variables vary widely between countries (Figure 3.1, Panel B). In the 2013 EU-SILC survey, missing rates for the mental health module were higher than 20% in Ireland, Poland, France, Portugal, the United Kingdom and Lithuania, but were below 1% in Norway, Belgium, Switzerland, Luxembourg and Austria.

Figure 3.1. Non-response rates are higher for mental health questions than they are for other variables, and vary substantially across countries

Note: This figure only includes individuals who have agreed to participate in the survey, and subsequently choose not to answer individual question items; it does not consider those who refuse to participate in the full survey. A respondent is deemed to be missing mental health data if they refused, or replied “do not know”, to at least four of the five individual items on the MHI-5. Refer to Annex 2.B for more information about specific tools.

Source: OECD calculations based on the European Union Statistics on Income and Living Conditions (EU-SILC) (n.d.[65]) (database), https://ec.europa.eu/eurostat/web/microdata/european-union-statistics-on-income-and-living-conditions.
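The missing-data rule in the note to Figure 3.1 can be expressed as a short sketch. The code below is illustrative only: it assumes refusals and “do not know” answers are both encoded as `None`, and the function names are hypothetical rather than drawn from the EU-SILC documentation.

```python
from typing import Optional, Sequence


def mhi5_is_missing(items: Sequence[Optional[int]], min_missing: int = 4) -> bool:
    """Flag a respondent as missing MHI-5 data.

    `None` stands for a refusal or a "do not know" answer (an encoding
    assumption). Following the figure note, a respondent counts as missing
    when at least four of the five items are unanswered.
    """
    if len(items) != 5:
        raise ValueError("the MHI-5 has exactly five items")
    unanswered = sum(1 for item in items if item is None)
    return unanswered >= min_missing


def item_nonresponse_rate(respondents: Sequence[Sequence[Optional[int]]]) -> float:
    """Share of participating respondents flagged as missing MHI-5 data."""
    flags = [mhi5_is_missing(r) for r in respondents]
    return sum(flags) / len(flags)


# Hypothetical participants: only the second and third are flagged as missing.
sample = [
    [1, 2, 3, 4, 5],
    [None, None, None, None, 3],
    [None, None, None, None, None],
    [None, None, 2, 3, 4],
]
rate = item_nonresponse_rate(sample)
```

Note that the denominator contains only people who agreed to take part in the survey, so this rate says nothing about unit non-response.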

Table 3.2 shows some suggestive evidence that differences in non-response rates by country could be related to levels of stigma; in nine European OECD countries, the prevalence of any depressive disorder (as measured by the PHQ-8) is inversely correlated with the prevalence of mental health stigma, as measured by the share of the population who agree that people with a history of mental health problems should be excluded from running for office. In other words, in countries with more stigma, the prevalence of depressive disorder risk is also lower – perhaps because of reluctance to report.8

Table 3.2. The relationship between stigma and prevalence is complex, but in some instances, stigma may lead to lower reported prevalence of mental disorders

Correlations between indicators of stigma towards mental health and prevalence of mental health conditions, nine European OECD countries

|

Exclude from office if mental health history |

Seeking treatment shows strength |

Mental health like any other illness |

Prevalence of psychological distress (MHI-5) |

Prevalence of any depressive disorder (PHQ-8) |

Share missing psychological distress responses (MHI-5) |

|

|---|---|---|---|---|---|---|

|

Exclude from office if mental health history |

1 |

|||||

|

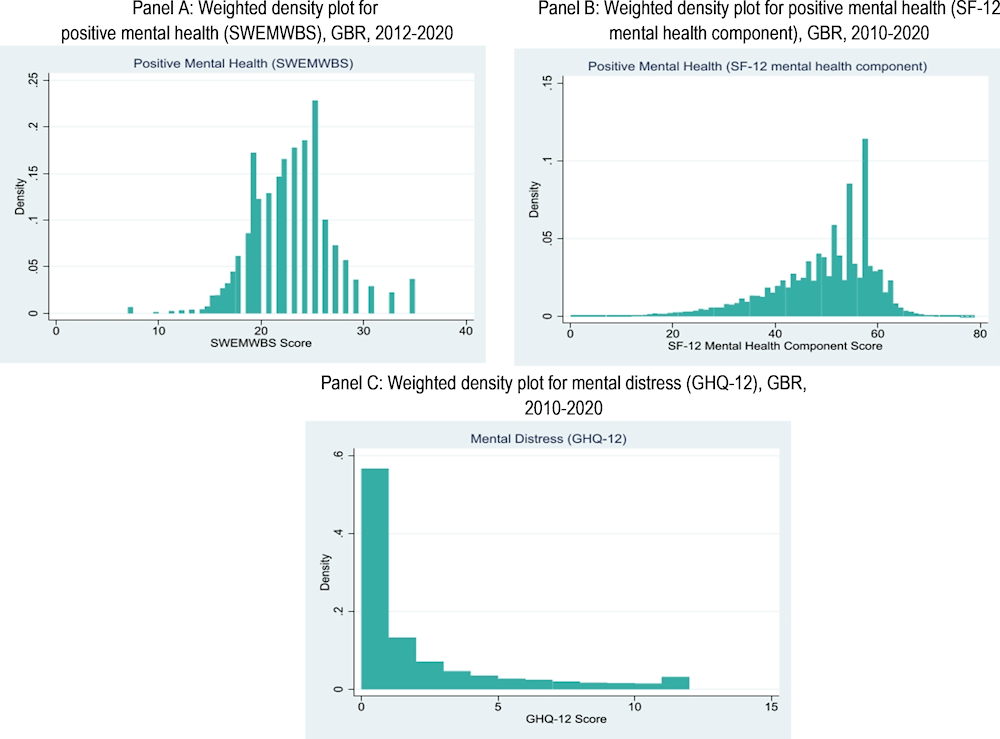

Seeking treatment shows strength |

0.11 |

1 |

||||

|

Mental health like any other illness |

0.11 |

0.56 |

1 |

|||

|

Prevalence of psychological distress (MHI-5) |

0.14 |

-0.44 |

-0.47 |

1 |

||

|

Prevalence of any depressive disorder (PHQ-8) |

-0.83*** |

-0.19 |

-0.06 |

0.13 |

1 |

|

|

Share missing psychological distress (MHI-5) responses |

0.17 |

-0.6 |

0.11 |

-0.001 |

0.03 |

1 |

Note: Table displays listwise correlations. The three stigma questions ask respondents to indicate the extent to which they agree with the statement. For the first, agreement entails stigma; for the second two, agreement entails the absence of stigma. For details on the MHI-5 and PHQ-8 measures, see Annex 2.B. * Indicates that Pearson’s correlation coefficients are significant at the p<0.10 level, ** at the p<0.05 level, and *** at the p<0.01 level. N = 9 countries.

Source: Stigma data originally come from an Ipsos survey, as published in OECD (2021[57]), Fitter Minds, Fitter Jobs: From Awareness to Change in Integrated Mental Health, Skills and Work Policies, Mental Health and Work, OECD Publishing, Paris, https://dx.doi.org/10.1787/a0815d0f-en; MHI-5 data come from OECD calculations based on the 2018 European Union Statistics on Income and Living Conditions (EU-SILC) (n.d.[65]) (database), https://ec.europa.eu/eurostat/web/microdata/european-union-statistics-on-income-and-living-conditions; PHQ-8 come from OECD calculations based on European Health Interview Survey (EHIS) wave 3 data (n.d.[66]) (database), https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Glossary:European_health_interview_survey_(EHIS).
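To illustrate how significance stars of the kind reported in Table 3.2 are attached to a Pearson coefficient with only nine observations, the sketch below converts a correlation into a t-statistic with N - 2 = 7 degrees of freedom and compares it with standard two-sided critical values from a Student's t table. It is a hedged illustration and not the OECD's actual procedure; the function names are hypothetical, and coefficients that have been rounded for display can fall on either side of a threshold.

```python
import math
from typing import Sequence

N_COUNTRIES = 9
# Two-sided critical values of Student's t for df = 7 (p < 0.10, 0.05, 0.01).
T_CRITICAL_DF7 = {"*": 1.895, "**": 2.365, "***": 3.499}


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation computed over complete (listwise) pairs."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def t_statistic(r: float, n: int = N_COUNTRIES) -> float:
    """t = r * sqrt((n - 2) / (1 - r^2)); undefined when |r| = 1."""
    return r * math.sqrt((n - 2) / (1 - r * r))


def stars_for_nine_countries(r: float) -> str:
    """Return '', '*', '**' or '***' for a correlation based on nine countries."""
    t = abs(t_statistic(r, N_COUNTRIES))
    stars = ""
    for label, critical in T_CRITICAL_DF7.items():  # ordered from weakest to strongest
        if t >= critical:
            stars = label
    return stars


strong = stars_for_nine_countries(-0.83)   # stigma vs PHQ-8 prevalence
moderate = stars_for_nine_countries(0.56)  # between two stigma indicators
```

With so few countries, only very large coefficients clear conventional thresholds, which is one reason the table is best read as suggestive rather than conclusive.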

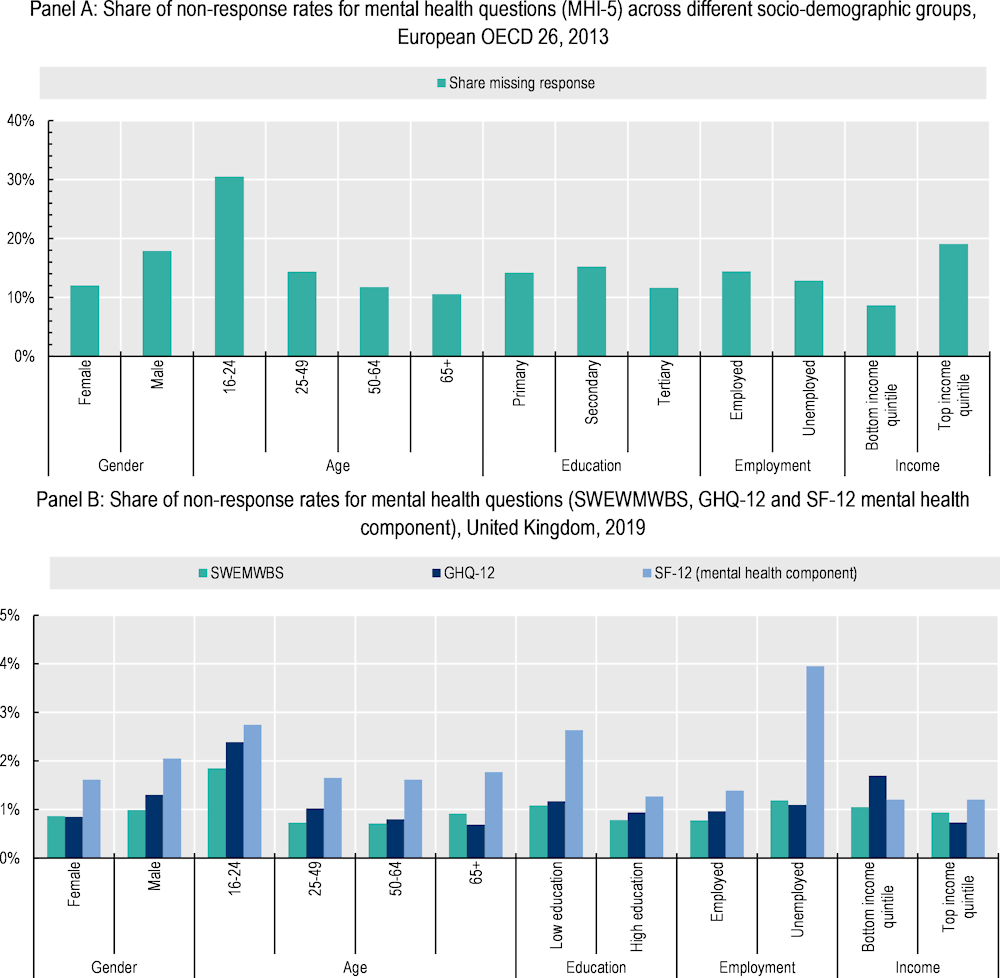

In order to understand what bias is introduced by non-response rates, it is important to understand the profile of those who are choosing not to respond to mental health questions. Figure 3.2 shows these shares for a number of socio-demographic groups – gender, age cohort, education level, labour market status and income quintile. Panel A displays non-responses to mental health questions for 26 European OECD countries, while Panel B shows those for the United Kingdom. For both data sources, women, those with higher levels of education, and older age cohorts are more likely to answer mental health questions, while men, young people and those with lower levels of education are more likely to not respond. These results are in line with a report describing stigma towards mental health in Sweden: women were found to be more likely than men to have positive feelings towards those with mental health conditions, while young people were more likely than older people to report that it would be difficult to talk about their own mental illness with someone else (Folkhälsomyndigheten, 2022[67]). In European countries, there is a clear difference by income – those in the top income quintile are less likely to respond – however, this pattern does not hold for the United Kingdom. A study on non-response rates in longitudinal health surveys among the elderly in Australia found that those with lower occupational status and less education were less likely to participate (Jacomb et al., 2002[68]); however, neither risk for depression nor anxiety influenced refusal rates. The Netherlands Mental Health Survey and Incidence Study-2 (NEMESIS-2) found higher non-response rates among young adults, leading to under-reporting of specific mental disorders among this population (de Graaf, Have and Van Dorsselaer, 2010[69]).

Figure 3.2. Young people, men and those with lower levels of education are less likely to respond to mental health questions

Note: Refer to Annex 2.B for more information about specific tools.

Source: Panel A: OECD calculations based on the 2013 European Union Statistics on Income and Living Conditions (EU-SILC) (n.d.[65]), (database), https://ec.europa.eu/eurostat/web/microdata/european-union-statistics-on-income-and-living-conditions; Panel B: OECD calculations based on University of Essex, Institute for Social and Economic Research (2022[70]), Understanding Society: Waves 1-11, 2009-2020 and Harmonised BHPS: Waves 1-18, 1991-2009 (database). 15th Edition. UK Data Service. SN: 6614, http://doi.org/10.5255/UKDA-SN-6614-16, from wave 10 only (Jan 2018 – May 2020).

Data on previous diagnoses for, or experienced symptoms of, specific mental disorders are likely to under-report population prevalence due to a combination of reticence to disclose personal medical history and inability to afford or access care (Hinshaw and Stier, 2008[53]). Furthermore, prevalence of mental ill-health based on these data is heavily influenced by the characteristics of health care systems in different countries and regions, including their ability to treat and diagnose a wide range of patients. For example, data predating the pandemic show that 67% of working-age adults who wanted mental health services reported difficulty in accessing treatment (OECD, 2021[71]). A study in Canada compared the self-reported use of mental health services from the Canadian Community Health Survey (CCHS) with health service administrative data from the government of Quebec (Régie de l’assurance maladie du Québec – RAMQ), reporting significant discrepancies: 75% of mental health service users in the RAMQ registry did not report using services in the CCHS, with these disparities being highest for older people, those with lower levels of education and mothers of young children (Drapeau, Boyer and Diallo, 2011[72]). Another study for Australia examined the extent of under-reporting of mental illness by matching self-reported mental health information (self-reported diagnoses and self-reports of prescription drug use) to administrative records of filled prescriptions for mental disorders; the researchers found that survey respondents are significantly more likely to under-report mental illnesses compared to other health conditions because of stigma (Bharadwaj, Pai and Suziedelyte, 2017[73]).

Box 3.6. Key messages: Non-response bias and missing values

Those with worse underlying mental health may be more likely to refuse to participate in surveys, thereby understating the actual prevalence of mental ill-health; however, the evidence is not conclusive.

There is stronger evidence that self-reported data on previous diagnoses and experienced symptoms of specific mental ill-health conditions are significantly influenced by stigma and bias.

Analysis from European OECD countries shows that younger people, men and those with lower levels of education are more likely to refuse to answer questions on mental health.

Are the reliability and validity of these measures consistent across cultures and socio-demographic groups?

Governments tasked with promoting population mental health need high-quality information to understand inequalities in mental health outcomes and whether national trends (either improvements or deteriorations) are masking differences within groups, so that they can target policy interventions to those who are most in need. For these reasons, a mental health indicator needs to be able to compare age cohorts, genders, race and ethnic groups, different education and income levels and other socio-economic markers. Ensuring comparability, however, is not straightforward. Cultural differences in perceptions of mental health may make some groups less likely to answer (or honestly answer) questions surrounding mental health. These challenges are true for both inter- and intra-country comparisons.9

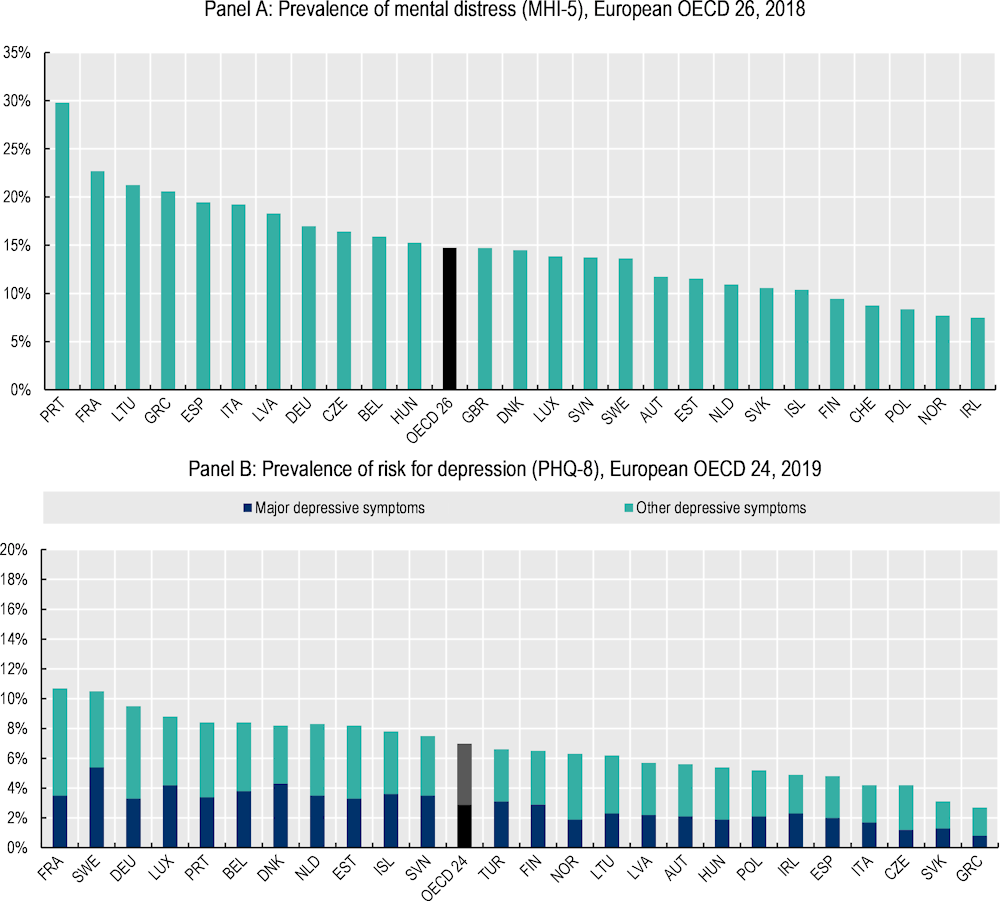

Figure 3.3. Prevalence of psychological distress and of depressive symptom risk varies more than twofold across European OECD countries

Note: Panel A: risk for psychological distress is defined as having a score >= 52 on a scale from 0 (least distress) to 100 (most); Panel B: a respondent is deemed to be at risk for major depressive disorder if they answer “more than half the days” to either of the first two questions on the PHQ-8, and, in addition, if five or more of the eight items are reported as “more than half the days”. They are at risk for “other depressive disorders” if they answer “more than half the days” to either of the first two questions on the PHQ-8, and in addition, a total of two to four of the eight items are reported as “more than half the days” (Eurostat, n.d.[74]). Refer to Annex 2.B for more information on individual screening tools.

Source: Panel A: OECD calculations based on the 2018 European Union Statistics on Income and Living Conditions (EU-SILC) (n.d.[65]), (database), https://ec.europa.eu/eurostat/web/microdata/european-union-statistics-on-income-and-living-conditions; Panel B: European Health Interview Survey (EHIS) wave 3 data (n.d.[66]) (database), https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Glossary:European_health_interview_survey_(EHIS).
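The classification rules in the note to Figure 3.3 can be sketched in code. The example below is a minimal illustration under stated assumptions: PHQ-8 items are taken to use the conventional 0-3 coding (0 = not at all, 1 = several days, 2 = more than half the days, 3 = nearly every day), and a response of 2 or higher is treated as meeting the “more than half the days” condition, which the note does not spell out. Function names and category labels are hypothetical.

```python
from typing import Sequence

FREQUENT = 2  # assumption: "more than half the days" or more often (codes 2 and 3)


def phq8_algorithm(items: Sequence[int]) -> str:
    """Classify a respondent with the PHQ-8 algorithm described in the figure note."""
    if len(items) != 8 or any(i not in (0, 1, 2, 3) for i in items):
        raise ValueError("expected eight responses coded 0-3")
    # One of the first two items must be endorsed frequently.
    core_symptom = items[0] >= FREQUENT or items[1] >= FREQUENT
    n_frequent = sum(1 for i in items if i >= FREQUENT)
    if core_symptom and n_frequent >= 5:
        return "major depressive disorder"
    if core_symptom and 2 <= n_frequent <= 4:
        return "other depressive disorders"
    return "no depressive disorder risk"


def mhi5_at_risk(distress_score: float) -> bool:
    """Panel A rule: at risk when the 0-100 distress score is 52 or higher."""
    return distress_score >= 52


# Hypothetical respondents.
major = phq8_algorithm([2, 2, 2, 2, 2, 0, 0, 0])
other = phq8_algorithm([2, 0, 2, 0, 0, 0, 0, 0])
no_core = phq8_algorithm([0, 0, 3, 3, 3, 3, 3, 3])
```

The third respondent reports six frequent symptoms but neither of the first two items, so the algorithm does not classify them as at risk; this is one way an algorithm-based prevalence can differ from one based on a summed-score cut-off.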

Data from European countries show large variations in the prevalence of psychological distress and depressive symptoms. The prevalence of psychological distress in Portugal, France and Lithuania is more than twice that of Ireland, Norway, Poland and Switzerland (Figure 3.3, Panel A). Similarly, the prevalence of depressive symptoms in France, Sweden and Germany is more than double that of Greece, the Slovak Republic and the Czech Republic, among others (Figure 3.3, Panel B). Yet how much of this is due to differences in the underlying mental health of each population, and how much is due to cultural differences leading to differential response behaviours for these screening tools?

Some of these cross-country differences could stem from different levels of stigma towards mental health, with countries having lower overall prevalence levels also showing higher levels of stigma (refer to the previous section, and Table 3.2).

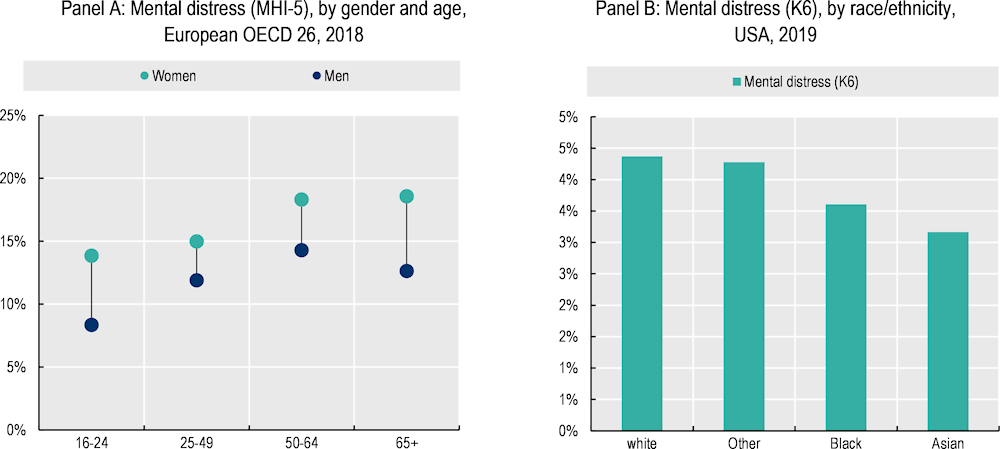

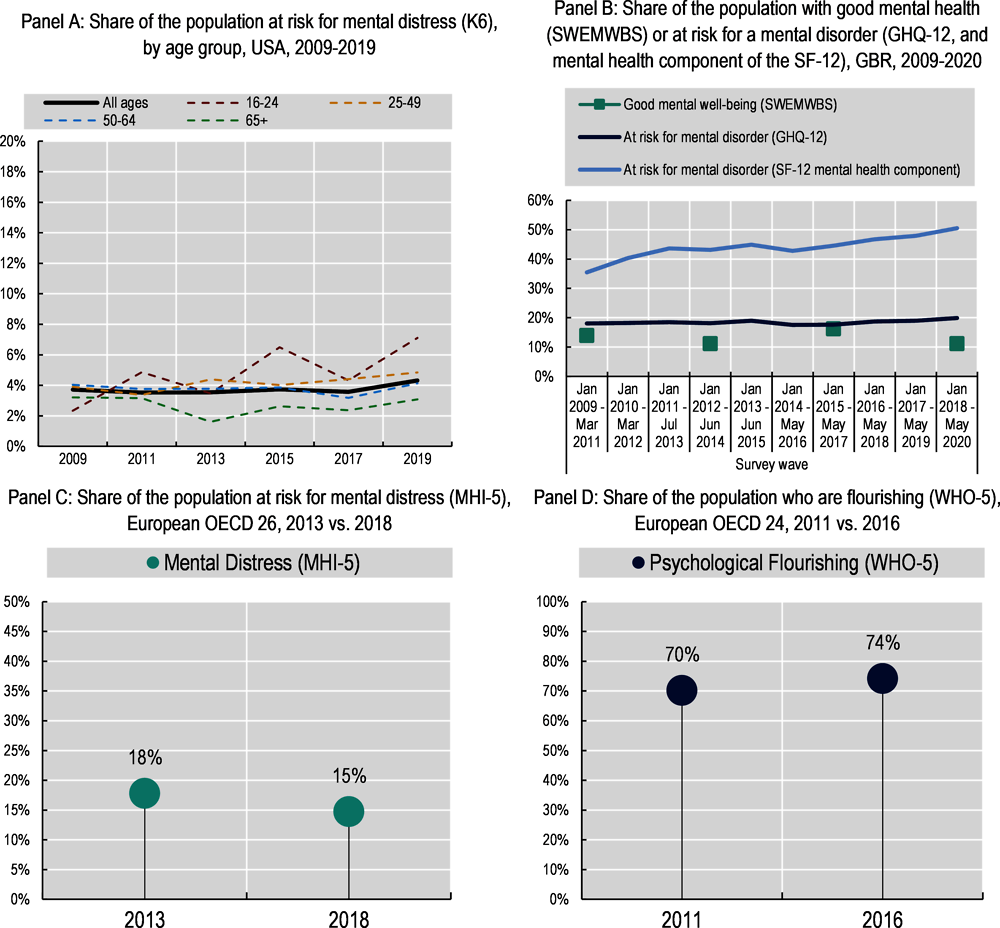

Comparisons of the prevalence of mental ill-health can be difficult within countries, as well. Panel A of Figure 3.4 shows that women in 26 European OECD countries are more likely to report higher levels of psychological distress than men, at all stages of their life. Panel B of Figure 3.4 also suggests that white Americans have higher levels of psychological distress than other racial/ethnic groups, and that Asian-Americans have the lowest levels. Research has shown that there are systematic gender differences in self-report bias, as men tend to minimise their symptoms more than women do (Brown et al., 2018[63]). One study also found that men, but not women, reported fewer depressive symptoms when consent forms indicated that a more involved follow-up might occur (Sigmon et al., 2005[75]). A survey on attitudes towards mental health and stigma in Sweden found that women were more likely than men to report positive attitudes towards those with mental health conditions (Folkhälsomyndigheten, 2022[67]). How much of the visible difference, then, is due to differences in reporting rather than differences in actual underlying mental health?

Figure 3.4. Are differences in reported outcomes by gender and race/ethnicity due to differences in underlying mental health or to measurement issues?

Note: Scoring information for Panel A: risk for psychological distress is defined as having a score >= 52 on a scale from 0 (least distress) to 100 (most); Panel B: risk for psychological distress is defined as having a score >= 13 on a scale from 0 (least distress) to 24 (most). Refer to Annex 2.B for more information on individual screening tools.

Source: Panel A: OECD calculations based on the 2018 European Union Statistics on Income and Living Conditions (EU-SILC) (n.d.[65]), (database), https://ec.europa.eu/eurostat/web/microdata/european-union-statistics-on-income-and-living-conditions; Panel B: OECD calculations based on University of Michigan (2021[76]), Panel Study of Income Dynamics (database), https://psidonline.isr.umich.edu/default.aspx, data from 2019 only.

To answer these questions on measurement bias and cross-group comparability, researchers assess whether surveys have structural invariance by key socio-demographic characteristics in the process of clinically validating screening tools. Screening tools for symptoms of depression and anxiety, along with the WHO-5 and SWEMWBS scales for positive mental health, are the tools that have been most frequently validated across numerous settings (e.g. gender, age cohorts and racial/ethnic groups).

In terms of screening tools for specific mental ill-health conditions, both the PHQ-8 and GAD-7 have been found to be free from basic gender and age biases. The PHQ-8 and -9 have been validated across a number of clinical settings, with different age groups, cultures and racial/ethnic groups (El-Den et al., 2018[8]), and the PHQ-2 has been validated for use in youth and adolescents (Richardson et al., 2010[77]). A study of the PHQ-4, limited to the United States, found no structural invariance by gender and age: its findings may extend to other countries with similar population structures, but not necessarily to others with different population structures (Sunderland et al., 2019[33]).

Positive mental health composite scales also perform strongly on age and gender generalisability. The WHO-5 has been shown to have good construct validity for all age groups and has been deemed suitable for children aged 9 and above (Topp et al., 2015[49]). The MHC-SF performs well across sex, age cohorts and education levels (Santini et al., 2020[48]). WEMWBS was originally validated for an adult population but has since been validated for use in youth aged 11 and above (Warwick Medical School, 2021[78]). In the course of validating the 14-item version of WEMWBS, researchers found evidence that two items showed bias for gender; for example, for any level of mental well-being, men were more likely than women to answer positively for the item “I’m feeling more confident” (Stewart-Brown et al., 2009[45]). These two items were removed in the process of creating the 7-item version of the screening tool (SWEMWBS). This short form displays no response rate differences by gender, marital status or household income (Tennant et al., 2007[14]).
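The kind of item bias described above (at the same level of mental well-being, one group endorses an item more often than another) can be illustrated with a Mantel-Haenszel common odds ratio, one standard way of screening for differential item functioning. The sketch below is illustrative only and is not the method used in the cited validation studies: the function names, the data layout and the toy counts are all hypothetical.

```python
from collections import defaultdict


def mantel_haenszel_odds_ratio(records):
    """Common odds ratio of endorsing one item (reference vs focal group),
    stratified by overall scale score.

    records: iterable of (stratum, group, endorsed) tuples, where group is
    "ref" or "focal" and endorsed is True/False (hypothetical layout).
    A value close to 1 suggests the item behaves alike in both groups.
    """
    # Per-stratum 2x2 table: [ref yes, ref no, focal yes, focal no]
    tables = defaultdict(lambda: [0, 0, 0, 0])
    for stratum, group, endorsed in records:
        idx = (0 if group == "ref" else 2) + (0 if endorsed else 1)
        tables[stratum][idx] += 1

    num = den = 0.0
    for a, b, c, d in tables.values():
        n = a + b + c + d
        num += a * d / n
        den += b * c / n
    if den == 0:
        raise ValueError("odds ratio undefined for these counts")
    return num / den


def expand(stratum, group, n_yes, n_no):
    """Turn cell counts into individual respondent records."""
    return [(stratum, group, True)] * n_yes + [(stratum, group, False)] * n_no


# Made-up counts: no group gap at low well-being, a gap at high well-being.
toy = (
    expand("low", "ref", 30, 70) + expand("low", "focal", 30, 70)
    + expand("high", "ref", 80, 20) + expand("high", "focal", 60, 40)
)
mh_or = mantel_haenszel_odds_ratio(toy)
print(round(mh_or, 2))
```

With these invented counts the ratio is above 1, meaning the reference group endorses the item more often than the focal group at comparable overall scores. In practice such a screen would be followed by formal significance testing and by the factor-analytic invariance tests used in validation studies.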

Evidence for validity across racial groups is more mixed for all tools, and much of the evidence comes from either the United States or Canada. There is mixed support for cross-cultural invariance of the CES-D’s factor structure across Latino and Anglo-American populations (Crockett et al., 2005[79]; Posner et al., 2001[80]); one study found that item-level modifications were needed for the CES-D when administered to older Hispanic/Latino and Black respondents (El-Den et al., 2018[8]). Other studies also indicate that Asian-American and Armenian-American populations have a different factor structure, higher depressive symptoms, and a tendency to over-endorse positive affect items, in comparison to Anglo-Americans (Iwata and Buka, 2002[81]; Demirchyan, Petrosyan and Thompson, 2011[82]). Research in the United Kingdom implementing the GHQ-12 across diverse racial and ethnic groups found some suggestive evidence of differences by group, requiring further study (Bowe, 2017[83]). A study comparing Korean-American and Anglo-American older adults found that cross-cultural factors may significantly influence the diagnostic accuracy of depression scales and potentially result in the use of different cut-off scores for different populations (Lee et al., 2010[84]). Another study revealed that Black/African-American participants with high GAD symptoms scored lower on the GAD-7 than other participants with similar GAD symptoms (Parkerson et al., 2015[85]; Sunderland et al., 2019[33]).

Measures of self-reported mental health may also be susceptible to bias by racial/ethnic identity. US studies have found that ethnicity appears to moderate the relationship between SRMH and a range of mental health conditions. For example, Black and Hispanic/Latino Americans are more likely to report excellent SRMH than white Americans and show a weaker association between SRMH and service use. A study in Canada found that Asian identity was associated with worse SRMH even after controlling for socio-economic status (Ahmad et al., 2014[51]).

Many screening tools have been translated into multiple languages and used in surveys across the globe. The WHO-5, K10, MHI-5, GAD-7 and WEMWBS have all been translated into a number of languages (Sunderland et al., 2019[33]); the WHO-5, for example, has been translated into more than 30 languages and implemented in surveys on six continents (Topp et al., 2015[49]).10 WEMWBS has been used across 50 countries and translated into 36 languages (Stewart-Brown, 2021[16]; Warwick Medical School, 2021[78]). Psychometric evaluations for the MHC-SF have also been conducted in many countries (Petrillo et al., 2015[47]; Joshanloo et al., 2013[86]; Guo et al., 2015[46]); however, cross-country comparisons in rates of flourishing show a high degree of variability, some of which may be driven by measurement issues rather than differences in latent mental health (Santini et al., 2020[48]).

Cultural differences pose significant challenges in establishing uniform definitions and descriptions of mental health and threaten cross-country comparisons (see Box 3.4). Cross-cultural validation refers to whether mental health measures that were originally generated in a given culture are applicable, meaningful and thus equivalent in another culture (Huang and Wong, 2014[87]). Most widely used mental health scales have been developed and validated in high-income, Western and English-speaking populations (e.g. North America, Europe, Australia) and therefore assume a Western understanding of mental disorders and symptoms (Sunderland et al., 2019[33]). This can raise questions as to their applicability to other population groups. For example, a review of 183 published studies on the mental health status of refugees reported that 78% of the findings were based on instruments that were not developed or tested specifically in refugee populations (Hollifield et al., 2002[88]).

Evidence on cross-cultural validation for different tools is mixed. WEMWBS has been validated in 17 different languages and local populations as well as for minority populations within the United Kingdom (Warwick Medical School, 2021[78]). Although the PHQ has been validated in many settings and is considered to be one of the more robust screening tools, one study found that, when applied in middle- or low-income countries, it performed well only in student samples and not in clinical samples, leading researchers to suggest that it should be used only in settings with relatively high rates of literacy (El-Den et al., 2018[8]; Ali, Ryan and De Silva, 2016[32]). Similarly, scoring schemes – i.e. the process of determining what score on a screening tool designates risk for a specific mental issue – are often calibrated based on the US general population, where the initial clinical study took place. The scoring scheme of the Kessler scales was designed to maximise precision in the 90th-99th percentile range of the general population distribution, because of US epidemiological evidence that, in any given year, between 6% and 10% of the US population meet the definition of having a serious mental illness. Therefore, these scoring schemes may not be appropriate for other populations with different structures (Kessler et al., 2002[89]). As another example, the mental health component of the SF-12 is typically scored using US-derived item weights for each response category (Ware et al., 2002[90]). International comparisons conducted in Europe and Australia have found these weights to be appropriate (Vilagut et al., 2013[91]), but this finding does not necessarily extend to other regions.
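To make the calibration issue concrete, the following minimal sketch derives a cut-off so that roughly the top 10% of a calibration population is flagged, and then applies that same cut-off to a second population with a different score distribution. It is not the Kessler scoring procedure itself: the function names and both score distributions are invented for illustration.

```python
def cutoff_for_top_share(scores, top_pct):
    """Lowest score c such that at most top_pct% of `scores` are >= c."""
    n = len(scores)
    for c in sorted(set(scores)):
        at_or_above = sum(s >= c for s in scores)
        if 100 * at_or_above <= top_pct * n:
            return c
    raise ValueError("no cut-off isolates so small a share")


def pct_flagged(scores, cutoff):
    """Percentage of respondents scoring at or above the cut-off."""
    return 100 * sum(s >= cutoff for s in scores) / len(scores)


# Made-up score distributions on a 0-24 scale (not real survey data).
calibration_pop = [2] * 50 + [6] * 30 + [10] * 10 + [14] * 6 + [20] * 4
other_pop = [2] * 30 + [6] * 30 + [10] * 15 + [14] * 15 + [20] * 10

cutoff = cutoff_for_top_share(calibration_pop, top_pct=10)
print(cutoff, pct_flagged(calibration_pop, cutoff), pct_flagged(other_pop, cutoff))
```

In this toy setting the cut-off isolates exactly 10% of the calibration population but flags 25% of the second population. The scores alone cannot show whether that gap reflects a real difference in distress or a cut-off that is poorly suited to the second population, which is why local validation matters.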

Research is clearly needed on culturally specific mental health scales developed using a bottom-up and open-ended approach, or with a greater degree of local adaptation, and with further testing of existing scales across different cultures and ethnicities (Sunderland et al., 2019[33]). Furthermore, advances in psychometric models and computational statistics have led to new developments in the administration and scoring of screening tools, which can facilitate cross-cultural analyses.11 Yet it is important to contextualise the magnitude of these differences. Research using data from the Gallup World Poll covering 150 countries on cross-country differences in measures of positive mental health, including life satisfaction, has found that cultural differences account for only 20% of inter-country variation in outcomes. This 20% includes both the impact of different cultures on outcomes and potential measurement bias, and it is small in comparison to the impact of objective conditions – such as income, education and employment (Exton, Smith and Vandendriessche, 2015[92]; OECD, 2013[1]). The impact that these objective life conditions have on mental health is also likely to be larger than that of cultural bias. This does not negate the importance of better designing and validating mental health tools across populations, but it does provide a needed reminder that mental health indicators are informative and useful for policy.

Box 3.7. Key messages: Accuracy across groups

Differences in attitudes toward mental health can lead to differential reporting across countries, as well as by gender, age and racial/ethnic identity.

Surveys on stigma and discriminatory views have shown differences in attitudes toward mental health by age and gender.

In the process of validation, screening tools are tested for biases by age, gender and racial/ethnic group. While most screening tools for specific mental ill-health conditions and most composite scales for positive mental health perform well for age and gender, evidence for race/ethnicity is mixed. More research is needed on the performance of self-reported general mental health questions.

Survey items must be validated in local populations to ensure their suitability. Validation studies conducted in one geographic area, or in one population group, may not be applicable to other contexts.

How comparable are measures over time?

A key goal of policy makers is to understand trends over time. Is population mental health improving or deteriorating? Do policy interventions lead to visible changes in mental health outcomes? It is therefore important that the accuracy of chosen indicators hold not only cross-sectionally but also over time. There are two complications in measuring mental health over time: (1) behavioural and attitudinal changes towards mental health, leading to different response behaviour; and (2) the fact that many of the screening tools have been validated against clinical diagnoses in cross-sectional studies, which may not provide sufficient evidence that they are sensitive to changes over time.

Attitudes towards mental health have changed over the years, and while stigma and bias remain, progress in reducing them has been made. In recent years, governments across the OECD have pursued public information campaigns, especially centred in schools and educational institutions, to destigmatise mental illness. Even before the COVID-19 pandemic, 12 OECD countries waged national campaigns to improve mental health literacy, and five had regional or local campaigns (OECD, 2021[71]). Initial evidence of the impact is mixed: while some studies show little to no decline in stigma towards mental health conditions, especially in the long run (Deady et al., 2020[93]; Walsh and Foster, 2021[94]), others point to an increase in service use, such as visits to psychiatric emergency departments (Cheng et al., 2016[95]). A study in the United Kingdom found that exposure to mental health campaigns may have led to an increase in symptoms of mental ill-health among young people; the research suggests that this was not because of a newfound awareness of pre-existing feelings, but a causal result of increased information about mental illness (Harvey, n.d.[96]). Other early research in this vein posits that awareness campaigns may lead to individuals categorising their feelings and emotions – which may be mild or moderate – as more concerning indications of mental distress, which may then change their own perceptions and behaviours, thus leading to actual worsening of symptoms (Foulkes and Andrews, 2023[97]).

If anti-stigma campaigns are indeed having their intended impact, then general population attitudes toward poor mental health may be changing, and the average person may feel more comfortable speaking openly and honestly about their mental health. This could distort estimates of mental ill-health prevalence over time. If the general population ten years ago felt less comfortable honestly answering questions on how often they felt “down, depressed or hopeless” over the past two weeks, one might expect higher rates of non-response, or of respondents lying about their true feelings, than today; as a result, one would expect to see the reported prevalence of psychological distress increase just because of this change in attitudes.
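The mechanism can be illustrated with simple arithmetic. The sketch below holds true prevalence fixed and varies only the share of symptomatic respondents who answer candidly. All figures are hypothetical, and the model makes the simplifying assumption that no one without symptoms reports them.

```python
def reported_prevalence(true_prevalence, disclosure_rate):
    """Share of the population recorded as symptomatic when only a fraction
    (disclosure_rate) of symptomatic respondents answer candidly.
    Simplifying assumption: respondents without symptoms never report them.
    """
    return true_prevalence * disclosure_rate


true_prev = 0.10  # hypothetical, held constant across both survey waves
earlier = reported_prevalence(true_prev, disclosure_rate=0.6)  # more stigma
later = reported_prevalence(true_prev, disclosure_rate=0.9)    # less stigma
print(f"{earlier:.1%} -> {later:.1%}")
```

Under these invented figures, measured prevalence rises from 6% to 9% even though underlying mental health is unchanged, so a trend in reported outcomes is not on its own evidence of a trend in underlying mental health.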

Box 3.8. Changes in mental health during the pandemic

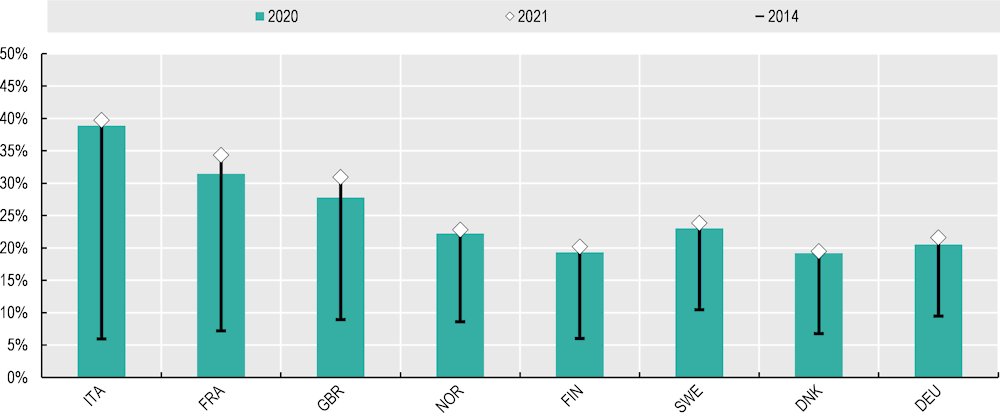

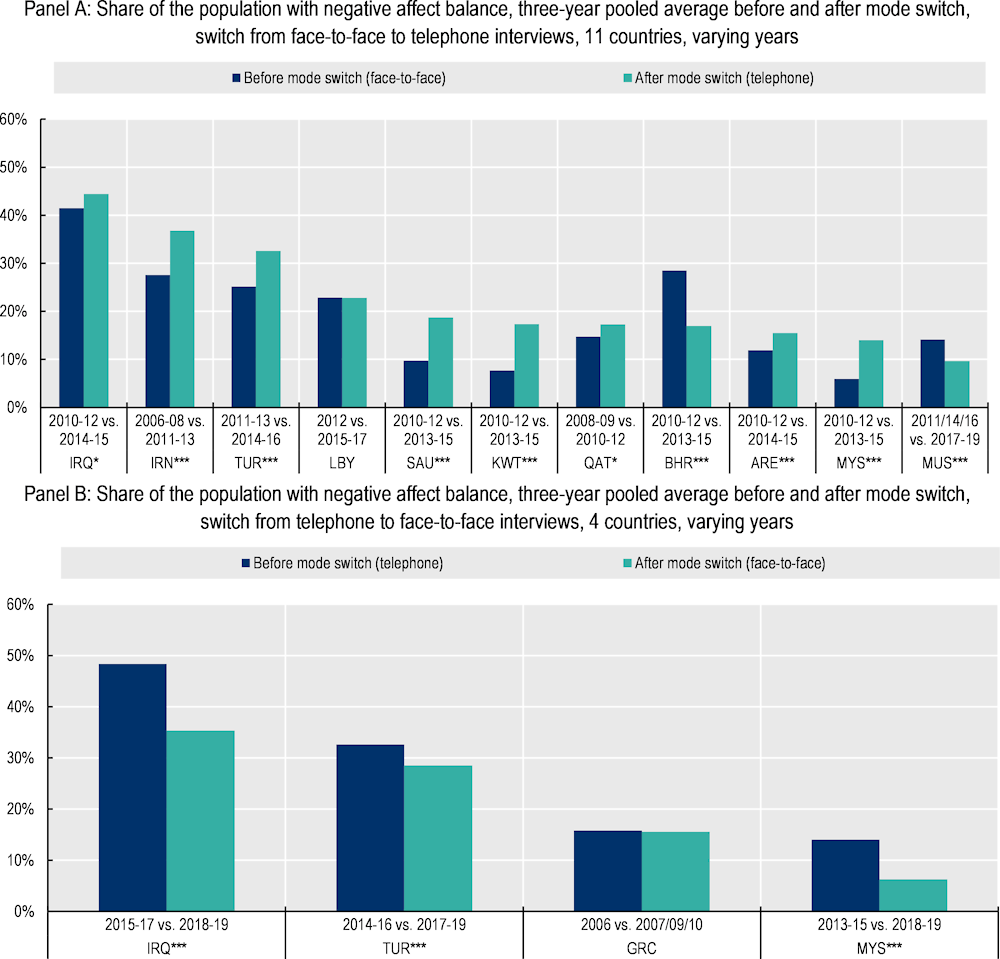

During and in the wake of the COVID-19 pandemic, mental health deteriorated in most OECD countries, with rates of symptoms of depression and anxiety doubling in some (OECD, 2021[98]; OECD, 2021[57]). Indeed, for eight European OECD countries that have comparable pre-pandemic baseline data, the share of the population at risk for depression rose substantially, and by more than 20 percentage points in Italy and France (Figure 3.5; OECD, 2021[99]). A study looking at data from January 2020 to January 2021 estimated that the shares of people experiencing symptoms of anxiety and depression were 28% and 26% higher, respectively, in 2020 than they would have been had the pandemic not occurred (OECD, 2021[57]).12 Both longitudinal and cross-sectional studies in European countries have found that positive mental health – measured through the WHO-5 (an affect-based measure), and SWEMWBS or the MHC-SF (combining aspects of affect, eudaimonia, social connections and life evaluation) – significantly deteriorated over the course of the pandemic (Thygesen et al., 2021[100]; Vistisen et al., 2022[101]; Eurofound, 2021[102]; Vinko et al., 2022[103]).