Evaluation Systems in Development Co-operation 2023

3. Policy and institutional arrangements

Abstract

The policy and institutional arrangements that govern evaluation systems shape how well those systems support the intended role of evaluation. There is strong coherence between the principles that inform evaluations and the policies and guidance documents that steer and support high-quality evaluation processes. However, participating organisations differ considerably in the human and financial resources they allocate to evaluation.

3.1. Principles and policies

Integrating evaluation systems into organisational architecture supports evaluation objectives. As noted in Chapter 2, accountability and learning are the dual objectives of evaluation, with an overall aim of supporting better development co-operation. The policy and institutional arrangements surrounding evaluation systems can influence whether these objectives are achieved.

Commitment to the OECD DAC principles that guide development evaluations remains steadfast. As part of its mandate to increase the volume and effectiveness of development co-operation, and recognising the importance of evaluation for enhancing the quality of development efforts, the DAC adopted five Principles for Evaluation of Development Assistance in 1991 (OECD, 1991[1]). These are: impartiality, independence, credibility, usefulness and partnership. These principles lay out a definition of evaluation, including the core criteria of relevance, effectiveness, efficiency, impact and sustainability, to which coherence was added in 2019. As seen in previous studies, the criteria are very widely used and are included in every reporting evaluation unit's policy or guidelines.

Today, independence and usefulness are the principles most often cited by participating organisations. All DAC evaluation principles continue to be used by participating evaluation units to inform their evaluation activities. These principles are referenced in the majority of DAC Network on Development Evaluation (EvalNet) participant evaluation policies or other normative documents. However, not all principles are given equal weight. The principles of independence and usefulness (utility) were both mentioned 28 times when participants were asked which principles guide their evaluations (Figure 3.1). These were followed by credibility (23 mentions), impartiality (19 mentions), partnership (19 mentions) and transparency (18 mentions). In addition to these principles, many organisations also now include principles related to value for money, ethics, and human rights.

Figure 3.1. Main guiding evaluation principles as reported by EvalNet participants

Note: the size of the words reflects the number of times they were mentioned.

Utility is a major driving force of evaluation decisions, continuing the trend towards learning. While all five principles are referred to by participating organisations, many emphasise utility or usefulness as a priority consideration today (see Box 3.1 for an example). Utility requires that evaluations respond to continually evolving organisational priorities and needs. In this vein, the timeliness of evaluations is of critical importance and is an additional sub-principle needed to ensure utility (further details can be found in Chapter 5). The importance of timeliness was a key lesson learned during the COVID-19 pandemic (see Chapter 4).

Box 3.1. Ensuring the utility of the United Kingdom’s evaluations

When asked about what principles inform their evaluation activities, the United Kingdom (UK) responded that evaluations must be useful. They defined “useful” as including the following: responds to organisational priorities; fills evidence gaps; and provides opportunities for influencing change. In order to meet these criteria, an evaluation must ask well-defined questions that are feasible to answer; be timed in order to inform internal and external decision making; engage internal and external stakeholders in a participatory approach; and include actionable recommendations. In short, the use of evaluation findings must be central throughout the design and implementation of an evaluation. Other principles that guide evaluation in the UK are credibility, robustness, proportionality, safety and ethics.

Evaluation principles are codified in evaluation policies. The cornerstone of evaluation systems is the organisational evaluation policy, which sets out the purpose and principles that guide evaluation. Some elements are common to many organisations’ evaluation policies: evaluation purpose; evaluation focus (what will be evaluated and how evaluation decisions will be made); institutional set-up of evaluation units; roles and responsibilities; evaluation process (discussed in Chapter 4); evaluation quality standards; evaluation dissemination; and evaluation follow up.

In addition to codifying the Principles for Evaluation of Development Assistance, evaluation policies reflect the Quality Standards for Development Evaluation (OECD, 2010[2]). Adopted in 2010 as part of the DAC Guidelines and Reference Series, the Quality Standards outline key pillars needed to ensure high-quality evaluation processes and products. They have been systematically adopted and integrated into participating organisations’ systems through their policies and other normative documents. The Quality Standards are intended to guide practitioners across the various stages of the evaluation process, including in the creation of policy documents.

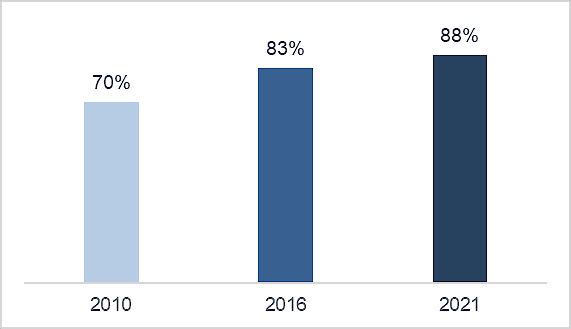

The number of organisations with an evaluation policy in place has increased. The high number of policies in place highlights the importance that development organisations give to evaluation as a core function. The importance of evaluation systems is also underlined by the fact that having one is a soft criterion for DAC membership. In 2021, all but four organisations indicated having an evaluation policy, and one of those four was in the process of designing one. Those that do not have a policy often have other internal documents that guide the evaluation function. There has been a steady increase in institutionalised evaluation policies since 2010 (Figure 3.2).

Figure 3.2. Proportion of organisations with evaluation policies, 2010-2021

Note: Data on evaluation policies were reported by 49 out of 51 organisations that responded to the questionnaire.

Evaluation policies are often complemented by concrete evaluation guidance. Nearly all organisations have developed comprehensive guidance to ensure consistently high-quality evaluations. Organisations that do not have evaluation policies typically provide evaluation guidance that outlines evaluation standards and processes, and in some cases also includes practical tools (e.g., checklists and templates) for use by evaluation commissioners, managers and evaluators. Organisational guidance reflects global evaluation norms, including the Quality Standards for Development Evaluation (OECD, 2010[2]), the Big Book on Evaluation Good Practice Standards (ECG, 2012[3]) and the Norms and Standards for Evaluation (UNEG, 2016[4]). These guidelines provide flexible advice that can be adapted to different evaluation objectives.

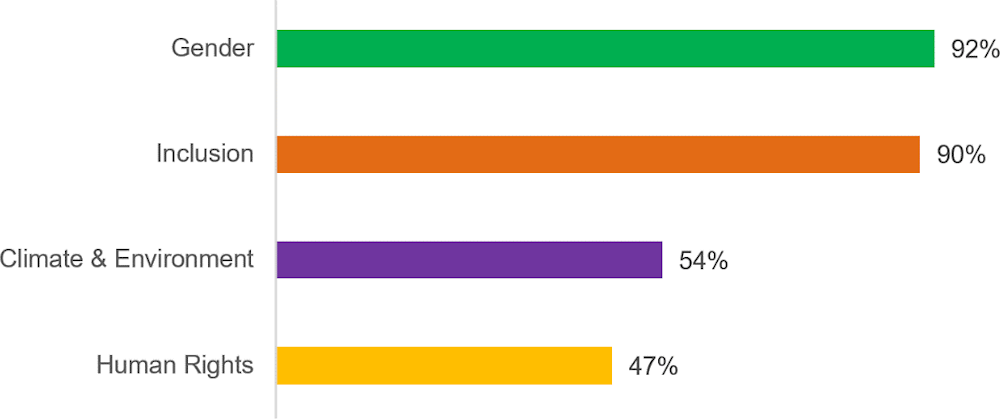

Significant effort has been made, and is ongoing, to mainstream human rights, gender and inclusion into evaluation practices (Figure 3.3). EvalNet meetings often raise these topics, and members have reported growing political priority and pressure for evaluation units to address them better. The joint work of EvalNet and the DAC Network on Gender Equality (GenderNet) in 2020-2021, and EvalNet's new publication on human rights and gender equality (Box 3.2), reflect this growing interest. Of the 47 organisations that indicated that they have evaluation guidance, 44 (94 %) report that their guidance addresses "leave no one behind" and inclusion issues, and 45 (96 %) report that it addresses gender (Box 3.2).

Figure 3.3. Inclusion of cross-cutting issues in evaluation guidance

Note: Data on cross-cutting issues were reported by 47 out of 51 participating organisations.

Climate and environment are also now frequently considered as cross-cutting issues. However, while specific guidance is often provided on mainstreaming gender and inclusion into evaluations, climate and environment are often addressed only in broad terms (Figure 3.3). More specific guidance is needed on how these areas can be meaningfully incorporated into evaluations. This need is reflected in ongoing work in the global evaluation community to strengthen monitoring and evaluation of climate efforts, as well as in work by EvalNet members to develop their own approaches and strategies.1 Finland has prepared “Practical tips for assessing cross-cutting objectives in evaluations” (Ministry of Foreign Affairs of Finland, n.d.[5]), which provides evaluation managers and practitioners with resources and tools for integrating cross-cutting objectives, such as climate resilience, low-emission development, environment and biodiversity, into evaluations. The document presents key considerations for each stage of the evaluation process and provides example evaluation questions.

While consistency and rigour are vital, there is also a recognition that evaluation practices may need to be adjusted to specific contexts. Participating organisations often noted the importance of flexibility in implementing evaluation guidance, as different areas of work and country contexts may call for adapted approaches. In this vein, there has been a heightened focus on the design stage of evaluations to ensure that the decided approach will respond to specific needs and will support evaluation objectives (further details in Chapter 4).

While there is strong coherence in the purpose and principles that guide evaluations, how evaluation units are set up, and their scope to achieve these aims, vary across organisations. In general, the role of a centralised evaluation unit is to develop evaluation policy, guidance and standards, and to oversee the evaluation function, including providing some level of quality assurance during the evaluation process. Beyond these core functions, evaluation units work in different ways: some conduct evaluations themselves or commission them from external consultants, while others primarily support evaluations conducted by other parts of the institution.

The inclusion of learning and knowledge management as a core function of evaluation units is increasing. Considering the challenges of using evaluation findings (see Chapter 5), there is increasing focus on communications and knowledge management in evaluation units to facilitate learning. A growing number of evaluation units now include dedicated experts in knowledge management and communication to turn evaluation findings into digestible and actionable knowledge products and to organise learning events.

Box 3.2. Guidance to ensure inclusive evaluations

Mainstreaming gender and inclusion issues in development evaluations is critical for identifying and understanding the extent to which gender and other intersecting identity factors were considered in the design, development and implementation of development policies, programmes and projects, and what the outcomes of those considerations were for various groups.

New OECD guidance on evaluating human rights and gender

The OECD (2023[6]) publication Applying a Human Rights and Gender Equality Lens to the OECD Evaluation Criteria provides in-depth practical guidance for evaluators, evaluation managers and programme staff in applying a human rights and gender equality lens to the six OECD evaluation criteria (Figure 2.1). It aims to support evaluators and evaluation managers in the design, management and delivery of credible and useful evaluations that assess whether and how interventions contribute to realising human rights and gender equality, whether or not those interventions have explicit human rights objectives. It also provides broader guidance to programme staff on applying the six criteria with a human rights and gender equality lens at the outset of an intervention, and addresses the main considerations and challenges in doing so.

Gender mainstreaming in Canada

Canada’s Feminist Evaluation Framework ensures that feminist principles are central throughout evaluations. Taking this framework forward, the Government of Canada has published guidance on gender-based analysis. Integrating Gender-Based Analysis Plus into Evaluation: A Primer (2019[7]) outlines considerations and methodological approaches, along with practical examples, for including gender impacts throughout the evaluation cycle. The guidance is complemented by the Gender equality and empowerment measurement tool (2022[8]), an innovative data collection system that incorporates feminist elements of participation, inclusivity, intersectionality and empowerment.

Disability inclusion in Australia

Australia has developed the Disability inclusion in the DFAT Development Program: Good practice note to provide advice on how to engage people with disabilities and their representative organisations throughout policy and programming design, implementation, and monitoring and evaluation. The overall aim of this advice is to identify and address barriers to inclusion of people with disabilities in development efforts.

Sources: OECD (2023[6]), Applying a Human Rights and Gender Equality Lens to the OECD Evaluation Criteria, https://doi.org/10.1787/9aaf2f98-en; Treasury Board of Canada Secretariat (2019[7]), Integrating Gender-Based Analysis Plus into Evaluation: A Primer, https://www.canada.ca/en/treasury-board-secretariat/services/audit-evaluation/evaluation-government-canada/gba-primer.html#H-04; Government of Canada (2022[8]), Gender equality and empowerment measurement tool, https://www.international.gc.ca/world-monde/funding-financement/introduction_gender_emt-outil_renforcement_epf.aspx?lang=eng; DFAT (2021[9]), Disability inclusion in the DFAT Development Program: Good practice note, https://www.dfat.gov.au/sites/default/files/disability-inclusive-development-guidance-note.pdf.

3.2. Governance and independence

Evaluation units have implemented multi-pronged strategies to maintain their independence. Independence is essential for ensuring that evaluations are credible and impartial. All organisations report that their evaluations are free from undue influence and biases, including political or organisational pressure, and are independent in their function from the processes associated with policy making, delivery, and the management of development assistance. EvalNet members have a nuanced and comprehensive understanding of independence, which is operationalised across the network through one or more of the following ways of working.

Structural independence

All EvalNet organisations reported that their organisation's evaluation function is organisationally separate from the line function responsible for development assistance management and delivery. This status helps to establish the evaluation unit's independence in the choice, implementation and dissemination of its evaluations. Nearly all evaluation units commission and manage evaluations, deciding what to evaluate as well as the evaluation design, questions and methodological choices.

The independence of evaluation units' activities is reinforced through direct reporting lines to senior decision makers, such as a board of directors. Typically, the Head of Evaluation reports directly to the Secretary-General or to senior board members of the agency, rather than to Programme or Policy Directors. This safeguards independence and helps prevent the evaluation process and its results from being compromised. In many cases, there are also staffing restrictions attached to serving as head of evaluation.

Functional independence

EvalNet members reported having their own budget, staff and workplan, which are not overseen or controlled by the organisation's management. In many cases, evaluations are funded from a separate, stand-alone budget line independent of programme or operational budgets.

To further safeguard the independent functioning of the evaluation units and shield them from outside influence, the appointment and removal of the head of the evaluation unit are overseen by an independent jury consisting of members of the senior board of directors and advisory committees. The head of evaluation has authority over the implementation of the work plan, the conduct and content of evaluations and their publication, budget utilisation, and staff appointments and management.

Approximately half of the organisations have an independent advisory committee in place. The purpose of these bodies varies across organisations, but often includes guiding the design of evaluation policies, appointing the head of the evaluation unit, providing input on the evaluation priorities and workplans, and making recommendations to management based on evaluation findings.

Behavioural independence

All EvalNet members reported on their methods for producing high-quality and unbiased reports. Evaluation units generally have sole responsibility for final evaluation reports. While the board, management and staff may comment on and fact-check reports, they cannot impose unfounded changes or alter the conclusions. Members work to ensure that evaluators, whether evaluation unit staff or consultants, are free to conduct the evaluation work without interference and to express their opinions freely. Any possible conflicts of interest are addressed openly and honestly.

EvalNet members use oversight committees and multistakeholder reference groups to provide independent guidance, scrutinise evaluation design and process, and ensure quality. Independent quality assurance takes place at key points in the evaluation cycle, for example by asking external evaluation experts to review terms of reference and final reports. The French Development Agency (AFD) establishes multi-stakeholder reference groups for each evaluation consisting of diverse external and internal stakeholders. These include parliamentarians, representatives of civil society, researchers, and organisations that directly benefit from the policy being evaluated, and the group is presided over by an independent qualified chairperson. The group is responsible for reviewing all the reports produced by external evaluators and facilitating discussions during meetings. Their perspectives shape the evaluation report and recommendations.

External evaluation specialists are either contracted to carry out evaluations or engaged in some other capacity to provide a more objective and independent assessment. All participating units engage independent consultants or firms in some way, most often through competitive public procurement, to conduct evaluations either autonomously or in collaboration with the evaluation unit. The involvement of external consultants is believed to strengthen the impartiality and independence of evaluations. When consultants are hired locally (in developing countries), this improves understanding of the context and fosters local ownership of the evaluation. However, the relationship between external consultants and independence is not always straightforward: hiring consultants is not in itself a guarantee of independence or objectivity. Members have long debated how best to involve consultants and what role they play in ensuring impartiality and limiting bias. They recognise that the impartiality and objectivity of external evaluators can be compromised by information asymmetries and by their desire to secure the contract (Picciotto, 2018[10]).

It is best practice to have formal guidelines in place to avoid potential conflicts of interest, and many interviewees reported that this was the case in their institutions. Conflict of interest safeguards ensure that evaluators' judgement is not influenced by current, recent or past professional or personal relationships and factors. These safeguards also prevent the appearance of bias or a lack of objectivity (ECG, 2012[3]). For example, the Asian Development Bank (ADB) has specific procedures in place to protect against a range of conflicts of interest that could weaken the objectivity and integrity of its evaluations (ADB, 2008[11]). These include protections against official, professional, financial and familial conflicts.

3.3. Resourcing

Human and financial resources dedicated to evaluation vary significantly. Multilateral organisations often have larger centralised evaluation units, averaging 38 full-time equivalent (FTE) staff. Bilateral organisations have, on average, 10 FTE staff within the evaluation unit, though with significant variation between organisations. Of the 38 bilateral organisations that reported their staff numbers, only 9 (24 %) have 10 or more staff members. In two organisations, no staff are dedicated exclusively to the evaluation function.

“Use of resources is also an important attribute of independence – if you don’t have enough resources, how can you really be independent?”

United Nations Development Programme, Independent Evaluation Office

Some organisations use staff to conduct evaluations, while others rely on external evaluators, as described above. Larger evaluation units more often conduct evaluations directly, using external evaluators only when specific skills or knowledge are required. Conversely, there are also evaluation units that do not conduct evaluations themselves but rather commission external consultants, with staff playing a management and oversight role.

Gender balance in evaluation units is improving. Across participating organisations, 60 % of evaluation staff are women – a slight decrease from the 62 % in 2016. In leadership roles, widely viewed as a more significant indicator of gender parity, 25 out of 46 (54 %) evaluation units of reporting organisations are led by a woman, up from 46 % in 2016.

Accounting for financial resources dedicated to evaluation is challenging. As part of the data collection process for this study, participating organisations were asked to report on the total amount spent on development and humanitarian evaluations in 2021, in local currency2 (Table 3.1). Annex B provides an overview of resources for each member. However, evaluation units' varied mandates and institutional set-ups mean that not all organisations are able to report on evaluation costs in the same way. A calculation of evaluation spending may include: staff costs, covering professional and administrative staff; contractual services, most often consultant evaluators; regular operating costs, which are sometimes included in an organisation-wide budget and not calculated for a specific department or unit; travel costs; and communications, including costs for publications and knowledge-sharing events.
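To illustrate why reported totals are hard to compare, the short Python sketch below models one unit's evaluation spending using the cost components listed above. The class name, field names and all figures are hypothetical and purely illustrative; they are not drawn from any participating organisation. The same underlying costs yield different reported totals depending on which components a unit is able to attribute to evaluation.

```python
from dataclasses import dataclass, fields


@dataclass
class EvaluationSpending:
    """Annual evaluation costs of one unit, in EUR, split into the
    components listed in the text. Field names are hypothetical."""
    staff: float           # professional and administrative staff
    contractual: float     # contractual services, mostly consultant evaluators
    operating: float       # regular operating costs (may sit in an organisation-wide budget)
    travel: float          # travel costs
    communications: float  # publications and knowledge-sharing events

    def reported_total(self, included: set[str]) -> float:
        """Sum only the components a unit is able to attribute to evaluation."""
        return sum(getattr(self, name) for name in included)


# Every component, derived from the dataclass fields.
ALL = {f.name for f in fields(EvaluationSpending)}

# Illustrative figures only, not data from any organisation.
costs = EvaluationSpending(staff=1_000_000, contractual=800_000,
                           operating=150_000, travel=100_000,
                           communications=50_000)

# A unit that can attribute every cost component to evaluation.
print(costs.reported_total(ALL))
# A unit whose operating costs are held in an organisation-wide budget.
print(costs.reported_total(ALL - {"operating"}))
```

With these illustrative values, the first unit reports 2,100,000 EUR and the second 1,950,000 EUR for identical activity, which is one reason why the figures in Table 3.1 should be treated as approximations.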

Whilst a robust comparison over time is not possible, because there is not yet an agreed methodology for counting these costs, overall trends in spending from 2010 to 2015 and from 2015 to 2021 suggest modest increases, with an overall increase of 15% from 2010 to 2021. During this period, total ODA (which provides a rough measure of the growing portfolio of development finance that evaluation units are mandated to evaluate) increased by 35%. From 2015 to 2021, multilateral spending increased more than bilateral spending (Table 3.1). Annexes B and C provide a more nuanced picture for each individual member country, with some members, such as Australia, increasing their evaluation budgets relative to the ODA they provide, and others decreasing spending on evaluation.

Table 3.1. Financial resources for evaluation, 2010 to 2021

| | Average 2010 | Average 2015 | Average 2021 | % change 2010-2021 |
|---|---|---|---|---|
| All organisations | 4.08 million EUR | 4.10 million EUR | 4.68 million EUR | +15% |
| Bilateral organisations | 1.92 million EUR | 2.76 million EUR | 2.95 million EUR | +54% |
| Multilateral organisations | 7.76 million EUR | 9.96 million EUR | 11.00 million EUR | +42% |
| Total ODA provided | 129.81 billion EUR | 156.19 billion EUR | 175.62 billion EUR | +35% |

Note: Data on financial resources were reported by 42 out of 51 participating organisations. These figures are approximations only, as there is not yet a common methodology for accounting for evaluation spending. Reported figures were converted to euros, as the euro is the most common currency used by members.
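As a check on Table 3.1, the short Python sketch below recomputes the percentage changes from the rounded averages. It also solves for the implied share of multilateral organisations among reporters, under an assumption that is ours and not stated in the source: that the all-organisations figure is a pooled average of the bilateral and multilateral averages over the same set of reporting organisations. The function names are hypothetical.

```python
# Table 3.1: averages per organisation in million EUR; total ODA in billion EUR.
TABLE = {
    "All organisations": (4.08, 4.10, 4.68),
    "Bilateral organisations": (1.92, 2.76, 2.95),
    "Multilateral organisations": (7.76, 9.96, 11.00),
    "Total ODA provided": (129.81, 156.19, 175.62),
}
YEARS = (2010, 2015, 2021)


def pct_change(start: float, end: float) -> float:
    """Percentage change from start to end."""
    return (end - start) / start * 100


def implied_multilateral_share(all_avg: float, bi_avg: float, multi_avg: float) -> float:
    """Solve all_avg = w * multi_avg + (1 - w) * bi_avg for w.
    Assumes (hypothetically) that the all-organisations figure is a
    pooled average of the two groups over the same reporters."""
    return (all_avg - bi_avg) / (multi_avg - bi_avg)


for row, (y2010, y2015, y2021) in TABLE.items():
    print(f"{row}: 2010-2021 {pct_change(y2010, y2021):+.0f}%, "
          f"2015-2021 {pct_change(y2015, y2021):+.0f}%")

for i, year in enumerate(YEARS):
    w = implied_multilateral_share(TABLE["All organisations"][i],
                                   TABLE["Bilateral organisations"][i],
                                   TABLE["Multilateral organisations"][i])
    print(f"{year}: implied multilateral share of reporters {w:.0%}")
```

Run as written, it reproduces the rounded changes in the last column (+15%, +54%, +42%, +35%) and shows that between 2015 and 2021 the multilateral average rose by about 10% against about 7% for bilateral units. Under the pooled-average assumption, the implied multilateral share of reporting organisations falls from about 37% in 2010 to about 21% in 2021, which would explain why the all-organisations average grew less than either group. Given that the figures are approximations, this should be read as indicative only.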

References

[11] ADB (2008), Review of the Independence and Effectiveness of the Operations Evaluation Department, https://www.adb.org/documents/review-independence-and-effectiveness-operations-evaluation-department.

[9] DFAT (2021), Disability inclusion in the DFAT Development Program: Good practice note, https://www.dfat.gov.au/sites/default/files/disability-inclusive-development-guidance-note.pdf.

[3] ECG (2012), Big Book on Evaluation Good Practice Standards, Evaluation Cooperation Group, https://www.ecgnet.org/document/ecg-big-book-good-practice-standards.

[8] Government of Canada (2022), Gender equality and empowerment measurement tool, https://www.international.gc.ca/world-monde/funding-financement/introduction_gender_emt-outil_renforcement_epf.aspx?lang=eng.

[5] Ministry of Foreign Affairs of Finland (n.d.), Practical tips for addressing cross-cutting objectives in evaluations, https://um.fi/documents/384998/0/Practical_tips_Cross-cutting_objectives_Climate_change_environment.pdf/169bbeb0-47f0-59a0-1c90-0bd7cb3752a0?t=1648783016982.

[6] OECD (2023), Applying a Human Rights and Gender Equality Lens to the OECD Evaluation Criteria, Best Practices in Development Co-operation, OECD Publishing, Paris, https://doi.org/10.1787/9aaf2f98-en.

[2] OECD (2010), Quality Standards for Development Evaluation, OECD Publishing, https://doi.org/10.1787/9789264083905-en.

[1] OECD (1991), DAC Principles for Evaluation of Development Assistance, OECD Publishing, https://www.oecd.org/dac/evaluation/41029845.pdf.

[10] Picciotto, R. (2018), The Logic of Evaluation Independence and Its Relevance to International Financial Institutions (IMF), https://ieo.imf.org/-/media/IEO/Files/Publications/Books/first-decade/first-decade-chapter-5.ashx.

[7] Treasury Board of Canada Secretariat (2019), Integrating Gender-Based Analysis Plus into Evaluation: A Primer, https://www.canada.ca/en/treasury-board-secretariat/services/audit-evaluation/evaluation-government-canada/gba-primer.html#H-04.

[4] UNEG (2016), Norms and Standards for Evaluation, United Nations Evaluation Group, http://www.unevaluation.org/document/detail/1914.

Notes

1. There has been strong demand from members of the OECD-DAC Results Community for guidance to support partner countries in implementing and monitoring climate adaptation efforts. In response, the Results Community Secretariat is preparing guidance on how to design results frameworks for climate adaptation, as well as how to support partner-country monitoring and evaluation efforts in this area. The guidance process began with a dialogue in October 2022, co-hosted by the United Nations Framework Convention on Climate Change (UNFCCC) Adaptation Committee.

2. Reported figures were converted to euros, as the euro is the most common currency used by members.