Compendium of International Organisations’ Practices

3. Developing a greater culture of evaluation of international instruments

Abstract

This chapter illustrates the range of evaluation practices applied by international organisations. IOs mainly review individual instruments rather than sub-sets or the overall stock, conduct evaluation ex post rather than ex ante, and assess implementation more than impact. There is increasingly widespread commitment to upgrading evaluation among international organisations. This is reflected in a series of key principles, which aim to institutionalise, incrementally scale up, and guide evaluation activities, while broadening their scope and transparently publishing and acting on their results. Undertaking evaluation can be resource-intensive, methodologically difficult, and politically sensitive. IOs are taking innovative steps to overcome these hurdles, particularly by strengthening the interface with their members and deploying digital technologies and information sources.

Introduction

The evaluation of international instruments can provide valuable information about their implementation and impacts. Ultimately, evaluation improves the international rulemaking process at several levels. It enhances the quality of international instruments and is a key tool to ensure that international instruments remain fit-for-purpose – that is, that they continue to meet their objectives and address the needs of constituencies. Evaluation can also help to promote the wider adoption of international instruments and to build trust in IOs and their practices.

“Evaluation” refers here not to evaluating the quality of the provisions of international instruments themselves, which would consider whether they set out clear and comprehensive rules. Rather, it refers to evaluating the effectiveness, use, implementation, or impacts of these instruments. Such an evaluation often involves the collection and analysis of data related to the policies that the instruments address; who is using them, why and how; the costs and benefits of using the instruments (intended or unintended); and the extent to which they achieve their objectives in practice. IOs collect a range of data in relation to their instruments, and the information generated reflects the nature of the instrument(s) concerned (see Chapter 1).

There is a broadening commitment amongst IOs to developing a greater culture of evaluation of international instruments, despite the fact that they generally find evaluation to be a challenging and resource-intensive activity. For example, while IOs may have the technical expertise and resources to conduct evaluation of their instruments, domestic constituents generally possess the detailed information regarding their implementation and impacts, as well as knowledge of their coherence with national regulatory frameworks (OECD, 2016[1]). IOs may also face methodological challenges, such as difficulties associated with measuring and isolating impacts.

Against this backdrop, this section of the IO Compendium aims to inform the evaluation practices of IOs by setting out the variety of available approaches, as well as their associated benefits and challenges. The evaluation practices considered in this section are carried out by IOs themselves (i.e. evaluations by other organisations of international instruments are not considered).1 The discussion is grounded in the existing practices of IOs, collected through the framework of the IO Partnership.

Rationale

IOs conduct evaluations of their instruments for a variety of purposes. These include encouraging the implementation and use of their instruments, supporting advocacy initiatives, gauging the assistance needs of members, assessing levels of compliance, and feeding evaluation results into monitoring procedures (see Chapter 2).

Evaluation can also contribute to improvements in the design of international instruments by highlighting areas for updating. Overall, evaluation can help international rule-makers to take stock of the costs and benefits associated with their instruments (Parker and Kirkpatrick, 2012[2]). This can facilitate consideration of how these costs and benefits are distributed, whether there are differential impacts across members, and whether the benefits outweigh the costs.

The benefits of evaluation can be magnified when applied to the stock or a sub-set of instruments, and co-operation with other IOs active in relevant fields can enhance the outcomes where instruments may complement one another (see Chapter 5).

Ex ante impact assessment can serve to clarify the objectives and purpose of international instruments before the rulemaking process commences, supporting efficiency as well as effectiveness. It also encourages rule-makers to examine the variety of potential pathways for action – including the possibility of inaction – in advance of the adoption of instruments (OECD, 2020[3]). In addition, it facilitates the systematic consideration of potential negative effects and costs in advance of adoption, which can support their mitigation (OECD, 2020[3]).

Typology: evaluation mechanisms

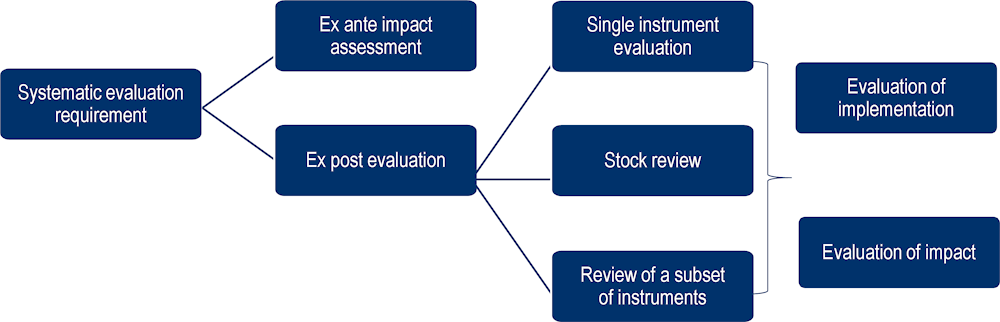

Figure 3.1 and Table 3.1 enumerate the existing mechanisms to evaluate international instruments, building on the typology outlined in the Brochure (OECD, 2019[4]) and the responses to the 2018 Survey. The typology was developed to represent the evaluation practices undertaken by the IOs that are part of the IO Partnership. It is therefore illustrative only and does not necessarily provide a complete picture of all possible kinds of evaluation practices that IOs could use.

Figure 3.1. Current evaluation practices of international organisations

Source: The Contribution of International Organisations to a Rule-Based International System, (OECD, 2019[4]).

Table 3.1. Current evaluation practices of international organisations: overview of benefits and challenges

| | Description | Benefits | Challenges |
|---|---|---|---|
| Ex ante impact assessment | Examining the expected social, economic and environmental impacts of instruments in advance of adoption. | Strategic focus and planning, improved resource allocation, help anticipate data needs and establish benchmarks for ex post monitoring. | Reliance on estimation and projection, vulnerability to unanticipated impacts |
| Ex post evaluation | Gauging realised impacts of international instruments retrospectively | Feedback loop between implementation and instrument development, identification of unexpected issues of implementation | May lack baseline data, rely on external agencies for reporting information, encounter stronger resistance than prospective evaluation, and involve resource intensiveness |
| Evaluating the use/implementation of a single instrument | Review focussing on use and administration | Methodological simplicity, tracks assistance needs, promotes compliance, enhances advocacy, less resource intensive than evaluation of impact | Limited applicability to organisational learning processes |
| Evaluating the impact of a single instrument | Analysis of outcomes against a set of criteria, emphasis on attribution, results, performance | Methodological rigour and credibility of evidence, stronger learning processes | Resource intensiveness, may lack informational/control mechanisms to carry out this form of evaluation at the international level, methodological complexity |
| Stock review | Assessing overall regulatory output | Holistic account of instruments, overall strategic direction, identification of gaps in coverage | Resources intensiveness, lack of targeted depth of analysis |
| Review of subset of instruments | Evaluating a range of instruments within a certain sector, rulemaking area, or initiative | Account for interaction between various instruments and cumulative effects, reduced duplication | May encounter difficulties of attribution |

Source: Author’s own elaboration via exchanges in IO partnership.

Key principles of evaluation

This section contains key principles that may support IOs in enhancing their evaluation practices. These principles are derived from the experiences of a wide range of IOs. They also build on the OECD Best Practice Principles for Regulatory Impact Assessment (OECD, 2020[3]) and Reviewing the Stock of Regulation (OECD, 2020[5]), which synthesise domestic experiences in the evaluation of laws and regulations. Given the diversity of IOs and the kinds of normative instruments they develop, not all of the below principles will be relevant or practical for all IOs. Nevertheless, they can provide useful guidance and inspiration for IOs wishing to develop a greater culture of evaluation.

1. Institutionalise the evaluation of instruments

Institutionalising the systematic evaluation of normative instruments developed by IOs is an important step towards ensuring their continued relevance. The level of formality of such institutionalisation can vary – for example, ‘institutionalising’ an evaluation commitment could mean including evaluation practices in IOs’ rules of procedure, or creating a unit dedicated to carrying out evaluations. Either way, it is a demonstration of the commitment of IOs to the continual improvement of their instruments and to ensuring that they remain fit-for-purpose.

When evaluation processes are clearly prioritised, defined and accessible (including who has the responsibility for overseeing and carrying out these processes), this can help embed evaluation into everyday organisational culture and practice.

2. Start small and build evaluation practices over time

IOs find it challenging to evaluate their instruments for a number of different reasons (see Section 5). Indeed, it may not even be possible to effectively evaluate every kind of IO instrument. For a type of instrument that has not been evaluated before and for which no evaluation best practice can easily be identified, it makes sense to first assess the “evaluability” of the instrument – looking at its objectives and considering how it is implemented and by whom, and whether evaluation will be possible and/or useful. In most cases, the answer will be “yes”, but the scope and breadth of evaluation may differ.

The first level of evaluation for international instruments is to evaluate their use or implementation – who is implementing the instrument, why, where, when and how (see Chapter 2). This is particularly relevant for non-binding instruments.

Because IOs often do not have oversight of the implementation of their instruments, especially for non-binding instruments, evaluation of use is not always straightforward and it can be hard to collect complete data. Nevertheless, even incomplete data can provide extremely useful information that can lead to international instruments being revised – or withdrawn – and help IOs better target and design the support they provide to encourage implementation of their instruments (see Chapter 2). Evaluation of use is also possible for international instruments that were not developed with clearly measurable objectives.

The next level of evaluation for international instruments is to evaluate their impacts. This is a much more complex undertaking than evaluation of use, and IOs face major challenges related to the availability of data; the difficulty of establishing causality (e.g. how much of the observed results can be attributed to the IO instrument in question vs other factors such as the enabling environment and complementary actions by other actors); and the fact that normative work can take a long time to have an impact.2 Because of these challenges and more, IOs generally have less experience conducting impact evaluation. Nonetheless, many IOs are conscious of this fact and are actively looking at how they can successfully move from evaluating use to also evaluating impact. There is a wide range of effective practices and methodologies for evaluating impact, and some IOs have prepared guidance documents to help others perform effective impact evaluation.3 IOs could also explore collaboration with other stakeholders such as academia or NGOs if the required technical expertise for impact evaluation is not available in-house (see Chapter 4).

A culture of evaluation cannot be created from scratch overnight. Defining the scale and objectives of evaluations less ambitiously in the beginning could allow IOs to draw on intermediate outcomes and use these to build confidence in evaluation processes internally, leading eventually to a greater willingness to go further in terms of evaluation. Even small amounts of data and limited results from smaller scale evaluations can demonstrate valuable impact and be important in influencing more actors to implement international instruments (see Chapter 2).

3. Develop guidance for those undertaking evaluation

Developing guidance documents aimed at those responsible for planning or undertaking the evaluation will help to harmonise practices and set expectations for the IO and its stakeholders. A common approach is especially important if evaluations are carried out in a decentralised manner (for example, not led by the IO secretariat, but conducted by members or by external consultants).

Guidance could consider elements such as how to address:

Objective setting: how to set objectives that are practical and viable and establish clear and measurable evaluation criteria.

Selection of people to undertake the evaluation: outline the criteria/qualifications needed for undertaking the evaluation in question.

Evaluation costs: ensure the costs involved in the process of evaluation are proportionate to the expected impacts of the international instrument.

Benchmarking: when possible, consider benchmarking comparisons across jurisdictions.

Stakeholder engagement: ensure inclusive and effective consultation with relevant stakeholders/those affected or likely to be affected.

Use of technology: think about how digital technologies can be used to increase efficiency of evaluation processes, analyse or collect data.

Use of data: make use of all available sources of information, and consider including less traditional ones such as open source data, satellite data, mobile phone data, social media etc.

Confidentiality, impartiality and independence: think about how to reflect these qualities at each stage of the evaluation process.

4. Establish objectives for international instruments to be evaluated against

When international instruments have clearly-measurable objectives, these serve as helpful criteria for the evaluation. However, when this is not feasible or leads to an incomplete understanding of the instrument, it becomes important to provide qualitative descriptions of those impacts that are difficult or impossible to quantify, such as equity or fairness. Depending on the nature of the instrument and the level of evaluation foreseen, objectives might be specific to one instrument, or could apply to a set of instruments or type/class of instruments. Alternatively, the objectives for the instrument may be set or modified by the State or organisation implementing the instrument, according to local circumstances.

Using and documenting a rigorous process to establish objectives for international instruments – involving, for example, data collection, research and consultation with stakeholders likely to be affected by the implementation of the instrument – can help ensure objectives are coherent across different instruments of the same IO. It can also contribute to making the objective-setting process more transparent, potentially increasing the acceptance of and confidence in both the instruments themselves and the evaluation practices that later rely on these objectives.

The establishment of objectives needs to be part of the development process of IO normative instruments (see Chapter 1). Where possible, the process of objective-setting should be embedded within the larger practice of ex ante objective setting and impact assessment (OECD, 2020[3]).4

Before developing an instrument, IOs can typically consider alternative options for addressing the objectives that have been established, including the effects of inaction. They should collect the available evidence and solicit scientific expertise and stakeholder input in order to assess all potential costs and benefits (both direct and indirect) of implementing the proposed instrument. The results of this assessment can help to improve the design of the proposed instrument. IOs can also communicate these results openly (where possible) to increase trust and stakeholder buy-in in the international instruments or the IO’s evaluation culture more broadly.5

5. Promote the evaluation of sub-sets or the overall stock of instruments

IOs should consider evaluating their instruments on more than an individual basis. Evaluating a sub-set of instruments or the whole stock of international instruments can introduce greater strategic direction into the practices of IOs by providing a detailed overview of the range of instruments applied and lessons on which instruments work better than others (OECD, 2020[5]).

IOs can begin by analysing sets of instruments within a given sector, policy area, or initiative, and gradually expand to include wider ranges of instruments. This allows IOs to identify gaps in their portfolios where new international instruments may be needed, and to address overlaps or duplication between existing instruments.

6. Be transparent about evaluation processes and results

The open availability of information about evaluation processes and transparent dissemination of evaluation results are important to build trust and demonstrate that a given IO has a sound culture of evaluation and of accountability for its instruments.

Consultation of key stakeholders at each stage of the evaluation process greatly contributes to transparency and can also increase the credibility of evaluation results (see Chapter 4). Sharing the draft conclusions of evaluation exercises for comment may help the evaluating body to strengthen its evidence base.

Evaluation reports should be made available as broadly as possible, including within the IO, to IO members and possibly even the broader public (unless, for example, there are issues related to the protection or confidentiality of stakeholders). Establishing a repository of past evaluation results (for example on the IO website) offers a means to achieve this. Providing copies of evaluation reports directly to stakeholders who contributed to the evaluation process is also good practice (UNEG, 2014[6]).

7. Use the results of evaluations

Not only should the results of evaluations be used, but the IO should be able to show how they have been used by the IO, its respective governing bodies, its members or other stakeholders to:

Improve international instruments and/or their implementation, including closing regulatory gaps in the stock of instruments (see Chapter 1).

Identify follow-up actions related to other IO practices and items that need to be fed into the next cycle of IO decision-making.

Identify lessons-learned during the evaluation process that can improve the evaluation process itself (e.g. improving guidance documents, objective-setting processes or communication of results).

Advocate the value of international instruments (see Chapter 2).

State of play on evaluation of international instruments

Trends in evaluation practices by IOs

Uptake of evaluation practices

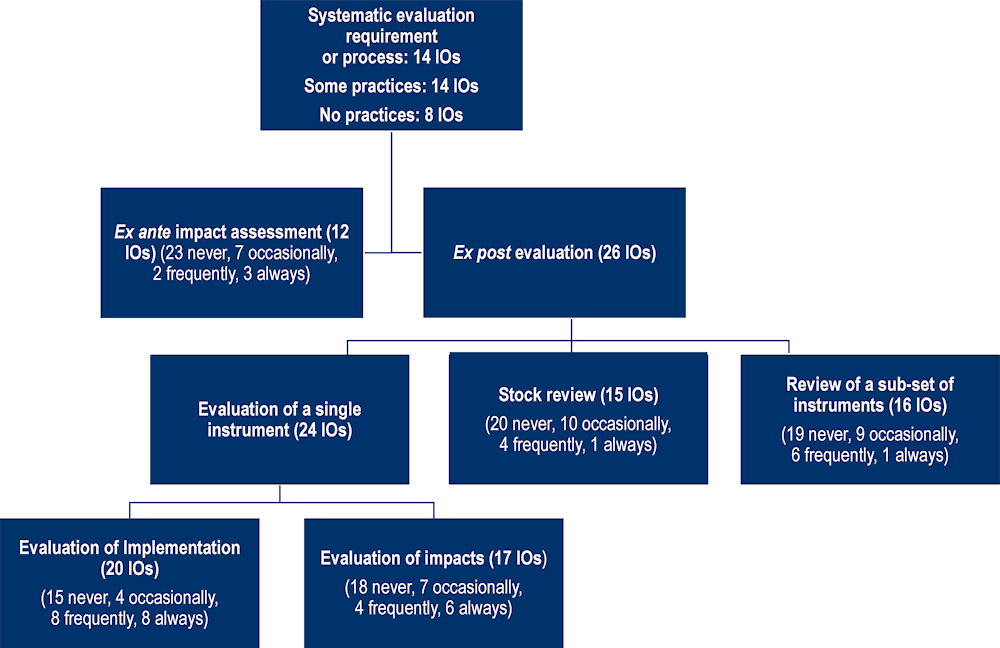

In comparison with the other practices described in this Compendium, evaluation is not as frequently used by IOs. Nevertheless, more and more IOs are taking up evaluation practices. In the 2018 Survey of IOs conducted by the OECD, the great majority of IOs (28 out of 36) reported having adopted some form of evaluation mechanism. Of these 28, 14 IOs reported having a systematic requirement to conduct evaluation. Only 8 IOs reported having no evaluation practices at all (Figure 3.2).

Figure 3.2. Typology and examples of normative evaluation mechanisms

Note: Number of IOs reporting such mechanisms out of 36 respondents.

Source: 2018 IO Survey.
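The shares implied by the survey counts quoted above can be recomputed with a short calculation. The following is a minimal illustrative sketch in Python: the three counts (36 respondents, 28 with some evaluation mechanism, 14 with a systematic requirement) come from the text, while the variable names and the `share` helper are assumptions introduced here for illustration only.

```python
# Counts quoted in the text from the 2018 IO Survey.
RESPONDENTS = 36       # IOs that responded to the survey
WITH_EVALUATION = 28   # IOs reporting some form of evaluation mechanism
SYSTEMATIC = 14        # IOs reporting a systematic evaluation requirement


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Hypothetical summary structure; the keys are illustrative labels.
summary = {
    "any_mechanism_pct": share(WITH_EVALUATION, RESPONDENTS),
    "systematic_among_evaluators_pct": share(SYSTEMATIC, WITH_EVALUATION),
    "systematic_among_all_pct": share(SYSTEMATIC, RESPONDENTS),
    "no_mechanism_count": RESPONDENTS - WITH_EVALUATION,
}

for label, value in summary.items():
    print(f"{label}: {value}")
```

Distinguishing the two denominators matters when reading the figures: a systematic requirement applies to half of the IOs that evaluate at all, but to a smaller share of all respondents.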

When taking a look at the different categories of IOs,6 it becomes clear that evaluation is most frequently conducted by IGOs with smaller, closed memberships or Secretariats of Conventions. This is likely to be a function of the formality of the instruments used (secretariats of conventions) and the practicality of conducting evaluations with smaller memberships (“closed” IGOs).

When engaging in evaluation, IOs most frequently focus on evaluating the use or implementation of international instruments, as opposed to their impacts (OECD, 2019[4]; OECD, 2016[1]). For example, a number of IOs, including OIML and ISO, report some sort of periodic review of the use or implementation of their instruments to decide whether these should be confirmed, revised, or withdrawn. One example of an IO that does evaluate impacts is the Secretariat of the Convention on Biological Diversity (CBD), which reported conducting mandatory reviews of the effectiveness of its instruments (the protocols). Despite the relatively low uptake of the practice, IOs and their constituencies acknowledge the need to review the impacts of instruments in order to assess their continued relevance and/or the need for their revision. This was a clear takeaway from the second meeting of international organisations7 and is reflected in the results of the 2018 Survey.

While all categories of instruments are evaluated, the results of the 2018 Survey show that instruments qualified as “standards” by the IOs are the type of instrument most frequently reviewed. International technical standards more specifically (e.g. ASTM International, IEC, ISO) undergo regular evaluation with the aim of ensuring quality, market relevance and that they reflect the current state-of-the-art. Often, evaluations of international technical standards take place on a systematic basis and with a set frequency (e.g. at least every 5 years). Whilst they are not as frequent, there are various examples of other types of instruments being evaluated, including conventions (e.g. the evaluation of all six Cultural Conventions by UNESCO), and even voluntary instruments (e.g. MOUs etc.).

Institutionalisation, governance of evaluation

According to the 2018 Survey, out of those who reported having adopted some form of evaluation mechanism, half reported having made evaluation a systematic requirement for their instruments. The other half does not have such a general requirement, i.e. only a subset of their instruments is subject to evaluation or evaluations are carried out only on an ad-hoc basis. There are different ways to embed evaluation requirements, including as clauses of specific instruments themselves, or in broader rules of procedure, guidelines, or terms of reference (see Chapter 1).

Whether or not there is an obligation to take action in response to the evaluation of international instruments often depends on the outcome of the evaluation itself. For example, if an instrument is ‘confirmed’, no action may be required. By contrast, if the evaluation results in a proposal to ‘revise’ or ‘withdraw’ the instrument, further action will be necessary. In some cases, IOs may only recommend action rather than impose it on their members.

The governance of evaluation processes is generally a shared responsibility between the IO Secretariat and members. Survey responses indicate that this shared responsibility is systematic for some IOs, while for others it is decided for each instrument on an ad-hoc basis.

As for the entity in charge of the evaluation, in some IOs the technical committees responsible for the development of the instrument are also in charge of its evaluation. This is mainly the case for standardisation organisations. Other IOs have a permanent standing body or unit dedicated to the evaluation of instruments, including a governance or global policy unit, or the department which has developed the instrument. Other less frequent forms of evaluation governance include ad-hoc working groups, and governing boards or presidential councils that assume evaluation responsibilities. Only in very few cases is an external body contracted to conduct the evaluation.

Box 3.1. Examples of systematic evaluation carried out by IOs

The OECD systematically embeds clauses within its legal instruments requesting the responsible body/bodies to support the implementation, dissemination and continued relevance of the instrument, including a five-year report to the governing board (“OECD Council”). These clauses frequently instruct the responsible body to consult with other relevant bodies (within and beyond the OECD) and serve as a forum to exchange information and assess progress against benchmark indicators. The reporting to the governing board is intended to evaluate the usefulness of the instrument rather than compliance by individual adherents (OECD, 2014[7]).

The ILO Standards Review Mechanism (SRM) (ILO, 2011[8]) was established to ensure that the organisation has a clear, robust and up-to-date body of international labour standards. The Standards Review Mechanism Tripartite Working Group (SRM TWG) assesses and issues recommendations on a) the status of the standards examined, including up-to-date standards, standards in need of revision, outdated standards, and possible other classifications; b) the identification of gaps in coverage, including those requiring new standards; c) practical and time-bound follow-up action, as appropriate.

Source: Author’s elaboration of 2018 IO survey responses.

Type of evaluation (ex ante and ex post evaluation; single instrument/sub-set/stock review) and tool (manual/online survey, analytical tool)

There is a notable tendency for IOs to evaluate their instruments ex post, rather than ex ante (Figure 3.2). In the 2018 Survey, only 12 IOs indicated that they conduct ex ante impact assessment and, of these, most do not do so on a regular basis (only three IOs reported that they always perform an ex ante evaluation, and two reported that they do so frequently). When ex post evaluation is carried out, it is generally for a single instrument rather than for the overall stock (OECD, 2019[4]). Many ex post review processes of IOs are time-bound, with provisions often mandating a review process five years after implementation.

Box 3.2. Examples of ex ante evaluation modalities applied by IOs

The Structure of the ILAC Mutual Recognition Arrangement and Procedure for Expansion of the Scope of the ILAC Arrangement (ILAC – R6:05/2019) (ILAC, 2019[9]) establishes a framework for the inclusion of conformity assessment activities, normative documents, and sectoral publications within the scope of the organisation’s core instrument, the ILAC MRA. This includes several key steps, beginning with a proposal for expansion, its review and approval, its practical development, and its final launch. The proposal for expansion can come from conformity assessment body (CAB) associations, industry, and professional associations; regulators; recognised regions; and individual accreditation bodies. The review involves an interrogation of the background evidence on the need for a new area, an assessment of the implications of a lack of inclusion, and the gathering of views and conduct of consultations with affected parties. This signifies a comprehensive approach to international rulemaking, which combines and capitalises on the overlapping strengths of instrument development (WG1), stakeholder engagement (WG3), and evaluation (WG4), while strengthening the interface between ILAC and its members.

Box 3.3. Examples of ex ante evaluation modalities applied by IOs (cont’)

Under the Inter-institutional Agreement on Better Law-Making in the European Union (European Commission, 2016[10]), the European Commission (EC) requires that regulatory impact assessment (RIA) be conducted for regulatory proposals with significant economic, environmental or social impacts on the EU. The Commission publishes an inception impact assessment (IIA) that outlines the policy problem and a preliminary assessment of the anticipated impacts. Following public consultation on the IIA, the Commission undertakes a full RIA including data collection and evidence gathering, as well as public consultations. RIAs are subject to scrutiny by the Regulatory Scrutiny Board, after which regulatory proposals may need to be revised. In terms of EU-made laws, RIA is much more likely to be required when transposing EU directives than it is to form the basis of individual EU Member States’ negotiating position. Where Member States do not have formal requirements to conduct RIA at the negotiating phase, they do rely on the European Commission’s impact assessments, albeit not systematically.

The International Maritime Organisation (IMO) occasionally conducts Ex ante Impact Analysis (OECD, 2014[7]) of its regulations, particularly for controversial or complex issues on which divergent views exist. The analysis of the probable and potential effects of regulations, as well as their extent, serves to enhance the legitimacy of proposals and forge agreement in difficult areas. For example, the organisation has issued a variety of feasibility studies and impact assessments related to market-based mechanisms to reduce greenhouse gas emissions from shipping. The examinations of the Energy Efficiency Design Index (EEDI) and the Ship Energy Efficiency Management Plan (SEEMP) in advance of their adoption estimated that, under high uptake scenarios, these instruments would both reduce global emissions below the status quo by an average of 330 million tonnes (40%) annually by 2030, and increase savings in the shipping industry by USD 310 billion annually. This highlights an intersection between various areas of international rulemaking, as ex ante impact assessment can function simultaneously as a core element in the development of instruments, an advocacy and compliance mechanism, and a form of evaluation (see Chapters 1 and 2).

Pursuant to the Convention Establishing an International Organisation of Legal Metrology (OIML, 1955[11]), the OIML scrutinises a number of critical factors before any new project is submitted for approval, including the development of new international standards. The range of factors subject to review includes the rationale for an OIML publication in the proposed subject area, the envisaged scope of the proposed publication, the reasons for regulating this category of instrument, a selection of details regarding the countries that already regulate this category of instrument or have expressed an intention to regulate it, a description of similar work underway in other organisations (if applicable), and details of potential liaison organisations. In the final two instances, the OIML demonstrates an integrated approach to evaluation and co-ordination (see Chapters 3 and 5). These horizon-scanning activities can expand the field of evidence and expertise contributing to the development of new instruments, underpin effective resource allocation, and minimise unnecessary duplication. These factors and others are reviewed prior to submitting the project for approval by Member States.

Source: (OECD, 2019[12]); (OECD, 2014[7]); Author’s elaboration of the 2018 IO survey responses and inputs received from IOs.

Among those international organisations which routinely conduct evaluation, the analysis of individual instruments represents the most common type. According to the 2018 Survey results, two in three IOs evaluate the use, implementation or impact of single instruments. Within this group, international private standard-setting organisations stand out for the consistency with which evaluation is applied, its scope and format, and its embeddedness within the development of instruments (Box 3.4, see Chapter 1). The uniformity of this practice illustrates a mutual learning process across IOs, and opens new spaces for co-ordination (see Chapter 5). Beyond the OECD, these forms of evaluation are not replicated by IGOs. However, there is no necessary institutional reason for this, and IGOs could consider integrating similar minimum review requirements into their instruments.

Box 3.4. Systematic review of international technical standards: a replicable model?

Under the ASTM Review of Standards Procedure (ASTM International, 2020[13]), each standard is reviewed in its entirety by the responsible subcommittee and balloted for re-approval, revision, or withdrawal within five years of its last approval date. If a standard fails to receive a new approval date by December 31 of the eighth year since the last approval date, the standard is withdrawn.
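
As an illustration only, the ASTM timetable described above can be expressed as a simple date calculation. The short Python sketch below is not an ASTM tool: the function names are hypothetical, and it assumes that "the eighth year since the last approval date" means the calendar year of approval plus eight, which is only one possible reading of the rule.

```python
from datetime import date


def add_years(d: date, years: int) -> date:
    """Return the same calendar day `years` later (29 Feb falls back to 28 Feb)."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        # Only reached for 29 February when the target year is not a leap year.
        return d.replace(year=d.year + years, day=28)


def astm_review_dates(last_approval: date):
    """Hypothetical helper: return (review due date, withdrawal deadline)."""
    # Ballot for re-approval, revision or withdrawal within five years.
    review_due = add_years(last_approval, 5)
    # Assumption: "eighth year" is read as approval year + 8.
    withdrawal_deadline = date(last_approval.year + 8, 12, 31)
    return review_due, withdrawal_deadline


def astm_status(last_approval: date, today: date) -> str:
    """Classify a standard that has received no new approval date since `last_approval`."""
    review_due, withdrawal_deadline = astm_review_dates(last_approval)
    if today > withdrawal_deadline:
        return "withdrawn"
    if today > review_due:
        return "review overdue"
    return "current"
```

Under these assumptions, a standard last approved on 10 March 2015 would have a review due date of 10 March 2020 and a withdrawal deadline of 31 December 2023.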

All IEC International Standards are subject to a review process, under Maintenance of Deliverables procedures set out in the ISO/IEC Directives Part 1 + IEC Supplement (ISO/IEC, 2018[14]). These support and frame the review of all IEC publications for their acceptable usage, and determine whether revisions or amendments are required. The Maintenance of Deliverables reviews are governed by a set of indicators, which establish the relevance and coverage of the standard under analysis. These include the degree of adoption or future adoption of the publication as a national standard, its use by National Committees (NCs) without national adoption or for products manufactured or used based on the publication, reference to the publication or its national adoption in regulation, and IEC sales statistics.

Through the Systematic Review (SR) (ISO, 2019[15]) process, ISO determines whether its standards are current and are used internationally. Each standard is reviewed at least every 5 years – the committee responsible for the standard can launch a review sooner than 5 years, if it feels this is necessary. If no action is taken by the committee, an SR ballot is automatically launched at the 5-year mark. The ballot is sent to all members of ISO and contains a series of questions related to the use of the standard in their country, its national adoption or use in regulations, and its technical soundness. This requires national standards bodies to review the document to decide whether it is still valid, needs updating, or should be withdrawn. ISO has issued dedicated guidance to support this Systematic Review process. Beyond the core function of ensuring that the body of ISO standards remains relevant and fit-for-purpose, SR serves a data collection function. Part of the information received from SR is integrated within the “ISO/IEC national adoptions database”, which provides information on which standards have been nationally adopted, whether in identical or modified form, and the national reference numbers. This provides a vital resource through which to monitor the global uptake of standards.

Under the Recommendation Review process (OECD; OIML, 2016[16]), OIML recommendations and documents are reviewed every five years after their publication, to decide whether they should be confirmed, revised or withdrawn. The priority for the periodic review of OIML publications is defined by the Presidential Council and the OIML secretariat in consultation with the OIML-CS Management Committee, and approved by the International Committee of Legal Metrology (CIML). High priority publications shall be subject to a periodic review every two years.

Source: (OECD, 2021[17]); Author’s elaboration of IO practice templates and subsequent inputs from IOs.

The evaluation of individual instruments also occasionally extends to the core and/or founding instruments of an international organisation. The rationale for undertaking such an evaluation is clear. As these instruments frame, establish the basis for, and encompass an expansive range of international rulemaking activities undertaken by the IO in question, knowledge that these instruments are fit-for-purpose is vital to lending credibility and legitimacy to the organisation, demonstrating the effectiveness of its efforts, and making the case for the wider adoption of its instruments. In addition to the practices included in Box 3.5, this form of evaluation would equally apply to the ILO SRM (Box 3.1); the OECD SSR, IUCN’s review of Resolutions, and UNESCO’s Evaluation of its Culture Conventions (Box 3.6); and the standards review procedures outlined in Box 3.4.

Box 3.5. Reviews of core international instruments

After more than 15 years of operation, a Review of the CIPM MRA (BIPM, 2016[18]), one of the core instruments of the BIPM, established views on what was working well and what needed to be improved regarding its implementation. The primary objective of the evaluation was to ensure that the “Mutual Recognition Arrangement (MRA) of the International Committee for Weights and Measures (CIPM) of national measurement standards and of calibration and measurement certificates issued by national metrology institutes (CIPM MRA)” remained fit-for-purpose, and to ensure its sustainability into the future. This generated a variety of Recommendations from the Working Group on the Implementation and Operation of the CIPM MRA, including with respect to managing the level of participation in key comparisons (KCs), improving the visibility of services provided by Calibration and Measurement Capabilities (CMCs) in the BIPM key comparison database (KCDB), constraining the proliferation of CMCs, improving the efficiency of review processes, encouraging and enabling states with developing metrology systems to become signatories and fully participate in the MRA, enhancing the governance of this instrument, and ensuring the effective and timely implementation of the Recommendations themselves.

The ILAC Mutual Recognition Agreement (ILAC MRA) (ILAC, 2015[19]) is a multilateral agreement that offers coherence across regional recognition agreements and arrangements, and can be seen as enabling evaluation of ILAC decisions on accreditation. The ILAC MRA links the existing regional MRAs/MLAs of the Recognised Regional Cooperation Bodies. For the purposes of the ILAC MRA, and based on ILAC’s evaluation and recognition of the regional MRAs/MLAs, ILAC delegates authority to its Recognised Regional Cooperation Bodies for the evaluation, surveillance, re-evaluation and associated decision making relating to the signatory status of the accreditation bodies that are ILAC Full Members. The accreditation bodies that are signatories to the ILAC MRA have been peer evaluated in accordance with the requirements of ISO/IEC 17011 – Conformity Assessment Requirements to demonstrate their competence. The ILAC MRA signatories then assess and accredit conformity assessment bodies according to the relevant international standards, including calibration laboratories (using ISO/IEC 17025), testing laboratories (using ISO/IEC 17025), medical testing laboratories (using ISO 15189), inspection bodies (using ISO/IEC 17020), proficiency testing providers (using ISO/IEC 17043) and reference material producers (using ISO 17034). The integration of ISO/IEC 17011 into the peer review processes of ILAC represents an intersection between the evaluation (WG4) and co-ordination (WG5) aspects of international rulemaking. This may be expected to bring greater consistency into peer review procedures. The assessment and accreditation of conformity assessment bodies through the prism of dedicated international standards in various sectors (ISO/IEC 17025, ISO 15189, ISO/IEC 17020, ISO/IEC 17043, ISO 17034) provide further instances of this phenomenon, and illustrate the complementarity between ISO, ILAC and IEC more broadly.

Source: Author’s elaboration based on IO practice templates and subsequent inputs from IOs.

Some IOs have demonstrated an organisation-wide ambition to assess the effects and relevance of their instruments and embark on broader evaluation efforts. The 2015 and 2018 IO Surveys showed that stock reviews were not as frequent as ex post evaluations of individual instruments. Nevertheless, some initiatives have emerged in recent years, with several organisations conducting broad reviews of their sets of instruments (Box 3.6).

Box 3.6. Examples of reviews of the overall stock or a thematic sub-set of instruments by IOs

The IMO Ad Hoc Steering Group for Reducing Administrative Requirements (SG-RAR) (OECD, 2014[7]) was established in 2011 to conduct a comprehensive review of the administrative requirements contained in the mandatory instruments of the organisation, with a view to issuing recommendations on how the burdens imposed by these requirements could be reduced. This involved the creation of an Inventory of Administrative Requirements, which identified 560 administrative requirements, and the conduct of an extensive public consultation process.

From 2013 to 2019, the United Nations Educational, Scientific and Cultural Organization (UNESCO) conducted a series of evaluations of all six of its Culture Conventions, within an overall Evaluation of UNESCO’s Standard-Setting Work of the Culture Sector (UNESCO, 2019[20]). The primary objective of the evaluations was to generate findings and recommendations regarding the relevance and the effectiveness of standard-setting work, with a focus on its impact on the legislation, policies, and strategies of Parties to the conventions. The evaluations of the Culture Conventions specifically assessed the contribution of UNESCO’s standard-setting work, which is designed to support Member States with: i) the ratification (or accession / acceptance / approval) of the Conventions; ii) the integration of the provisions of the Conventions into national / regional legislation, policy and strategy (policy development level); and iii) the implementation of the legislation, policies and strategies at national level (policy implementation level). All evaluations of UNESCO’s standard-setting work were requested by Management and included in the Evaluation Office’s evaluation plans that are published annually in the Internal Oversight Service Annual Reports. In terms of results, the evaluations have led to the development of results frameworks for the 2003 and 2005 Conventions, revised periodic reporting systems (1970, 2003, 2005 Conventions), resource mobilisation strategies for the instruments, improvements in the respective Conventions’ capacity development programmes and communication initiatives, and changes to the working methods of the Conventions’ Secretariats.

In 2018, the IUCN published a review of the Impact of IUCN Resolutions on International Conservation Efforts (IUCN, 2018[21]). The central objective of this paper is to highlight some of the major impacts and influences a particular sub-set of IUCN instruments – its 1305 adopted Resolutions – have had on conservation. According to the report, these occur across four key areas. First, Resolutions have served to set the global conservation agenda. Under the auspices of the World Conservation Strategy, developed in Christchurch, New Zealand in 1980, these instruments have framed national conservation efforts, promoted co-operation between public, private, and rural sectors, and underpinned a range of international programmes. Second, Resolutions have supported the development of international conservation law, in particular through the establishment of landmark instruments (and international organisations in their own right) such as the Convention on International Trade in Endangered Species (CITES) and the Convention on Biological Diversity (CBD). Third, these instruments have facilitated the identification of emerging issues in conservation, by phasing out a focus on individual species and promoting ecosystem management, addressing the intersections between energy and conservation and between trade and the environment, calling for the conservation of marine resources, recognising the growing importance of the private sector in both contributing to and addressing environmental issues, and encouraging the protection of mangroves. Fourth, the Resolutions have supported specific actions on species and protected areas. Primary among these are the introduction of quotas on species affected by trade, promoting the sustainable use of wild living resources, and driving the establishment of a United Nations List of National Parks and Equivalent Reserves.

In 2016, the OECD Secretary-General launched an organisation-wide Standard-Setting Review (SSR) C/MIN(2019)13 (OECD, 2019[22]). During its first phase, the SSR involved a comprehensive assessment by OECD substantive committees of the overall stock of OECD legal instruments and led to the abrogation of a first set of 32 outdated legal instruments. Now in the second phase of the SSR, substantive committees are implementing their respective standard-setting Action Plans (i.e. the review, revision or monitoring of implementation of 134 legal instruments), and considering proposals for additional abrogations or the development of new instruments in some areas.

Currently under development by a dedicated working group, the World Customs Organization (WCO) Performance Measurement Mechanism (PMM) (WCO, 2020[23]) aims to provide an evaluation tool that applies a set of quantitative and qualitative key performance indicators (KPIs) across all customs competences (revenue collection, trade facilitation and economic competitiveness, enforcement, security and protection of society, etc.). The tool is intended both to assess and improve organisational efficiency and effectiveness and to reflect the extent to which the relevant WCO tools and Conventions are applied in support of revenue mobilisation and the safe and smooth movement of people and goods. The PMM will also set out to measure the performance of customs administrations, with the objective of strengthening a measurement culture across the WCO membership and supporting the development of metrics for strategic planning and evidence-based decision-making. To facilitate the effective development of the mechanism, consultations are envisaged with other interested stakeholders, including the Private Sector Consultative Group (PSCG), academia and partner international organisations. Taking advantage of the WCO’s participation in the work of the IO Partnership, the design of the prospective WCO PMM adopts the evaluation principles of progression and proportion presented in this Compendium and aims to build up an evaluation framework that connects Members’ implementation, awareness and use of the main WCO tools to the relevant organisational performance dimensions of the WCO constituency.

Source: Author’s elaboration of the IO practice templates.

Criteria and indicators to be used for evaluation (qualitative/quantitative)

There is no common or frequently used methodology employed by IOs to conduct evaluations. The methodologies to evaluate impacts remain nascent and specific to each IO, and often vary according to the different kinds of instruments within the IO (Box 3.7) (OECD, 2016[1]).

Box 3.7. Lessons from evaluation methodologies applied by IOs

CITES: Resolution Conf. 14.8 – Periodic Review of Species (CITES, 2011[24]) sets out a detailed methodological framework for the conduct of periodic evaluations. The process involves the establishment of a dedicated schedule of operation, the selection of a practical sub-set of CITES-listed species of flora or fauna for analysis, and the appointment of qualified consultants to undertake the review. The outputs must include a summary of trade data; the current conservation status of the subject matter, including the IUCN category of the species; the current listing in the CITES Appendices; and the distribution of species across states. The review results can underpin the production of Recommendations for changes in species status.

IAF: in conducting its evaluations, the International Accreditation Forum (IAF) applies ISO/IEC 17011 – Conformity Assessment Requirements (ISO/IEC, 2017[25]) and ISO 19011 – Guidelines for Auditing Management Systems (ISO, 2018[26]). These provide dedicated guidance for assessing and accrediting conformity assessment bodies in the first instance, and on the principles, management, and competence evaluation of auditing management systems in the second.

ISO: every June, the Technical Management Board (TMB) (ISO, 1986[27]) reviews all the statistics related to the standards development process, which includes those related to standards reviews (for example, assessing the response rate and percentage of responses sent on time). If any issues are identified, then the whole process, or parts of it, may be reviewed further by the TMB or the Directives Maintenance Team (DMT) – the DMT is the group that is responsible for the ISO Directives and Supplement (the rules for the standards development process). The DMT can propose changes to the rules, which must then be approved by the TMB.

OTIF: the Draft Decision on the Monitoring and Assessment of Legal Instruments (OTIF, 2019[28]), OTIF’s forthcoming evaluation procedure, establishes a three-year timeframe beyond which outcomes and impacts of its instruments can be meaningfully monitored and assessed, instructs members to ensure the comprehensiveness and timeliness of the information required to conduct effective evaluations, and sets out the data sources that could be included within the scope of an evaluation (reports, academic literature, case law, surveys of Member States, regional organisations and/or stakeholders). The various categories of ‘relevant stakeholders’ are also explicitly outlined, and evaluators are encouraged to seek their experience, expertise and perspectives as a primary source of information on the practical application of its regulations.

WCO: the upcoming WCO Performance Measurement Mechanism (PMM) (WCO, 2020[23]) will be marked by the application of a set of quantitative and qualitative key performance indicators (KPIs) to examine four performance dimensions covering the full range of customs competences (revenue collection, trade facilitation and economic competitiveness, enforcement, security and protection of society, organisational development) and the application of the relevant main WCO instruments and tools. The PMM will also be positioned with respect to global impact, by mapping the list of its expected outcomes against the Sustainable Development Goals (SDGs), in order to ensure greater clarity as to Customs’ role in contributing to a sustainable future through its performance.

Of the IOs responding to the 2018 Survey, 13 reported having written guidance on evaluation. Some IOs have developed their own internal guidelines or evaluation policy, while others reported that they used the Handbook by the United Nations Evaluation Group (UNEG), an inter-agency group that brings together the evaluation units of the UN system (UNEG, 2014[6]). Other sources of written guidance on evaluation include the UNEG document library (which now includes guidelines for evaluation under COVID-19) and the Inspection and Evaluation Manual of the United Nations Office of Internal Oversight Services Inspection and Evaluation Division (OIOS-IED) (UNEG, 2021[29]); (UNEG, 2020[30]); (OIOS-IED, 2014[31]).

In the exceptional cases in which IOs conduct an ex ante assessment before developing an international instrument, this typically takes the form of a list of questions and factors that are systematically put to the rulemaking body. The results can be submitted for members’ approval prior to embarking on the rulemaking process.

Core challenges to the effective evaluation of international instruments

The evaluation of international instruments is still far from systematic across IOs, largely because they face a number of common challenges to evaluation. For one thing, the subject-matter – evaluation of normative activity itself – is recognised as being extremely difficult. Moreover, there are challenges related to resources, co-operation with constituents, organisational culture and the ability to use the results of evaluation, as well as specific challenges regarding the evaluation of impact and the type and the age of the instrument in question.

One of the most prevalent challenges is the resource intensiveness of evaluations. This constraint applies across IOs, but weighs particularly on IOs with smaller secretariats and limited resources. Resource intensiveness is a challenge in terms of both quantitative resources (time and money) and qualitative resources (expertise). Expertise can be very expensive to obtain if it is not available in-house, and a cost-benefit analysis may be needed to determine whether the evaluation is justified (OECD, 2016[1]).

Another set of difficulties in evaluating instruments stems from the respective roles of IOs and their constituencies. IOs typically need to have a mandate from their members to engage in evaluation activity, but this is not necessarily straightforward. This arises from both the dynamics between governing bodies and members and the heterogeneity of members’ interests. Firstly, the relevant governing bodies of the instrument need to have a key role in the evaluation, particularly to ensure follow-up to the recommendations. Nevertheless, it can be difficult to achieve consensus among governing bodies and IO members on precisely what should be evaluated, the depth of the evaluation and the development of specific recommendations. Secondly, the heterogeneity of IO members can mean that they have very different needs and capabilities and, consequently, very different objectives. For example, the engagement by different Member States and Associates in the CIPM Mutual Recognition Arrangement (Box 3.5) varies significantly due to the different needs of their economies and highly divergent scientific and technical capabilities. Countries with advanced metrology systems prefer to focus on the higher-level capabilities, while economies with emerging metrology systems focus on the provision of more basic services. These challenges can sometimes be overcome by conducting broad-based consultations and using an iterative process, leading to a consensus built around broad, common objectives.

The increased evaluation of international instruments also faces some challenges of organisational culture. IOs may be reluctant to ‘lift the lid’ on instruments for fear that the results of evaluation will not be positive and that negative findings will then have to be published. This is a sensitive issue, but it could be turned into a positive message on the value of evaluation if there is better awareness of the benefits that evaluation can bring both for IOs and their members, and particularly if IOs demonstrate improvements in the quality and effectiveness of their instruments following an initially disappointing or negative assessment. To help minimise these challenges, IOs have much to gain from ensuring that they get the messaging around evaluation right. This involves promoting evaluation as an assessment of the effectiveness of instruments, as opposed to a review and comparison of their members’ performance in relation to those instruments.

IOs can also face challenges when it comes to using the results of evaluations. Even if the evaluation itself can be conducted, this does not necessarily mean its benefits will be fully realised. For example, if new technologies are needed in order to implement recommendations following an evaluation, the appropriate infrastructure or necessary resources may not be available.

Regarding evaluation of impact, there are significant methodological difficulties associated with measuring and assessing the effects of international normative activity given the potentially diffuse scope of application and the problem of establishing causality (attributing specific effects to international instruments). International instruments often lack assessment measures which allow for the measurement of both quantitative and qualitative data, thereby limiting understanding of the full breadth and complexity of their achievements (or reasons for their absence). Gathering the data required to evaluate impact can be particularly difficult for IO Secretariats because this information is mostly held by their members. Even in cases where there is willingness to share this information, there may be practical impediments.

Considering the different types of instruments, evaluation can be more challenging for voluntary instruments than for those which are mandatory (see Chapter 1). Voluntary instruments tend to be more flexible and there may be little homogeneity in terms of how they are implemented.

Depending on the age of the instrument, it can also be challenging for an evaluation to account for both implementation and impact. This is particularly relevant for recent instruments, which may still be working towards adequate levels of ratification. To address this, evaluations need to consider the maturity of the instrument(s) in question and set realistic objectives from the outset.

Forward-looking practices that can help overcome IOs’ evaluation challenges

Although the evaluation of international instruments remains relatively scarce, the increase in evaluation efforts is progressively improving the knowledge base and understanding of the implementation and impacts of international instruments. With this growing experience and the emergence of new information technology tools, IOs can unlock new opportunities to gather and process large quantities of data and information about international instruments and share them more fluidly among interested parties – whether between countries and IO secretariats or among IOs themselves, to leverage common information sources (see Chapter 5).

Box 3.8. Evaluation experience among IOs and the use of digital tools in support of evaluation

According to UNESCO, the first of its Evaluations of UNESCO’s Standard-setting Work of the Culture Sector (UNESCO, 2013[32]) functioned as a de facto pilot phase with respect to the evaluation of normative instruments. These evaluations were generated in parallel with, and informed by, the UN Evaluation Group (UNEG) Handbook for Conducting Evaluations of Normative Work in the UN System (UNEG, 2013[33]). This provides a comprehensive framework for evaluation underpinned by the accumulated experiences of evaluators across the UN network of agencies, and contains detailed guidance on determining the evaluability of normative work, preparing the evaluation (including stakeholder identification, overall purpose, and criteria and indicators), data collection and analysis, reporting, and follow-up activities. More broadly, the lessons emerging from the initial evaluation were fed into subsequent assessments, through living practice as well as the implementation of reforms to the Terms of Reference governing UNESCO’s evaluation practice.

The Food and Agriculture Organization (FAO) has developed and actively maintains the FAOLEX Database (FAO, 2020[34]) which includes national legislation, policies and bilateral agreements on food, agriculture and natural resource management. This provides the foundation for a thematic stocktaking under the scope of the FAO’s mandate, and is practically administered by the Development Law Service (LEGN) of the FAO Legal Office. The key outputs of FAOLEX include a comprehensive database of legal and policy documents drawn from more than 200 countries, territories, and economic integration organisations (with 8 000 new entries per year), thematic datasets organised by subject matter, and country profiles containing an overview of national policies, legislation and international agreements in force. This provides a practical illustration of the link between monitoring (WG2) and evaluation (WG4), as the ability to undertake the latter is contingent on the quality and expansiveness of the former. Moreover, it demonstrates the enabling potential of emerging technologies, the use of which reduces the administrative, economic, and informational barriers to conducting effective evaluations.

Source: (OECD, 2020[35]); Author’s elaboration of IO practice templates.

National regulators gather important information via domestic ex ante and ex post evaluation, which can fill the information gaps faced by IO secretariats on the impacts of their instruments. A 2017 survey of the OECD Regulatory Policy Committee (RPC) indicates that a third of member countries review the implementation of international instruments to which they adhere. Of those, six share the results of these evaluations with the relevant IOs (OECD, 2018[36]). In the 2018 IO Survey, 12 IOs indicated that they occasionally take into consideration the results of national evaluation of international instruments transposed into domestic legislation.

References

[13] ASTM International (2020), ASTM Regulations Governing Technical Committees | Green Book, https://www.astm.org/Regulations.html#s10.

[18] BIPM (2016), Recommendations from the Working Group on the Implementation and Operation of the CIPM MRA, https://www.bipm.org/utils/common/documents/CIPM-MRA-review/Recommendations-from-the-WG.pdf.

[24] CITES (2011), Resolution Conf. 14.8 - Periodic Review of Species included in Appendices I and II, https://cites.org/sites/default/files/document/E-Res-14-08-R17_0.pdf.

[10] European Commission (2016), Inter-Institutional Agreement on Better Law-Making in the European Union, https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32016Q0512%2801%29.

[34] FAO (2020), FAOLEX Database, http://www.fao.org/faolex/en/.

[9] ILAC (2019), Structure of the ILAC Mutual Recognition Arrangement and Procedure for Expansion of the Scope of the ILAC Arrangement (ILAC - R6:05/2019), https://ilac.org/?ddownload=122555.

[19] ILAC (2015), ILAC Mutual Recognition Agreement (ILAC MRA), https://ilac.org/ilac-mra-and-signatories/.

[8] ILO (2011), Standards Review Mechanism (SRM), https://www.ilo.org/global/about-the-ilo/how-the-ilo-works/departments-and-offices/jur/legal-instruments/WCMS_712639/lang--en/index.htm.

[15] ISO (2019), Guidance on the Systematic Review Process, https://www.iso.org/files/live/sites/isoorg/files/store/en/PUB100413.pdf.

[26] ISO (2018), ISO 19011:2018 Guidelines for Auditing Management Systems, https://www.iso.org/standard/70017.html.

[27] ISO (1986), ISO Technical Management Board (ISO/TMB), https://www.iso.org/committee/4882545.html/.

[14] ISO/IEC (2018), ISO/IEC Directives Part 1 + IEC Supplement, https://www.iec.ch/members_experts/refdocs/iec/isoiecdir1-consolidatedIECsup%7Bed14.0%7Den.pdf.

[25] ISO/IEC (2017), ISO/IEC 17011:2017 Conformity Assessment — Requirements for Accreditation Bodies Accrediting Conformity Assessment Bodies, https://www.iso.org/standard/67198.html.

[21] IUCN (2018), Impact of IUCN Resolutions on International Conservation Efforts, https://portals.iucn.org/library/node/47226.

[17] OECD (2021), International Regulatory Cooperation and International Organisations: The Case of ASTM International, OECD Publishing.

[5] OECD (2020), OECD Best Practice Principles for Reviewing the Stock of Regulation, OECD Publishing, Paris, https://www.oecd.org/gov/regulatory-policy/reviewing-the-stock-of-regulation-1a8f33bc-en.htm (accessed on 25 March 2021).

[35] OECD (2020), OECD Study on the World Organisation for Animal Health (OIE) Observatory: Strengthening the Implementation of International Standards, OECD Publishing, Paris, https://dx.doi.org/10.1787/c88edbcd-en.

[3] OECD (2020), Regulatory Impact Assessment, OECD Best Practice Principles for Regulatory Policy, OECD Publishing, Paris, https://dx.doi.org/10.1787/7a9638cb-en.

[12] OECD (2019), Better Regulation Practices across the European Union, OECD Publishing, Paris, https://dx.doi.org/10.1787/9789264311732-en.

[22] OECD (2019), OECD Standard-Setting Review (SSR), OECD Publishing, Paris, https://one.oecd.org/document/C/MIN(2019)13/en.

[4] OECD (2019), The Contribution of International Organisations to a Rule-Based International System: Key Results from the Partnership of International Organisations for Effective Rulemaking, https://www.oecd.org/gov/regulatory-policy/IO-Rule-Based%20System.pdf.

[36] OECD (2018), OECD Regulatory Policy Outlook 2018, OECD Publishing, Paris, https://dx.doi.org/10.1787/9789264303072-en.

[1] OECD (2016), International Regulatory Co-operation: The Role of International Organisations in Fostering Better Rules of Globalisation, OECD Publishing, Paris, https://dx.doi.org/10.1787/9789264244047-en.

[7] OECD (2014), International Regulatory Co-operation and International Organisations: the Cases of the OECD and the IMO, OECD Publishing, Paris, https://dx.doi.org/10.1787/9789264225756-en.

[16] OECD/OIML (2016), International Regulatory Co-operation and International Organisations: The Case of the International Organization of Legal Metrology (OIML).

[11] OIML (1955), Convention Establishing an International Organisation in Legal Metrology, https://www.oiml.org/en/files/pdf_b/b001-e68.pdf.

[31] OIOS-IED (2014), OIOS-IED Inspection and Evaluation Manual, https://oios.un.org/sites/oios.un.org/files/images/oios-ied_manual.pdf (accessed on 26 March 2021).

[28] OTIF (2019), Explanatory Note on the Draft Decision on the Monitoring and Assessment of Legal Instruments, http://otif.org/fileadmin/new/2-Activities/2G-WGLE/2Ga_WorkingDocWGLE/2020/LAW-19054-GTEJ2-fde-Explanatory-Notes-on-the-Draft-Decision-text-as-endorsed.pdf.

[2] Parker, D. and C. Kirkpatrick (2012), The Economic Impact of Regulatory Policy: A Literature Review of Quantitative Evidence, OECD Publishing, Paris, http://www.oecd.org/regreform/measuringperformance (accessed on 21 October 2020).

[29] UNEG (2021), UNEG Document Library, http://uneval.org/document/library (accessed on 26 March 2021).

[30] UNEG (2020), Detail of Synthesis of Guidelines for UN Evaluation under COVID-19, http://uneval.org/document/detail/2863 (accessed on 26 March 2021).

[6] UNEG (2014), UNEG Handbook for Conducting Evaluations of Normative Work in the UN System, http://www.uneval.org/document/detail/1484 (accessed on 4 December 2020).

[33] UNEG (2013), UNEG Handbook for Conducting Evaluations of Normative Work in the UN System, http://www.uneval.org/document/detail/1484.

[20] UNESCO (2019), Evaluation of UNESCO’s Standard-Setting Work in the Culture Sector, https://unesdoc.unesco.org/ark:/48223/pf0000223095.

[32] UNESCO (2013), Evaluation of UNESCO’s Standard-setting Work of the Culture Sector, Part I: 2003 Convention for the Safeguarding of the Intangible Cultural Heritage, https://unesdoc.unesco.org/ark:/48223/pf0000223095.

[23] WCO (2020), WCO Performance Measurement Mechanism (PMM), http://www.wcoomd.org/zh-cn/wco-working-bodies/capacity_building/working-group-on-performance-measurement.aspx.

Notes

1. Some IOs may distinguish between ‘internal evaluations’ (where the evaluation is carried out by a dedicated, independent evaluation unit that is part of the IO) and ‘self-evaluations’ (where the evaluation is carried out directly by the unit responsible for the instrument for its own purposes). In both cases, external consultants/specialists may be contracted to assist, but the evaluation is still driven internally by the IO. This chapter does not distinguish between ‘internal’ and ‘self-evaluation’; both are relevant here.

2. UNEG Handbook for conducting evaluations of normative work in the UN system (2014), www.uneval.org/document/detail/1484.

3. For example, the OECD DAC Better Criteria for Better Evaluation (2019), www.oecd.org/dac/evaluation/revised-evaluation-criteria-dec-2019.pdf; the UNEG Handbook for conducting evaluations of normative work in the UN system (2014); and the European Commission’s Guidelines on evaluation, https://ec.europa.eu/info/sites/info/files/better-regulation-guidelines-evaluation-fitness-checks.pdf.

4. According to the OECD Regulatory Policy Outlook (2018), ex ante regulatory impact assessment refers to the “systematic process of identification and quantification of the benefits and costs likely to flow from regulatory and non-regulatory options for a policy under consideration”.

5. For more information on best practice in impact assessment, see the OECD’s publication on Regulatory Impact Assessment (2020), www.oecd.org/gov/regulatory-policy/regulatory-impact-assessment-7a9638cb-en.htm, and the European Commission’s Guidelines on Impact Assessment, https://ec.europa.eu/info/sites/info/files/better-regulation-guidelines-impact-assessment.pdf.

6. See Glossary section.