Natalie Foster

OECD

Innovating Assessments to Measure and Support Complex Skills

5. Exploiting technology to innovate assessment

Abstract

This chapter provides an overview of how digital technologies can be integrated in different assessment paradigms (traditional assessment, technology-enhanced assessment and embedded assessment). The chapter discusses the promise of technology in bridging educational rhetoric with practical assessment challenges, and in particular discusses technology-enabled innovations across the three interconnected models of an Evidence-Centred Design (ECD) assessment framework. It considers innovations at the conceptual model level (i.e. advances in the kinds of complex and dynamic performances that can be elicited), at the task model level (i.e. advances in task design) and at the measurement model level (i.e. advances in data analysis and learning analytics). The chapter underlines that technology must be used purposefully in assessment following the principles of a coherent design process.

Introduction

Digital technologies significantly expand our assessment capabilities: they offer new possibilities for designing items and test experiences, generating new potential sources of evidence, increasing efficiency and accessibility, improving test engagement and providing real-time diagnostic feedback for students and educators, among other things. These possibilities can advance assessment in several ways depending on the goals and intended purpose of assessment. Yet despite widespread recognition in the field of this transformative potential of technology for assessment, its implementation as such remains somewhat limited (Timmis et al., 2016[1]).

This chapter provides an overview of how technology can advance different assessment paradigms. It discusses innovations in three dimensions that are aligned with the three integrated models of Evidence-Centred Design (ECD). It does so to make explicit that technology does not just innovate assessment design at the task model level (i.e. how we can present assessment tasks) but also at the conceptual and measurement levels (i.e. what kinds of complex and dynamic performances we can target and elicit, and how we can generate and make sense of the data). The chapters that follow (particularly Chapters 6-10 of this report) explore some of the innovations discussed in this chapter in more detail.

The unexploited potential of technology to transform assessment

Technology offers the potential to transform what, how, why, when and where assessments happen. Several theories or models address the different uses of technology in educational contexts: some are general models of technology integration (Puentedura, 2013[2]; Hughes, Thomas and Scharber, 2006[3]), while others focus on teaching (Koehler and Mishra, 2009[4]), learning (Salomon and Perkins, 2005[5]) and assessment (Zhai et al., 2020a[6]) specifically. Despite these different perspectives, what they all share is the core idea that technology can be more or less “transformative” depending on how it is integrated into classrooms. In sum, transformative uses of technology enable new ways of learning and assessment that are otherwise not possible, providing learners with interactive tools and affordances to support their cognitive and metacognitive processes (Mhlongo, Dlamini and Khoza, 2017[7]). Less transformative uses of technology replace or functionally improve otherwise unchanged teaching, learning or assessment experiences and are often employed with the goal of making traditional activities easier, faster or more convenient.

The integration of technology in educational assessment is not new nor has its potential been completely untapped: over the past two decades, technologies have supported advances in large-scale assessment across various assessment functionalities including accessibility, test development and assembly, delivery, adaptation, adaptivity, scoring and reporting (Zenisky and Sireci, 2002[8]; Bennett, 2010[9]). Item authoring environments have expedited the production and packaging of tasks and computer-based assessment delivery has streamlined logistical processes (albeit raising other issues related to equipment, infrastructure and security). Advances in adaptive testing (i.e. presenting students with items at an appropriate level of difficulty, based on their prior responses) have also improved test efficiency and experience, and automated scoring engines have significantly reduced both the time and investment required in coder training to score assessments.
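The adaptive testing logic mentioned above can be illustrated with a minimal sketch. It assumes a Rasch (one-parameter logistic) response model, a small hypothetical item bank, and a simple one-step update of the ability estimate; operational adaptive tests use more elaborate estimation and item selection rules, so this is an illustration of the principle rather than a description of any particular assessment.

```python
import math


def p_correct(ability: float, difficulty: float) -> float:
    """Rasch (1PL) probability of a correct response."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def next_item(ability: float, difficulties: dict, used: set) -> str:
    """Pick the unused item whose difficulty is closest to the current ability estimate."""
    candidates = {k: d for k, d in difficulties.items() if k not in used}
    return min(candidates, key=lambda k: abs(candidates[k] - ability))


def update_ability(ability: float, difficulty: float, correct: bool,
                   step: float = 0.5) -> float:
    """Take one gradient step on the Rasch log-likelihood.

    The estimate moves up after a correct response and down after an
    incorrect one. The step size is an illustrative assumption.
    """
    observed = 1.0 if correct else 0.0
    return ability + step * (observed - p_correct(ability, difficulty))


# Hypothetical item bank: item name -> difficulty on the ability scale.
bank = {"easy": -1.0, "medium": 0.0, "hard": 1.0}

ability = 0.0
first = next_item(ability, bank, used=set())            # closest to 0.0
ability = update_ability(ability, bank[first], correct=True)
second = next_item(ability, bank, used={first})         # harder item follows a correct answer
```

In this sketch a correct response raises the ability estimate, so the next item selected is more difficult; an incorrect response would have the opposite effect.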

For the most part, these uses of technology have supported the computer-based delivery of otherwise unchanged tasks and item formats (i.e. selected- or constructed-response types), the automation of administrative or logistical processes, or the automation of scoring and interpreting responses (Quellmalz and Pellegrino, 2009[10]). In other words, digital technology has predominantly served to replicate or streamline existing large-scale assessment practices without significantly transforming the type of information that these assessments provide to students, teachers and other education stakeholders, or improving the quality of test experiences for students. Such uses of technology are of course useful in terms of efficiency gains but maintain assessment largely within the traditional assessment paradigm (i.e. drop-from-the-sky tests of accumulated knowledge). There remains considerable potential to harness digital technologies to transform what we can measure, how we make sense of test taker performance and how assessment relates to learning (Thornton, 2012[11]; Timmis et al., 2016[1]; Zhai et al., 2020a[6]; DiCerbo, Shute and Kim, 2017[12]; Shute and Kim, 2013[13]).

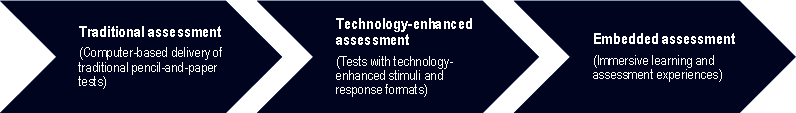

While technology-enhanced assessment (TEA) can support more innovative assessment practices and systems, not all TEA is necessarily transformative. It is possible to identify two different TEA paradigms, in part based on the extent to which technology has been used in fundamentally transformative ways but also driven by the different goals of those assessments (Bennett, 2015[14]; Redecker and Johannessen, 2013[15]). In the first TEA paradigm, technology enables new task formats via multimedia stimuli and interactive response-types as well as improvements in measurement precision by generating new potential sources of evidence – but tests ultimately still look and feel like explicit tests for students. The other, more transformative TEA paradigm integrates assessment and learning activities through embedded assessment, where technology serves to create richer, immersive, interactive and unobtrusive learning and assessment environments that evolve as students make choices and interact with them.

Embedded assessment – also referred to as stealth assessment (Shute, 2011[16]) – engages students in carefully designed learning games and activities while unobtrusively gathering data and drawing inferences about their competencies based on their behaviours and performance. The data produced during their learning process can be used to trigger scaffolds or provide immediate feedback to learners and teachers on their progress and future learning strategies. Embedded assessments therefore minimise the gap between “teaching and learning time” and “assessment time”, translating into a fundamentally different assessment experience for learners compared to explicit testing paradigms (Redecker and Johannessen, 2013[15]).

Leveraging technology across an evidence-centred assessment design process

The remainder of this chapter discusses how technology can innovate assessment design and practice across these different assessment paradigms. Broadly speaking, the three assessment paradigms identified above can be seen as situated along a spectrum of technology integration, from least (traditional assessment) to most transformative (embedded assessment). As set forth in the Introduction chapter of this report, a principled design process like Evidence-Centred Design (ECD) (Mislevy and Haertel, 2006[17]) supports the design of valid assessments, especially when measuring complex multidimensional constructs using technology-enhanced tasks; this is because the complexity of student performances coupled with the rich observations that such tasks provide can create interpretation challenges. The following discussion is therefore structured around three key assessment design dimensions – assessment focus, task design, and evidence identification and accumulation – that closely align with the three interconnected models of an ECD framework. Table 5.1 summarises assessment capabilities across these three dimensions for each of the assessment paradigms.

Framing the discussion in this way explicitly addresses how technology enables test takers to demonstrate proficiency in new constructs, expands the ways in which tasks can be presented and behaviours observed in assessment environments, and utilises new sources of evidence and interpretative methodologies for the purposes of measurement and reporting on those constructs. While each dimension is addressed separately, there is significant interdependence among them. As the discussion advances further along the technology integration spectrum, note also that innovations enabled in less transformative uses equally apply in more transformative uses.

Table 5.1. How technology can innovate assessment

| Dimension | Traditional assessment | Technology-enhanced assessment | Embedded assessment |
|---|---|---|---|
| Assessment focus | | | |
| Task design | | | |
| Evidence identification and accumulation | | | |

Assessment focus

Traditional assessments typically measure what students know at a given point in time, generally by asking them to recall facts and reproduce content knowledge or to apply fixed solution procedures for static and highly structured problems (Pellegrino and Quellmalz, 2010[18]). As described in Chapter 1 of this report, these assessments cannot replicate the types of authentic problems needed to engage and capture more complex and multi-faceted aspects of performance – especially those characterised by behaviours or processes.

Technology can broaden the range of constructs we are able to measure by simulating complex problems that are open-ended and dynamic, meaning that they evolve as test takers engage in iterative processes of reasoning, problem solving and decision making. This makes it possible to measure more complex constructs or additional aspects of performance that are not possible to replicate using static tasks and problem types that focus on knowledge recall. More interactive and immersive environments allow students to engage actively in the processes of making and doing, making it possible to track the strategies that test takers employ and the decisions they make as they work through complex tasks. Taken together, these opportunities represent a powerful shift in the focus of assessment away from simple knowledge reproduction to knowledge-in-use. They also expand the range of information it is possible to capture about test takers not only in terms of what they can do (i.e. better coverage of constructs) but also in terms of how they do it (i.e. thinking and learning processes).

By simulating open-ended and dynamic environments and providing test takers with interactive tools that generate feedback, either implicitly or explicitly, test takers’ knowledge and proficiency states may evolve over the course of an assessment activity – especially if it occurs over an extended period. While traditional testing models rely on assumptions about the fixed nature of knowledge and skills that constitute the target of assessment, many complex competencies explicitly include aspects of metacognition and self-regulated learning as part of their student model. Seeking and reacting to feedback is therefore an inherent part of their authentic practice (and consequently, should be part of their assessment).

Being able to track a student’s knowledge and proficiency as it evolves over the course of an assessment also provides opportunities to understand how students learn and transition into higher mastery levels and how prepared they are for future learning (Xu and Davenport, 2020[19]). Technology-rich embedded assessment environments can integrate scaffolding to support learning, merging summative and formative assessment and providing measures of the capacity of test takers to learn and transfer that learning to other tasks. These types of environments are therefore particularly well-suited for both acquiring and assessing 21st Century competencies (see Chapter 2 of this report for more on 21st Century competencies and transfer of learning, and principles for designing next-generation assessments of these competencies). Research has demonstrated that these types of assessments provide evidence of latent abilities that more conventional measures fail to tap (Wolf et al., 2016[20]), more accurate measurements regarding what students know and can do (Almond et al., 2010[21]), and more useful insights and guidance to practitioners (Elliott, 2003[22]). This makes sense, given these environments are most similar to the kinds of learning contexts in which students apply and develop their skills.
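One widely used way of tracking an evolving proficiency state is Bayesian Knowledge Tracing (BKT), sketched below as an illustration. This is not the method of the studies cited above; the parameter names and values (`slip`, `guess`, `learn`) are assumptions chosen for the example. After each observed response, the estimated probability of mastery is first updated with Bayes' rule and then adjusted for the chance that the student learned from the attempt.

```python
def bkt_update(p_mastery: float, correct: bool,
               slip: float = 0.1, guess: float = 0.2, learn: float = 0.1) -> float:
    """Return the updated probability of mastery after one observed response.

    slip  : probability a student who has mastered the skill answers incorrectly
    guess : probability a student who has not mastered the skill answers correctly
    learn : probability of moving from non-mastery to mastery after the attempt
    All three values are illustrative assumptions.
    """
    if correct:
        evidence_mastered = p_mastery * (1.0 - slip)
        evidence_not_mastered = (1.0 - p_mastery) * guess
    else:
        evidence_mastered = p_mastery * slip
        evidence_not_mastered = (1.0 - p_mastery) * (1.0 - guess)

    # Bayes' rule: probability of mastery given the observed response.
    posterior = evidence_mastered / (evidence_mastered + evidence_not_mastered)

    # Allow for learning that takes place during the attempt.
    return posterior + (1.0 - posterior) * learn


# Trace a hypothetical sequence of responses, starting from a prior of 0.5.
p = 0.5
trajectory = []
for response in [True, True, False, True]:
    p = bkt_update(p, response)
    trajectory.append(p)
```

The resulting trajectory is the kind of evolving estimate that an embedded assessment could use to decide when to offer a scaffold or move a student to a more demanding activity.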

While these advances enabled by technology open new possibilities for measuring complex aspects of performance, a key challenge lies in how to model these more dynamic competencies (i.e. defining how student behaviours in open environments connect to variables within the student model). Chapter 6 of this report further explores this issue.

Task design

Task design essentially refers to the tools at an assessment designer’s disposal for creating valid tasks and generating relevant evidence about the target constructs. For traditional paper-and-pencil tests, this dimension long presented a weakness to assessment design (Clarke-Midura and Dede, 2010[23]). The assessment designer’s toolbox was limited to static task stimuli, multiple- or forced-choice responses among a list of pre-determined options, or simple constructed responses. As described above, these simple tasks and item-types cannot authentically replicate complex problem scenarios, nor can they generate a rich range of observations about students’ mastery of 21st Century competencies – most notably about their behaviours and processes.

Digital technologies significantly expand the range of task stimuli it is possible to integrate in assessment, including text, image, video, audio, data visualisations and haptics (touch). These can enhance features of more traditional assessment stimuli (e.g. hyperlinked text or animated images) as well as create new dynamic stimuli like videos, audio and simulations. Highly interactive tools and immersive test environments can not only provide more open-ended, iterative and dynamic problems to test takers, but they can also replicate situations that would otherwise be difficult to (re)create in a standardised way for assessment (e.g. simulating collaborative encounters) or in the context of a classroom setting (e.g. visualising and modelling dynamic systems). The affordances of technology-enhanced tasks provide opportunities for test takers to make choices, iterate upon their ideas, seek feedback and create tangible representations of their knowledge and skill, and advances in technology and measurement mean that tasks can be designed to include intentional scaffolds (e.g. hints, information resources) to support learning.

New task modalities, problem types, and affordances mean there are also new possibilities for response types, and in turn, new sources of potential evidence about test takers. Digital platforms can capture, time stamp and log student interactions with the test environment and affordances. This is especially transformative in the context of measuring complex competencies as the process by which an individual engages with an activity can be just as valuable for evaluating proficiency as their final product. These process data, when coupled with appropriate analytical models, can reveal how students engage with problems, the choices they make and the strategies they do (or do not) implement – all of which may constitute potential evidence for the variables of interest in the competency model.
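As a minimal sketch of what capturing, time stamping and logging interactions can look like, the example below defines a small in-memory log. The class names, field names and action labels are hypothetical; a real platform would define its own event schema and storage.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Event:
    timestamp: float   # seconds since the epoch, or any consistent clock
    student_id: str
    action: str        # e.g. "start_task", "open_hint", "submit"
    detail: dict


@dataclass
class InteractionLog:
    events: list = field(default_factory=list)

    def record(self, student_id: str, action: str,
               detail: dict = None, timestamp: float = None) -> Event:
        """Time stamp an interaction and append it to the log."""
        ts = time.time() if timestamp is None else timestamp
        event = Event(ts, student_id, action, detail or {})
        self.events.append(event)
        return event

    def actions_for(self, student_id: str) -> list:
        """Return one student's actions in chronological order."""
        own = [e for e in self.events if e.student_id == student_id]
        return [e.action for e in sorted(own, key=lambda e: e.timestamp)]


# Explicit timestamps are passed here only to keep the example reproducible.
log = InteractionLog()
log.record("s1", "open_hint", {"hint_id": 3}, timestamp=2.0)
log.record("s1", "start_task", timestamp=1.0)
log.record("s2", "start_task", timestamp=1.5)
sequence = log.actions_for("s1")
```

Keeping the raw, time-stamped events separate from any scoring logic leaves open the question of which features of the process constitute evidence, which is a design decision rather than a property of the log.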

Chapter 7 of this report explores the expanded range of tools now at an assessment designer’s disposal thanks to digital technologies, taking a deeper dive into different task formats, test affordances and response types as well as discussing how design choices must interact with validity considerations.

Evidence identification and accumulation

Test taker responses in traditional assessments are typically scored as correct or incorrect, or as polytomous items in ordered categories. In open and interactive TEA environments, test takers can provide unique, relatively complex and unstructured “responses”. Potential sources of evidence may include time spent responding to a task or interacting with an affordance, sequences of actions, interactions with agents or human collaborators, multiple iterations of a product or (intermediate) solution states as well as other behaviours that can be recorded using technology (e.g. pausing, eye movements, etc.). More open-ended and interactive problem types also result in students navigating through tests in different ways. All this means that the structure and nature of the data collected can vary widely across examinees and that test items can effectively become interdependent, making it difficult or inappropriate to apply the same psychometric methods used to accumulate evidence in more traditional assessments (Quellmalz et al., 2012[24]).
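To make these sources of evidence concrete, the sketch below derives a few simple descriptors (time on task, number of actions, counts per action type and the action sequence) from one student's time-stamped events. The event format and action names are assumptions for the example, and whether any of these descriptors is construct-relevant depends on the evidence model of the assessment in question.

```python
from collections import Counter


def summarise_process(events: list) -> dict:
    """Summarise (timestamp_seconds, action) tuples for one student on one task."""
    if not events:
        return {"time_on_task": 0.0, "n_actions": 0,
                "action_counts": {}, "sequence": []}

    ordered = sorted(events, key=lambda e: e[0])   # chronological order
    sequence = [action for _, action in ordered]
    return {
        "time_on_task": ordered[-1][0] - ordered[0][0],
        "n_actions": len(ordered),
        "action_counts": dict(Counter(sequence)),
        "sequence": sequence,
    }


# Hypothetical log for one student working through a simulation task.
events = [
    (0.0, "start"),
    (12.5, "run_simulation"),
    (20.0, "open_hint"),
    (31.0, "run_simulation"),
    (40.0, "submit"),
]
summary = summarise_process(events)
```

Because two students can produce sequences of different lengths and content, summaries like this vary in structure across examinees, which illustrates why methods designed for fixed sets of independent items do not transfer directly.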

Advances in measurement technology do, however, offer new ways to reason about and interpret evidence from TEA. Sophisticated parsing, statistical and inferential models that use Artificial Intelligence (AI), machine learning (ML) or other computational psychometric techniques can enable the automated scoring of complex constructed response data as well as accumulate evidence from different sources of data for scoring and reporting. Dynamic analytical approaches that can distinguish construct-relevant differences in student process data can integrate this information into scoring models to augment their precision. A recent review by Zhai et al. (2020b[25]) examined the technical, validity and pedagogical features of ML-involved science assessments and reported significant advantages of these innovative assessments compared with traditional assessments. Yet exploiting these advantages requires well-designed task models that define the features of responses that matter for scoring as well as a clear conceptual understanding of how different patterns in individual student actions align with competency states (i.e. by reflecting variations in strategy or evolving psychological states). An ongoing challenge in the measurement field relates to being able to parse the massive volumes of process data generated by TEAs and to distil them into actionable pieces of information to inform claims about students’ competencies (Bergner and von Davier, 2019[26]). Chapter 8 of this report focuses on this challenge and explores the potential of hybrid analytical models to reliably integrate multiple sources of evidence for scoring.

There is also potential to bridge measurement models traditionally applied to large-scale summative assessments with learning analytics methods that have been mostly applied in the context of monitoring and optimising learning and the environments in which it occurs. Learning analytics can supplement traditional performance scales by providing more descriptive and diagnostic information based on process data to explain why students might attain a given score (e.g. by identifying the strategies they successfully implemented or the types of mistakes they committed). This kind of detailed assessment reporting can help to promote a vision of assessment that is more integrated with the processes of teaching and learning. However, more work is needed to connect the disciplines of measurement science and learning analytics (see Chapter 13 of this report for directions on how to integrate advances from the two fields).

Discussion

Technology clearly offers many new possibilities for assessment design that can help to bridge the gap between educational rhetoric – the goal of assessing students’ preparedness for their future – and the challenges that pose difficulties for assessing 21st Century competencies (see Chapter 1 of this report for an in-depth discussion of these challenges). Technology is also particularly suited for responding to the design innovations for next-generation assessments outlined in Chapter 2 of this report, namely providing opportunities for learning, feedback and instructional support during extended performance tasks. However, using technology to advance assessment in the ways described in this chapter warrants some further reflection.

First, technology-enabled innovations are only useful insofar as they are integrated purposefully within a principled design process. This means that choices about what aspects of performance to simulate, what tools and affordances to include, what evidence to collect and what interpretations to draw from the data are guided by an explicit chain of reasoning. Integrating more technology in assessment for technology’s sake is not always better. To borrow from other frameworks on technology integration in education, creating meaningful learning and assessment experiences requires bringing technology together with pedagogical and content knowledge (Koehler and Mishra, 2009[4]).

Second, while the assessment paradigms described in Table 5.1 are presented along a technology integration continuum, from less to more transformative, it is worth acknowledging that they are conceptually different in terms of their intended purpose. Assessments in the first two paradigms (traditional and technology-enhanced) intend to measure a fixed state or ability, usually in the context of summative assessment; technology-enabled innovations have thus tended to focus on increasing measurement efficiency or enhancing item-types. Conversely, embedded assessments can measure dynamic abilities and processes (that may change during the assessment) and technology has been used to enable more personalised learning and assessment experiences, primarily in the context of formative assessment. Both types of assessment remain useful: if education stakeholders need a snapshot of students’ knowledge across a vast content discipline (e.g. mathematics), then a more traditional assessment might serve that purpose better by more efficiently sampling activities across the domain. This chapter does not intend to argue that one paradigm is inherently better than the other, nor that less transformative assessments will become obsolete – at least, not in the near future. However, the single-occasion, drop-from-the-sky model of assessment made of short and discrete items is clearly insufficient for measuring complex constructs that are dynamic, iterative and that require extended performance-based assessments. Measuring these constructs well necessarily requires assessments to be more closely tied to the processes and contexts of learning and instruction – something that technology can facilitate through embedded assessment.

Third, the embedded assessment paradigm aligns with other visions of assessment in technology-rich environments that are characterised by performance-based formative activities (DiCerbo, Shute and Kim, 2017[12]; Shute et al., 2016[27]); see also Chapters 2 and 3 of this report. In this vision of assessment, students are immersed in different technology-rich learning environments that can capture and measure the dynamic changes in their knowledge and skills, and that information can then be used to further enhance their learning. This does not necessarily involve administering assessments more frequently, but rather unobtrusively collecting data as students learn and interact with their digital environment and systematically accumulating evidence about what students know and can do in multiple contexts – therefore merging summative and formative assessment (see Wilson (2018[28]) for more on facilitating coherence between classroom and large-scale assessment). However, turning this vision into reality requires the development of high-quality, ongoing, unobtrusive and technology-rich assessments whose data can be aggregated to describe a student’s evolving competency levels (at various grain sizes) and also aggregated across students (e.g. from student to class, to school to district, to state to country) to inform higher-level decisions (DiCerbo, Shute and Kim, 2017[12]). This in turn implies much higher development and data analysis costs as well as addressing potential fairness issues, including how such assessments are communicated to students (i.e. do they know what they are being assessed on?) and ensuring that innovative analytical and scoring models are unbiased.

Conclusion

This chapter has described how technology can innovate assessment across the three interconnected dimensions of assessment design in different assessment paradigms. Technology can introduce new forms of active, immersive and iterative performance-based tasks within interactive environments that make it possible to observe how test takers engage in complex and authentic activities. These types of tasks can provide richer observations and potential evidence about students’ thinking processes and learning as well as enable the measurement of dynamic skills beyond the capability of more traditional and static items. Interactive tools and affordances can also provide dynamic and targeted feedback to test takers, supporting test takers’ learning progress, motivation and engagement.

Technology can also generate new sources of complex evidence that, when combined with sophisticated analytical approaches, can identify patterns of behaviour associated with different mastery levels, increase the precision of performance scores and produce diagnostic information on what support students need in order to progress their skills. By allowing for the real-time measurement of students’ capacities as they engage in meaningful learning activities, technology holds the promise of creating new systems of evaluation where evidence on students’ progress is collected in a continuous way and assessment and learning are no longer explicitly separated.

While these opportunities are exciting, the development of effective innovative assessments must follow the principles of a coherent design process so that technology effectively serves the intended purposes of the assessment. The opportunities discussed in this chapter also raise a set of new challenges for assessment designers and measurement experts. These include how to design tasks that can simulate authentic contexts and elicit relevant behaviours/evidence, how to interpret and accumulate the numerous sources of data that TEAs can create in meaningful and reliable ways, and how to compare students meaningfully in increasingly dynamic and open test environments.

References

[21] Almond, P. et al. (2010), “Technology-enabled and universally designed assessment: Considering access in measuring the achievement of students with disabilities - A foundation for research”, The Journal of Technology, Learning and Assessment, Vol. 10/5, http://www.jtla.org (accessed on 6 May 2022).

[14] Bennett, R. (2015), “The changing nature of educational assessment”, Review of Research in Education, Vol. 39/1, pp. 370-407, https://doi.org/10.3102/0091732X14554179.

[9] Bennett, R. (2010), “Technology for large-scale assessment”, in Peterson, P., E. Baker and B. McGaw (eds.), International Encyclopedia of Education, Elsevier, Oxford, https://doi.org/10.1016/B978-0-08-044894-7.00701-6.

[26] Bergner, Y. and A. von Davier (2019), “Process data in NAEP: Past, present, and future”, Journal of Educational and Behavioral Statistics, Vol. 44/6, pp. 706-732, https://doi.org/10.3102/1076998618784700.

[23] Clarke-Midura, J. and C. Dede (2010), “Assessment, technology, and change”, Journal of Research on Technology in Education, Vol. 42/3, pp. 309-328, https://doi.org/10.1080/15391523.2010.10782553.

[12] DiCerbo, K., V. Shute and Y. Kim (2017), “The future of assessment in technology-rich environments: Psychometric considerations”, in Spector, J., B. Lockee and M. Childress (eds.), Learning, Design, and Technology: An International Compendium of Theory, Research, Practice, and Policy, Springer International Publishing, New York, https://doi.org/10.1007/978-3-319-17727-4_66-1.

[22] Elliott, J. (2003), “Dynamic assessment in educational settings: Realising potential”, Educational Review, Vol. 55, pp. 15-32, https://doi.org/10.1080/00131910303253.

[3] Hughes, J., R. Thomas and C. Scharber (2006), “Assessing technology integration: The RAT - Replacement, Amplification, and Transformation - framework”, in Proceedings of SITE 2006: Society for Information Technology & Teacher Education International Conference, Association for the Advancement of Computing in Education, Chesapeake, http://techedges.org/wp-content/uploads/2015/11/Hughes_ScharberSITE2006.pdf.

[4] Koehler, M. and P. Mishra (2009), “What is technological pedagogical content knowledge?”, Contemporary Issues in Technology and Teacher Education, Vol. 9/1, https://citejournal.org/volume-9/issue-1-09/general/what-is-technological-pedagogicalcontent-knowledge/.

[7] Mhlongo, S., R. Dlamini and S. Khoza (2017), “A conceptual view of ICT in a socio-constructivist classroom”, in Proceedings of the 10th Annual Pre-ICIS SIG GlobDev Workshop, Seoul, South Korea, https://www.researchgate.net/publication/322203195.

[17] Mislevy, R. and G. Haertel (2006), “Implications of evidence-centered design for educational testing”, Educational Measurement: Issues and Practice, Vol. 25/4, pp. 6-20, https://doi.org/10.1111/j.1745-3992.2006.00075.x.

[18] Pellegrino, J. and E. Quellmalz (2010), “Perspectives on the integration of technology and assessment”, Journal of Research on Technology in Education, Vol. 43/2, pp. 119-134, https://files.eric.ed.gov/fulltext/EJ907019.pdf.

[2] Puentedura, R. (2013), SAMR and TPCK: An Introduction, http://www.hippasus.com/rrpweblog/archives/2013/03/28/SAMRandTPCK_AnIntroduction.pdf (accessed on 24 March 2023).

[10] Quellmalz, E. and J. Pellegrino (2009), “Technology and testing”, Science, Vol. 323/5910, pp. 75-79, https://doi.org/10.1126/science.1168046.

[24] Quellmalz, E. et al. (2012), “21st century dynamic assessment”, in Mayrath, M. et al. (eds.), Technology-Based Assessments for 21st Century Skills, Information Age Publishing, http://www.simscientists.org/downloads/Chapter_2012_Quellmalz.pdf.

[15] Redecker, C. and Ø. Johannessen (2013), “Changing assessment - Towards a new assessment paradigm using ICT”, European Journal of Education: Research, Development and Policy, Vol. 48/1, pp. 79-96, https://doi.org/10.2307/23357047.

[5] Salomon, G. and D. Perkins (2005), “Do technologies make us smarter? Intellectual amplification with, of and through technology”, in Sternberg, R. and D. Preiss (eds.), Intelligence and Technology: The Impact of Tools on the Nature and Development of Human Abilities, Lawrence Erlbaum, New Jersey.

[16] Shute, V. (2011), “Stealth assessment in computer-based games to support learning”, in Tobias, S. and J. Fletcher (eds.), Computer Games and Instruction, Information Age Publishing, Charlotte, https://myweb.fsu.edu/vshute/pdf/shute%20pres_h.pdf.

[13] Shute, V. and Y. Kim (2013), “Formative and stealth assessment”, in Spector, J. et al. (eds.), Handbook of Research on Educational Communications and Technology (4th Edition), Lawrence Erlbaum, New York.

[27] Shute, V. et al. (2016), “Advances in the science of assessment”, Educational Assessment, Vol. 21/1, pp. 1-27, https://doi.org/10.1080/10627197.2015.1127752.

[11] Thornton, S. (2012), “Issues and controversies associated with the use of new technologies”, in Teaching Politics and International Relations, Palgrave Macmillan UK, London, https://doi.org/10.1057/9781137003560_8.

[1] Timmis, S. et al. (2016), “Rethinking assessment in a digital age: Opportunities, challenges and risks”, British Educational Research Journal, Vol. 42/3, pp. 454-476, https://doi.org/10.1002/berj.3215.

[28] Wilson, M. (2018), “Making measurement important for education: The crucial role of classroom assessment”, Educational Measurement: Issues and Practice, Vol. 37/1, pp. 5-20, https://doi.org/10.1111/emip.12188.

[20] Wolf, M. et al. (2016), “Integrating scaffolding strategies into technology-enhanced assessments of English learners: Task types and measurement models”, Educational Assessment, Vol. 21/3, pp. 157-175, https://doi.org/10.1080/10627197.2016.1202107.

[19] Xu, L. and M. Davenport (2020), “Dynamic knowledge embedding and tracing”, in Rafferty, A. et al. (eds.), Proceedings of the 13th International Conference on Educational Data Mining (EDM 2020), https://files.eric.ed.gov/fulltext/ED607819.pdf.

[8] Zenisky, A. and S. Sireci (2002), “Technological innovations in large-scale assessment”, Applied Measurement in Education, Vol. 15/4, pp. 337-362, https://doi.org/10.1207/S15324818AME1504_02.

[6] Zhai, X. et al. (2020a), “From substitution to redefinition: A framework of machine learning-based science assessment”, Journal of Research in Science Teaching, Vol. 57/9, pp. 1430-1459, https://doi.org/10.1002/tea.21658.

[25] Zhai, X. et al. (2020b), “Applying machine learning in science assessment: A systematic review”, Studies in Science Education, Vol. 56/1, pp. 111-151, https://doi.org/10.1080/03057267.2020.1735757.