This section provides a detailed overview of the assessment methodology for the 2022 SME Policy Index.

SME Policy Index: Western Balkans and Turkey 2022

Annex A. Methodology for the 2022 Small Business Act assessment

Overview of the 2022 assessment framework and scoring

The process comprises two parallel assessments: a self-assessment by the government and an independent assessment by the OECD and its partner organisations, with inputs from a team of local experts (see the Policy Framework and Assessment Process chapter).

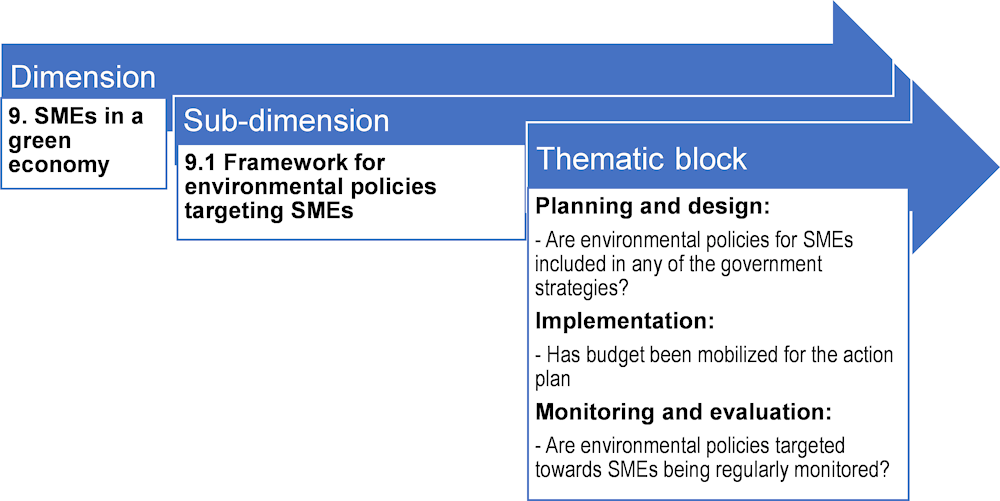

The assessment grid is built upon the ten principles of the SBA, divided into 34 sub-dimensions. The sub-dimensions are usually also divided into thematic blocks, each with its own set of indicators. The thematic blocks are typically broken down into three components, representing different stages of the policy cycle: planning and design, implementation, and monitoring and evaluation (Figure A A.1). In a few sub-dimensions where this approach is not applicable, for example in relation to the SME definition or the availability of some financial instruments within the access to finance dimension, thematic blocks may differ.

Figure A A.1. Dimension, sub-dimension and indicator level examples

Assigning scores to reflect different stages of the policy cycle allows governments to identify and target the stages where they have particular strengths or weaknesses. Each policy dimension, and each of its constituent parts, is assigned a numerical score ranging from 1 to 5 according to the level of policy development and implementation, so that performance can be compared across economies and over time. Level 1 is the weakest level, whilst level 5 is the strongest, indicating a level of development commensurate with OECD good practices (Table A A.1). The levels are determined through a participatory and analytical process, which is conducted by means of a self-assessment by the government and an independent assessment by local consultants.

Table A A.1. Description of score levels

| Level | Description |
|---|---|
| Level 5 | Level 4 plus results of monitoring and evaluation inform policy framework design and implementation. |
| Level 4 | Level 3 plus evidence of a concrete record of effective policy implementation. |
| Level 3 | A solid framework, addressing the policy area concerned, is in place and officially adopted. |
| Level 2 | A draft or pilot framework exists, with some signs of government activity to address the policy area concerned. |
| Level 1 | No framework (e.g. law, institution) exists to address the policy topic concerned. |

The assessment framework comprises qualitative and quantitative indicators, which are scored based on questions in the following forms:

Binary questions, allowing yes or no answers, such as “Does your government have a strategy / policy / plan in place for digital government?”

Multiple-choice questions, such as “Is it possible to conduct online company registration?” These questions offer a drop-down list of answers, such as “yes, and it applies to all phases of the company registration process”, “yes, but it does not apply to all phases of the company registration process”, and “no”.

Open-ended questions, which gather further descriptive evidence to supplement the binary and multiple-choice questions. These questions are not themselves scored. Examples include “What is the budget for the SME implementation agency?”, “How many people work in the agency?” and “How many ministries are represented in the governance board?”

Whenever possible, economies are asked to support their answers with evidence, by providing source documents such as strategies and budget plans. The assessment framework is accompanied by a Glossary of key terms and concepts, which contains a comprehensive set of definitions of the terminology and concepts used in the qualitative questionnaires. The Glossary consists of two sections: 1) a glossary of general terms relevant across the 12 policy dimensions of the SME Policy Index and 2) a glossary of dimension-specific terms, where relevant for a given dimension. Most terms are supported with references and examples to help respondents answer the questions.

Each of the core questions is scored equally within the thematic block. For binary questions, a “yes” is awarded full points and a “no” receives zero points. For multiple-choice questions, scores for the different options range between zero and full points, depending on the indicated level of policy development.
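The question-level scoring described above can be sketched in a few lines of Python. This is an illustration only, not the OECD's scoring tool: the function names, the shortened answer labels and the 0.5 value for the intermediate multiple-choice option are assumptions, since the text states only that such options fall between zero and full points.

```python
from typing import Dict, List, Tuple

# Binary question: "yes" earns full points, "no" earns zero (as stated in the text).
BINARY: Dict[str, float] = {"yes": 1.0, "no": 0.0}

# Multiple-choice question on online company registration.
# The labels are shortened and the 0.5 value is an assumed, illustrative score.
ONLINE_REGISTRATION: Dict[str, float] = {
    "yes, all phases": 1.0,
    "yes, not all phases": 0.5,  # assumption: the source gives no exact value
    "no": 0.0,
}


def question_points(answer: str, option_points: Dict[str, float]) -> float:
    """Return the share of full points (0.0 to 1.0) earned by one answer."""
    if answer not in option_points:
        raise ValueError(f"Unknown answer option: {answer!r}")
    return option_points[answer]


def component_percentage(answers: List[Tuple[str, Dict[str, float]]]) -> float:
    """Score a set of core questions, each weighted equally, as a percentage."""
    if not answers:
        raise ValueError("At least one core question is required")
    total = sum(question_points(answer, options) for answer, options in answers)
    return 100.0 * total / len(answers)
```

For example, a "yes", a partial "yes" and a "no" across three equally weighted core questions give `component_percentage([("yes", BINARY), ("yes, not all phases", ONLINE_REGISTRATION), ("no", BINARY)])`, which evaluates to 50.0 under the assumed option values. Open-ended questions are left out because they are not scored.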

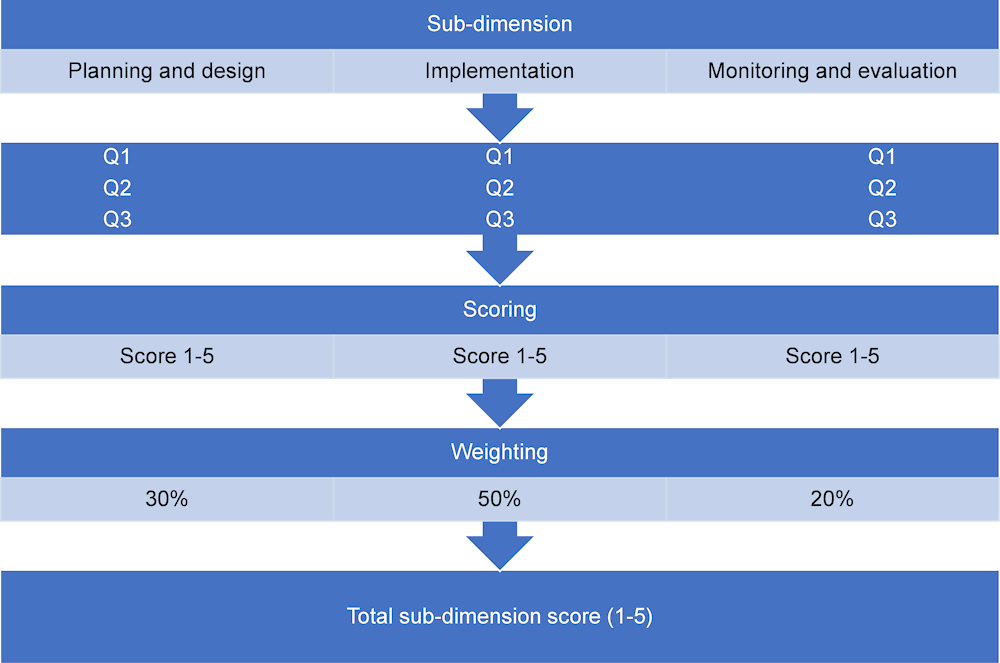

The scores for the core questions are then added up to provide a score for each component. Scores are initially derived as percentages (0-100) and then converted into the 1-5 scale. Scores for the thematic blocks are then aggregated to attain a score for the sub-dimension, with each thematic block being weighted based on expert consultation. In general, planning and design accounts for 30% of the score, implementation 50%, and monitoring and evaluation 20%, to emphasise the importance of policy implementation (Figure A A.2). Weights were applied at sub-dimension level and thematic block level in the same way.

Figure A A.2. Scoring breakdown per sub-dimension
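As a rough illustration of the aggregation step, the sketch below converts component percentages to the 1-5 scale and applies the general 30/50/20 weighting. The text does not give the conversion formula, so the linear mapping used here (0% to 1, 100% to 5) is an assumption, as are the function and key names. Weights may also differ where expert consultation set them otherwise.

```python
from typing import Dict

# General weights stated in the text; individual sub-dimensions may differ.
WEIGHTS: Dict[str, float] = {
    "planning_and_design": 0.30,
    "implementation": 0.50,
    "monitoring_and_evaluation": 0.20,
}


def percentage_to_scale(pct: float) -> float:
    """Map a 0-100 percentage onto the 1-5 scale.

    Assumption: a linear mapping (0% -> 1.0, 100% -> 5.0). The source
    does not specify the actual conversion rule.
    """
    if not 0.0 <= pct <= 100.0:
        raise ValueError("Percentage must lie between 0 and 100")
    return 1.0 + 4.0 * pct / 100.0


def weighted_score(component_pcts: Dict[str, float],
                   weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine component percentages into one weighted score on the 1-5 scale."""
    if set(component_pcts) != set(weights):
        raise ValueError("Components and weights must use the same keys")
    return sum(weights[name] * percentage_to_scale(component_pcts[name])
               for name in weights)
```

Under these assumptions, component percentages of 100 (planning and design), 50 (implementation) and 0 (monitoring and evaluation) convert to 5, 3 and 1, and the weighted score is 0.3 × 5 + 0.5 × 3 + 0.2 × 1 = 3.2. Because implementation carries half of the weight, a weak implementation record pulls the overall score down more than a gap in either of the other two stages.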

Main changes to the assessment framework since the 2019 SBA assessment

Since 2019, the SBA assessment framework has been updated to reflect the impact of the COVID-19 pandemic and governments’ responses to it. This was done by introducing open-ended questions, none of which were scored, but which informed the narrative of the report and helped put some government policies into context, particularly regarding challenges that governments may have encountered (e.g. redirection of resources to more immediate support for SMEs and subsequent delays in implementing previously planned policies).

Across several dimensions, new questions were added horizontally to better capture governments’ policies on SME greening and digitalisation, particularly in areas relevant to the WBT region’s post-COVID recovery and EU accession contexts (e.g. on the consideration of environmental and social criteria in public procurement bids for Dimension 5b, or on the development of digital tools by export promotion agencies for Dimension 10).

Statistical data have been collected to allow for comparison of performance over time

As in the previous assessment cycle, the approach to data collection integrates national statistics, company-level data from other international organisations, and independent company surveys to measure the performance of policies on the ground. Table A A.2 provides an overview of the types of statistics and data collected, their purpose within the SME Policy Index, and their main sources.

Table A A.2. Data types and sources

| Type of data | Main purpose within the SME Policy Index | Source |
|---|---|---|
| Macroeconomic data and business statistics | Statistical information for the economy reports; support of the policy narrative written by the independent expert | National statistical offices; international databases and entrepreneurship indicator programmes (OECD, Eurostat, World Bank, etc.) |
| Statistics on policy outputs | Measurement of the policy intensity and the actual policy output; measurement of policy convergence towards the EU average | Section on open questions in the SBA assessment questionnaires; data from the SBA Factsheets |
| Survey data | Measurement of SME perceptions of the effectiveness and usefulness of certain SME policies; measurement of policy convergence with the EU average | BEEPS Survey; Eurobarometer data from the SBA Factsheet where available; Balkan Barometer – Business Opinion survey |