Strong Foundations for Quality and Equity in Mexican Schools

Chapter 5. Focusing evaluation and assessment on schools and student learning

Abstract

This chapter discusses the role that evaluation and assessment practices play in supporting student learning, schools and the education system in Mexico. In particular, it reviews efforts to establish and strengthen evaluation and assessment processes at the national level through the National System for Education Evaluation (SNEE), underpinned by the INEE and the SEP; the contribution that the National Plan for Learning Assessment (PLANEA) can make in bringing the benefits of standardised student assessment results into the classroom; and the substantial progress Mexico has made in gathering data and information to guide policy makers, educational actors and the general public in education policy. The chapter concludes with recommendations for future policy development to enhance the contribution of evaluation and assessment practices to student learning and the operation of schools.

The statistical data for Israel are supplied by and under the responsibility of the relevant Israeli authorities. The use of such data by the OECD is without prejudice to the status of the Golan Heights, East Jerusalem and Israeli settlements in the West Bank under the terms of international law.

Introduction

Assessment and evaluation are increasingly used by education actors such as governments, education policy makers, school leaders and teachers as tools for better understanding how well students are learning, for providing information to parents and society at large about educational performance and for improving school, school leadership and teaching practices. Furthermore, results from assessment and evaluation practices are becoming critical to establishing how well school systems are performing and for providing feedback, all with the goal of helping all students to do better (OECD, 2013[1]).

In Mexico, evaluation and assessment practices have evolved to play an important role in supporting quality and equity in education. One of the major aspects of recent education reforms in Mexico (2012‑13) was providing autonomy to the National Institute for Education Evaluation (Instituto Nacional para la Evaluación de la Educación, INEE), created in 2002, and entrusting it with the responsibility of co‑ordinating the national system of education evaluation (SNEE). This is a major institutional effort to support the provision of quality with equity in education, together with the National Plan for Learning Assessment (Plan Nacional para la Evaluación de los Aprendizajes, PLANEA) as a crucial instrument to achieve this goal.

Mexico has also put considerable effort into reinforcing a series of instruments (the school improvement route) and bodies (School Technical Council, CTE) to support the connection between policymaking at the macro level and implementation and adjustment at the school level. This chapter reviews the development of current evaluation and assessment practices in Mexico. It:

Gives recognition to the continuous efforts to consolidate the vision that evaluation and assessment mechanisms are essential inputs to improve quality and equity in education, not an end in themselves. These efforts include the provision of evaluation and assessment information to guide the work and decisions of policy makers, school leaders, teachers, students, families, researchers and stakeholders.

Recognises that PLANEA is a major step towards reinforcing the role of standardised assessment instruments for students as a tool to improve learning and that more resources should be invested to make sure teachers use all the materials derived from PLANEA to improve student learning and adapt it to student needs.

Calls for actions to promote and use system evaluation information in education to identify disadvantaged students and to provide guidance in the construction of policy instruments to support them better.

Identifies the need to invest more in the development of, and capacity for, evaluation and assessment practices at the state and school levels. It is particularly important to promote self-evaluation in schools through instruments and bodies such as the school improvement route (Ruta de Mejora Escolar) and CTEs, as supported by the SATE (Servicio de Asistencia Técnica a la Escuela, Technical Support Service for the School) and Zone Technical Councils (Consejo Técnico de Zona, CTZs).

Recognises the need to continue enriching the knowledge and management tools in the education system to inform and support the activities of policy makers, educational authorities at federal and state levels, supervisors, school leaders and teachers through services such as the System of Educational Information and Management (Sistema de Información y Gestión Educativa, SIGED), whose potential is enormous, especially at the school level.

The chapter is divided into three sections. Following this introduction, the first section discusses the main characteristics of the evaluation and assessment system in Mexico, while the second section assesses its recent performance. The chapter concludes with a section that reflects on remaining challenges and policy recommendations. This chapter does not cover teacher appraisal, which is analysed in Chapter 4 on teachers and schools.

Policy issues: Evaluation and assessment practices to support quality and equity in education

The formative value of standardised student assessment

Student assessment refers to processes in which evidence of learning is collected in a planned and systematic way in order to make a judgment about student learning. This information can also shed light on individual school performance and the school system in general when data and information are considered at the aggregated level (OECD, 2013[1]). In general, assessments can be distinguished in terms of their summative and formative role: summative assessment aims to record, mark or certify learning achievements. On the other hand, formative assessment aims to identify aspects of learning and is developed in order to deepen and shape subsequent learning (OECD, 2013[1]). At the same time, there are also distinctions between internal or school-based assessment and standardised (external) assessment. Internal assessment is designed and marked by the students’ own teachers, often in collaboration with the students themselves, and implemented as part of regular classroom instruction, within lessons or at the end of a teaching unit, year level or educational cycle. Standardised assessment is designed and marked outside individual schools to ensure that the questions, conditions for administering, scoring procedures and interpretations are consistent and comparable among students (Popham, 1999[2]).

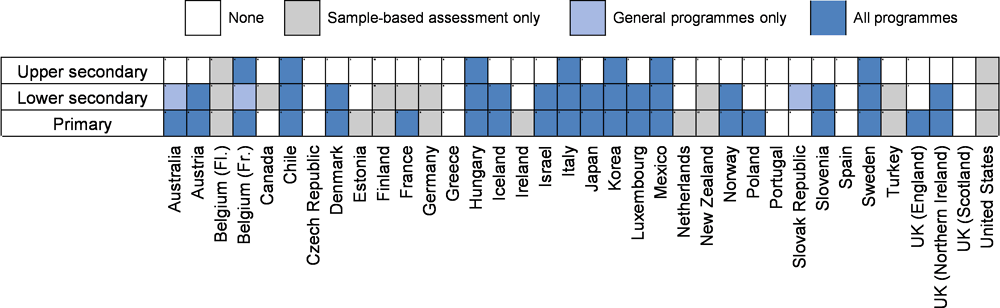

Positive effects of using student results from large-scale assessments to inform teaching may include greater differentiation of instruction, greater collaboration among colleagues, an increased sense of efficacy and improved identification of students’ learning needs (van Barneveld, 2008[3]). At the same time, these benefits depend on a number of factors, including providing the results in a timely manner for teachers to be able to use them with their students (Wiliam et al., 2004[4]) and offering the support necessary for them to understand what the results say about student learning needs and what strategies teachers can adopt to help their students. Centralised assessments are indeed used for several purposes, including monitoring, which limits the depth of the diagnosis that can be made on individual student learning (OECD, 2013[1]). As shown in Figure 5.1, a few countries use centralised student assessments with no stakes in all three cycles of primary and secondary education (Mexico included).

Overall, there is some evidence that data from large-scale assessments are being used successfully to identify students’ strengths and weaknesses, to change regular classroom practice or to make decisions about resource allocation (Anderson, MacDonald and Sinnemann, 2004[5]; Shepard and Cutts-Dougherty, 1991[6]). However, they need to be embedded in broader, more comprehensive assessment systems that include a range of summative and formative assessments, curriculum tasks, instructional tools and professional development that helps teachers understand which assessment information is most appropriate for a particular purpose (OECD, 2013[1]).

Figure 5.1. Existence of standardised central assessments with no stakes for students, 2012

Note: Before 2012/13, Portugal had national assessments in Portuguese and mathematics.

Source: OECD (2013[1]), Synergies for Better Learning: An International Perspective on Evaluation and Assessment, http://dx.doi.org/10.1787/9789264190658-en.

Reinforcing evaluation and assessment institutions

Mexico has made significant progress in building a range of evaluation and assessment measures to ensure accountability in education and provide the basis for school and student learning improvement. These measures include the assessment of students, the appraisal of teachers (reviewed in the previous chapter) and school leaders, and the evaluation of schools and the school system.

A fundamental part of the Mexican effort to make sure that the constitutional right to education is being guaranteed to all students was the development of the national system of education evaluation (Sistema Nacional de Evaluación Educativa, SNEE) under the co‑ordination of the INEE. Following the Law of the National Institute for Education Evaluation (2013), the existing National Institute for Education Evaluation (INEE) was transformed into an autonomous body with the functions of co‑ordinating the National System for Education Evaluation. It was given the mandate to assess the quality, performance and results of the national education system of basic and upper secondary education; and the function to design and conduct measurements and evaluations of the components, processes and outcomes related to the attributes of learners, teachers and school authorities, as well as the characteristics of institutions, policies and educational programmes.

Governance

The Secretariat of Public Education (Secretaría de Educación Pública, SEP) and the INEE became responsible at the federal level for the development, implementation and co‑ordination of evaluation in the education system. The INEE, in particular, co‑ordinates the National System for Educational Evaluation (Sistema Nacional de Evaluación Educativa, SNEE). Evaluation and assessment at the higher education level are carried out by the National Assessment Centre for Higher Education (CENEVAL), which administers standard exams for entry into a large part of undergraduate tertiary education and exams to assess qualifications at the completion stage of higher education courses (OECD, 2018[7]).

External monitoring of schools is undertaken at the state level by the supervision systems of individual states. There are 14 197 supervisors in Mexico, each working with between 6 and 50 schools and responsible for attending to and monitoring the educational service these schools provide. Around 80% of primary schools and 50% of lower secondary schools are inspected annually, with the main focus of inspections on the monitoring of compliance with rules and regulations. The results of inspections are not publicly available and not widely shared among educational authorities. According to PISA 2015, schools in Mexico are slightly more likely than average to conduct a self-evaluation of their school (93.9% compared to the OECD average of 87.1%) and to have external school evaluations (74.7% compared to the OECD average of 63.2%) (OECD, 2018[7]).

Main components of the assessment and evaluation system in basic education in Mexico

Student assessment

Student performance in basic education in Mexico is assessed by a wide range of instruments, ranging from national standardised assessments to continuous formative assessment in the classroom. Teachers take the main responsibility for student assessment. All students are assessed in an ongoing manner throughout the school year in each curriculum area or subject.

In Mexico, national student performance assessment is primarily carried out through the National Plan for Learning Assessment (Plan Nacional para la Evaluación de los Aprendizajes, PLANEA). PLANEA was developed as a centralised assessment that can be used for formative purposes, with one modality for educational authorities (ELSEN) and another for schools (ELCE). PLANEA replaced the previous school and student assessments, Evaluación Nacional del Logro Académico en Centros Escolares (ENLACE) and Examen para la Calidad y el Logro Educativo (EXCALE). It was first implemented in 2015 in two domains (language and communication and mathematics), and a second round took place in 2016. As a formative assessment, it aims to provide information on how students are progressing in the system. Unlike its predecessor ENLACE, PLANEA is not intended for ranking schools or for other formal consequences for students, teachers or schools.

PLANEA combines three distinct standardised assessments and evaluations1 that monitor student learning outcomes at different levels of the education system, including national and subnational data and information on schools and individual students. These three modalities, reviewed separately in the following sections, are:

ELSEN: a sample-based national system-level evaluation (Evaluación del Logro Referida al Sistema Educativo Nacional).

ELCE: a sample-based national school-level evaluation (Evaluación del Logro referida a los Centros Escolares).

EDC: a diagnostic census-based assessment (Evaluación Diagnóstica Censal).

EDC, which is a formative census-based standardised student assessment in Year 4, is implemented every year and covers the subjects of language and communication and mathematics. At the student level, results are used formatively to inform subsequent teaching strategies. It is implemented by schools and teachers at the beginning of the school year. Results are disclosed just to the schools themselves.

EDC has been developed to offer information for the improvement of teaching processes in schools. In particular, its design gives classroom teachers an instrument that facilitates the diagnosis of their students at the beginning of the school year, because it provides information about the knowledge and skills that students should have acquired during previous courses. This information should allow teachers to:

Adjust pedagogical interventions to the characteristics and needs of students.

Detect those students who will require more attention to conclude the course successfully.

Provide personalised feedback to students.

Establish a baseline for the school community to identify the effectiveness of pedagogical interventions.

EDC is meant to offer Year 4 teachers an additional standardised instrument (besides the pedagogical material teachers might already have in their classes) with three important characteristics:

The selection of contents for the assessment is carried out collegially by specialists in the basic education curriculum, so the instruments are balanced in terms of the diversity of issues considered.

Teachers from different classrooms, and even from different schools, have elements in common for reflection and dialogue between peers.

Together with the results of PLANEA, teachers are provided with a guide that includes the purposes of this assessment and explains how to apply it and analyse the results; the intention is to encourage broad reflection on what the teacher can do given students’ initial knowledge level at the beginning of the course.

This component of PLANEA is operated by the SEP while the INEE provides tables of contents, curricular analysis, the set of questions with its psychometric assessment, technical support throughout its development, and when appropriate, (technical) approval of the results. Both the SEP and the INEE analyse and select the most appropriate questions for the purposes of this version of the assessment and then the SEP distributes the instruments to Year 4 primary school teachers at the beginning of the school year. EDC is jointly designed by the SEP and the INEE, and implemented and marked by teachers at the classroom level.

Furthermore, EDC assessment is a part of the pedagogical activity carried out by the teacher, and the results obtained are meant to be used only within the school; they are not disclosed outside the school and are not used for accountability. EDC started in the 2015/16 school year with language and communication, and mathematics assessments. The SEP is in charge of offering guidelines to mark and interpret the results, and optional support software for the generation of reports for the teacher and school community (INEE, 2015[8]).

School evaluation and system evaluation2

In terms of school evaluation, school-level aggregated data, including results in PLANEA assessments, provide general information on student performance against state and national averages. Schools are encouraged to engage in self-evaluation, while advice and instruments are provided nationally. In particular, Mexico has put in place the Ruta de Mejora Escolar (school improvement route) mechanism, which is a set of processes meant to guide the School Technical Council (CTE) in its tasks of managing school improvement. This route comprises five steps: i) planning – the phase in which the CTE makes a diagnosis of the school situation and identifies priorities for improvement; ii) implementation – the operationalisation of the actions to achieve the priorities for improvement identified by the CTE in the planning phase; iii) monitoring – the set of actions the CTE and teaching staff agree upon to make sure there is adequate progress towards the identified priorities for improvement; iv) evaluation – the systematic process of collecting data to assess whether the identified priorities have been achieved; v) accountability – the process in which the school leader and teaching staff report to the school community on the results obtained in applying the Ruta de Mejora Escolar.

At the same time, there is a long-established tradition of oversight of school work by supervisors and other personnel external to the school, and their role has been largely associated with ensuring schools’ compliance with regulations and other administrative tasks. More recently, however, they have been expected to play a more significant role in both pedagogical and administrative support for schools. For example, supervisors are expected to offer much more pedagogical support to schools through the recently created SATE (Servicio de Asistencia Técnica a la Escuela, Technical Support Service for the School) and/or to accompany the process of the school improvement route.

For school and system evaluation, PLANEA includes two instruments: ELSEN and ELCE. ELSEN (Evaluación del Logro Referida al Sistema Educativo Nacional, Evaluation of the National System) is a sample-based standardised student assessment used for national (and subnational) monitoring of student learning outcomes. Results are made public at the national and subnational levels. It covers the last year of pre-school and Years 6, 9 and 12 (the final years of primary, lower secondary and upper secondary education respectively). It initially covered language and communication and mathematics and, from 2017, other subjects such as natural sciences and civic and ethics education. It is implemented every two years because this frequency is considered sufficient to monitor changes in the educational system. This instrument has the specific purpose of offering the education system information to guide policy design and support accountability at the system and subsystem levels. The assessment is designed, applied and analysed by the INEE.

ELCE (Evaluación del Logro Referida a los Centros Escolares, Evaluation of Schools) is a standardised student assessment that covers all schools in the country, with results made public at the school level (OECD, 2018[7]). The SEP, in co‑ordination with the state education authorities, applies ELCE each year to students in schools all over the country in the final years of primary, lower secondary and upper secondary (Years 6, 9 and 12), also covering language and communication and mathematics. ELCE’s specific purpose is to offer schools information to identify areas that need improvement and plan strategies to address them accordingly. The results provide information on student learning achievements in a school over six years (in the case of primary schools). Specifically: i) they indicate what students have and have not learned; ii) they help to identify the curricular lines that the school community must strengthen from Year 1 of primary education; and iii) they provide elements to identify actions to achieve the expected learning. In addition, the information obtained from these assessments is expected to encourage the School Technical Council (CTE) and supervisors to focus their efforts and attention more on student learning. ELCE is also designed to facilitate the monitoring of each school’s effectiveness in reaching its goals over time; it allows each school to compare itself with the aggregate results of similar institutions and promotes collaboration between them to achieve better results.

Connection between system and school evaluations

ELSEN and ELCE are aligned: their design is based on the same curricular analysis and they share contents, so both instruments report information about exactly the same type of learning outcomes. Moreover, both instruments use the same measurement units (scales), which allows analyses at both the aggregated (national) level and that of individual schools. It should be noted that, as a requirement of the application method for each instrument, ELCE assessments are shorter than those of ELSEN. In addition, ELCE is not applied in schools where the INEE applies ELSEN; this has no impact on schools because they receive their results in the same format regardless of the instrument.

ELCE is applied by the educational authorities and its results are jointly analysed by the INEE and education authorities. The SEP distributes results to each school, accompanied by elements that allow their proper contextualisation. In addition, students who are not selected in the sample have the option of taking the exam on line. Table 5.1 presents the distribution of roles in the design, application, analysis and use of the three different versions of PLANEA.

Table 5.1. Distribution of roles for PLANEA

| | System evaluation: PLANEA SEN (ELSEN) | School evaluation: PLANEA Schools (ELCE) | Student assessment: (EDC) |
|---|---|---|---|
| Who develops the instrument | INEE | INEE | SEP and INEE |
| Who applies the instrument | INEE | SEP | Teachers |
| Who marks and analyses the results | INEE | SEP and INEE | Teachers |
| Who uses the results (mainly) | National and state authorities, the general public, INEE | Schools | Teachers (classroom level) and schools |

EDC: Evaluación Diagnóstica Censal, Diagnostic Census Assessment.

SEN: Sistema Educativo Nacional, National Education System.

Source: OECD elaboration based on INEE (2015[8]), Plan Nacional para la Evaluación de los Aprendizajes (PLANEA), Instituto Nacional para la Evaluación Educativa (INEE), Mexico City.

Basic conditions of schools

The law established that the INEE design and conduct evaluations of the components, processes or outcomes of the national education system as well as of the attributes of learners, teachers and school authorities, and the characteristics of institutions, policies and educational programmes. To undertake this mandate, the INEE organises and implements the evaluation of basic conditions for teaching and learning (Evaluación de Condiciones Básicas para la Enseñanza y el Aprendizaje, ECEA) to generate relevant information to support decisions aimed at improving the conditions in which the country's schools operate (INEE, 2016[9]).

The conceptual model of ECEA defines the basic conditions necessary for the school to operate properly. It synthesises Mexican norms, as well as recommendations from different theoretical and research traditions, and establishes the standards or technical criteria for school operation in six domains: i) physical infrastructure; ii) furniture and basic equipment for teaching and learning; iii) educational support material; iv) staff working in schools; v) learning management; and vi) school organisation.

The ECEA is conducted on a representative sample of all schools in the country and takes place every four years. In the 2014 round, the respondents to the questionnaire were: the school leaders; teachers from Years 4, 5 and 6; students from Years 4, 5 and 6; and one member of the board of the parents’ association (INEE, 2018[10]).

School and system management

The School Technical Council (CTE) is a central piece in school management and leadership. The CTE is a school body composed of the leadership and teaching staff, including all the educational actors directly involved in the teaching and learning processes in the school. It is the responsibility of the CTE to analyse and make decisions to support and improve teaching practices so that students achieve the expected learning outcomes and the school fulfils its mission. In other words, the CTE should make sure that education policy at the school level truly reflects the mandate given by the constitution. In addition, the Social Participation Councils (Consejos Escolares de Participación Social en la Educación, CEPSE) include parent representatives and community spokespersons; and state education authorities should collaborate in school improvement decision making and implementation. One of the fundamental actors in the provision of support for teachers in the school is the Pedagogic and Technical Advisor, known by the acronym ATP. This post should be occupied by teaching staff who have complied with the requirements established by the General Law of the Teacher Professional Service. The ATP has the responsibility to provide support to teachers on demand and to play an active role in promoting the improvement of education quality in schools, based on their pedagogical and technical functions.

One major change introduced in Mexico to improve pedagogical and management support for schools has been the creation of the SATE, also discussed in other sections of this report. The general guidelines for the operation of this service were issued in 2017. The main goals of the SATE are to: i) improve teaching practices; ii) identify the training needs of teaching and administrative staff; iii) reinforce the operation and organisation of schools (through the use of the school improvement route mechanism previously mentioned); iv) support teachers in the practice of internal formative assessments; v) support teachers in the comprehension and use of standardised assessments for pedagogical purposes in the classroom; and vi) deliver counselling and technical pedagogical support for schools aiming at the improvement of student learning, teaching and school leadership practices, and school organisation and operation.

The Zone Technical Council (Consejo Técnico de Zona, CTZ) is an intermediate step between schools and state government authorities. The CTZ aims to be a collegial body, offering a space for analysis, deliberation and decision making regarding the school zone’s educational matters. It constitutes a mechanism to support professional teacher development and school improvement. Its tasks consist of undertaking collaborative work between school leaders and supervisors to review educational and learning outcomes and professional practices (both teaching and managerial activities), in order to make decisions and establish agreements to improve schools.

Information for decision making and administration

Between September and November 2013, the INEGI (National Institute of Statistics and Geography) conducted a census called the CEMABE (Census of Schools, Teachers and Students of Basic and Special Education). This census collected information about:

teachers and students from pre-school, primary and secondary schools

schools, teachers and students in special education services

teachers who carry out their activities in administrative offices, supervision and teacher centres, among others.

At its completion, the census obtained information on 273 317 schools, 1 987 511 individuals working in these schools and their 23 667 973 students. This effort created a database that describes the Mexican basic education system entirely and serves as a baseline for any educational diagnosis (Box 5.1 presents the CEMABE’s basic results).

Box 5.1. Results of the Census of Schools, Teachers and Students in Basic and Special Education (CEMABE), 2013

Some of the basic results of the CEMABE (2013) indicate that:

Of the 236 973 registered schools, 207 682 or 87.6% are basic and special education schools and 12.4% are other types of work centres.

Of the total number of schools surveyed, 86.4% are public and 13.6% are private.

By school level, the distribution of schools was as follows: pre‑school, 40.1%; primary, 42.5%; secondary school, 16.7%; and multi-service centres, 0.7%.

In terms of basic services, 51.6% of public schools have drainage, 69% a supply of drinking water, 87.2% toilets and 88.8% electricity. By contrast, almost 100% of private schools have these services.

The total number of students in registered schools was 23 562 183. Of these, 18.3% belong to pre‑school, 55.8% to primary school, 25.6% to secondary school and 0.3% to multi-service centres.

Registered staff reached the number of 1 949 105 individuals. Of these, 88.1% perform their jobs in basic education schools, 2% in special education schools and 9.9% in other types of work centres.

The number of teaching staff in front of a group was initially estimated at 1 128 319 individuals. However, because this count refers to individual classes rather than teachers, it overestimates the total; the number of people who work in front of a group is estimated at approximately 978 118.

Source: FLACSO (2014[11]), Presenta INEGI Resultados del Censo de Escuelas, Maestros y Alumnos de Educación Básica y Especial, http://www.flacso.edu.mx/noticias/Presenta-INEGI-resultados-del-Censo-de-Escuelas-Maestros-y-Alumnos-de-Educacion-Basica-y (accessed on 27 August 2018).

The CEMABE was the first step in the construction of the SIGED (System of Educational Information and Management) created by the SEP. The potential use of this instrument should not be underestimated. Once completed, this system can produce, on a single platform, information that sheds light on many aspects of the educational system. It can become an essential tool for policymaking in education at the macro level but also for guiding decisions at the school level. In addition to administrative data, it will be possible to follow the school trajectory and records of each student in the Mexican educational system. In other words, education authorities could know each student’s grade and academic performance (SEP, 2015[12]).

In addition to the SIGED, and shortly after the educational reform in 2012‑13, the INEE created an Integral System of Evaluation Results (Sistema Integral de Resultados de las Evaluaciones, SIRE), a strategic information system that collects, stores and organises, on a single platform, information on evaluation results and on the physical, sociodemographic and economic context of the student population, as well as other SNEE information. The objectives of the SIRE are to: i) strengthen the capacities for evaluating the quality, performance and results of the National Education System (SEN) in compulsory education; ii) support the implementation of the National Policy on Educational Evaluation (PNEE) within the framework of the National System of Educational Evaluation (SNEE); and iii) disseminate data and information about the results of educational evaluations.

Assessment

Mexico has put considerable effort into creating and reinforcing evaluation and assessment mechanisms that cover all areas of the education system. These efforts can be analysed against the general directions that the OECD (2013[1]) has identified for the design of evaluation and assessment practices in education policy. They include the following:

Take a holistic approach: The various components of assessment and evaluation should form a coherent whole. This can generate synergies between components, avoid duplications and encourage consistency of objectives; Mexico has achieved considerable progress on this front with the organisation and operation of the National System of Educational Evaluation (SNEE).

Align evaluation and assessment with educational goals: Evaluation and assessment should serve and advance educational goals and student learning objectives. This involves aspects such as alignment with the principles embedded in educational goals, designing fit-for-purpose evaluations and assessments, and ensuring a clear understanding of educational goals by school agents; in this regard, PLANEA instruments (replacing EXCALE) are also a significant step taken by Mexico to reinforce formative student assessments.

Focus on improving classroom practices: The point of evaluation and assessment is to improve classroom practice and student learning. With this in mind, all types of evaluation and assessment should have educational value and practical benefits for those who participate in them, especially students and teachers. The support material accompanying the EDC version of PLANEA (Evaluación Diagnóstica Censal) is a strong contribution to encourage the proper use of the results of standardised formative assessment in the classroom to improve student learning.

Avoid distortions: Because of their role in providing accountability, evaluation and assessment systems can distort how and what students are taught. For example, if teachers are judged largely on results from standardised student tests, they may “teach to the test”, focusing solely on skills that are tested and giving less attention to students’ wider developmental and educational needs. It is important to minimise these unwanted side-effects by, for example, using a broader range of approaches to evaluate the performance of schools and teachers. This is one of the areas where more effort should be made because, until now, there has been limited knowledge regarding the extent to which standardised formative assessments are effectively and adequately used in Mexican classrooms.

Put students at the centre: The fundamental purpose of evaluation and assessment is to improve student learning and therefore students should be placed at the centre. They should be fully engaged with their learning and empowered to assess their own progress (which is also a key skill for lifelong learning). It is important, too, to monitor broader learning outcomes, including the development of critical thinking, social competencies, engagement with learning and overall well-being. These are not amenable to easy measurement, which is also true of the wide range of factors that shape student learning outcomes. Thus, performance measures should be broad, not narrow, drawing on both quantitative and qualitative data as well as high-quality analysis. This is also an area where Mexico has made important progress, not just in terms of implementing solid standardised student assessments but also with the implementation of the new curriculum and associated marking scales (for more information please consult the chapter on the new educational model in this report).

Build capacity at all levels: Creating an effective evaluation and assessment framework requires capacity development at all levels of the education system. For example, teachers may need training in the use of formative assessment, school officials may need to upgrade their skills in managing data, and principals – who often focus mainly on administrative tasks – may need to reinforce their pedagogical leadership skills. In addition, a centralised effort may be needed to develop a knowledge base, tools and guidelines to assist evaluation and assessment activities. This is a second area that should be reinforced in the Mexican system: while policy design quality and expertise are strong at the federal level, there is strong variation at the state and school levels. As a result of these asymmetrical capacities, the learning outcomes of students might vary substantially depending on their geographic location.

Manage local needs: Evaluation and assessment frameworks need to find the right balance between consistently implementing central education goals and adapting to the particular needs of regions, districts and schools. This can involve setting down national parameters but allowing flexible approaches within these to meet local needs. This dimension is closely connected with capacity, and in this regard Mexico has achieved mixed results. On the one hand, the education reform has made an effort to provide more flexibility to schools in some aspects (like curriculum design). On the other hand, autonomy, as well as the resources and expertise to exercise it, remains insufficient at present (Chapter 2 reviews financial resources available to schools in Mexico).

Design successfully, build consensus: To be designed successfully, evaluation and assessment frameworks should draw on informed policy diagnosis and best practice, which may require the use of pilots and experimentation. To be implemented successfully, a substantial effort should be made to build consensus among all stakeholders, who are more likely to accept change if they understand its rationale and potential usefulness. In this respect, the implementation and development of evaluation and assessment practices in Mexico (especially standardised assessments) have been a great achievement. These are valuable to improve student learning in the classroom while offering solid information about the performance of schools and the system as a whole.

Overall, there is a consensus that many of the efforts made by Mexico in recent years in the area of evaluation and assessment are positive and that much of the current practice takes a holistic approach, looking to align assessment and evaluation practices with student learning goals and the improvement of classroom practices. This effort also puts the student at the centre (with formative assessment). At the same time, the evaluation and assessment system still needs to build local capacity to make sure that all the instruments are properly used at school level, and that all the information generated is also used to inform policy design and implementation to improve student learning. The following sections reflect on the main contributions of the current system.

An autonomous (and collaborative) evaluation and assessment system

Thanks to the collaboration between the INEE, the SEP and state authorities, Mexico has designed a complex and powerful evaluation and assessment system in education – including assessment for students, appraisal for teachers as well as evaluations for the system’s policies and processes in place.

The National Institute for the Evaluation of Education (INEE) was created by presidential decree on 8 August 2002. Before being constituted as an autonomous body, the INEE operated from 2002 to 2012 as a decentralised body of the Secretariat of Public Education and then, from 2012 to 2013, as a non-sectoral decentralised body. As established by the Law of the National Institute for the Evaluation of Education, the INEE became an autonomous public body with a legal identity and its own assets. In this phase, the INEE's main task is to evaluate the quality, performance and results of the National Education System in pre-school, primary, secondary and high school education, that is to say all the levels of compulsory education in Mexico. To comply with this mandate, the INEE undertakes an ambitious agenda that covers three main areas: i) designing performance measurements for all the components, processes or outcomes of the education system; ii) issuing the guidelines to be followed by federal and state educational authorities to carry out the evaluation functions allocated to them; and iii) generating and disseminating information and, through its power to issue guidelines, helping to build mechanisms and policies meant to contribute to improving quality and equity in education.

In terms of governance and building capacity, the INEE co‑ordinates the National System of Educational Evaluation (SNEE). In a short time, the INEE built all the legal architecture of the SNEE, and facilitated its operation. Among other initiatives, the INEE developed the criteria for having representation at the SNEE from all the relevant educational actors and organised the SNEE Conference (Conferencia del SNEE). The latter gave a structure for the various education authorities to exchange information and experience related to education evaluation. These sessions constitute crucial spaces for discussion and analysis, an outcome of which was the elaboration of the National Policy on Education Evaluation (Política Nacional de Evaluación Educativa, PNEE) (Miranda López, 2016[13]).

The content of the PNEE (National Policy on Education Evaluation) is prepared in a collaborative manner (Box 5.2). All education authorities and the INEE’s Board of Directors discussed and agreed on the policy through a series of Regional Dialogues for the Elaboration of the PNEE (Diálogos Regionales para la Construcción del PNEE). In this regard, it is worth noting that the INEE has supported the different states in the construction of their specific evaluation strategies. This is extremely important since subnational authorities are ultimately in charge of conducting evaluations. The State Programmes for Educational Evaluation and Improvement (Programas Estatales de Evaluación y Mejora Educativa, PEEME) are the reference documents for each state to determine its initiatives in evaluation and how they contribute to the improvement of their compulsory education system. In particular, the evaluation and improvement programmes of the PEEME are elaborated according to a diagnosis of the most pressing issues that challenge the achievement of more education equity and quality in each state.

In general, the INEE, in collaboration with the SEP, has been successfully fulfilling its responsibilities as the co‑ordinator and driving force of the SNEE. Among the evaluations developed under INEE supervision, three are crucial to contribute to enhancing learning for all: the national student assessment (Plan Nacional para la Evaluación de los Aprendizajes, PLANEA), the evaluation of basic conditions for teaching and learning (Evaluación de Condiciones Básicas para la Enseñanza y el Aprendizaje, ECEA) and the new teacher appraisal system (a topic that can be found in Chapter 4 on teachers and schools).

A high-quality standardised instrument for formative student assessment, system evaluation and school monitoring: PLANEA

Mexico has made remarkable progress in establishing standardised student assessment mechanisms. The INEE designed and co‑ordinated the implementation of the National Plan for Learning Assessment (Plan Nacional para la Evaluación de los Aprendizajes, PLANEA) in collaboration with the SEP and state authorities. PLANEA monitors student learning outcomes at different levels of the education system, including national and subnational data and information on schools and individual students. PLANEA replaces the previous national assessment called ENLACE.

The National Plan for Learning Assessment (PLANEA) was put into operation by the Secretariat of Public Education (SEP), in co‑ordination with the National Institute for the Evaluation of Education (INEE) and the educational authorities of the states, from the 2014/15 school year. Its main purpose is to evaluate the performance of the National Education System (SEN) regarding learning in compulsory education and provide feedback to all school communities in the country of primary, secondary and upper secondary education, with respect to the learning achieved by their students in two areas of competency: language and communication and mathematics.

PLANEA classifies students’ performance into four levels. Level 1 performance reflects insufficient knowledge of the subject tested and requires focused pedagogical intervention to give these students the opportunity to learn what they have not yet learned. Level 2 performance indicates that students have developed only some elementary knowledge and skills of the subjects tested and therefore intervention is needed for improvement. Those students performing at Level 3 have a satisfactory knowledge of the subjects tested. Finally, Level 4 performance indicates an advanced knowledge level and students in this category might be exposed to more challenging activities (more often than other students).

An especially valuable feature of PLANEA is that all its tests are produced with items calibrated to a single measurement scale. Thus, the pedagogical interventions suggested by ELSEN, ELCE and EDC are based on the same learning objectives and can therefore be harmonised pedagogically. This feature, a product of the reform of the SNEE, is a particularly significant contribution of the new mechanisms. It should be noted that the scales used by EXCALE and ENLACE (former national student assessments) were not the same; therefore, the pedagogical interpretation of the results was not always congruent. The harmonisation of assessment scales is a significant step ahead and it is expected to make it easier for teachers and schools to use PLANEA results as a complementary tool to inform their pedagogical practice.

In this regard, the INEE is committed to encouraging this practice and making sure the educational community understands how to link PLANEA to their regular pedagogical approaches. The institute publishes a calendar of the dates on which the assessments will be carried out, and the results are disclosed online (through the SIGED) and as an institute publication. Furthermore, the SEP and the INEE publish material aimed at informing teachers, school leaders and sector supervisors about the structure and specifications of PLANEA. For instance, the SEP publishes every year a handbook for the implementation and analysis of EDC, also known as “PLANEA Diagnóstica” (see, for instance, the 2016 edition of the SEP/INEE manual (SEP/INEE, 2016[14])). The results are also sent to each school. Therefore, in the years in which PLANEA is carried out, every school knows which learning objectives in reading and mathematics their students have reached. This is crucial information for the design of pedagogical interventions aimed at improving student learning.

Recent changes to PLANEA

In May 2018, the INEE issued new guidelines for PLANEA, which replace the ones from December 2015. In this document only two versions of the standardised instrument are considered:

PLANEA related to the Compulsory Education System (PLANEA SEN). Co‑ordinated by the INEE with the purpose of providing information to federal and state education authorities, decentralised agencies and the general public on the achievement of key learning acquired by students of the National Education System (SEN).

PLANEA for school communities (PLANEA Schools). Co‑ordinated by the SEP with the purpose of offering information to teachers and school leaders on the achievement of key learning of their students.

Both modalities are complementary. They are applied in the same academic years, to students concluding the sixth grade of primary, the third grade of secondary and the last year of upper secondary education, and under very similar protocols. The substantial difference is that PLANEA SEN includes several application formats, in a matrix design, with the intention of evaluating an extensive set of learnings, while PLANEA Schools includes only one application format, which is common to all students evaluated. PLANEA SEN offers national, state and school stratified information from representative samples; on the other hand, PLANEA Schools provides information at the educational level, when it is applied in all the schools to a sample of students.

Items used in these two versions of PLANEA are multiple choice and are designed, marked and analysed by the INEE, based on the identification of key learning aspects established in corresponding plans and study programmes as well as other curricular references (such as textbooks, materials for teachers, etc.). As of 2018, the evaluations of PLANEA SEN will alternately add Natural Sciences and Civic Education and Ethics. The results of the evaluation are presented in two formats:

On a scale of 200 to 800 points, with an average of 500 points in the case of PLANEA SEN.

Through four levels of achievement for PLANEA SEN and PLANEA Schools. These levels go from 1 to 4 in progressive order, with 1 as the lowest level and 4 as the highest.

Despite this change in name, PLANEA SEN and PLANEA Schools still offer the same differences and improvements relative to the previous assessment, ENLACE (see Table 5.1 for details about the difference between the two mechanisms). Finally, it should be noted that, despite PLANEA SEN and PLANEA Schools being the mechanisms in place since May 2018, the SEP will continue developing the Diagnostic Census Assessment (EDC), which for 2019 is scheduled to be applied to students starting 3rd and 5th primary education years of all schools in the country.

Table 5.2. Main differences between ENLACE and PLANEA for Schools

| | ENLACE | PLANEA for Schools (including SEN version when relevant) |
|---|---|---|
| Target population | Students who conclude: 3rd, 4th, 5th and 6th years of primary education; 1st, 2nd and 3rd years of lower secondary education; last year of upper secondary education | Students who conclude: 6th year of primary education; 3rd year of lower secondary education; last year of upper secondary education |
| Application coverage | School census; student census | School census; student sample |
| Periodicity | Annual | Triennial |
| Subjects tested | Spanish; mathematics; a third, alternating subject (civics, history and sciences) | Language and communication; mathematics; in PLANEA SEN, an alternating subject (natural sciences and civic and ethical training) |
| Test applied | A common test for all students | Six tests applied to the national sample of schools (PLANEA SEN); a test common to all students in the rest of the schools |
| Application in schools | An application co‑ordinator external to the school; school teachers administer the test to a group other than the one they teach | An examiner external to the school for each group |
| Population tested to obtain national and subnational results | All students assessed | National sample of schools (PLANEA SEN) |
| Rating method | Item response theory, three parameters per item | Item response theory, one parameter per item (Rasch) |
| Scale of the results | 4 levels; scale of 200-800 points, except in upper secondary education | 4 levels; scale of 200-800 points |
| Release of results | Three months after the application | Five to seven months after the application |

Source: OECD elaboration based on information provided by the SEP.
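
For readers less familiar with the rating methods listed in the table, the textbook forms of the two item response models can be written as follows. This is an illustrative sketch only: the notation (ability $\theta_j$ of student $j$; discrimination $a_i$, difficulty $b_i$ and guessing parameter $c_i$ of item $i$) is standard but is an assumption here, as the source does not specify the exact parameterisation used for ENLACE or PLANEA.

$$
\text{Three-parameter model:}\qquad P(X_{ij}=1\mid\theta_j) \;=\; c_i + (1-c_i)\,\frac{\exp\!\big(a_i(\theta_j-b_i)\big)}{1+\exp\!\big(a_i(\theta_j-b_i)\big)}
$$

$$
\text{One-parameter (Rasch) model:}\qquad P(X_{ij}=1\mid\theta_j) \;=\; \frac{\exp(\theta_j-b_i)}{1+\exp(\theta_j-b_i)}
$$

The Rasch model is the special case of the three-parameter model with $a_i = 1$ and $c_i = 0$ for every item, so each item is described by its difficulty alone. Reported scores on a 200-800 scale with an average of 500 would typically be obtained from a linear transformation of the estimated $\theta_j$; the constants of that transformation are not given in the source.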

Enriching knowledge for the administration of the education system

A significant amount of empirical evidence has been generated in the five years since the establishment of the SNEE, both through the INEE’s evaluations and assessments and through the systematisation of administrative data. In addition, the contribution of the CEMABE has been central to significantly increasing the amount of information on the system. Having such detailed data at hand allows the INEE, the SEP and state authorities, in principle, to produce better informed and targeted policies and regulations.

In addition, the INEE organises and implements the evaluation of basic conditions for teaching and learning, ECEA (Evaluación de Condiciones Básicas para la Enseñanza y el Aprendizaje). Its purpose is to generate the information needed to make decisions about improving the conditions in which schools operate. As described in a previous section of this chapter, the conceptual model of ECEA defines the basic conditions necessary for the school to guarantee proper conditions and environments for learning.

The first ECEA took place in November 2014 in 1 425 primary schools selected randomly in 31 federal entities, which allowed the results to be representative at the national level. ECEA has an implementation plan spread over 8 years, with 2 diagnostics per education level: primary schools were to be assessed in 2014 and again in 2019, upper secondary schools were scheduled in 2016 and 2020, pre‑schools in 2017 and 2021, and lower secondary in 2018 and 2022 (INEE, 2016[15]). If the evaluations are carried out as planned, the information gathered can bring support to the design and monitoring of Mexican education policies and should feed the SIRE system as well as the SIGED.

To share information with the general public, the INEE created the Integral System of Evaluation Results (Sistema Integral de Resultados de las Evaluaciones, SIRE) that collects, stores and organises information on evaluation results, on the physical, sociodemographic and economic context as well as other information of the SNEE in a single platform. Its objectives are to strengthen the capacities for evaluating the quality, performance and results of the National Education System (SEN) in compulsory education; to support the implementation of the National Policy on Educational Evaluation (PNEE) within the framework of the National System of Educational Evaluation (SNEE); and to disseminate data and information about the results of educational evaluation in a transparent way. If it is kept up to date and publicised, an information system like the SIRE holds great potential as a means to develop a culture of using evaluation information as a tool for improvement in education. In this sense, the INEE co‑ordinates a number of initiatives to maintain, develop and diffuse knowledge about the existence of the SIRE (INEE, 2018[16]), an instrument that, in turn, can be also linked to the SIGED.

The Information and Management System of Education, SIGED (Sistema de Información y Gestión Educativa), was created to provide the national education system in Mexico with a single information platform, enabling authorities to plan, operate, administer and evaluate the system while providing transparency and accountability. The SIGED is an articulated body of processes, guidelines, norms, tools, actions and technological systems that allow information about the national education system to be gathered, administered, processed and distributed. The information is generated by the system’s staff and authorities in order to support the processes of operation, administration and evaluation of the national education system (INEE, 2015[17]; SEP, 2015[18]). Because of its considerable potential, the SIGED must be seen as an extremely valuable tool for designing, implementing and monitoring education policy in Mexico.

The SIGED organises information around four main domains that can be observed in the education system data at the school, state and national levels: i) students: PLANEA results, although data on students are still scarce due to the restrictions imposed by personal data protection legislation; ii) teachers: registers about their place of work, entry date into the profession, training and professional trajectory; iii) school: data captured from specific instruments through the SEP (such as Formato 911) and the INIFED (National Institute for Physical Educational Infrastructure, Instituto Nacional de la Infraestructura Física Educativa) data; and iv) documentation from different areas of the education system (INEE, 2015[17]; SEP, 2015[18]).

Finally, in an effort to provide the teaching profession with transparency and accountability, Mexico established the Fund for Education and Payroll Operating Expenses (Fondo de Aportaciones para la Nómina Educativa y Gasto Operativo, FONE) (INEE, 2015[17]). Created in 2013, the FONE has been operating since 1 January 2015 as a tool for the Secretariat of Finances (SHCP) and the Secretariat of Education (SEP) to have centralised and transparent control (and reporting) on the educational payroll for all 32 states. What is more, the FONE seeks to align teacher remuneration with the objectives and guidelines of the Teacher Professional Service (Box 5.2 offers more information about the creation of the FONE).

Box 5.2. Fund for Education and Payroll Operating Expenses (FONE)

Within the framework of the National Agreement for the Modernisation of Basic Education (ANMEB), the management and administration of basic education services were transferred to the states (Official Gazette of the Federation, 19 May 1992). In 1998, a reform of the Fiscal Co‑ordination Law created the Contribution Fund for Basic Education (FAEB), through which federal resources were transferred to the states.

During the years of operation of the FAEB, a series of inconsistencies were detected in the administration of the fund, as well as questionable practices in matters of wage agreements such as the so-called “double negotiation”. The double negotiation consisted in the existence of a national negotiation between the Secretariat of Public Education and the National Union of Education Workers (SNTE), followed by another negotiation at the local level between the state authorities and the local union sections of the SNTE.

With the aim of ordering, making transparent and optimising the resources for the payment of the educational payroll in 2015 and replacing the FAEB, the Contribution Fund for Payroll and Operating Expense (FONE) was created (reform of the Fiscal Co‑ordination Law, 9 December 2013). The FONE concentrates the federal education payroll in 31 of the 32 federal entities of the country (except Mexico City) that amounts to almost MXN 360 billion for 2018, corresponding to just over 1 million workers and representing about 45% of federal resources earmarked for education.

Source: OECD elaboration based on communication with the SEP.

Strong capacity at national level and commendable efforts at school level

The capacity for evaluation and assessment at the federal level is impressive in Mexico. A large number (millions) of student assessments and teacher appraisals are processed every year in an effort that requires considerable logistical capacity but also high levels of technical expertise on the matter. According to previous OECD analysis, this can be attributed to the extensive technical knowledge accumulated in institutions such as the National Assessment Centre for Higher Education (CENEVAL), expert methodological guidance from the INEE, and strong policy and implementation capacity from the SEP (Santiago et al., 2012[19]). Areas such as educational measurement, psychometrics, test development, validation of test items or scaling methods are well developed in the country.

At school level, there are also efforts to improve the competencies of school leaders in evaluation and assessment practices, more concretely in relation to making sure that meaningful school self-evaluation processes take place and that pedagogical guidance and coaching to teachers is effectively provided. These concerns are at the centre of recent policy developments in education in Mexico. The creation of the SATE, the reinforcement of ATPs, the promotion of the school improvement route approach and the replacement of ENLACE by PLANEA (with all its formative tools to support teachers at classroom level) are just some of the elements that are meant to improve evaluation and assessment capacity at the school level for self-evaluation. Overall, these instruments have been well designed, but their effective implementation and positive impact will largely depend on the extent to which the INEE, the SEP and state authorities succeed in working together to align resources and priorities, so that these instruments reach individual schools and become part of the everyday culture of every school in Mexico.

Identifying inequalities in the system

There is evidence that student results in the education system are strongly influenced by socio-economic and cultural factors. Research undertaken by the INEE based on national student assessments in basic education indicates that there is a strong and positive relationship between students’ performance and their families’ socio-economic and cultural background. In particular, a considerable educational gap can exist between students in the same grade, which in some cases can represent up to four years of schooling; to a great extent, such gaps are the product of social inequalities reproduced in the school system. Thus, socio-economic and cultural backgrounds explain the greatest share of variation in education performance among students in the country (Santiago et al., 2012[19]).

In recent years, Mexico’s authorities have gone to great lengths to incorporate the social context dimension of assessment and evaluation of education performance in the system. In the INEE publication Panorama Educativo de México (Mexico’s Education at a Glance), an entire section is dedicated to the discussion of how the social context impacts and shapes education in Mexico. The indicators used are extracted and analysed from the INEGI (Instituto Nacional de Estadística y Geografía, National Institute for Statistics and Geography) instruments such as the National Census and the National Household Survey and include elements such as: size and type of the location (rural, semi-urban, urban and large urban); ethnicity (if the individual speaks an indigenous language); afro-descendant (if the individual reports having a cultural and historic tie with the African culture); levels of marginalisation (low and high levels according to the classification of the National Population Council, CONAPO); minimum welfare line (equal to the minimal monetary value of monthly food expenses for an individual); poverty level (either extreme poverty or moderate poverty as per the classification established by the CONEVAL); income quintile; employment type; and, finally, the presence of mental or physical incapacity. All these context indicators combined with education data are now used in Mexico to better identify inequalities in the education system. These efforts have just begun and their impact should be seen in the coming years if Mexican authorities make good use of them to properly inform and design policy instruments in the area.

Recommendations for future policy development and implementation

Mexico has made important progress in the consolidation of a comprehensive national system for education evaluation and assessment. This system is essential to support quality and equity in education as mandated by the Mexican constitution (Article 3 and the General Education Law). In this regard, at an instrumental level, PLANEA is a major step towards making the assessment and evaluation system more formative and the actions undertaken by the INEE and the SEP to develop evaluation and assessment capacities at subnational level are commendable. These include the national evaluation system (Sistema Nacional de Evaluación Educativa, SNEE) and the design of a national evaluation programme (Programa Nacional de Evaluación Educativa, PNEE). As part of this strategy, Mexico has started making a considerable effort to gather, analyse and disseminate evaluation and assessment information that is meant to guide policy design and support monitoring activity at the macro level while providing schools and teachers valuable input to improve their operation and pedagogical practices.

To build on the progress made, Mexico might consider giving priority, attention and resources to the following: i) ensure that evaluation and assessment results are used to improve policies and practices; ii) use system evaluation to identify vulnerable student groups and effectively inform policy instruments to support them; iii) invest more in evaluation and assessment capacity development at the state and school levels; iv) encourage the formative use of the results of standardised student assessment to improve classroom practice; and v) use the mechanisms for educational information and management to their full potential at the national, state and school levels.

Ensure that all evaluation and assessment information (like PLANEA results and all the information contained in the SIRE) is used to improve policies and school practices

The accountability function of the evaluation and assessment system is essential to secure quality and equity in education as mandated by law, and Mexico has made substantial progress thanks to the co‑ordination of the INEE, the SEP, state authorities and relevant stakeholders. Providing autonomy to the INEE and entrusting it with the co-ordination of the SNEE are important steps to consolidate an independent and solid evaluation and assessment system in Mexico. In only a few years, the INEE, the SEP and state authorities have undertaken significant steps in the design and implementation of assessment, appraisal and evaluation tools for students, teachers, schools and for the education system as a whole.

In this process, the INEE has also contributed to the collection and processing of an impressive amount of information that can be vital for the further development of the education system in Mexico. It is important to give more support to the effective use of this evaluation and assessment information for the purpose of guiding the work and decisions made by policy makers, schools, teachers, students, families, unions, researchers and other stakeholders. Mexico might consider the following:

Support schools and state authorities to use the information generated by evaluation and assessment practices. This can be done by promoting the use of evaluation and assessment information as indispensable evidence for improving quality and equity in education. More concretely, Mexico could make sure that evaluation and assessment results and information are systematically used by schools and state authorities through bodies such as the CTE, CTZ, CEPSE and the SATE. A first step could be to give further consideration to the communication of the aims of evaluation and assessment practices and all the information derived from them. In this regard, Mexico could reflect on the experience of Canada in making more explicit the link between evaluation and assessment practices and pedagogical materials for teachers, or the experience of New Zealand in communicating more clearly and effectively the role of assessment and evaluation practices as key elements to improve the whole education system (Box 5.3 presents the experience of Canada and New Zealand in more detail).

Box 5.3. Defining and communicating the purposes of assessment

In Canada, the Principles for Fair Student Assessment Practices for Education in Canada outline key elements for assessment practice that have served as foundations for teacher handbooks, board policies and departments of education policy documents on assessment and test development in all Canadian jurisdictions. The principles were developed in response to what was perceived as assessment practices not deemed appropriate for Canadian students. These principles and guidelines are intended to help both assessment practitioners and policy makers identify the issues to be taken into account in order that assessment exercises be deemed fair and equitable. The text acts both as a set of parameters and a handbook for assessment. The first part deals with developing and choosing methods for assessment, collecting assessment information, judging and scoring student performance, summarising and interpreting results, and reporting assessment findings. It is directed towards practising teachers and the application of assessment modes in the classroom setting. The second part is aimed at developers of external assessments such as jurisdictional ministry/department personnel, school boards/districts, and commercial test developers. It includes sections on developing and selecting methods for assessment, collecting and interpreting assessment information, informing students being assessed, and implementing mandated assessment programmes (for more information, see: https://www.wcdsb.ca/wp-content/uploads/sites/36/2017/03/fairstudent.pdf).

The New Zealand Ministry of Education Position Paper on Assessment (2010) provides a formal statement of its vision for assessment. It describes what the assessment landscape should look like if assessment is to be used effectively to promote system-wide improvement within and across all layers of the schooling system. The paper places assessment firmly at the heart of effective teaching and learning. The key principles highlighted and explained in the paper are: the student is at the centre; the curriculum underpins assessment; building assessment capability is crucial to achieving improvement; an assessment capable system is an accountable system; a range of evidence drawn from multiple sources potentially enables a more accurate response; effective assessment is reliant on quality interactions and relationships. To support effective assessment practice at the school level, the Ministry of Education is also currently conducting an exercise which maps existing student assessment tools. The purpose is to align some of the assessment tools to the National Standards and provide an Assessment Resource Map to help school professionals select the appropriate assessment tool to fit their purpose.

Source: OECD (2013[1]), Synergies for Better Learning: An International Perspective on Evaluation and Assessment, http://dx.doi.org/10.1787/9789264190658-en.

Continue encouraging independent research using evaluation and assessment data and information and make sure that it is extensively disseminated. Mexican authorities should encourage a larger number of studies to identify the explanatory factors of the evaluation and assessment results at the system, school and student levels and make sure that this research is disseminated and used at all levels of the education system. More concretely, authorities in Mexico should encourage more research on school self-evaluation methodologies and support for students, teachers and families. There are already efforts on this front, such as the Sectoral Fund of Research for Education Evaluation, from the National Council of Science and Technology (CONACYT), that should be expanded and receive more attention (CONACYT-INEE, 2018[20]). Some international experience can help Mexico in this task. For example, the education authority in Ontario has a section of its website that outlines all the reports and a portal encouraging the use of its data for independent research (EQAO, 2018[21]). Another example can be found in New Zealand, where the Ministry of Education tries to explain how rigorous evidence collected through assessment and evaluation mechanisms can help to make a difference in the construction of well-informed policy instruments (more information about the general lines of this strategy can be found in Box 5.4).

Box 5.4. Support for evidence-based policy making in New Zealand

In New Zealand, the Ministry of Education runs an Iterative Best Evidence Synthesis programme to compile “trustworthy evidence about what works and what makes a bigger difference in education”. A Strategy and System Performance Group within the ministry has core responsibility for system evaluation and assessment and runs this programme. Evidence collected in this programme showing the impact on student outcomes feeds into the development of education indicators that are used to evaluate the performance of the education system overall and the quality of education provided in individual schools. The policy significance of the Best Evidence Syntheses has been recognised by the International Academy of Education and the International Bureau of Education. Summaries of recent Best Evidence Syntheses are published on the United Nations Educational, Scientific and Cultural Organization (UNESCO) website, see: www.ibe.unesco.org/en/services/publications/educationalpractices.html.

Source: OECD (2013[1]), Synergies for Better Learning: An International Perspective on Evaluation and Assessment, http://dx.doi.org/10.1787/9789264190658-en.

Use system evaluation to identify vulnerable student groups and inform policy instruments to support them

System evaluation in Mexico has considerable potential to inform policies to tackle inequalities in education and monitor their progress. In this sense, it is important to reinforce the connection between evaluation evidence on the one hand and equity policy and mechanisms on the other. Within the overall evaluation and assessment framework, education system evaluation has arguably the strongest potential to pay attention to equity issues and inform current policies and programmes (e.g. PROSPERA) on how to address these and target support more effectively. In this domain, Mexico might consider the following:

Ensure and reinforce the monitoring of student performance across specific groups (e.g. by gender, socio-economic or immigrant/cultural background, special needs, remote/rural location, as already established in the INEE’s Panorama Educativo de México). To capture the performance of specific groups more efficiently, it could be reasonable to require that indicators of socio-economic level, ethnicity and gender always be collected as background information in standardised tests at the school level. In addition to the monitoring exercise, it is also important to always use these indicators to contextualise the results obtained in all assessment mechanisms (as is already happening with PLANEA results).

Take action to develop solid instruments and programmes to tackle the challenges of disadvantaged students. Mexico already has substantial experience in social policy implemented by other ministries (such as the Social Development Ministry, SEDESOL). The SEP, the INEE and state authorities might therefore continue incorporating some of the experience accumulated in these programmes (for more information about them please consult the chapter on equity in this report) and adjust them for education purposes. For example, programmes like Escuelas de Tiempo Completo (Full-time Schools), Convivencia Escolar (School Environment) and Inclusión y Equidad Educativa (Education Inclusiveness and Equity), among others, already include in their operation guidelines the undertaking of evaluation instruments to identify their impact on students; this work should be reinforced.

Invest more in evaluation and assessment capacity development at the state and school levels

The development of an effective evaluation and assessment framework involves considerable investment in developing competencies and skills for evaluation and assessment at all levels. As the evaluation and assessment framework develops and gains coherence, an area for policy priority is consolidating efforts to improve the capacity for evaluation and assessment. Because in Mexico the evaluation capability deficit is greater at the state level, it is important that capacity building responds to the diverse needs of state educational authorities, supervision structures, school management and teachers.

State education authorities have a key role to play in education system evaluation in Mexico. Given the dimensions of the Mexican education system, the possibilities for the central level to develop richer evaluation processes are limited. If evaluations are designed and implemented centrally by the national government, they are likely to be restricted to standardised student assessments and collections of data. In order to go beyond standardised instruments and promote the deeper study and analysis of school quality, it is important to count on entities that are closer to the school level. The management of education sub-systems by the state authorities offers the potential for closer monitoring of school practices than a fully centralised system would allow, while also providing opportunities to recognise regional realities and constraints.

The state authorities can also play a key role in supporting the creation of networks among municipalities, school zones and sectors, allowing professionals at the local level to meet with their peers. Such networks can be a platform to share experiences across schools, analyse results in national student assessments, discuss local approaches to school self-evaluation, teacher appraisal and student assessment and develop common projects, materials and approaches. They can also be a starting point to identify professional development needs at the local level and develop common strategies for capacity development. In some states, there is incipient activity by state evaluation institutes to organise regional meetings and workshops with a focus on building evaluation and assessment capacity. In order to create and consolidate capacity at the state and school levels, Mexico might consider the following options: