Sara Willermark

University West, School of Business, Economics and IT, Sweden

Teaching as a Knowledge Profession

3. Teachers' technology-related knowledge for 21st century teaching

Abstract

This chapter addresses the question of how an international study can explore the knowledge and skills teachers need to integrate technology effectively in their teaching. It begins by underlining the importance of including technology-related knowledge in an assessment of teachers’ pedagogical knowledge for teaching in the 21st century. It then outlines the type of knowledge and skills teachers need for effectively integrating technology in their teaching and how these can be measured across countries. Drawing on previous research, it discusses different measurement approaches. Although the focus is on teacher knowledge, the chapter includes suggestions for exploring teacher knowledge in the broader context of teachers' overall conditions, attitudes, and application of technology in teaching practice.

Background

The digitalisation of society and school does not merely support (or, in the worst case, inhibit) learning. It transforms learning and how teaching and learning are interpreted (Billett, 2006[1]; Bergöö, 2005[2]; Säljö, 2010[3]). For decades, there has been extensive investment both in technology and in professional development initiatives to promote digitalisation (Agyei and Voogt, 2012[4]; Howell, 2012[5]; Egeberg et al., 2012[6]; Olofsson et al., 2011[7]). Nevertheless, integrating technology in teaching has proven to be a complex process (Erstad and Hauge, 2011[8]; Mishra and Koehler, 2006[9]). Despite decades of investment, many studies show that the high expectations of how technology would change teaching practices were not fulfilled (Cuban, 2013[10]; Olofsson et al., 2011[7]). According to the large-scale Teaching and Learning International Survey (TALIS), many teachers feel unprepared to use technology in teaching, and there is inequality in access to, and use of, technology in teaching (OECD, 2020[11]).

In addition to issues of equality, the outcome of technology use is unclear. The introduction of technology in teaching has been reported to have positive effects on students’ engagement, motivation and achievement, as well as on teachers’ teaching methods (Apiola, Pakarinen and Tedre, 2011[12]; Bebell and Kay, 2010[13]; Cristia et al., 2017[14]; Keengwe, Schnellert and Mills, 2012[15]; Martino, 2010[16]; Azmat et al., 2020[17]; Azmat et al., 2021[18]). Yet many studies also report that technology use can have negative effects by causing additional distraction and thereby interfering with learning (Bate, MacNish and Males, 2012[19]; Islam and Grönlund, 2016[20]). Educational technology has been described as an ‘intellectual and social amplifier’ which can help make ‘good’ schools better but can also increase problems at low-achieving schools (Islam and Grönlund, 2016[20]; Warschauer, 2006[21]). Thus, there is clear evidence that technology use by itself does not improve teaching and learning outcomes. Instead, only its effective pedagogical use can bring about improvements (Burroughs et al., 2019[22]; Mishra and Koehler, 2006[9]; Islam and Grönlund, 2016[20]). An informed and conscious use of technology for educational purposes is therefore crucial.

The focus of research and practice should therefore be on what technology ought to be used for, and what types of teaching and learning activities technology can enhance. To make sure technology use improves education at a large scale, many scholars have highlighted the need for support and active leadership (Kafyulilo, Fisser and Voogt, 2016[23]; Dexter, 2008[24]; Islam and Grönlund, 2016[20]). An important part of support initiatives is identifying teachers' existing knowledge, technology use and learning needs across the teaching population. Although much has been learned from international surveys such as TALIS, there is more to learn about teachers’ technology-related knowledge to support effective teaching in the 21st century.

This chapter sets out to explore how to better understand teachers’ technology‑related knowledge and skills. It first provides ideas for conceptualising these skills and then compares different measurement approaches. Finally, it lists concrete recommendations for exploring teachers’ knowledge and effective use of technology in an international large-scale survey.

Conceptualising knowledge to integrate technology in teaching

Numerous attempts have been made to elaborate on what digital competence is needed for teaching in a digitalised school (Ferrari, 2012[25]; Hatlevik and Christophersen, 2013[26]; Kivunja, 2013[27]; Krumsvik, 2008[28]; Howell, 2012[5]). Scholars commonly stress that teachers' digital competence is embedded in complex organisational systems. It therefore denotes a more multifaceted set of competencies than the ‘digital competences’ needed in other areas of society (Instefjord and Munthe, 2016[29]; Krumsvik, 2008[28]; Pettersson, 2018[30]). Teachers need more than fundamental technological skills to be digitally competent, as digital competence in teaching is about applying technological skills in an educational context, as a pedagogical resource. For example, Kivunja (2013, p. 131[27]) described such digital competence as ‘the art of teaching, computer-driven digital technologies, which enrich learning, teaching, assessment, and the whole curriculum’.

Krumsvik (2008[28]) suggests that teachers' digital competence entails teachers' proficiency in using technology in a professional context, with good pedagogic-didactic judgement and awareness of its implications for learning strategies. From these perspectives, technologies are considered a way to support pedagogical knowledge and methods. However, most widely used technology is not designed to operate in educational contexts. Many popular software programmes are intended primarily for business rather than educational purposes. Similarly, web-based services are primarily designed for entertainment, communication and social networking (Koehler, Mishra and Cain, 2013[31]). This means that teachers need to develop methods, strategies and applications of technology that are suitable in a teaching and learning context (Kivunja, 2013[27]; Krumsvik, 2008[28]; Mishra and Koehler, 2006[9]). This can partly explain why many teachers experience difficulties in integrating technology into teaching. A framework that highlights this complexity and that has had great impact both in research and in practice is discussed below.

TPACK: A framework on technology integration and its relation to teachers’ general pedagogical knowledge

TPACK, denoting Technological Pedagogical and Content Knowledge, has emerged as a theoretical framework for specifying what knowledge is required for teaching in the 21st century. It has attracted much attention within the educational field (Willermark, 2018[32]). TPACK is a development of Shulman’s Pedagogical Content Knowledge (PCK) model (Shulman, 1986[33]). In the original work, Shulman stressed the importance of integrating teachers’ content knowledge with pedagogical knowledge. Shulman defined PCK as going beyond content or subject matter knowledge to include knowledge about how to teach particular content.

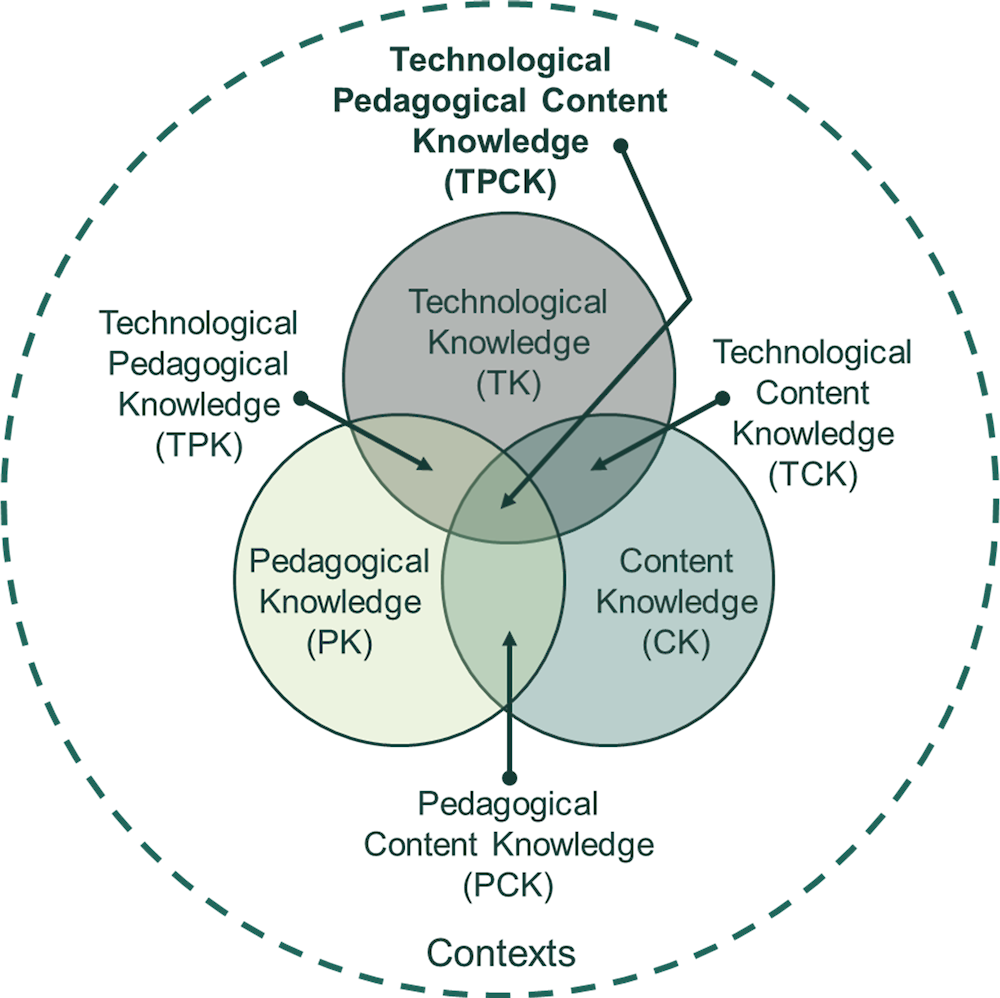

In Mishra and Koehler’s (2006[9]) development of this work, Technological Knowledge (TK) was added. They refer to TK as the knowledge of how to work with and apply technological resources. The framework stresses the complex intersection of technological, pedagogical and content knowledge within given contexts. It suggests that, in addition to considering these components in isolation, it is necessary to look at them in pairs, as “Pedagogical Content Knowledge” (PCK), “Technological Content Knowledge” (TCK) and “Technological Pedagogical Knowledge” (TPK), and finally, all three taken together, as “Technological Pedagogical and Content Knowledge” (TPACK) (see Figure 3.1).
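The seven components follow directly from combining the three base domains: every non-empty combination of technology (T), pedagogy (P) and content (C) names one component. The short Python sketch below, with a hypothetical function name, enumerates them using the chapter's abbreviations:

```python
from itertools import combinations

# The three base knowledge domains of the TPACK framework.
DOMAINS = ("T", "P", "C")

def tpack_components():
    """Return every non-empty combination of the base domains as a component label."""
    labels = []
    for size in range(1, len(DOMAINS) + 1):
        for combo in combinations(DOMAINS, size):
            # Single domains are the knowledge bases (TK, PK, CK); pairs are the
            # intersections (TPK, TCK, PCK); the full triple is TPACK.
            labels.append("TPACK" if size == 3 else "".join(combo) + "K")
    return labels

print(tpack_components())
# ['TK', 'PK', 'CK', 'TPK', 'TCK', 'PCK', 'TPACK']
```

Three single domains, three pairs and one triple give the seven components that questionnaire instruments typically score as separate subscales.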

From this point of view, teaching entails developing a nuanced understanding of the complex relationship between technology, pedagogy and content, and using this understanding to develop suitable context-specific strategies and representations of content. The great impact of TPACK may be explained by the fact that it is a theoretical framework focused specifically on how technology is integrated into teaching. TPACK represents a holistic view of the knowledge teachers need to apply technology effectively in teaching (Willermark, 2018[32]; Mishra and Koehler, 2006[9]).

The framework has been criticised for not being practically useful. In particular, the technology domain has been criticised as vague (Cox and Graham, 2009[34]; Graham, 2011[35]). This chapter argues, however, that TPACK is a fruitful framework for exploring technology integration in teaching practices because of its holistic approach to integrating technology into teaching within a specific context. It stresses the qualitative aspects of technology use and goes beyond a simplified view of technology as having intrinsic value. It is consistent with previous research that highlights the complexity of technology use in teaching (Burroughs et al., 2019[22]; Islam and Grönlund, 2016[20]; Willermark and Pareto, 2020[36]).

Figure 3.1. The TPACK framework

Reproduced by permission of the publisher, © 2012 by http://tpack.org.

Source: (TPACK ORG, 2012[37])

TPK: Teachers’ subject-independent knowledge and skills to effectively use technology

General pedagogical knowledge has been highlighted as an important ingredient of high‑quality teaching. A recent review of research on the relevance of general pedagogical knowledge found that deep and broad knowledge of general pedagogy enables successful teaching-learning events, including greater efficacy in teaching and the successful management of multicultural classrooms (Ulferts, 2019[38]). Studies to date, however, provide little insight into the subject-independent knowledge that teachers need for the effective use of technology in their work. These findings give reason to explore teachers' abilities to use technology to support their general pedagogical knowledge.

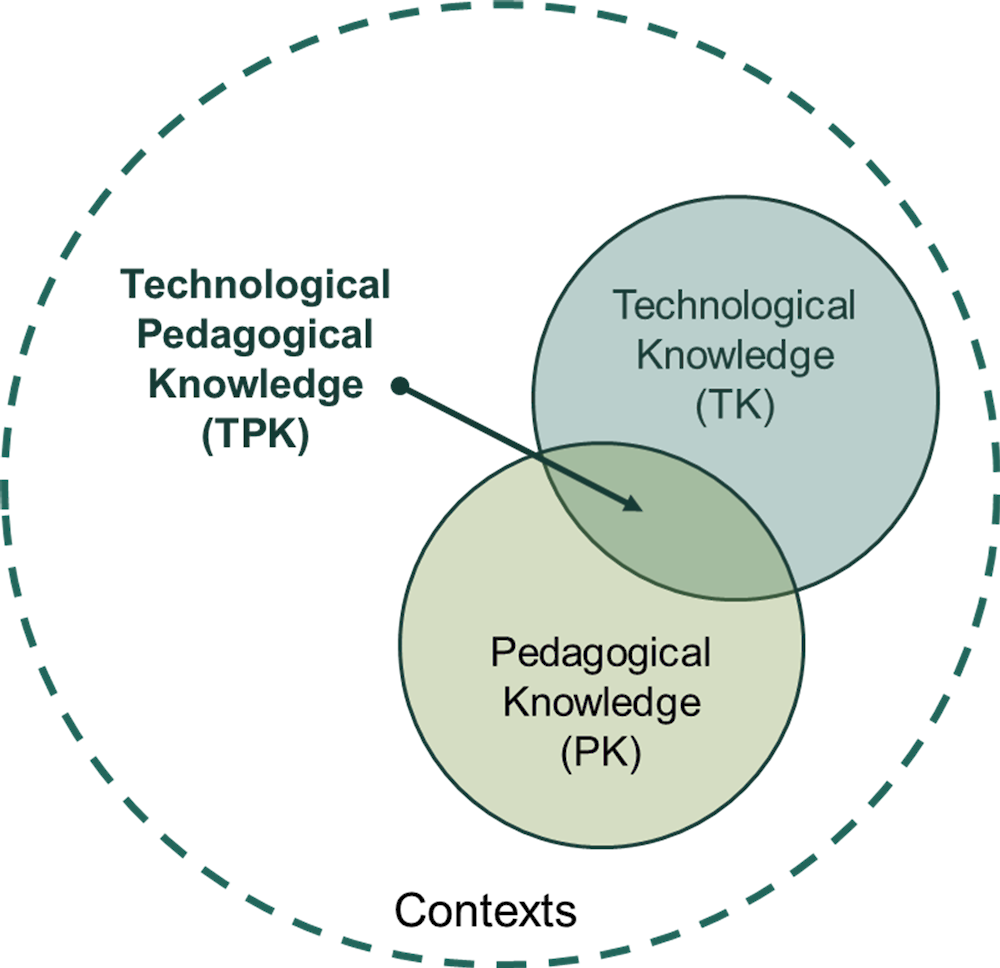

In the TPACK framework, “Content Knowledge” constitutes a basic component together with “Pedagogical Knowledge” and “Technological Knowledge”. The construct of “Technological Pedagogical Knowledge” (TPK), however, captures the subject-independent knowledge teachers need as a foundation for using technology effectively in their teaching. It stresses the relationship between general pedagogical knowledge, i.e. the specialised knowledge of teachers for creating effective teaching and learning environments for all students independent of subject matter (Guerriero, 2017[39]), and general technological knowledge, i.e. a basic understanding of technology use, the skills required to operate particular technologies and the ability to learn and adapt to new technologies. “Technological Pedagogical Knowledge” is knowledge of the existence and capabilities of various technologies used in teaching and learning settings, and of how teaching can be transformed when particular technologies are used. It builds on an understanding that a range of tools exists for a particular task.

The framework captures the skills needed to select a suitable tool and strategies for using the tool’s affordances, i.e. the possibilities and permissions that a technological artefact offers. It also includes knowledge of pedagogical strategies and the ability to apply those strategies along with technology (Mishra and Koehler, 2006[9]). Such a broad definition is necessary to capture teachers’ “Technological Pedagogical Knowledge” across disciplines and educational levels. Furthermore, as technology is constantly evolving, the meaning of “Technological Knowledge” needs to evolve with it, since a narrow definition risks quickly becoming outdated. Because change and development are built into the theoretical framework, it has the potential to stay relevant over time.

Figure 3.2. Teachers’ subject-independent knowledge to effectively use technology in teaching

Reproduced by permission of the publisher, © 2012 by http://tpack.org.

Source: (TPACK ORG, 2012[37])

The next section discusses ways to measure teachers’ knowledge based on the TPACK framework, with a focus on “Technological Pedagogical Knowledge” (see Figure 3.2). Even though the intersection of technology and pedagogy is in focus, it can also be of interest to explore each component separately in order to investigate the strengths and weaknesses of teachers’ knowledge base. Such an analysis can be important for identifying the type of support that teachers may need.

Measuring teachers’ technological knowledge and skills in an international survey

Two approaches are most commonly used to evaluate teachers’ knowledge and skills in using technology in teaching: self-reporting and performance analysis of teaching‑related activities (Willermark, 2018[32]). In addition, tests can be used to measure teachers’ knowledge (Maderick et al., 2016[40]; Drummond and Sweeney, 2017[41]). The approaches offer different opportunities and challenges, which are discussed below, followed by an overview of existing instruments.

Opportunities and challenges of different measurement approaches

Self-reporting via questionnaires and objective knowledge assessments

The most frequently used approach to measure teachers' technology-related knowledge and skills is self-reporting via questionnaires, but studies have also used interviews and diary entry questions, in which teachers document and reflect upon their performance (Archambault and Crippen, 2009[42]; Schmidt et al., 2009[43]; Chai et al., 2013[44]; Lux, Bangert and Whittier, 2011[45]). The approach has obvious advantages, such as enabling efficient, comprehensive and comparable studies based on large amounts of data (Bryman, 2015[46]). Furthermore, self-reporting questionnaires offer an opportunity to highlight teachers’ perspectives and to prompt teachers to reflect on their own technology-related knowledge and skills.

Self-reporting also has disadvantages. Studies show that evaluating one’s own abilities accurately is difficult. There is a risk of ‘socially desirable responding’, described as the tendency of people to answer in a way that is more socially acceptable (Nederhof, 1985[47]), and of the tendency to give overly positive self-descriptions (Paulhus, 2002[48]). People can be unaware of their lack of technology-related knowledge and/or under- or over-estimate their abilities. In an educational context, Lawless and Pellegrino (2007[49]) showed that gains in teachers’ self‑reported knowledge over time reflect increased confidence rather than an actual increase in knowledge in practice.

This phenomenon has been recognised in research on TPACK as well. For example, a study by Drummond and Sweeney (2017[41]) showed that the self-reported TPACK of pre-service teachers correlated only weakly with a knowledge test. In another study, Maderick, Zhang, Hartley and Marchand (2016[40]) came to similar conclusions. Various factors can make a realistic assessment of one’s own ability difficult, such as how important the knowledge is to the self-reporter and how well the questions are specified (Ackerman, Beier and Bowen, 2002[50]). Thus, the ecological validity of self-reports can be questioned, as it is hard to tell what is measured: the desired personal characteristic, or how far respondents stretch their image of themselves; respondents’ self-confidence, or their actual knowledge?
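One common way to quantify agreement of this kind is the Pearson correlation coefficient between teachers' self-report scores and their test scores. The Python sketch below assumes each teacher has one averaged self-rating and one test score; the function name and the sample numbers are invented for illustration and are not data from the cited studies.

```python
from math import sqrt
from statistics import mean

def pearson_r(xs, ys):
    """Pearson correlation between paired scores (e.g. self-report vs test)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long lists with at least two pairs")
    mx, my = mean(xs), mean(ys)
    # Sum of cross-products of deviations from the two means.
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    # Square roots of the sums of squared deviations for each variable.
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / (sx * sy)

# Invented example: mean Likert self-ratings and test scores for five teachers.
self_report = [4.2, 3.8, 4.5, 3.9, 4.7]
test_score = [9, 12, 10, 8, 11]
print(round(pearson_r(self_report, test_score), 2))
```

A coefficient close to zero would indicate that high self-ratings say little about test performance, which is the pattern the studies above describe.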

Still, self-reports tend to capture teachers’ self-efficacy, which is a crucial component of teachers’ technology-related knowledge and a predictor of actual teacher behaviour (Tschannen-Moran, 2001[51]). Teachers need positive beliefs, motivation and knowledge to integrate technology effectively in teaching. This makes self-efficacy a relevant, but insufficient, aspect to explore when measuring teachers’ knowledge and skills in using technology in teaching.

Socially desirable responding and self-awareness aside, there is an additional difficulty linked to using standardised instruments such as questionnaires to measure teachers’ technology-related knowledge. Questionnaires usually reflect a simplified view of knowledge as something stable that the individual possesses, regardless of situation or context (Willermark, 2018[32]). Yet knowledge cannot be considered exclusively as a static embedded capability or a stable disposition of actors. Instead, it is a situated, ongoing accomplishment that is constituted and reconstituted as one engages in practice (Orlikowski, 2002[52]). What it means to be technologically knowledgeable is therefore complex.

Studies show that although teachers may have technological knowledge, this does not automatically mean they are capable of using it in teaching practice (So and Kim, 2009[53]; Tatto, 2013[54]). To address this issue of transfer, it becomes important to use contextualised questionnaires with statements or questions that refer to concrete teaching tasks and situations. That is, rather than only asking whether or how respondents feel technologically knowledgeable in general, questions should ask in what situations and in relation to what activities. Contextualised self‑reports have been shown to yield, on average, more moderate results than self-reports of a general nature (Ackerman, Beier and Bowen, 2002[50]). Designing a questionnaire with specific, context-bound questions rather than general ones is a way to get closer to measuring teachers’ knowing in practice (Willermark, 2018[32]).

In addition to self-reporting, assessments that test teachers’ technology-related knowledge can be used. This approach can collect more objective data on teachers’ TPACK than self-reporting, but it still involves several challenges. First, there is a lack of instruments that have been widely applied and validated in different contexts. Second, existing instruments provide a rather narrow picture of technology use in teaching and of teachers’ technology-related knowledge (see the section Overview of existing instruments and synthesis for examples). As with self‑report questionnaires, assessments do not capture how teachers' knowledge and skills are manifested in practice. For example, even if teachers know the strict definition of an artefact, this does not mean that they are capable of or motivated to use it in practice, or vice versa. It is especially difficult to capture the complex knowledge constituted by the intersection of knowledge domains and how it is manifested in a given context. Furthermore, questions on teachers’ technological knowledge risk quickly becoming dated because of rapid technological development, which limits how long the instrument remains valid.

Performance-analysis of teaching activities

Performance analysis of teaching-related activities is often carried out through tasks in which teachers are asked to perform teaching actions, such as planning or implementing teaching in a fictional or authentic setting, and where the performance is documented and analysed (Curaoglu et al., 2010[55]; Graham, Cox and Velasquez, 2009[56]; Graham, Borup and Smith, 2012[57]; Harris, Grandgenett and Hofer, 2010[58]; Kereluik, Casperson and Akcaoglu, 2010[59]; Suharwoto, 2006[60]; Pareto and Willermark, 2018[61]). Evaluating teachers’ technological knowledge via performance analysis of teaching activities has the benefit of capturing how teachers' technology-related knowledge and skills are manifested in practice, that is, how knowledge about technology and pedagogy is applied in a teaching situation. Depending on the evaluation design, it can capture how teaching is orchestrated in interplay with students, technology and other elements that influence the teaching dynamics. The approach is advantageous because teaching involves not only adapting to a range of predictable parameters, such as student group composition, the classroom environment and the school's digital infrastructure, but also situational aspects, such as timing (continually making instantaneous decisions about what, when and how to provide students with feedback); classroom management, such as balancing the needs of the individual with those of the rest of the student group; and coping with unforeseen events and technological problems (Willermark, 2018[32]).

However, inferring a teacher’s technology-related knowledge solely from direct observation also has disadvantages. Observation can identify neither the decision-making processes that led to the observed actions and interactions nor the rationale that underpins them. To compensate for these shortcomings, observations can be supplemented with an analysis of teaching materials, such as instructional plans and student materials, which may capture the intention behind the teaching design. Qualitatively oriented researchers have developed in‑depth coding schemes, models and rubrics to classify material representing authentic teaching, such as lesson plans, videotaped classroom instruction or teachers' retrospective reflections, according to the teacher's level of technology-related knowledge (Harris, Grandgenett and Hofer, 2010[58]; Pareto and Willermark, 2018[61]; Schmid, Brianza and Petko, 2020[62]).

Measuring teachers' technological knowledge through performance is less common, particularly in more comprehensive studies (Willermark, 2018[32]). Although the approach can provide valuable insights into teaching quality, performance analysis often covers only one or a few activities, which are not necessarily representative of the teacher's general competence. Ideally, teachers' performance should be studied over time. This, however, is resource intensive and can be difficult to realise.

Nevertheless, there are examples of large-scale international studies that use performance analysis. The OECD Global Teaching InSights (GTI) study involved video-recording mathematics lessons taught by a representative sample of 85 lower secondary teachers in each participating country. In addition to the video recordings, the study included teacher and student surveys, as well as teaching and learning materials such as lesson plans, homework and assessments (OECD, 2020[63]). Follow-up studies, or smaller cross-country surveys, that evaluate teachers' technological knowledge via performance on teaching activities could therefore yield promising results.

Overview of existing instruments and synthesis

Self-reporting instruments and objective assessments

A literature review shows that questionnaires are the most frequently used approach to measuring teachers’ TPACK (Willermark, 2018[32]). Often, participants are asked to rate statements on a five- or seven-point Likert scale. Teachers’ knowledge in the domains of technology, pedagogy and content is generally measured both within each domain and at their intersections. Many instruments have been developed and applied in different ways to operationalise teachers’ knowledge. The instruments reviewed here cover several or all of the seven TPACK components, and their scales show high reliability overall (see Table 3.1 for details).
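The reliability values reported in Table 3.1 are Cronbach's alpha coefficients. For a subscale of k items, alpha compares the sum of the item variances with the variance of respondents' total scores: α = k/(k − 1) × (1 − Σσ²ᵢ / σ²_total). A minimal Python sketch of this calculation, assuming complete responses (no missing items) and using sample variances throughout, is shown below; the function name and the ratings are invented for illustration.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for one subscale.

    responses: one list per respondent, each holding that respondent's
    ratings for the same k items (complete data assumed).
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    if any(len(r) != k for r in responses):
        raise ValueError("every respondent must rate the same k items")
    items = list(zip(*responses))  # transpose: one tuple of ratings per item
    sum_item_var = sum(variance(item) for item in items)
    total_var = variance([sum(r) for r in responses])
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return k / (k - 1) * (1 - sum_item_var / total_var)

# Invented ratings from four respondents on a three-item, five-point subscale.
ratings = [[1, 2, 2], [2, 2, 3], [3, 4, 3], [4, 4, 5]]
print(round(cronbach_alpha(ratings), 2))  # 0.94
```

Alpha rises when items vary together across respondents, which is why a value such as 0.92 for a subscale is read as high internal consistency.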

Table 3.1. Overview of selected TPACK questionnaires

| Author | Sample | Region | Number of items | Cronbach’s alpha |
|---|---|---|---|---|
| Schmidt et al. (2009[43]) | Pre-service teachers | USA | Overall: 47; Subscales: TK: 7, CK: 12, PK: 7, TPK: 5, PCK: 4, TCK: 4, TPACK: 8 | TK = 0.82, CK = 0.81, PK = 0.84, PCK = 0.85, TCK = 0.80, TPK = 0.86, TPACK = 0.92 |
| Chai et al. (2011[64]) | Pre-service teachers | Singapore | Overall: 31; Subscales: TK: 6, CK: 4, TPK: 3, TPACK: 5, TCK: 1, PKML: 13 | TK = 0.90, CK = 0.91, TPK = 0.86, TPACK = 0.95, TCK = not reported, PKML = 0.91 |
| Archambault and Crippen (2009[42]) | In-service teachers | USA | Overall: 24; Subscales: TK: 3, CK: 3, PK: 3, TPK: 4, PCK: 4, TCK: 3, TPACK: 4 | TK = 0.88, CK = 0.76, PK = 0.77, PCK = 0.79, TCK = 0.69, TPK = 0.77, TPACK = 0.78 |
| Lux et al. (2011[45]) | Pre-service teachers | Western region | Overall: 27; Subscales: TPACK: 8, TPK: 5, PK: 4, CK: 3, TK: 4, PCK: 3 | TPACK = 0.90, TPK = 0.84, PK = 0.77, CK = 0.77, TK = 0.75, PCK = 0.65 |
| Jang and Tsai (2013[65]) | In-service teachers | Taiwan | Overall: 30; Subscales: CK: 5, PCKCx: 9, TK: 4, TPCKCx: 12 | CK = 0.86, PCK = 0.91, TK = 0.89, TPCKCx = 0.97 |

Note: The instruments by Schmidt et al. (2009[43]) and Archambault and Crippen (2009[42]) are of particular interest for designing an international large-scale survey.

A frequently used questionnaire was developed by Schmidt et al. (2009[43]) to assess pre-service teachers' knowledge. It includes 47 items, which participants rate on a five-point Likert scale. Examples include statements such as “I can choose technologies that enhance the teaching lesson”, “I am thinking critically about how to use technology in my classroom” or “I can select technologies to use in my classroom that enhance what I teach, how I teach and what students learn” (reflecting Technological Pedagogical Knowledge). Other examples include “I know how to solve my own technical problems” or “I can learn technology easily” (reflecting Technological Knowledge) and “I can adapt my teaching style to different learners” or “I can assess student learning in multiple ways” (reflecting Pedagogical Knowledge).
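Responses to an instrument of this kind are commonly summarised as one score per subscale, for example the mean rating across the items that belong to TPK, TK or PK. The Python sketch below illustrates this under explicit assumptions: the item IDs and the item-to-subscale key are hypothetical labels for the example statements quoted above, not the numbering or scoring key of the published instrument, and unanswered items are simply skipped.

```python
from statistics import mean

# Hypothetical item-to-subscale key; the IDs are illustrative labels for the
# example statements, not the item numbering of the published questionnaire.
SUBSCALE_KEY = {
    "TPK": ["tpk_choose", "tpk_critical", "tpk_select"],
    "TK": ["tk_solve", "tk_learn"],
    "PK": ["pk_adapt", "pk_assess"],
}

def subscale_scores(answers, key=SUBSCALE_KEY, scale=(1, 5)):
    """Average one respondent's Likert ratings within each subscale.

    answers maps item IDs to ratings on the given scale. Unanswered items
    are skipped; a subscale with no answered items is scored as None.
    """
    low, high = scale
    scores = {}
    for subscale, items in key.items():
        ratings = [answers[i] for i in items if i in answers]
        for r in ratings:
            if not low <= r <= high:
                raise ValueError(f"rating {r} is outside the {low}-{high} scale")
        scores[subscale] = mean(ratings) if ratings else None
    return scores

answers = {"tpk_choose": 4, "tpk_critical": 5, "tpk_select": 3,
           "tk_solve": 2, "tk_learn": 4, "pk_adapt": 5}
print(subscale_scores(answers))
```

Skipping missing items is only one possible rule; a survey might instead require a minimum number of answered items before reporting a subscale score.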

Many scholars have used the instrument in its original form or have modified it slightly to fit particular needs, for example by translating it. The questionnaire has been used in diverse contexts, including pre-service and in-service teachers, different grades, different disciplines and different countries [including, for example, France (Azmat et al., 2020[17]; Azmat et al., 2021[18]), Indonesia (Ansyari, 2015[66]), Kuwait (Alayyar, Fisser and Voogt, 2012[67]), Taiwan (Chen and Jang, 2014[68]), Turkey (Calik et al., 2014[69]) and the USA (Banas and York, 2014[70]; Doering et al., 2014[71])].

Building on the work of Schmidt et al. (2009[43]), Chai et al. (2011[64]) developed another frequently used questionnaire. They used a seven-point Likert scale and proposed a 31‑item questionnaire, building on items from Schmidt et al. (2009[43]), to evaluate Singaporean pre-service teachers’ TPACK. The items were revised; for example, they include items addressing web-based competencies, such as “I am able to teach my student to use web 2.0 tools (e.g. Blog, Wiki, Facebook)” or “I am able to use conferencing software (Yahoo, IM, MSN Messenger, ICQ, Skype, etc.)” (reflecting Technological Knowledge). The instrument has also been applied, directly or with some modification, to in-service and pre-service teachers in different countries. Examples include in-service Chinese language teachers (Chai et al., 2013[44]), pre-service Singaporean teachers (Chai et al., 2011[64]) and pre‑service Swiss upper secondary school teachers (Schmid, Brianza and Petko, 2020[62]).

Furthermore, Archambault and Crippen (2009[42]) designed a questionnaire to measure the TPACK of American online K-12 teachers. A total of 24 items measured each of the seven TPACK dimensions on a five-point Likert scale. Respondents were asked the overall question: “How would you rate your own knowledge in doing the following tasks associated with teaching in a distance education setting?” Items included: “My ability to moderate online interactivity among students” (reflecting Technological Pedagogical Knowledge), “My ability to determine a particular strategy best suited to teach a specific concept” (reflecting Pedagogical Knowledge) or “My ability to troubleshoot technical problems associated with hardware (e.g. network connections)” (reflecting Technological Knowledge). Other researchers have applied the instrument as is or with some modifications, including with pre-service (Han and Shin, 2013[72]) and in-service (Joo, Lim and Kim, 2016[73]) teachers in South Korea.

There are additional examples of TPACK questionnaire instruments. For example, Lux, Bangert and Whittier (2011[45]) developed a 45-item questionnaire, referred to as “PT-TPACK”, to address the need for an instrument for assessing pre-service teachers' TPACK. The questionnaire explores the extent to which western pre-service teachers perceive themselves to be prepared for teaching in terms of the different TPACK constructs. Participants responded to each item by indicating to what extent they agreed with the statement: “My teacher preparation education prepared me with X”. For example, it includes statements such as “An understanding that in certain situations technology can be used to improve student learning” or “An understanding of how to adapt technologies to better support teaching and learning” (reflecting Technological Pedagogical Knowledge). This is in line with the TALIS teacher survey, which asks similar questions about whether certain elements were included in teachers’ formal education or training, and to what extent teachers feel prepared for these in their teaching. In TALIS, one such item is “Use of ICT (information and communication technology) for teaching”. Items from the questionnaire of Lux, Bangert and Whittier (2011[45]) could provide valuable complementary information, for example “An understanding of how technology can be integrated into teaching and learning in order to help students achieve specific pedagogical goals and objectives” or “Knowledge of hardware, software, and technologies that I might use for teaching”.

Furthermore, there are instruments that explore specific technologies or phenomena. Jang and Tsai (2013[65]) developed a 30-item questionnaire to explore interactive whiteboards in relation to TPACK (“IWB-based TPACK”), which was applied in the context of Taiwanese elementary mathematics and science teachers. Lee and Tsai (2010[74]) developed a 30-item questionnaire, referred to as “Web Pedagogical Content Knowledge”, which explores teachers’ attitudes towards web-based instruction from elementary to high school level in Taiwan. In addition, many studies explore technology integration in relation to specific subject domains, for example mathematics (Agyei and Voogt, 2012[4]; Corum et al., 2020[75]), chemistry (Calik et al., 2014[69]), geography (Hong and Stonier, 2015[76]), language (Baser, Kopcha and Ozden, 2016[77]; Hsu, Liang and Su, 2015[78]) and social sciences (Akman and Güven, 2015[79]).

There are also instruments that use open-ended questionnaires. Typically, these contain items that ask teachers to write about their overall experience in an educational technology course or professional development programme designed to promote pre- or in-service teachers’ Technological Pedagogical Content Knowledge (Koehler et al., 2014[80]). For instance, So and Kim (2009[53]) asked: “What do you see as the main strength and weakness of integrating ICT tools into your PBL (problem-based learning) lesson?” The authors then coded teachers’ responses, focusing on their representations of content knowledge in relation to pedagogical and technological aspects of the course. Open-response items could be used to gain more in‑depth knowledge of teachers’ motives, reasons and actions in different situations. For example, they could include questions about what teachers perceive as the main opportunities and challenges of integrating technology into their teaching, or how the use of technology affects their work. In a large-scale survey, open-response items should be used with caution, since coding free-text answers from many respondents is resource intensive. However, short constructed-response items could be valuable in a few carefully selected cases.

Only a few assessments of teachers’ technology-related knowledge exist. For example, Drummond and Sweeney (2017[41]) developed a test to measure teachers' TPACK. The test included 16 items in which teachers were asked whether a series of statements about technology use for teaching were true or false, for example: “Research suggests that technology generally motivates students to participate in the teaching and learning process” or “To get the sound to play across multiple slides in Microsoft PowerPoint, you should use commands in the Transitions Menu”.

In another study, Maderick et al. (2016[40]) designed a test to explore pre-service teachers' digital competence. The test included 48 multiple-choice questions distributed across seven topics: General Computer Knowledge, Word Processing, Spreadsheets, Databases, E-Mail/Internet, Web 2.0 and Presentation Software. Two examples reflecting General Computer Knowledge are: “The process of encoding data to prevent unauthorised access is known as: a) locking out, b) encryption, c) compilation, d) password protection or e) I do not know” and “Details of business transactions, which are unprocessed, would be classified as: a) information, b) bytes, c) data, d) files, or e) I do not know”.

The overview of existing self-reporting instruments shows that the TPACK framework has been widely applied to measure teachers’ knowledge and skills of using technology in teaching via questionnaires in various contexts across countries, disciplines and educational levels. Instruments that may be particularly interesting for an international large-scale questionnaire are those of Schmidt et al. (2009[43]) and Archambault and Crippen (2009[42])1. The instrument by Schmidt et al. (2009[43]) has been used especially widely across contexts and regions, which indicates cross-country feasibility. Both instruments offer several items measuring teachers' Technological Pedagogical Knowledge that can easily be integrated into the questionnaire of an international survey on teacher knowledge.

Although assessments that test teachers’ technology-related knowledge exist, they often provide a rather narrow picture of technology use in teaching and of teachers’ technology-related knowledge. The development of an objective assessment of this knowledge therefore requires further effort. As highlighted in Figure 2.2 in Chapter 2, this knowledge is transversal: teachers can use technology to support their instruction and their assessment practices, but also to foster individual and group learning. Consequently, an assessment should include technology-related items for all three content areas (“knowledge dimensions”): instruction, assessment and learning.

Performance-based instruments

There are examples of approaches and instruments that measure teachers’ performance on teaching-related activities (see Table 3.2 for an overview). For example, teachers’ lesson planning has been explored. Koh, Chai and Tay (2014[81]) examined in-service teachers’ conversations during group-based lesson planning sessions. They categorised teachers’ comments about ‘‘content’’, ‘‘technology’’, ‘‘pedagogy’’ and their intersections. The unit of analysis was the design talk that produced the lesson plan, and teachers’ knowledge was measured by analysing their discussions.

Table 3.2. Overview of selected TPACK performance-based instruments

| Author | Sample | Region | Activity | Description of measurement approach |
|---|---|---|---|---|
| Koh et al. (2014[81]) | In-service teachers | Singapore | Planning | TPACK is measured via a coding protocol as the frequency with which comments refer to the seven components: subject matter (CK); technologies and their features (TK); processes or methods of teaching (PK); subject matter representation with technology (TCK); using technology to implement different teaching methods (TPK); teaching methods for different types of subject matter (PCK); and using technology to implement teaching methods for different types of subject matter (TPACK). |
| Graham et al. (2012[57]) | Pre-service teachers | USA | Planning | Student rationales were qualitatively analysed for evidence of the TPACK components TK, TPK and TPACK. |
| Harris et al. (2010[58]) | Pre-service teachers | USA | Planning | TPACK is measured via a “Technology Integration Assessment Rubric”. The rubric measures four aspects: 1) Curriculum Goals and Technologies, 2) Instructional Strategies and Technologies, 3) Technology Selection(s) and 4) Fit. |
| Kafyulilo et al. (2016[23]) | Pre-service teachers | Tanzania | Teaching | TPACK is measured via an observation checklist inspired by the Technology Integration Assessment Rubric, using a dichotomous “No”/“Yes” response scale. |
| Maeng et al. (2013[82]) | Pre-service teachers | USA | Planning, teaching, evaluation | Participants’ use of technology for science inquiry is measured by content area and investigation type, via observations and experimental investigations. |
| Pareto and Willermark (2018[61]) | In-service teachers | Sweden, Norway, Denmark | Planning, teaching, evaluation | TPACK is measured using didactic designs as the unit of analysis. Guiding questions are used to evaluate the design qualities of a didactic design for each TPACK component. TK: Which technology usages are present? CK: Which curricular content goals are addressed? PK: Which pedagogical strategies are used? TCK: How are the technology usages aligned with curricular content goals? PCK: How do the pedagogical strategies support curricular content goals? TPK: How do the technology usages support the pedagogical strategies? TPACK: How do all three components fit together? |

Furthermore, Graham, Borup and Smith (2012[57]) explored the instructional decisions that pre-service teachers make before and after completing an educational technology course. In pre- and post-assessments, pre-service teachers were given a design challenge and asked to articulate how they would use technology to address specific curriculum criteria. Their responses were analysed by external evaluators (researchers). The evaluation was based on aspects such as how detailed the arguments were and whether there were multiple overlapping reasons for using a particular technology.

Moreover, Harris et al. (2010[58]) designed and tested an instrument (the “Technology Integration Assessment Rubric”) for evaluating pre-service teachers’ lesson plan documents. The rubric covers four criteria, each graded on a four-point scale. For example, ‘‘technology use optimally supports instructional strategies’’ represents the highest score, while ‘‘technology use does not support instructional strategies’’ represents the lowest. The instrument has been adapted to a different context by Kafyulilo, Fisser, Pieters and Voogt (2016[23]), who studied pre-service teachers’ process of integrating technology in microteaching sessions and used an observation checklist inspired by Harris, Grandgenett and Hofer (2010[58]). In another study, Maeng et al. (2013[82]) followed pre-service teachers who planned, implemented and evaluated teaching in an authentic setting during student teaching placements. Their teaching activities were analysed using multiple data sources: observations, lesson plans, interviews and reflections. The analysis focused on how and to what extent participants employed TPACK, by identifying instances of technology use associated with the facilitation of inquiry instruction.

Stoilescu (2015[83]) explored in-service teachers’ TPACK using interviews, classroom observations and document analysis. Based on these data, a TPACK profile was created for each teacher, and the relative extent of each TPACK knowledge domain was estimated on a scale of unconvincing expertise, small, medium, large and extra-large. However, the operationalisation of TPACK was not reported, as the author stated that the cases had been explored intuitively in relation to TPACK. A similar approach, studying in-service teachers’ TPACK via multiple data sources in authentic settings, was taken by Pareto and Willermark (2018[61]), who developed a TPACK operational model for designing and evaluating teachers’ didactic designs in practice.

The TPACK framework has been used to measure teachers’ application of TPACK in teaching situations. Whether orchestrated as a study assignment for pre-service teachers or as a way of examining in-service teachers' practice in an authentic setting, such measurement reflects a manifestation of contextualised teacher knowledge in practice. All the instruments discussed can offer inspiration for such an approach. However, two that may be particularly useful are Graham et al. (2012[57]) and Pareto and Willermark (2018[61]), because they a) provide explicit operationalisation instruments, b) capture the quality of technology integration, c) are not subject-specific and d) have been applied in different teaching contexts.

Recommendations for an international large-scale survey

The implications of this chapter for an international large-scale survey are set out below (see Table 8.1 in Chapter 8 for the main takeaways from this expert chapter for TALIS and the TKS assessment module).

Conceptual underpinning through the TPACK framework

The TPACK framework has been applied to conceptualise and measure teachers’ technology-related knowledge and skills in various surveys. It avoids a common oversimplification in which technologies are perceived as mere add-ons, and instead highlights the complex interactions between pedagogy and technology. The framework can be used to explore teachers’ subject-independent knowledge and skills for using technology effectively in teaching, and could serve as a starting point for specifying the technology-related components that should be measured in an international study on teacher knowledge.

Measuring teacher knowledge through contextualised items drawing on existing instruments

As discussed, an objective assessment of teachers’ technology-related knowledge has certain advantages but requires some development effort, as it cannot build directly on existing instruments. Nevertheless, existing self-reporting and performance-based instruments should be reviewed, as they point to important topics for assessment items. By contrast, many TPACK questionnaires exist. Questionnaires of this kind are widely used in large-scale surveys such as TALIS, as they enable the collection of data that provide nuance and insight into teachers' perceptions of their own competence. They are also inexpensive, easy to scale up and straightforward to analyse.

For instrument development, it is recommended to focus on contextualised items, that is, questions and items grounded in practice that explore teachers’ actions in relation to their teaching. They should be specific and context-bound rather than general in nature. Building on the experience from TPACK studies, the instrument should ask teachers to rate statements relating to their technology-related knowledge on Likert scales, which can easily be included in any existing questionnaire that already uses Likert scales. Although a five-point scale is the most common in TPACK questionnaires, it is suggested to harmonise the scaling with the format used in the existing questionnaire (e.g. a four-point scale for TALIS).

To explore teachers' self-efficacy and self-rated knowledge of using technology as an educational tool, items from TPACK instruments can be used2. However, existing instruments need to be adjusted; for example, several items need to be excluded or modified because:

Existing instruments (both questionnaires and performance instruments) contain items and constructs that are outside the scope of a study on teachers’ general pedagogical knowledge, i.e. teachers’ content knowledge and its intersections with pedagogical and technological knowledge.

Some items are bound to a particular situation, such as online teaching, or are subject-specific.

Many of the existing statements are too general. Thus, they need to be supplemented with more situated statements on how teachers manifest their knowledge in practice.

Additionally, new items should be included, because existing questionnaires often omit topics such as copyright and personal data law, online ethics, cyberbullying and online safety. It could be of interest to explore teachers’ perspectives, experience and knowledge related to these aspects. Items could be added here, for example: “I have good knowledge of the rules regarding copyright and what applies to publishing content online”. Such issues could be linked to the component of “Technological Knowledge”.

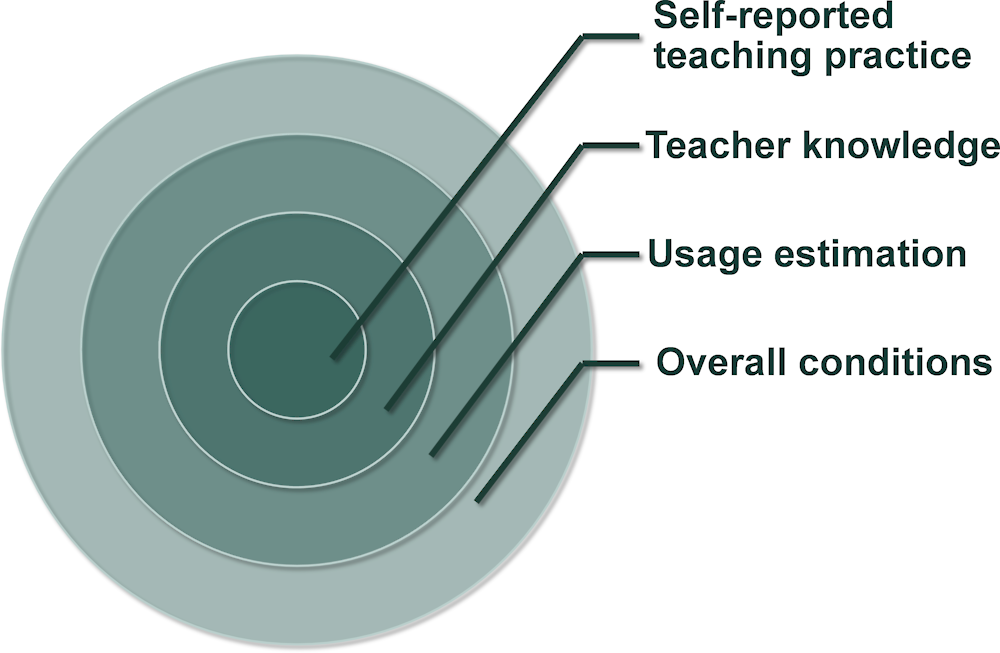

Additional questionnaires providing context information relating to teacher knowledge

In addition to teachers’ assessed and self-rated knowledge of using technology in teaching, three areas are proposed for the questionnaire to provide context information on teacher knowledge (see Figure 3.3): 1) Self-reported teaching practice, 2) Usage estimation (i.e. the frequency and purpose of teachers’ technology use) and 3) Overall conditions for technology use in schools. The areas can be seen as layers: the core consists of self-reported teaching practice, which builds on teachers’ specialised knowledge of using technology in teaching, while the other layers are needed to interpret, understand and analyse the interplay between knowledge and practice. The motivation for including each layer, as well as suggestions for the type of items to address, are given below. Unless otherwise stated, the suggested items were developed by the author.

Figure 3.3. Aspects of teachers’ technology-related knowledge and skills

Self-reported teaching practice

It is crucial to examine how teachers’ technological knowledge and skills support their general pedagogical knowledge in practice. One way to address the issue is to link it to the core knowledge areas of teaching, which have been identified in a previous OECD report (König, 2015[84]) (see also Chapter 2), including:

1. Instruction (including teaching methods, didactics, structuring a lesson and classroom management). Items linked to this aspect could address whether teachers:

apply technology to explain and/or present learning content in a more comprehensible way, for example by making representations visual, multimodal or interactive

use technology to apply different teaching methods, such as flipped classrooms, exploration of real-world scenarios, student-active methods or problem-based learning

work with students’ work on a digital platform

use technology to support absent students' knowledge acquisition by sharing teaching materials and/or interacting with students.

2. Learning (including students’ individual cognitive, motivational and emotional dispositions; their learning processes and development; and their learning as a group, taking into account student heterogeneity and adaptive teaching strategies). Items linked to this aspect could address whether teachers can use technology:

to adapt teaching more closely to students’ individual needs

in a way that supports students' ability to achieve qualitative goals such as increased motivation and creativity

to increase interaction with students and support their learning process.

3. Assessment (including subject-independent diagnostic principles and evaluation procedures). Items linked to this aspect could address whether teachers:

administer digital exams

use technology to increase feedback to students and to vary the way they check students' knowledge

apply technology to get information about a student's level of knowledge and progress.

The statements must be sufficiently concrete for teachers to make a reasonable estimate of their teaching practice. It is also important to focus on the teaching-related activity rather than on specific tools, as it is impossible to capture all conceivable technological resources. Furthermore, what is of interest is the ability to use technology as a pedagogical tool, rather than the knowledge of how to operate the tool itself.

Usage estimation

Since previous research shows that the degree of technology use differs greatly between countries, schools and classrooms, it would be valuable to also map simple estimations of usage, that is, how often teachers use technology and for what purposes, including: a) planning teaching and learning activities, b) teaching (as a resource in teaching and learning activities), c) evaluation (student documentation and assessment) and d) collaboration and communication with legal guardians and colleagues (e.g. sharing documents and lesson materials, communicating via forums, learning platforms, intranets, etc.). Based on these categories, a few items could be formulated, such as “I use technology to plan teaching and learning activities”. Frequency response scales (e.g. ranging from “daily” to “never”) could be useful here. This would suit many teacher surveys, including TALIS, which uses a frequency scale to indicate how often a particular instructional practice occurs during lessons in a randomly selected target or reference class.

Overall conditions for technology use in schools

Teachers' overall conditions for technology use in schools cannot be overlooked when trying to understand teachers’ technological knowledge and skills in practice. Given that access to technology differs between and within countries, it is relevant to identify teachers' overall conditions for using technology, including:

Infrastructure. Access to robust infrastructure is crucial when integrating technology into teaching practice. For example, usage depends on the number of units (e.g. tablets, computers) available per student, which therefore constitutes a meaningful indicator of the overall conditions for using technology in teaching. Areas for identification can include: the age and performance of computers or tablets, whether internet connectivity is sufficient, the reliability of the power supply, and the level of access to relevant software and adaptive technology (e.g. to what extent students with special needs have access to tailored digital tools).

Support functions. Local support functions (such as the level of access to rapid digital support and to pedagogical IT support) are also important aspects that can enable or hinder a teacher’s implementation of technology in teaching.

Leadership. Research emphasises the importance of active leadership in the digitalisation process. Important aspects include translating policies on digitalisation into realistic goals and providing teachers with appropriate professional development. An international survey should therefore include one or a few questions about whether teachers believe that digitalisation policies are defined within their school.

Based on the above, some core questions can be formulated to capture teachers’ overall conditions. Examples of items could be: “I have access to sufficient internet connectivity” or “Students with special needs have access to tailored digital technology”.

Complementing the survey with a performance study for ecological validity

As self-reports and assessments have limitations in capturing teachers’ applied knowledge, a performance study for ecological validity is suggested as a complement to the survey. This means that a subsample of the teachers participating in the survey would be asked to conduct a performance task in which they integrate technology into their teaching. This would provide an opportunity to validate the answers from the questionnaire and assessment against a performance-based measurement of teachers’ applied knowledge. Through the Global Teaching InSights (GT) study, the OECD recently gained experience with the large-scale use of performance measures across countries (OECD, 2020[63]).

To validate results from an international survey on teachers’ general pedagogical knowledge, it is suggested to ask a subsample of participating teachers to make a lesson plan in which technology is an integral part, and to detail the rationale of the design. The design task should be based on teachers’ main subject and teaching level. The lesson plan needs to be written in enough detail for external evaluators to make well-informed evaluations. More specifically, the plan should address the overall purpose of the lesson, a detailed plan in stages, the organisation of each stage and the motives behind it, as well as which technologies are used, for what purposes and how.

An evaluation template that reflects the questions in the practice-based questionnaire is recommended to support the evaluation: for example, whether teachers plan to use the technology for the purpose of instructional design, student learning and/or assessment (relating to teachers’ self-reported practice). Furthermore, teachers’ performance could be qualitatively analysed using a standardised TPACK instrument. Existing performance measurement instruments can be used here, with some modification to reflect the focus on teachers’ Technological Pedagogical Knowledge.

Existing instruments to draw upon could be Graham, Borup and Smith (2012[57]), Pareto and Willermark (2018[61]), and Harris, Grandgenett and Hofer (2010[58]). This approach offers a way of capturing teachers’ applied knowledge, because teacher planning involves concretising the learning goals and the strategies to reach them, as well as considering which approaches to use, which tools to involve and what resources are needed to fulfil the design idea (Willermark, 2018[32]). However, the applicability of such a lesson plan is ultimately determined by its implementation in teaching: realising the instructional plan in highly dynamic and contextualised classroom practice, modifying the approach when necessary and coping with unforeseen events (Pareto and Willermark, 2018[61]).

To capture teachers' applied knowledge, either a full-scale evaluation or a semi-evaluation can be carried out. The two alternatives capture different degrees of teachers’ applied knowledge in practice and require different amounts of resources. In the full-scale evaluation, the lesson plan is realised and evaluated in practice and documented by video recording, in line with the Global Teaching InSights (GT) study (OECD, 2020[63]). The evaluation could be conducted together with the teacher, for example by having the teacher and the observer watch the video recording together. This allows the teacher to comment spontaneously on events or situations, and gives the observer the opportunity to ask well-informed, practice-oriented questions (e.g. “I note that you do X, can you describe how you reasoned?”). In the semi-evaluation, the lesson plan is not realised in practice. Instead, teachers conduct a ‘light-weight evaluation’ of the challenges the plan might meet during realisation (i.e. a type of risk assessment of the plan). It thus adds a hypothetical evaluation of the suggested approach as an extra step. Such a hypothetical evaluation can reveal how aware teachers are of the risks and challenges of conducting technology-based lesson plans in practice, i.e. it can reveal their Technological Pedagogical Knowledge.

Conclusion

Measuring teachers’ knowledge of using technology in teaching is like hitting a moving target: technology is continually changing, and the nature of technological knowledge must change with it. To make a well-founded measurement suitable for a large-scale international survey, a combination of approaches is therefore proposed, in order to capture different aspects of teachers’ technology-related knowledge and skills of using technology in teaching.

References

[50] Ackerman, P., M. Beier and K. Bowen (2002), “What we really know about our abilities and our knowledge”, Personality and Individual Differences, Vol. 33/4, pp. 587-605, http://dx.doi.org/10.1016/S0191-8869(01)00174-X.

[4] Agyei, D. and J. Voogt (2012), “Developing technological pedagogical content knowledge in pre-service mathematics teachers through collaborative design”, Australasian Journal of Educational Technology, Vol. 28/4, pp. 547-564, http://dx.doi.org/10.14742/ajet.827.

[79] Akman, Ö. and C. Güven (2015), “TPACK survey development study for social sciences teachers and teacher candidates”, International Journal of Research in Education and Science, Vol. 1/1, pp. 1-10.

[67] Alayyar, G., P. Fisser and J. Voogt (2012), “Developing technological pedagogical content knowledge in pre-service science teachers: Support from blended learning”, Australasian Journal of Educational Technology, Vol. 28/8, pp. 1298-1316, http://dx.doi.org/10.14742/ajet.773.

[66] Ansyari, M. (2015), “Designing and evaluating a professional development programme for basic technology integration in English as a foreign language (EFL) classrooms”, Australasian Journal of Educational Technology, Vol. 31/6, pp. 699-712, http://dx.doi.org/10.14742/ajet.1675.

[12] Apiola, M., S. Pakarinen and M. Tedre (2011), “Pedagogical outlines for OLPC initiatives: A case of Ukombozi school in Tanzania”, IEEE Africon, Vol. 11, pp. 1-7, http://dx.doi.org/10.1109/AFRCON.2011.6072084.

[42] Archambault, L. and K. Crippen (2009), “Examining TPACK among K-12 online distance educators in the United States”, Contemporary Issues in Technology and Teacher Education, Vol. 9/1, pp. 71-88.

[18] Azmat, G. et al. (2021), “Multi-dimensional evaluation of the impact of mobile digital equipment on student learnings: Preliminary results of the effects of the 2015 Digital Plan”, NOTE D’INFORMATION n° 21.05, Vol. 21.05, pp. 1-4, https://www.education.gouv.fr/media/87698/download.

[17] Azmat, G. et al. (2020), “Évaluation multidimensionnelle de l’impact de l’utilisation d’équipements numériques mobiles sur les apprentissages des élèves [Multidimensional assessment of the impact of the use of mobile digital equipment on student learning]”, Études Document de Travail, https://www.education.gouv.fr/media/74225/download.

[70] Banas, J. and C. York (2014), “Authentic learning exercises as a means to influence preservice teachers’ technology integration self-efficacy and intentions to integrate technology”, Australasian Journal of Educational Technology, Vol. 30/6, pp. 728-746, http://dx.doi.org/10.14742/ajet.362.

[77] Baser, D., T. Kopcha and M. Ozden (2016), “Developing a technological pedagogical content knowledge (TPACK) assessment for preservice teachers learning to teach English as a foreign language”, Computer Assisted Language Learning, Vol. 29/4, pp. 749-764, http://dx.doi.org/10.1080/09588221.2015.1047456.

[19] Bate, F., J. MacNish and S. Males (2012), Parent and student perceptions of the initial implementation of a 1:1 laptop program in Western Australia, IACSIT Press, Singapore, https://researchonline.nd.edu.au/cgi/viewcontent.cgi?article=1050&context=edu_conference.

[13] Bebell, D. and R. Kay (2010), “One to one computing: A summary of the quantitative results from the Berkshire wireless learning initiative”, Journal of Technology, Learning, and Assessment, Vol. 9/2, pp. 1-59.

[2] Bergöö (2005), Vilket svenskämne? Grundskolans svenskämnen i ett lärarutbildningsperspektiv [Which Swedish Subject? Compulsory schools’ Swedish Subjects from a Teacher Education Perspective], Malmö högskola, Lärarutbildningen, Malmö.

[1] Billett, S. (2006), “Relational interdependence between social and individual agency in work and working life”, Mind, Culture, and Activity, Vol. 13/1, pp. 53-69, http://dx.doi.org/10.1207/s15327884mca1301_5.

[46] Bryman, A. (2015), Social Research Methods, Oxford University Press, Oxford.

[22] Burroughs, N. et al. (2019), “A review of the literature on teacher effectiveness and student outcomes”, in Teaching for Excellence and Equity, IEA/Springer, Cham, http://dx.doi.org/10.1007/978-3-030-16151-4_2.

[69] Calik, M. et al. (2014), “Effects of ’Environmental Chemistry’ Elective Course Via Technology-Embedded Scientific Inquiry Model on Some Variables”, Journal of Science Education and Technology, Vol. 23/3, pp. 412-430, http://dx.doi.org/10.1007/s10956-013-9473-5.

[44] Chai, C. et al. (2013), “Exploring Singaporean Chinese language teachers’ technological pedagogical content knowledge and its relationship to the teachers’ pedagogical beliefs”, The Asia-Pacific Education Researcher, Vol. 22/4, pp. 657-666, http://dx.doi.org/10.1007/s40299-013-0071-3.

[64] Chai, C. et al. (2011), “Modeling primary school pre-service teachers’ Technological Pedagogical Content Knowledge (TPACK) for meaningful learning with information and communication technology (ICT)”, Computers & Education, Vol. 57/1, pp. 1184-1193, http://dx.doi.org/10.1016/j.compedu.2011.01.007.

[68] Chen, Y. and S. Jang (2014), “Interrelationship between stages of concern and technological, pedagogical, and content knowledge: A study on Taiwanese senior high school in-service teachers”, Computers in Human Behavior, Vol. 32, pp. 79-91, http://dx.doi.org/10.1016/j.chb.2013.11.011.

[75] Corum, K. et al. (2020), “Developing TPACK for makerspaces to support mathematics teaching and learning”, Proceedings of the Forty-Second Annual Meeting of the North American Chapter of the International Group for the Psychology of Mathematics Education, National Science Foundation, Alexandria, Virginia, https://par.nsf.gov/servlets/purl/10168684.

[34] Cox, S. and C. Graham (2009), “Diagramming TPACK in practice: Using an elaborated model of the TPACK framework to analyze and depict teacher knowledge”, TechTrends, Vol. 53/60, pp. 60-69, http://dx.doi.org/10.1007/s11528-009-0327-1.

[60] Crawford, C. et al. (eds.) (2006), Developing and implementing a technology pedagogical content knowledge (TPCK) for teaching mathematics with technology, Association for the Advancement of Computing in Education (AACE), Orlando, Florida, USA.

[14] Cristia, J. et al. (2017), “Technology and child development: Evidence from the one laptop per child program”, American Economic Journal: Applied Economics, Vol. 9/3, pp. 295-320, http://dx.doi.org/10.1257/app.20150385.

[10] Cuban, L. (2013), Inside the Black Box of Classroom Practice: Change without Reform in American Education, Harvard Education Press, Cambridge.

[55] Curaoglu, O. et al. (2010), A Case Study of Investigating Preservice Mathematics Teachers’ Initial Use of the Next-Generation TI-Nspire Graphing Calculators with Regard to TPACK, Paper presented at the Society for Information Technology & Teacher Education International Conference, https://www.learntechlib.org/p/33973.

[24] Dexter, S. (2008), “Leadership for IT in schools”, in International Handbook of Information Technology in Primary and Secondary Education, Springer, Boston, MA.

[71] Doering, A. et al. (2014), “Technology integration in K-12 geography education using TPACK as a conceptual model”, Journal of Geography, Vol. 113/6, pp. 223-237, http://dx.doi.org/10.1080/00221341.2014.896393.

[41] Drummond, A. and T. Sweeney (2017), “Can an objective measure of technological pedagogical content knowledge (TPACK) supplement existing TPACK measures?”, British Journal of Educational Technology, Vol. 48/4, pp. 928-939, http://dx.doi.org/10.1111/bjet.12473.

[6] Egeberg, G. et al. (2012), “The digital state of affairs in Norwegian schools”, Nordic Journal of Digital Literacy, Vol. 7/1, pp. 73-77.

[8] Erstad, O. and T. Hauge (2011), Skoleutvikling og digitale medier [School Development and Digital Media], Gyldendal akademisk, Oslo.

[25] Ferrari, A. (2012), Digital Competence in Practice: An Analysis of Frameworks, Publications Office of the European Union, Luxembourg.

[35] Graham, C. (2011), “Theoretical considerations for understanding technological pedagogical content knowledge (TPACK)”, Computers & Education, Vol. 57/3, pp. 1953-1960, http://dx.doi.org/10.1016/j.compedu.2011.04.010.

[57] Graham, C., J. Borup and N. Smith (2012), “Using TPACK as a framework to understand teacher candidates’ technology integration decisions”, Journal of Computer Assisted Learning, Vol. 28/6, pp. 530-546, http://dx.doi.org/10.1111/j.1365-2729.2011.00472.x.

[56] Graham, C., S. Cox and A. Velasquez (2009), “Teaching and measuring TPACK development in two preservice teacher preparation programs”, in Proceedings of SITE 2009--Society for Information Technology & Teacher Education International Conference, Association for the Advancement of Computing in Education (AACE), Charleston, SC, USA.

[39] Guerriero, S. (2017), Pedagogical Knowledge and the Changing Nature of the Teaching Profession, OECD Publishing, Paris, http://dx.doi.org/10.1787/9789264270695-de.

[72] Han, I. and W. Shin (2013), “Multimedia case-based learning to enhance pre-service teachers’ knowledge integration for teaching with technologies”, Teaching and Teacher Education, Vol. 34, pp. 122-129, http://dx.doi.org/10.1016/j.tate.2013.03.006.

[58] Harris, J., N. Grandgenett and M. Hofer (2010), “Testing a TPACK-based technology integration assessment rubric”, in Research Highlights in Technology and Teacher Education, Society for Information Technology and Teacher Education, Waynesville, NC.

[26] Hatlevik, O. and K. Christophersen (2013), “Digital competence at the beginning of upper secondary school: Identifying factors explaining digital inclusion”, Computers & Education, Vol. 63, pp. 240-247, http://dx.doi.org/10.1016/j.compedu.2012.11.015.

[76] Hong, J. and F. Stonier (2015), “GIS in-service teacher training based on TPACK”, Journal of Geography, Vol. 114/3, pp. 108-117, http://dx.doi.org/10.1080/00221341.2014.947381.

[5] Howell, J. (2012), Teaching with ICT: Digital Pedagogies for Collaboration and Creativity, Oxford University Press, Oxford.

[78] Hsu, C., J. Liang and Y. Su (2015), “The role of the TPACK in game-based teaching: Does instructional sequence matter?”, Asia-Pacific Education Researcher, Vol. 24/3, pp. 463-470, http://dx.doi.org/10.1007/s40299-014-0221-2.

[29] Instefjord, E. and E. Munthe (2016), “Preparing pre-service teachers to integrate technology: an analysis of the emphasis on digital competence in teacher education curricula”, European Journal of Teacher Education, Vol. 39/1, pp. 77-93, http://dx.doi.org/10.1080/02619768.2015.1100602.

[20] Islam, M. and Å. Grönlund (2016), “An international literature review of 1:1 computing in schools”, Journal of Educational Change, Vol. 17/2, pp. 191-222, http://dx.doi.org/10.1007/s10833-016-9271-y.

[65] Jang, S. and M. Tsai (2013), “Exploring the TPACK of Taiwanese secondary school science teachers using a new contextualized TPACK model”, Australasian Journal of Educational Technology, Vol. 29/4, pp. 566-580, http://dx.doi.org/10.14742/ajet.282.

[73] Joo, Y., K. Lim and N. Kim (2016), “The effects of secondary teachers’ technostress on the intention to use technology in South Korea”, Computers & Education, Vol. 95, pp. 114-122, http://dx.doi.org/10.1016/j.compedu.2015.12.004.

[23] Kafyulilo, A., P. Fisser and J. Voogt (2016), “Factors affecting teachers’ continuation of technology use in teaching”, Education and Information Technologies, Vol. 21/6, pp. 1535-1554, http://dx.doi.org/10.1007/s10639-015-9398-0.

[15] Keengwe, J., G. Schnellert and C. Mills (2012), “Laptop initiative: Impact on instructional technology integration and student learning”, Education and Information Technologies, Vol. 17/2, pp. 137-146, http://dx.doi.org/10.1007/s10639-010-9150-8.

[59] Kereluik, K., G. Casperson and M. Akcaoglu (2010), Coding Pre-service Teacher Lesson Plans for TPACK, Society for Information Technology & Teacher Education International Conference, San Diego, CA, https://www.learntechlib.org/p/33986/.

[27] Kivunja, C. (2013), “Embedding digital pedagogy in pre-service higher education to better prepare teachers for the digital generation”, International Journal of Higher Education, Vol. 2/4, pp. 131-142.

[31] Koehler, M., P. Mishra and W. Cain (2013), “What is Technological Pedagogical Content Knowledge (TPACK)?”, Journal of Education, Vol. 193/3, pp. 13-19, http://dx.doi.org/10.1177/002205741319300303.

[80] Koehler, M. et al. (2014), “The technological pedagogical content knowledge framework”, in Handbook of Research on Educational Communications and Technology, Springer, New York, NY.

[81] Koh, J., C. Chai and L. Tay (2014), “TPACK-in-Action: Unpacking the contextual influences of teachers’ construction of technological pedagogical content knowledge (TPACK)”, Computers & Education, Vol. 78, pp. 20-29, http://dx.doi.org/10.1016/j.compedu.2014.04.022.

[84] König, J. (2015), Background Document: Designing an International Assessment to Assess Teachers’ General Pedagogical Knowledge (GPK), OECD Website, http://www.oecd.org/education/ceri/Assessing%20Teachers%E2%80%99%20General%20Pedagogical%20Knowledge.pdf.

[28] Krumsvik, R. (2008), “Situated learning and teachers’ digital competence”, Education and Information Technologies, Vol. 13/4, pp. 279-290, http://dx.doi.org/10.1007/s10639-008-9069-5.

[49] Lawless, K. and J. Pellegrino (2007), “Professional development in integrating technology into teaching and learning: Knowns, unknowns, and ways to pursue better questions and answers”, Review of Educational Research, Vol. 77/4, pp. 575-614, http://dx.doi.org/10.3102/0034654307309921.

[74] Lee, M. and C. Tsai (2010), “Exploring teachers’ perceived self efficacy and technological pedagogical content knowledge with respect to educational use of the World Wide Web”, Instructional Science, Vol. 38/1, pp. 1-21, http://dx.doi.org/10.1007/s11251-008-9075-4.

[45] Lux, N., A. Bangert and D. Whittier (2011), “The development of an instrument to assess preservice teacher’s technological pedagogical content knowledge”, Journal of Educational Computing Research, Vol. 45/4, pp. 415-431, http://dx.doi.org/10.2190/EC.45.4.c.

[40] Maderick, J. et al. (2016), “Preservice teachers and self-assessing digital competence”, Journal of Educational Computing Research, Vol. 54/3, pp. 326-351, http://dx.doi.org/10.1177/0735633115620432.

[82] Maeng, J. et al. (2013), “Preservice teachers’ TPACK: Using technology to support inquiry instruction”, Journal of Science Education and Technology, Vol. 22/6, pp. 838-857, http://dx.doi.org/10.1007/s10956-013-9434-z.

[16] Martino, J. (2010), One Laptop per Child and Uruguay’s Plan Ceibal: Impact on Special Education, University of Guelph, Ontario, Canada, https://hdl.handle.net/10214/19483.

[9] Mishra, P. and M. Koehler (2006), “Technological pedagogical content knowledge: A framework for teacher knowledge”, Teachers College Record, Vol. 108/6, pp. 1017-1054.

[47] Nederhof, A. (1985), “Methods of coping with social desirability bias: A review”, European Journal of Social Psychology, Vol. 15/3, pp. 263-280, http://dx.doi.org/10.1002/ejsp.2420150303.

[63] OECD (2020), Global Teaching InSights: A Video Study of Teaching, OECD Publishing, Paris, https://dx.doi.org/10.1787/20d6f36b-en.

[11] OECD (2020), TALIS 2018 Results (Volume II): Teachers and School Leaders as Valued Professionals, TALIS, OECD Publishing, Paris, https://dx.doi.org/10.1787/19cf08df-en.

[7] Olofsson, A. et al. (2011), “Uptake and use of digital technologies in primary and secondary schools – a thematic review of research”, Nordic Journal of Digital Literacy, Vol. 10, pp. 103-121.

[52] Orlikowski, W. (2002), “Knowing in practice: Enacting a collective capability in distributed organizing”, Organization Science, Vol. 13/3, pp. 249-273, http://dx.doi.org/10.1287/orsc.13.3.249.2776.

[61] Pareto, L. and S. Willermark (2018), “TPACK in situ: A design-based approach supporting professional development in practice”, Journal of Educational Computing Research, Vol. 57/5, pp. 1186-1226, http://dx.doi.org/10.1177/0735633118783180.

[48] Paulhus, D. (2002), “Socially desirable responding: The evolution of a construct”, in The Role of Constructs in Psychological and Educational Measurement, Lawrence Erlbaum Associates, Inc., Publishers, Mahwah, NJ, US.

[30] Pettersson, F. (2018), “On the issues of digital competence in educational contexts – a review of literature”, Education and Information Technologies, Vol. 23/3, pp. 1005-1021, http://dx.doi.org/10.1007/s10639-017-9649-3.

[3] Säljö, R. (2010), “Digital tools and challenges to institutional traditions of learning: technologies, social memory and the performative nature of learning”, Journal of Computer Assisted Learning, Vol. 26/1, pp. 53-64, http://dx.doi.org/10.1111/j.1365-2729.2009.00341.x.

[62] Schmid, M., E. Brianza and D. Petko (2020), “Developing a short assessment instrument for Technological Pedagogical Content Knowledge (TPACK.xs) and comparing the factor structure of an integrative and a transformative model”, Computers & Education, Vol. 157, 103967, pp. 1-12, http://dx.doi.org/10.1016/j.compedu.2020.103967.

[43] Schmidt, D. et al. (2009), “Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for preservice teachers”, Journal of Research on Technology in Education, Vol. 42/2, pp. 123-149, http://dx.doi.org/10.1080/15391523.2009.10782544.

[33] Shulman, L. (1986), “Those who understand: Knowledge growth in teaching”, Educational Researcher, Vol. 15/2, pp. 4-14, http://dx.doi.org/10.2307/1175860.

[53] So, H. and B. Kim (2009), “Learning about problem based learning: Student teachers integrating technology, pedagogy and content knowledge”, Australasian Journal of Educational Technology, Vol. 25/1, pp. 101-116, http://dx.doi.org/10.14742/ajet.1183.

[83] Stoilescu, D. (2015), “A critical examination of the technological pedagogical content knowledge framework: Secondary school mathematics teachers integrating technology”, Journal of Educational Computing Research, Vol. 52/4, pp. 514-547, http://dx.doi.org/10.1177/0735633115572285.

[54] Tatto, M. (2013), The Teacher Education and Development Study in Mathematics (TEDS-M). Policy, Practice and Readiness to Teach Primary and Secondary Mathematics in 17 Countries: Technical Report, IEA, Amsterdam.

[37] TPACK ORG (2012), TPACK Explained, http://tpack.org/.

[51] Tschannen-Moran, M. and A. Woolfolk Hoy (2001), “Teacher efficacy: Capturing an elusive construct”, Teaching and Teacher Education, Vol. 17/7, pp. 783-805, http://dx.doi.org/10.1016/S0742-051X(01)00036-1.

[38] Ulferts, H. (2019), “The relevance of general pedagogical knowledge for successful teaching: Systematic review and meta-analysis of the international evidence from primary to tertiary education”, OECD Education Working Papers, No. 212, OECD Publishing, Paris, https://dx.doi.org/10.1787/ede8feb6-en.

[21] Warschauer, M. (2006), Laptops and Literacy: Learning in the Wireless Classroom, Teachers College Press, New York.

[32] Willermark, S. (2018), “Technological pedagogical and content knowledge: A review of empirical studies published from 2011 to 2016”, Journal of Educational Computing Research, Vol. 56/3, pp. 315-343, http://dx.doi.org/10.1177/0735633117713114.

[36] Willermark, S. and L. Pareto (2020), “Unpacking the role of boundaries in computer-supported collaborative teaching”, Computer Supported Cooperative Work (CSCW), Vol. 29, pp. 743-767, http://dx.doi.org/10.1007/s10606-020-09378-w.

Notes

1. Link to the questionnaire by Schmidt et al. (2009[43]) [2020-09-29]: https://matt-koehler.com/tpack2/wp-content/uploads/tpack_survey_v1point1.pdf.

Link to the questionnaire by Archambault and Crippen (2009[42]) [2020-09-29]: https://citejournal.org/volume-9/issue-1-09/general/examining-tpack-among-k-12-online-distance-educators-in-the-united-states/#appendix.

2. Examples of items to include: Technological Pedagogical Knowledge, items 29, 35, 36 and 38 from Schmidt et al. (2009[43]) and/or items h, n, l and p from Archambault and Crippen (2009[42]). Technological Knowledge, items 1-6 from Schmidt et al. (2009[43]) and/or items a, g and q from Archambault and Crippen (2009[42]). Pedagogical Knowledge, items 20-26 from Schmidt et al. (2009[43]) and/or items j, c and r from Archambault and Crippen (2009[42]).