Mario Piacentini

OECD

Natalie Foster

OECD

Cesar A. A. Nunes

Universidade Estadual de Campinas, São Paulo

This chapter presents key insights from research in the learning sciences and from experimental evidence on instructional design to inform the design of next-generation assessments. It argues that, according to research in the learning sciences, learning is a social process and expertise requires organised knowledge and the capacity to adapt and transfer that knowledge to novel situations. The chapter then sets forth several assessment design innovations that are aligned with these research insights, including using extended performance tasks that integrate opportunities for learning and iteration, that account for the role of knowledge in performance, and that have “low floors, high ceilings” to cater to different proficiency levels. It argues that such innovations may provide more valid information on how well educational experiences are preparing students for their future.

In order to respond to the challenges of a world that is changing at an unprecedented speed, researchers, educators, policy makers and business leaders have emphasised the need to support 21st Century competencies. As discussed in the Introduction and Chapter 1 of this report, 21st Century competencies refer to the capacity to perform real-life activities such as analysing information critically, creating innovative solutions to problems, working productively with others and communicating effectively to different audiences. These competencies allow individuals to adapt effectively to changes in the labour market and society, and perform tasks on the job or roles within their communities that computers cannot replace (Levy and Murnane, 2004[1]).

The learning experiences that support these higher-order thinking and performance skills involve inquiry and investigation, the application of knowledge to new situations and problems, and the collaborative creation of ideas and solutions (Pellegrino and Hilton, 2012[2]). In these situations, students are invited to reflect on what is important to consider, plan what to do next and decide who to call on for help or feedback. The outcomes of these active learning experiences are often referred to as ‘deeper learning’, a type of learning whose positive effects transfer beyond the initial context of instruction (National Research Council, 2001[3]). 21st Century competencies constitute the integrated body of cognitive, intrapersonal and interpersonal skills that enable students to learn in ways that support not only information retention but also competent behaviour in other situations. As such, deeper learning experiences and 21st Century competencies are connected to each other in a self-reinforcing cycle: 21st Century competencies are developed in the context of deeper learning experiences, and deeper learning does not occur unless students can mobilise multiple 21st Century competencies simultaneously.

As argued in earlier chapters of this report, assessments are important markers of teaching and learning: if we want more students to engage in deeper learning experiences and to develop 21st Century competencies, then we need to develop new assessments that are capable of eliciting and measuring these competencies. Assessments at scale and across multiple cultural contexts can also help us to better understand these new constructs and how they interact in authentic problem situations. Assessments are more likely to yield valid evidence about what students know and can do if they confront students with the types of learning situations that actually require 21st Century competencies in real life, and such an alignment can generate evidence that differentiates between those students who have benefited from experiences of deeper learning at school and those who have not.

This chapter presents insights from research in the learning sciences on how expertise develops and from experimental evidence on instructional design that has implications for how to assess 21st Century competencies. The chapter then describes a number of design innovations for the next generation of assessments of 21st Century competencies that are aligned with these research insights and that have the potential to provide more valid information on how well educational experiences have prepared students for their future.

It is increasingly recognised that expertise in a given domain is not limited to knowing facts and procedures, but extends to being able to organise this knowledge in a mental schema, to develop new solutions when established procedures fail or are unsuitable, and to communicate one’s own emerging understanding and ideas to achieve a particular outcome (National Research Council, 2001[3]). Any assessment involves observing how an individual performs in only a handful of particular situations. Traditional large-scale assessments evolved to foster time and cost efficiency and compliance with established measurement models, resulting in a narrow focus on situations where test takers demonstrate the knowledge they already master rather than interacting with peers or mentors and building new knowledge. The focus of traditional measurement approaches is also limited to the evaluation of response correctness – in other words, abstracting from the cognitive, interpersonal and intrapersonal processes that supported the response. As a result, the interpretation of a person’s performance and behaviours in the types of restricted and static situations used in traditional assessments might not provide particularly valid inferences on that person’s capabilities to think, act and learn in real-life situations outside of the test context.

It is possible to reduce this misalignment between assessments and contemporary theories of how people learn and solve problems in real life. This requires, as a first step, taking stock of the main findings from the research that has investigated the mental structures that support problem solving and learning. Some of this research has involved studies that contrast “experts” with “novices”. In what follows, we use the word “expert” in relative terms to refer to individuals who have constructed solid mental models that connect ideas central to a given domain and who have learned, through participation in key practices, how to apply these ideas to new problems. We therefore do not necessarily refer to “experts” as established professionals with extensive experience in a specific discipline. Conversely, we refer to “novices” as those individuals who have learned the basics of a given domain but who have not had opportunities to consolidate their knowledge through practice.

The second step towards reducing this misalignment – and a much more difficult one – consists of creating an internally consistent system of teaching practices and assessments that reflect these research insights: in this system, deeper learning experiences prepare students for future learning and assessments measure how effectively students have engaged with these deeper learning experiences.

Over the last two decades, one of the main conclusions from research in the learning sciences is that learning is a socially situated process (Dumont, Istance and Benavides, 2010[4]; Darling-Hammond et al., 2019[5]). People develop expertise in a variety of domains, for example literature, engineering or the culinary arts, by participating in the practice of a community of experts and by learning to use the tools, languages and strategies that have been developed within that community (Mislevy, 2018[6]; Ericsson, 2006[7]; Pellegrino and Hilton, 2012[2]). The key to becoming skilful in a domain is acquiring fluency with the language and other cultural representations of the community, learning the ways of thinking and acting that are aligned with those representations, and soliciting and using feedback from other members of the community or from the situations themselves. Throughout life people learn interactively, trying different actions and observing their effects and consequences in the social space (Piaget, 2001[8]).

The fact that learning is mediated by socially-constructed practices has clear implications for instruction. Deeper learning occurs when students are given the opportunity to engage in activities that are realistic, complex, meaningful and motivating, and when they feel part of a community of learners that they can call on for support. In a responsive social setting, learners observe the criteria that others use to judge competence and adapt to these criteria. Innovative pedagogies have engaged students in highly interactive educational activities in which everyone is responsible for each other’s learning. Some evidence shows that such practices, if carefully implemented by teachers, result in better academic results and higher enjoyment of learning – see, for example, the case of Railside school (Boaler and Staples, 2008[9]). The rewards and meaning that students derive from becoming deeply involved in collaborative knowledge building provide them with a strong motivation to learn and positive beliefs about what they can accomplish (Tan et al., 2021[10]).

A recurrent observation from studies that have compared experts to novices is that experts have strong metacognitive skills (Hatano, 1990[11]). In the course of learning and problem solving, experts display regulatory behaviours such as knowing when to apply a procedure, predicting the outcomes of an action, planning ahead, monitoring their progress and efficiently apportioning cognitive and emotional resources. They also question limitations in their existing knowledge and avoid simple interpretations. This capability for self-regulation and self-instruction extends to situations when learning and problem solving happen in collaboration with others (Hadwin, Järvelä and Miller, 2017[12]).

The capacity to regulate one’s own learning and (re)act accordingly is what distinguishes routine experts from adaptive experts, with the latter being “characterized by their flexible, innovative, and creative competencies within the domain” (Hatano and Oura, 2003, p. 28[13]). Adaptive experts are able to detect anomalies in their tasks and are consequently alerted when following established rules might result in sub-optimal outcomes; they also do not mind making errors in some situations, as errors teach them what not to do in particular situations and result in more integrated knowledge (Hatano and Inagaki, 1986[14]).

Research reveals that metacognition does not necessarily develop organically through traditional educational practices but that it can and should be explicitly taught in context (National Research Council, 2001[3]; Roll et al., 2007[15]). Reflecting on one’s own learning – a major component of metacognition – does not typically occur in the classroom: when students are unable to make progress in their learning and are asked to identify the source of difficulty, they tend to report being “stuck” without analysing what they need to make progress. However, there are some notable examples of metacognitive strategies being used to improve learning in various domains. In mathematics, for example, teachers have had success with techniques that combine problem-solving instruction with control strategies for generating alternative problem-solving approaches, evaluating among several courses of action and assessing progress (Schoenfeld, 1985[16]).

Cognitive research has shown that general problem-solving procedures (or “weak methods”), such as trial-and-error or hill climbing, are slow and inefficient (National Research Council, 2001[3]). Experts instead use deep knowledge of the domain (“strong methods”) to solve problems. This deeper knowledge cannot be reduced to sets of isolated facts or propositions; rather, it is knowledge that has been encoded in a way that closely links it with its contexts and conditions of use. When experts face a new problem, they can readily activate and retrieve the subset of their knowledge that is relevant to the task at hand (Simon, 1980[17]). For example, chess experts encode mid-game situations in terms of meaningful clusters of pieces (Chase and Simon, 1973[18]). Research shows that students progress in their mastery of a discipline through similar processes of acquisition and use of increasingly well-structured knowledge schema.

The central role of domain knowledge for performance and future learning has strong implications for the teaching of 21st Century competencies. It would be unfair to expect that students can apply these competencies to a problem they know nothing about and cannot connect to their previous learning experiences. Finding a creative solution or communicating effectively about a topic generally requires a deep understanding of relevant concepts and linguistic and socio-cultural patterns in the given domain. The teaching of these higher-order thinking and behavioural skills thus needs to be embedded within the conventions and “ways of knowing” of each learning area in the curriculum, and should possibly encourage students to establish connections between different disciplines.

As Chapter 1 of this report explained, we have a good understanding of what skills students need in order to learn and function in their future roles within society – but we do not yet have enough instruments to measure them, nor are the existing ones sufficiently adequate. The objective of the following section is to highlight some general characteristics of what we consider to be “next-generation assessments” (NGA, for short), informed by the above insights from the learning sciences, that can yield potentially valid evidence on where students are in their development of 21st Century competencies (in summative applications) and on what they need to do to progress in these skills (in formative applications).

Assessments that aim to measure how prepared students are for deeper learning have to engage students in active and authentic learning processes. As discussed earlier (see Research insight 1), students engage 21st Century competencies in situations in which they interact with others, evaluate available resources, make choices about what to focus on and disregard as well as the course of action to take, try out multiple strategies or iterations, and adapt according to the results. From an assessment perspective, this means providing students with a purposeful challenge that replicates the key features of those educational experiences where deeper learning happens as a result of interactions with the problem situation and knowledgeable others – including the capacity to make decisions (see Chapter 4 of this report for more on the importance of decision making in defining and assessing 21st Century competencies like problem solving).

In the context of summative assessments, in particular, efficiency considerations have led to short, discrete tasks being preferred over longer performance activities. In general, using many short items provides more reliable data on whether students master a given set of knowledge and can execute given procedures because the information is accumulated over a larger number of observations. Measurement is also easier: the evidence is accumulated by applying established psychometric models to items that are fully independent. However, if the purpose of assessment shifts to evaluating students’ capacity to construct new knowledge in choice-rich environments, then students should be given the time they need to demonstrate what they can do in these environments. This includes giving students time and affordances for reflective activities (Research insight 2).
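The link between the number of items and reliability can be illustrated with the Spearman-Brown formula from classical test theory. This is a minimal sketch, not a model used in this report: it assumes that all items are parallel (equally reliable, with independent errors), and the single-item reliability of 0.2 is an arbitrary illustrative value.

```python
def spearman_brown(single_item_reliability: float, n_items: int) -> float:
    """Predicted reliability of a test built from n_items parallel items.

    Assumes parallel items with independent errors (illustrative only).
    """
    r = single_item_reliability
    return n_items * r / (1 + (n_items - 1) * r)


# Reliability rises quickly at first, then levels off as items are added.
for k in (1, 5, 20, 40):
    print(k, round(spearman_brown(0.2, k), 3))
```

Under these assumptions, each added item contributes less than the one before it. The steps of an extended performance task are usually not independent, so the formula would not apply to them directly.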

Extended units that include multiple activities sequenced as steps towards achieving a main learning goal can provide students with a more authentic and motivating experience of assessment. Encouraging a shift in the test taker’s mindset – from “I have to get as many of these test items right” to “I have a challenge to accomplish” – might ultimately provide more valid data (i.e. evidence that is predictive of what students are capable of doing outside of the constrained and stressful context of a test). These extended experiences are more challenging to design because developers need to establish a coherent storyline that keeps students engaged as well as address dependency problems (for example, by providing rescue points to move struggling students from one step to the next).

The OECD PISA 2025 test of Learning in the Digital World incorporates these ideas by including extended test units of 30 minutes. Each unit comprises various phases, including a learning phase (where students learn and apply concepts to simple problems) and a challenge phase (where students apply what they have learned earlier in the unit to solve a more complex problem). Another relevant model is the Cognitively-Based Assessment of, for and as Learning (CBAL) that uses scenario-based tasks designed by modelling high-quality teaching practices (Sabatini, O’Reilly and Wang, 2018[19]). A typical CBAL task includes: 1) a realistic purpose; 2) sequences of tasks that follow learning progressions in a domain; and 3) learning material derived from multiple sources that reflect key practices in the content area.

Advances in technology (further explored in Part II of this report) allow much more data to be captured on how students spend their time in extended tasks by immersing them in simulated environments and communities of practice. These environments can facilitate a more open interpretation of students’ goals and their exploration of the problem constraints, reward diverse solution strategies and outcomes, and provide feedback to learners. This also makes it possible to observe metacognitive processes that are crucial to learning in a non-obtrusive way, tracking how students plan and implement strategies, how they behave when they are stuck and how they respond to feedback (Nunes, Nunes and Davis, 2003[20]). The application of a principled design process can lead to a productive use of these process data to augment the evidence that is derived from final solutions, therefore reducing the trade-off between reliability and authenticity (see Chapters 5-7 of this report).

Despite these advances, efficiency considerations (i.e. to collect as many observations as possible in limited testing time) will remain an important constraint. The argument here is thus not to shift completely from one assessment paradigm (i.e. using only short discrete items) to another (i.e. using only extended performance tasks) but rather to encourage the use of a more diversified set of assessment experiences where the breadth and depth of tasks and associated measurement models are aligned with what the assessment intends to measure.

The socially-constructed nature of learning (Research insight 1) and the important role of knowledge for real-life performance (Research insight 3) both imply that we can hardly assess students’ competencies like creativity, critical thinking or communication in a domain-neutral way. These skills are neither exercised nor observed within a vacuum. In an assessment context, students’ ability to perform these skills will always be observed in a given situation and their knowledge about this context or situation will influence the type of strategies they use as well as what they are able to accomplish (Mislevy, 2018[6]). Attempting to design completely decontextualised assessment problems or scenarios threatens validity: if a student does not require any knowledge to solve a task, can the assessment truly claim to measure the types of complex competencies it claims to be interested in?

When designing assessments of 21st Century competencies, it is important to explicitly identify the knowledge students need to meaningfully engage with test activities and evaluate the extent to which differences in prior knowledge will influence the evidence we can obtain on the target skills. Moving beyond the idea of decontextualised assessments of 21st Century competencies can also help to make more valid claims when reporting what students can or cannot do. In the context of large-scale summative assessments in particular, it might be misleading to make general claims such as “students in country A are better problem solvers than students in country B”. From a single summative assessment we might only be able to claim that “students in country A are better than students in country B at solving problems in the situations presented in the test” – most likely, a limited number of situations contextualised in one or a few knowledge domains (see Chapter 3 of this report for a further discussion on how to define suitable assessment contexts for 21st Century competencies).

We suggest that measuring the relevant knowledge that students have when engaging with a performance task (for example through a short battery of items) should become an integral part of the design and assessment process in NGAs. This information can also help to interpret students’ behaviours and choices in complex performance tasks (see Chapters 6 and 9 for a detailed presentation of the PISA 2025 Learning in the Digital World assessment that adopts this approach and considers students’ actions in the context of what they already know).

Metacognition, self-regulated learning and the capacity to flexibly apply one’s knowledge to address new problems differentiate routine from adaptive experts (Research insight 2). Design choices related to the content and organisation of task sequences in NGAs can draw upon insights from research on instructional design for guidance on how to elicit these competencies in an assessment context. There is evidence that we can make robust claims about students’ preparedness to learn new things by studying how they work on problems they have not encountered before (Roll et al., 2011[21]; Schwartz and Martin, 2004[22]).

One promising method involves “invention activities” that ask students to solve problems requiring concepts or procedures that they have not yet been taught, with the aim of encouraging students to invent methods that capture deep properties of a construct before being taught expert solutions (Roll et al., 2012[23]). While inventing their own original approaches to solving novel problems, students tend to make mistakes and fail to generate canonical solutions. However, experimental evidence shows that students who learn through invention activities are better at transferring their knowledge (i.e. solving other tasks requiring the same knowledge schemes but in a different application) in comparison to students who are directly told what to do and then practice those procedures (Loibl, Roll and Rummel, 2016[24]; Kapur and Bielaczyc, 2012[25]). Invention activities therefore help students to deeply understand concepts, identify the limitations of previous interpretations and procedures when they do not work, and look for new patterns and interpretations that build upon and connect with their earlier knowledge.

Similar ideas can also be found in frameworks defining effective classroom practices, such as the “teaching for understanding” framework by Wiske (1997[26]). In the instructional context, these early attempts to solve problems prepare students to learn from subsequent instruction; in this way, they augment rather than replace instruction. When transposed to an assessment context these types of activities can provide important evidence about whether students can flexibly apply their knowledge schema to unfamiliar contexts as adaptive experts do.

Learning activities have to be carefully designed in order to support students in building their understanding while inventing and interacting with problems. In traditional tests, students are left to their own devices – they typically cannot draw upon anything other than their existing knowledge – and if they do not know the relevant procedure to follow there is little they can do to progress (Schwartz and Arena, 2013[27]). In the real world, we can access resources when learning and problem solving: we compare challenges to previous assignments, search the Internet for similar problems or solution strategies, or ask a knowledgeable other for directions (Research insight 1). Similarly, assessments that challenge students to create knowledge or solutions that are new to them should incorporate relevant resources for learning because problem solving always requires some degree of knowledge (Research insight 3). These resources should be carefully crafted so that they do not give the solution away but rather provide opportunities to learn more about the problem and the likelihood that trying a given solution or implementing a certain strategy will help them to make progress toward a solution.

Contrasting cases represent one approach to providing such structure in learning and invention activities that has proven effective in experimental settings and that could be applied to larger-scale assessments (see Box 2.1). Contrasting cases highlight key features that are relevant to specific decisions or concepts that students might overlook when addressing a problem, especially when they have nothing to compare to their current problem. With contrasting cases, students are encouraged to invent a representation that is general (i.e. works across different cases) rather than particular – thus encouraging knowledge transfer.

Integrating more than a single resource to learn from in an assessment task can provide additional evidence about whether, what and how students choose to learn. For example, Schwartz and Arena (2013[27]) designed a game where young students are provided with a familiar problem (mixing colours) applied to an unfamiliar context (projecting lights onto a stage). Students had to learn that the result of mixing colours for light is different to mixing colours for paint, as light depends on a different set of primary colours (additive colours) than paint (subtractive colours). They had two tools from which to learn this concept – an experiment room, where they could directly mix light colours, and a set of catalogues (i.e. contrasting cases) – which made it possible to track how students decided to learn.
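The concept that students had to discover in this game can be made concrete with a small sketch. The code below is an idealised colour model written for illustration only; it is not the implementation of the game, and the function names `mix_light` and `mix_paint` are hypothetical.

```python
# Idealised colour model (an assumption for illustration, not the game's code).
# Colours are (red, green, blue) triples with each channel in [0, 1].

def mix_light(a, b):
    """Additive mixing: each projected light adds energy, capped at 1."""
    return tuple(min(1.0, x + y) for x, y in zip(a, b))


def mix_paint(a, b):
    """Subtractive mixing: each pigment reflects only part of the light,
    so reflectances multiply channel by channel."""
    return tuple(x * y for x, y in zip(a, b))


RED, GREEN = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
YELLOW, CYAN = (1.0, 1.0, 0.0), (0.0, 1.0, 1.0)

print(mix_light(RED, GREEN))    # red light + green light
print(mix_paint(RED, GREEN))    # red paint + green paint
print(mix_paint(YELLOW, CYAN))  # yellow paint + cyan paint
```

In this simplified model, red and green light combine into yellow, whereas red and green paint absorb each other's reflected light and give a dark result. Real pigments and lights behave less cleanly than these idealised values suggest.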

Schwartz and Martin (2004[22]) designed an invention activity for learning about applications of the mean deviation formula. Students had to find a procedure to identify which of four baseball-pitching machines was the most reliable at aiming at a target. Students were given four grids with different features including the number of balls shot by each machine. By comparing grids students could notice the effect of sample size on their measure, which helped them to understand why the standard deviation formula includes division by the number of observations.
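The effect of sample size that students could notice in this activity can be reproduced with a short worked example. This is a sketch under stated assumptions: the landing positions below are invented for illustration and are not data from the original study.

```python
def total_abs_deviation(positions):
    """Sum of absolute distances from the mean landing position."""
    mean = sum(positions) / len(positions)
    return sum(abs(p - mean) for p in positions)


def mean_deviation(positions):
    """Total absolute deviation divided by the number of observations."""
    return total_abs_deviation(positions) / len(positions)


# Hypothetical data: two machines with the same spread around the target,
# but the second one has pitched three times as many balls.
machine_a = [-2, 2, -2, 2]
machine_b = machine_a * 3

for name, data in (("A", machine_a), ("B", machine_b)):
    print(name, len(data), total_abs_deviation(data), mean_deviation(data))
```

Without the division, machine B looks three times less reliable simply because it pitched more balls. Dividing by the number of observations gives both machines the same score, which matches their identical spread.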

In another experiment, first-year biology students were asked to design walls for a zoo exhibit of small rodents (Taylor et al., 2010[28]). Students were shown images of a squirrel, a chipmunk and a mouse, along with the approximate mass and dimensions of each rodent, and then provided with diagrams of two exhibits (see Figure 2.1). In both cases, the wall design needed to allow one rodent to freely pass from side to side while preventing the other rodent from doing so.

Students realised quickly that the wall in Exhibit 1 could use holes big enough for mice but too small for squirrels, but this simple solution did not work for Exhibit 2. Solution ideas for Exhibit 2 varied but typically students would use differences in mass, length of the chipmunks (to reach higher) or jumping ability to allow the chipmunks to cross. Some students also invented more original ideas, such as a tail scanner.

This problem is analogous to a problem biology students encounter in the study of living cells (e.g. the need for transport proteins to selectively allow certain molecules to cross a cell membrane) although on the surface it appears to have little to do with course material. The researchers found that students who engaged in these invention activities were much quicker to engage with unfamiliar biology problems and provided multiple reasonable hypotheses to explain unfamiliar problems compared to other students.

To complement providing resources for students to make sense of a problem and start inventing solutions (Design innovation 3), NGAs should also consider including guidance during the solution process in the form of advice, feedback or prompts. This type of instructional support can promote deep learning in beginners and enable the observation of decisions they make in their learning (Azevedo and Aleven, 2013[29]; van Joolingen et al., 2005[30]). Targeted feedback and interventions can also reduce the risk that beginners disengage from an assessment because they perceive it to be beyond their capacities – which is especially important in the context of more extended performance tasks (Design innovation 1).

Instructional support and feedback can play a variety of important functions including: 1) engaging a student’s interest when they appear disengaged; 2) increasing their understanding of the requirements of a task when they demonstrate confusion; 3) reducing the degrees of freedom or the number of constituent acts required to reach a solution; 4) maintaining a student’s direction; 5) signalling critical features including discrepancies between what a student has produced and what they recognise as correct; 6) demonstrating or modelling solutions, for example reproducing and/or completing a partial solution attempted by the student; and 7) eliciting articulation and reflection behaviour (Guzdial, Rick and Kehoe, 2001[31]).

In the context of large-scale assessments, feedback to students needs to be automated. This is clearly challenging because effective feedback is both task- and tutee-dependent: the feedback system must be based on a complete model of the task’s demands, affordances and solution space, while also adapting to the performance of the student. Without attending to both, the system cannot generate feedback that is useful for all students, in turn failing to bring each student to their zone of proximal development (Vygotsky, 1978[32]). Artificial intelligence (AI) holds promise for responding to this challenge, at least for some types of learning and assessment experiences (see Chapter 10 of this report for more on AI-enabled adaptive feedback). Another challenge relates to the fact that students often do not proactively seek feedback or consult resources in interactive learning or assessment environments. It is therefore important to design such affordances in a way that they are not too intrusive and distracting while being sufficiently visible and accompanied by explanations that invite test takers to use them.

Researchers have also invested considerable effort in creating multimedia learning environments that incorporate collaboration affordances as a way of supporting and monitoring knowledge-building processes (Lei and Chan, 2012[33]). In these environments, students receive feedback directly from their peers. Integrating these real-time, collaboration features in large-scale learning and assessment landscapes remains a challenge, although explorations are underway (see Rosé and Ferschke (2016[34]) as well as examples described in Chapter 3 of this report).

Including feedback and instructional support in summative tests is often challenged because of fairness concerns (e.g. concerns about unfairly penalising students who do not need support or threats to the validity of assessment claims). Shute, Hansen and Almond (2008[35]) undertook a rigorous evaluation of the psychometric quality of an assessment of algebra delivered by a digital learning system that combined adaptive task sequencing with instructional feedback. A comparison of metrics for a treated (with feedback) and control (without feedback) sample showed that providing instructional support did not make the assessment less able to detect differences between students or less valid. Indeed, providing feedback and support to students is more reflective of authentic learning experiences (Research insight 1). From a measurement perspective, the main challenge remains to develop and validate psychometric models for large-scale assessments that take into account how these resources affect students’ measured ability, as this ability can no longer be considered as fixed but can progress as a result of using resources and feedback (see Chapters 6 and 8 of this report for a more in-depth discussion of this issue).

In NGAs, all students should be able to demonstrate their ability to learn and progress by using the tools and resources available to them, regardless of their initial level of knowledge or skill. Adapting assessment challenges to different abilities improves not only the quality of the measures but also the authenticity and attractiveness of the assessment experience. In real life, people seldom take on challenges that they find either too easy or impossible to achieve, yet in traditional tests this happens quite frequently.

One approach to catering to different student ability levels involves designing tasks that have so-called “low floors, high ceilings”, meaning that they are accessible to all students while still challenging top performers. Ensuring tasks are accessible for all students in terms of connecting to their previous learning experiences responds to the idea that successfully engaging 21st Century competencies requires some degree of relevant knowledge (Research insight 3). However, these types of problems are much more difficult to design than the standard problems found in traditional tests, where the item is matched to one specific level of difficulty and there is typically only one correct response.

One cluster of low floor, high ceiling problems asks students to produce an original artefact: this might be a story, a game, a design for a new product, an investigation report on some news, a speech, etc. These more open performance tasks generate a wide range of qualitatively distinct responses, and even top performers have incentives to use resources that can help them produce a solution that is more complete, richer and unique. The low floor, high ceiling design can also be used in the context of more standardised problem solving tasks if students are told that there are intermediate targets to achieve and that they are expected to progress as much as they can towards a sophisticated solution (see Box 2.2 for an example assessment experience using a low floor, high ceiling approach to task design).

The Platform for Innovative Learning Assessments (PILA) is a research laboratory coordinated by the OECD. Assessments in PILA are designed as learning experiences that provide real-time feedback on student performance, typically for use in the context of classroom instruction. One overall objective of PILA is to make assessment designers, programmers, measurement experts and educators work together to explore new ways to close the gap between learning and assessment.

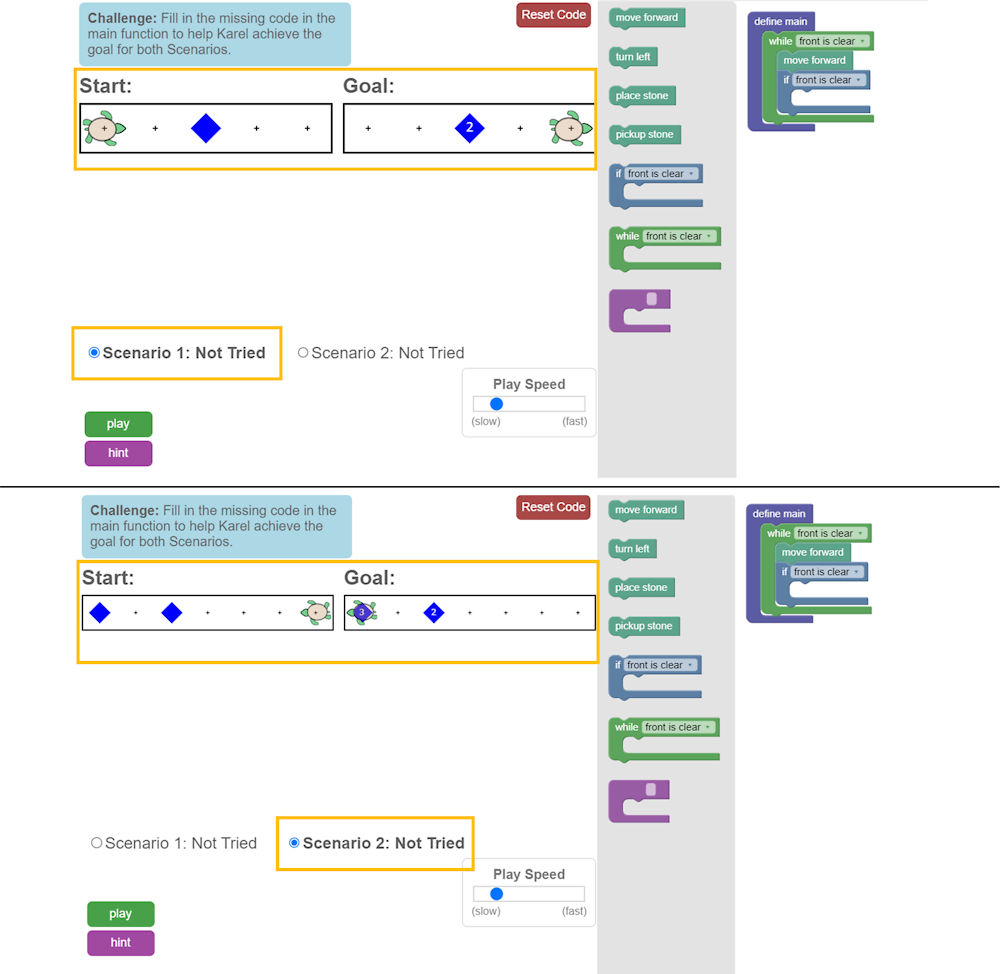

One assessment application developed in PILA focuses on computational problem solving. Students use a block-based visual programming interface to instruct a turtle robot (“Karel”) to perform certain actions. Tasks have a low floor, high ceiling: the intuitiveness of the visual programming language and the embedded instructional tools (e.g. interactive tutorial, worked examples) allow students with no programming experience to engage successfully with simple algorithmic tasks, yet the same environment can present complex problems that challenge expert programmers. Figure 2.2 shows an example task asking students to create a single program that moves Karel to the goal state in two different scenarios. To solve the problem, students can toggle between the two scenarios to visually observe the differences in the environment and how well their program works in both. Even students with solid programming skills generally require multiple iterations before finding an optimal solution for both scenarios, but the scoring models take into account partial solutions (e.g. solving the problem in only one scenario).

Source: OECD (n.d.[36]), OECD’s Platform for Innovative Learning Assessments (PILA), https://pilaproject.org/.

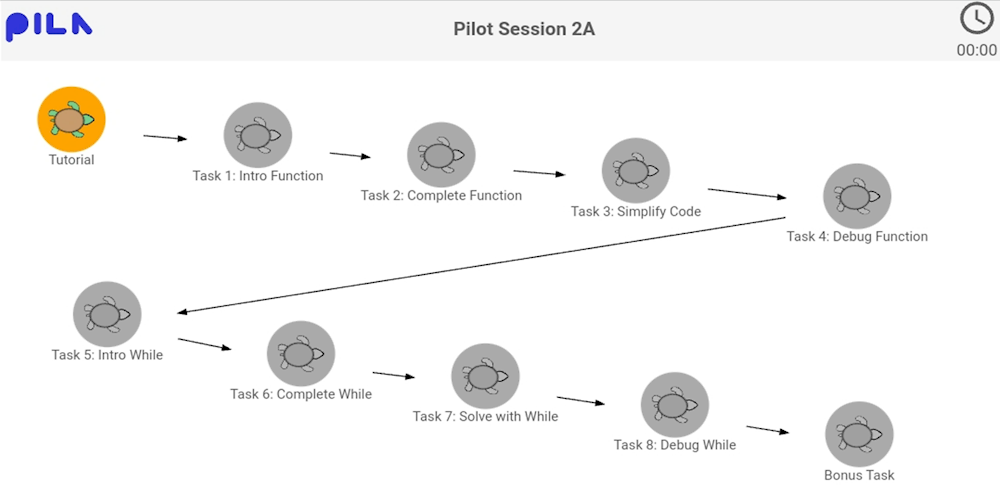

Each PILA assessment experience is also structured as a progression of increasingly complex tasks (see Figure 2.3) that have a common learning target (e.g. using functions efficiently). Assessment designers and teachers have the option of locking students within a particular task until they are able to solve it (i.e. a ‘level-up’ mechanism) or giving students control over how they move along the task sequence. Only highly skilled students are expected to finish the whole task sequence and this is communicated clearly to students at the beginning of the assessment experience to reduce potential frustration. In the future, PILA plans to include adaptive pathways (i.e. problem sequences that adapt in real time to student performance) in order to further align the experience with the students’ previous knowledge and skills.

Source: OECD (n.d.[36]), OECD’s Platform for Innovative Learning Assessments (PILA), https://pilaproject.org/.
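The idea of crediting partial solutions in the two-scenario Karel task can be illustrated with a minimal sketch. It is not PILA's actual scoring model: the `ScenarioResult` structure, the `partial_credit` function and the equal weighting of scenarios are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScenarioResult:
    """Outcome of running one student program in one scenario (hypothetical structure)."""
    name: str
    goal_reached: bool


def partial_credit(results: List[ScenarioResult]) -> float:
    """Return the share of scenarios in which the program reached the goal state.

    Equal weighting of scenarios is an assumption, not a documented PILA rule.
    """
    if not results:
        return 0.0
    solved = sum(1 for r in results if r.goal_reached)
    return solved / len(results)


# A program that works in only one of the two scenarios earns half credit.
attempt = [
    ScenarioResult("scenario_1", goal_reached=True),
    ScenarioResult("scenario_2", goal_reached=False),
]
score = partial_credit(attempt)
```

A real scoring model would likely also draw on process data, such as the number of iterations or the efficiency of the final program, rather than on goal attainment alone.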

Adaptive designs can also address the complexity of measuring learning-in-action amongst heterogeneous populations of students. A relatively simple way to cater to different student ability groups involves creating scenarios where students have a complex goal to achieve and they progress towards this goal by completing a sequence of tasks that gradually increase in difficulty (similar to a “level-up” mechanism, also described in Box 2.2). More proficient students will quickly complete the initial set of simple tasks, after which they will encounter problems that challenge them. Less prepared students are also still able to engage meaningfully with the tasks and demonstrate what they can do in this task sequence design, even if they do not complete the full sequence. Both groups of students work at the cutting edge of their abilities, with obvious benefits in terms of measurement quality and test engagement. With current technologies, this design could be further improved by introducing multiple adaptive paths within a scenario: on the basis of the quality of their work, students could be directed on-the-fly towards easier or more difficult sub-tasks.
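The on-the-fly routing described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a description of any operational system: the five difficulty levels, the quality score between 0 and 1, and the two thresholds (`step_up`, `step_down`) are hypothetical choices.

```python
from typing import List


def next_difficulty(current: int, quality: float,
                    lowest: int = 1, highest: int = 5,
                    step_up: float = 0.8, step_down: float = 0.4) -> int:
    """Choose the difficulty level of the next sub-task.

    quality is an assumed 0-1 rating of the student's work on the current
    sub-task; the thresholds are illustrative, not empirically derived.
    """
    if quality >= step_up:
        return min(current + 1, highest)   # strong work: harder sub-task
    if quality < step_down:
        return max(current - 1, lowest)    # weak work: easier sub-task
    return current                         # otherwise stay at the same level


def route(qualities: List[float], start: int = 1) -> List[int]:
    """Return the sequence of difficulty levels a student is routed through."""
    path = [start]
    for quality in qualities:
        path.append(next_difficulty(path[-1], quality))
    return path


# Two strong performances, one weak, one middling.
path = route([0.9, 0.9, 0.3, 0.6])  # [1, 2, 3, 2, 2]
```

In this sketch the student climbs two levels, drops back one after a weak response and then stays put, so the sequence settles near the level at which the student is challenged but still able to make progress.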

This chapter has argued that the next generation of assessments should focus on observing and interpreting how students solve complex problems and learn to do new things. Exclusive reliance on traditional tests risks encouraging a skill-and-drill education system that does not prepare students adequately for future learning and for solving problems they have not yet seen. Students use 21st Century competencies to learn and solve problems in situations in which they interact with others, evaluate what course of action to take, consider their available resources, try out multiple strategies and adapt according to the results. If we want to assess those competencies, then we must reproduce the interactive features of these learning situations in assessments – otherwise we risk measuring something different.

In order to design these new assessments, we need a good understanding of what drives productive learning and therefore what students should be expected to demonstrate in such situations. Research comparing novices to experts (i.e. those who can apply their knowledge and skills to novel problems) tells us that experts acquire competence through interactions in communities of practice, organise their knowledge into schemas that they continuously consolidate, and overcome the limits of their current knowledge through self-regulation and reflection. These processes can be reproduced and observed, at least to some extent, with extended assessment tasks that stimulate students to engage productively with learning resources and affordances in relatively open environments. The research on instructional design points towards some general design innovations that are worth trialling at scale in assessment. These include the use of interactive and extended performance tasks that confront students with problems they have not seen before, that provide them with resources to explore and build their understanding, that assist them with feedback and support when they struggle to make progress, and that adapt in terms of complexity to what students can and cannot do.

Clearly, creating these types of assessment experiences that enable the observation of learning-in-action is not without complexity from a design perspective; making sure that they work from a measurement perspective is even more challenging. Enriching the range of assessments we use to measure students’ development of 21st Century competencies will require coordinated efforts in multiple directions. First, we have to better understand how learning unfolds across different domains and types of human endeavours and how to design tasks that reproduce the key elements of the social processes behind expertise. Second, we need improved measurement models that can validly interpret complex patterns of behaviours in dynamic environments, where both a student’s knowledge and the problem state change as a result of their actions. Third, we need enhanced processes to validate the inferences we make from these more complex and performance-oriented assessments, including their sensitivity to cross-cultural differences.

Finally, we need to keep in mind that the complex patterns of behaviours generated by these assessments may ultimately not be strong or separable enough to make claims about students – but it is important to find this out. We contend that we must take on this challenge because, for better or worse, assessments drive the teaching and learning that takes place within education systems. As much as teachers, business leaders and global policymakers might affirm the importance of developing competencies like persistence, critical thinking or collaboration, ultimately students and teachers will be guided by the focus of assessments.

[29] Azevedo, R. and V. Aleven (eds.) (2013), International Handbook of Metacognition and Learning Technologies, Springer, New York, https://doi.org/10.1007/978-1-4419-5546-3.

[9] Boaler, J. and M. Staples (2008), “Creating mathematical futures through an equitable teaching approach: The case of Railside School”, Teachers College Record: The Voice of Scholarship in Education, Vol. 110/3, pp. 608-645, https://doi.org/10.1177/016146810811000302.

[18] Chase, W. and H. Simon (1973), “Perception in chess”, Cognitive Psychology, Vol. 4/1, pp. 55-81, https://doi.org/10.1016/0010-0285(73)90004-2.

[5] Darling-Hammond, L. et al. (2019), “Implications for educational practice of the science of learning and development”, Applied Developmental Science, Vol. 24/2, pp. 97-140, https://doi.org/10.1080/10888691.2018.1537791.

[4] Dumont, H., D. Istance and F. Benavides (eds.) (2010), The Nature of Learning: Using Research to Inspire Practice, OECD Publishing, Paris, https://doi.org/10.1787/9789264086487-en.

[7] Ericsson, K. (2006), “The influence of experience and deliberate practice on the development of superior expert performance”, in Ericsson, K. et al. (eds.), The Cambridge Handbook of Expertise and Expert Performance, Cambridge University Press, Cambridge, https://doi.org/10.1017/cbo9780511816796.038.

[31] Guzdial, M., J. Rick and C. Kehoe (2001), “Beyond adoption to invention: Teacher-created collaborative activities in higher education”, Journal of the Learning Sciences, Vol. 10/3, pp. 265-279, https://doi.org/10.1207/s15327809jls1003_2.

[12] Hadwin, A., S. Järvelä and M. Miller (2017), “Self-regulation, co-regulation, and shared regulation in collaborative learning environments”, in Schunk, D. and J. Greene (eds.), Handbook of Self-Regulation of Learning and Performance, Routledge, New York, https://doi.org/10.4324/9781315697048-6.

[11] Hatano, G. (1990), “The nature of everyday science: A brief introduction”, British Journal of Developmental Psychology, Vol. 8/3, pp. 245-250, https://doi.org/10.1111/j.2044-835x.1990.tb00839.x.

[14] Hatano, G. and K. Inagaki (1986), “Two courses of expertise”, in Stevenson, H., H. Azuma and K. Hakuta (eds.), Child Development and Education in Japan, Freeman, New York.

[13] Hatano, G. and Y. Oura (2003), “Commentary: Reconceptualizing school learning using insight from expertise research”, Educational Researcher, Vol. 32/8, pp. 26-29, https://doi.org/10.3102/0013189x032008026.

[25] Kapur, M. and K. Bielaczyc (2012), “Designing for productive failure”, Journal of the Learning Sciences, Vol. 21/1, pp. 45-83, https://doi.org/10.1080/10508406.2011.591717.

[33] Lei, C. and C. Chan (2012), “Scaffolding and assessing knowledge building among Chinese tertiary students using e-portfolios”, in The Future of Learning: Proceedings of the 10th International Conference of the Learning Sciences (ICLS 2012), International Society of the Learning Sciences, Sydney.

[1] Levy, F. and R. Murnane (2004), The New Division of Labor: How Computers are Creating the Next Job Market, Princeton University Press, Princeton and Oxford.

[24] Loibl, K., I. Roll and N. Rummel (2016), “Towards a theory of when and how problem solving followed by instruction supports learning”, Educational Psychology Review, Vol. 29/4, pp. 693-715, https://doi.org/10.1007/s10648-016-9379-x.

[6] Mislevy, R. (2018), Sociocognitive Foundations of Educational Measurement, Routledge, New York and London.

[3] National Research Council (2001), Knowing What Students Know: The Science and Design of Educational Assessment, The National Academies Press, Washington, D.C., https://doi.org/10.17226/10019.

[20] Nunes, C., M. Nunes and C. Davis (2003), “Assessing the inaccessible: Metacognition and attitudes”, Assessment in Education: Principles, Policy & Practice, Vol. 10/3, pp. 375-388, https://doi.org/10.1080/0969594032000148109.

[36] OECD (n.d.), Platform for Innovative Learning Assessments, https://pilaproject.org/ (accessed on 3 April 2023).

[2] Pellegrino, J. and M. Hilton (2012), Education for Life and Work: Developing Transferable Knowledge and Skills in the 21st Century, The National Academies Press, Washington, D.C., https://doi.org/10.17226/13398.

[8] Piaget, J. (2001), The Language and Thought of the Child, Routledge, London.

[21] Roll, I. et al. (2011), “Improving students’ help-seeking skills using metacognitive feedback in an intelligent tutoring system”, Learning and Instruction, Vol. 21/2, pp. 267-280, https://doi.org/10.1016/j.learninstruc.2010.07.004.

[15] Roll, I. et al. (2007), “Designing for metacognition—applying cognitive tutor principles to the tutoring of help seeking”, Metacognition and Learning, Vol. 2/2-3, pp. 125-140, https://doi.org/10.1007/s11409-007-9010-0.

[23] Roll, I. et al. (2012), “Evaluating metacognitive scaffolding in guided invention activities”, Instructional Science, Vol. 40/4, pp. 691-710, https://doi.org/10.1007/s11251-012-9208-7.

[34] Rosé, C. and O. Ferschke (2016), “Technology support for discussion-based learning: From computer supported collaborative learning to the future of Massive Open Online Courses”, International Journal of Artificial Intelligence in Education, Vol. 26/2, pp. 660-678, https://doi.org/10.1007/s40593-016-0107-y.

[19] Sabatini, J., T. O’Reilly and Z. Wang (2018), “Scenario-based assessment of multiple source use”, in Braasch, J., I. Braten and M. McCrudden (eds.), Handbook of Multiple Source Use, Routledge, New York.

[16] Schoenfeld, A. (1985), Mathematical Problem Solving, Academic Press, New York.

[27] Schwartz, D. and D. Arena (2013), Measuring What Matters Most: Choice-Based Assessments for the Digital Age, The MIT Press, https://doi.org/10.7551/mitpress/9430.001.0001.

[22] Schwartz, D. and T. Martin (2004), “Inventing to prepare for future learning: The hidden efficiency of encouraging original student production in statistics education”, Cognition and Instruction, Vol. 22/2, pp. 129-184, https://doi.org/10.1207/s1532690xci2202_1.

[35] Shute, V., E. Hansen and R. Almond (2008), “You can’t fatten a hog by weighing it - or can you? Evaluating an assessment for learning system called ACED”, International Journal of Artificial Intelligence in Education, Vol. 18/4, pp. 289-316, https://myweb.fsu.edu/vshute/pdf/shute%202008_a.pdf (accessed on 26 March 2018).

[17] Simon, H. (1980), “Problem solving and education”, in Tuma, D. and R. Reif (eds.), Problem Solving and Education: Issues in Teaching and Research, Erlbaum, Hillsdale, https://iiif.library.cmu.edu/file/Simon_box00013_fld00890_bdl0001_doc0001/Simon_box00013_fld00890_bdl0001_doc0001.pdf (accessed on 20 March 2023).

[10] Tan, S. et al. (2021), “Knowledge building: Aligning education with needs for knowledge creation in the digital age”, Educational Technology Research and Development, Vol. 69/4, pp. 2243-2266, https://doi.org/10.1007/s11423-020-09914-x.

[28] Taylor, J. et al. (2010), “Using invention to change how students tackle problems”, CBE—Life Sciences Education, Vol. 9/4, pp. 504-512, https://doi.org/10.1187/cbe.10-02-0012.

[30] van Joolingen, W. et al. (2005), “Co-Lab: Research and development of an online learning environment for collaborative scientific discovery learning”, Computers in Human Behavior, Vol. 21/4, pp. 671-688, https://doi.org/10.1016/j.chb.2004.10.039.

[32] Vygotsky, L. (1978), Mind in Society: The Development of Higher Psychological Processes, Harvard University Press, Massachusetts.

[26] Wiske, M. (ed.) (1997), Teaching for Understanding: Linking Research with Practice, Jossey-Bass, San Francisco.