Mario Piacentini

OECD

Natalie Foster

OECD

This chapter provides a simple guiding framework to identify gaps within existing assessment systems and orient decisions on which kinds of next-generation assessments to develop. The chapter does not prescribe a fixed or exhaustive list of new assessments that should be conducted nationally or internationally; rather, it provides a framing for how decision makers might map their assessment needs and define their priorities when developing new assessments. The chapter presents several examples of innovative assessments of 21st Century competencies that are consistent with this framing approach.

As set forth in the Introduction and previous chapters of this report, mastering 21st Century competencies means being ready to successfully engage with different life situations and roles including those that are difficult to anticipate. Chapter 2 also argued that it is possible to assess these competencies by observing and interpreting how students engage with complex problems in interactive and resource-rich assessment environments that include opportunities for learning. The types of situations that students will need to successfully face throughout their lives are extremely diverse and in turn will call upon a combination of 21st Century competencies. Part of the challenge when it comes to assessing these competencies thus lies in being able to develop a sufficiently rich and diverse set of assessment experiences that adequately balances and reflects this diversity.

This chapter aims to provide some guidance on the complex challenge of determining where there are gaps in assessment systems and deciding how to address them by developing next-generation assessments (NGA). It does not prescribe a fixed or exhaustive list of assessments that should be conducted nationally and internationally; rather, it provides a simple framework to help decision makers map their assessment needs and define their priorities in the context of developing new assessments that assign value to a wider set of learning outcomes and capabilities. The chapter presents several examples of innovative assessments of 21st Century competencies that are consistent with this framing.

As argued in Chapter 1 of this report, different types of authentic problems or learning activities call upon a different combination of knowledge and skills. One example might be validating a claim, which primarily requires students to understand what information needs researching, to identify the available tools to conduct the research, and to engage their motivation and self-regulation skills to conduct a thorough investigation and process information critically. Another example might be debugging a dysfunctional system or inventing a more efficient method to achieve a desired outcome; these problems primarily require exploration and ideation skills, the capacity to implement and monitor strategies, and persistence.

When it comes to defining the knowledge and skills required to tackle a particular problem or learning task, the context of application clearly matters too: developing an engaging movie idea and building an efficient IT network can both be considered creative activities but they each call upon a different set of domain-relevant knowledge and tools and emphasise different aspects of creativity-related skills and dispositions. Similarly, learning and problem solving in a group setting involves different processes and requires additional skills compared with individual learning and problem solving.

The point here is to underline that decisions about what to assess and how to define assessment constructs depend on multiple contextual elements that need to be considered together and that need to be explicitly identified and addressed in the initial stages of assessment design. If the goal is to assess 21st Century competencies in a more valid and comprehensive manner, then we need a holistic system of assessments that reflect the diversity of authentic problems and learning activities that engage those competencies – in both disciplinary and cross-disciplinary contexts.

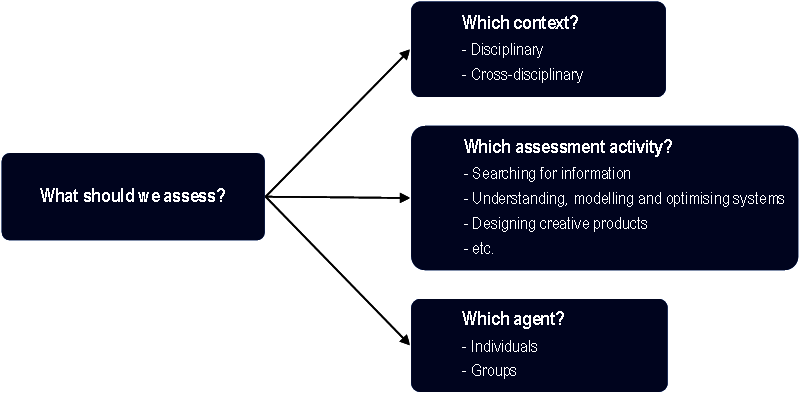

While the scope of that realisation may seem daunting, we suggest a simple framework for how we might think about determining what to assess when the intended purpose is to provide better quality information about students’ development of 21st Century competencies. We argue that three interrelated questions should guide decision making on the focus of assessments (see Figure 3.1), and therefore how target constructs are defined and assessment tasks are designed:

1. For which kinds of roles and related activities do we want to understand students’ preparedness? This question relates to explicitly defining the scenarios and assessment activity (or activities) of interest, and the relevant practices students should demonstrate while engaging in those activities.

2. In which contexts of practice can students engage in these activities? This question relates to acknowledging the relevant body of context-relevant knowledge, skills and attitudes that students need to engage productively with a given type of activity within a given context of practice. In other words, it relates to situating the activity within the boundaries of a discipline or making it cross-disciplinary but specifying the context(s) of application.

3. For the purpose of assessment, are those activities organised as an individual or a group activity? This question relates to determining whether the test taker is working independently or collaboratively during the assessment.

Our perspective is that we can make robust claims on students’ preparedness for their future if we collect data on how they engage with different types of relevant activities; if we contextualise those activities in a sufficiently diverse set of disciplinary and cross-disciplinary domains; and if we – for at least some of the activities – provide opportunities for collaborative work. These assessments can be designed as valuable learning activities in their own right, such that using them does not take time away from important educational targets.
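The three questions can be read as the axes of a coverage map. The sketch below is only an illustration of that idea, not a tool proposed in this report: the context labels, the two example assessments and the `find_gaps` helper are all hypothetical, and a real mapping exercise would use whatever categories decision makers consider relevant.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical labels: the three activity clusters follow this chapter;
# the context names and the two modes are illustrative assumptions.
ACTIVITIES = ("information", "systems", "design")
CONTEXTS = ("science", "history", "cross-disciplinary")
MODES = ("individual", "group")


@dataclass(frozen=True)
class Assessment:
    name: str
    activity: str  # question 1: which kind of activity?
    context: str   # question 2: which context of practice?
    mode: str      # question 3: individual or group?


def find_gaps(portfolio):
    """Return every (activity, context, mode) cell that no assessment covers."""
    covered = {(a.activity, a.context, a.mode) for a in portfolio}
    return [cell for cell in product(ACTIVITIES, CONTEXTS, MODES)
            if cell not in covered]


# Two made-up assessments standing in for an existing assessment system.
portfolio = [
    Assessment("virtual lab task", "systems", "science", "individual"),
    Assessment("source-bias inquiry", "information", "history", "group"),
]
gaps = find_gaps(portfolio)
print(len(gaps), "uncovered cells, for example", gaps[0])
```

Listing the empty cells does not by itself say which gaps matter most; it simply makes explicit where no evidence is currently being collected.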

In Chapter 2 of this report, we outlined the defining features of next-generation assessments (NGA) of 21st Century competencies and argued that the types of authentic problems suited to assess them all share a set of common characteristics. These include: 1) requiring students to draw on robust knowledge schemes within a disciplinary domain or to integrate knowledge from several domains; 2) allowing students to work through them in different ways that may lead to different, yet reasonable outcomes; 3) requiring iterative processes of reasoning and doing, and therefore calling upon metacognitive reflection; and 4) enabling most students (regardless of ability level) to make some progress towards their learning and problem-solving goal(s), while allowing proficient and motivated students to produce rich and sophisticated solutions. In sum, we suggest that NGA should be interactive and resource-rich, and based on extended, iterative and adaptive tasks that test students on their capacity to create knowledge or solve complex and authentic problems.

We also argued that a student’s performance in these types of assessments depends on their knowledge or prior experience of situations that have similar patterns to the ones presented in the assessment. In other words, the domain of application clearly matters when it comes to student performance and consequently the interpretation of their performance. It is therefore critically important at the beginning of an assessment design process to make explicit and motivated choices on whether to contextualise NGA within a specific knowledge domain or to situate tasks across multiple disciplines. Cross-disciplinary here does not mean taking a domain-general approach, as the competencies that students need to engage still depend on a well-defined set of knowledge; rather, cross-disciplinary implies only that the knowledge required to engage with those tasks is not limited by the bounds of a single discipline.

In education, the most widely used assessments of learning outcomes are typically set in one single disciplinary area (e.g. mathematics, biology, history) and primarily focus on the reproduction of acquired knowledge and procedures relevant to that discipline. We argue that, if applied to disciplinary domains along with more traditional assessment approaches, NGA could bring a better balance between the testing of disciplinary knowledge and the evaluation of students’ capacity to apply this knowledge to new problems in authentic contexts.

NGA invite students to engage in authentic practices that reflect how disciplinary knowledge is used in real life, to address both professional and everyday problems. In science, for example, an NGA could ask students to engage in an exploration of a scientific phenomenon in a virtual lab using relevant tools and progressing through the sequence of decisions that real scientists follow in their professional practice (see Chapter 4 of this report for a more detailed proposal for assessing complex problem solving in science and engineering domains). Similarly in history, students might be asked to collaboratively investigate and find biases in an historical account of an event.

While we argue that the NGA principles outlined in Chapter 2 of this report should be adopted in subject-specific assessments to deepen evaluations of disciplinary expertise, we also acknowledge that there is value in situating NGA in contexts that do not reflect established school subjects. This is simply because life outside of school is not neatly organised by subject area. Moreover, assessment tasks that span across different disciplinary areas may better reflect the nature of classroom-based, knowledge-building activities that have proven effective for developing 21st Century competencies.

Research shows that engaging students in “epistemic games”, where they simulate the work of professionals, is a productive pedagogical choice for developing 21st Century competencies (Shaffer et al., 2009[1]). Epistemic games are immersive, technology-enhanced role-playing games where players learn to think like doctors, lawyers, engineers, architects, etc. Evidence shows that what students learn during epistemic games transfers beyond the game scenario and helps them to become fluent in valuable social practices that cross disciplinary boundaries. For example, when learning to investigate a news report as journalists do, learners acquire critical thinking skills that will be valuable in their lives no matter their future profession. Many professional roles also require individuals to connect different areas of disciplinary knowledge and use their intrapersonal and interpersonal skills to solve unfamiliar problems. Some existing innovative assessments adopt this approach and simulate or immerse students in a professional task where they have to draw upon and creatively apply their knowledge from multiple disciplines (see Box 3.1).

In SimCityEdu, students assume the role of a city planner (Mislevy et al., 2014[2]). The 3-D simulation environment includes many of the features of a real city: students can build or demolish buildings, transport systems, power plants and schools, as well as interrogate virtual agents on the state of the city. The assessment mission requires students to replace large polluters in the city with green technologies to improve the air quality while at the same time supporting the city’s employment. The assessment within the game was designed to measure students’ capacity to understand, intervene and optimise systems that are comprised of multiple variables – a 21st Century skill that is generally referred to as systems thinking or complex problem solving (Arndt, 2006[3]).

Success in the assessment demands strong metacognitive and reflective capabilities: in the fast-moving simulation, students have to analyse what happened, why it happened, what the consequences are, and what to do next. Students must also understand a city planner’s role, be able to read data on employment and pollution as well as understand written language well enough to make sense of the game’s goals, the citizens’ complaints and other live feedback. The example nicely illustrates how simulated professional contexts provide fertile grounds for assessing 21st Century competencies that cross disciplinary domains. It also demonstrates how any assessment of a particular 21st Century skill – in this case, systems thinking – cannot be decoupled from students’ prior knowledge and other skills.

Simulating professional work thus represents a fertile ground for cross-disciplinary assessments. However, readiness for life – the core aspiration behind efforts to teach and measure students’ development of 21st Century competencies – implies more than just being prepared for undertaking a particular job or shifting to a new job when circumstances change. Preparing students for life also involves giving them the means to make decisions in the social and civic sphere. Making responsible decisions in these contexts requires students to develop an understanding of how various social and civic problems arise as well as how they can be solved. Arguably, multiple disciplines contribute to such a complex body of knowledge.

Research shows that the capacity to act as members of a social group and in the public sphere can be acquired through collaborative, project-based learning experiences where students participate in the life of a real or simulated community, expressing opinions on issues, practising decision making and taking on different roles (Feldman et al., 2007[4]). It follows that some NGA should be contextualised in situations where students have to act as responsible citizens, confronting problems involving a group of peers, a neighbourhood or wider communities. Modern simulation-based assessments can incorporate many of these experiential learning techniques, affording opportunities to make social choices and develop empathetic understanding by projecting oneself through an avatar (Raphael et al., 2009[5]). These types of simulations are particularly suited to assess socio-emotional skills like communication, cooperation and empathy, and an increasing number of role-play games have been designed to assess them in a stealth way. For example, in the game Hall of Heroes, students enrol in a superhero middle school where they must develop their powers and skills to make friends, resist peer pressure and save the school from a supervillain (Irava et al., 2019[6]).

One significant challenge concerning cross-disciplinary assessments of 21st Century competencies is the lack of solid theories about how knowledge and skills develop in these contexts. Precisely defining which factors should be considered construct-relevant or -irrelevant, and what exactly constitutes “good performance”, in cross-culturally valid ways, are related challenges (see Chapter 6 of this report for an in-depth example of domain analysis and modelling for complex constructs).

Not all assessment problems can give us a rich body of evidence on learners’ cognitive, metacognitive, attitudinal and socio-emotional skills. Traditional models of problem solving, known as phase models (Bransford and Stein, 1984[7]), suggest that all problems can be solved if individuals: 1) identify the problem; 2) generate alternative solutions; 3) evaluate those solutions; 4) choose and implement a solution; and 5) evaluate the effectiveness of the chosen solution. While these descriptions of general processes are useful, they might wrongly imply that problem solving is a uniform activity (Jonassen and Hung, 2008[8]). In reality, problems vary significantly in several important ways including the context in which they occur, their structure or openness, and the combination of knowledge and skills that the problem solver needs in order to reach a successful outcome.

We illustrate here three clusters of assessment activities that can likely provide valid evidence about how well learning experiences have prepared students for their future. We name these three clusters as follows: 1) searching for, evaluating and sharing information; 2) understanding, modelling and optimising systems; and 3) designing creative products. This is not by any means an exhaustive typology of NGA activities; a definitive typology does not exist and might even have the perverse effect of constraining the ideation of new assessments, not to mention the fact that the types of problems and activities for which students will need to be prepared will continue to evolve over time. Moreover, we acknowledge that these three clusters of activities are not mutually exclusive and that they can overlap to some extent. This is particularly so in the context of classroom assessment where it would be possible to combine them into rich, extended experiences of knowledge creation and problem solving. However, we find these three clusters illustrative of different problem types that draw upon distinctly different sets of knowledge and skills but that each provide opportunities to observe higher-order thinking and learning processes.

In this cluster of activities, the main problem solving or learning goal for test takers consists of searching for and using information to reason about a problem and communicate a supported conclusion. These activities focus on how students interact with various types of media and information resources, and represent authentic and relevant activities in virtually any disciplinary or cross-disciplinary knowledge area (i.e. context of practice).

Engaging in these types of activities is frequently defined in the literature as information problem solving (Brand-Gruwel, Wopereis and Vermetten, 2005[9]; Wolf, Brush and Saye, 2003[10]). Information problem solving emphasises 21st Century competencies such as critical thinking, synthesis and argumentation, communication and self-regulated learning, which are all core competencies that students need for their future – and increasingly so as the rise of the Internet and social media has brought about changes to the way people consume news and information (Brashier and Marsh, 2020[11]; Flanagin, 2017[12]). However, research shows that many students are not able to solve information problems successfully (Bilal, 2000[13]; Large and Beheshti, 2000[14]).

In an NGA context, the sequencing of tasks in this activity cluster should require students to identify their information needs, locate information sources in online or offline environments, extract, organise and compare information from various sources, reconcile conflicts in the information, and make decisions about what information to share with others and how.

Several existing assessment examples focus on these types of information problems and incorporate the NGA design principles described in Chapter 2 of this report. In the United States, the National Assessment of Educational Progress (NAEP) Survey Assessments Innovation Lab (SAIL) “Virtual World for Online Inquiry” project (Coiro et al., 2019[15]) developed a virtual platform simulating a micro-city world, where students are presented with an open inquiry challenge (e.g. to find out whether an historical artefact should be displayed in the local museum). Students must build their knowledge by planning an inquiry strategy with a virtual partner, asking questions to virtual experts, searching for information on a web environment or in a virtual library, and using different digital tools to take notes and write a report. The environment includes several adaptive design features (e.g. hints, prompts and levelling) to help students regulate their inquiry processes and to encourage efficient and effective information gathering.

Similar assessments can also be fully integrated within learning experiences, with evidence about students’ competencies extracted in a “stealth” way by analysing the sequences of choices students make during their inquiry processes in addition to the final outcome of their information search and synthesis (see Box 3.2 on the Betty’s Brain learning environment).

In the Betty’s Brain environment developed by researchers at Vanderbilt University (Biswas, Segedy and Bunchongchit, 2015[16]), students engage in an extended learning-by-teaching task in which they teach a virtual agent, “Betty”, about a scientific phenomenon. They do so by searching through hyperlinked resources and constructing a concept map that represents their emergent understanding of the phenomenon. Students can ask Betty to take tests where she responds using the information represented in the concept map; Betty’s performance on this test informs students about wrong or missing elements in the map. The students can also interact with another computer agent, “Mr. Davis”, who helps them understand how to use the system and provides useful suggestions on effective learning strategies (e.g. how to test Betty and interpret the results of the test).

The learning-by-teaching paradigm implemented through a computer-based learning environment provides a social framework that engages students and helps them learn. However, over a decade of research with Betty’s Brain shows that students experience difficulties when going through the complex task of building digital representations of their understanding (Biswas, Segedy and Bunchongchit, 2015[16]). In particular, many students are unable or not motivated enough to conduct systematic tests of their concept map. In order to be successful in the environment, students must apply metacognitive strategies for setting goals, developing plans for achieving these goals, monitoring their plans as they execute them, and evaluating their progress. The data collected in this blended learning and assessment environment are thus particularly valuable for evaluating students’ self-regulated learning skills.

The rapid evolution of digital and social media and new modes of interacting with these kinds of information resources also need to be reflected in NGA, meaning assessments centred on this activity cluster should also expand their focus to evaluating the transmission of (mis)information and fact-checking behaviours in open, networked information environments (Ecker et al., 2022[17]). Next-generation assessments focusing on these aspects of information problem solving might draw inspiration from existing digital games and simulations that promote the development of these skills. For example, games such as “Fake It To Make It” (Urban, Hewitt and Moore, 2018[18]), “Bad News” (Roozenbeek and van der Linden, 2018[19]) or “Go Viral!” (Basol et al., 2021[20]) teach players common techniques for promoting misinformation in the hope that this prepares them to respond to it. In “The Misinformation Game”, learners can engage with posts in ecologically valid ways by choosing an engagement behaviour (with options including liking, disliking, sharing, flagging and commenting), and they are provided with dynamic feedback (i.e. changes to their own simulated follower count and credibility score) depending on how they interact with posts containing either reliable or unreliable information (van der Linden, Roozenbeek and Compton, 2020[21]).

In this cluster of activities, the main problem solving or learning goal is to model a phenomenon or engineer a desired state within a dynamic system. For example, this might involve troubleshooting a malfunctioning system, generating and testing hypotheses about faulty states, or exploring a simulated environment with the goal to produce or achieve a certain output (see the simulated city example, SimCityEdu, described in Box 3.1). In short, what unites this cluster of activities is that learners have to generate their own understanding about how a (complex) system works through their interactions and experimentation with a given set of tools and then use this understanding to achieve a particular outcome or make some kind of prediction.

Engaging in these types of activities emphasises the inquiry and problem-solving practices that are the focus of modern science and technology education, such as conducting reasoned experiments, understanding systems and engineering solutions. Typically, these activities require students to plan and execute actions systematically, observe, interpret and evaluate changes resulting from their interventions, and adapt their strategies based on their observations. As such, this activity cluster works particularly well when contextualised within scientific disciplines.

However, these practices are also relevant in many real-life contexts beyond scientific disciplines and these types of problems also require significant metacognitive and self-regulated learning skills. This is in part due to the various sources of complexity that these types of problems may include, which relate to: 1) the number of different variables; 2) the mutual dependencies between variables; 3) the role of time and developments within a system (e.g. the time between an action and observed effect); 4) transparency about the involved variables and their current values; and 5) the presence of multiple levels of analysis, with potential conflicts between levels (Dörner and Funke, 2017[22]; Wilensky and Resnick, 1999[23]). This cluster of activities can therefore also provide valuable information on how well students can address complexity and uncertainty, and how persistent and goal-oriented they are.

In an NGA context, this cluster of activities requires complex system environments with multiple variables. These environments essentially function as micro-worlds in which students can make decisions about which variables to manipulate and how, and in which the environment dynamically changes either as a function of the sequence of decisions made by students or independently of them (or both).
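To make the two sources of change concrete, the following is a deliberately tiny sketch of a micro-world with a single variable. It is not drawn from any of the assessments cited in this chapter; the `step` and `run` functions, the `drift` and `effect` parameters and all numeric values are hypothetical, and real micro-worlds involve many interdependent variables.

```python
def step(state, action, drift=0.1, effect=0.5):
    """Advance a toy one-variable micro-world by one time step.

    state  -- current value of the system variable (e.g. a pollution level)
    action -- size of the learner's intervention (0 means "do nothing")
    drift  -- autonomous growth that happens regardless of the learner
    effect -- how strongly the learner's action reduces the variable
    All names and values are hypothetical.
    """
    return state + drift * state - effect * action


def run(initial, actions):
    """Apply a sequence of learner actions and return the state trajectory."""
    trajectory = [initial]
    for action in actions:
        trajectory.append(step(trajectory[-1], action))
    return trajectory


passive = run(10.0, [0, 0, 0])  # the system changes on its own
active = run(10.0, [2, 2, 2])   # the learner intervenes at every step
print("no intervention:", passive)
print("with intervention:", active)
```

Comparing the two trajectories shows why process data matter in such environments: the same final state can result from very different sequences of decisions, and a state can deteriorate even when the learner does nothing.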

Since the early 1980s, researchers have developed simulations of complex problems in different contexts in order to examine learning and decision making under realistic circumstances (see work by Berry and Broadbent (1984[24]) or Fischer, Greiff and Funke (2017[25]), for example). In one micro-world developed by Omodei and Wearing (1995[26]), students played the role of a Chief Fire Officer and had to combat fires spreading in a landscape using truck and helicopter fire-fighting units. The micro-world depicted a landscape comprising forest, clearings and property, the position of initial fires, the position of fire-fighting units, and the direction and strength of the wind. The problem state of the micro-world changed both independently (e.g. as a result of changes in the wind) and as a consequence of the learners’ actions. Task performance in the assessment was measured as the inverse proportion of the number of cells destroyed by fire.

More recently, many micro-worlds have been developed to assess inquiry and decision-making processes in the context of science, technology, engineering and mathematics (STEM) education. One interesting example is Inq-ITS virtual lab (Gobert et al., 2013[27]), a web-based environment in which students conduct inquiry with interactive simulations and the support of various tools. In one Inq-ITS simulation, students examine how the populations of producers, consumers and decomposers are interrelated with one another. Students are asked to stabilise the ecosystem, and in order to solve the problem they have to form a hypothesis, collect data by changing the population of a selected organism, analyse the data by examining automatically generated data tables and population graphs, and communicate the findings by completing a brief lab report. Measures of students’ skills are derived from the analysis of the processes they follow while conducting their investigation.

When it comes to designing the kinds of micro-worlds described here, one challenge – particularly in the context of summative assessments – relates to the level of complexity to include within the system. Simple micro-worlds with limited affordances, such as the Micro-Dyn problems used in the 2012 PISA problem solving assessments (Fischer, Greiff and Funke, 2017[25]), do not require an extended familiarisation process for learners. They can also typically present several shorter problems to students in time-limited assessment windows as compared to more complex systems that are characterised by multiple non-linear relationships, moderating variables and rebound effects; this in turn can increase the reliability of measurement claims as well as facilitate the generalisability of those claims (as evidence is accumulated by observing how students solve different problems with different tools). However, aiming to minimise complexity is not necessarily the best approach as simple simulations might not yield sufficiently valid insights into the way students deal with complexity and uncertainty (Dörner and Funke, 2017[22]).

The third cluster of activities we discuss here engages students in creative work resulting in a variety of purposeful and expressive products. These practices and resulting products can be imagined in a variety of contexts, from the engineering space (e.g. inventing a new product) to the expressive (e.g. producing a work of art or writing a poem). Creative design problems can also clearly be cross-disciplinary, and there is a growing interest in incorporating “maker settings” in education. By blurring the boundaries between disciplinary subjects, “making” activities introduce students to the expression, exploration and design processes that are central to many professional domains as well as support the development of intrapersonal and interpersonal skills (Martinez and Stager, 2013[28]; Blikstein, 2013[29]).

Design problems are also good examples of open and relatively unstructured problems, in the sense that they include many degrees of freedom in the problem statement (which might consist only of desired goals rather than a specific objective or outcome to achieve). This ambiguity also extends to how students’ products should be evaluated because responses tend to be neither right nor wrong, only better or worse.

Engaging in these types of activities requires individuals to generate, elaborate and refine their ideas, emphasising 21st Century competencies like creative and critical thinking and persistence. Because of the often ill-structured and complex nature of design problems, learners also have to engage in extensive problem structuring (Goel and Pirolli, 1992[30]). Moreover, designing is an iterative activity that does not take place in a vacuum: typically, the product created by the student relates to the end goal of satisfying a “client”, which in turn requires students to consider different perspectives during the design process.

Kimbell (2011[31]) validated a formative assessment activity, E-Scape, where students had to design a pill dispenser. The activity lasted about six hours and was facilitated by a teacher. In validation trials, students were organised into groups of three and each student stored their own and their group’s work in a digital portfolio. The activity proceeded as a sequence of individual and group brainstorming sessions, where students sketched prototypes on paper and modelled them using given materials.

The assessment model alternated between creation and reflection phases. Approximately one hour into the activity, learners took photos of their modelling work and reported in the portfolio what they thought was going well (or not) with their work. Group members also exchanged their work and commented on each other’s portfolio. Before finishing the activity, learners recorded a 30-second videoclip explaining the features of their new product and how it met the demands of the specific user.

The final portfolio was evaluated using a comparative judgement approach. Pairs of portfolios were presented to experts and, guided by a set of criteria, they were asked to identify which of the two portfolios represented the better piece of work. This simple judgement process was then repeated many times, comparing many portfolios with many judges.
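The repeated pairwise judgements described above can be turned into a single quality scale in several ways. The sketch below uses the Bradley-Terry model, which is one common choice for comparative judgement data; the source does not state which model was used for E-Scape, so this is an assumption, and the portfolio labels and judgements are invented for illustration.

```python
from collections import defaultdict

def bradley_terry(comparisons, n_iter=200):
    """Estimate one quality score per portfolio from (winner, loser) pairs.

    Uses the standard iterative (minorisation-maximisation) update for the
    Bradley-Terry model. Scores are normalised to sum to 1. Assumes every
    portfolio wins at least one comparison.
    """
    items = sorted({p for pair in comparisons for p in pair})
    wins = defaultdict(int)         # total wins per portfolio
    pair_counts = defaultdict(int)  # how often each pair was compared
    for winner, loser in comparisons:
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1

    scores = {p: 1.0 / len(items) for p in items}
    for _ in range(n_iter):
        updated = {}
        for i in items:
            denom = 0.0
            for j in items:
                if i == j:
                    continue
                n_ij = pair_counts.get(frozenset((i, j)), 0)
                if n_ij:
                    denom += n_ij / (scores[i] + scores[j])
            updated[i] = wins[i] / denom if denom else scores[i]
        total = sum(updated.values())
        scores = {p: s / total for p, s in updated.items()}
    return scores

# Hypothetical judgements: each tuple is (preferred portfolio, other portfolio).
judgements = (
    [("A", "B")] * 3 + [("B", "A")]
    + [("B", "C")] * 3 + [("C", "B")]
    + [("A", "C")] * 3 + [("C", "A")]
)
scores = bradley_terry(judgements)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

Because each judge only answers "which of these two is better?", no judge needs to apply an absolute marking scale; the scale emerges from the pattern of wins and losses across many judges and pairs.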

In an NGA context, this cluster of activities should monitor how students engage throughout the entire design process, from the initial phases of idea generation and formation (via prototyping) through to the completion and review of a product in response to external feedback. Ideally, these assessment activities would allow learners to move naturally between phases of active designing and more reflective review of their work, and evidence should be collected both on the final product and on the processes students engage in while developing their ideas.

To date, the majority of assessments focusing on design and creative activities have been conducted in the formative space. Some of these have developed sophisticated and multidimensional rubrics to evaluate the quality of students’ final products as well as their processes of invention and self-reflection (Lindström, 2006[32]) (see Box 3.3). Performance tasks replicating authentic design processes have been much rarer in summative and large-scale assessments. Beyond the constraints of available testing time, other challenges relate to providing students with resources for engaging in creative production (e.g. physical tools) and to assigning objective scores on the quality of students’ work at scale – especially if the intended use of the assessment is to compare performance across different linguistic and cultural student groups. There is, however, potential in adapting best practices that have been trialled in classroom settings to a summative context (e.g. by reducing expectations on student products or by providing them with a partially developed product they need to finish or improve), and some performance assessments have been used successfully at both small and large scale. For example, the Assessment of Performance in Design and Technology was administered to 10 000 15-year-olds in the United Kingdom (Kimbell et al., 1991[33]).

In real life, people learn and develop their skills by solving complex problems collaboratively (either face-to-face or through digital media), and collaboration itself is a key 21st Century competency. Group work is increasingly used as a pedagogical practice despite the challenges teachers face in effectively structuring and moderating collaborative learning (Gillies, 2016[34]), and researchers and teachers have become increasingly aware of the positive effects that collaboration can have on students’ achievement and social abilities (Baines, Blatchford and Chowne, 2007[35]; Gillies and Boyle, 2010[36]). We consider collaboration to be a third dimension in our simple framework for determining what to assess as it can be introduced for any of the clusters of activities outlined above and in any disciplinary or cross-disciplinary context. In other words, integrating affordances for collaboration can introduce additional target skills in an assessment, but any collaborative tasks must nonetheless be situated within a disciplinary or cross-disciplinary context and draw upon a set of interrelated practices depending on the cluster of activities chosen.

As with the design activities cluster, formative assessment practices have made more progress than large-scale assessments in assessing student collaboration (e.g. with teachers using rubrics to make judgements). However, two notable large-scale exceptions are the PISA 2015 collaborative problem-solving assessment and the Assessment and Teaching of 21st Century Skills (ATC21S) project (see Box 3.4 for more).

ATC21S used interactive logical problems with an asymmetrical distribution of tools and information between paired students to incentivise collaboration. For example, in the “Olive Oil” example task (Figure 3.4), Student A and Student B needed to work together to fill Student B’s jar with 4 litres of olive oil. Each student was given different tools: Student A had a 3-litre jar, an olive oil container, an “entry” pipe and a bucket, whereas Student B had a 5-litre jar, an “exit” pipe and a bucket. Neither participant was aware of what was available to their partner before interacting with them, and both students had to explore the task space to find out they needed additional resources from their partner. Students had to identify that Student A needed to fill their jar at the container and place it under the “entry” pipe so that Student B could accept the oil from the “exit” pipe. The two students could choose when and how to communicate using the free-text chat box to establish a shared understanding and solve the problem.
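The logical core of the “Olive Oil” task resembles a classic measuring puzzle, and a short search makes the interdependence between the two students concrete. The sketch below is only an illustration: the jar sizes and the 4-litre goal come from the task description, but the exact set of permitted moves (in particular, that each bucket is used to discard oil) is an assumption.

```python
from collections import deque

CAP_A, CAP_B, TARGET = 3, 5, 4  # jar sizes and goal from the task description

def moves(state):
    """Yield (label, next_state) for every move assumed to be available."""
    a, b = state
    transferred = min(a, CAP_B - b)  # B's jar cannot overflow
    yield "A fills jar at container", (CAP_A, b)
    yield "A sends oil through pipe to B", (a - transferred, b + transferred)
    yield "A empties jar into bucket", (0, b)
    yield "B empties jar into bucket", (a, 0)

def solve(start=(0, 0)):
    """Breadth-first search for the shortest move sequence reaching TARGET in B's jar."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, path = queue.popleft()
        if state[1] == TARGET:
            return path
        for label, nxt in moves(state):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [label]))
    return None

plan = solve()
for step in plan:
    print(step)
```

Under these assumed moves the shortest plan needs actions from both students: Student A controls all filling and transferring, while only Student B can empty the 5-litre jar, so neither can reach the goal alone.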

The PISA 2015 assessment of collaborative problem solving presented students with an interactive problem scenario and one or more “group members” (computer agents) with whom they had to interact over the course of the unit to solve the problem. Across different assessment units, the computer agent(s) were programmed to emulate different roles, attitudes and levels of competence in order to vary the type of collaborative problems students were confronted with.

The interaction of the students with the computer agent(s) was limited to pre-defined statements using a multiple-choice format, and every possible intervention of the students was attached to a specific response by the computer agents or event in the problem scenario. Differing student responses could trigger different actions from the computer agents, in terms of changes either to the state of the simulation (e.g. an agent adding a piece to a puzzle) or to the conversation (e.g. an agent responding to a request from the student for a piece of information).
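A fixed-script design of this kind can be pictured as a lookup table from each pre-defined student choice to an agent reply and an optional change to the scenario state. The sketch below is a hypothetical illustration of that idea, not the PISA implementation; all option names, replies and state fields are invented.

```python
from dataclasses import dataclass, field

@dataclass
class Scenario:
    """Minimal simulation state for one (hypothetical) assessment unit."""
    puzzle_pieces: int = 0
    log: list = field(default_factory=list)

# Hypothetical script: student choice -> (agent reply, scenario event or None)
SCRIPT = {
    "ask_for_information": ("Agent: The missing piece is blue.", None),
    "ask_agent_to_place_piece": ("Agent: Done, I added my piece.", "add_piece"),
    "stay_silent": ("Agent: Shall we decide who does what?", None),
}

def respond(scenario, choice):
    """Return the scripted agent reply and apply any attached scenario event."""
    if choice not in SCRIPT:
        raise ValueError(f"Choice not in the pre-defined options: {choice!r}")
    reply, event = SCRIPT[choice]
    if event == "add_piece":
        scenario.puzzle_pieces += 1  # change to the state of the simulation
    scenario.log.append((choice, reply))  # every choice is logged for scoring
    return reply

unit = Scenario()
first_reply = respond(unit, "ask_for_information")       # changes the conversation only
second_reply = respond(unit, "ask_agent_to_place_piece")  # also changes the simulation
print(first_reply, second_reply, unit.puzzle_pieces)
```

Because every student choice maps to a known reply and event, each logged choice can be scored automatically, which is the standardisation benefit this approach offers.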

Although targeting similar core competencies underpinning collaboration, one major difference in approach set these two large-scale assessments apart: in PISA, students interacted with computer agents, whereas in the ATC21S assessment they engaged in real human-to-human collaboration. PISA’s choice to simulate collaboration using computer agents was guided by the goals of standardising the assessment experience for students and of applying established and automated scoring methods. While the highly controlled PISA test environment represented a trade-off in terms of the authenticity of the assessment, there are obvious challenges for scoring in tasks using human-to-human collaborative approaches. In more open assessment environments, student behaviours are difficult to anticipate and the success of any student depends on the behaviour of others in their group. This generates a measurement problem in terms of how to build separate performance scores for individual students and for student groups, and raises the issue of whether it is fair to penalise a student for the lack of ability or motivation of another student in their group.

A more recent example of a collaborative, cross-disciplinary and multi-activity cluster approach to measuring 21st Century competencies, developed by the Australian Council for Educational Research (ACER), asked students to collaboratively design a plan to integrate refugees in their community (Scoular et al., 2020[39]). The first collaborative task in the assessment required students to brainstorm ideas before choosing one to implement. In the second task students were assigned group roles, with each being tied to certain responsibilities and resources, and the third task required students to bring independent research into the group discussion to improve and finalise their collaborative idea. As in the ATC21S collaborative problem-solving assessment, students exchanged ideas and information using a digital chat interface. Analysis of the chat data from multiple groups showed that it is possible to differentiate the quality of collaborative work according to different criteria, such as the level of participation of each group member or the coherence of the group’s conversation. Adequate rates of agreement were reached when different raters scored the chats according to a multidimensional rubric.
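Agreement between raters applying a rubric is often summarised with a chance-corrected statistic. The sketch below computes Cohen’s kappa for two raters on one rubric dimension; the source does not say which agreement statistic was used in the ACER study, so the choice of kappa is an assumption, and the scores are invented for illustration.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same score
    observed = sum(x == y for x, y in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal score frequencies
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1 - expected)

# Hypothetical rubric scores (0-2) given by two raters to eight group chats
rater_1 = [2, 1, 0, 2, 1, 1, 0, 2]
rater_2 = [2, 1, 0, 1, 1, 1, 0, 2]
kappa = cohens_kappa(rater_1, rater_2)
print(round(kappa, 2))  # 0.81
```

Here the raters agree on seven of eight chats (0.875), but kappa is lower because some of that agreement would be expected by chance alone.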

Although scoring collaborative tasks at scale remains a challenge, advances in natural language processing (NLP) now make it possible to design intelligent virtual agents that “understand” what students write in open dialogues and that can respond accordingly (see Chapter 10 of this report for more on intelligent virtual agents). Providing students with choice over when and how to interact with virtual agents via open chat functions is also consistent with the vision of moving towards more choice-rich assessments and can help increase the face validity of simulated collaborative tasks by removing fixed-script constraints. Advances in NLP can also improve the quality and reduce the cost of analysing written chats among peers in genuine human-to-human collaboration, potentially enabling the automated replication of expert judgements to large sets of authentic conversational data.

One further strategy to improve inferences from conversational data is to ask students to highlight passages of their recorded conversations that they themselves consider to be evidence of good collaboration, using rubrics as a reference. These student ratings could then be used to validate the judgement of external raters or trained scoring machines and could provide in themselves additional evidence on students’ understanding of what constitutes “good” communication and collaboration practices.

The pioneering assessment experiences and analytical approaches highlighted in this chapter suggest that it is possible to imagine a not-so-distant future in which collaborative tasks become an increasingly integral component of both summative and formative assessments in order to provide a more comprehensive outlook on students’ development of 21st Century competencies. However, it is also clear that developing authentic collaborative tasks in next-generation assessments will require substantial parallel innovation in measurement as standard analytical models cannot yet deal with the many interdependencies across time and agents that inherently arise in collaborative settings.

Earlier chapters of this report established that education systems are increasingly signalling the need to develop students’ 21st Century competencies. As a result, many are reforming their assessment systems with the aim of monitoring the extent to which students have developed these skills. We argue that the first step in this process should be to map current assessment gaps in order to determine which types of next-generation assessments are needed. While these decisions need to be taken by responsible actors in each jurisdiction, according to local priorities, there is still a need for guidance from the research community on what kinds of assessment options exist, both at scale and in the classroom, and what factors are important to consider when making such decisions. This chapter offers a simple framework for guiding such decisions.

We argue that a productive way forward involves developing valid assessments of how students create knowledge and solve different types of complex problems, either on their own or collaboratively, in different contexts of application, rather than creating separate assessments for every single 21st Century skill described in the many lists that have been proposed but that abstract from authentic contexts of practice. This perspective might seem to narrow down our assessment ambitions, restricting the target to a set of skills associated with cognitive functioning – but this is not necessarily the case. Observing and interpreting how students tackle a variety of complex and contextualised problems can give us valid evidence on a wide set of cognitive, interpersonal and intrapersonal skills. Our perspective is rather to recognise one of the key conclusions from Chapters 1 and 2 of this report: that authentic problem scenarios draw upon multiple competencies simultaneously and that the context of application, the nature of the problem and the number of actors involved inherently define which combination of competencies is required for successful performance. Some clusters of activities are more similar than others in terms of the constituent elements that support performance; it follows that if we want to make claims about students’ preparation for future learning, then we need to develop several different kinds of next-generation assessments that are carefully balanced in terms of their contextualisation, the purpose and organisation of the learning activity, and opportunities for collaboration.

The examples discussed in this chapter illustrate three clusters of learning and problem-solving activities that, between them, invite students to think critically and creatively, monitor their emerging understanding, preserve their motivation and goal-orientation, and regulate their learning processes and emotions. All three clusters can be situated in disciplinary or cross-disciplinary contexts. If tasks are designed to allow multiple students to work on the same problem, it would also be possible to observe how students exercise important communication and collaboration skills. Despite their promise, however, we acknowledge that many of the examples presented in this chapter have not left the lab where they were invented; and even in cases where they have gained high research visibility, they have not (yet) changed the way that assessment is done at scale. One clear implication is a need for more substantial investment in assessment design and validation to bring these innovation efforts to full maturity and to scale them up when they prove they can effectively measure what is hard but nonetheless important to measure.

[3] Arndt, H. (2006), “Enhancing system thinking in education using system dynamics”, SIMULATION, Vol. 82/11, pp. 795-806, https://doi.org/10.1177/0037549706075250.

[35] Baines, E., P. Blatchford and A. Chowne (2007), “Improving the effectiveness of collaborative group work in primary schools: Effects on science attainment”, British Educational Research Journal, Vol. 33/5, pp. 663-680, https://doi.org/10.1080/01411920701582231.

[20] Basol, M. et al. (2021), “Towards psychological herd immunity: Cross-cultural evidence for two prebunking interventions against COVID-19 misinformation”, Big Data & Society, Vol. 8/1, https://doi.org/10.1177/20539517211013868.

[24] Berry, D. and D. Broadbent (1984), “On the relationship between task performance and associated verbalizable knowledge”, The Quarterly Journal of Experimental Psychology Section A, Vol. 36/2, pp. 209-231, https://doi.org/10.1080/14640748408402156.

[13] Bilal, D. (2000), “Children’s use of the Yahooligans! web search engine: I. Cognitive, physical, and affective behaviors on fact-based search tasks”, Journal of the American Society for Information Science, Vol. 51/7, pp. 646-665.

[16] Biswas, G., J. Segedy and K. Bunchongchit (2015), “From design to implementation to practice a learning by teaching system: Betty’s Brain”, International Journal of Artificial Intelligence in Education, Vol. 26/1, pp. 350-364, https://doi.org/10.1007/s40593-015-0057-9.

[29] Blikstein, P. (2013), “Digital fabrication and “making” in education: The democratization of invention”, in Büching, C. and J. Walter-Herrmann (eds.), FabLab: Of Machines, Makers and Inventors, Transcript Publishers, Bielefeld.

[9] Brand-Gruwel, S., I. Wopereis and Y. Vermetten (2005), “Information problem solving by experts and novices: Analysis of a complex cognitive skill”, Computers in Human Behavior, Vol. 21/3, pp. 487-508, https://doi.org/10.1016/j.chb.2004.10.005.

[7] Bransford, J. and B. Stein (1984), The Ideal Problem Solver: A Guide for Improving Thinking, Learning, and Creativity, Freeman, New York.

[11] Brashier, N. and E. Marsh (2020), “Judging truth”, Annual Review of Psychology, Vol. 71/1, pp. 499-515, https://doi.org/10.1146/annurev-psych-010419-050807.

[37] Care, E. and P. Griffin (2017), “Assessment of collaborative problem-solving processes”, in Csapó, B. and J. Funke (eds.), The Nature of Problem Solving: Using Research to Inspire 21st Century Learning, Educational Research and Innovation, OECD Publishing, Paris, https://doi.org/10.1787/9789264273955-16-en.

[15] Coiro, J. et al. (2019), “Students engaging in multiple-source inquiry tasks: Capturing dimensions of collaborative online inquiry and social deliberation”, Literacy Research: Theory, Method, and Practice, Vol. 68/1, pp. 271-292, https://doi.org/10.1177/2381336919870285.

[22] Dörner, D. and J. Funke (2017), “Complex problem solving: What it is and what it is not”, Frontiers in Psychology, Vol. 8/1153, pp. 1-11, https://doi.org/10.3389/fpsyg.2017.01153.

[17] Ecker, U. et al. (2022), “The psychological drivers of misinformation belief and its resistance to correction”, Nature Reviews Psychology, Vol. 1/1, pp. 13-29, https://doi.org/10.1038/s44159-021-00006-y.

[4] Feldman, L. et al. (2007), “Identifying best practices in civic education: Lessons from the Student Voices Program”, American Journal of Education, Vol. 114/1, pp. 75-100, https://doi.org/10.1086/520692.

[25] Fischer, A., S. Greiff and J. Funke (2017), “The history of complex problem solving”, in Csapó, B. and J. Funke (eds.), The Nature of Problem Solving: Using Research to Inspire 21st Century Learning, Educational Research and Innovation, OECD Publishing, Paris, https://doi.org/10.1787/9789264273955-9-en.

[12] Flanagin, A. (2017), “Online social influence and the convergence of mass and interpersonal communication”, Human Communication Research, Vol. 43/4, pp. 450-463, https://doi.org/10.1111/hcre.12116.

[34] Gillies, R. (2016), “Cooperative learning: Review of research and practice”, Australian Journal of Teacher Education, Vol. 41/3, pp. 39-54, https://doi.org/10.14221/ajte.2016v41n3.3.

[36] Gillies, R. and M. Boyle (2010), “Teachers’ reflections on cooperative learning: Issues of implementation”, Teaching and Teacher Education, Vol. 26/4, pp. 933-940, https://doi.org/10.1016/j.tate.2009.10.034.

[27] Gobert, J. et al. (2013), “From log files to assessment metrics: Measuring students’ science inquiry skills using educational data mining”, Journal of the Learning Sciences, Vol. 22/4, pp. 521-563, https://doi.org/10.1080/10508406.2013.837391.

[30] Goel, V. and P. Pirolli (1992), “The structure of Design Problem Spaces”, Cognitive Science, Vol. 16/3, pp. 395-429, https://doi.org/10.1207/s15516709cog1603_3.

[6] Irava, V. et al. (2019), “Game-based socio-emotional skills assessment: A comparison across three cultures”, Journal of Educational Technology Systems, Vol. 48/1, pp. 51-71, https://doi.org/10.1177/0047239519854042.

[8] Jonassen, D. and W. Hung (2008), “All problems are not equal: Implications for problem-based learning”, Interdisciplinary Journal of Problem-Based Learning, Vol. 2/2, pp. 6-28, https://doi.org/10.7771/1541-5015.1080.

[31] Kimbell, R. (2011), “Evolving project e-scape for national assessment”, International Journal of Technology and Design Education, Vol. 22/2, pp. 135-155, https://doi.org/10.1007/s10798-011-9190-4.

[33] Kimbell, R. et al. (1991), The Assessment of Performance in Design and Technology, School Examination and Assessment Council, London.

[14] Large, A. and J. Beheshti (2000), “The web as a classroom resource: Reactions from the users”, Journal of the American Society for Information Science, Vol. 51/12, pp. 1069-1080.

[32] Lindström, L. (2006), “Creativity: What is it? Can you assess it? Can it be taught?”, International Journal of Art & Design Education, Vol. 25/1, pp. 53-66, https://doi.org/10.1111/j.1476-8070.2006.00468.x.

[28] Martinez, S. and G. Stager (2013), Invent to Learn: Making, Tinkering, and Engineering in the Classroom, Constructing Modern Knowledge Press.

[2] Mislevy, R. et al. (2014), Psychometric Considerations in Game-Based Assessment, GlassLab Research, Institute of Play, http://www.instituteofplay.org/wp-content/uploads/2014/02/GlassLab_GBA1_WhitePaperFull.pdf (accessed on 21 April 2023).

[38] OECD (2017), PISA 2015 Results (Volume V): Collaborative Problem Solving, OECD Publishing, Paris, https://doi.org/10.1787/9789264285521-en.

[40] OECD (n.d.), Platform for Innovative Learning Assessments, https://pilaproject.org/ (accessed on 3 April 2023).

[26] Omodei, M. and A. Wearing (1995), “The Fire Chief microworld generating program: An illustration of computer-simulated microworlds as an experimental paradigm for studying complex decision-making behavior”, Behavior Research Methods, Instruments, & Computers, Vol. 27/3, pp. 303-316, https://doi.org/10.3758/bf03200423.

[5] Raphael, C. et al. (2009), “Games for civic learning: A conceptual framework and agenda for research and design”, Games and Culture, Vol. 5/2, pp. 199-235, https://doi.org/10.1177/1555412009354728.

[19] Roozenbeek, J. and S. van der Linden (2018), “The fake news game: Actively inoculating against the risk of misinformation”, Journal of Risk Research, Vol. 22/5, pp. 570-580, https://doi.org/10.1080/13669877.2018.1443491.

[39] Scoular, C. et al. (2020), Collaboration: Skill Development Framework, Australian Council for Educational Research, Camberwell, https://research.acer.edu.au/ar_misc/42.

[1] Shaffer, D. et al. (2009), “Epistemic Network Analysis: A prototype for 21st century assessment of learning”, International Journal of Learning and Media, Vol. 1/2, pp. 1-22, https://doi.org/10.1162/ijlm.2009.0013.

[18] Urban, A., C. Hewitt and J. Moore (2018), “Fake it to make it, media literacy, and persuasive design: Using the functional triad as a tool for investigating persuasive elements in a fake news simulator”, Proceedings of the Association for Information Science and Technology, Vol. 55/1, pp. 915-916, https://doi.org/10.1002/pra2.2018.14505501174.

[21] van der Linden, S., J. Roozenbeek and J. Compton (2020), “Inoculating against fake news about COVID-19”, Frontiers in Psychology, Vol. 11/566790, pp. 1-7, https://doi.org/10.3389/fpsyg.2020.566790.

[23] Wilensky, U. and M. Resnick (1999), “Thinking in levels: A dynamic systems approach to making sense of the world”, Journal of Science Education and Technology, Vol. 8/1, pp. 3-19, https://doi.org/10.1023/a:1009421303064.

[10] Wolf, S., T. Brush and J. Saye (2003), “Using an information problem-solving model as a metacognitive scaffold for multimedia-supported information-based problems”, Journal of Research on Technology in Education, Vol. 35/3, pp. 321-341, https://doi.org/10.1080/15391523.2003.10782389.