PISA 2022 Assessment and Analytical Framework

4. PISA 2022 Creative Thinking Framework

Abstract

Creative thinking refers to the cognitive processes required to engage in creative work. It is a key competence to assess in the context of PISA as it is a malleable individual capacity that can be developed through practice and that all students can demonstrate in everyday contexts. This section presents the framework for how the PISA 2022 assessment measures creative thinking, including how the construct is defined, the contexts in which it is assessed, and the approach to scoring student responses.

Why assess creative thinking in the 2022 PISA cycle?

Creative thinking is a key competence

Creativity has driven forward human culture and society in diverse areas, from the sciences and technology to philosophy, the arts and humanities (Hennessey and Amabile, 2010[1]). Organisations and societies around the world increasingly depend on innovation and knowledge creation to address emerging challenges (OECD, 2010[2]), giving urgency to innovation and creative thinking as collective enterprises.

Despite entrenched beliefs to the contrary, all individuals have the potential to think creatively (OECD, 2017[3]). Creative thinking is a tangible competence, grounded in knowledge and practice, that supports individuals (and groups) to achieve better outcomes – especially in constrained and challenging environments. Researchers and educators alike also agree that engaging in creative thinking can support a range of other skills including metacognitive, inter- and intra‑personal and problem-solving skills, as well as promoting identity development, academic achievement, social engagement and career success (Barbot and Heuser, 2017[4]; Barbot, Lubart and Besançon, 2016[5]; Beghetto, 2010[6]; Higgins et al., 2005[7]; National Advisory Committee on Creative and Cultural Education, 1999[8]; Plucker, Beghetto and Dow, 2004[9]; Smith and Smith, 2010[10]; Spencer and Lucas, 2018[11]; Gajda, Karwowski and Beghetto, 2017[12]).

Assessing creative thinking in the Programme for International Student Assessment (PISA) can encourage a wider debate on the importance of supporting students’ creative thinking through education, as well as encourage positive changes in education policies and pedagogies around the world. PISA data and instruments will provide policymakers with valid, reliable and actionable measurement tools that can support them in evidence‑based decision making.

Creative thinking can and should be developed through education

A fundamental role of education is to equip students with the competences they need to succeed in life and society. Being able to think creatively is a critical competence that young people need to develop, including in school, for several reasons:

Creative thinking helps prepare young people to adapt to a rapidly changing world that demands flexible and innovative workers equipped with “21st century skills” beyond numeracy and literacy. Children today will be employed in jobs that do not yet exist, responding to societal challenges that we cannot anticipate, and using new technologies. Developing creative thinking will help prepare them to adapt, undertake work that cannot easily be replicated by machines and address increasingly complex challenges with innovative solutions.

The importance of creative thinking extends beyond the labour market, helping students to discover and develop their potential. Schools play an important role in students’ holistic development and in making them feel that they are part of the society they live in. Schools must therefore help young people to nurture their creative talents and empower them to contribute to the wider development of society (Tanggaard, 2018[13]).

Creative thinking also supports learning by helping students to interpret experiences and information in novel and personally meaningful ways, even in the context of formal learning goals (Beghetto and Kaufman, 2007[14]; Beghetto and Plucker, 2006[15]). Student-centred pedagogies that engage students’ creative thinking and encourage exploration and discovery can also increase students’ motivation and interest in learning, particularly for those who struggle with rote learning and other teacher-centred schooling methods (Hwang, 2015[16]).

Finally, creative thinking is important in a range of subject areas – from languages and the arts to science, technology, engineering and mathematics (STEM) disciplines. Creative thinking supports students to be imaginative, develop original ideas, think outside of the box and solve problems.

Just like any other ability, creative thinking can be nurtured through practical and targeted application (Lucas and Spencer, 2017[17]). Although developing students’ creative thinking skills may imply taking time away from other subjects in the curriculum, creative thinking can be developed while promoting the acquisition of content knowledge in many contexts through approaches that encourage exploration and discovery rather than rote learning and automation (Beghetto, Baer and Kaufman, 2015[18]). Teachers need support in understanding how students’ creative thinking can be recognised and encouraged in the classroom. The OECD’s Centre for Educational Research and Innovation (CERI) leads a project whose aim is to support pedagogies and practices that foster creative and critical thinking.1

A principled assessment design process: Evidence-Centred Design as a guiding framework for the PISA 2022 Creative Thinking assessment

Evidence-Centred Design (ECD) (Mislevy, Steinberg and Almond, 2003[19]) provides a conceptual framework for developing innovative and coherent assessments that are built on evidence-based arguments, connecting what students do, write or create on a computer platform with multidimensional competences (Shute, Hansen and Almond, 2008[20]; Kim, Almond and Shute, 2016[21]). ECD starts with the basic premise that assessment is a process of reasoning from evidence to evaluate claims about students’ capabilities. In essence, students’ responses to the assessment items and tasks provide the evidence for this reasoning process and psychometric analyses establish the sufficiency of the evidence for evaluating each claim.

ECD provides a strong foundation for developing valid assessments of complex and multidimensional constructs. Adopting an ECD process for the PISA 2022 Creative Thinking assessment involved the following sequence of steps:

1) Domain definition: conducting a literature review and engaging with experts to define creativity and creative thinking in an educational context. This first step clarifies the creative thinking constructs that policy makers and educators wish to promote and identifies meaningful ways in which 15‑year‑old students can express creative thinking that can be feasibly assessed in PISA.

2) Construct definition: explicitly defining the assessment constructs and specifying the claims that can be made about test takers based on the assessment. In ECD terminology, this step is referred to as defining the Student Model (Shute et al., 2016[22]).

3) Evidence identification: describing the evidence (i.e. student behaviours or performances) that can support claims about test takers’ proficiency in the target constructs. In ECD, this step is referred to as defining the Evidence Model and includes defining rules for scoring tasks and for aggregating scores across tasks.

4) Task design: designing and validating a set of tasks that can provide the desired evidence within the constraints of the PISA assessment. This stage corresponds to the Task Model step in ECD terminology.

5) Test assembly: assembling the tasks and units into test formats that support all the stated assessment claims with sufficient evidence. This corresponds to the Assembly Model step in ECD terminology.

ECD is an iterative assessment design process. For example, validation and pilot studies should, where relevant, inform further choices regarding evidence identification and task design. Validation and pilot studies are also crucial for ensuring that all assessment instruments provide reliable and comparable evidence across countries and cultural groups, which is especially important in the context of PISA. The remainder of this framework discusses each step of the ECD process in further detail for the PISA 2022 Creative Thinking assessment, before describing the approach to validation and reporting.

Defining the assessment domain: Understanding creativity and creative thinking

Creativity is a multidimensional construct

A principled assessment design process requires a strong theoretical foundation. Several researchers have established theories to describe the nature of creativity and to define creative people, processes and products. Broadly speaking, the literature defines creativity as “the interaction among aptitude, process and environment by which an individual or group produces a perceptible product that is both novel and useful as defined within a social context” (Plucker, Beghetto and Dow, 2004[9]).

Confluence approaches to creativity argue that individuals need several resources in order to produce creative work, including: 1) relevant knowledge and skills in a given field; 2) creative thinking processes; 3) task motivation; and 4) a supportive and rewarding environment (Amabile, 1983[23]; 2012[24]; Amabile and Pratt, 2016[25]). Some theories also include certain personality attributes as an important internal resource (Sternberg and Lubart, 1991[26]; 1995[27]; Sternberg, 2006[28]). These theories all understand creativity as a multidimensional construct that includes both relatively stable elements and elements that are more amenable to development and social influences. They also emphasise that it is the interaction, and not simply the availability (or not), of these resources that is important for engaging creatively with a given task. For example, low task motivation may prevent an individual from producing creative work despite domain expertise or a conducive environment.

These theories also understand the narrower construct of creative thinking as the important cognitive or “thinking” processes that enable individuals to produce creative outcomes.

Creativity can manifest in different ways

The literature on creativity generally distinguishes between “big-C” creativity and “little-c” creativity (Csikszentmihalyi, 2013[29]; Simonton, 2013[30]). “Big-C” creativity is associated with intellectual and/or technological breakthroughs, or artistic or literary masterpieces. These achievements demand that creative thinking processes be paired with significant talent, deep expertise in the given domain and high levels of engagement, as well as the recognition from society that the product has value.

Conversely, all people can demonstrate “little-c” (or “everyday”) creativity by engaging in creative thinking. This type of everyday creativity might include arranging photos in an unusual way, combining leftovers to make a tasty meal or finding a solution to a complex scheduling problem. Overall, the literature agrees that “little-c” creativity can be developed through practice and honed through education (Kaufman and Beghetto, 2009[31]).

Creativity draws on both domain-general and domain-specific resources

Researchers in the field have long debated whether individuals are creative in everything they do or only in certain domains (i.e. a specific area of knowledge or practice). This debate naturally extends to creative thinking and raises an important question: is creative thinking in science different to creative thinking in writing or the visual arts, for example?

The first generation of creative thinking tests reflected the notion that a set of general and enduring attributes influenced creative endeavours of all kinds, and that an individual’s capacity to be creative in one domain would readily transfer to another (Torrance, 1959[32]). However, more recent work tends to reject this generalist assumption.

Researchers now recognise that, to some extent, the internal resources needed to engage in creative work differ by domain (Baer, 2011[33]; Baer and Kaufman, 2005[34]). While the number of distinct “domains of creativity” remains an open research question, researchers tend to agree that engaging creatively in the arts and engaging creatively in maths/scientific domains, in particular, draw upon different sets of internal resources (e.g. knowledge, skills, and attributes) (Kaufman and Baer, 2004[35]; Kaufman, 2006[36]; 2012[37]; Kaufman et al., 2009[38]; 2016[39]; Chen et al., 2006[40]; Julmi and Scherm, 2016[41]; Runco and Bahleda, 1986[42]).

Defining the construct for the PISA 2022 assessment

The PISA 2022 definition of creative thinking

While closely related to the broader construct of creativity, creative thinking refers to the cognitive processes required to engage in creative work. It is a more appropriate construct to assess in the context of PISA as it is a malleable individual capacity that can be developed through practice and does not place an emphasis on how wider society values the resulting output.

PISA defines creative thinking as “the competence to engage productively in the generation, evaluation and improvement of ideas that can result in original and effective solutions, advances in knowledge and impactful expressions of imagination”. It builds on the definition first proposed by the Strategic Advisory Group (OECD, 2017[3]), tasked with providing some initial directions for the PISA 2022 assessment, and has been subsequently developed following a comprehensive review of the literature and the guidance of a wider interdisciplinary group of experts in the field.2

The PISA definition of creative thinking is aligned with the cognitive processes and outcomes associated with “little-c” creativity – in other words, it reflects the types of creative thinking that 15-year-old students around the world can reasonably demonstrate in “everyday” contexts. It emphasises that students need to learn to engage productively in generating ideas, reflecting upon ideas by valuing their relevance and novelty, and iterating upon ideas before reaching a satisfactory outcome. This definition of creative thinking applies to learning contexts that require imagination and the expression of one’s inner world, such as creative writing or the arts, as well as contexts in which generating ideas is functional to the investigation of problems or phenomena.

Unpacking creative thinking in the classroom

Confluence approaches to creativity emphasise that both “internal” and “external” resources are needed to successfully engage in creative work. To better understand children’s creative thinking and define what information is important to collect in the PISA assessment, it is necessary to contextualise these approaches in a way that is relevant to students in their everyday school life (Glaveanu et al., 2013[43]; Tanggaard, 2014[44]). This section describes what creative thinking in the classroom looks like and the interconnected internal and external factors that can promote or hinder it.

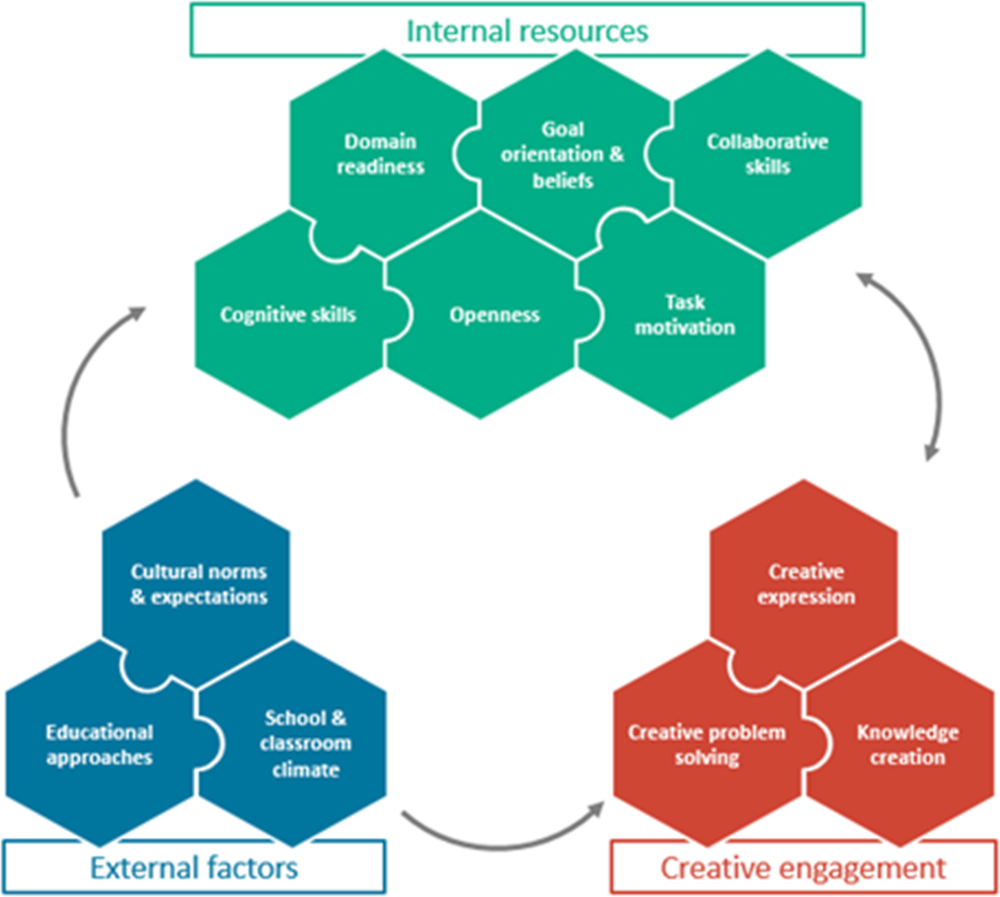

Schools can influence many of the internal resources students need to engage in creative thinking. Internal resources here essentially refer to the set of knowledge, skills and attitudes that enable creative thinking. These include: 1) cognitive skills; 2) domain readiness (i.e. domain‑specific knowledge and experience); 3) openness to new ideas and experiences; 4) goal orientation and self-belief; 5) task motivation; and 6) in some cases, collaborative skills. In terms of external factors, features of students’ environments can also incentivise or hinder their capacity to engage in creative thinking. These include the classroom culture, the educational approach of schools and wider education systems, and broader cultural norms and expectations.

Schools are also important places in which students can think creatively, either as individuals or as part of a group, and where they can produce creative work. Creative achievement and progress in the classroom can take many forms, such as creative expression (communicating one’s thoughts and imagination through various media), knowledge creation (advancing knowledge and understanding through inquiry), or creative problem solving.

Figure 4.1 summarises these elements that, together, define creative thinking in the classroom. The three sets of elements (internal resources, external factors, and creative achievement and progress) are strongly interconnected. For example, external factors include cultural norms and expectations, which in turn influence how students’ internal resources are developed and honed as well as the types of creative work that students might choose to produce. Each of the elements in Figure 4.1 is described further in the following sections.

Figure 4.1. Unpacking creative thinking in the classroom: internal resources, external factors, and types of creative engagement

Internal resources

Cognitive skills

Both convergent thinking and divergent thinking (Guilford, 1956[45]) are widely recognised as important skills for creative thinking. Convergent thinking refers to the ability to apply conventional and logical reasoning to information (Cropley, 2006[46]). As such, convergent thinking aids in understanding the problem space and identifying and evaluating good ideas (Reiter-Palmon and Robinson, 2009[47]; Runco, 1997[48]). By contrast, divergent thinking refers to the ability to think of original ideas, to make flexible connections between ideas or pieces of information, and to apply fluency of association and ideation (Cropley, 2006[46]). It also refers to the ability to break out of “fixed” performance scripts – in other words, to try new approaches, to look at problems from different angles, and to discover new methods of “doing” (Schank and Abelson, 1977[49]; Duncker, 1972[50]). In essence, divergent thinking brings forth novel, unusual or surprising ideas.

Creative thinking is often described in terms of divergent thinking, and most assessments to date have focused on measuring divergent thinking processes. However, convergent thinking processes are also important for engaging in creative work. For example, Getzels and Csikszentmihalyi (1976[51]) found that art students’ success in “problem construction” was strongly correlated with measures of the originality and aesthetic value of their resulting paintings, and that these measures were also linked to long‑term artistic success.

Domain readiness

Domain readiness conveys the idea that some prior domain knowledge and experience is needed to successfully produce creative work (Baer, 2016[52]). A better understanding of a domain is more likely to help with generating and evaluating ideas that are both novel and useful (Hatano and Inagaki, 1986[53]; Schwartz, Bransford and Sears, 2005[54]). However, this relationship may not be strictly linear – well-established routines for deploying knowledge or skills within a domain may also result in idea fixation and a reluctance to think beyond those established routines.

Openness to experience and intellect

Several studies have shown that creative people share a core set of tendencies, particularly the “Big Five” personality dimension of “openness” (Kaufman et al., 2009[38]; 2016[39]; McCrae, 1987[55]; Prabhu, Sutton and Sauser, 2008[56]; Werner et al., 2014[57]).3 In general, such empirical studies examining the personality and behaviour of creative individuals have typically employed questionnaire instruments that operationalise creativity as a relatively enduring and stable personality trait (Hennessey and Amabile, 2010[1]). Meta‑analyses of studies on creativity and personality have also found that openness appears to be a common trait in creative achievers across domains, whereas other personality traits tend to interact with creativity only insofar as they benefit individuals within specific domains (e.g. “conscientiousness” seems to enhance scientific creativity but detract from performance in the arts) (Batey and Furnham, 2006[58]; Feist, 1998[59]).

Both “openness to experience” and “openness to intellect” are included under the broader openness trait. “Openness to experience” describes an individual’s receptivity to engage with novel ideas, imagination and fantasy (Berzonsky and Sullivan, 1992[60]). Its predictive value for creative achievement across domains is likely due to its inclusion of cognitive (e.g. imagination), affective (e.g. curiosity) and behavioural aspects (e.g. adventurousness), and the links between curiosity and creativity have been further supported by several researchers (Chávez-Eakle, 2009[61]; Feist, 1998[59]; Guastello, 2009[62]; Kashdan and Fincham, 2002[63]).

“Openness to intellect” describes an individual’s receptivity to appreciate and engage with abstract and complex information, primarily through reasoning (DeYoung, 2014[64]). In contrast to “openness to experience”, which is particularly correlated with artistic creativity, the trait “openness to intellect” seems particularly correlated with scientific creativity (Kaufman et al., 2016[39]).

Goal orientation and creative self-beliefs

Persistence, perseverance and creative self‑efficacy influence creative thinking by providing a strong sense of goal orientation and the belief that creative goals can be achieved. Investing effort towards one’s goal and overcoming difficulty are essential for engaging in creative thinking, as they enable individuals to maintain concentration for long periods and deal with frustrations that arise (Cropley, 1990[65]; Torrance, 1988[66]; Amabile, 1983[23]).

Related to goal orientation is creative self-efficacy, which describes an individual’s belief that they are capable of successfully producing creative work (Beghetto and Karwowski, 2017[67]). Researchers consider creative self‑efficacy essential in determining whether an individual will sustain effort towards their goals in the face of resistance and ultimately succeed in performing tasks creatively (Bandura, 1997[68]). Such beliefs can in turn be influenced by one’s prior experience and performance history, mood and environment (Bandura, 1997[68]; Beghetto, 2006[69]).

Task motivation

The role of task motivation as a driver of creative work has been well documented, notably in the works of Teresa Amabile (1997[70]; 2016[25]; 2010[1]; 1983[23]). The basic notion is that, as with any task, an individual will not produce creative work unless they are sufficiently motivated to do so. This motivation can be both intrinsic and extrinsic.

Intrinsic task motivation drives individuals who find their work inherently meaningful or rewarding, for reasons such as enjoyment, self-interest or a desire to be challenged. This type of task engagement is relatively insensitive to incentives or other external pressures. The experience of “creative flow” – being fully immersed in a task and disregarding other needs – is a powerful driver of creativity because individuals in flow are intrinsically motivated to engage in a task (Csikszentmihalyi, 1996[71]; Nakamura and Csikszentmihalyi, 2002[72]).

On the other hand, extrinsic task motivation refers to external incentives, goals, or pressures that motivate people to engage in a particular task. Although research emphasises the importance of intrinsic task motivation in creative performance, extrinsic motivators such as deadlines or recognition can also motivate people to persist in their creative endeavours (Eisenberger and Shanock, 2003[73]; Amabile and Pratt, 2016[25]).

Collaborative engagement

Creative work often results from interactions between individuals and their environment – including interactions with others. Research has also increasingly examined creative thinking as a collective endeavour, for example by examining the actions of teams in generating new knowledge (Thompson and Choi, 2005[74]; Prather, 2010[75]; Grivas and Puccio, 2012[76]; Scardamalia, 2002[77]). Collaboration can help individuals to explore and build upon the ideas of others as well as improve weaknesses in ideas. This can drive forward knowledge creation by facilitating the development of solutions to complex problems that are beyond the capabilities of any one person (Warhuus et al., 2017[78]).

External factors

Cultural norms and expectations

Creative work is embedded within social contexts that are inherently shaped by cultural norms and expectations. Cultural norms and expectations can influence the skills that individuals develop, the values that shape personality development, and the differences in performance expectations within societies (Niu and Sternberg, 2003[79]; Wong and Niu, 2013[80]; Lubart, 1998[81]). Some studies have investigated how cultural differences affect national measures of creativity and innovation, concluding that differences along the individualism-collectivism spectrum can significantly shape how creative work is defined and valued (Rinne, Steel and Fairweather, 2013[82]; Ng, 2003[83]).

Educational approaches

Cultural norms affect educational approaches, in particular the outcomes an education system values for its students and the content it prioritises in the curriculum. In some cases, these approaches might actively discourage creative thinking and achievement at school (Wong and Niu, 2013[80]). For example, the pressures of standardisation and accountability in educational testing systems often reduce opportunities for creative thinking in schoolwork (DeCoker, 2000[84]). Some have even claimed that increasingly narrow educational approaches and assessment methods are at the root of a “creaticide” affecting today’s young people (Berliner, 2011[85]). Schools and educational systems therefore play an important role in combatting this effect and should seek to implement policies and practices that increase the opportunities and rewards for producing creative work (and decrease the associated costs).

Classroom climate

Beyond broader cultural norms and educational systems, certain classroom practices can also stifle creative thinking – for example, perpetuating the idea that there is only one way to learn or solve problems, cultivating attitudes of submission and fear of authority, promoting beliefs that originality is a rare quality, or discouraging students’ curiosity and inquisitiveness (Nickerson, 2010[86]). Conversely, findings from organisational research have demonstrated that informal feedback, goal setting, teamwork, task autonomy, and appropriate recognition and encouragement to develop new ideas are all important enablers of creative thinking (Amabile, 2012[24]; Zhou and Su, 2010[87]). It could be argued that similar findings also apply to creative thinking in the classroom.

Teachers’ beliefs about creativity are also important: they need to value creative work and consider it a fundamental skill that should be developed in the classroom. Teachers can actively cultivate an environment that helps students learn when creative thinking is appropriate and how to take charge of their own creativity – for example, by encouraging students to set their own goals, identify promising ideas, and take responsibility for contributing to creative teamwork (Beghetto and Kaufman, 2010[88]; 2014[89]). Employing “questions of wonderment” – or encouraging students to try to understand the world and put forth their ideas about different phenomena – can also help to promote knowledge creation in the classroom (Bereiter and Scardamalia, 2010[90]). These approaches are all supported by teachers’ beliefs that creative thinking is something that can be developed in the classroom, even if this development takes time.

Creative engagement

Creative products are both novel and useful, as defined within a particular social context. Examining the outputs of students’ creative work can provide indicators of their capacity to think creatively, particularly in tasks where much of the creative thinking process is not visible (Amabile, 1996[91]; Kaufman and Baer, 2012[92]). Students can produce different kinds of “everyday” creative work at school, either as individuals or as part of a group. These forms of creative work in the classroom are multi-disciplinary and extend beyond traditional subjects.

Creative expression

Creative expression refers to both verbal and non-verbal forms of creative engagement where individuals communicate their thoughts, emotions and imagination to others. Verbal expression involves the use of language, including both written and oral communication, whereas non-verbal expression includes drawing, painting, modelling, music, and physical movement and performance.

Knowledge creation

Knowledge creation refers to the advancement of knowledge and understanding, with a focus on making progress rather than achievement per se (e.g. improving an idea rather than achieving the optimal solution or complete understanding). Knowledge creation refers not only to important discoveries or advancements but also to the purposeful act of building upon and iterating on ideas that can happen at all levels of society and across all knowledge domains (Scardamalia and Bereiter, 1999[93]).

Creative problem solving

Not all cases of problem solving require creative thinking: creative problem solving is a distinct class of problem solving characterised by novelty, unconventionality, persistence and difficulty during problem formulation (Newell, Shaw and Simon, 1962[94]). Creative thinking becomes particularly necessary when students are challenged to solve problems outside of their realm of expertise and where the techniques with which they are familiar do not work (Nickerson, 1999[95]).

Implications of the domain and construct analysis for the test and task design

Objective and focus of the PISA 2022 Creative Thinking assessment

The PISA 2022 assessment focuses on the creative thinking processes that can be reasonably demonstrated by 15‑year‑old students. It does not aim to single out exceptionally creative individuals but to describe the extent to which students are capable of thinking creatively when searching for and expressing ideas, and to explore how this capacity is related to teaching approaches, school activities and other features of education systems.

The main objective of PISA is to provide internationally comparable data on students’ creative thinking competence that have clear implications for education policies and pedagogies. The creative thinking processes in question therefore need to be malleable through education; the different factors enabling these thinking processes in the classroom context need to be clearly identified and related to performance in the assessment; and the assessment tasks need to align with the subjects and activities undertaken by students so that the test has some predictive validity of creative achievement and progress in school and beyond.

Assessment instruments: cognitive test and questionnaire modules

The PISA 2022 Creative Thinking assessment is composed of two parts: a cognitive test and a background questionnaire. PISA students who receive the creative thinking test will complete tasks that require them to generate, evaluate and improve ideas in different contexts. The test therefore focuses on gathering information about students’ cognitive skills involved in creative thinking. The background questionnaire module for creative thinking will gather data on students’ attitudes (openness, goal orientation and beliefs), perceptions of their school environment, and activities they participate in both inside and outside the classroom. Teachers and school leaders will also provide information about their beliefs about creativity and the activities offered in their schools.

Together, these assessment instruments will gather information on the complex set of factors that influence creative thinking in the classroom (students’ internal resources, external factors, and creative achievement and progress). However, some factors will be better measured than others: for example, while collaborative skills can influence knowledge creation in the classroom, students’ capacities to engage in collaborative creative thinking will not be directly measured in the PISA 2022 assessment (although some test tasks do ask students to evaluate and improve the work of others).

Measuring creative thinking in the PISA test: task design and scoring approach

The competency model of creative thinking

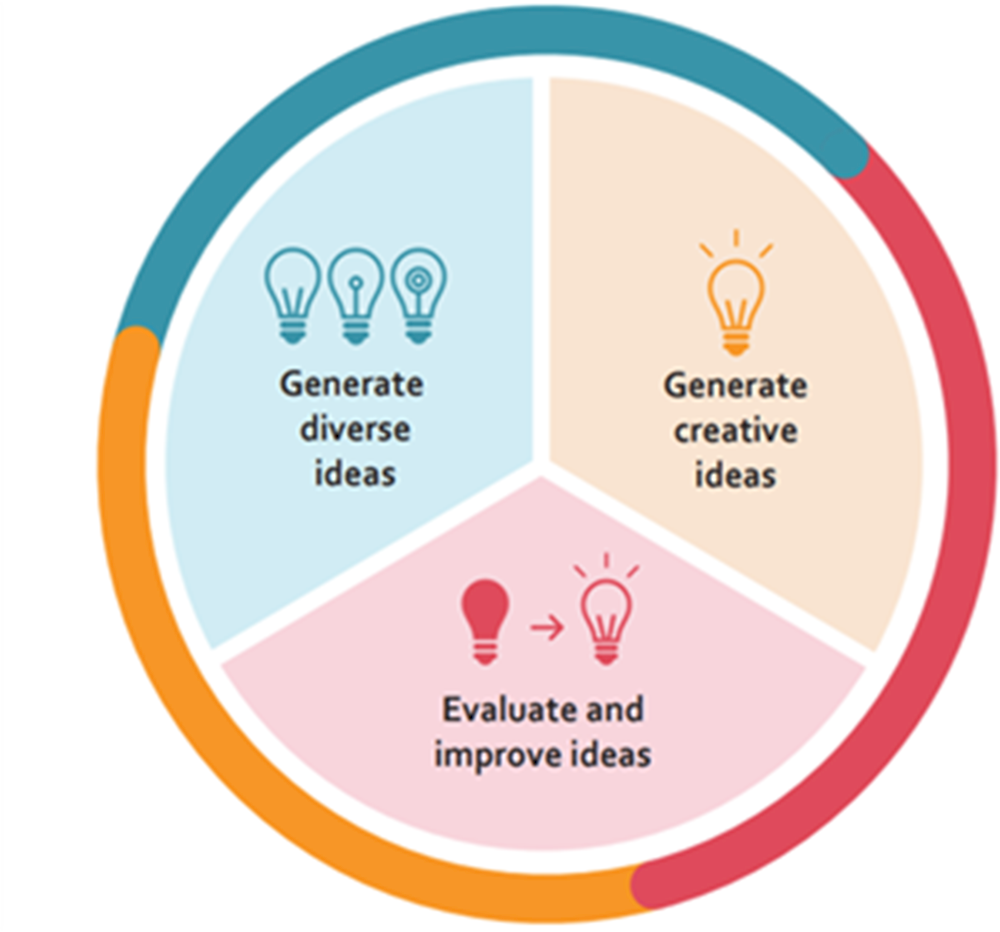

The competency model shown in Figure 4.2 illustrates how the creative thinking construct has been decomposed into three distinct facets for measurement purposes in the PISA 2022 test. These three facets are: 1) generate diverse ideas; 2) generate creative ideas; and 3) evaluate and improve ideas. These three facets reflect the PISA definition of creative thinking and encompass the cognitive skills required for creative thinking in the classroom. The competency model incorporates both divergent cognitive processes (the ability to generate diverse ideas and to generate creative ideas) and convergent cognitive processes (the ability to evaluate other people's ideas and identify improvements to those ideas).

“Ideas” in the context of the PISA assessment can take many forms. The test units provide a meaningful context and sufficiently open tasks in which students can demonstrate their capacity to produce different ideas and think outside of the box.

Figure 4.2. Competency model for the PISA 2022 test: three facets of creative thinking

Generate diverse ideas

Typically, attempts to measure creative thinking have focused on the number of ideas that individuals are able to generate – often referred to as “ideational fluency”. Going one step further is “ideational flexibility”, or the capacity to generate ideas that are different to each other. When it comes to measuring the quality of ideas that an individual generates, some researchers have argued that fundamentally different ideas should be weighted more than similar ideas (Guilford, 1956[45]).

The facet ‘generate diverse ideas’ of the competency model encompasses these ideas and refers to a student’s capacity to think flexibly by generating multiple distinct ideas. Test items for this facet will present students with a stimulus and ask them to generate two or three appropriate ideas that are as different as possible from one another.

Generate creative ideas

The literature generally agrees that creative ideas and outputs are defined as being both novel and useful. Clearly, expecting 15-year-olds around the world to generate ideas that are completely unique or novel is neither feasible nor appropriate for the PISA assessment. In this context, originality is a useful concept as a proxy for measuring the novelty of ideas. Defined by Guilford (1950[96]) as “statistical infrequency”, originality encompasses the qualities of newness, remoteness, novelty or unusualness, and generally refers to deviance from patterns that are observed within the population at hand. In the PISA assessment context, originality is therefore a relative measure established with respect to the responses of other students who complete the same task.
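The idea of originality as "statistical infrequency" can be illustrated with a minimal sketch. The theme labels, the response pool and the 10% cut-off below are hypothetical and chosen only for illustration; the framework does not specify a numerical threshold.

```python
from collections import Counter


def theme_frequencies(themes):
    """Return the share of responses in the pool that fall under each theme."""
    if not themes:
        raise ValueError("the response pool is empty")
    counts = Counter(themes)
    total = len(themes)
    return {theme: count / total for theme, count in counts.items()}


def is_infrequent(theme, frequencies, threshold=0.10):
    """Treat a theme as statistically infrequent if its share of the pool
    is below the threshold (a hypothetical cut-off, not a PISA value)."""
    return frequencies.get(theme, 0.0) < threshold


# Hypothetical theme labels assigned to 20 responses to the same task.
pool = ["friendship"] * 12 + ["adventure"] * 7 + ["time travel"] * 1
freqs = theme_frequencies(pool)
```

In this sketch, a response is judged only relative to the other responses to the same task, which mirrors the relative nature of the originality measure described above.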

The facet ‘generate creative ideas’ focuses on a student’s capacity to generate appropriate and original ideas. “Appropriate” means that ideas must comply with the task requirements and demonstrate a minimum level of usefulness. This dual criterion ensures the measurement of creative ideas – ideas that are both original and of use – rather than ideas that make random associations that are original but not meaningful. Test items for this facet will present students with a stimulus and ask them to develop one original idea.

Evaluate and improve ideas

Evaluative cognitive processes help to identify and remediate deficiencies in initial ideas as well as ensure that ideas or solutions are appropriate, adequate, efficient and effective (Cropley, 2006[46]). They often lead to further iterations of idea generation or the reshaping of initial ideas to improve a creative outcome. Evaluation and iteration are thus at the heart of the creative thinking process. Being able to provide feedback on the strengths and weaknesses of others’ ideas is also an essential part of any collective knowledge creation effort.

The facet ‘evaluate and improve ideas’ focuses on a student’s capacity to evaluate limitations in ideas and improve their originality. To reduce problems of dependency across items, students are not asked to iterate upon their own ideas but rather modify someone else’s work. Test items for this facet will present students with a given scenario and idea and ask them to suggest an original improvement, defined as a change that preserves the essence of the initial idea but that adds or incorporates original elements.

Domains of creative thinking

The literature suggests that the larger the number of domains included in an assessment of creative thinking, the better the coverage of the construct. However, certain practical and logistical constraints limit the number of possible domains that can be included in the PISA 2022 assessment of creative thinking. These constraints include:

The age of test takers. 15-year-olds have limited knowledge and experience in many domains, meaning those included in the assessment must be familiar to most students around the world and must reflect realistic manifestations of creative thinking that 15-year-olds can achieve in a constrained test context.

The available testing time. Students will sit a maximum of one hour of creative thinking items, meaning the range of possible domains must be limited to ensure sufficient data are collected from tasks in each domain. As PISA aims to provide comparable measures of performance at the country level rather than the individual level, it is possible to apply a rotated test design in which students take different combinations of tasks within domains.

The available testing technology. The PISA test is administered on standard desktop computers with no touchscreen capability or Internet connection. Although the test platform supports a range of item types and response modes, including interactive tools and basic simulations, the choice of domains and the design of the tasks needed to take into consideration the technical limitations of the platform.

Taking these main constraints into account and building upon the literature exploring different domains of creativity, the PISA 2022 test includes tasks situated within four distinct domain contexts: 1) written expression; 2) visual expression; 3) social problem solving; and 4) scientific problem solving. The written and visual expression domains involve communicating one’s imagination to others, and creative work in these domains tends to be characterised by originality, aesthetics, imagination, and affective intent and impact. In contrast, social and scientific problem solving involve investigating and solving open problems. They draw on a more functional employment of creative thinking that is a means to a better end, and creative work in these domains is characterised by ideas or solutions that are original, innovative, effective and efficient.

These four domains represent a reasonable and sufficiently diverse coverage of the different types of “everyday” creative thinking activities in which 15‑year‑olds engage. Given that differences in cultural preferences exist for certain forms of creative engagement, as do differences in what is valued in education across the world, and that creative engagement in each domain is supported by some degree of domain readiness, we can also expect variation in student performance across domains. By having students work on more than one domain during the test, it will be possible to gain insights on country-level strengths and weaknesses by domain context. Each of the four domain contexts is described in further detail below.

Written expression

Creative writing involves communicating ideas and imagination through written language. Good creative writing requires that readers understand and believe in the author’s imagination, including the rules of logic within the universe the author has created. Both fictional and non-fictional writing can be creative and learning how to express oneself creatively can help students to develop effective and impactful communication skills that they will need throughout their lifetimes.

In the PISA test students express their imagination in a variety of written formats. For example, students will caption an image, propose ideas for a short story using a given text or visual as inspiration, or will write a short dialogue between characters for a movie or comic book plot.

Visual expression

Visual expression involves communicating ideas and imagination through a range of different media. Creative visual expression has become increasingly important as the ubiquity of desktop publishing, digital imaging and design software means that nearly everyone will need to design, create or engage with visual communications at some point in their personal or professional lives.

In the PISA test, students express their imagination by using a digital drawing tool. The drawing tool does not enable free drawing, but students can create visual compositions by dragging and dropping elements from a library of images and shapes. Students are also able to resize, rotate and change the colour of elements. Students will create visual designs for a variety of purposes, such as creating a clothing design, logo or poster for an event.

Social problem solving

Young people use creative thinking every day to solve personal, interpersonal and social problems. These problems can range from the small-scale, personal level (e.g. resolving a scheduling conflict) to the wider school, community or even global levels (e.g. finding ways to improve sustainable living). Creative thinking in this domain involves understanding different perspectives, addressing the needs of others, and finding innovative and functional solutions for the parties involved (Brown and Wyatt, 2010[97]).

In the PISA test, students solve open problems that have a social focus. These problems focus on issues that affect groups within society (e.g. young people) or on issues that affect society at large (e.g. the use of global resources or the production of waste materials). Students are asked to propose ideas or solutions in response to a given scenario, or to suggest original ways to improve others’ solutions.

Scientific problem solving

Scientific problem solving involves generating new ideas and understanding, designing experiments to probe hypotheses, and developing new methods or inventions (Moravcsik, 1981[98]). Students can also demonstrate creative thinking as they engage in a process of scientific inquiry by exploring and experimenting with different ideas or materials to make discoveries and advance their knowledge and understanding (Hoover, 1994[99]).

Although creative thinking in science is related to scientific inquiry, the tasks in this domain differ fundamentally from the PISA scientific literacy tasks. In this test, students are asked to generate multiple distinct ideas or solutions, or an original idea or solution, for an open problem for which there is no pre-defined correct response. In other words, the tasks measure students’ capacity to produce diverse and original ideas, not their ability to reproduce scientific knowledge or understanding. For example, in a task asking students to formulate different hypotheses to explain a phenomenon, they would be rewarded for proposing multiple plausible hypotheses regardless of whether one of those hypotheses constituted the right explanation for the phenomenon. Nonetheless, domain readiness may affect performance in this domain more than in others, as most tasks that can be imagined imply a minimum level of knowledge of basic scientific principles.

In the PISA test, students engage with open problems that have a scientific or engineering basis. Students are asked to propose hypotheses to explain a given scenario, or to improve or generate new methods for solving problems.

Scoring the tasks

Every task in the PISA test is open-ended, meaning there are essentially infinite ways of demonstrating creative thinking. Scoring for this assessment therefore relies on human judgement following detailed scoring rubrics and well-defined coding procedures. All items corresponding to the same facet of the competency model apply the same general coding procedure. However, as the form of response varies by domain and task (e.g. a title, a solution or a design), so do the item-specific criteria for evaluating whether an idea is different or original. The detailed coding guides describe the item-specific criteria for each item and provide annotated example responses to help human coders score consistently.

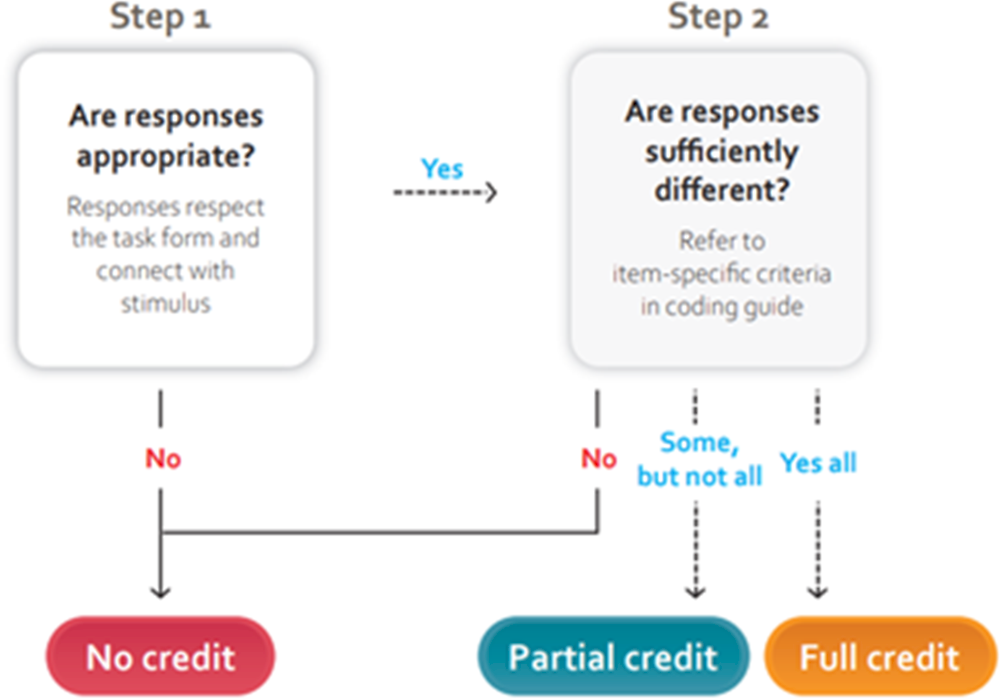

Scoring of ‘generate diverse ideas’ items

All items corresponding to the ‘generate diverse ideas’ facet of the competency model require students to provide two or three responses. The general coding procedure for these items involves two steps, as summarised in Figure 4.3. First, coders must determine whether responses are appropriate. Appropriate in the context of this assessment means that students’ responses respect the required form and connect (explicitly or implicitly) to the task stimulus. Second, coders must determine whether responses are sufficiently different from one another based on item-specific criteria described in the coding guide.

Figure 4.3. General coding process for ‘generate diverse ideas’ items

The item-specific criteria are as objective and inclusive as possible of the range of different potential responses. For example, for a written expression item, sufficiently different ideas must use words that convey a different meaning (i.e. are not synonyms). For items in the problem-solving domains, the coding guides list pre-defined response categories to help coders distinguish between similar and different ideas. The coding guides provide detailed example responses and explanations for how to code each example.

Full credit is assigned where all the responses required in the task are both appropriate and different from each other. Partial credit is assigned only in tasks requiring students to provide three responses, where two or three responses are appropriate but only two are different from each other. No credit is assigned in all other cases.
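The credit rule above can be sketched as a small function. This is an illustration under stated assumptions, not the operational scoring code: the function name, the `(appropriate, category)` representation of a coder's judgement and the credit labels are hypothetical, and the category label simply stands in for the item-specific difference criteria in the coding guide.

```python
def score_diverse_ideas(required, responses):
    """Score one 'generate diverse ideas' item.

    required  -- number of responses the task asks for (2 or 3)
    responses -- list of (appropriate, category) pairs as judged by a coder;
                 two responses count as different when their hypothetical
                 category labels differ
    """
    if required not in (2, 3):
        raise ValueError("tasks for this facet ask for two or three responses")
    # Only appropriate responses can count towards credit.
    distinct = {
        category
        for appropriate, category in responses[:required]
        if appropriate
    }
    if len(distinct) == required:
        return "full"      # all required responses appropriate and different
    if required == 3 and len(distinct) == 2:
        return "partial"   # three-response task, only two different ideas
    return "none"
```

For example, under these assumptions a three-response task in which two of the three appropriate responses fall into the same category would receive partial credit, while the same pattern in a two-response task would receive no credit.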

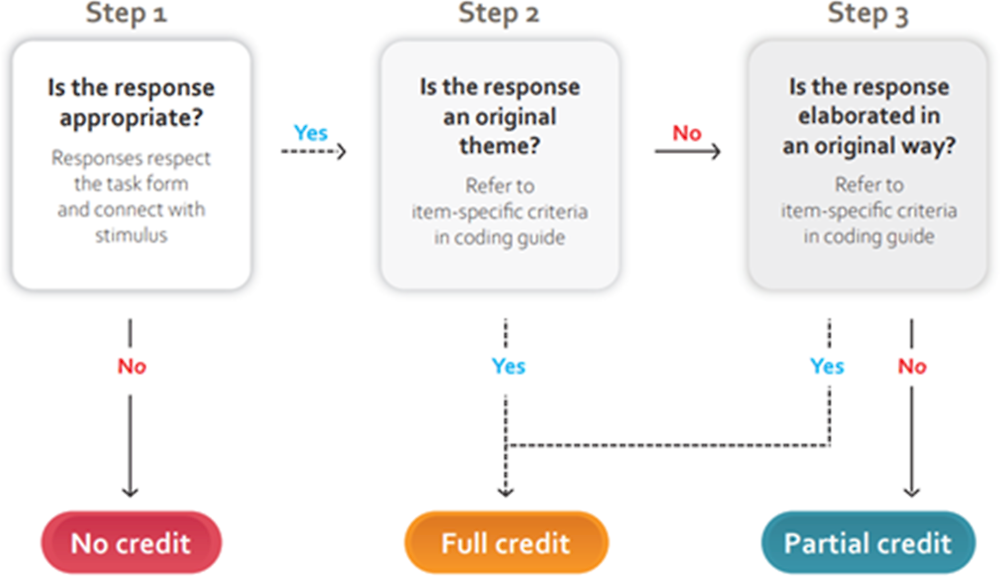

Scoring of ‘generate creative ideas’ items

All items corresponding to the facet ‘generate creative ideas’ of the competency model require a single response. The general coding procedure for these items involves two or three steps, depending on the content of the response. First, as with all items, coders must determine whether the response is appropriate. Then, coders must determine whether the response is original by considering two criteria (see Figure 4.4).

An original idea is defined as a relatively uncommon idea with respect to the entire pool of responses. The coding guide identifies one or more conventional themes for each item according to the patterns of genuine student responses revealed in multiple validation studies. If a response does not correspond to a conventional theme as described in the coding guide, it is directly coded as original. However, if an idea does correspond to a conventional theme, then coders must determine whether it is original based on its elaboration. The coding guide provides item-specific explanations and examples of original ways to elaborate on conventional themes. For example, a student might add an unexpected twist to a story idea that otherwise centres on a conventional theme.

Figure 4.4. General coding process for ‘generate creative ideas’ and ‘evaluate and improve’ items

This twofold originality criterion ensures that the scoring model takes into account both the general idea and the details of a response. While this approach does not single out the most original responses in the entire response pool, it does ensure that the coding process is less susceptible to culturally-sensitive grading styles that favour middle points or extremes, and it provides some mitigation against potential cultural bias in the identification of conventional themes across countries.

Full credit is assigned where the response is both appropriate and original. Partial credit is assigned where the response is appropriate only, and no credit is assigned in all other cases.

Scoring of ‘evaluate and improve ideas’ items

All items corresponding to the facet ‘evaluate and improve ideas’ of the competency model require a single response and generally ask students to adapt a given idea in an original way rather than coming up with an idea from scratch. The general coding procedure for these items involves the same steps as those for the ‘generate creative ideas’ items, described above and in Figure 4.4.

However, appropriate responses for these items must be both relevant and constitute an improvement. The threshold for achieving the appropriateness criterion for these items is thus somewhat higher than for items measuring the other two facets, as responses must explicitly connect to the task stimulus and attempt to address its deficiencies. The coding guide provides item-specific criteria, examples and explanations to help orient coders. For responses considered appropriate, coders must establish the originality of the improvement by considering the same two originality criteria as for ‘generate creative ideas’ items.

Full credit is assigned where the response is both appropriate and an original improvement. Partial credit is assigned where the response is appropriate only, and no credit is assigned in all other cases.
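The shared coding procedure for ‘generate creative ideas’ and ‘evaluate and improve ideas’ items can be sketched as follows. This is a minimal illustration: the function names, the boolean inputs (which stand in for a human coder's judgements) and the credit labels are assumptions made for the example.

```python
def score_single_idea(appropriate, conventional_theme, original_elaboration):
    """Score a single-response item following the two originality criteria.

    appropriate          -- coder's judgement that the response is appropriate
    conventional_theme   -- the response matches a conventional theme listed
                            in the coding guide
    original_elaboration -- a conventional theme is elaborated in an original way
    """
    if not appropriate:
        return "none"
    # A response outside the conventional themes is coded directly as original;
    # otherwise originality depends on how the theme is elaborated.
    if not conventional_theme or original_elaboration:
        return "full"
    return "partial"


def improvement_is_appropriate(relevant, is_improvement):
    """For 'evaluate and improve ideas' items, an appropriate response must be
    both relevant to the stimulus and an attempt to improve the given idea."""
    return relevant and is_improvement
```

For an ‘evaluate and improve ideas’ item, the result of `improvement_is_appropriate` would be passed as the `appropriate` argument of `score_single_idea`, reflecting the stronger appropriateness requirement for that facet.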

Assembling the test

Test and unit design

Students who receive a creative thinking module will spend up to one hour on creative thinking items, with the remaining hour of testing time assigned to a combination of mathematics, reading or scientific literacy items. The creative thinking items are organised into units, which in turn are organised into 30‑minute “clusters”. Each cluster includes two or more test units. The clusters are placed in multiple computer-based test formats according to a rotated test design.

Each creative thinking unit contains between one and three items, and the items are organised around a common stimulus or context. The units vary in several important ways including:

The facets of the construct (generate diverse ideas, generate creative ideas, evaluate and improve ideas) that are measured by the items in the unit;

The domain context in which the items are situated (written expression, visual expression, social problem solving or scientific problem solving);

The unit length (guidelines of 5 to 15 minutes).

While not every unit provides a point of observation for every facet of the construct, the rotated test design and the balanced variation of facets within different domain contexts ensure that, as a whole and at the population level, the test provides an adequate coverage of all the facets of creative thinking as defined by the competency model. The balanced coverage of items across the four domains will also make it possible to explore the extent to which students who demonstrate proficiency in creative thinking in one domain can also demonstrate proficiency in other domains.
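The general logic of a rotated design can be sketched with a simple cyclic arrangement. The cluster labels, the number of clusters and the cyclic pairing rule are hypothetical; the framework states only that 30‑minute clusters are placed in multiple test formats according to a rotated design, and the actual PISA form layout may differ.

```python
def rotated_forms(clusters):
    """Pair each cluster with the next one, cyclically, so that every cluster
    appears once in the first position and once in the second position."""
    n = len(clusters)
    if n < 2:
        raise ValueError("at least two clusters are needed to build a form")
    return [(clusters[i], clusters[(i + 1) % n]) for i in range(n)]


def assign_form(student_index, forms):
    """Assign forms to students in rotation so that forms are used evenly."""
    return forms[student_index % len(forms)]


# Hypothetical labels for four 30-minute creative thinking clusters.
forms = rotated_forms(["CT1", "CT2", "CT3", "CT4"])
```

Because each student in this sketch sees only two clusters while every cluster is administered equally often and in both positions, coverage of the item pool is achieved at the population level rather than for any individual student.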

Refining the item pool for the PISA 2022 Main Survey

Over the course of the test development cycle, several test units were designed, developed and piloted within the PISA testing platform, including during the limited PISA 2020 Field Trial and the full PISA 2021 Field Trial. Not all units and items that were designed or developed progressed to the Field Trial stage of test development (e.g. if the item performed poorly in earlier validation studies, development may have stopped at this point). The test units and items that progressed to the final pool of units for the PISA 2022 Main Survey were selected from this wider pool of potential units with the support of country reviewers and the Expert Group, and informed by the following key criteria:

The representation of key concepts for creative thinking (e.g. facets of the competency model, domains), as identified in the framework;

The range of tasks that can accurately discriminate proficiency;

The appropriateness and variety of the task types;

The ability to produce reliable coding and scoring;

The familiarity and relevance of topics to all students, independent of their country and socio-cultural context;

The performance of the units and items in the cognitive labs, validation studies and Field Trial(s).

Validating the tasks and scoring methods

As with any PISA assessment, but particularly the PISA innovative domain assessments, it is paramount to ensure sufficient validation throughout the test conceptualisation and development phases. There are several potential sources of measurement non-invariance for any large-scale international assessment. In the context of PISA, some of the most important include: 1) the similarity of the relevance and definition of the construct being measured across cultures; 2) students’ familiarity with the item format used in the test (e.g. interactive or static units, or different response types); 3) the relevance, clarity and familiarity of the item content; and 4) the quality of adaptation into different languages. The failure to investigate these aspects through validation exercises leads to the introduction of test bias and ultimately to structural and measurement non-equivalence across the groups under study (Van de Vijver and Leung, 2011[100]).

Given the complex nature of measuring creative thinking, the assessment framework, test tasks and questionnaire items, scoring materials and coder training practices have undergone extensive validation. This has included several rounds of review of the assessment materials by PISA participating countries, cognitive laboratories in 2 countries, small-scale pilot data collections in 5 countries and two Field Trial data collections (one partial and one large-scale). The following section describes the different ways in which the OECD Secretariat and the test development contractor have addressed issues of validity and comparability for the PISA 2022 Creative Thinking assessment in further detail, both through test design and development practices and through the collection and analysis of data.

Optimising cross-cultural validity and comparability of the construct (construct equivalence)

Construct equivalence refers to the degree to which the construct definition is similar for populations targeted by the assessment. The literature emphasises that creativity is embedded within social contexts, and research has found that the way creativity develops and the ways in which it manifests can differ across cultural groups (Lubart, 1998[81]; Niu and Sternberg, 2003[79]). Careful attention has thus been paid to balance measurement validity with score comparability for the PISA assessment, namely by focusing the assessment on certain aspects of the construct that optimise comparability across cultures. These include:

1. Focusing on the narrower construct of creative thinking, defined as being able to engage productively in the generation, evaluation and improvement of ideas. This narrower focus emphasises the cognitive processes related to idea generation, whereas the broader construct of creativity also encompasses personality traits and requires more subjective judgements about the creative value of students’ responses;

2. Defining creative thinking and its enablers in the context of 15-year-olds in the classroom, focusing on aspects of the construct that are more likely to be developed in schooling contexts around the world rather than outside of school;

3. Identifying cross-culturally relevant domains in which 15-year-olds are likely to be able to engage and can be expected to have practised creative thinking;

4. Focusing scoring on the originality (i.e. statistical infrequency) and diversity of ideas (i.e. belonging to different categories), rather than the creative value or quality of ideas (that are more likely to be subject to sociocultural bias).

In addition, the assessment framework – which defines the construct and its operationalisation for the PISA 2022 assessment – has been developed under the guidance of a multicultural and multidisciplinary Expert Group with expertise in the field of creativity and its measurement, as well as subject to multiple rounds of review by PISA participating countries.

Ensuring cross-cultural validity and comparability of the tasks (test equivalence)

Test equivalence refers to the equivalence of tasks and test versions in different languages and for different student groups, including the degree to which different student groups perceive and engage with the tasks in the same way. Several activities were undertaken during the test development phase to address potential sources of test non-equivalence in the tasks and scoring methods, including:

1) Cross-cultural face validity and comparability reviews. Experts in the measurement of creative thinking and PISA participating countries engaged in several cycles of review of the test material and coding guides to validate the task contexts, stimuli and scoring criteria. These review exercises helped to identify and eliminate possible sources of cultural, gender and linguistic bias prior to the collection of data.

2) Cognitive laboratories. Experienced test development professionals conducted cognitive laboratories with students around 15 years old in three PISA participating countries across three continents. Students simulated completing the test units and responded to a series of questions in a “think aloud” protocol while working through the test material, explaining their thought processes and pointing out misunderstandings in the instructions or task stimuli. Problematic task content, features or instructions were subsequently modified.

3) Small-scale validation exercises. Genuine student data were collected, coded and scored in a series of small-scale pilot studies simulating PISA testing conditions (3 separate data collections across 5 countries). The analysis of the data and the coding processes in each of the studies identified items that did not perform as intended, informing iterative, evidence-based improvements to the test material, coding guide and scoring procedures.

4) Translatability reviews. Experienced test development, adaptation and translation professionals conducted translatability reviews to ensure that all of the assessment materials (items, stimuli and coding guides) could be sufficiently and appropriately translated into the many languages used in the PISA Main Study. This included ensuring a balanced adaptation of the linguistic and cultural references associated with each language group in PISA.

5) Field Trial(s) and Main Study data analysis and verification. The Field Trial, undertaken in all PISA participating countries, provides an opportunity for a full construct and measurement validation exercise prior to the Main Study. The Field Trial simulates the administration of the assessment to large representative samples of 15-year-olds across the world. Analysis of the Field Trial data is used to exclude test items that demonstrate insufficient validity and score reliability, within and across countries, in addition to differential item functioning. Given the importance of human coding for this assessment, the Field Trial also allowed a first, full-scale validation of the coding processes including the inter-rater reliability (see Box 4.1). Due to the global disruption to schooling caused by the COVID-19 pandemic, the PISA 2021 Main Study was postponed to 2022; a partial Field Trial was therefore conducted in 2020, followed by a full Field Trial in 2021. Analysis of the data collected in the Main Study also enabled further verification of the data quality in terms of score reliability, validity and differential item functioning. The frequency distribution of response themes across countries was also examined following the Main Study data collection, informing adjustments to the coding and scoring rules for some items to maximise cross-cultural comparability.

Box 4.1. Investigating inter-rater reliability

Ensuring the reliability and comparability of scores is a fundamental principle in all PISA assessments. In the PISA 2022 Creative Thinking assessment, the success of the scoring approach clearly depends on the quality of the scoring rubrics, coding guides and coding processes. The scoring rubrics and coding guides underwent a rigorous process of verification throughout the test development cycle, with input from coders in PISA participating countries on the content and language used in the coding materials. Experienced test development and scoring professionals also led several international coder training workshops to train the coders in PISA participating countries ahead of both the 2020 and 2021 Field Trials, as well as the 2022 Main Study.

Inter-rater reliability (i.e. the extent to which two or more coders agree on the code assigned to a response) was also investigated in all of the validation activities that involved the collection and scoring of student responses, in line with established PISA practices, in order to understand and address issues of consistency by improving the item design or the coding guidance. In the Field Trial(s), within-country inter-rater reliability was measured by having multiple coders code a set of 100 randomly selected responses for each item. Across-country inter-rater reliability was measured by asking English-speaking coders in each country to code a set of 10 anchor responses selected from responses of real students in different countries for each item. Sufficient inter-rater reliability, as approved by the PISA Technical Advisory Group (TAG) of experts, was recorded for all items that progressed to the 2022 Main Study item pool.
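Agreement between two coders on the same set of responses can be quantified in several ways. The sketch below computes simple percent agreement and Cohen's kappa, two common agreement statistics; the text above does not state which statistic PISA uses, so the choice of measures, the function names and the example codes (0 = no credit, 1 = partial credit, 2 = full credit) are assumptions made for illustration.

```python
from collections import Counter


def percent_agreement(codes_a, codes_b):
    """Share of responses to which both coders assigned the same code."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must code the same non-empty set of responses")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(codes_a)
    observed = percent_agreement(codes_a, codes_b)
    freq_a = Counter(codes_a)
    freq_b = Counter(codes_b)
    # Chance agreement from each coder's marginal distribution of codes.
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned by two coders to the same ten responses.
coder_a = [2, 2, 1, 0, 1, 2, 0, 1, 2, 1]
coder_b = [2, 1, 1, 0, 1, 2, 0, 0, 2, 1]
agreement = percent_agreement(coder_a, coder_b)
kappa = cohens_kappa(coder_a, coder_b)
```

Kappa is lower than raw agreement whenever some agreement would be expected by chance, which is why chance-corrected statistics are often reported alongside percent agreement.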

The PISA background questionnaires for creative thinking

In addition to the test, PISA gathers self-reported information from students, teachers and school principals through the use of questionnaire instruments. In the PISA 2022 cycle, these questionnaire instruments will collect information about the different enablers and drivers of creative thinking outlined earlier in this framework document that are not directly measured in the test.

Curiosity and exploration

Questionnaire items will measure students’ curiosity, openness to new experiences and disposition for exploration. Questionnaire scales on openness were informed by the extensive literature on the relationship between personality and creativity as well as the existing inventory of self-report measures that have been used in previous empirical studies to identify “creative people”.

Creative self-efficacy

Students will complete items measuring the extent to which they believe in their own creative abilities, focusing on their general confidence in thinking creatively as well as their beliefs about how well they are able to think creatively in different domains.

Beliefs about creativity

One scale in the questionnaire explores various beliefs students have about creativity in general. The items ask students whether they believe creativity can be trained or is an innate characteristic, whether creativity is only possible in the arts, whether being creative is inherently positive, and whether they hold other beliefs that might influence their motivation to learn to be creative. A similar scale also asks teachers to report their beliefs about creativity in general, including whether they value creativity and whether they believe it can be trained.

Creative activities in the classroom and at school

The student questionnaire asks students about the activities in which they participate, both inside and outside of school, which might contribute to their domain readiness and attitudes towards different creative domains. The school principal and teacher questionnaires will also gather information about creative activities included in the curriculum and offered to students in extracurricular time.

Social environment

The student, teacher and school principal questionnaires collect information about students’ school environments. Questionnaire items focus on student-teacher interactions (e.g. whether students believe that free expression in the classroom is encouraged) as well as the wider school ethos. These items can provide further information on the role of extrinsic motivation in students’ creative performance (e.g. students’ perception of discipline, time pressures, or assessment).

References

[24] Amabile, T. (2012), “Componential theory of creativity”, No. 12-096, Harvard Business School, http://www.hbs.edu/faculty/Publication%20Files/12-096.pdf (accessed on 28 March 2018).

[70] Amabile, T. (1997), “Motivating creativity in organizations: on doing what you love and loving what you do”, California Management Review, Vol. 40/1, pp. 39-58, https://doi.org/10.2307/41165921.

[91] Amabile, T. (1996), Creativity In Context: Update To The Social Psychology Of Creativity, Westview Press, Boulder, CO.

[23] Amabile, T. (1983), “The social psychology of creativity: A componential conceptualization”, Journal of Personality and Social Psychology, Vol. 45/2, pp. 357-376, https://doi.org/10.1037/0022-3514.45.2.357.

[25] Amabile, T. and M. Pratt (2016), The dynamic componential model of creativity and innovation in organizations: Making progress, making meaning, https://doi.org/10.1016/j.riob.2016.10.001.

[85] Ambrose, D. and R. Sternberg (eds.) (2011), Narrowing curriculum, assessments, and conceptions of what it means to be smart in the US schools: Creaticide by Design, Routledge.

[52] Baer, J. (2016), “Creativity doesn’t develop in a vacuum”, in Barbot, B. (ed.), Perspectives on Creativity Development: New Directions for Child and Adolescent Development, Wiley Periodicals, Inc.

[33] Baer, J. (2011), “Domains of creativity”, in Runco, M. and S. Pritzker (eds.), Encyclopedia of Creativity (Second Edition), Elsevier Inc, https://doi.org/10.1016/B978-0-12-375038-9.00079-0.

[34] Baer, J. and J. Kaufman (2005), “Bridging generality and specificity: The amusement park theoretical (apt) model of creativity”, Roeper Review, https://doi.org/10.1080/02783190509554310.

[68] Bandura, A. (1997), Self-Efficacy: The Exercise of Control, Worth Publishers, https://books.google.fr/books/about/Self_Efficacy.html?id=eJ-PN9g_o-EC&redir_esc=y (accessed on 29 March 2018).

[4] Barbot, B. and B. Heuser (2017), “Creativity and Identity Formation in Adolescence: A Developmental Perspective”, in The Creative Self, Elsevier, https://doi.org/10.1016/b978-0-12-809790-8.00005-4.

[5] Barbot, B., T. Lubart and M. Besançon (2016), ““Peaks, Slumps, and Bumps”: Individual Differences in the Development of Creativity in Children and Adolescents”, New Directions for Child and Adolescent Development, https://doi.org/10.1002/cad.20152.

[58] Batey, M. and A. Furnham (2006), “Creativity, intelligence, and personality: a critical review of the scattered literature”, Genetic, Social and General Psychology Monographs, Vol. 132/4, pp. 355-429.

[6] Beghetto, R. (2010), “Creativity in the classroom”, in Kaufman, J. and R. Sternberg (eds.), The Cambridge Handbook of Creativity.

[69] Beghetto, R. (2006), “Creative Self-Efficacy: Correlates in Middle and Secondary Students”, Creativity Research Journal, Vol. 18/4, pp. 447-457, https://doi.org/10.1207/s15326934crj1804_4.

[18] Beghetto, R., J. Baer and J. Kaufman (2015), Teaching for creativity in the common core classroom, Teachers College Press.

[67] Beghetto, R. and M. Karwowski (2017), “Toward untangling creative self-beliefs”, in Karwowski, M. and J. Kaufman (eds.), The Creative Self: Effect of Beliefs, Self-Efficacy, Mindset, and Identity, Academic Press, San Diego, CA, https://doi.org/10.1016/B978-0-12-809790-8.00001-7.

[89] Beghetto, R. and J. Kaufman (2014), “Classroom contexts for creativity”, High Ability Studies, Vol. 25/1, pp. 53-69, https://doi.org/10.1080/13598139.2014.905247.

[88] Beghetto, R. and J. Kaufman (2010), Nurturing creativity in the classroom, Cambridge University Press.

[14] Beghetto, R. and J. Kaufman (2007), “Toward a broader conception of creativity: a case for "mini-c" creativity”, Psychology of Aesthetics, Creativity, and the Arts, Vol. 1/2, pp. 73-79, https://doi.org/10.1037/1931-3896.1.2.73.

[15] Beghetto, R. and J. Plucker (2006), “The relationship among schooling, learning, and creativity: “All roads lead to creativity” or “You can’t get there from here”?”, in Kaufman, J. and J. Baer (eds.), Creativity and Reason in Cognitive Development, Cambridge University Press, Cambridge, https://doi.org/10.1017/CBO9780511606915.019.

[90] Bereiter, C. and M. Scardamalia (2010), “Can Children Really Create Knowledge?”, Canadian Journal of Learning and Technology, Vol. 36/1.

[60] Berzonsky, M. and C. Sullivan (1992), “Social-cognitive aspects of identity style”, Journal of Adolescent Research, Vol. 7/2, pp. 140-155, https://doi.org/10.1177/074355489272002.

[97] Brown, T. and J. Wyatt (2010), “Design Thinking for Social Innovation”, Stanford Social Innovation Review, https://ssir.org/articles/entry/design_thinking_for_social_innovation (accessed on 27 March 2018).

[40] Chen, C. et al. (2006), “Boundless creativity: evidence for the domain generality of individual differences in creativity”, The Journal of Creative Behavior, Vol. 40/3, pp. 179-199, https://doi.org/10.1002/j.2162-6057.2006.tb01272.x.

[46] Cropley, A. (2006), “In Praise of Convergent Thinking”, Creativity Research Journal, Vol. 18/3, pp. 391-404.

[65] Cropley, A. (1990), “Creativity and mental health in everyday life”, Creativity Research Journal, Vol. 3/3, pp. 167-178.

[29] Csikszentmihalyi, M. (2013), Creativity: The Psychology of Discovery and Invention, Harper Collins, New York.

[71] Csikszentmihalyi, M. (1996), Creativity: Flow and the Psychology of Discovery and Invention, HarperCollins Publishers, https://books.google.fr/books/about/Creativity.html?id=K0buAAAAMAAJ&redir_esc=y (accessed on 26 March 2018).

[84] DeCoker, G. (2000), “Looking at U.S. education through the eyes of Japanese teachers”, Phi Delta Kappan, Vol. 81, pp. 780-81.

[64] DeYoung, C. (2014), “Openness/intellect: a dimension of personality reflecting cognitive exploration”, in Cooper, M. and R. Larsen (eds.), APA Handbook of Personality and Social Psychology: Personality Processes and Individual Differences, American Psychological Association, Washington DC, http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.725.2495&rep=rep1&type=pdf (accessed on 29 March 2018).

[50] Duncker, K. (1972), On problem-solving, Greenwood Press, https://books.google.fr/books/about/On_problem_solving.html?id=dJEoAAAAYAAJ&redir_esc=y (accessed on 27 March 2018).

[73] Eisenberger, R. and L. Shanock (2003), “Rewards, intrinsic motivation, and creativity: a case study of conceptual and methodological isolation”, Creativity Research Journal, Vol. 15/2-3, pp. 121-130, http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.615.6890&rep=rep1&type=pdf (accessed on 29 March 2018).

[59] Feist, G. (1998), “A meta-analysis of personality in scientific and artistic creativity”, Personality and Social Psychology Review, Vol. 2/4, pp. 290-309.

[12] Gajda, A., M. Karwowski and R. Beghetto (2017), “Creativity and academic achievement: A meta-analysis”, Journal of Educational Psychology, Vol. 109/2, pp. 269-299, https://doi.org/10.1037/edu0000133.

[51] Getzels, J. and M. Csikszentmihalyi (1976), The Creative Vision: A Longitudinal Study Of Problem Finding In Art, John Wiley & Sons, New York, NY.

[43] Glaveanu, V. et al. (2013), “Creativity as action: Findings from five creative domains”, Frontiers in Psychology, Vol. 4/176, pp. 1-14, https://doi.org/10.3389/fpsyg.2013.00176.

[76] Grivas, C. and G. Puccio (2012), The Innovative Team: Unleashing Creative Potential for Breakthrough Results, Jossey-Bass.

[62] Guastello, S. (2009), “Creativity and personality”, in Rickards, T., M. Runco and S. Moger (eds.), The Routledge Companion to Creativity, Routledge/Taylor & Francis, New York, NY, http://psycnet.apa.org/record/2009-03983-022 (accessed on 29 March 2018).

[45] Guilford, J. (1956), “The structure of intellect”, Psychological Bulletin, Vol. 53/4, pp. 267-293, https://doi.org/10.1037/h0040755.

[96] Guilford, J. (1950), “Creativity”, American Psychologist, Vol. 5/9, pp. 444-454, https://doi.org/10.1037/h0063487.

[53] Hatano, G. and K. Inagaki (1986), “Two courses of expertise”, in Stevenson, H., H. Azuma and K. Hakuta (eds.), Child Development and Education in Japan, Freeman, New York.

[1] Hennessey, B. and T. Amabile (2010), “Creativity”, Annual Review of Psychology, Vol. 61, pp. 569-598.

[7] Higgins, S. et al. (2005), A meta-analysis of the impact of the implementation of thinking skills approaches on pupils, Eppi-Centre, University of London, http://eppi.ioe.ac.uk/.

[99] Hoover, S. (1994), “Scientific problem finding in gifted fifth‐grade students”, Roeper Review, Vol. 16/3, pp. 156-159, https://doi.org/10.1080/02783199409553563.

[16] Hwang, S. (2015), Classrooms as Creative Learning Communities: A Lived Curricular Expression, https://digitalcommons.unl.edu/teachlearnstudent/55 (accessed on 26 March 2018).

[41] Julmi, C. and E. Scherm (2016), “Measuring the domain-specificity of creativity”, No. 502, Fakultät für Wirtschaftswissenschaft der FernUniversität in Hagen, https://www.fernuni-hagen.de/imperia/md/images/fakultaetwirtschaftswissenschaft/db-502.pdf (accessed on 28 March 2018).

[63] Kashdan, T. and F. Fincham (2002), “Facilitating creativity by regulating curiosity”, The American Psychologist, Vol. 57/5, pp. 373-4, http://www.ncbi.nlm.nih.gov/pubmed/12025769 (accessed on 29 March 2018).

[37] Kaufman, J. (2012), “Counting the muses: development of the Kaufman Domains of Creativity Scale (K-DOCS)”, Psychology of Aesthetics, Creativity, and the Arts, Vol. 6/4, pp. 298-308, https://doi.org/10.1037/a0029751.

[36] Kaufman, J. (2006), “Self-reported differences in creativity by ethnicity and gender”, Applied Cognitive Psychology, Vol. 20/8, pp. 1065-1082, https://doi.org/10.1002/acp.1255.

[92] Kaufman, J. and J. Baer (2012), “Beyond new and appropriate: who decides what is creative?”, Creativity Research Journal, Vol. 24/1, pp. 83-91, https://doi.org/10.1080/10400419.2012.649237.

[35] Kaufman, J. and J. Baer (2004), “Sure, I’m creative -- but not in mathematics!: Self-reported creativity in diverse domains”, Empirical Studies of the Arts, Vol. 22/2, pp. 143-155, http://journals.sagepub.com/doi/pdf/10.2190/26HQ-VHE8-GTLN-BJJM (accessed on 28 March 2018).

[31] Kaufman, J. and R. Beghetto (2009), “Beyond Big and Little: The Four C Model of Creativity”, Review of General Psychology, https://doi.org/10.1037/a0013688.

[38] Kaufman, J. et al. (2009), “Personality and self-perceptions of creativity across domains”, Imagination, Cognition and Personality, Vol. 29/3, pp. 193-209, https://doi.org/10.2190/IC.29.3.c.

[39] Kaufman, S. et al. (2016), “Openness to experience and intellect differentially predict creative achievement in the Arts and Sciences”, Journal of Personality, Vol. 84/2, pp. 248-258, https://doi.org/10.1111/jopy.12156.

[93] Keating, D. and C. Hertzman (eds.) (1999), Schools as Knowledge-Building Organizations, Guilford.

[21] Kim, Y., R. Almond and V. Shute (2016), “Applying Evidence-Centered Design for the development of Game-Based Assessments in Physics Playground”, International Journal of Testing, Vol. 16/2, pp. 142-163, https://doi.org/10.1080/15305058.2015.1108322.

[81] Lubart, T. (1998), “Creativity Across Cultures”, in Sternberg, R. (ed.), Handbook of Creativity, Cambridge University Press, Cambridge, https://doi.org/10.1017/CBO9780511807916.019.

[102] Lucas, B., G. Claxton and E. Spencer (2013), “Progression in Student Creativity in School: First Steps Towards New Forms of Formative Assessments”, OECD Education Working Papers, No. 86, OECD Publishing, Paris, https://doi.org/10.1787/5k4dp59msdwk-en.

[103] Lucas, B., G. Claxton and E. Spencer (2013), “Progression in Student Creativity in School: First Steps Towards New Forms of Formative Assessments”, OECD Education Working Papers, No. 86, OECD Publishing, Paris, https://doi.org/10.1787/5k4dp59msdwk-en.

[17] Lucas, B. and E. Spencer (2017), Teaching Creative Thinking: Developing Learners Who Generate Ideas and Can Think Critically, Crown House Publishing, https://bookshop.canterbury.ac.uk/Teaching-Creative-Thinking-Developing-learners-who-generate-ideas-and-can-think-critically_9781785832369 (accessed on 26 March 2018).

[55] McCrae, R. (1987), “Creativity, divergent thinking, and openness to experience”, Journal of Personality and Social Psychology, Vol. 52/6, pp. 1258-1265, http://psycnet.apa.org/buy/1987-28199-001 (accessed on 29 March 2018).

[101] McCrae, R. and P. Costa (1987), “Validation of the five-factor model of personality across instruments and observers”, Journal of Personality and Social Psychology, Vol. 52/1, pp. 81-90, http://www.ncbi.nlm.nih.gov/pubmed/3820081 (accessed on 3 April 2018).

[19] Mislevy, R., L. Steinberg and R. Almond (2003), “On the structure of educational assessments”, Measurement: Interdisciplinary Research and Perspective, Vol. 1/1, pp. 3-62, https://doi.org/10.1207/S15366359MEA0101_02.

[98] Moravcsik, M. (1981), “Creativity in science education”, Science Education, Vol. 65/2, pp. 221-227, https://doi.org/10.1002/sce.3730650212.

[72] Nakamura, J. and M. Csikszentmihalyi (2002), “The concept of flow”, in Snyder, C. and S. Lopez (eds.), Handbook of Positive Psychology, Oxford University Press, New York, NY, https://s3.amazonaws.com/academia.edu.documents/31572339/ConceptOfFlow.pdf?AWSAccessKeyId=AKIAIWOWYYGZ2Y53UL3A&Expires=1522329542&Signature=8Kciv%2BgoV2wvGr0vrMHy%2BqiR3yw%3D&response-content-disposition=inline%3B%20filename%3DConcept_Of_Flow.pdf (accessed on 29 March 2018).

[8] National Advisory Committee on Creative and Cultural Education (1999), All Our Futures: Creativity, Culture and Education, National Advisory Committee on Creative and Cultural Education.