Dirk Van Damme

OECD, France

Does Higher Education Teach Students to Think Critically?

1. Do higher education students acquire the skills that matter?

Abstract

In today’s world, higher education has acquired an economic and social status that is unprecedented in modern history. Technological changes and associated developments in the economy and labour markets have pushed the demand for high-skilled workers and professionals to ever-higher levels. Higher education has become the most important route for a country’s human capital development and an individual’s upward social mobility. It is where young people acquire advanced generic and specific skills to prosper in the knowledge economy and flourish in society. Though enrolment and graduation rates have increased massively in most countries, a higher education qualification still offers young people the prospect of significant benefits in employability and earnings. The higher education system also helps them develop the social and emotional skills to become effective citizens. Higher education attainment rates thus correlate strongly with indicators of social capital and social cohesion such as interpersonal trust, political participation and volunteering.

Introduction

On average across the OECD’s 38 member countries, 45% of 25-34 year-olds held a tertiary education qualification in 2020, compared with 37% in 2010 (OECD, 2021[1]). By 2030, OECD and G20 countries are projected to have over 300 million 25-34 year-olds with a tertiary qualification, compared with 137 million in 2013 (OECD, 2015[2]). Many OECD countries have seen steep increases in their tertiary education enrolment and graduation figures, and emerging economies such as China, India and Brazil see investment in the expansion of higher education as an important route towards economic growth and social progress.

However, policy makers in several countries are questioning whether continued growth is sustainable. Should knowledge-intensive economies aim for 60%, 70% or 80% of tertiary-qualified workers among 25-34 year-olds? Or does continued growth of higher education lead to over-qualification, polarisation of labour markets and graduates taking over jobs previously held by mid-educated workers? What are the risks associated with over-education (Barone and Ortiz, 2011[3])? Added to such questions are concerns that higher education attainment exacerbates social inequality and the marginalisation of low- and mid-educated populations.

Central to these concerns is the question of the value of higher education qualifications. Does a university degree still signal a high level of advanced cognitive skills? Or has the massification of higher education eroded the skills equivalent of a tertiary degree? Is massification leading to degree inflation and, hence, to a decline in the intrinsic value of qualifications? The uncomfortable answer to these questions is: we don’t know. While the OECD’s Programme for International Student Assessment (PISA) has become the global benchmark for the learning outcomes of 15-year-old students, and hence for the quality of school systems, there is no valid and reliable measure of the learning outcomes of higher education students and graduates. Indirect measures of the value of a higher education qualification, such as graduates’ employment rates or earnings, are distorted by labour market polarisation and substitution effects, and they are increasingly seen as unsatisfactory.

According to some economists, the growing influx of highly qualified workers into the labour market inevitably leads to over-education and an erosion of the higher education wage premium. In 2016, The Economist argued that the relative wage advantage of the highly qualified is greatly overrated and that there are massive displacement and substitution effects (The Economist, 2016[4]). In 1970, about 51% of the highly skilled in the United States worked in jobs classified as highly skilled; by 2015, this share had dropped to 35%. Many highly qualified workers now work in jobs for which, strictly speaking, no higher education qualification is required. The Economist also reported that real wages for highly skilled workers have fallen.

There are signs that global employers have started to distrust university qualifications and are developing their own assessment tools and procedures to test students for the skills they consider important. Governments are concerned not only about overall cost but also about rising per-student cost. They are confronting universities with concerns about efficiency and “value for money”, and they are shifting the funding mix of higher education from public to private sources, thereby increasing the cost for students and families. When students are asked to pay more for the degree they hope to earn, they also become powerful stakeholders in the value-for-money debate. COVID-19 has accelerated this debate: closures, poor teaching and learning experiences, and disruptions to examination and graduation procedures, all while tuition fees remained high, have caused dissatisfaction among students, some of whom are claiming financial compensation from universities.

The traditional mechanisms of trust in higher education qualifications are under severe stress. This chapter explores these issues in more detail and, in doing so, builds a case for assessing the learning outcomes of higher education students and graduates. In tomorrow’s world, in which skills are the new currency, qualifications – the sole monopoly of higher education systems – may lose their value if doubts about learning outcomes remain unanswered. These doubts can only be addressed by better empirical metrics of what students learn in higher education and of the skills with which graduates enter the labour market. This chapter discusses the signalling role of qualifications; transparency and trust in higher education qualifications; changing skill demand; and initiatives towards assessing students’ and graduates’ learning outcomes in order to reduce information asymmetry and restore trust.

Qualifications versus skills

The value of higher education for the economy and society is mediated through the qualifications that students earn and build on in the labour market. As the sole remaining monopoly of higher education, qualifications are of critical importance to the existence of the sector. Globalisation and internationalisation have given almost universal validity to a shared qualification system based on the bachelor’s/master’s/PhD ladder. In turn, qualification frameworks are one of the most powerful drivers of skills convergence in global higher education (Van Damme, 2019[5]).

Traditional human capital theory centres on the substantive contribution that teaching and learning make to students’ knowledge, skills and other attributes. But this traditional view is increasingly challenged by the ‘signalling’ or ‘screening’ hypothesis, which emphasises the selective function of university programmes in supplying employers with workers fit for jobs. This mechanism spares employers expensive recruitment, selection and testing to identify the workers they need. In this approach, what students actually learn at university matters less than selection itself in allocating graduates to jobs, earnings and other status goods.

Analysis of the OECD Survey of Adult Skills (PIAAC) has shown that, across participating countries, earnings are driven more by formal education than by actual skill levels (Paccagnella, 2015[6]). The institutional regulation of labour markets and professions, together with the symbolic power of university degrees, ensures that degrees, not skills, determine access to high-level jobs and earnings. However, the meaning and value of tertiary qualification levels are not purely symbolic. Employers value qualifications because they signal qualities the individual is perceived to possess.

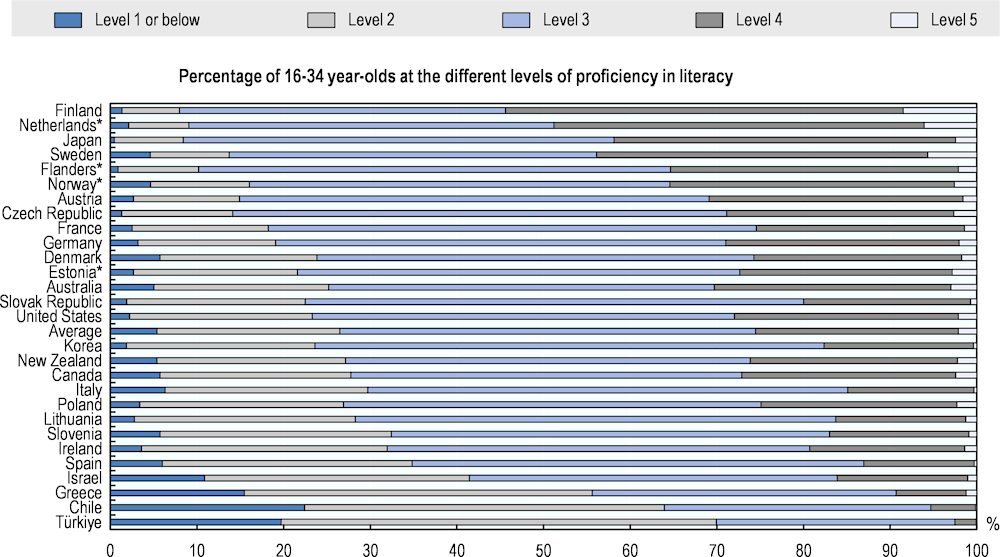

For signalling to function well, there needs to be some convergence of skills around each tertiary qualification level. However, there is almost no evidence on whether graduates’ learning outcomes and skills actually warrant the view that higher education qualifications represent converging levels of equivalence. In terms of learning outcomes and skills development, differentiation seems to be more important than convergence. Data from the OECD Survey of Adult Skills (PIAAC) on the skill levels of tertiary-educated adults point to between-country differences that remain pronounced even in areas with proclaimed convergence policies, such as the European Higher Education Area. Figure 1.1 shows, for each country, the percentage of higher education graduates younger than 35 who scored at each of five levels of proficiency on the literacy scale in the Survey of Adult Skills.

Figure 1.1. Percentage of 16-34 year-old tertiary graduates at the different levels of proficiency in literacy

Note: *Participating in the Benchmarking Higher Education System Performance exercise 2017/2018. Countries are ranked in ascending order of the proportion of 16-34 year-olds with higher education who perform below level 2 in literacy proficiency.

Source: OECD (2019b), Benchmarking Higher Education System Performance, Higher Education, OECD Publishing, Paris, https://doi.org/10.1787/be5514d7-en (accessed on 1 August 2022) adapted from OECD Survey of Adult Skills, www.oecd.org/skills/piaac/data/.

Two observations can be drawn from these data. First, there is enormous variation in the literacy skills of tertiary education graduates. More than half (54.4%) of Finnish graduates scored at level 4 or 5, compared with only 2.5% in the Republic of Türkiye. Cross-country differences in the distribution of literacy skills do not seem to correlate with the share of the age cohort holding a tertiary qualification. Neither massification nor globalisation seems to have had a major impact on graduates’ skill levels. Such wide differences in skills among graduates with a similar level of qualification contradict the idea that skills are converging globally around equivalent qualifications.

Second, a tertiary qualification does not fully protect against low skills. In many countries, even those with well-developed higher education systems, over 5% of tertiary-educated 16-34 year-olds perform only at the lowest level of literacy proficiency, and the figure exceeds 15% in Greece, Chile and Türkiye. On average across OECD countries participating in the Survey of Adult Skills, over 25% of adults younger than 35 with a higher education degree do not reach level 3 in literacy, which can be considered the baseline level for functioning well in the economy and society. A high share of graduates scoring at low levels of proficiency indicates that a higher education degree is not a reliable signal of graduates’ foundational literacy proficiency.

It is true that literacy skills are foundation skills that are not primarily supposed to be acquired in higher education. Teaching and learning in universities probably add more value in more specialised skill sets. Still, these data are worrisome in that higher education qualifications do not reliably signal a threshold skills level, nor do they guarantee employers a minimum skill set.

How has the skills level of the tertiary-educated population evolved over time? One would expect the massive expansion of tertiary qualifications to have raised the general skills level of the population. The OECD Survey of Adult Skills, administered in 2012-15, and its predecessors, the International Adult Literacy Survey (IALS), administered in the 1990s, and the Adult Literacy and Life Skills Survey (ALL), administered in the 2000s, are the only data sources that allow this hypothesis to be tested empirically. Comparing the literacy performance of the adult population in countries that participated in these surveys reveals more signs of stability, or even slight decline, than of rising skill levels (Paccagnella, 2016[7]). Changes in population composition due to ageing and migration might be partly responsible, but one would expect the massive increase in tertiary qualifications to offset these changes and result in higher skill levels.

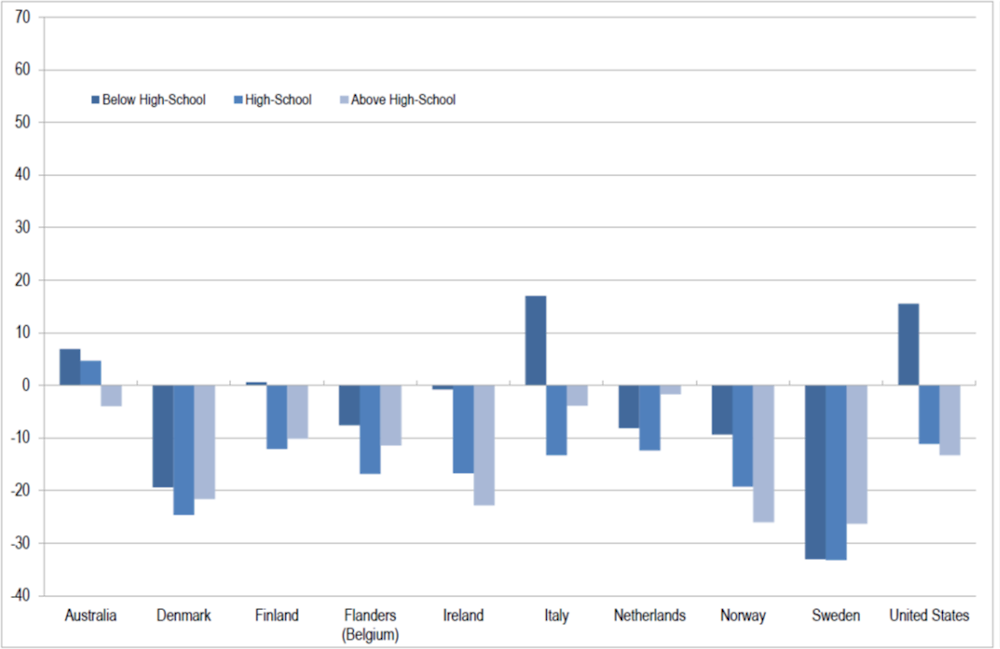

Figure 1.2 compares the change in the literacy proficiency of the adult population between the IALS and PIAAC surveys by educational attainment. Individuals with an upper secondary or tertiary qualification performed worse in PIAAC than in IALS (with the exception of Australia). The proficiency of adults with below upper secondary attainment increased or remained stable in 5 out of 10 countries. But, strikingly, the proficiency of adults with a tertiary qualification dropped in most countries, by more than 20 points in Denmark, Ireland, Norway and Sweden. In the United States, Finland and the Flemish Community of Belgium, countries with excellent higher education systems, the decrease exceeds 10 points. Clearly, the increase in tertiary attainment did not raise the skills level of the adult population – on the contrary.

Figure 1.2. Comparing literacy proficiency between IALS and PIAAC by educational attainment

Source: Paccagnella, M. (2016), “Literacy and Numeracy Proficiency in IALS, ALL and PIAAC”, OECD Education Working Papers, No. 142, OECD Publishing, Paris, https://doi.org/10.1787/5jlpq7qglx5g-en (accessed on 1 August 2022) adapted from International Adult Literacy Survey (IALS) (1994-1998), and Survey of Adult Skills (PIAAC) (2012), www.oecd.org/site/piaac/publicdataandanalysis.htm.

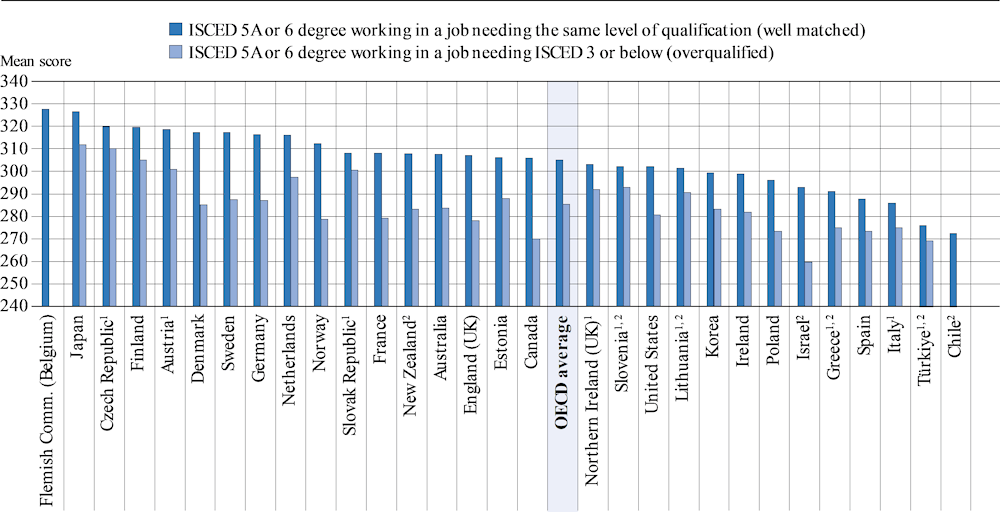

A possible explanation linking the growth of tertiary attainment and the decline of skills in the population may lie in the impact of over-qualification on skills. Figure 1.3 shows that tertiary-educated workers whose job is well matched to their qualification have, on average, higher numeracy skills than over-qualified workers, i.e. those in jobs that do not require a tertiary qualification. This could arise either through the recruitment process, which selects on skills, or through skill decline or obsolescence when skills are not fully used. High levels of qualification mismatch may thus further depreciate the value of tertiary qualifications.

Figure 1.3. Mean numeracy score among adults with ISCED 5A or 6, by selected qualification match or mismatch among workers (2012 or 2015) - Survey of Adult Skills (PIAAC), employed 25-64 year-olds

Note: 1. The difference between well-matched and overqualified workers is not statistically significant at 5%. 2. Reference year is 2015; for all other countries and economies the reference year is 2012.

Source: OECD (2018), "Graph A3.b - Mean numeracy score among adults with ISCED 5A or 6, by selected qualification match or mismatch among workers (2012 or 2015): Survey of Adult Skills (PIAAC), employed 25-64 year-olds", in Education at a Glance 2018: OECD Indicators, OECD Publishing, Paris, https://doi.org/10.1787/eag-2018-graph31-en (accessed 1 August 2022).

In conclusion, human capital growth measured by two different metrics, educational attainment rates and foundation skill levels, points in opposite directions: qualifications are growing while skills are declining. This further strengthens doubts about the skills equivalent of tertiary qualifications and their ability to serve as reliable signals of skills.

Transparency and information asymmetry

To better understand what’s at stake here, we need to take a closer look at the nature of higher education. In most countries, higher education systems take a hybrid form, combining elements of ‘public good’ and markets in a ‘quasi-market’ arrangement. In recent years, market-oriented elements have become much more important. From essentially nationally steered and ‘public good’-oriented structures, higher education systems are increasingly moving towards a ‘private consumption’-oriented model. Influenced by economic insights on the private benefits of higher education and the ‘new public management’ doctrine, policies have strongly supported this transformation by increasing private investment in higher education, encouraging competition for status and resources, supporting internationalisation policies and turning to stronger accountability frameworks. Higher education systems have integrated market elements in their steering but have never completely transformed into real ‘capitalist markets’ (Marginson, 2013[8]).

Of course, there are still many elements and dimensions of higher education systems that can be characterised as ‘public goods’, which are critically important to governments. Policy makers still highly value the role of higher education in preserving language and culture; providing equality of opportunity for all students to access higher education; and serving as a vehicle of social mobility. And there are many other considerations that legitimise public policies in higher education.

At the same time, higher education systems also suffer from many ‘market failures’, the most important being the well-known problem of ‘information asymmetries’ (Dill and Soo, 2004[9]; Blackmur, 2007[10]; van Vught and Westerheijden, 2012[11]). From the perspective of students, higher education is an experience good, which is consumed only very rarely but has a huge impact on one’s life chances. Information asymmetry tempts providers to maximise their power on the supply side and to minimise the role of the demand side. Making the wrong choices can have huge consequences for individuals’ lives, but also for the economic and social fate of nations. While the share of the cost of higher education borne privately has increased enormously, students as consumers have not been empowered to a similar extent to make smarter choices: the availability and quality of information have simply not improved enough. Instead, and rather cynically, many policy makers reproach students for making too many wrong choices.

Higher education systems and governments have reacted in two opposite ways to this problem. First, they have developed various kinds of paternalistic instruments to protect the consumer interests of students. By making decisions on students’ behalf, they assume the role of agent in a ‘principal-agent’ relationship. Students and their families – and the same mechanism applies to employers – are asked to ‘trust’ the system’s capacity to guarantee basic quality and to reliably produce the desired quality and outcomes. In heavily state-driven systems in Europe, this may even take the form of an implicit public denial that there are quality differences among publicly recognised institutions, and an implicit assumption that a publicly recognised qualification will produce the same outcomes and benefits whichever institution awards it.

The second and far more effective way to tackle possible market failure caused by information asymmetry is by producing various instruments that are supposed to improve transparency in the system. In exchange for more institutional autonomy, institutions have been asked to provide more and better data on their performance. Some countries have developed systems of performance management, sometimes linked to funding arrangements. As well, quality assurance arrangements, often based on the trusted academic mechanism of peer review, are supposed to improve the quantity and quality of information available to the general public.

Neither quality assurance systems nor performance management systems have solved the problem of information asymmetry. Performance management systems are essentially bureaucratic tools, meant to inform public policies and steering mechanisms. Their data are often inaccessible or unintelligible to students and the general public. The most commonly used performance indicators bear very little relationship to the academic quality of students’ teaching and learning environments (Dill and Soo, 2004[9]). Student satisfaction surveys and student evaluations of teaching, popular tools for assessing the perceived quality of the teaching and learning experience, bear no relationship to actual student learning (Uttl, White and Gonzalez, 2017[12]).

And quality assurance arrangements, though often conceived as instruments of public accountability, rarely function as information and transparency systems. In many countries, they have shifted their focus from programmes to the institution’s internal management capacity to guarantee quality. Only in the field of research have effective measurement and transparency tools been developed, largely because sufficient expertise and capacity to tackle measurement challenges have been built up in that field.

In turn, the quality and availability of data on research output have stimulated the emergence of global rankings of higher education institutions. Rankings existed before high-quality research metrics, but research bibliometrics have contributed enormously to the development and credibility of rankings. The phenomenon of global rankings and their – sometimes perverse – impact on higher education institutions and systems have been widely analysed and discussed, most notably in the work of Hazelkorn (2011[13]; 2014[14]) and of Kehm and Stensaker (2009[15]). Despite resistance and criticism among academics and institutions, rankings have become very powerful and serve as a partial answer to the information needs of students, notably international students. Essentially, it is the lack of better alternative transparency tools for students, families, employers and the general public that explains the rise and popularity of university rankings.

The main problem with rankings is their over-reliance on research output data. Information on the actual quality of teaching and learning, by contrast, relies on indirect measures or on indicators based on input factors such as student/staff ratios or per-student funding, which often have no demonstrated relationship to quality. This has provoked a ‘mission drift’ towards research as the easiest way for institutions to improve their ranking. Rankings and the research metrics on which they are based have given rise to a ‘reputation race’ among institutions (van Vught, 2008[16]). Rankings have also fuelled this race by relying on reputation surveys to compensate for the lack of reliable teaching and learning metrics. Instead of tackling the information asymmetry problem head-on by opening up the ‘black box’ of teaching and learning and supporting the development of scientifically sound learning outcomes metrics, institutions have developed ‘reputation management’ to cope with the new forces in the global higher education order. Institutions have spent more resources on publicity, branding and marketing than on genuine efforts to improve teaching and learning environments.

Rankings and reputation metrics also provide little or no incentive to improve teaching and learning. They basically confirm the existing hierarchies in the system. As reputations change very slowly, this jeopardises the dynamism and innovation in the system. In principle, nothing is wrong with reputation metrics. In the Internet economy where consumers are invited to rate all kinds of products and services, these measures lead to aggregate reputation metrics that guide other consumers in their decision making. But in higher education, there are few reputation measures built on reliable data provided by students and graduates. Reputation measures are based on data provided by academics through reputation surveys. They can hardly compensate for the information asymmetry problem in higher education. Yet, there often is no alternative for students, families, employers and the general public.

Distrust

The fact that degrees seem to perform badly in providing information on graduates’ learning outcomes or skills further aggravates the transparency problem. It undermines the trust that employers, students, policy makers and the general public place in the system. Yet there are no proxies other than degrees to indicate that individuals have mastered a certain level of skills. It would be rather naïve for the higher education community to count on degrees retaining their symbolic power.

Some observers have noted these signs of distrust in what students learn in college. In their well-known book, Academically Adrift, Arum and Roksa (2011[17]) analysed data from the Collegiate Learning Assessment (CLA) instrument administered to a large sample of undergraduate students in the United States. They concluded that 45% of students surveyed demonstrated no significant improvement in complex reasoning and critical thinking skills during the first two years of college. After four years, 36% still failed to show any improvement. In a subsequent report, the same researchers (Arum, Roksa and Cho, n.d.[18]) concluded that “Large numbers of college students report that they experience only limited academic demands and invest only limited effort in their academic endeavours”. In a follow-up study, they looked at these students’ transition to working life (Arum and Roksa, 2014[19]). They found that, after graduation, students who had performed poorly in college were more likely to be in unskilled jobs, to be unemployed or to have been fired from their jobs. The lack of generic, 21st-century workplace skills impeded the employability of graduates – despite their tertiary qualification.

Global corporations and industry leaders are signalling sharply decreased levels of trust in university qualifications. The global consultancy firm Ernst & Young, an important graduate recruiter, was one of the first to announce that it would drop degree requirements for its job applicants (Sherriff, 2015[20]), arguing that there is “no evidence” that success at university correlates with achievement in later life. PricewaterhouseCoopers (PwC) quickly followed. Large companies in the information and communication technology (ICT) sector, such as Google, Apple and Amazon, applied the same policy; the sector had already developed its own alternative credentialing system. Significantly, another knowledge-intensive company, Penguin Random House Publishers, removed any requirement for a university degree from its new job listings (Sherriff, 2016[21]). To justify its decision, the publishing group pointed to “increasing evidence that there is no simple correlation” between having a degree and work performance.

Many large companies have followed suit, often those with large human resources (HR) departments that have the capacity to assess job applicants for the skills needed. Small and medium-sized companies, however, still rely predominantly on qualifications, as they lack the resources to conduct assessments in-house. And public-sector employers and regulated professions are obliged by law to value qualifications (Koumenta and Pagliero, 2017[22]). This shift has had a profound effect on firms’ hiring and HR policies and practices. A Northeastern University report on hiring practices in the United States concluded that “skills-based or competency-based hiring appears to be gaining significant interest and momentum, with a majority of HR leaders reporting either having a formal effort to deemphasize degrees and prioritize skills underway (23%) or actively exploring and considering this direction (39%)” (Gallagher, 2018[23]).

Changing skill demand

Rapidly changing skill demand has added to growing employer distrust of tertiary qualifications. Automation and digitalisation have ushered in critical changes in the task input of jobs. These changes require different skill sets, but higher education institutions have been slow to respond.

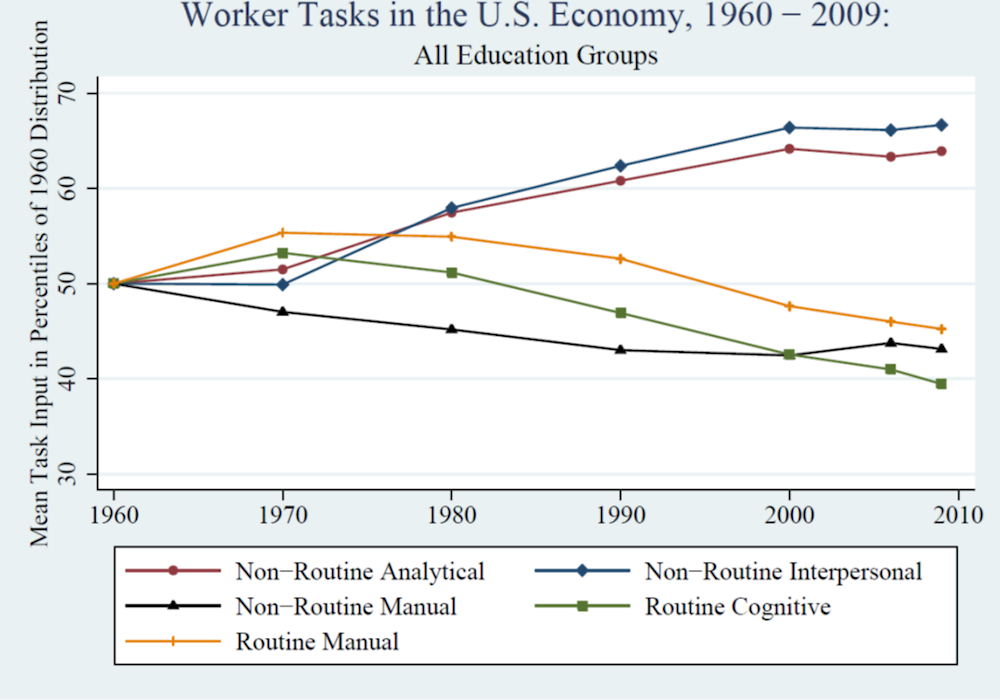

There has been a gradual decline in routine tasks. David Autor, an economist at the Massachusetts Institute of Technology (MIT), has researched and documented this evolution in the economy of the United States. In 2013, he and a co-author updated and expanded the original 2003 analysis by Autor, Levy and Murnane (Autor and Price, 2013[24]). They showed (see Figure 1.4) that, in a relatively short period of time, the share of routine manual and routine cognitive tasks declined significantly while the share of non-routine analytical and non-routine interpersonal tasks increased. Routine tasks, even in high-skilled professions, are clearly being increasingly automated. Yet automation does not simply replace human labour; it also complements it. By changing the task content of existing jobs, automation also creates entirely new jobs. Autor (2015[25]) has demonstrated “that the interplay between machine and human comparative advantage allows computers to substitute for workers in performing routine, codifiable tasks while amplifying the comparative advantage of workers in supplying problem-solving skills, adaptability, and creativity”. By adapting their skill sets, workers can raise the value of the tasks that only they can carry out.

At the higher end of the skills distribution, which is the segment for which higher education prepares workers, non-routine tasks are so-called ‘abstract’ tasks. They require problem solving, intuition, persuasion and creativity, and they are characteristic of professional, managerial, technical and creative occupations such as law, medicine, science, engineering, marketing and design. As Autor and Price (2013[24]) put it, “Workers who are most adept in these tasks typically have high levels of education and analytical capability, and they benefit from computers that facilitate the transmission, organization, and processing of information”.

Figure 1.4. Changing task input in the US economy (1960-2009)

Source: Autor and Price (2013[24]), The Changing Task Composition of the US Labor Market: An Update of Autor, Levy, and Murnane (2003), https://economics.mit.edu/files/9758 (accessed on 1 August 2022).

Another task category that has grown even more significantly than non-routine analytical tasks is non-routine interpersonal tasks. Complex communication tasks requiring highly developed and adaptive social skills have become more important in a wide range of professions. Computers still do poorly at simulating complex human interaction when emotional skills such as empathy come into play. With automation substituting for many tasks, complex interaction and communication tasks have grown in frequency and importance (Deming, 2017[26]). In the United States economy, jobs requiring non-routine communication skills have seen both employment and wage growth, and social skills have yielded increasing returns on the labour market (Deming, 2017[26]; Fernandez and Liu, 2019[27]).

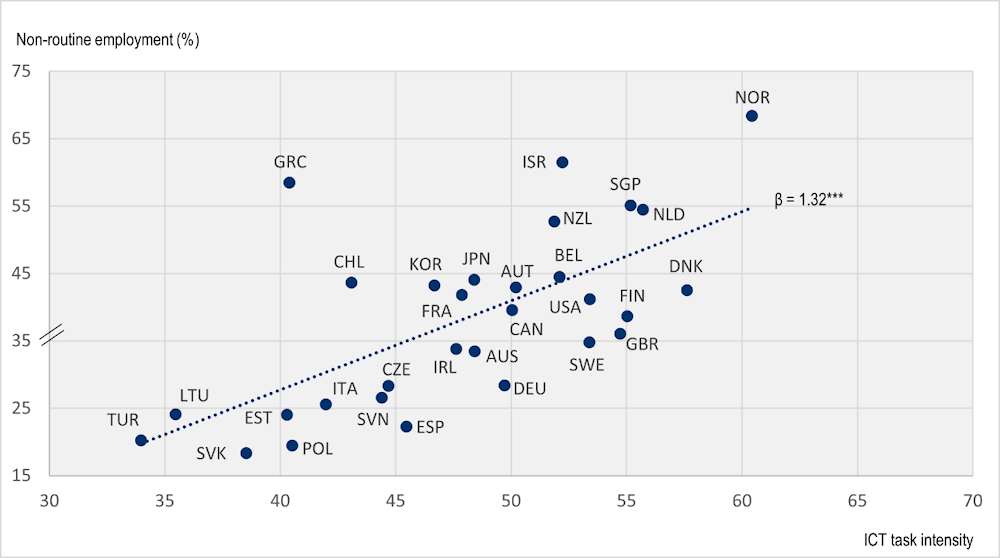

In a number of recent reports, the OECD has developed country-level indicators on non-routine job content and its relationship with digital intensity (Marcolin, Miroudot and Squicciarini, 2016[28]; OECD, 2017[29]; OECD, 2019a[30]). Based on PIAAC data, these analyses show a correlation between the digitalisation of workplaces and industries and the growth of non-routine jobs. Figure 1.5 shows the country-level correlation between these two indicators in manufacturing industries: higher ICT task intensity goes hand in hand with a larger share of non-routine employment.

Figure 1.5. Share of non-routine employment and ICT task intensity, manufacturing industries, 2012 or 2015

Source: OECD (2017[29]), OECD Science, Technology and Industry Scoreboard 2017: The digital transformation, OECD Publishing, Paris, https://doi.org/10.1787/9789264268821-en (accessed on 1 August 2022).

These changes in skill demand have already had a long-standing impact on the jobs of tertiary-educated professionals. Drawing on data from the Reflex (2005) and Hegesco (2008) surveys of tertiary graduates, Avvisati, Jacotin and Vincent-Lancrin (2014[31]) examined the skill requirements of graduates working in highly innovative jobs. They found that the skills that most distinguish innovators from non-innovators are creativity (“come up with new ideas and solutions” and the “willingness to question ideas”); the “ability to present ideas in audience”; “alertness to opportunities”; “analytical thinking”; the “ability to coordinate activities”; and the “ability to acquire new knowledge”. These skills clearly align with what have been labelled non-routine skills.

Changes in the task content of jobs have also affected skill demand. Numerous surveys indicate that skills such as creativity and analytical thinking now play a dominant role in employers’ hiring and recruitment policies (see, for example, Hart Research Associates, 2013[32]; Kearns, 2001[33]). Terms used to denote the skills needed to perform non-routine tasks successfully include ‘21st-century skills’, ‘soft skills’, ‘generic skills’, ‘transferable skills’ and ‘transversal skills’. These categories usually include critical thinking, creativity, problem solving, communication, teamwork and learning-to-learn skills. Although these skills differ from one another, they are pragmatically grouped under the umbrella of ‘21st-century skills’.

Fuelled by the debate on the impact of automation and digitalisation on jobs, discussions are now multiplying on what skills are needed for the future job market. In 2018, the World Economic Forum’s Future of Jobs survey of chief executive officers and chief human resource officers of multinational and large domestic companies identified analytical thinking, innovation, complex problem solving, critical thinking and creativity as the most important skills (Avvisati, Jacotin and Vincent-Lancrin, 2014[31]).

In two recent papers, OECD analysts have applied machine-learning techniques to the Burning Glass Technologies database of online job postings to explore the information these postings contain (OECD, 2019a[30]; Brüning and Mangeol, 2020[68]). These analyses provide overwhelming evidence of how frequently 21st-century skill requirements appear in job postings. In an analysis of online job postings in the United Kingdom between 2017 and 2019, for instance, communication, teamwork, planning, problem solving and creativity were among the transversal skills most frequently mentioned.

Employers’ interest in generic or 21st-century skills is also related to ongoing concerns, referred to earlier in this chapter, about the impact of qualification and field-of-study mismatch on graduate employability. The number of graduates in specific fields of study often does not align well with actual demand for qualifications on the labour market. In OECD countries, an average of 36% of workers are mismatched in terms of qualifications (in the PIAAC survey, 17% of workers reported being overqualified and 19% underqualified) (OECD, 2018[35]). Some 40% of workers also work in a field different from the one they studied and thus experience ‘field-of-study mismatch’ (Montt, 2015[36]). Economists consider mismatch a significant obstacle to labour productivity growth. High levels of mismatch have prompted discussions on co-ordination between education and the labour market. Where graduate employability is concerned, the idea of perfect alignment has been abandoned. Instead, employers now look to education to develop foundation skills and the transversal, generic skills needed for employability, while more technical skill development is shared between education and on-the-job training in the workplace. The mismatch issue has thus contributed to growing interest in generic, 21st-century skills.

Do we know whether higher education institutions foster generic skills such as critical thinking and problem solving? Again, the answer is: we don’t know. What students learn in universities is still generally attuned to routine cognitive tasks and procedural knowledge, and knowledge and skills that can easily be automated continue to dominate curricula. Nevertheless, universities have stepped up curriculum reform in response to external demands and pressures, generally moving towards competency-based and interdisciplinary curricula. Curriculum documents also contain statements emphasising the importance of generic skills valued in the workplace. But to know whether universities are actually fostering students’ learning of 21st-century skills, we need much better assessment systems.

Assessing generic skills

The voice of employers, concerns about graduate employability and growing interest in generic skills have influenced curriculum development, course design and teaching and learning practices in higher education institutions. Current educational reform in higher education has three important dimensions (Zahner et al., 2021[37]): the shift from a lecture format to a student-centred approach that emphasises students’ active participation in class; the shift from curricular and textbook content to case- and problem-based materials that require students to apply what they know to novel situations; and the shift in assessment instruments from multiple-choice tests, which are best suited to measuring how much content students have absorbed, to open-ended assessments.

Although many higher education institutions and systems have made significant advances on the first two dimensions of this reform movement, assessment has lagged behind. As universities focus increasingly on developing their students’ generic skills, assessments need to be able to measure how well students are learning these skills – and how well institutions are teaching them. Multiple-choice and short-answer assessments remain the dominant testing regime, not only for factual knowledge but also for generic skills. As a result, current testing does not assess the skills most critical for students in the workplace and – just as importantly – does not support the other two dimensions of reform. For educational reform to be in sync with today’s knowledge economy, open-ended, performance-based assessments are required. Such assessments have become standard practice in the workplace and in contemporary human resources management approaches to recruitment, selection and upskilling.

If performance assessments are integrated into accountability systems, this should positively impact classroom practice. Class time spent preparing students to apply knowledge, analysis, and problem-solving skills to complex, real-world problems is time well spent. It will be worthwhile to investigate whether performance assessment for accountability purposes has a desirable effect on teaching and learning. It will be useful as well to investigate the perceived level of effort required to use performance assessments regularly in the classroom.

A critical shortcoming of today’s principal educational assessment regime is that it pays little attention to how much an institution contributes to developing the competencies students will need after graduation. The outcomes typically examined by higher education accreditation arrangements, such as an institution’s retention and graduation rates and the percentage of its faculty in tenured positions, say nothing about how well the institution fosters the development of its students’ analytic reasoning, problem solving and communication skills. This is unfortunate, because the ways in which institutions are evaluated significantly affect institutional priorities. If institutions were held accountable for student learning gains and student achievement, they would likely direct greater resources and effort towards improving teaching and learning. Assessment has enormous potential to drive change.

Developments in assessing higher education learning outcomes

Over the past decades, several research initiatives and experimental programmes to assess the learning outcomes of students in higher education have been launched (Douglass, Thomson and Zhao, 2012[38]; Hattie, 2009[39]; Blömeke et al., 2013[34]; Wolf, Zahner and Benjamin, 2015[40]; Coates, 2016[41]; Coates and Zlatkin-Troitschanskaia, 2019[42]). An overview of the field by the OECD identified assessment practices in six countries (Nusche, 2008[43]). In the United States, the Council for Aid to Education has developed the CLA and its more recent variant, the CLA+, which are discussed in this book. The University of California has developed the Student Experience in the Research University Survey. The testing company Educational Testing Service (ETS) has developed the HEIghten™ Outcomes Assessment Suite (Liu et al., 2016[44]). The European Commission, through the Tuning initiative, has endorsed the CALOHEE project (Wagenaar, 2019[45]). Germany has launched a large, cross-disciplinary study for modelling and measuring competencies in higher education (KoKoHs) (Blömeke et al., 2013[34]). In the United Kingdom, the Teaching Excellence Framework includes several projects on the assessment of learning outcomes in universities. And there are probably many more national and local initiatives.

In 2008, mandated by a decision of education ministers gathered in Athens in 2006, the OECD embarked on the Assessment of Higher Education Learning Outcomes (AHELO) Feasibility Study (Coates and Richardson, 2012[46]; Ewell, 2012[47]). The study, which lasted until 2013, was the first international initiative for the assessment of higher education learning outcomes. It involved 248 higher-education institutions and 23 000 students in 17 countries or economies. It included a generic skills strand for which the CLA instrument was used, and two discipline-specific strands (engineering and economics). The results, outcomes and experiences were reported in three volumes (Tremblay, Lalancette and Roseveare, 2012[48]; AHELO, 2013a[49]; AHELO, 2013b[50]). The main conclusion of the AHELO Feasibility Study was that an international assessment of students’ learning outcomes, which some people referred to as “a PISA for higher education”, was feasible, despite considerable conceptual, methodological and implementation challenges.

In 2015, the OECD proposed to member countries that they move to an AHELO Main Study. Several programme proposals were discussed by the Education Policy Committee, but no consensus could be reached to embark on a Main Study. At the time, the political debate around AHELO was very heated (Van Damme, 2015[51]). Strong voices of support could be heard in media such as The Economist (2015[59]), Times Higher Education (Morgan, 2015a[52]; Morgan, 2015b[53]) and Inside Higher Education (Usher, 2015[54]). At the same time, higher education experts and organisations representing the higher education community denounced the initiative (Altbach, 2015[55]), and leading universities and university associations expressed strong concerns and outright opposition.

One serious criticism of the OECD’s AHELO proposal is that higher education is too diverse for common measures of learning outcomes to be applied, and that applying them would immediately standardise and homogenise teaching and learning in universities. Phil Altbach (2015[55]) concentrated his critique on this issue. It is certainly true that higher education systems are diversifying, and the heterogeneity of the student body requires specific attention when standardised assessment instruments are applied (Coates and Zlatkin-Troitschanskaia, 2019[42]). But the real question is whether diversity and heterogeneity completely annihilate the ‘common core’ of global higher education systems. In higher education, opposing tendencies of convergence and divergence are both at work (Van Damme, 2019[5]). However, convergence is the dominant tendency in how degrees and qualifications grant access to jobs, earnings and status. While diversity and heterogeneity may have an impact on curriculum development, course content, teaching methods and examinations, they do not remove the need to prepare students for employability and work (Van Damme, 2021[56]).

Methodological issues

As in the case of PISA for secondary school education, an assessment of learning outcomes should focus not on curricular knowledge and skills but, rather, on attributes commonly associated with higher education. There are certainly culturally specific elements in how generic academic skills are defined in specific contexts, but there are powerful similarities as well, more so in higher education than in school education. After all, changes in skill demand affect all economies, even though economies differ in their skills balance and their position in global value chains. Overcoming cultural bias and accommodating diverse institutional missions is a measurement challenge, not a conceptual barrier.

Another important methodological question is whether the assessment of students’ learning outcomes should be an absolute measurement of what students have learnt by the end of their studies or a relative assessment of progress, i.e. the ‘learning gain’ or value added by the teaching and learning process. The main argument for a value-added approach is the large differences in selectivity among institutions and programmes: universities can achieve excellent learning outcomes either through selective admission or through high value added in the teaching and learning process. That said, a value-added approach complicates assessment methodologically and logistically. If the overall purpose is to provide feedback to institutions and programmes that will help them improve their quality, a value-added approach seems mandatory. If, however, the overall purpose is to provide reliable information on the level of generic, 21st-century skills that a university’s students have acquired, an assessment of absolute levels of learning outcomes makes more sense.
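To make the selectivity argument concrete, the following Python sketch contrasts an absolute ranking with a simple value-added ranking. Everything in it is hypothetical: the three institutions, their entry and exit scores, and the use of a plain difference score (mean exit score minus mean entry score) as the ‘learning gain’. It is not the method used by the CLA+ or AHELO; operational value-added models are typically more elaborate, for example adjusting statistically for students’ entering ability.

```python
"""Hypothetical illustration: absolute outcomes vs a simple value-added measure.

All institution names and scores are invented for illustration only.
"""
from dataclasses import dataclass


@dataclass
class Institution:
    name: str
    mean_entry_score: float  # hypothetical mean score of entering students
    mean_exit_score: float   # hypothetical mean score of graduating students

    @property
    def gain(self) -> float:
        # Plain difference score used as a stand-in for 'learning gain';
        # real value-added models usually adjust for entering ability instead.
        return self.mean_exit_score - self.mean_entry_score


def rank_by(institutions, key):
    """Return institution names ordered from highest to lowest on `key`."""
    return [i.name for i in sorted(institutions, key=key, reverse=True)]


institutions = [
    Institution("Selective U", mean_entry_score=1200, mean_exit_score=1250),
    Institution("Open-Access U", mean_entry_score=950, mean_exit_score=1100),
    Institution("Regional U", mean_entry_score=1050, mean_exit_score=1150),
]

# Absolute measure: who graduates students with the highest scores?
absolute_ranking = rank_by(institutions, key=lambda i: i.mean_exit_score)
# -> ['Selective U', 'Regional U', 'Open-Access U']

# Value-added measure: who raises scores the most during the programme?
value_added_ranking = rank_by(institutions, key=lambda i: i.gain)
# -> ['Open-Access U', 'Regional U', 'Selective U']

print("Absolute:", absolute_ranking)
print("Value-added:", value_added_ranking)
```

With these made-up numbers the two rankings come out in reverse order: the most selective institution has the highest exit scores but the smallest gain. This is the kind of divergence that makes the choice between absolute and value-added measures depend on the purpose of the assessment.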

The failure of AHELO to establish itself as an international programme for the assessment of higher education learning outcomes did not make the idea itself disappear (Coates and Zlatkin-Troitschanskaia, 2019[42]; Coates, 2016[41]). Several international endeavours have continued. The Educational Testing Service (ETS) has promoted the implementation of its HEIghten suite of assessments, including a critical thinking assessment in China and India. The results of their assessment of the critical thinking skills of undergraduate science, technology, engineering and mathematics (STEM) students in China, India and Russia, compared with those in the United States, were published in Nature Human Behaviour in 2021 (Loyalka et al., 2021[57]). The data revealed limited learning gains in critical thinking in China and India over the course of a four-year bachelor’s programme, compared with the United States. Students in India demonstrated learning gains in academic skills in the first two years, while those in China did not. The project revealed strong differences in learning outcomes and skills development among undergraduate students in these four countries, with consequences for the global competitiveness of STEM students and graduates across nations and institutions.

The New York-based Council for Aid to Education (CAE), which provided the CLA assessment instrument for the generic skills strand of the AHELO Feasibility Study, upgraded its instrument to the CLA+. CAE has started working with countries and organisations outside the United States that are interested in assessing generic skills (Wolf, Zahner and Benjamin, 2015[40]; Zahner et al., 2021[37]). Italy was a pioneering and particularly interesting case: the national accreditation agency, ANVUR, administered the CLA+ instrument to a large sample of Italian university students as part of its TECO project (Zahner and Ciolfi, 2018[58]; see also Chapter 11 in this volume). Other countries, systems and institutions followed. The OECD, which did not have a mandate to pursue this initiative, provided a convening space and opportunities for interaction and co-ordination to the countries participating in this ‘CLA+ International Initiative’. This book brings together the data and analysis of systems that participated in this initiative between 2016 and 2021.

References

[49] AHELO (2013a), Assessment of Higher Education Learning Outcomes (AHELO) Feasibility Report, Vol. 2. Data analysis and national experiences, OECD, Paris, https://www.oecd.org/education/skills-beyond-school/AHELOFSReportVolume2.pdf.

[50] AHELO (2013b), Assessment of Higher Education Learning Outcomes (AHELO) Feasibility Report. Vol. 3. Further insights, OECD, Paris, https://www.oecd.org/education/skills-beyond-school/AHELOFSReportVolume3.pdf.

[55] Altbach, P. (2015), “AHELO: The Myth of Measurement and Comparability”, International Higher Education, No. 82, https://doi.org/10.6017/ihe.2015.82.8861.

[19] Arum, R. and J. Roksa (2014), Aspiring Adults Adrift: Tentative Transitions of College Graduates, University of Chicago Press, Chicago, IL.

[17] Arum, R. and J. Roksa (2011), Academically Adrift: Limited Learning on College Campuses, University of Chicago Press, Chicago, IL.

[18] Arum, R., J. Roksa and E. Cho (n.d.), Improving Undergraduate Learning: Findings and Policy Recommendations from the SSRC-CLA Longitudinal Project, Social Science Research Council (SSRC), https://s3.amazonaws.com/ssrc-cdn1/crmuploads/new_publication_3/%7BD06178BE-3823-E011-ADEF-001CC477EC84%7D.pdf.

[25] Autor, D. (2015), “Why Are There Still So Many Jobs? The History and Future of Workplace Automation”, Journal of Economic Perspectives, Vol. 29/3, pp. 3-30, https://doi.org/10.1257/jep.29.3.3.

[24] Autor, D. and B. Price (2013), The Changing Task Composition of the US Labor Market: An Update of Autor, Levy, and Murnane (2003), https://economics.mit.edu/files/9758 (accessed on 1 August 2022).

[31] Avvisati, F., G. Jacotin and S. Vincent-Lancrin (2014), “Educating Higher Education Students for Innovative Economies: What International Data Tell Us”, Tuning Journal for Higher Education, Vol. 1/1, pp. 223-240, https://doi.org/10.18543/tjhe-1(1)-2013pp223-240.

[3] Barone, C. and L. Ortiz (2011), “Overeducation among European University Graduates: A comparative analysis of its incidence and the importance of higher education differentiation”, Higher Education, Vol. 61/3, pp. 325-337, https://doi.org/10.1007/s10734-010-9380-0.

[10] Blackmur, D. (2007), “The Public Regulation of Higher Education Qualities: Rationale, Processes, and Outcomes”, in Westerheijden, D. (ed.), Higher Education Dynamics, Springer, Dordrecht, https://doi.org/10.1007/978-1-4020-6012-0_1.

[34] Blömeke, S. et al. (2013), Modeling and measuring competencies in higher education: Tasks and challenges, Sense, Rotterdam, https://doi.org/10.1007/978-94-6091-867-4.

[68] Brüning, N. and P. Mangeol (2020), “What skills do employers seek in graduates?: Using online job posting data to support policy and practice in higher education”, OECD Education Working Papers, No. 231, https://doi.org/10.1787/bf533d35-en.

[41] Coates, H. (2016), “Assessing student learning outcomes internationally: insights and frontiers”, Assessment & Evaluation in Higher Education, Vol. 41/5, pp. 662-676, https://doi.org/10.1080/02602938.2016.1160273.

[46] Coates, H. and S. Richardson (2012), “An international assessment of bachelor degree graduates’ learning outcomes”, Higher Education Management and Policy, Vol. 23/3, https://doi.org/10.1787/hemp-23-5k9h5xkx575c.

[42] Coates, H. and O. Zlatkin-Troitschanskaia (2019), “The Governance, Policy and Strategy of Learning Outcomes Assessment in Higher Education”, Higher Education Policy, Vol. 32/4, pp. 507-512, https://doi.org/10.1057/s41307-019-00161-1.

[26] Deming, D. (2017), “The growing importance of social skills in the labor market”, Quarterly Journal of Economics, Vol. 132/4, pp. 1593-1640, https://doi.org/10.1093/qje/qjx022.

[9] Dill, D. and M. Soo (2004), “Transparency and Quality in Higher Education Markets”, in Teixeira, P. et al. (eds.), Higher Education Dynamics, Kluwer, https://doi.org/10.1007/1-4020-2835-0_4.

[38] Douglass, J., G. Thomson and C. Zhao (2012), “The learning outcomes race: The value of self-reported gains in large research universities”, Higher Education, Vol. 64/3, pp. 317-335, https://doi.org/10.1007/s10734-011-9496-x.

[59] Economist (2015), “Having it All, Special Report on Universities”, http://www.economist.com/news/special-report/21646990-ideas-deliveringequity-well-excellence-having-it-all (accessed on 11 August 2015).

[47] Ewell, P. (2012), “A World of Assessment: OECD’s AHELO Initiative”, Change: The Magazine of Higher Learning, Vol. 44/5, pp. 35-42, https://doi.org/10.1080/00091383.2012.706515.

[27] Fernandez, F. and H. Liu (2019), “Examining relationships between soft skills and occupational outcomes among U.S. adults with—and without—university degrees”, Journal of Education and Work, Vol. 32/8, pp. 650-664, https://doi.org/10.1080/13639080.2019.1697802.

[23] Gallagher, S. (2018), Educational credentials come of age: a survey on the use and value of educational credentials in hiring, Northeastern University, https://cps.northeastern.edu/wp-content/uploads/2021/03/Educational_Credentials_Come_of_Age_2018.pdf.

[32] Hart Research Associates (2013), It takes more than a major: Employer priorities for college learning and student success, Washington, DC, https://www.aacu.org/leap/documents/2013_EmployerSurvey.pdf.

[39] Hattie, J. (2009), “The black box of tertiary assessment: An impending revolution”, in Meyer, L. et al. (eds.), Tertiary Assessment & Higher Education Outcomes: Policy, Practice & Research, pp. 259-275, Ako Aotearoa, Wellington, New Zealand.

[14] Hazelkorn, E. (2014), “Reflections on a Decade of Global Rankings: What we’ve learned and outstanding issues”, European Journal of Education, Vol. 49/1, pp. 12-28, https://doi.org/10.1111/ejed.12059.

[13] Hazelkorn, E. (2011), Rankings and the reshaping of higher education: The battle for world-class excellence, Palgrave Macmillan, Basingstoke, https://doi.org/10.1057/9780230306394.

[33] Kearns, P. (2001), Review of Research: Generic Skills for the New Economy, NCVER, Adelaide, https://www.ncver.edu.au/research-and-statistics/publications/all-publications/generic-skills-for-the-new-economy-review-of-research (accessed on 1 August 2022).

[15] Kehm, B. and B. Stensaker (2009), University Rankings, Diversity and the New Landscape of Higher Education, Global Perspectives on Higher Education, Vol. 18, Sense, Rotterdam.

[64] Klein, S. et al. (2007), “The collegiate learning assessment: Facts and fantasies”, Evaluation Review, Vol. 31/5, pp. 415-439, https://doi.org/10.1177/0193841X07303318.

[63] Klein, S. et al. (2005), “An Approach to Measuring Cognitive Outcomes across Higher Education Institutions”, Research in Higher Education, Vol. 46/3, pp. 251-276, https://www.jstor.org/stable/40197345.

[22] Koumenta, M. and M. Pagliero (2017), Measuring Prevalence and Labour Market Impacts of Occupational Regulation in the EU, European Commission, Brussels, https://ec.europa.eu/docsroom/documents/20362/attachments/1/translations/en/renditions/native.

[44] Liu, O. et al. (2016), “Assessing critical thinking in higher education: the HEIghten™ approach and preliminary validity evidence”, Assessment & Evaluation in Higher Education, Vol. 41/5, pp. 677-694, https://doi.org/10.1080/02602938.2016.1168358.

[57] Loyalka, P. et al. (2021), “Skill levels and gains in university STEM education in China, India, Russia and the United States”, Nature Human Behaviour, Vol. 5/7, pp. 892-904, https://doi.org/10.1038/s41562-021-01062-3.

[60] Rostan, M. and M. Vaira (eds.) (2011), “The New World Order in Higher Education”, in Questioning Excellence in Higher Education, Vol. 3, pp. 3-20.

[69] Marcolin, L., S. Miroudot and M. Squicciarini (2016), “The Routine Content Of Occupations: New Cross-Country Measures Based On PIAAC”, OECD Trade Policy Papers, No. 188, https://doi.org/10.1787/5jm0mq86fljg-en.

[28] Marcolin, L., S. Miroudot and M. Squicciarini (2016), “The Routine Content Of Occupations: New Cross-Country Measures Based On PIAAC”, OECD Trade Policy Papers, No. 188, OECD Publishing, Paris, https://doi.org/10.1787/5jm0mq86fljg-en.

[70] Marginson, S. (2019), “Limitations of human capital theory”, Studies in Higher Education, Vol. 44/2, pp. 287-301, https://doi.org/10.1080/03075079.2017.1359823.

[8] Marginson, S. (2013), “The impossibility of capitalist markets in higher education”, Journal of Education Policy, Vol. 28/3, pp. 353-370, https://doi.org/10.1080/02680939.2012.747109.

[36] Montt, G. (2015), “The causes and consequences of field-of-study mismatch: An analysis using PIAAC”, OECD Social, Employment and Migration Working Papers, No. 167, OECD Publishing, Paris, https://doi.org/10.1787/5jrxm4dhv9r2-en.

[52] Morgan, J. (2015a), “OECD’s AHELO Project Could Transform University Hierarchy”, Times Higher Education, https://www.timeshighereducation.co.uk/news/oecds-ahelo-project-could-transform-university-hierarchy/2020087.article.

[53] Morgan, J. (2015b), “World’s University ‘Oligopoly’ Accused of Blocking OECD Bid to Judge Learning Quality”, Times Higher Education, https://www.timeshighereducation.co.uk/news/world%E2%80%99s-university-%E2%80%98oligopoly%E2%80%99-accused-blocking-oecd-bid-judge-learning-quality.

[43] Nusche, D. (2008), “Assessment of Learning Outcomes in Higher Education: a comparative review of selected practices”, OECD Education Working Papers, No. 15, OECD Publishing, Paris, https://doi.org/10.1787/244257272573.

[1] OECD (2021), Education at a Glance 2021: OECD Indicators, OECD Publishing, Paris, https://doi.org/10.1787/b35a14e5-en.

[35] OECD (2018), Good Jobs for All in a Changing World of Work: The OECD Jobs Strategy, OECD Publishing, Paris, https://doi.org/10.1787/9789264308817-en.

[29] OECD (2017), OECD Science, Technology and Industry Scoreboard 2017: The digital transformation, OECD Publishing, Paris, https://doi.org/10.1787/9789264268821-en.

[2] OECD (2015), “How is the global talent pool changing (2013, 2030)?”, Education Indicators in Focus, No. 31, OECD Publishing, Paris, https://doi.org/10.1787/5js33lf9jk41-en.

[73] OECD (2013), OECD Skills Outlook 2013: First Results from the Survey of Adult Skills, OECD Publishing, Paris, https://www.oecd-ilibrary.org/education/oecd-skills-outlook-2013_9789264204256-en.

[65] OECD (ed.) (2009), The New Global Landscape of Nations and Institutions, OECD Publishing, Paris.

[61] OECD (2019b), Benchmarking Higher Education System Performance, Higher Education, OECD Publishing, Paris, https://doi.org/10.1787/be5514d7-en.

[30] OECD (2019a), OECD Skills Outlook 2019: Thriving in a Digital World, OECD Publishing, Paris, https://doi.org/10.1787/df80bc12-en.

[7] Paccagnella, M. (2016), “Literacy and Numeracy Proficiency in IALS, ALL and PIAAC”, OECD Education Working Papers, No. 142, OECD Publishing, Paris, https://doi.org/10.1787/5jlpq7qglx5g-en.

[6] Paccagnella, M. (2015), “Skills and Wage Inequality: Evidence from PIAAC”, OECD Education Working Papers, No. 114, OECD Publishing, Paris, https://doi.org/10.1787/5js4xfgl4ks0-en.

[66] Rosen, Y., S. Ferrara and M. Mosharraf (eds.) (2016), Mitigation of Test Bias in International, Cross-National Assessments of Higher-Order Thinking Skills, IGI Global, Hershey, PA, https://doi.org/10.4018/978-1-4666-9441-5.ch018.

[71] Saavedra, A. and J. Saavedra (2011), “Do colleges cultivate critical thinking, problem solving, writing and interpersonal skills?”, Economics of Education Review, Vol. 30/6, pp. 1516-1526, https://doi.org/10.1016/j.econedurev.2011.08.006.

[21] Sherriff, L. (2016), “Penguin Random House Publishers Has Just Announced It’s Scrapping Degree Requirements For Its Jobs”, The Huffington Post UK, https://www.huffingtonpost.co.uk/2016/01/18/penguins-random-house-scrapping-!degree-requirements-jobs_n_9007288.html?1453113478 (accessed on 18 January 2016).

[20] Sherriff, L. (2015), “Ernst & Young Removes University Degree Classification From Entry Criteria As There’s ’No Evidence’ It Equals Success”, The Huffington Post UK, https://www.huffingtonpost.co.uk/2016/01/07/ernst-and-young-removes-degree-classification-entry-criteria_n_7932590.html (accessed on 7 January 2016).

[4] The Economist (2016), “Going to university is more important than ever for young people. But the financial returns are falling”, The Economist, https://www.economist.com/international/2018/02/03/going-to-university-is-more-important-than-ever-for-young-people (accessed on 3 February 2016).

[48] Tremblay, K., D. Lalancette and D. Roseveare (2012), “Assessment of Higher Education Learning Outcomes (AHELO) Feasibility Study”, Feasibility study report, Vol. 1, https://www.oecd.org/education/skills-beyond-school/AHELOFSReportVolume1.pdf (accessed on 1 August 2022).

[54] Usher, A. (2015), “Universities Behaving Badly”, Inside Higher Education, https://www.insidehighered.com/blogs/world-view/universities-behaving-badly.

[12] Uttl, B., C. White and D. Gonzalez (2017), “Meta-analysis of faculty’s teaching effectiveness: Student evaluation of teaching ratings and student learning are not related”, Studies in Educational Evaluation, Vol. 54, pp. 22-42, https://doi.org/10.1016/j.stueduc.2016.08.007.

[56] Van Damme, D. (2021), “Transforming Universities for a Sustainable Future”, in The Promise of Higher Education (pp. 431-438), Springer International Publishing, https://doi.org/10.1007/978-3-030-67245-4_64.

[5] Van Damme, D. (2019), “Convergence and Divergence in the Global Higher Education System: The Conflict between Qualifications and Skills”, International Journal of Chinese Education, Vol. 8/1, pp. 7-24, https://doi.org/10.1163/22125868-12340102.

[51] Van Damme, D. (2015), “Global higher education in need of more and better learning metrics. Why OECD’s AHELO project might help to fill the gap”, European Journal of Higher Education, Vol. 5/4, pp. 425-436, https://doi.org/10.1080/21568235.2015.1087870.

[74] Van Damme, D. (2009), “The Search for Transparency: Convergence and Diversity in the Bologna Process”, in F. Van Vught (ed.), Mapping the higher education landscape: towards a European classification of higher education, Springer, Dordrecht, https://doi.org/10.1007/978-90-481-2249-3_3.

[16] van Vught, F. (2008), “Mission Diversity and Reputation in Higher Education”, Higher Education Policy, Vol. 21/2, pp. 151-174, https://doi.org/10.1057/hep.2008.5.

[11] Van Vught, F. and F. Ziegele (eds.) (2012), Transparency, Quality and Accountability, Higher Education Dynamics 37, Springer, Dordrecht.

[75] Vincent-Lancrin, S. (2019), Fostering Students’ Creativity and Critical Thinking: What it Means in School, Educational Research and Innovation, OECD Publishing, Paris, https://doi.org/10.1787/62212c37-en.

[45] Wagenaar, R. (2019), Reform! Tuning the Modernisation Process of Higher Education in Europe: A blueprint for student-centred learning, Tuning Academy, Groningen, https://research.rug.nl/en/publications/reform-tuning-the-modernisation-process-of-higher-education-in-eu-2.

[62] Weingarten, H., M. Hicks and A. Kaufman (eds.) (2018), The Role of Generic Skills in Measuring Academic Quality, McGill-Queen’s UP, Kingston, ON.

[40] Wolf, R., D. Zahner and R. Benjamin (2015), “Methodological challenges in international comparative post-secondary assessment programs: lessons learned and the road ahead”, Studies in Higher Education, Vol. 40/3, pp. 471-481, https://doi.org/10.1080/03075079.2015.1004239.

[58] Zahner, D. and A. Ciolfi (2018), “International Comparison of a Performance-Based Assessment in Higher Education”, in Assessment of Learning Outcomes in Higher Education, Methodology of Educational Measurement and Assessment, Springer International Publishing, Cham, https://doi.org/10.1007/978-3-319-74338-7_11.

[37] Zahner, D. et al. (2021), “Measuring the generic skills of higher education students and graduates: Implementation of CLA+ international”, in Assessing undergraduate learning in psychology: Strategies for measuring and improving student performance, American Psychological Association, Washington, DC, https://doi.org/10.1037/0000183-015.

[72] Zlatkin-Troitschanskaia, O., H. Pant and H. Coates (2016), “Assessing student learning outcomes in higher education: challenges and international perspectives”, Assessment & Evaluation in Higher Education, Vol. 41/5, https://doi.org/10.1080/02602938.2016.1169501.

[67] Zlatkin-Troitschanskaia, O., R. Shavelson and C. Kuhn (2015), “The international state of research on measurement of competency in higher education”, Studies in Higher Education, Vol. 40/3, pp. 393-411, https://doi.org/10.1080/03075079.2015.1004241.