The results of the PISA 2022 test are reported on a numerical scale consisting of PISA score points. This section summarises the test-development and scaling procedures used to ensure that PISA score points are comparable across countries and with the results of previous PISA assessments.

PISA 2022 Results (Volume IV)

Annex A1. The construction of reporting scales and indices

The construction of reporting scales

Assessment framework and test development

The first step in defining a reporting scale in PISA is developing a framework for each domain assessed. This framework provides a definition of what it means to be proficient in the domain; delimits and organises the domain according to different dimensions; and suggests the kind of test items and tasks that can be used to measure what students can do in the domain within the constraints of the PISA design (OECD, 2023[1]). These frameworks were developed by a group of international experts for each domain and agreed upon by the participating countries.

The second step is the development of the test questions (i.e. items) to assess proficiency in each domain. A consortium of testing organisations under contract to the OECD on behalf of participating governments develops new items and selects items from previous PISA tests (i.e. “trend items”) of the same domain. The expert group that developed the framework reviews these proposed items to confirm that they meet the requirements and specifications of the framework.

The third step is a qualitative review of the testing instruments by all participating countries and economies to ensure the items’ overall quality and appropriateness in their own national context. These ratings are considered when selecting the final pool of items for the assessment. Selected items are then translated and adapted to create national versions of the testing instruments. These national versions are verified by the PISA consortium.

The verified national versions of the items are then presented to a sample of 15-year-old students in all participating countries and economies as part of a field trial. This is to ensure that they meet stringent quantitative standards of technical quality and international comparability. In particular, the field trial serves to verify the psychometric equivalence of items across countries and economies (for more information, see Annex A6 of Volume I).

After the field trial, material is considered for rejection, revision or retention in the pool of potential items. The international expert group for each domain then formulates recommendations as to which items should be included in the main assessments. The final set of selected items is also subject to review by all countries and economies. This selection is balanced across the various dimensions specified in the framework and spans various levels of difficulty so that the entire pool of items measures performance across all component skills and a broad range of contexts and student abilities.

Proficiency scales

Proficiency scores in mathematics, reading, science and also in financial literacy are based on student responses to items that represent the assessment framework for each domain (see section above). While different students saw different questions, the test design, which ensured a significant overlap of items across different forms, made it possible to construct proficiency scales that are common to all students for each domain. In general, the PISA frameworks assume that a single continuous scale can be used to report overall proficiency in a domain but this assumption is further verified during scaling (see section below).

PISA proficiency scales are constructed using item-response-theory models in which the likelihood that the test-taker responds correctly to any question is a function of the question’s characteristics and of the test-taker’s position on the scale. In other words, the test-taker’s proficiency is associated with a particular point on the scale that indicates the likelihood that he or she responds correctly to any question. Higher values on the scale indicate greater proficiency, which is equivalent to a greater likelihood of responding correctly to any question. A description of the modelling technique used to construct proficiency scales can be found in the PISA 2022 Technical Report.

In the item-response-theory models used in PISA, the test items’ characteristics are summarised by two parameters that represent task difficulty and task discrimination. The first parameter, task difficulty, is the point on the scale where there is at least a 50% probability of a correct response by students who score at or above that point; higher values correspond to more difficult items. For the purpose of describing proficiency levels that represent mastery, PISA often reports the difficulty of a task as the point on the scale where there is at least a 62% probability of a correct response by students who score at or above that point.

The second parameter, task discrimination, represents the rate at which the proportion of correct responses increases as a function of student proficiency. For an idealised highly discriminating item, close to 0% of students respond correctly if their proficiency is below the item difficulty and close to 100% of students respond correctly as soon as their proficiency is above the item difficulty. In contrast, for weakly discriminating items, the probability of a correct response still increases as a function of student proficiency, but only gradually.
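The roles of the two parameters can be illustrated with a minimal sketch. It assumes a standard two-parameter logistic response function with no additional scaling constant. The text above does not give the functional form, so this is an assumption, and the exact model is documented in the PISA 2022 Technical Report. The function names and item values are hypothetical, and the scale is an arbitrary logit scale rather than PISA score points.

```python
import math

def p_correct(theta, difficulty, discrimination):
    """Probability of a correct response under an assumed 2PL logistic model."""
    return 1.0 / (1.0 + math.exp(-discrimination * (theta - difficulty)))

def location_at(prob, difficulty, discrimination):
    """Scale point at which the probability of a correct response equals prob."""
    return difficulty + math.log(prob / (1.0 - prob)) / discrimination

# Hypothetical item: difficulty 0.0, compared under weak and strong discrimination.
difficulty = 0.0
weak, strong = 0.5, 3.0

# At the item difficulty the probability is 50% whatever the discrimination.
print(p_correct(difficulty, difficulty, strong))  # 0.5

# Half a unit above the difficulty, the strongly discriminating item is
# answered correctly far more often than the weakly discriminating one.
print(p_correct(0.5, difficulty, weak), p_correct(0.5, difficulty, strong))

# Point on the scale where the probability of success reaches 62%.
print(location_at(0.62, difficulty, weak))
```

Under this assumed form, the 62% location always lies above the 50% difficulty, and the gap between the two shrinks as discrimination increases.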

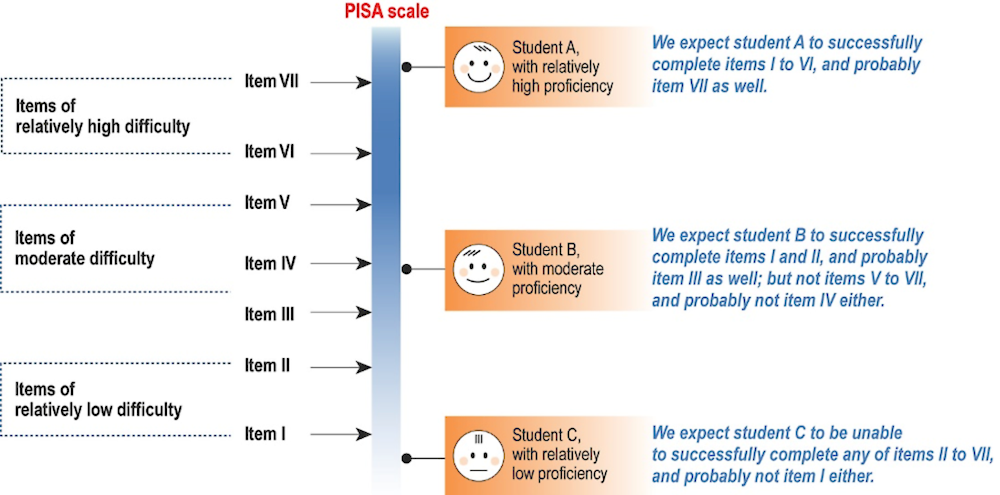

A single continuous scale can therefore show both the difficulty of questions and the proficiency of test-takers (see Figure IV.A1.1). By showing the difficulty of each question on this scale, it is possible to locate the level of proficiency in the domain that the question demands. By showing the proficiency of test-takers on the same scale, it is possible to describe each test-taker’s level of skill or literacy by the type of tasks that he or she can perform correctly most of the time.

Figure IV.A1.1. Relationship between questions and student performance on a scale

Estimates of student proficiency are based on the kinds of tasks students are expected to perform successfully. This means that students are likely to be able to successfully answer questions located at or below the level of difficulty associated with their own position on the scale. Conversely, they are unlikely to be able to successfully answer questions above the level of difficulty associated with their position on the scale (see Note 1).

The higher a student’s proficiency level is located above a given test question, the more likely he or she can answer the question successfully. The discrimination parameter for this particular test question indicates how quickly the likelihood of a correct response increases. The further the student’s proficiency is located below a given question, the less likely he or she is able to answer the question successfully. In this case, the discrimination parameter indicates how fast this likelihood decreases as the distance between the student’s proficiency and the question’s difficulty increases.

How reporting scales are set and linked across multiple assessments

The reporting scale for financial literacy was established when the domain was assessed in PISA for the first time, that is, in PISA 2012.

The item-response-theory models used in PISA describe the relationship between student proficiency, item difficulty and item discrimination, but do not set a measurement unit for any of these parameters. In PISA, this measurement unit was chosen the first time a reporting scale was established. The score of “500” on the scale was defined as the average proficiency of students across OECD countries; “100 score points” was defined as the standard deviation (a measure of the variability) of proficiency across OECD countries (see Note 2).
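As a rough illustration of how such a measurement unit can be fixed, the sketch below linearly transforms proficiency estimates so that a pooled reference sample, in which every country carries the same total weight, has a mean of 500 and a standard deviation of 100. The function name and input layout are hypothetical, and students within a country are weighted equally for simplicity, which is an assumption: the operational procedure uses student sampling weights. In PISA the transformation was set once, when the scale was first established, and is not re-derived in each cycle.

```python
import math

def to_score_points(theta_by_country):
    """Rescale proficiency estimates to mean 500 and SD 100 in a pooled
    sample where each country contributes the same total weight."""
    n_countries = len(theta_by_country)
    weighted = []
    for values in theta_by_country.values():
        # Each country's total weight of 1/n_countries is shared by its students.
        weight = 1.0 / (n_countries * len(values))
        weighted.extend((value, weight) for value in values)

    mean = sum(value * weight for value, weight in weighted)
    sd = math.sqrt(sum(weight * (value - mean) ** 2 for value, weight in weighted))

    return {
        country: [500.0 + 100.0 * (value - mean) / sd for value in values]
        for country, values in theta_by_country.items()
    }

# Hypothetical proficiency estimates on an arbitrary scale.
scores = to_score_points({"A": [-1.0, 0.0, 1.0], "B": [0.5, 1.5]})
print(scores)
```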

To enable the measurement of trends, achievement data from successive assessments are reported on the same scale. It is possible to report results from different assessments on the same scale because in each assessment PISA retains a significant number of items from previous PISA assessments. These are known as trend items. A significant number of items used to assess financial literacy (41 out of 46) were developed and already used in earlier assessments. Their difficulty and discrimination parameters were therefore already estimated in previous PISA assessments.

The answers to the trend questions from students in earlier PISA cycles, together with the answers from students in PISA 2022, were both considered when scaling PISA 2022 data to determine student proficiency, item difficulty and item discrimination. In particular, when scaling PISA 2022 data, item parameters for new items were freely estimated, but item parameters for trend items were initially fixed to their PISA 2018 values, which, in turn, were based on a concurrent calibration involving response data from multiple cycles. All constraints on trend item parameters were evaluated and, in some cases, released in order to better describe student-response patterns. See the PISA 2022 Technical Report for details.

The extent to which the item characteristics estimated during the scaling of PISA 2018 data differ from those estimated in previous calibrations is summarised in the “link error”, a quantity (expressed in score points) that reflects the uncertainty in comparing PISA results over time. A link error of zero indicates a perfect match in the parameters across calibrations, while a non-zero link error indicates that the relative difficulty of certain items or the ability of certain items to discriminate between high and low achievers has changed over time, introducing greater uncertainty in trend comparisons.

Summary descriptions of the proficiency levels of financial literacy

Table IV.A1.1 provides summary descriptions of proficiency levels of the financial literacy scale.

PISA 2022 results on financial literacy scales are included in Annex B1 (for countries and economies) and Annex B2 (for regions within countries).

Table IV.A1.1. Proficiency levels on the financial literacy scale

| Level | Lower score limit | Percentage of students able to perform tasks at each level or above (OECD average) | What students can typically do |
|---|---|---|---|
| 5 | 625 | 10.6 | Students can apply their understanding of a wide range of financial terms and concepts to contexts that may only become relevant to their lives in the long term. They can analyse complex financial products and can take into account features of financial documents that are significant but unstated or not immediately evident, such as transaction costs. They can work with a high level of accuracy and solve non-routine financial problems, and they can describe the potential outcomes of financial decisions, showing an understanding of the wider financial landscape, such as income tax. |
| 4 | 550 | 32.0 | Students can apply their understanding of less common financial concepts and items to contexts that will be relevant to them as they move towards adulthood, such as bank account management and compound interest in savings products. They can interpret and evaluate a range of detailed financial documents, such as bank statements, and explain the functions of less commonly used financial products. They can make financial decisions taking into account longer-term consequences, such as understanding the overall cost implication of paying back a loan over a longer period, and they can solve routine problems in less common financial contexts. |
| 3 | 475 | 59.6 | Students can apply their understanding of commonly used financial concepts, terms, and products to situations that are relevant to them. They begin to consider the consequences of financial decisions and they can make simple financial plans in familiar contexts. They can make straightforward interpretations of a range of financial documents and can apply a range of basic numerical operations, including calculating percentages. They can choose the numerical operations needed to solve routine problems in relatively common financial literacy contexts, such as budget calculations. |
| 2 | 400 | 82.1 | Students begin to apply their knowledge of common financial products and commonly used financial terms and concepts. They can use given information to make financial decisions in contexts that are immediately relevant to them. They can recognise the value of a simple budget and can interpret prominent features of everyday financial documents. They can apply single basic numerical operations, including division, to answer financial questions. They show an understanding of the relationships between different financial elements, such as the amount of use and the costs incurred. |
| 1 | 326 | 95.0 | Students can identify common financial products and terms and interpret information relating to basic financial concepts. They can recognise the difference between needs and wants and can make simple decisions on everyday spending. They can recognise the purpose of everyday financial documents, such as an invoice, and apply single and basic numerical operations (addition, subtraction or multiplication) in financial contexts that they are likely to have experienced personally. |
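The lower score limits in Table IV.A1.1 can be turned into a simple lookup. The sketch below is illustrative only: the function name is hypothetical, a score exactly at a lower limit is assumed to belong to that level, and a return value of 0 is used here to mean "below Level 1", a label the table itself does not define. The published limits are rounded, so scores very close to a boundary may be classified differently in the official data.

```python
from bisect import bisect_right

# Lower score limits for Levels 1 to 5, taken from Table IV.A1.1.
LOWER_LIMITS = [326, 400, 475, 550, 625]

def proficiency_level(score):
    """Return the proficiency level (1 to 5), or 0 if below the Level 1 limit."""
    return bisect_right(LOWER_LIMITS, score)

print(proficiency_level(512))  # 3
print(proficiency_level(300))  # 0
```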

Indices from the student context questionnaire

In addition to scale scores representing performance in financial literacy, this volume uses indices derived from the PISA student questionnaires to contextualise PISA 2022 results or to estimate trends that account for demographic changes over time. The following indices and database variables are used in this report.

The PISA index of economic, social and cultural status (ESCS)

The PISA index of economic, social and cultural status (ESCS) is a composite score derived, as in previous cycles, from three variables related to family background: parents’ highest level of education in years (PAREDINT), parents’ highest occupational status (HISEI) and home possessions (HOMEPOS).

Parents’ highest level of education in years: Students’ responses to questions ST005, ST006, ST007 and ST008 regarding their parents’ education were classified using ISCED-11 (UNESCO Institute for Statistics, 2012[2]). Indices on parental education were constructed by recoding educational qualifications into the following categories: (1) ISCED Level 02 (pre-primary education), (2) ISCED Level 1 (primary education), (3) ISCED Level 2 (lower secondary), (4) ISCED Level 3.3 (upper secondary education with no direct access to tertiary education), (5) ISCED Level 3.4 (upper secondary education with direct access to tertiary education), (6) ISCED Level 4 (post-secondary non-tertiary), (7) ISCED Level 5 (short-cycle tertiary education), (8) ISCED Level 6 (Bachelor’s or equivalent), (9) ISCED Level 7 (Master’s or equivalent) and (10) ISCED Level 8 (Doctoral or equivalent). Indices with these categories were provided for a student’s mother (MISCED) and father (FISCED). In the event that student responses between ST005 and ST006 (for mother’s education) or between ST007 and ST008 (for father’s education) conflicted (e.g. if a student indicated in ST006 that their parent had a post-secondary qualification but indicated in ST005 that the parent had not completed lower secondary education), the higher education value provided by the student was used. This differs from the PISA 2018 procedure, where the lower value was used. In addition, the index of highest education level of parents (HISCED) corresponded to the higher ISCED level of either parent. The index of highest education level of parents was also recoded into estimated number of years of schooling (PAREDINT). The conversion from ISCED levels to years of education is common to all countries. This international conversion was determined by using the cumulative years of education values assigned in PISA 2018 to each ISCED level. The correspondence is available in the PISA 2022 Technical Report (OECD, 2023[3]).

To make PAREDINT scores for PISA 2012, PISA 2015 and PISA 2018 comparable to PAREDINT scores for PISA 2022, new PAREDINT scores were created for each student who participated in previous cycles using the coding scheme used in PISA 2022. These new PAREDINT scores were used in the computation of trend ESCS scores.

Parents’ highest occupational status: Occupational data for both the student’s father and the student’s mother were obtained from responses to open-ended questions. The responses were coded to four-digit ISCO codes (ILO, 2007) and then mapped to the international socio-economic index of occupational status (ISEI) (Ganzeboom and Treiman, 2003[4]). In PISA 2022, the ISCO and ISEI in their 2008 version were used. Three indices were calculated based on this information: father’s occupational status (BFMJ2); mother’s occupational status (BMMJ1); and the highest occupational status of parents (HISEI), which corresponds to the higher ISEI score of either parent or to the only available parent’s ISEI score. For all three indices, higher ISEI scores indicate higher levels of occupational status.
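The rule for HISEI (the higher ISEI score of either parent, or the only available parent’s score) can be written as a small helper. This is a minimal sketch: the function name is hypothetical, and missing values are represented by `None`, which is an assumption about the data layout rather than a description of the PISA database.

```python
from typing import Optional

def highest_of_parents(mother: Optional[float], father: Optional[float]) -> Optional[float]:
    """Return the higher of two parental values, the only available one,
    or None when both are missing."""
    available = [value for value in (mother, father) if value is not None]
    return max(available) if available else None

print(highest_of_parents(30.0, 65.0))  # 65.0
print(highest_of_parents(None, 42.0))  # 42.0
```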

Home possessions (HOMEPOS) is a proxy measure for family wealth. In PISA 2022, students reported the availability of household items at home, including books at home and country-specific household items that were seen as appropriate measures of family wealth within the country’s context. HOMEPOS is a summary index of all household and possession items (ST250, ST251, ST253, ST254, ST255, ST256). Some HOMEPOS items used in PISA 2018 were removed in PISA 2022 while new ones were added (e.g. new items developed specifically with low-income countries in mind). Furthermore, some HOMEPOS items that were previously dichotomous (yes/no) were revised to polytomous items (1, 2, 3, etc.), allowing greater variation in responses to be captured.

For the purpose of computing the PISA index of economic, social and cultural status (ESCS), values for students with missing PAREDINT, HISEI or HOMEPOS were imputed with predicted values plus a random component based on a regression on the other two variables. If there were missing data on more than one of the three variables, ESCS was not computed and a missing value was assigned for ESCS.

In PISA 2022, ESCS was computed by attributing equal weight to the three standardised components. The three components were standardised across the OECD countries, with each OECD country contributing equally. The final ESCS variable was transformed, with 0 the score of an average OECD student and 1 the standard deviation across equally weighted OECD countries.
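The equal-weight composite described above can be sketched as follows. The sketch makes several assumptions that are not in the text: all records come from OECD countries, students within a country are weighted equally (the operational procedure uses sampling weights), and the single-variable imputation has already been applied, so any component still missing (`None`) means that more than one component was missing and ESCS is left missing. Function and field names other than PAREDINT, HISEI and HOMEPOS are hypothetical.

```python
import math
from collections import Counter

COMPONENTS = ("PAREDINT", "HISEI", "HOMEPOS")

def standardise_equal_country_weight(values, countries):
    """z-standardise values so that each country with valid data carries the
    same total weight; missing values (None) stay missing."""
    valid = [(v, c) for v, c in zip(values, countries) if v is not None]
    n_valid = Counter(c for _, c in valid)
    weight = {c: 1.0 / (len(n_valid) * n) for c, n in n_valid.items()}
    mean = sum(v * weight[c] for v, c in valid)
    sd = math.sqrt(sum(weight[c] * (v - mean) ** 2 for v, c in valid))
    return [None if v is None else (v - mean) / sd for v in values]

def escs_sketch(records):
    """Average the three standardised components with equal weight, then
    transform the result to mean 0 and standard deviation 1."""
    countries = [r["country"] for r in records]
    z = {
        name: standardise_equal_country_weight([r[name] for r in records], countries)
        for name in COMPONENTS
    }
    composite = []
    for i in range(len(records)):
        parts = [z[name][i] for name in COMPONENTS]
        # Any remaining missing component leaves ESCS missing (see assumptions).
        composite.append(None if None in parts else sum(parts) / len(parts))
    return standardise_equal_country_weight(composite, countries)

# Hypothetical records for two countries.
records = [
    {"country": "A", "PAREDINT": 12, "HISEI": 40, "HOMEPOS": -0.5},
    {"country": "A", "PAREDINT": 16, "HISEI": 70, "HOMEPOS": 0.8},
    {"country": "A", "PAREDINT": None, "HISEI": None, "HOMEPOS": 0.1},
    {"country": "B", "PAREDINT": 9, "HISEI": 25, "HOMEPOS": -1.2},
    {"country": "B", "PAREDINT": 14, "HISEI": 55, "HOMEPOS": 0.3},
]
print(escs_sketch(records))
```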

Immigrant background (IMMIG)

Information on the country of birth of the students and their parents was collected. Included in the database are three country-specific variables relating to the country of birth of the student, mother and father (ST019). The variables are binary and indicate whether the student, mother and father were born in the country of assessment or elsewhere. The index on immigrant background (IMMIG) is calculated from these variables, and has the following categories: (1) native students (those students who had at least one parent born in the country); (2) second-generation students (those born in the country of assessment but whose parent[s] were born in another country); and (3) first-generation students (those students born outside the country of assessment and whose parents were also born in another country). Students with missing responses for either the student or for both parents were given missing values for this variable.
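The three IMMIG categories and the missing-value rule can be expressed as a short classification function. This is a sketch under stated assumptions: the function and argument names are hypothetical, each argument is `True` if the person was born in the country of assessment, `False` if born elsewhere and `None` if missing, and when only one parent’s information is available the student is classified on that parent alone (the text specifies only that a missing student response or two missing parent responses yield a missing value).

```python
from typing import Optional

def immig(student_native: Optional[bool],
          mother_native: Optional[bool],
          father_native: Optional[bool]) -> Optional[int]:
    """Return 1 (native), 2 (second-generation), 3 (first-generation) or None."""
    parents = [p for p in (mother_native, father_native) if p is not None]
    if student_native is None or not parents:
        return None  # student missing, or both parents missing
    if any(parents):
        return 1     # at least one parent born in the country of assessment
    return 2 if student_native else 3

print(immig(True, False, False))   # 2
print(immig(False, False, False))  # 3
```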

Language spoken at home (ST022)

Students indicated what language they usually spoke at home, and the database includes an internationally comparable variable (ST022Q01TA) that was derived from this information and has the following categories: (1) language at home is the same as the language of assessment for that student; (2) language at home is another language.

The mappings of options provided in national versions of the student questionnaire for the two possible values for the “International Language at Home” variable (ST022Q01TA) are the responsibility of national PISA centres. For example, for students in the Flemish community of Belgium, “Flemish dialect” was considered (together with “Dutch”) as equivalent to the “Language of test”; for students in the French Community and German-speaking Community (respectively), Walloon (a French dialect) and a German dialect were considered to be equivalent to “Another language”.

Indices from the financial literacy questionnaire

In addition to the indices derived from the PISA student questionnaires, this volume also uses indices derived from the financial literacy questionnaire. The following indices and database variables are used in this report.

Familiarity with concepts of finance (FCFMLRTY)

Students were asked whether, for each of 16 terms related to finance and economics, they had learned about the term in the 12 months prior to sitting the PISA assessment and still know its meaning (FL164). There were three response options (“Never heard of it,” “Heard of it, but I don’t recall the meaning,” “Learnt about it, and I know what it means”). These terms were “interest payment,” “compound interest,” “exchange rate,” “depreciation,” “shares/stocks,” “return on investment,” “dividend,” “diversification,” “debit card,” “bank loan,” “pension plan,” “budget,” “wage,” “entrepreneur,” “central bank,” and “income tax.” For each item, a value of 1 was assigned to “Learnt about it, and I know what it means” responses, and all other responses were assigned a value of 0. The index of familiarity with concepts of finance (FCFMLRTY) was defined as the total number of terms that students reported that they had learned about and still know the meaning of. Values range from 0 to 16.
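The scoring rule for this index amounts to counting one response option. In the minimal sketch below, the function name and the representation of responses as text strings are assumptions, and missing responses are simply not counted, a choice the text does not specify.

```python
# Response option that scores 1; every other response scores 0.
KNOWN = "Learnt about it, and I know what it means"

def familiarity_index(responses):
    """Count the terms a student reports having learned and still knowing."""
    return sum(1 for response in responses if response == KNOWN)

# Hypothetical answers to the 16 terms in FL164.
answers = (
    [KNOWN] * 5
    + ["Heard of it, but I don't recall the meaning"] * 7
    + ["Never heard of it"] * 4
)
print(familiarity_index(answers))  # 5
```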

Financial education in school lessons (FLSCHOOL)

The index of financial education in school lessons (FLSCHOOL) was constructed using students’ responses to a question developed for PISA 2018 (FL166). Students reported how often (“never,” “sometimes,” “often”) they encountered the following types of tasks or activities in a school lesson during the 12 months prior to sitting the PISA assessment: describing the purposes and uses of money; exploring the difference between spending money on needs and wants; exploring ways of planning to pay an expense; discussing the rights of consumers when dealing with financial institutions; discussing the ways in which money invested in the stock market changes value over time; analysing advertisements to understand how they encourage people to buy things. Positive values on this scale mean that students were more exposed to financial education in school lessons than was the average student across OECD countries and economies.

Parental involvement in matters of financial literacy (FLFAMILY)

The index of parental involvement in financial literacy (FLFAMILY) was constructed using students’ responses to a question developed for PISA 2018 (FL167). Students reported how often (“never or hardly ever,” “once or twice a month,” “once or twice a week,” “almost every day”) they discussed the following matters with their parents, guardians or relatives: their spending decisions; their savings decisions; the family budget; money for things they want to buy; and news related to finance or economics. Positive values on this scale mean that students reported their parents were more involved in matters of financial literacy with them than did the average student across OECD countries and economies.

Confidence in dealing with traditional money matters (FLCONFIN)

The index of confidence in dealing with traditional money matters (FLCONFIN) was constructed using students’ responses to a question developed for PISA 2018 (FL162). Students reported the extent to which they felt confident (“not at all confident,” “not very confident,” “confident,” “very confident”) doing the following things: making a money transfer (e.g. paying a bill); filling in forms at the bank; understanding bank statements; understanding a sales contract; keeping account of their account balance; and planning their spending in consideration of their current financial situation. Positive values on this scale mean that students expressed more confidence in dealing with traditional money matters than did the average student across OECD countries and economies.

Confidence in using digital financial services (FLCONICT)

The index of confidence in using digital financial services (FLCONICT) was constructed using students’ responses to a question developed for PISA 2018 (FL163). Students reported the extent to which they felt confident (“not at all confident,” “not very confident,” “confident,” “very confident”) doing the following things when using digital or electronic devices outside of a bank (e.g. at home or in shops): transferring money; keeping track of their balance; paying with a debit card instead of using cash; paying with a mobile device (e.g. mobile phone or tablet) instead of using cash; and ensuring the safety of sensitive information when making an electronic payment or using online banking. Positive values on this scale mean that students expressed more confidence in using digital financial services than did the average student across OECD countries and economies.

School-level indices

Socio-economic profile of schools

Advantaged and disadvantaged schools are defined in terms of the socio-economic profile of schools. All schools in each PISA-participating education system are ranked according to their average PISA index of economic, social and cultural status (ESCS) and then divided into four groups with approximately an equal number of students (quarters). Schools in the bottom quarter are referred to as “socio-economically disadvantaged schools”; schools in the top quarter are referred to as “socio-economically advantaged schools.”
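One way to form such quarters is sketched below. Schools are sorted by their average ESCS and assigned to a quarter according to where the midpoint of their students falls in the cumulative student count. The midpoint rule, the function name and the input layout are assumptions made for illustration; the text does not state how schools that straddle a boundary are handled, and the operational procedure may use student weights.

```python
def school_quarters(schools):
    """schools: list of (school_id, mean_escs, n_students) tuples.
    Returns a dict mapping school_id to a quarter from 1 (bottom) to 4 (top)."""
    ordered = sorted(schools, key=lambda school: school[1])
    total = sum(n_students for _, _, n_students in ordered)
    quarters = {}
    seen = 0
    for school_id, _, n_students in ordered:
        # Position of the school's middle student in the cumulative distribution.
        midpoint = (seen + n_students / 2) / total
        quarters[school_id] = min(4, int(midpoint * 4) + 1)
        seen += n_students
    return quarters

# Hypothetical schools within one education system.
schools = [("s1", -1.2, 100), ("s2", -0.4, 100), ("s3", 0.1, 100), ("s4", 0.9, 100)]
print(school_quarters(schools))
```

Under this sketch, schools in quarter 1 would be the socio-economically disadvantaged schools and those in quarter 4 the socio-economically advantaged schools.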

References

[4] Ganzeboom, H. and D. Treiman (2003), “Three Internationally Standardised Measures for Comparative Research on Occupational Status”, in Hoffmeyer-Zlotnik, J.H.P. and C. Wolf (eds.), Advances in Cross-National Comparison, Springer, Boston, https://doi.org/10.1007/978-1-4419-9186-7_9.

[1] OECD (2023), PISA 2022 Assessment and Analytical Framework, PISA, OECD Publishing, Paris, https://doi.org/10.1787/dfe0bf9c-en.

[3] OECD (2023), PISA 2022 Technical Report.

[2] UNESCO Institute for Statistics (2012), International Standard Classification of Education: ISCED 2011, UNESCO Institute for Statistics.

Notes

1. “Unlikely”, in this context, refers to a probability below 62%. “Likely”, in this context, refers to a probability of at least 62%.

2. The standard deviation of 100 score points corresponds to the standard deviation in a pooled sample of students from OECD countries, where each national sample is equally weighted.