Angelica Salvi del Pero

Annelore Verhagen

Artificial Intelligence and the Labour Market

This chapter provides an overview of countries’ policy action affecting the development and use of artificial intelligence (AI) in the workplace. It looks at public policies to protect workers’ fundamental rights, ensure transparency and explainability of AI systems, and clarify accountability across the AI value chain. It explores how existing non-AI-specific laws – such as those pertaining to anti-discrimination and data protection – can serve as a foundation for the governance of AI used in workplace settings. While courts in some countries have successfully applied these laws to AI-related cases in the workplace, there may be a need for AI- and workplace-specific policies. To date, most countries primarily rely on soft law for AI-specific matters, but a number of countries are developing new AI-specific legislative proposals applicable to AI in the workplace.

While artificial intelligence (AI) systems have the potential to improve the workplace – for example by enhancing workplace safety – if not designed or implemented well, they also pose risks to workers’ fundamental rights and well-being over and above any impact on the number of jobs. For instance, AI systems could systematise human biases in workplace decisions. Moreover, it is not always clear whether workers are interacting with an AI system or with a real person, decisions made through AI systems can be difficult to understand, and it is often unclear who is responsible if anything goes wrong when AI systems are used in the workplace.

These risks, combined with the rapid pace of AI development and deployment, underscore the urgent need for policy makers to move swiftly and develop policies to ensure that AI used in the workplace is trustworthy. Following the OECD AI Principles, “trustworthy AI” means that the development and use of AI is safe and respectful of fundamental rights such as privacy, fairness, and labour rights, and that the way it reaches employment-related decisions is transparent and understandable by humans. It also means that employers, workers, and job seekers are made aware of and are transparent about their use of AI, and that it is clear who is accountable if something goes wrong.

This chapter provides an overview of countries’ policy efforts to ensure trustworthy AI in the workplace (see Chapter 7 for social partners’ efforts in this respect). By providing a range of examples, the chapter aims to help employers, workers, and their representatives, as well as AI developers, navigate the existing public policy landscape for AI, and to inspire policy makers looking to regulate workplace AI in their country. The key findings are:

When it comes to using AI in the workplace to make decisions that affect workers’ opportunities and rights, there are some avenues that policy makers are already considering: adapting workplace legislation to the use of AI; encouraging the use of robust auditing and certification tools; using a human-in-the-loop approach; and developing mechanisms to explain, in understandable ways, the logic behind AI-powered decisions.

Currently, most OECD countries’ AI-specific measures to promote trustworthy AI in the workplace are primarily non-binding and rely on organisations’ capacity to self-regulate (i.e. soft law). The number of countries with an AI strategy has grown significantly in the past five years, particularly in Europe, North America and East Asia. Many countries are also developing ethical principles, frameworks and guidelines, technical standards, and codes of conduct for trustworthy AI.

One of the key advantages of using soft law for the governance of AI is that it can be relatively easy to implement and adjust when needed, which ensures the necessary flexibility for such a fast-changing technology. However, given that soft law often lacks enforceability, a well-co‑ordinated combination of soft law and enforceable legislation (or “hard law”) may be needed to effectively prevent or remedy AI-related harm in the workplace as the technology continues to evolve.

Existing (non-AI-specific) legislation – for instance on discrimination, workers’ rights to organise, or product liability – is an important foundation for regulating workplace AI. For example, all OECD member countries have laws in place that aim to protect data and privacy. In some countries, such as Italy, existing anti-discrimination legislation has been successfully applied in court cases related to AI use in the workplace.

However, existing legislation is often not designed to be applied to the use of AI in the workplace, and relevant case law is still limited. Case law will therefore need to be monitored to determine whether and how much existing legislation will have to be adapted to address the deployment of AI in the workplace effectively.

Some countries have taken proactive steps to prevent potential legal gaps by developing new AI-specific legislation, and these proposals often have important implications for the use of AI in the workplace. While some of these AI-specific legislative proposals may still go through significant changes before coming into effect, many represent promising progress towards ensuring the trustworthy development and use of AI in the workplace.

A notable example is the proposed EU AI Act, which seeks to regulate many aspects of AI systems in its member states. The Act takes a differentiated approach for regulating AI, which includes specific provisions for certain high-risk applications in the workplace. A risk-based approach helps to avoid regulating uses of AI that pose little risk and it allows for some flexibility.

Countries have also been developing AI-specific legislation that is narrower in its approach. For example, measures have been proposed that would require regular risk assessments and audits throughout the AI system’s lifecycle to identify and mitigate potential adverse outcomes. There are also AI-specific legislative proposals requiring that people are notified when they interact with an AI system. Additionally, there are explainability measures, such as in the Canadian Artificial Intelligence and Data Act (AIDA), that require “plain language explanations” of how AI systems reach their outcomes. Finally, new legislative efforts seek to increase the accountability of AI-based decisions by requiring human oversight.

Ensuring trustworthy AI in the workplace requires a coherent framework of soft law and legislation that addresses all dimensions of trustworthiness. Since the dimensions are interrelated, there is a potential for public policies to serve multiple purposes, which helps to minimise the regulatory burden. Transparency is essential for accountability, for example, and explainability regulations can help mitigate bias in AI systems.

At the same time, the policy framework for AI needs to be flexible and consistent within and across jurisdictions, in order not to obstruct enforcement, stifle innovation or create unnecessary barriers to trustworthy AI adoption in the workplace. Differentiated approaches to regulating AI minimise the regulatory burden, and regular reviews of the definitions and frameworks used in legislation help it remain up to date with advances in AI technology. Using regulatory sandboxes to develop and test AI systems helps foster innovation and provides regulators with practical evidence about where adjustments to the regulatory framework may be necessary. Additionally, collaboration between countries and regions is needed when developing public policies. It is also important that AI developers and users are given guidance to help them understand and comply with existing and changing hard and soft law.

To continue to improve AI policy decisions and facilitate their enforcement, it is crucial that policy makers, regulators, workers, employers and social partners understand the benefits and risks of using AI in the workplace. It is therefore important to provide access to training on the issues to all stakeholders (see also Chapter 5). Finally, evaluations and assessments will be key to determining what works and where legal gaps remain. This is particularly important, considering that countries’ policies will need to keep up with the fast pace at which AI evolves. The latest developments in generative AI and its increasingly pervasive use across various jobs and activities highlight the need to move swiftly and develop co-ordinated, actionable and enforceable plans to ensure that the use and development of AI in the workplace is trustworthy.

To fulfil their potential to improve workplaces, AI systems need to be developed and used in a trustworthy way (hereafter: “trustworthy AI”). Following the OECD AI Principles, trustworthy AI can be defined as (see Box 6.1):

proactive engagement by AI stakeholders in responsible stewardship of AI in pursuit of beneficial outcomes for people and the planet;

respect for the rule of law, human rights and democratic values by all AI actors throughout the AI system lifecycle;

commitment by AI actors to transparency and responsible disclosure of AI systems;

robustness, security and safety of AI systems throughout their entire lifecycle;

accountability of all AI actors for the proper functioning of AI systems and for the respect of the other dimensions of trustworthiness.

Ensuring trustworthy AI in the workplace can be challenging because the technology entails risks, notably for human rights (e.g. on privacy, discrimination and labour rights), job quality, transparency, explainability, and accountability (Salvi Del Pero, Wyckoff and Vourc’h, 2022[1]).1 Moreover, it is important to identify possible risks that currently do not manifest themselves, but which may appear in the near future when new AI systems are being developed or applied in new contexts.

The risks of using AI in the workplace, coupled with the rapid pace of AI development and deployment (including the latest generative AI models), underscore the need for decisive and proactive action from policy makers to develop policies that promote trustworthy development and use of AI in the workplace. Delaying such action could result in negative impacts on society, employers and workers. In the short term, workplace AI policies will help to ensure the safe and responsible development and use of AI in the workplace. In the long term, they will also help to avoid unnecessary obstacles to AI adoption. Legal clarity may strengthen potential users’ trust that AI’s risks are already being mitigated. It may also alleviate unfounded fears of litigation amongst employers and developers, which can stimulate research, development and innovation, leading to improvements in AI systems in the future.

At the same time, there are concerns that ill-designed or inconsistent policies and a proliferation of standards may have the opposite effect and increase uncertainty and compliance costs, obstruct enforcement, and unnecessarily delay the adoption of beneficial and trustworthy AI. Policy makers are therefore facing the challenge of creating a clear, flexible and consistent policy framework that ensures trustworthy AI in the workplace without stifling innovation or creating unnecessary barriers to AI adoption. Recognising this difficulty, OECD members and other adhering countries have recently adopted a set of detailed policy principles – the AI Principles – that set standards for AI that are practical and flexible enough to stand the test of time (OECD.AI, 2023[2]), while ensuring that AI is trustworthy and respects human-centred and democratic values (see Box 6.1).

The OECD Principles on Artificial Intelligence were adopted in May 2019 by the OECD member countries. Other adhering countries include Argentina, Brazil, Egypt, Malta, Peru, Romania, Singapore and Ukraine. The OECD AI Principles also form the basis for the G20 AI Principles (https://www.mofa.go.jp/files/000486596.pdf).

Stakeholders should proactively engage in responsible stewardship of trustworthy AI in pursuit of beneficial outcomes for people and the planet, such as augmenting human capabilities and enhancing creativity, advancing inclusion of underrepresented populations, reducing economic, social, gender and other inequalities, and protecting natural environments, thus invigorating inclusive growth, sustainable development and well-being.

AI actors should respect the rule of law, human rights and democratic values, throughout the AI system lifecycle. These include freedom, dignity and autonomy, privacy and data protection, non-discrimination and equality, diversity, fairness, social justice, and internationally recognised labour rights. To this end, AI actors should implement mechanisms and safeguards, such as capacity for human determination, that are appropriate to the context and consistent with the state of art.

AI actors should commit to transparency and responsible disclosure regarding AI systems. To this end, they should provide meaningful information, appropriate to the context, and consistent with the state of art i) to foster a general understanding of AI systems, ii) to make stakeholders aware of their interactions with AI systems, including in the workplace, iii) to enable those affected by an AI system to understand the outcome, and, iv) to enable those adversely affected by an AI system to challenge its outcome based on plain and easy-to‑understand information on the factors, and the logic that served as the basis for the prediction, recommendation or decision.

AI systems should be robust, secure and safe throughout their entire lifecycle so that, in conditions of normal use, foreseeable use or misuse, or other adverse conditions, they function appropriately and do not pose unreasonable safety risk. To this end, AI actors should ensure traceability, including in relation to datasets, processes and decisions made during the AI system lifecycle, to enable analysis of the AI system’s outcomes and responses to inquiry, appropriate to the context and consistent with the state of art. Moreover, AI actors should, based on their roles, the context, and their ability to act, apply a systematic risk management approach to each phase of the AI system lifecycle on a continuous basis to address risks related to AI systems, including privacy, digital security, safety and bias.

AI actors should be accountable for the proper functioning of AI systems and for the respect of the above principles, based on their roles, the context, and consistent with the state of art.

Source: OECD (2019[3]), Recommendation of the Council on Artificial Intelligence, https://legalinstruments.oecd.org/en/instruments/OECD-LEGAL-0449.

All dimensions of AI’s trustworthiness are equally important and need to be addressed in a coherent policy framework. Since the dimensions are very closely related and inter-dependent, there is a potential for policies to address multiple dimensions at once, which can help to avoid unnecessary regulatory burdens. For instance, when workers or their representatives can access algorithms and understand how an AI system reached an employment-related decision (transparency and explainability: Principle 1.3), it will be easier to identify the cause of a wrongful decision and who is responsible for it (accountability: Principle 1.5), which may encourage developers to fix the problem and prevent future harm (Principles 1.1, 1.2 and 1.4).

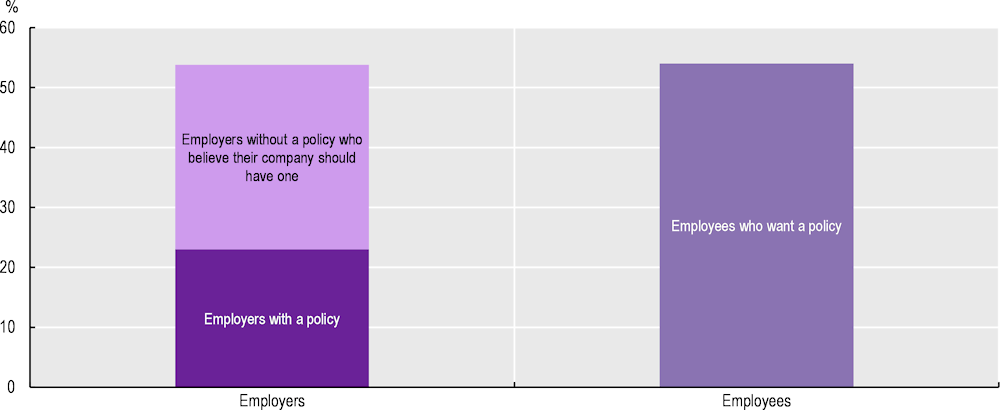

Policies to promote trustworthy AI in the workplace are important to workers, employers and social partners alike. The 2019 Genesys Workplace Survey (Genesys, 2019[4]) found that 54% of workers believe their company should have a written policy on the ethical use of AI or bots (Figure 6.1). Only 23% of surveyed employers had such a policy, while 40% of those without it (31% of all surveyed employers) think their company should have one.
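The 31% figure for all employers follows from the other two shares reported above: if 23% of surveyed employers already had a written policy, 77% did not, and 40% of that group corresponds to roughly 31% of all surveyed employers. The calculation below simply restates that arithmetic; it uses no data beyond the shares given in the text.

```latex
\text{share of all employers wanting a policy}
  = (1 - 0.23) \times 0.40
  = 0.77 \times 0.40
  = 0.308 \approx 31\%
```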

Figure 6.1. Percentage of respondents in favour of a written policy on the ethical use of AI/bots

Note: Based on an opinion survey into the broad attitudes of 1 103 employers and 4 207 employees regarding the current and future effects of AI on their workplaces. The 5 310 participants were drawn from the United States, Germany, the United Kingdom, Japan, Australia and New Zealand.

Source: Elaborations based on Genesys (2019[4]), Genesys Workplace Survey, https://www.genesys.com/en-gb/company/newsroom/announcements/new-workplace-survey-finds-nearly-80-of-employers-arent-worried-about-unethical-use-of-ai-but-maybe-they-should-be.

This chapter provides an overview of public policy initiatives for the trustworthy development and use of AI in the workplace. This includes general measures that are not AI- or workplace‑specific (but which have implications for AI used in the workplace), as well as measures specific to AI and/or the workplace. It covers non-binding approaches such as AI strategies, guidelines and standards (“soft law”) as well as legally binding legislative frameworks (“hard law”). Social partners also have an important role to play in managing AI uses in the workplace, through collective bargaining and social dialogue – see Chapter 7.

Section 6.1 addresses how soft law may encourage trustworthy development and use of AI in the workplace. Section 6.2 discusses how legally binding legislative frameworks can prevent AI from harming job seekers and workers, protect their fundamental rights, and increase the transparency and explainability of workplace AI,2 as well as the extent to which the accountability of AI actors can be established under existing and draft legislation. Section 6.3 concludes.

Although this chapter discusses soft law and hard law approaches separately, in practice they are often combined. This is in part because both approaches have benefits as well as drawbacks (as discussed in Sections 6.1 and 6.2). A well-co-ordinated framework of soft law and legislation may ensure that policies are enforceable and easy to comply with, while staying up to date with the latest developments in AI. It should also be noted that, since all dimensions of trustworthiness are inter-related, the examples of policies discussed in a particular subsection can be relevant to other dimensions of trustworthiness, too.

So far, OECD countries’ AI-specific measures to promote trustworthy AI in the workplace have focused predominantly on soft law, i.e. non-binding approaches that rely on organisations’ capacity to self-regulate. They include, for instance, the development of ethical frameworks and guidelines, technical standards, and codes of conduct for trustworthy AI.3 In many OECD member countries, soft law is consistent with the OECD AI Principles (see Box 6.1). Trade unions, employer organisations and individual employers have also developed their own AI guidelines and principles, as well as tools strengthening trustworthy AI – see Chapter 7.

One of the key advantages of using soft law for the governance of AI is that it is easier to implement and adjust than legislation (or “hard law”) (Abbott and Snidal, 2000[5]), which helps close some of the gaps that exist or appear in AI legislation. Most AI-specific legislation is currently in development and will likely still take several years to come into effect. In the meantime, soft law for AI is a valuable governance tool to provide incentives and guidelines for trustworthy AI in the workplace. Moreover, since AI is such a rapidly evolving technology, soft law may ensure the necessary flexibility: legislation may not always be able to effectively cover the risks created by the most recent developments (Gutierrez and Marchant, 2021[6]). Soft law can also be used to facilitate legal compliance when legislation is too broad or complex for AI actors to understand or translate into practice. Finally, since soft law tends to be easier to implement, it is also being used to establish international co‑ordination and collaboration on AI policies. International co‑ordination and collaboration are important to help minimise inconsistent policies and potentially consolidate them across countries, which could decrease uncertainty and compliance costs for businesses, especially smaller ones.

While several OECD member countries are developing AI-specific legislation (see Section 6.2), some countries are managing AI predominantly through soft law, in addition to applying existing legislation to workplace AI. The UK Government is one such example, where regulators are asked to use soft law and existing processes as far as possible for the governance of AI development and use (see Box 6.2). Another example is Japan, where “legally-binding horizontal requirements for AI systems are deemed unnecessary at the moment” (METI, 2021[7]). Instead, the Japanese Government focuses on guidance to support companies’ voluntary efforts for AI governance based on multistakeholder dialogue (Habuka, 2023[8]).

On 29 March 2023, the UK Government published the AI Regulation White Paper, outlining its latest proposals for regulating AI in the United Kingdom. The proposals are based on five core principles, setting out the UK Government’s expectations for good, responsible AI systems:

Safety, security, robustness

Appropriate transparency and explainability

Fairness

Accountability and governance

Contestability and redress

The United Kingdom’s independent regulators1 would be supported by the government to interpret what these principles mean for AI development and use in their specific contexts and sectors, and to decide if, when and how to implement measures that suit the way AI is being used in their sectors. This could involve issuing guidance or creating template impact or risk assessment models. The White Paper also confirms the UK Government’s commitment to establish a regulatory sandbox for AI to help developers navigate regulation and get their products to market. The sandbox would also help the government understand how regulation interacts with new technologies and refine this interaction where necessary.

Given the protections already available under existing law across domains and sectors, the UK Government currently does not see the need for additional or AI-specific legislation. Additionally, the White Paper emphasises that the soft law approach “will mean the UK’s rules can adapt as this fast-moving technology develops, ensuring protections for the public without holding businesses back from using AI technology” (UK Government, 2023[9]). The context-specific approach recognises that risks may differ within and across sectors and over time. To avoid incoherent and contradictory regulations, the government encourages regulatory co-ordination, for example through the Digital Regulation Co-operation Forum.

The regulatory framework proposed in the White Paper relies on a set of new central functions, initially housed within central government. These functions include central risk assessment, and would oversee and monitor the framework to ensure that it has the required impact on risk without having an unacceptable impact on innovation.

The UK Government is expected to publish a response to stakeholders following a consultation period that will engage individuals and organisations from across the AI ecosystem, wider industry, civil society, and academia (deadline mid-June 2023).

1. These are often sectoral regulatory bodies, such as the Health and Safety Executive, as well as the Equality and Human Rights Commission or the Competition and Markets Authority.

Source: UK Government (2023[9]), A pro‑innovation approach to AI regulation, https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach/white-paper#part-3-an-innovative-and-iterative-approach.

Countries are also developing guidance on using trustworthy AI in the workplace. For example, the Centre for Data Ethics and Innovation (CDEI)4 in the United Kingdom developed a practical guide in collaboration with the Recruitment and Employment Confederation (REC) to help recruiters effectively and responsibly deploy data-driven recruitment tools, ensure that appropriate steps have been taken to mitigate risks, and maximise opportunities (REC/CDEI, 2021[10]). In Singapore, the Info-Communications Media Development Authority (IMDA) and Personal Data Protection Commission (PDPC) are developing a toolkit – called A.I. Verify – that would enable companies to demonstrate what their AI systems can do and what measures have been taken to mitigate the risks of their systems. The toolkit would allow users to verify the performance of any AI system (including workplace AI) against the developer’s claims and with respect to internationally accepted AI ethics principles (IMDA, 2023[11]).

Countries also often wrap measures to promote trustworthy AI in the workplace into AI strategies. Rogerson et al. (2022[12]) show a steep increase in the share of countries with a published national AI strategy in the past five years, particularly in Europe, North America and East Asia. For instance, Germany’s Artificial Intelligence Strategy states that AI applications must augment and support human performance. It also includes an explicit commitment to a responsible development and use of AI that serves the good of society and to a broad societal dialogue on its use (Hartl et al., 2021[13]). Spain’s National AI Strategy includes an ethics pillar, which provides impetus for developing a trustworthy AI certification for AI practitioners (La Moncloa, 2020[14]). The Spanish Agency for the Oversight of Artificial Intelligence – Europe’s first AI oversight agency – will be responsible for promoting trustworthy AI and supervising AI systems that may pose significant risks to health, security and fundamental rights (España Digital, 2023[15]).5

Several countries have published strategies for the use of trustworthy AI in the public sector specifically: an initial mapping by Berryhill et al. (2019[16]) identified 36 countries with such strategies. For example, Australia’s Digital Transformation Agency developed a guide to AI adoption in the public sector, stipulating, amongst others, that human decision-makers remain responsible for decisions assisted by machines, and that they must therefore understand the inputs and outputs of the technologies (Australian Government, 2023[17]).

Countries and stakeholders are also supporting the implementation of trustworthy AI in the workplace by developing standards.6 In some cases, like the United States, the development of standards is mandated by legislation (U.S. Congress, 2021[18]). The United States National Institute of Standards and Technology (NIST) is establishing benchmarks to evaluate AI technologies, as well as leading and participating in the development of technical AI standards (NIST, 2022[19]).7 AI standards to support trustworthiness have also been the focus of international co-operation, as set out in the EU-US Trade and Technology Council Inaugural Joint Statement (European Commission, 2021[20]), and of an initiative by the United Kingdom, through the Alan Turing Institute, to establish global AI standards (Alan Turing Institute, 2022[21]).

Overall, this section shows that soft law is an important governance tool to encourage trustworthy development and use of AI in the workplace. However, because of its non-enforceable nature, soft law may not be sufficient to prevent or remedy AI-related harm in the workplace. For its critics, soft law for AI can even be a form of “ethics washing”, because voluntary AI ethics efforts have limited internal accountability or effectiveness in changing behaviour (Whittaker et al., 2018[22]; McNamara, Smith and Murphy-Hill, 2018[23]). Given the speed of technological change, a combination of soft and hard law may therefore be needed to continue to ensure trustworthy AI in the workplace.

The subsequent sections will discuss developments of hard law to ensure trustworthy AI in the workplace. Legislation not only has strong powers of enforceability; it is also often more detailed and precise than soft law and can delegate authority (e.g. to judges) to interpret and implement the law (Abbott and Snidal, 2000[5]). Moreover, legislation necessarily goes through democratic processes such as discussions and votes in parliament, whereas this is not necessarily the case for soft law. These processes, however, make legislation less flexible than soft law, which may create legal gaps when regulating a fast-changing technology such as AI.

One approach to ensuring that legislation remains up to date with advances in AI technology is to incorporate requirements for a regular review of the legal framework. These reviews could involve input from experts in the field, as well as social partners and stakeholders such as industry groups and consumer organisations. For instance, the proposed EU AI Act and Canada’s Artificial Intelligence and Data Act (AIDA) proposal adopt a “differentiated” or “risk-based” approach, meaning that only specific (high-risk) AI applications are subject to certain regulation or are banned altogether (see Box 6.3 and Box 6.4). Regular updates of the definition of “high-risk” and “unacceptable risk” systems can improve the flexibility of the legislative framework. This differentiated approach also makes legislation more targeted and proportionate, focusing oversight on AI applications with the potential to cause most harm while minimising the burden of compliance for benign and beneficial applications (Lane and Williams, 2023[24]).

Another approach to making legislation more flexible is to develop regulatory “sandboxes”, which allow for the controlled testing of new AI technologies in a safe and regulated environment. Sandboxes provide an opportunity to explore new applications of AI without exposing users or society to undue risk, and allow for the adjustment of existing legal frameworks or the development of new ones in response to these new technologies or applications (Appaya, Gradstein and Haji Kanz, 2020[25]; Madiega and Van De Pol, 2022[26]; Attrey, Lesher and Lomax, 2020[27]).

This section investigates the role of AI- and/or workplace-specific legislation as well as more general legislation that is applicable to AI (in the workplace), including legislation that is already in effect and legislation still in development. Public policies for workers whose jobs are at risk of automation from AI are discussed in Chapter 3, and collective bargaining and social dialogue for AI are discussed in Chapter 7. The section does not discuss legislation to address adverse outcomes for organisations (such as economic loss, or damage to property) as a result of using AI in the workplace.

AI has the capacity to fully automate employment-related decisions, including deciding which job seekers see a vacancy, shortlisting candidates based on their CVs, assigning tasks at work, and making bonus, promotion or training decisions. While this capacity potentially frees up time for managers to focus more on the interpersonal aspects of their jobs (see Chapter 4), it raises the question whether decisions that have a significant impact on people’s opportunities and well-being at work should be made without any human involvement, or at least without the possibility for a human to intervene. The OECD AI Principles therefore call on AI actors to implement mechanisms and safeguards that ensure capacity for human intervention and oversight, to promote human-centred values and fairness in AI systems (OECD, 2019[3]).

To date, full automation in workplace management and evaluation of staff remains rare (see Chapter 4). In addition to the technical difficulties inherent in modelling all the tasks and uncertainties that human managers have to take into account in their work (Wood, 2021[28]), factors potentially limiting adoption include costs, lack of skills to work with AI (see Chapter 5) and, in some cases, regulation. For example, some countries – notably in the EU through the General Data Protection Regulation (GDPR) (see Box 6.3) – provide individuals with a right to meaningful human input on important decisions that affect them, which enables them to opt out of fully automated decision-making in the workplace (Official Journal of the European Union, 2016[29]; UK Parliament, 2022[30]; Wood, 2021[28]).8 Additionally, there are new legislative efforts that would prevent the adoption of fully automated decision-making tools in high-risk settings such as the workplace, by requiring human oversight (i.e. a “human in the loop”). Section 6.2.3 discusses this concept in more depth.

The EU General Data Protection Regulation (GDPR) enshrines data rights for persons located in the EU and obligations on entities processing personal data (Official Journal of the European Union, 2016[29]). These rights apply to general data gathering and processing technologies and have specific implications for AI. This is particularly the case for rights to transparent information and communication, as well as rights of access (Art. 12, 13, 15), rectification, erasure and restriction of processing (Art. 16‑18). Among other things, these rights aim to protect individuals’ personal data and increase transparency about how data are processed. Article 88 of the GDPR is specifically targeted at data protection in the employment context, giving member states the ability to enact more specific rules to protect employees’ personal data.

Additionally, and importantly, GDPR Article 22 gives individuals the right “not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her […]”. Article 22 allows such decisions only in limited cases, for instance with the individual’s explicit consent. Since it can be extremely difficult to obtain freely given consent in employment relationships, Article 22 effectively prohibits algorithmic management that entails fully automated decision-making (Parviainen, 2022[31]; Wood, 2021[28]). The EU Directive on Working Conditions and Platform Work provides additional protection as regards the use of algorithmic management for people working through digital labour platforms (see Chapter 4).

The 2021 proposal for the European Union’s AI Act seeks to regulate AI systems made available or used in the 27 EU member states to address risks to safety, health and fundamental rights, including specific provisions for the use of certain high-risk AI applications in the workplace. It lays down a uniform legal framework to ensure that the development, marketing, and use of AI do not have a significant harmful impact on the health, safety and fundamental rights of persons in the Union (European Commission, 2021[32]).

The proposed act follows a risk-based regulatory approach that differentiates between uses of AI that generate: i) minimal risk; ii) low risk; iii) high risk; and iv) unacceptable risk. It classifies certain AI systems used for recruitment, decisions about promotion, firing and task assignment, and monitoring of persons in work-related contractual relationships as “high risk”. Due to this classification, these AI systems would be subject to legal requirements relating to risk management, data quality and data governance, documentation and record-keeping, transparency and provision of information to users, human oversight, robustness, accuracy, and security.

The risk-based approach has drawn praise (Ebers et al., 2021[33]; DOT Europe, 2021[34]; Veale and Borgesius, 2021[35]), although there is debate about what should fall under each category (Johnson, 2021[36]). The proposal, which is expected to take effect in 2025, may therefore still change significantly before then. For instance, the Council suggests that the Commission assess the need to amend the list of high-risk systems every 24 months (Council of the European Union, 2022[37]), and the European Parliament proposes additional transparency measures for generative foundation models like GPT (European Parliament, 2023[38]).

To address the main issues that raise legal uncertainty for providers and to contribute to evidence‑based regulatory learning, the act encourages EU member states to establish “AI regulatory sandboxes”, where AI systems can be developed and tested in a controlled environment in the pre‑marketing phase. For instance, the Spanish pilot for an AI regulatory sandbox aims to operationalise the requirements of the future EU AI regulation (European Commission, 2022[39]).

Even without fully automated decision-making, AI use in the workplace can reduce workers’ autonomy and agency, decrease human contact, and increase human-machine interaction. This could lead to social isolation, decreased well-being at work and – taken to the extreme – deprive workers of dignity in their work (Briône, 2020[40]; Nguyen and Mateescu, 2019[41]). Policies and regulations to address the risk of decreased human contact due to the use of AI in the workplace remain limited. Occupational safety and health regulation might cover mental health issues, but there is some uncertainty about whether psychosocial risks posed by AI systems are appropriately covered by these regulations (Nurski, 2021[42]). One exception is Germany: a report produced by the German AI Inquiry Committee highlighted the need to “ensur[e] that, as social beings, humans have the opportunity to interact socially with other humans at their place of work, receive human feedback and see themselves as part of a workforce” (Deutscher Bundestag Enquete-Kommission, 2020[43]).

Collecting and processing personal data – whether for AI systems or other purposes – poses a risk of privacy breaches if data governance is inadequate, for instance when data are misused, used without the necessary consent, or inadequately protected (GPAI, 2020[44]; OECD, 2023[45]). Privacy breaches are a violation of fundamental rights enshrined in the United Nations’ Universal Declaration of Human Rights (United Nations, 1948[46]) as well as several other national and regional human rights treaties. Although the risk of a privacy breach for digital technologies is not limited to using AI, the personal data processed by AI systems are often more extensive than data collected by humans or through other technologies,9 thereby increasing the potential harm if something goes wrong (see Chapter 4). Moreover, AI systems can infer sensitive information about individuals (e.g. religion, sexual orientation, or political affiliations) from non-sensitive data (Wachter and Mittelstadt, 2019[47]). At the same time, AI may also be part of the solution regarding privacy protection and data governance, by helping organisations automatically anonymise data and classify sensitive data in real time, thereby ensuring compliance with existing privacy rules and regulations.

Due to AI’s reliance on data, general data protection regulations usually apply to the use of AI in the workplace. All OECD member countries, and 71% of countries around the world, have laws in place to protect (sensitive) data and privacy (UNCTAD, 2023[48]). The 2018 EU General Data Protection Regulation (GDPR) is perhaps the best-known example of such protection principles (see Box 6.3). Under the GDPR, organisations are required to protect personal data appropriately, for example through security measures such as two‑factor authentication. This applies to applications in any context, including the workplace. Article 35 of the GDPR also requires data protection impact assessments, in particular for new technologies and when the data processing is likely to result in a high risk to the rights and freedoms of natural persons. Additionally, the GDPR requires transparency about which personal data are processed by AI systems and limits the ability to process sensitive personal data such as data revealing ethnic origin, political opinions or religious beliefs, which people may not wish to share even with the best data protection measures in place (GDPR.EU, 2022[49]).10 For instance, during April 2023, Italy’s data protection agency temporarily banned ChatGPT from processing personal data of Italian data subjects due to several alleged violations of the GDPR (Altomani, 2023[50]). Several other European countries have started investigating ChatGPT’s compliance with privacy legislation, and the European Parliament is working on stricter rules for generative foundation models like ChatGPT in the EU AI Act, distinguishing them from general purpose AI (Madiega, 2023[51]; Bertuzzi, 2023[52]; European Parliament, 2023[53]).

Several countries, including OECD member countries, have EU GDPR-like legislation. For instance, the UK GDPR and Brazil’s Lei Geral de Proteção de Dados (LGPD) are modelled directly on the GDPR, and South Korea’s 2011 Personal Information Protection Act includes many GDPR-like provisions, including requirements for gaining consent (Simmons, 2022[54]; GDPR.EU, 2023[55]). However, the level of protection in countries’ data and privacy legislation varies, and in some countries it is relatively low. For example, there is no federal data privacy law that applies to all industries in the United States, and state‑level legislation is limited (IAPP, 2023[56]).

The employment context can pose distinct challenges that existing data and privacy protection legislation does not effectively address, such as the collective rights and interests of employees and the informational and power asymmetry inherent in the employment relationship (Abraha, Silberman and Adams-Prassl, 2022[57]). For instance, while privacy and data protection laws such as the GDPR often require that data subjects give explicit consent for the use of their personal data, it is uncertain whether meaningful consent can be obtained in situations of power asymmetry and dependency, such as job interviews and employment relationships. Job applicants and workers may worry that refusing to give consent could negatively affect their employment or career opportunities (Intersoft Consulting, 2022[58]). Additionally, some experts question whether workers with limited knowledge and understanding of AI systems can truly give informed consent. Indeed, the view of the European Data Protection Board is that it is “problematic for employers to process personal data of current or future employees on the basis of consent as it is unlikely to be freely given” (EDPB, 2020[59]).11 In 2019, a court in Australia upheld an appeal from a sawmill employee, concluding that he was unfairly dismissed for refusing to use fingerprint scanners to sign in and out of work (Chavez, Bahr and Vartanian, 2022[60]).

Germany is one of the few European countries that have used GDPR Article 88 to develop data protection rules specifically applicable in the workplace (Abraha, Silberman and Adams-Prassl, 2022[57]). However, the independent interdisciplinary council on employee data protection recently concluded that, even with these additional rules, the German legislative framework still does not ensure legal certainty for employee data protection. For instance, the council considers that the legal framework would need to include standard examples of when consent is (in)admissible, and it strongly recommends that the use of AI in the context of employment be regulated by law (Independent interdisciplinary council on employee data protection, 2022[61]).

Even without AI, bias and discrimination in the workplace are unfortunately not uncommon (Cahuc, Carcillo and Zylberberg, 2014[62]; Quillian et al., 2017[63]; Becker, 2010[64]; Bertrand and Mullainathan, 2004[65]), in violation of workers’ fundamental rights (United Nations, 1948[46]). The use of trustworthy AI in recruiting can provide data-driven, objective and consistent recommendations that can help increase diversity in the workplace and lead to selecting better-performing candidates overall (Fleck, Rounding and Özgül, 2022[66]).12 Yet many AI systems struggle with bias: AI’s potential to reduce bias and discrimination can be undermined by biased choices in the design of the AI system and by the use of biased data (Accessnow, 2018[67]; Executive Office of the President, 2016[68]; Fleck, Rounding and Özgül, 2022[66]; GPAI, 2020[44]). As a result, using AI in the workplace can introduce bias into who sees job postings, which candidates are selected for interview, and how workers’ performance is evaluated, amongst others – see Chapter 4.

A range of existing laws in OECD member countries against discrimination in the workplace can be applied to the use of AI in the workplace. For instance, in 2021, an Italian court applied existing anti-discrimination laws to strike down an algorithm used by the digital platform Deliveroo to assign shifts to riders. The court found that Deliveroo gave riders priority access to work slots based on an algorithm that “scored” them on reliability and engagement.13 The tribunal ruled that the algorithm’s data processing method was unclear and did not allow rankings to be contextualised, and that it therefore indirectly discriminated against workers who had booked a shift but could not work, including because of personal emergencies, sickness or participation in a strike (Geiger, 2021[69]; Allen QC and Masters, 2021[70]; Tribunale Ordinario di Bologna, 2020[71]).14 Bornstein (2018[72]) argues that employers in the United States may face litigation if they have intentionally chosen to feed the model biased data that reflect past discrimination and, as a result, the AI reproduces such discrimination.

However, existing anti-discrimination legislation is usually not designed to be applied to AI use in the workplace, and relevant case law is still limited. In practice, it may therefore be difficult to contest AI-based employment-related decisions using only existing anti-discrimination laws. For instance, plaintiffs may face difficulties accessing the algorithm due to privacy and intellectual property regulations, and even if they do get access, the algorithm may be so complex that not even its programmers and administrators know or understand how the output was reached (Rudin, 2019[73]; O’Keefe et al., 2019[74]; Bornstein, 2018[72]). In addition, many applicants and workers may not even know that AI is being used to assess them, or may lack the resources, skills and tools necessary to evaluate whether the AI system is discriminating against them, which poses challenges in countries that rely heavily on individual action for seeking redress (more on this in Section 6.2.2). Case law applying anti-discrimination legislation to AI will need to be monitored to determine whether, and to what extent, this legislation will need to be adapted to address the use of AI in the workplace.

Some institutions are also calling for strong regulation or even society-wide bans of (AI-powered) facial processing technologies,15 due to concerns about privacy and the limited accuracy of these technologies for certain groups, such as women and ethnic minorities (Buolamwini and Gebru, 2018[75]). Additionally, some experts doubt that facial recognition technology can reliably interpret someone’s personality or emotions (Whittaker et al., 2018[22]). In May 2020, the State of Maryland in the United States passed a law banning the use of facial recognition in employment interviews, unless the interviewee signs a waiver (Fisher et al., 2020[76]). However, it is unclear how much real choice job applicants and workers might have in signing a waiver, and the law has faced criticism for leaving broad gaps in what will be recognised as “facial recognition services” and “facial templates” created by the facial recognition service, and may therefore require additional interpretation (Glasser, Forman and Lech, 2020[77]). Additionally, a 2021 report by the United Nations Human Rights Office called for a temporary ban on the use of facial recognition (UN Human Rights Council, 2021[78]), and in its 2021 guidelines on how European countries should regulate the processing of biometric data, the Council of Europe called on European countries to impose a strict ban on facial analysis tools that purport to “detect personality traits, inner feelings, mental health or workers’ engagement from face images” (Council of Europe, 2021[79]).

The right of workers to form and join organisations of their choice (freedom of association) is a fundamental human right stated in the Universal Declaration of Human Rights (United Nations, 1948[46]) and the ILO Declaration of Fundamental Principles and Rights at Work (ILO, 1998[80]). This right is closely tied to the right to collective bargaining (ILO, 1998[80]). As discussed in Chapter 7, AI technologies can aid social dialogue and collective bargaining by providing information, insights, and data-driven arguments to social partners. However, using AI can also diminish workers’ bargaining power due to power imbalances and information asymmetries between workers, employers and their representatives, and AI-based monitoring can hinder collective organising and union activities (see Chapter 7).

Workers’ rights to organise and to participate in collective bargaining are typically enshrined in national labour laws, but the level of protection and enforcement of these laws varies across countries, partly owing to differences in union membership (OECD, 2019[81]). Spain passed legislation in August 2021 making it mandatory for digital platforms to provide workers’ representatives with information about the mathematical or algorithmic formulae used to determine working conditions or employment status (Pérez del Prado, 2021[82]; Aranguiz, 2021[83]). The Spanish law thereby provides for a continued role for social dialogue and collective bargaining, and further rounds of dialogue between the platforms and unions are likely, with the possibility of further policy changes.16

AI has the potential to contribute to increased physical safety for workers, for instance by taking over hazardous tasks (Lane, Williams and Broecke, 2023[84]; Milanez, 2023[85]; EU-OSHA, 2021[86]), or alerting workers who may be at risk of stepping too close to dangerous equipment (Wiggers, 2021[87]) – see Chapter 4. However, if not designed or implemented well, AI systems can also threaten the physical safety and the well-being of workers, for instance through dangerous machine malfunctioning, or by increasing work intensity brought on by higher performance targets. The need to learn how to work with new technologies and worries over greater monitoring through AI may also increase stress (Milanez, 2023[85]).

Labour law and Occupational Safety and Health (OSH) regulations often apply directly to AI use in the workplace,17 for instance by requiring employers to pre‑emptively ensure that tools used in the workplace will not harm workers (ILO, 2011[88]), which would also apply to AI-powered tools. Accountability for AI-related harm in the workplace could therefore potentially fall fully on the employer, with court cases already arising as regards AI-based decisions about hiring (Maurer, 2021[89]; Engler, 2021[90]; Butler and White, 2021[91]) and performance management (Wisenberg Brin, 2021[92]).18 Moreover, algorithmic management may put strain on bargaining and informational dynamics at the workplace level – see Chapter 7.

As AI systems become more integrated in the workplace, OSH regulation will likely need to adapt and possibly be extended to effectively address concerns raised by the use of AI (Jarota, 2021[93]; Kim and Bodie, 2021[94]). However, to date, it remains largely uncertain whether and how these changes would take effect, and how they would interact with new legislative proposals, such as the EU AI Act, that also cover risks to occupational safety and health.

Even with the most elaborate legislative framework in place to mitigate or prevent AI-related risk, individuals and employers need to be able to verify if and how the system affects employment-related decisions. Providing this type of information (“transparency”) in an understandable way (“explainability”) not only enables individuals to take action if they suspect they are adversely affected by AI systems: it also allows workers and employers to make informed decisions about buying or using an AI system for the workplace, and it may increase acceptance and trust in AI, all of which is crucial for promoting the diffusion of trustworthy AI in the workplace.

However, implementing transparency and explainability of AI can be complicated. For example, transparency requirements may put the privacy of data subjects at risk. Requiring explainability may negatively affect the accuracy and performance of the system, as it may involve reducing the solution variables to a set small enough for humans to understand. This could be suboptimal in complex, high-dimensional problems (OECD.AI, 2022[95]). Transparency and explainability might also be hard to achieve because developers need to be able to protect their intellectual property,19 and because some AI systems – such as generative AI or deep neural networks – are so complex that even their developers cannot fully understand how they reach certain outcomes. Transparency and explainability may also increase the complexity and costs of AI systems, potentially putting small businesses at a disproportionate disadvantage (OECD.AI, 2022[95]).

Nevertheless, transparency and explainability do not necessarily require an overview of the full decision-making process, but can be achieved with either human-interpretable information about the main or determinant factors in an outcome, or information about what would happen in a counterfactual (Doshi-Velez et al., 2017[96]). For example, if an employee is refused a promotion based on an AI system’s recommendation, information can be given on what factors affected the decision, whether they affect it positively or negatively and what their respective weights are. Alternatively, counterfactual models could provide a list of the most important features that the employee would need to possess in order to obtain the desired outcome, e.g. “you would have obtained the position if you had had a better level of English and at least three additional years of experience in your present role” (Loi, 2020[97]). Yet, these counterfactuals would need to be checked for fairness as well. This section discusses what countries are doing to ensure that AI used in the workplace is transparent and explainable.
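The two kinds of explanation described above can be illustrated with a minimal sketch. It assumes a hypothetical linear scoring model for promotion recommendations; the feature names, weights and threshold are illustrative assumptions, not drawn from any real HR system or from the sources cited. The first function lists each factor’s contribution (weight × value) to the score, and the second performs a brute-force search for the smallest increase in “actionable” features that would turn a rejection into a recommendation.

```python
from itertools import product

# Hypothetical linear scoring model: weights and threshold are illustrative
# assumptions only, not taken from any real system.
WEIGHTS = {"english_level": 0.8, "years_in_role": 0.5, "performance_rating": 1.0}
THRESHOLD = 6.3  # hypothetical score needed for a "promote" recommendation


def score(candidate: dict) -> float:
    """Weighted sum of the candidate's features."""
    return sum(WEIGHTS[f] * candidate[f] for f in WEIGHTS)


def explain(candidate: dict) -> list:
    """Feature-contribution explanation: (feature, weight x value), largest first."""
    contributions = {f: WEIGHTS[f] * candidate[f] for f in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)


def counterfactual(candidate: dict,
                   actionable=("english_level", "years_in_role"),
                   max_step: int = 5):
    """Counterfactual explanation: smallest total increase in the actionable
    features that would reach the threshold.

    Tries every combination of integer increments 0..max_step; on ties, the
    first combination found in search order is kept. Returns None if no
    combination within this grid reaches the threshold.
    """
    best = None
    for steps in product(range(max_step + 1), repeat=len(actionable)):
        changed = dict(candidate)
        for feature, step in zip(actionable, steps):
            changed[feature] += step
        if score(changed) >= THRESHOLD:
            cost = sum(steps)
            if best is None or cost < best[0]:
                best = (cost, dict(zip(actionable, steps)))
    return None if best is None else best[1]


employee = {"english_level": 2, "years_in_role": 1, "performance_rating": 3}
print(round(score(employee), 2))  # below the hypothetical threshold
print(explain(employee))
print(counterfactual(employee))   # one more English level and one more year
```

Restricting the search to features the employee can realistically change is a design choice. Without it, the search might “explain” a rejection by pointing to attributes such as age. As the text notes, the resulting counterfactuals would still need to be checked for fairness.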

People are not always aware that they are being hired, monitored, promoted, or managed via AI. A global survey20 found that 34% of respondents think they interacted with AI in the recent past, while their reported use of specific services and devices suggests that 84% have interacted with AI (Pega, 2019[98]). For instance, job seekers might not be aware that the vacancies they see are a selection made by AI, or that their CV or video interview is analysed through AI, and hence that a job offer, or rejection, is (in part) based on AI. Additionally, employers may not see the need to inform workers or job applicants about the fact that they are using AI. By contrast, transparency implies providing insight into the way in which employment-related decisions are made by or with the help of AI. For instance, there is a pending decision by the Dutch data protection authority about complaints by French Uber drivers concerning, amongst others, their deregistration from the platform without satisfactory explanation and the denial of access to information (Hießl, 2023[99]).

Employers, in turn, may not be aware that their employees or job candidates are using AI to help them do their jobs. For instance, AI-powered text generators such as ChatGPT can write CVs, application letters, essays and reports (as well as pieces of code) in a writing style that is convincingly human, and which often remains undetected by current plagiarism software. So-called “deepfakes” – whereby AI systems convincingly alter and manipulate image, audio or video content to misrepresent someone as doing or saying something – are also a risk for employers (and potentially also workers). The Federal Bureau of Investigation (FBI) in the United States has warned of an increase in the use of deepfakes and stolen identities to apply for remote work positions (FBI, 2022[100]). Informing actors that they are interacting with (the output of) an AI system is a fundamental element of ensuring transparency in AI system use.

In the EU and the United Kingdom, the GDPR requires employers to ask job applicants and workers for their explicit consent when they want to use their personal data,21 and for automated decisions that lack meaningful human involvement.22 For instance, a Dutch court23 recently ruled that the account deactivation of five Uber drivers was in violation of GDPR Article 22 because it was based on automated data processing (Hießl, 2023[99]). While the GDPR will continue to be applicable to AI systems that are built on or process data subjects’ personal data, the proposed EU AI Act would also ensure that providers of AI systems notify people of their interactions with an AI system, including systems that are not based on personal data (European Commission, 2021[32]).24 The latest proposed amendments to the AI Act by the European Parliament include additional transparency measures for generative foundation models (such as ChatGPT), for instance disclosing that content was generated by AI and publishing summaries of copyrighted data used for training (European Parliament, 2023[38]; European Parliament, 2023[53]). The proposed EU Directive on Working Conditions and Platform Work (Platform Work Directive for short) also includes the right to transparency regarding the use and functioning of automated monitoring and decision-making systems (European Commission, 2021[101]; European Commission, 2021[102]).

In the United States, too, some jurisdictions require not only that job applicants and workers be notified about their interactions with AI, but also that they give their consent before that interaction can take place. The Artificial Intelligence Video Interview Act of Illinois requires employers to inform candidates of their use of AI in the video interview before it starts, explain how it works, and obtain written consent from the individual (ILCS, 2019[103]).25 However, as Wisenberg Brin (2021[92]) highlights, the law is not clear on what kind of explanations need to be given to candidates, or on the required level of algorithmic detail. The law also does not clarify what happens to the application of a candidate who refuses to be analysed in this way. In addition, because the law requires employers to delete an applicant’s interview videos upon request, it could conflict with other federal and state laws that require the preservation of evidence.

Some countries have passed legislation to regulate transparency of AI systems in the workplace specifically. For instance, emerging “rider laws”, such as the one enacted by Spain (see the subsection on Freedom of association and the right to collective bargaining in Section 6.2.1), are expected to increase awareness and help mitigate risks associated with the transparency and explainability of AI systems for workers (De Stefano and Taes, 2021[104]). Another example of legislation for workplace AI transparency is the EU’s draft Platform Work Directive (European Commission, 2021[105]), which would specify in what form and at which point in time digital labour platforms should provide information about their use and key features of automated monitoring and decision-making systems to platform workers and their representatives, as well as to labour authorities (Broecke, 2023[106]).

Because AI relies on data, sufficiently transparent and explainable AI systems in the workplace may give better insight into how AI-powered employment-related decisions are made than is possible for decisions made by humans alone. After all, human decision-making can be opaque and hard to explain, too. AI could also make it possible to give job seekers and workers feedback on AI-informed decisions systematically and at lower cost.

However, AI systems are often more complex and their outcomes more difficult to explain than other technologies and automated decision-making tools. To make AI in the workplace trustworthy, and ensure the possibility to rectify its outcomes when necessary, workers, employers and their representatives should have understandable explanations as to why and how important decisions are being made, such as decisions that affect well-being, the working environment/conditions, or one’s ability to make a living. Without being able to determine the logic of employment-related decisions made or informed by AI systems, it can be extremely difficult to rectify the outcomes of such decisions, which would violate people’s right to due process (see Section 6.2.3). Additionally, AI-based decisions that are not explainable are unlikely to be accepted by employees (Cappelli, Tambe and Yakubovich, 2019[107]).

In New Zealand, the Employment Relations Act of 2000 was used in 2013 to invalidate a decision to dismiss an employee, in part because the decision was informed by the results of an AI-powered psychometric test which the employer could not explain, or even seemingly understand. Since information about the algorithm (including whether it was AI per se or a less complex algorithm) was not available to the employee, they were denied the right to “an opportunity to comment” before the decision was made (Colgan, 2013[108]; New Zealand Parliamentary Counsel Office, 2000[109]). This example highlights the need for transparency and explainability, not only for the person subject to the AI-powered decision, but also for the organisation using or deploying the AI system.

Some countries are regulating transparency and explainability of AI systems through AI-specific legislation. For instance, the proposed Canadian Artificial Intelligence and Data Act (AIDA) and Consumer Privacy Protection Act (CPPA) would oblige developers and users of automated decision-making systems and high-impact AI systems to provide “plain-language” explanations about how the systems reach a certain outcome (House of Commons of Canada, 2022[110]) – see Box 6.4.

On 16 June 2022, the Canadian Government introduced Bill C‑27, also known as the Digital Charter Implementation Act, 2022. If passed, the bill would enact three proposed acts: the Consumer Privacy Protection Act (CPPA), the Personal Information and Data Protection Tribunal Act (PIDTA), and the Artificial Intelligence and Data Act (AIDA) (House of Commons of Canada, 2022[110]).

The CPPA would reform existing personal data protection legislation, which the new Tribunal (PIDTA) would help to enforce. The CPPA would apply to all actors using personal information for commercial activities, and would explicitly cover automated decision systems based on, for instance, machine learning, deep learning, or neural networks.

The AIDA aims to strengthen Canadians’ trust in the development and deployment of AI systems in the private sector (Government of Canada, 2022[111]): government institutions are explicitly exempt from the AIDA. The AIDA would establish common requirements for the design, development, and use of AI systems, including measures to mitigate risks of physical or psychological harm and biased output, particularly of “high-impact AI systems”. The AIDA would also prohibit certain AI systems that may result in serious harm to individuals or their interests. However, most of the substance and details – including the definition of “high-impact” AI systems and what constitutes “biased output” (Witzel, 2022[112]) – are left to be elaborated in future regulations (Ferguson et al., 2022[113]).

Another challenge to explainability is that many managers, workers and their representatives, as well as policy makers and regulators, may only have limited experience with AI and may not have the skills to understand what AI applications are doing or how they are doing it – see Chapter 5. A 2017 survey found that more than two in five respondents in the United Kingdom and the United States admitted that “they have no idea what AI is about” (Sharma, 2017[114]).26 Although understanding AI may only require moderate digital skills, on average across OECD countries more than a third of adults lack even the most basic digital skills (Verhagen, 2021[115]).

Equipping people with better knowledge and skills about AI would help facilitate explainability (OECD, 2019[116]), which may help build trust in AI systems. In practice, countries where people report higher levels of understanding of AI tend to have more trust in companies that use AI (Ipsos, 2022[117]). This is not only an issue of transparency of AI use, but also of understanding how the technology works. Increasing understanding of AI among workers and their representatives can help them better understand the benefits and risks of AI systems used in the workplace and empower them to engage in consultation and take action as needed. For instance, job seekers may not be aware that they did not even see a specific vacancy because an algorithm determined that they were not suitable for the job. Finally, it is important that policy makers, legal professionals and other regulators understand how AI systems work. Increasing people’s understanding of AI requires strengthening adult learning systems – see Verhagen (2021[115]); OECD (2019[118]); and Chapter 5. Collective bargaining on AI can also play an educational role, fostering greater understanding among both workers and employers of the risks and benefits of AI in a practical forum – see Chapter 7.

Accountability relies on being able to tie a specific individual or organisation to the proper functioning of an AI system, including harm prevention (see Section 6.2.1), ensuring transparency and explainability of the system (see Section 6.2.2), and alignment with the other OECD AI principles (OECD, 2019[3]). Besides assigning these responsibilities to different AI actors, clear accountability also enables workers or employers who have been adversely affected by AI to contest the outcome and have it rectified.

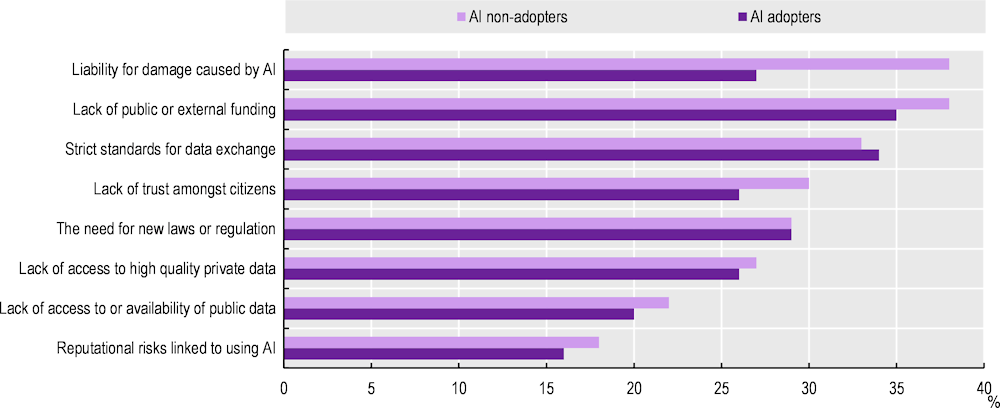

A lack of clear accountability limits the diffusion of trustworthy AI in the workplace. For instance, among European enterprises that do not currently use AI, “liability for damage caused by artificial intelligence” is, together with a lack of funding, the most cited barrier to AI adoption (see Figure 6.2). Liability risks are also among the three most cited barriers or challenges experienced by AI adopters, and are mentioned more frequently by large enterprises and by enterprises in the healthcare and transport sectors (European Commission, 2019[119]).

Percentage of enterprises who responded that a specific barrier is applicable to their business

Note: Responses to the question “I will name potential external obstacles to the use of artificial intelligence. Please indicate all that your company has experienced as a challenge or a barrier.” Based on responses from 8 661 enterprises in the EU27 member states.

Source: European Commission (2019[119]), European enterprise survey on the use of technologies based on artificial intelligence, https://digital-strategy.ec.europa.eu/en/library/european-enterprise-survey-use-technologies-based-artificial-intelligence.

Indeed, AI systems pose challenges for accountability, because it is not always clear which actor linked to the AI system is responsible if something goes wrong. This is partly because, unlike traditional goods and services, some AI systems can change as they are used by learning from new data. Research shows that there is no guarantee that algorithms will achieve their intended goal when applied to new cases, in a new context, or with new data (Neff, McGrath and Prakash, 2020[120]; Heavens, 2020[121]). Recent developments in generative AI further exacerbate these accountability challenges, among other reasons because it is uncertain who is responsible for the content that generative AI systems create. Additionally, developers, providers and users of AI systems are not necessarily located in the same jurisdiction, and approaches to accountability may vary across jurisdictions. This may put SMEs, which may lack in-house legal expertise, in a particularly difficult position.

Overall, clear accountability is a foundation for the use of trustworthy AI in the workplace. It not only helps hold actors accountable, and allows those affected to claim damages, after harm has occurred; it can also help ensure pre-emptively that these risks are addressed (i.e. that AI used in the workplace is trustworthy). Without clear accountability, it would not be possible to identify which AI actor is responsible for upholding anti-discrimination principles, for example, or for ensuring that AI systems operate safely. Clear accountability also underpins other dimensions of trustworthiness: if no one is responsible when AI systems do not work as reasonably expected, transparency about problems in an AI system will not necessarily translate into process improvements (Loi, 2020[97]).27

The importance and challenges of accountability for AI used in the workplace are well illustrated by some platform-work cases. Drivers for Amazon Flex, for example, had difficulty holding AI actors accountable for adverse outcomes, such as refusals to accept seemingly genuine reasons for late deliveries or removals from the platform without clear explanation, because official systems for recourse were difficult to navigate (Soper, 2021[122]). Similar complaints have been made against Uber Eats in the United Kingdom concerning AI-based facial identification software: holding actors accountable was difficult because the process did not allow for the possibility that the technology itself had made a mistake (Kersley, 2021[123]).28 The fact that platform workers may be self-employed, or even in bogus self-employment, and have low trade union density makes it even more difficult for them to contest employment decisions or have them rectified (OECD, 2019[124]).

Legislation on accountability for automated decision-making often relies on having a human “in the loop” (e.g. who may have to approve a decision) or “on the loop” (e.g. who is able to view and check the decisions being made), in a deliberate attempt to ensure human accountability (Enarsson, Enqvist and Naarttijärvi, 2021[125]).29 However, in practice, many uncertainties remain about the legal role and accountability of the human in or on the loop, in part because the terms “human in the loop” and “human on the loop” as yet have no fixed legal meaning or effect (Enarsson, Enqvist and Naarttijärvi, 2021[125]). For instance, while the EU AI Act specifies responsibilities and obligations for AI providers and AI users, as well as requirements for human oversight,30 the exact role of the human in the loop in ensuring trustworthy AI will be left to future standard-setting.

Additionally, in September 2022 the European Commission proposed a targeted harmonisation of national liability rules for AI through two proposals: a revision of the Directive on liability for defective products (the Product Liability Directive) and a proposal for a Directive on adapting non-contractual civil liability rules to artificial intelligence (the AI Liability Directive) (European Commission, 2022[126]). Current product liability rules in the EU are based on the strict liability of manufacturers: when a defective product causes harm, the product’s manufacturer must pay damages without the claimant having to establish the manufacturer’s fault or negligence. The revised Product Liability Directive would modernise and reinforce existing product liability rules, giving businesses legal clarity and ensuring fair compensation for victims of defective products that involve AI, while maintaining the strict liability regime.

In contrast to strict liability, fault-based liability regimes put the burden of proof on the claimant, who must demonstrate that the accused party (such as the manufacturer) failed to meet the standard of care expected in the given situation (Goldberg and Zipursky, 2016[127]). However, the opacity and complexity of AI systems can make it difficult and expensive for victims to build a case and explain in detail how harm was caused. To address these difficulties, the AI Liability Directive, which establishes a fault-based liability regime, proposes to ease the burden of proof for victims of AI through a rebuttable “presumption of causality” (European Commission, 2022[126]).31 The Directive also expands the definition of “harm” beyond health and safety issues to include infringements of fundamental rights (including discrimination and breaches of privacy). Furthermore, together with the revised Product Liability Directive, it aims to give European courts and individuals easier access to information about the algorithms (Goujard, 2022[128]).32

Additionally, according to the EU and UK GDPR, data controllers of automated decision systems using personal data are accountable for implementing “suitable measures to safeguard the data subject’s rights and freedoms and legitimate interests”, including the right to obtain human intervention by the controller, to express their point of view, and to contest the decision (Official Journal of the European Union, 2016[29]; GDPR.EU, 2023[55]).33

In some countries, such as Canada, the algorithmic function of an AI system does not qualify as a “product” under product liability regimes (Sanathkumar, 2022[129]), which could imply that employers would be liable for AI-related harm caused by a defective algorithm. The Canadian AIDA may shift some of this liability to developers of AI systems, for instance by making the assessment and mitigation of risks of harm or biased output that could result from the use of the system a shared responsibility of designers, developers and those who make the AI system available for use or manage its operation (plausibly the employer, in the case of workplace AI) (House of Commons of Canada, 2022[110]).

In October 2022, following a year of public engagement, the White House Office of Science and Technology Policy published the Blueprint for an AI Bill of Rights, a set of five non-binding principles to promote the trustworthy development and use of automated decision systems and to help shape future AI-related policies (The White House, 2022[130]). Additionally, the proposed Digital Platform Commission Act of 2022 would establish an expert federal agency to develop and enforce rules for digital platforms, with a specific mandate to ensure that their algorithmic processes are trustworthy (US Congress, 2022[131]).

In April 2022, several members of Congress proposed the Algorithmic Accountability Act of 2022 (US Congress, 2022[132]), an updated version of the Algorithmic Accountability Act of 2019 (US Congress, 2019[133]). The bill would require organisations that use automated decision systems to perform impact assessments both before and after deploying the system, in order to identify and mitigate potential harms to consumers (Mökander and Floridi, 2022[134]). The same rules would apply to “augmented critical decision processes”, i.e. processes that employ an automated decision system to make a decision or judgment that can have a significant effect on a consumer’s life, including, among others, “employment, workers management, or self-employment” (US Congress, 2022[132]).

Because the bill focuses on preventing and mitigating potential harm to consumers, the Federal Trade Commission (FTC) would be responsible for verifying whether the impact assessment requirements are met. The bill leaves it to the FTC to determine what documentation and information must be submitted after completing such an assessment (Mökander and Floridi, 2022[134]).

SMEs and government agencies would be exempt from the Algorithmic Accountability Act, because the bill would only apply to companies that fall under the FTC’s jurisdiction1 and have an annual turnover of more than USD 50 million, have more than USD 250 million in equity value, or process the information of more than 1 million users (US Congress, 2022[132]).
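The scope rule summarised above combines one necessary condition (FTC jurisdiction) with three alternative size criteria, any one of which suffices. As an illustration only, it can be sketched as a simple check. This is a simplification based solely on the thresholds stated in this paragraph; the bill’s own definitions of turnover, equity value and users are more detailed, and the `Organisation` fields and example figures are hypothetical.

```python
from dataclasses import dataclass

# Thresholds as summarised in the text (hypothetical simplification of the bill's definitions).
TURNOVER_THRESHOLD_USD = 50_000_000
EQUITY_THRESHOLD_USD = 250_000_000
USER_THRESHOLD = 1_000_000


@dataclass
class Organisation:
    """Hypothetical, simplified profile of an organisation using automated decision systems."""
    under_ftc_jurisdiction: bool
    annual_turnover_usd: float
    equity_value_usd: float
    users_processed: int


def is_covered(org: Organisation) -> bool:
    """Return True if the organisation would fall within scope under this simplified reading."""
    # Entities outside the FTC's jurisdiction (e.g. government agencies) are out of scope.
    if not org.under_ftc_jurisdiction:
        return False
    # Any one size criterion is sufficient; each is a strict "more than" test.
    return (
        org.annual_turnover_usd > TURNOVER_THRESHOLD_USD
        or org.equity_value_usd > EQUITY_THRESHOLD_USD
        or org.users_processed > USER_THRESHOLD
    )


# Illustrative, made-up examples.
small_firm = Organisation(True, 10_000_000, 20_000_000, 50_000)
large_platform = Organisation(True, 40_000_000, 100_000_000, 3_000_000)
agency = Organisation(False, 900_000_000, 0, 5_000_000)

for name, org in [("small firm", small_firm), ("large platform", large_platform), ("agency", agency)]:
    print(name, is_covered(org))
```

In this sketch, the large platform is covered solely because of the number of users whose information it processes, even though its turnover and equity value fall below the thresholds, while the agency is excluded regardless of size because it lies outside the FTC’s jurisdiction.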

A number of states have proposed similar regulation, including California’s Automated Decision Systems Accountability Act (California Legislature, 2020[135]), New Jersey’s Algorithmic Accountability Act (State of New Jersey Legislature, 2019[136]), Washington D.C.’s Stop Discrimination by Algorithms Act (Racine, 2021[137]) and Washington State’s SB 5527 (Hasegawa et al., 2019[138]).

The National Telecommunications and Information Administration (NTIA) released a request for comments on AI system accountability measures and policies in April 2023. The deadline for submitting comments was set for mid-June 2023. The request seeks insights on self-regulatory, regulatory, and other measures and policies that are designed to provide assurance to external stakeholders that AI systems are legal, effective, ethical, safe, and otherwise trustworthy. The NTIA will use these submissions, as well as other public interactions on the subject, to publish a report on the development of AI accountability policies. The report will place significant emphasis on the AI assurance ecosystem (NTIA, 2023[139]).

1. The FTC enforces antitrust and consumer protection laws, targeting unfair competition and deceptive practices. It covers virtually every area of commerce, with some exceptions concerning banks, insurance companies, non-profits, transportation and communications common carriers, air carriers, and some other entities (Federal Trade Commission, 2023[140]).

An increasingly popular tool for assessing AI systems and ensuring that they comply with the law and/or principles of trustworthiness is “AI auditing” or “algorithmic auditing”. Generally speaking, in an algorithmic audit, a third party assesses to what extent, and why, an algorithm, AI system and/or the context of its use aligns with ethical principles or regulation. For instance, in November 2021, the New York City Council banned the use of “automated employment decision tools” without annual bias audits (Cumbo, 2021[141]). However, there are concerns that vendor-sponsored audits would “rubber-stamp” the vendors’ own technology, especially since there are few specifics on what an audit should look like, who should conduct it, and what should be disclosed to the auditor and the public (Turner Lee and Lai, 2021[142]). How algorithmic audits should be conducted to ensure they contribute to trustworthy AI is still an area of active research (Ada Lovelace Institute, 2020[143]; Brown, Davidovic and Hasan, 2021[144]).